Panoramic Mosaics from Chang’E-3 PCAM Images at Point A

Abstract

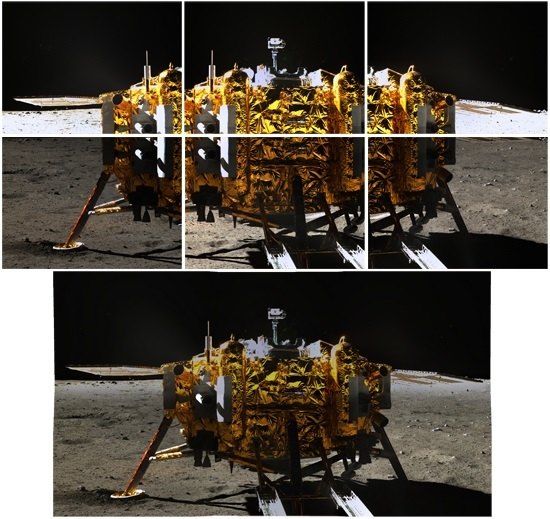

1. Introduction

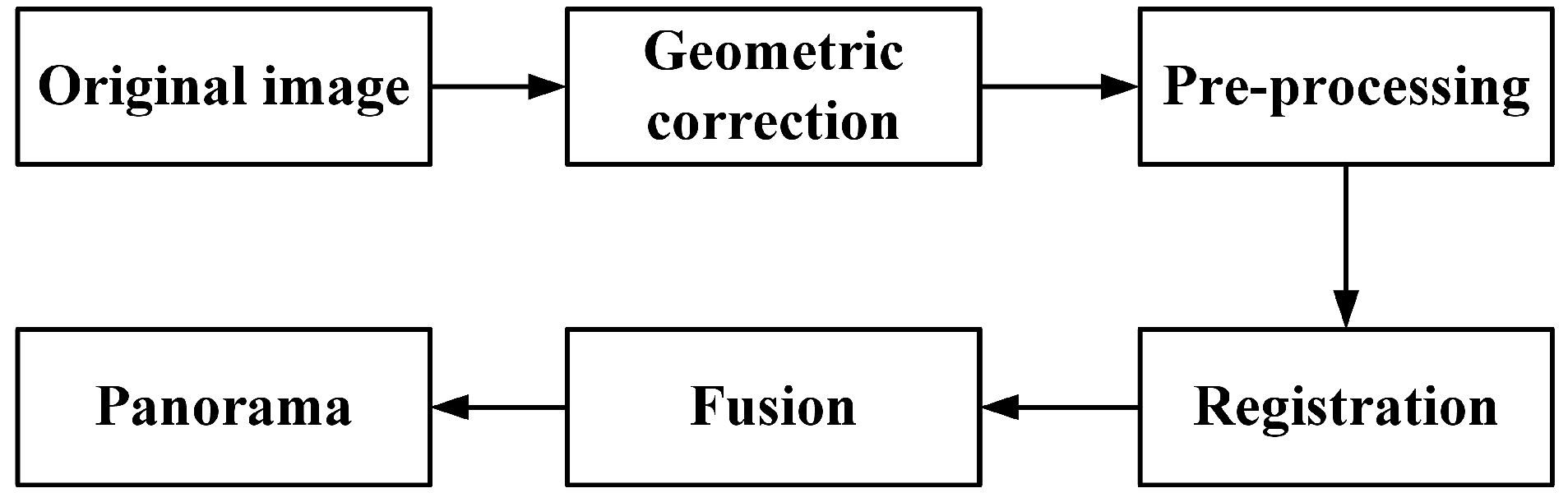

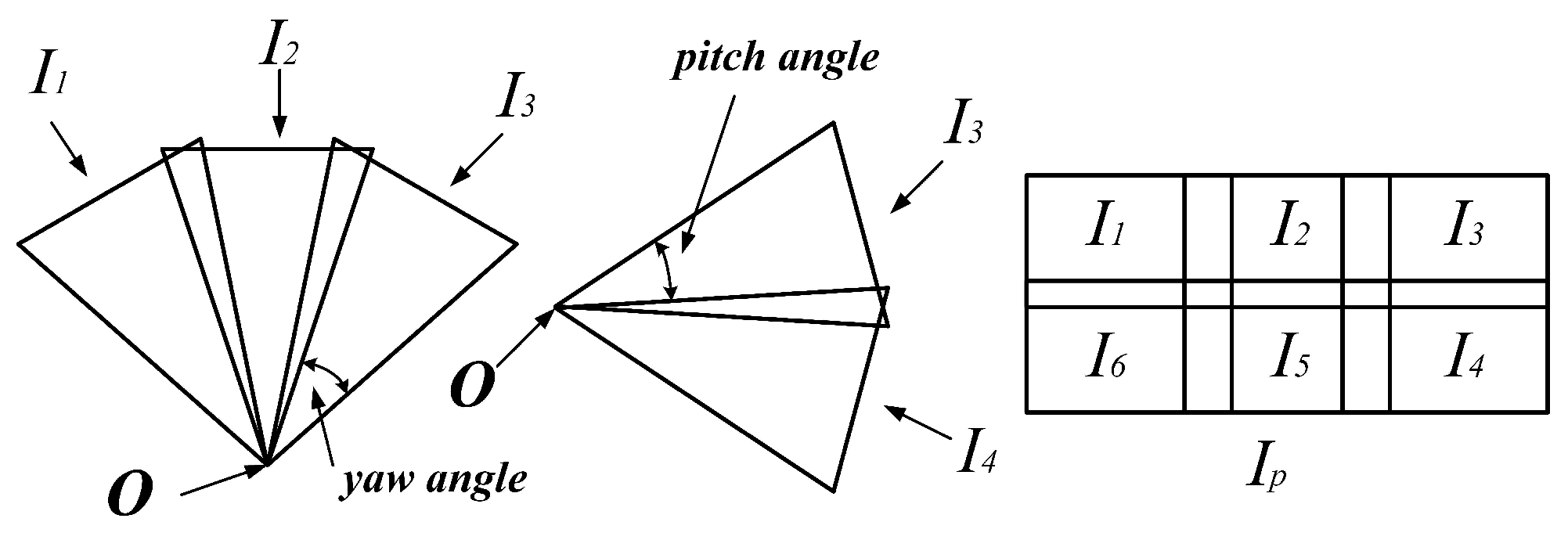

2. Methodology Used in Panoramic Mosaics

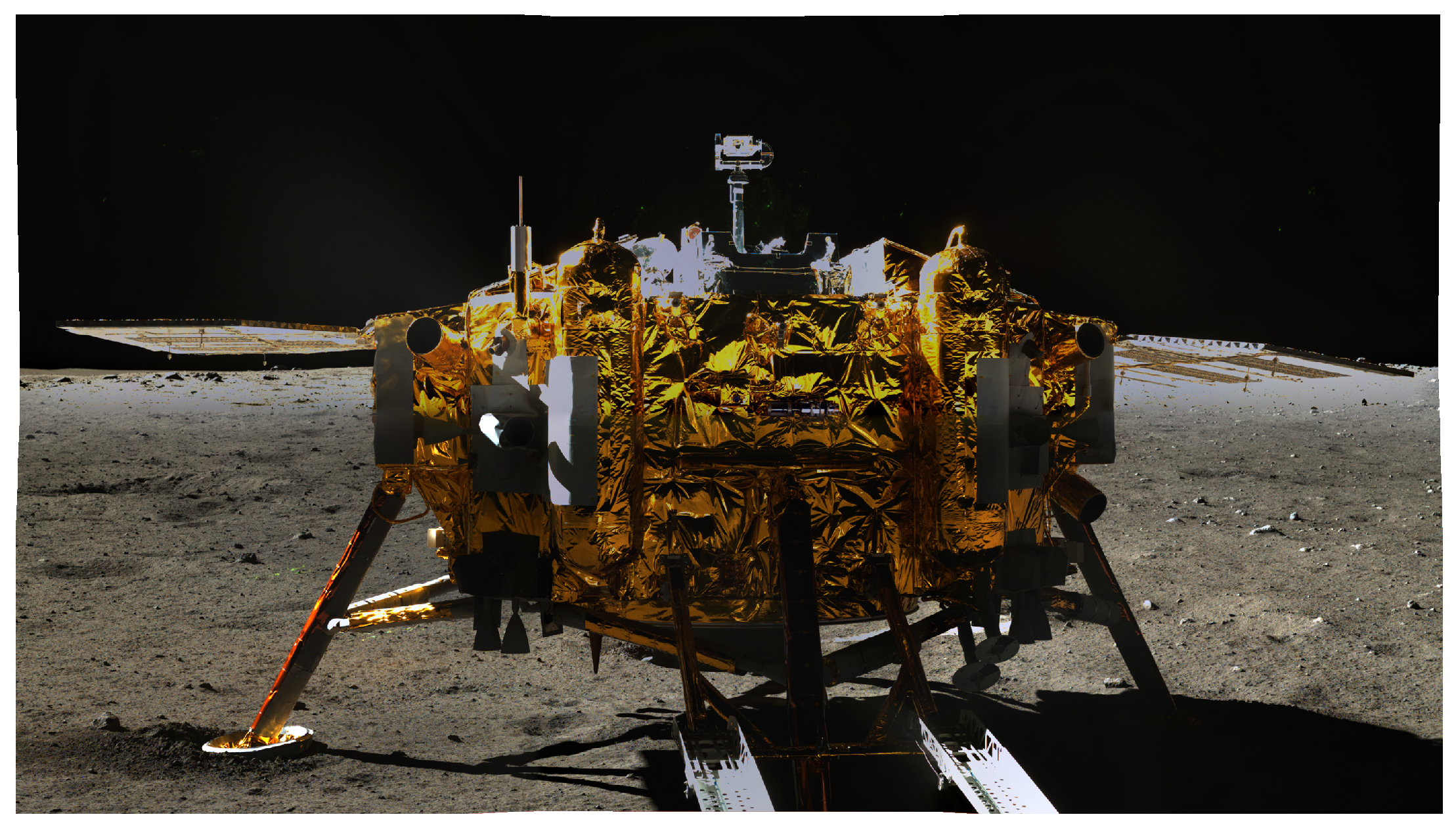

2.1. Geometric Correction

2.2. Pre-Processing

2.3. Registration

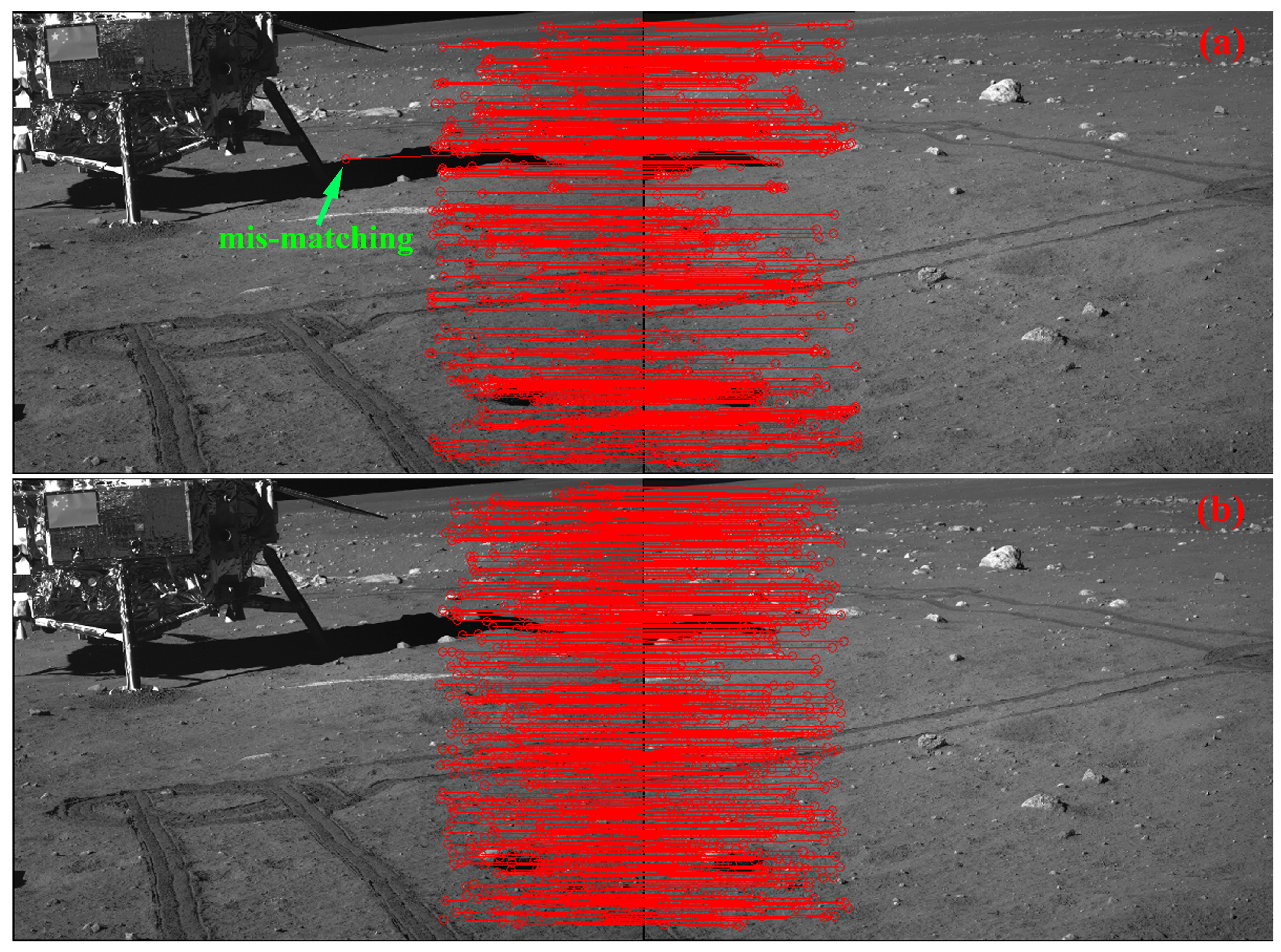

2.3.1. Feature Matching

- First step:

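- NNDR (nearest neighbor distance ratio) matching is applied in the initial step. For a feature point $p$ in one image, let $q_1$ and $q_2$ be its nearest and second-nearest neighbors in the adjacent image, measured by descriptor distance $d(\cdot, \cdot)$. If $d(p, q_1)/d(p, q_2) < t$, then $p$ and $q_1$ are matched, where $t$ is the threshold.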

- Second step:

- False matches usually remain after NNDR-based matching. Let $n$ be the number of corresponding points obtained from the initial matching, and sort them by their distance ratio from smallest to largest: the smaller the ratio, the more precise the match. Therefore, only the leading matches in this ordering are taken as correct.
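The two matching steps above can be sketched in plain Python. This is a minimal illustration, not the authors' implementation: it assumes Euclidean distance between descriptor vectors (as is usual for SIFT and SURF) and a brute-force nearest-neighbor search, and the function names `nndr_match` and `keep_best` and the cut-off `k` are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

# A descriptor is a fixed-length vector of floats (e.g. 128-D for SIFT, 64-D for SURF).
Descriptor = Sequence[float]
Match = Tuple[int, int, float]  # (index in image A, index in image B, distance ratio)


def nndr_match(desc_a: List[Descriptor], desc_b: List[Descriptor], t: float) -> List[Match]:
    """Step 1: keep a match only if d(nearest) / d(second nearest) < t."""
    if len(desc_b) < 2:
        raise ValueError("NNDR needs at least two candidate descriptors in image B")
    matches: List[Match] = []
    for i, da in enumerate(desc_a):
        # Brute-force Euclidean distances to every descriptor in image B, ascending.
        ranked = sorted((math.dist(da, db), j) for j, db in enumerate(desc_b))
        (d1, j1), (d2, _) = ranked[0], ranked[1]
        if d2 == 0.0:
            continue  # two identical nearest candidates: the ratio is undefined
        ratio = d1 / d2
        if ratio < t:
            matches.append((i, j1, ratio))
    return matches


def keep_best(matches: List[Match], k: int) -> List[Match]:
    """Step 2: sort by distance ratio (smallest first) and keep the first k matches."""
    return sorted(matches, key=lambda m: m[2])[:k]


if __name__ == "__main__":
    # Toy 2-D "descriptors" for illustration only.
    desc_a = [(0.0, 0.0), (5.0, 5.0), (9.0, 0.0), (4.5, 4.5)]
    desc_b = [(0.1, 0.0), (5.0, 5.2), (10.0, 0.0), (4.0, 4.0)]
    initial = nndr_match(desc_a, desc_b, t=0.6)
    print(initial)
    print(keep_best(initial, k=2))
```

With `t=0.6`, the fourth point in `desc_a` is rejected because its two nearest candidates are almost equally close (ratio of about 0.82); lowering the threshold trades the number of matches for reliability.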

2.3.2. Establishment and Optimization of Transformation Matrix

2.4. Fusion of Overlapped Images

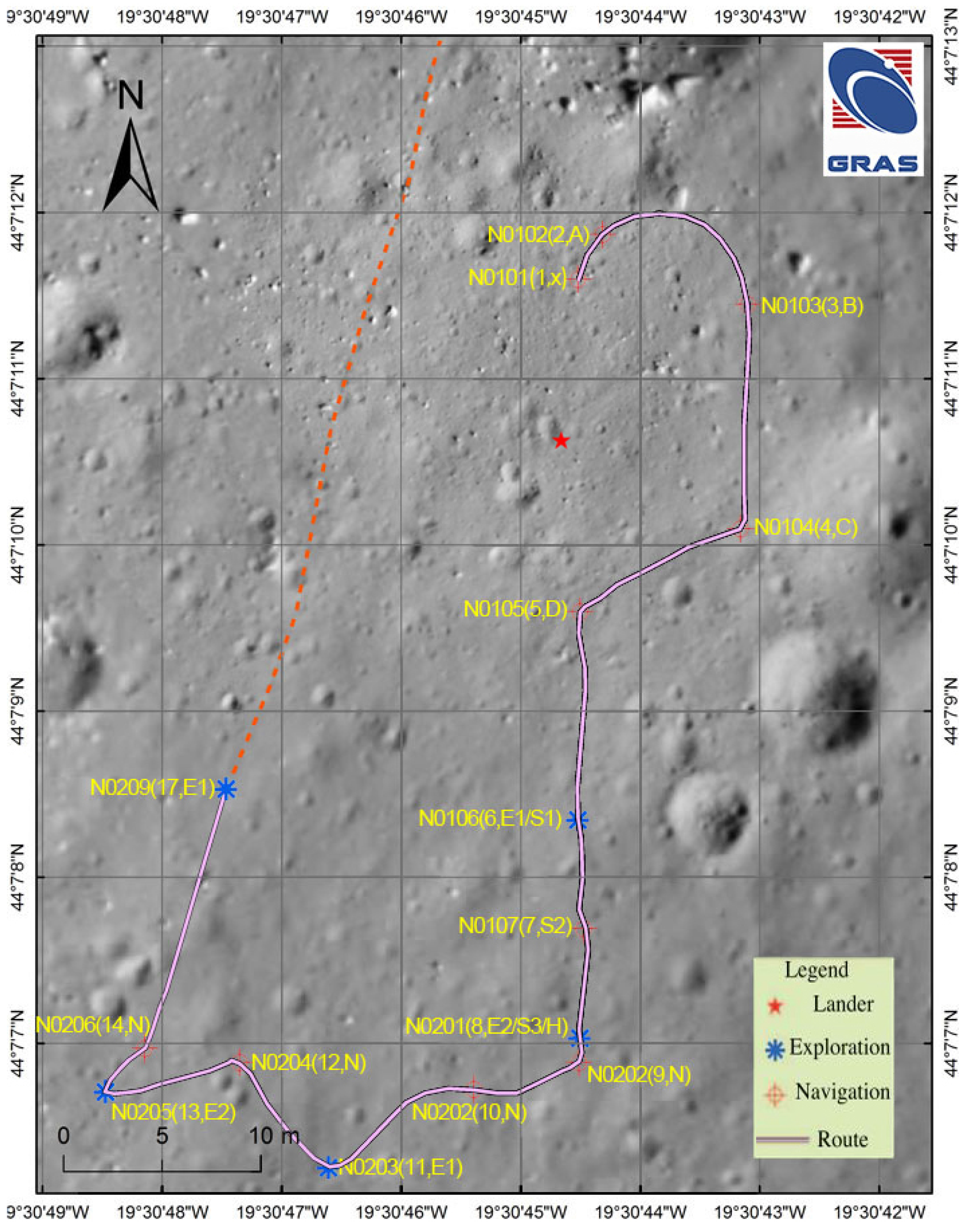

3. Experiments

3.1. Experiments of Feature Matching

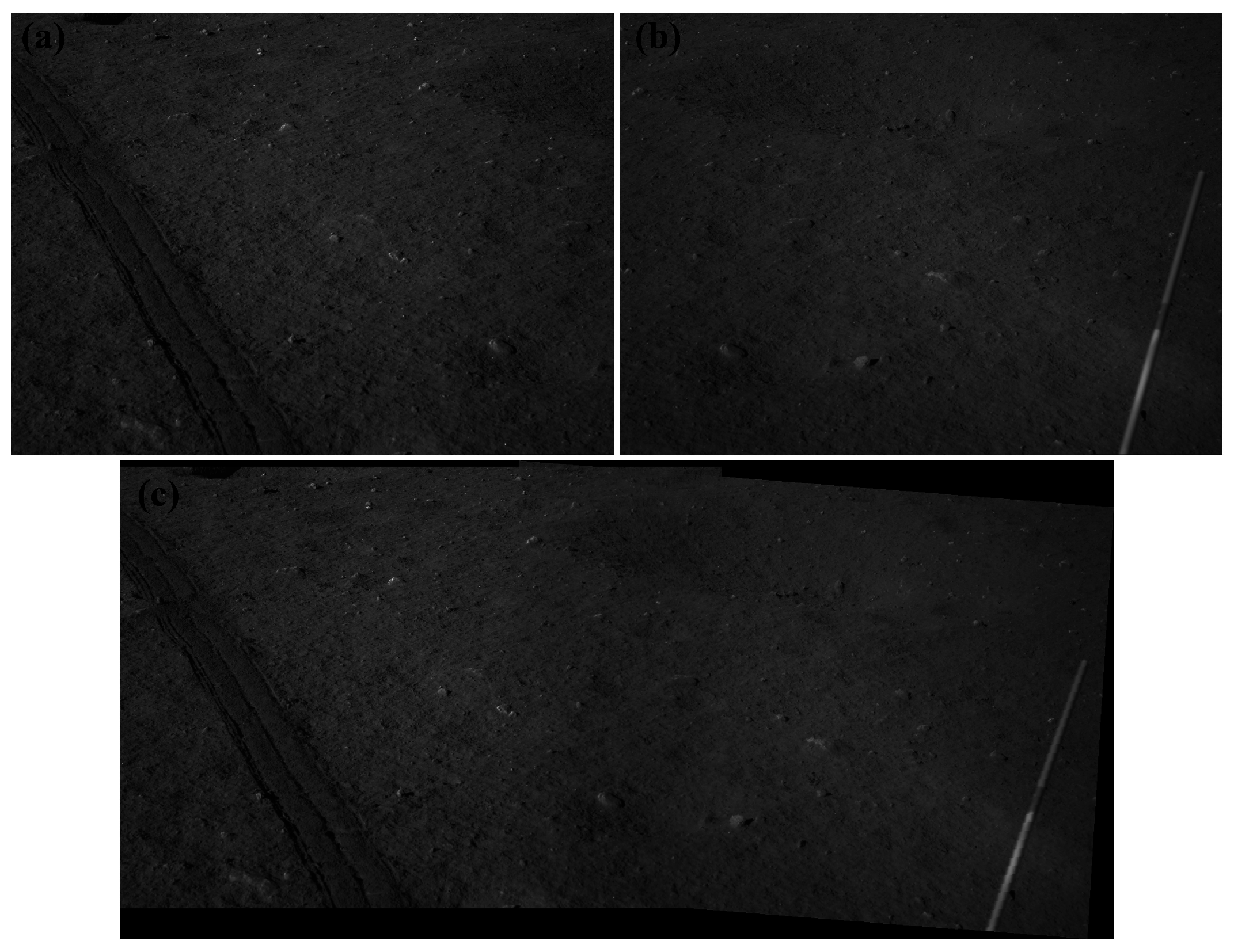

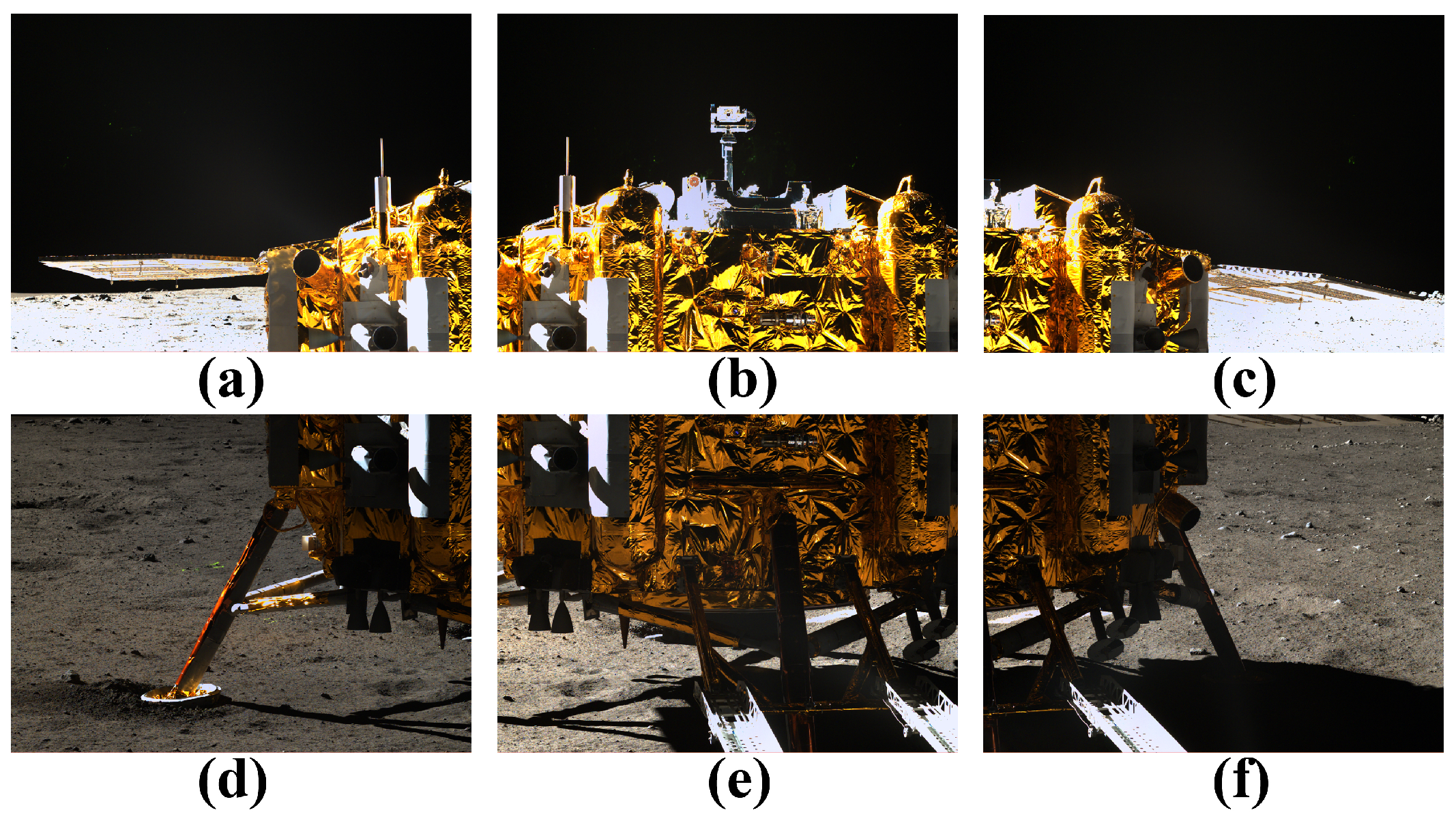

3.2. Experiments for Fusion

4. Results and Discussion

5. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest


| Characteristic | Value |
|---|---|
| Sensor model | IA-G3 DALSA |
| Number of pixels | 2352 × 1728 |
| Pixel size (μm) | 7.4 |
| Frame frequency | 62 |
| Spectral range (nm) | 420∼700 |
| Color | (R, G, B) |
| Baseline (mm) | 270 |
| Imaging mode | Color or panchromatic |
| Normal imaging distance (m) | 3∼∞ |
| Effective number of pixels | 1176 × 864 (color mode); 2352 × 1728 (panchromatic mode) |
| FOV (°) | 19.7 × 14.5 |
| Focal length (mm) | 50 |
| Quantization (bits) | 10 |
| S/N (dB) | ≥40 (maximum); ≥30 (albedo: 0.09; solar elevation: 30°) |
| Optical system static MTF | 0.33 |
| Weight (kg) | 0.64 |
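As a consistency check on the PCAM specification table, the field of view follows from the sensor extent and the focal length under an ideal pinhole model, FOV = 2·arctan(N·p / (2f)). The sketch below assumes the 7.4 value is the pixel pitch in micrometres and ignores lens distortion; the function name `fov_deg` is hypothetical. With N = 2352 and 1728 pixels and f = 50 mm it gives roughly 19.75° and 14.57°, close to the listed 19.7 × 14.5, which supports reading the FOV in degrees.

```python
import math


def fov_deg(n_pixels: int, pixel_size_um: float, focal_length_mm: float) -> float:
    """Full angular field of view (degrees) along one sensor axis, ideal pinhole model."""
    half_extent_mm = n_pixels * pixel_size_um / 1000.0 / 2.0  # half the sensor size in mm
    return math.degrees(2.0 * math.atan(half_extent_mm / focal_length_mm))


# Values from the specification table (full panchromatic frame, 50 mm focal length).
horizontal = fov_deg(2352, 7.4, 50.0)
vertical = fov_deg(1728, 7.4, 50.0)
print(f"FOV approx. {horizontal:.2f} deg x {vertical:.2f} deg")
```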

| Algorithm | a | b | c | d |
|---|---|---|---|---|
| SIFT | 5445 | 3496 | 874 | 753 |
| SURF | 4955 | 4911 | 3267 | 2430 |

| NNDR threshold | a–b (SIFT) | a–b (SURF) | c–d (SIFT) | c–d (SURF) |
|---|---|---|---|---|
| 0.70 | 890 (F) | 786 (F) | 242 (F) | 573 (F) |
| 0.65 | 855 (F) | 754 (F) | 226 (F) | 546 (F) |
| 0.60 | 813 (F) | 725 (F) | 214 (F) | 513 (F) |
| 0.55 | 767 (F) | 682 (F) | 198 (T) | 471 (T) |
| 0.50 | 716 (F) | 636 (F) | 182 (T) | 411 (T) |
| 0.45 | 652 (F) | 580 (T) | 163 (T) | 349 (T) |
| 0.40 | 570 (F) | 493 (T) | 139 (T) | 282 (T) |
| 0.35 | 474 (F) | 388 (T) | 111 (T) | 211 (T) |
| 0.30 | 365 (F) | 277 (T) | 87 (T) | 127 (T) |
| 0.25 | 261 (T) | 160 (T) | 61 (T) | 70 (T) |
| 0.20 | 151 (T) | 75 (T) | 35 (T) | 35 (T) |

| Original image | Adjacent image | R-channel MSE | R-channel PSNR (dB) | G-channel MSE | G-channel PSNR (dB) | B-channel MSE | B-channel PSNR (dB) |
|---|---|---|---|---|---|---|---|
| a | b | 14.6489 | 36.4727 | 13.5485 | 36.8119 | 8.3933 | 38.8915 |
| b | c | 18.2242 | 35.5243 | 18.6207 | 35.4308 | 8.0644 | 39.0651 |
| d | e | 42.3670 | 31.8605 | 38.7301 | 32.2503 | 29.6969 | 33.4037 |
| e | f | 32.7143 | 32.9834 | 26.7355 | 33.8599 | 12.0344 | 37.3266 |
| d | a | 5.9929 | 40.3545 | 4.7573 | 41.3572 | 2.5790 | 44.0163 |
| e | b | 30.8825 | 33.2337 | 26.3656 | 33.9204 | 4.0549 | 42.0510 |
| f | c | 4.5585 | 41.5425 | 3.8591 | 42.2660 | 1.8369 | 45.4899 |
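The paired values in each channel of the fusion table are consistent with a mean squared error (MSE) followed by the corresponding peak signal-to-noise ratio, PSNR = 10·log10(255²/MSE), i.e., an 8-bit peak value of 255. For example, an MSE of 14.6489 gives about 36.47 dB, matching the a–b R-channel entry. The sketch below shows that relationship; the function names are hypothetical, and because the pixel set over which the MSE is evaluated is not specified in this section, the inputs to `mse` are left generic.

```python
import math
from typing import Sequence


def mse(a: Sequence[float], b: Sequence[float]) -> float:
    """Mean squared error between two equally sized sets of pixel values."""
    if len(a) != len(b) or not a:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


def psnr_from_mse(err: float, peak: float = 255.0) -> float:
    """PSNR in dB; the default peak of 255 assumes 8-bit pixel values."""
    if err == 0:
        return math.inf  # identical inputs: no noise
    return 10.0 * math.log10(peak ** 2 / err)


# R-channel MSE for the image pair a-b, taken from the fusion table.
print(round(psnr_from_mse(14.6489), 4))
```

Applying `psnr_from_mse` to any MSE entry in the table reproduces the adjacent PSNR value to within about 0.001 dB.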

© 2016 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC-BY) license (http://creativecommons.org/licenses/by/4.0/).


Wu, F.; Wang, X.; Wei, H.; Liu, J.; Liu, F.; Yang, J. Panoramic Mosaics from Chang’E-3 PCAM Images at Point A. Remote Sens. 2016, 8, 812. https://doi.org/10.3390/rs8100812

Wu F, Wang X, Wei H, Liu J, Liu F, Yang J. Panoramic Mosaics from Chang’E-3 PCAM Images at Point A. Remote Sensing. 2016; 8(10):812. https://doi.org/10.3390/rs8100812

Chicago/Turabian style: Wu, Fanlu, Xiangjun Wang, Hong Wei, Jianjun Liu, Feng Liu, and Jinsheng Yang. 2016. "Panoramic Mosaics from Chang’E-3 PCAM Images at Point A" Remote Sensing 8, no. 10: 812. https://doi.org/10.3390/rs8100812