As mentioned, the RDCA detects CI areas from MTSAT-2 images using only one threshold value for each interest field [35]. Such simple criteria of the interest fields might not be sufficient to accurately detect convective clouds driven by various weather systems, which can have diverse values for the interest fields. In this study, the new criteria of the interest fields were assessed to improve the CI detection performance and efficiency using COMS MI data. Binary classification was applied to detect CI from COMS MI data, in which the interest fields of MTSAT-2 RDCA were used as input variables.

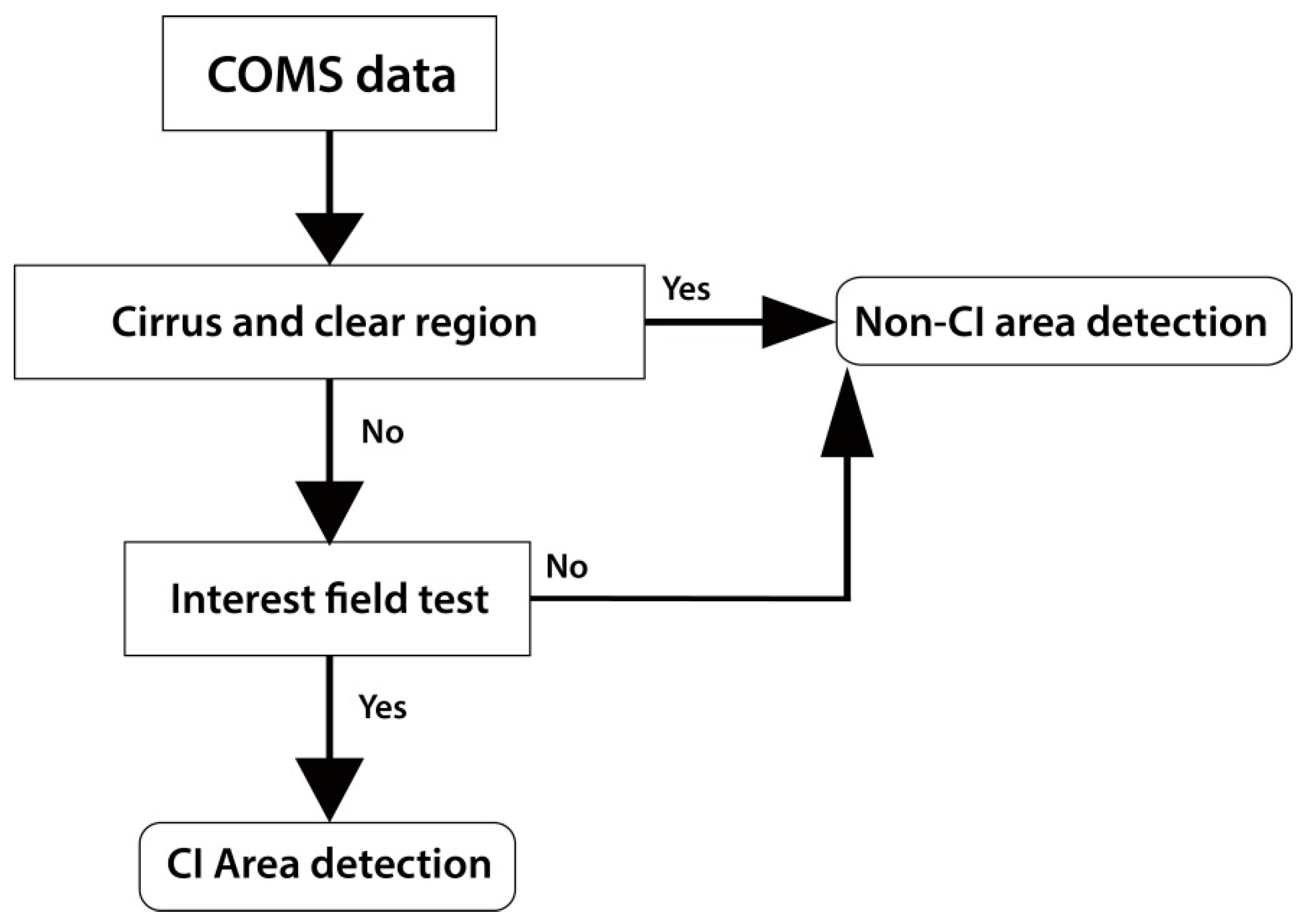

Figure 1 shows the processing flow of the binary classification based on machine learning approaches. This section describes the interest fields of RDCA and three machine learning approaches used for the binary classification of CI and non-CI. The method for collecting samples for classification and validation is also described in this section.

Figure 1. Processing flow of the binary classification of COMS MI images for CI detection based on machine learning approaches.

3.1. Convective Initiation Interest Fields for COMS MI

The interest fields used in RDCA (Table 2) can be used to develop a CI detection model for COMS due to the large similarity of spectral channels between the COMS MI and MTSAT-2 Imager. The VIS channel of MI has 1 km spatial resolution, while the other spectral channels have 4 km resolution. The different spatial resolutions make it difficult to combine the spectral channels. In order to efficiently process data, the COMS MI images were downscaled to 1 km resolution using bilinear interpolation.
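The resampling step can be illustrated with a minimal sketch, assuming NumPy and a factor of 4 between the 4 km and 1 km grids. The function name `bilinear_upsample`, the pixel-centre alignment, and the repetition of border values are assumptions made for illustration and are not taken from the processing chain used in this study.

```python
import numpy as np

def bilinear_upsample(img, factor=4):
    """Resample a 2-D array to `factor` times finer spacing by bilinear interpolation."""
    img = np.asarray(img, dtype=float)
    rows, cols = img.shape
    # Centres of the fine pixels expressed in coarse-pixel coordinates,
    # clipped so that border pixels repeat the nearest coarse value.
    r = np.clip((np.arange(rows * factor) + 0.5) / factor - 0.5, 0, rows - 1)
    c = np.clip((np.arange(cols * factor) + 0.5) / factor - 0.5, 0, cols - 1)
    r0 = np.floor(r).astype(int)
    c0 = np.floor(c).astype(int)
    r1 = np.minimum(r0 + 1, rows - 1)
    c1 = np.minimum(c0 + 1, cols - 1)
    wr = (r - r0)[:, None]  # vertical interpolation weights
    wc = (c - c0)[None, :]  # horizontal interpolation weights
    top = img[r0][:, c0] * (1 - wc) + img[r0][:, c1] * wc
    bottom = img[r1][:, c0] * (1 - wc) + img[r1][:, c1] * wc
    return top * (1 - wr) + bottom * wr

# Hypothetical 2 x 2 patch of IR1 TB (K) on the 4 km grid.
ir1_4km = np.array([[280.0, 284.0], [288.0, 292.0]])
ir1_1km = bilinear_upsample(ir1_4km, factor=4)
```

Bilinear interpolation never produces values outside the range of the input, so the resampled TB field stays within the original minimum and maximum.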

Clear sky and thin cloudy areas were removed from MI images using the reflectance at VIS, the TB at IR1, and the difference of TBs between the IR1 and IR2 channels. The reflectance at VIS varies with solar zenith angle, which produces incorrect interest fields related to the VIS channel. Therefore, the reflectance at VIS must be normalized to the solar zenith angle. In this study, the reflectance at VIS was normalized using the solar zenith angle. A criterion of IR1 TB higher than 288.15 K was used to mask areas of clear sky, while criteria of reflectance > 45% and TB difference between IR1 and IR2 < 2 K were used to remove thin clouds and cirrus [35]. These criteria are essential to mask clear sky and thin cloudy areas, but work only in the summer season in the absence of snow [35]. Thus, the CI detection models developed in this study can be applied only to COMS MI images acquired under such conditions.
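The masking step can be sketched as follows, under several stated assumptions. The normalization is taken to be division by the cosine of the solar zenith angle, which is a common choice but is not confirmed by the text here. The 45% threshold is assumed to apply to the normalized reflectance, the TB difference is assumed to be IR1 minus IR2, and pixels are assumed to be retained when they satisfy the thick-cloud criteria. All function and variable names are hypothetical.

```python
import numpy as np

def normalize_reflectance(refl_percent, sza_deg):
    """Assumed normalization: reflectance divided by cos(solar zenith angle)."""
    return refl_percent / np.cos(np.radians(sza_deg))

def cloud_mask(refl_percent, sza_deg, tb_ir1, tb_ir2):
    """Return True where a pixel is kept as a candidate (thick) cloud pixel."""
    refl_n = normalize_reflectance(refl_percent, sza_deg)
    clear_sky = tb_ir1 > 288.15  # IR1 TB threshold (K) from the text
    # Assumed reading: thick clouds are bright and have a small IR1-IR2 difference.
    thick_cloud = (refl_n > 45.0) & ((tb_ir1 - tb_ir2) < 2.0)
    return ~clear_sky & thick_cloud

# Four hypothetical pixels: thick cloud, clear sky, dark thin cloud, cirrus-like.
refl = np.array([30.0, 60.0, 20.0, 60.0])
sza = np.array([60.0, 30.0, 0.0, 30.0])
tb_ir1 = np.array([250.0, 295.0, 240.0, 250.0])
tb_ir2 = np.array([249.5, 294.0, 239.5, 246.0])
mask = cloud_mask(refl, sza, tb_ir1, tb_ir2)
```

Only the first pixel passes: the second is warmer than the clear-sky threshold, the third is too dark, and the fourth has an IR1 and IR2 difference of 4 K.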

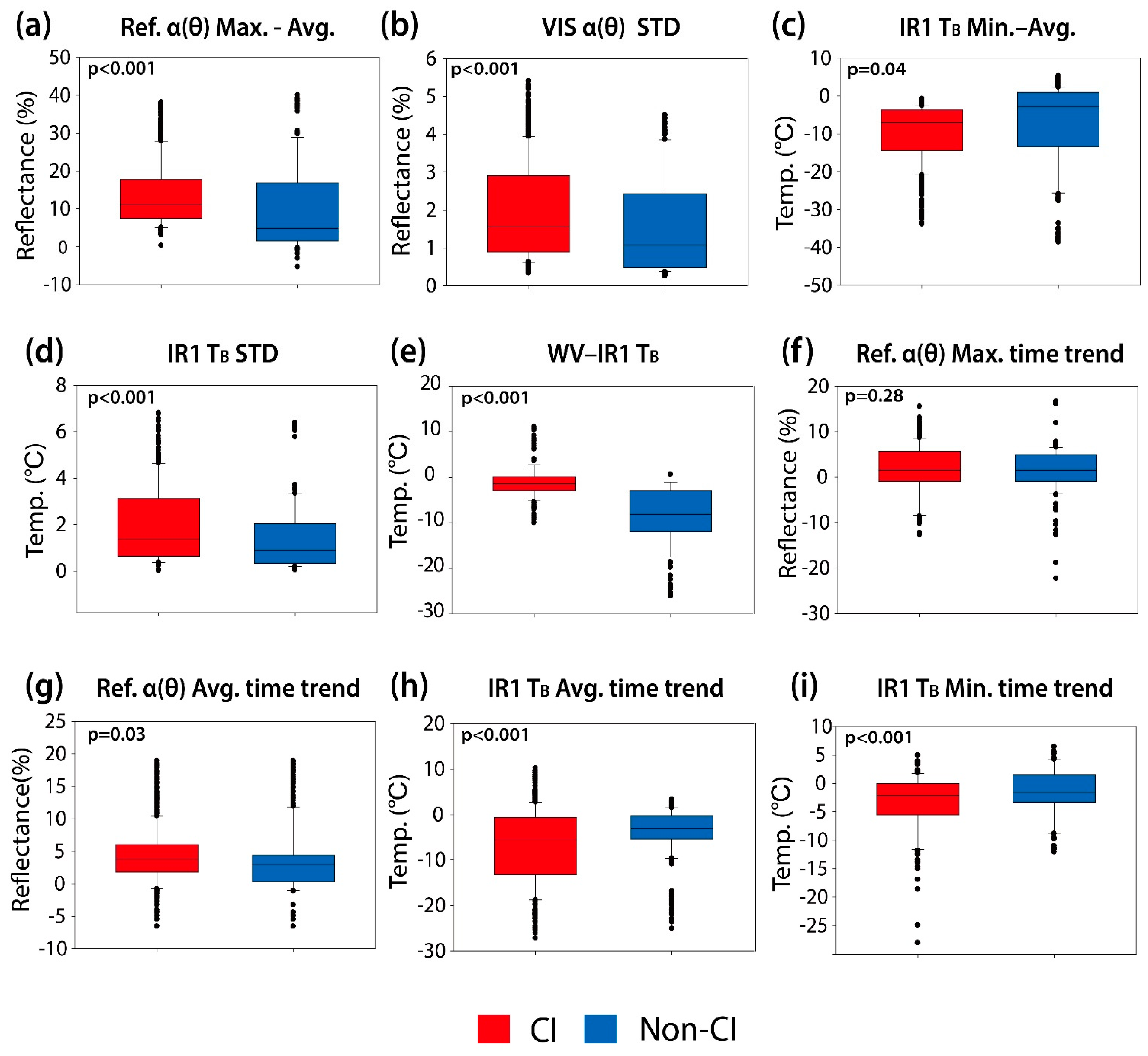

The interest fields in Table 2 are closely related to the growth of clouds. The difference between the maximum and average of VIS reflectance (VIS Max.–Avg.), the difference between the minimum and average TB at IR1 (IR1 TB Min.–Avg.), and the standard deviations of VIS reflectance and IR1 TB (VIS STD and IR1 TB STD) represent cloud roughness, which is strongly correlated with the growth of clouds [3,35]. The maximum of VIS reflectance and the minimum of IR1 TB were calculated using a 7 × 7 pixel window, while the averages of VIS reflectance and IR1 TB and their standard deviations were calculated based on a 21 × 21 pixel window. This follows the methods used in the MTSAT-2 RDCA [35]. VIS Max.–Avg. and IR1 TB Min.–Avg. represent minute characteristics of clouds developing in the vertical direction in a formative stage [35]. Clouds developed by strong local upward flow show higher reflectance and lower temperature than their surrounding clouds, resulting in increasing VIS Max.–Avg. and decreasing IR1 TB Min.–Avg. in CI areas [35]. VIS STD and IR1 TB STD indicate cloud-top asperity, which becomes apparent as clouds develop vertically [35]. The difference between TBs at WV and IR1 (WV–IR1 TB) is an indicator of the cloud-top height relative to the tropopause [3,35], which makes it possible to estimate the development stage of the clouds. As the surface is typically warmer than the upper troposphere, the value of WV–IR1 TB is usually negative. The values of WV–IR1 TB remain negative but approach zero when cumulus clouds evolve into convective ones [3,24,35].
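The window-based interest fields described above can be sketched with NumPy as follows. The 7 × 7 and 21 × 21 window sizes come from the text; the edge padding at image borders, the function names, and the dictionary keys are assumptions for illustration only.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def window_stat(img, size, func):
    """Apply `func` over a size x size moving window centred on each pixel."""
    pad = size // 2
    padded = np.pad(img, pad, mode="edge")  # border handling is an assumption
    windows = sliding_window_view(padded, (size, size))
    return func(windows, axis=(-2, -1))

def static_interest_fields(vis, tb_ir1, tb_wv):
    """Compute interest fields 1 to 5 of Table 2 from co-registered 1 km images."""
    vis_avg = window_stat(vis, 21, np.mean)
    ir1_avg = window_stat(tb_ir1, 21, np.mean)
    return {
        "vis_max_minus_avg": window_stat(vis, 7, np.max) - vis_avg,
        "vis_std": window_stat(vis, 21, np.std),
        "ir1_min_minus_avg": window_stat(tb_ir1, 7, np.min) - ir1_avg,
        "ir1_std": window_stat(tb_ir1, 21, np.std),
        "wv_minus_ir1": tb_wv - tb_ir1,
    }

# Hypothetical scene: uniform cloud deck with one bright, cold turret at the centre.
vis = np.full((30, 30), 40.0)
tb_ir1 = np.full((30, 30), 270.0)
tb_wv = np.full((30, 30), 235.0)
vis[15, 15] = 80.0
tb_ir1[15, 15] = 240.0
fields = static_interest_fields(vis, tb_ir1, tb_wv)
```

At the turret, VIS Max.–Avg. is strongly positive and IR1 TB Min.–Avg. is strongly negative, which is the signature the text associates with CI areas.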

Table 2. Convective initiation interest fields used in this study.

| ID | Interest Fields | Physical Characteristics |
|---|---|---|
| 1 | VIS Max.–Avg. difference | Detection of roughness which is observed at rising cloud top |
| 2 | VIS STD | Detection of roughness which is observed at rising cloud top |
| 3 | IR1 TB Min.–Avg. difference | Detection of roughness which is observed at rising cloud top |
| 4 | IR1 TB STD | Detection of roughness which is observed at rising cloud top |
| 5 | WV–IR1 TB difference | Detection of water above cloud top |
| 6 | VIS Max. time trend | Presumption of development level of clouds |
| 7 | VIS Avg. time trend | Presumption of development level of clouds |
| 8 | IR1 TB Avg. time trend | Presumption of development level of clouds |
| 9 | IR1 TB Min. time trend | Presumption of development level of clouds |

The time trends of the maximum and average of VIS reflectance (VIS Max. time trend and VIS Avg. time trend, respectively) indicate the growth of clouds over time, while those of the minimum and average of IR1 TB (IR1 TB Min. time trend and IR1 TB Avg. time trend, respectively) represent the changes in cloud-top height over time [3,35]. To calculate these time-dependent interest fields, it is necessary to trace the motion of cloud objects. Atmospheric motion vectors have been widely used for tracking clouds [3,24,38]. However, since COMS MI provides atmospheric motion vectors only every hour, they are not appropriate for tracing cloud objects every 15 min. Mecikalski et al. [39] proposed a simple cloud object tracking method using two consecutive images obtained over the same area. We adopted this method [39] to trace moving cloud objects from two consecutive IR1 images and then calculated the 15 min difference of the time-dependent interest fields.

3.2. Machine Learning Approaches for CI Detection

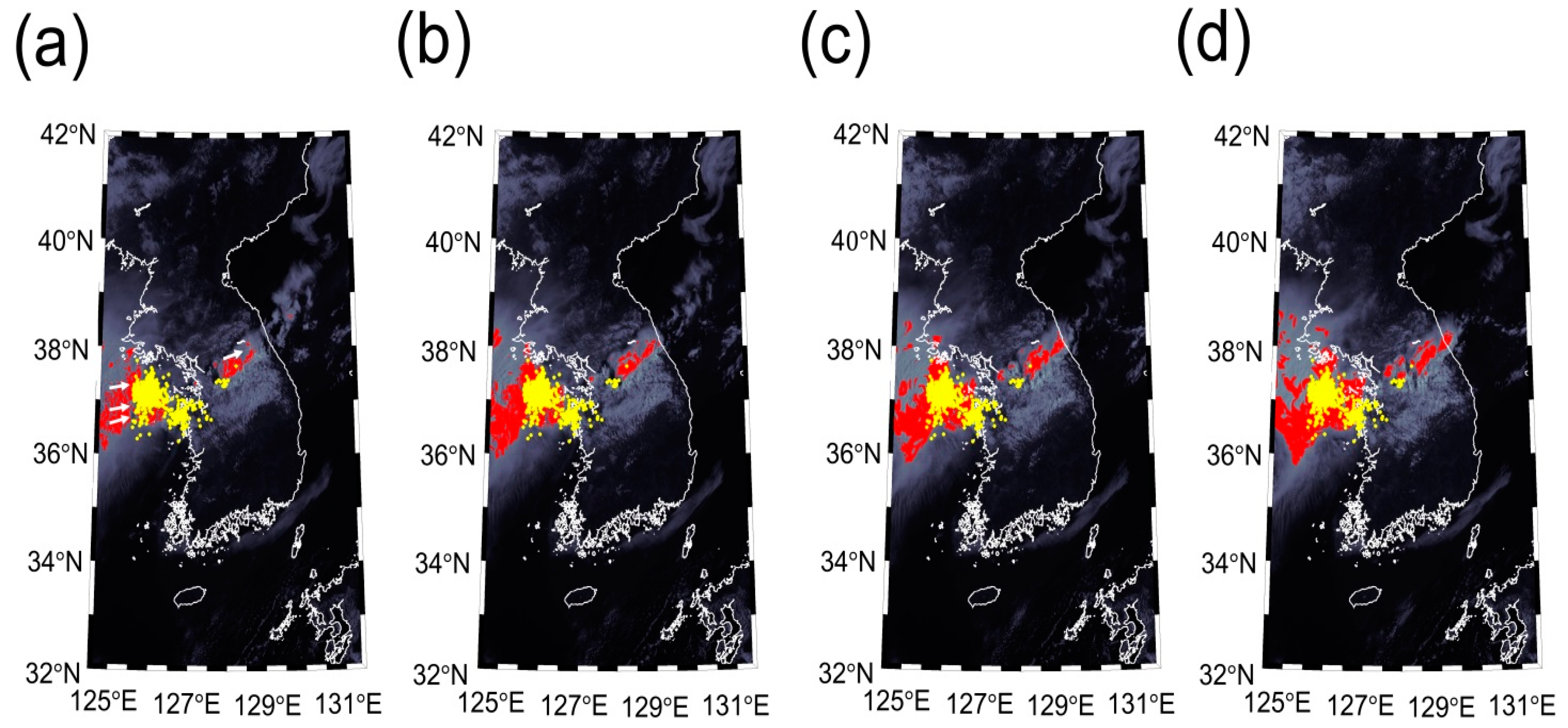

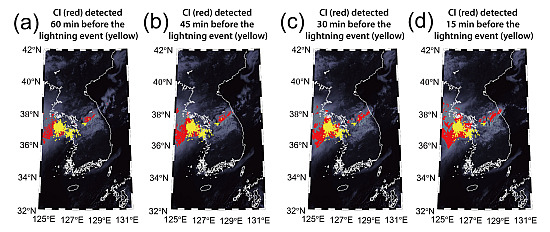

The occurrence of CI was set as the dependent variable for binary classification. For eight cases of CI over South Korea (ID CI1–CI8 in Table 3), the areas of convective clouds (CI areas) were manually delineated from cloudy areas observed in IR1 images of MI by referring to the locations and times of lightning. CI areas were defined in this study as clouds within a 16 × 16 km window centered on the location of the first detection of lightning. The other cloudy areas were considered as non-CI areas. To extract the samples of the interest fields, each CI area was visually tracked in the IR1 images obtained 15–75 min before the image acquired nearest to the time of the lightning occurrence, rather than with the cloud tracking method of Mecikalski et al. [39]. To train and validate machine learning-based classification models, a total of 1072 samples (i.e., pixels) for the interest fields (624 CI and 448 non-CI samples) were selected from the MI images. Eighty percent of the samples for each class (498 samples for CI and 360 samples for non-CI) were used as a training dataset, while the remaining samples (124 samples for CI and 90 samples for non-CI) were used to validate the models.

Table 3. Cases of convective initiations used for development and validation of machine learning based CI detection models.

| ID | Date | Time (hh:mm, UTC) | Source of CI |
|---|---|---|---|
| CI1 | 3 July 2011 | 02:00 | Frontal cyclone |
| CI2 | 3 August 2011 | 01:45 | Frontal cyclone |
| CI3 | 23 August 2012 | 03:45 | Frontal cyclone |
| CI4 | 14 July 2013 | 04:15 | Frontal cyclone |
| CI5 | 17 May 2012 | 04:45 | Unstable atmosphere |
| CI6 | 9 August 2012 | 04:30 | Unstable atmosphere |
| CI7 | 5 July 2013 | 04:00 | Unstable atmosphere |
| CI8 | 30 June 2014 | 07:30 | Unstable atmosphere |
| CI9 | 3 August 2011 | 01:45 | Frontal cyclone |
| CI10 | 27 May 2012 | 04:45 | Unstable atmosphere |
| CI11 | 10 August 2013 | 01:00 | Frontal cyclone |
| CI12 | 30 June 2014 | 07:30 | Unstable atmosphere |

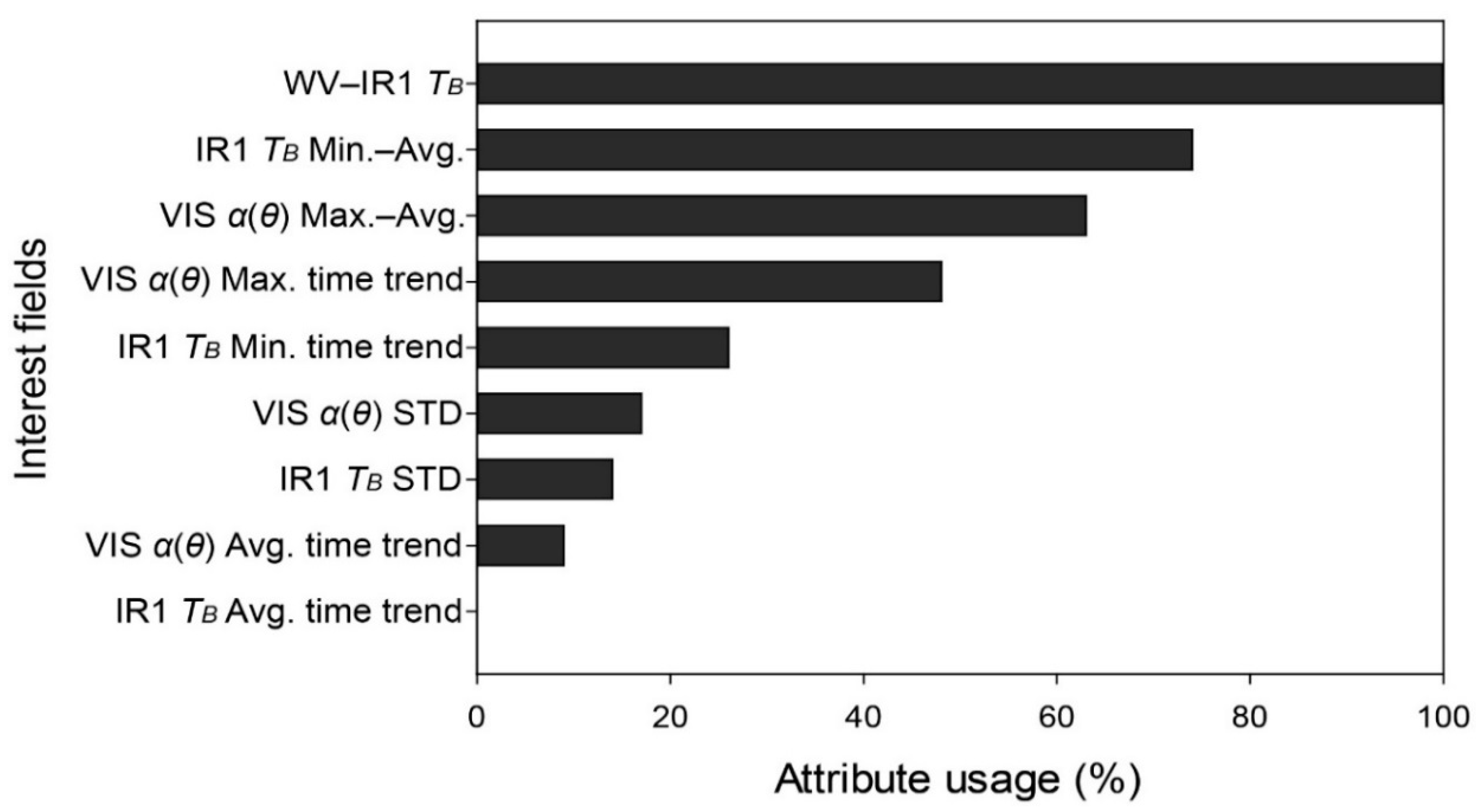

Machine learning, a novel approach used in various remote sensing applications including land use/land cover classification [40,41,42,43,44], vegetation mapping [42,45,46], and change detection [42,47,48], was used for binary classification of CI and non-CI areas from COMS MI data. Three machine learning approaches were used in this study: decision trees (DT), random forest (RF), and support vector machines (SVM).

To carry out decision tree-based classification, See5 developed by RuleQuest Research, Inc. [49] was used in this study. See5 uses repeated binary splits based on an entropy-related metric to develop a tree. A generated tree can be converted into a series of if-then rules, which makes it easy to analyze the classification results [49,50].

RF builds a set of uncorrelated trees based on Classification and Regression Trees (CART) [51], which is a rule-based decision tree. To overcome the well-known limitation of CART that classification results largely depend on the configuration and quality of training samples [52], numerous independent trees are grown by randomly selecting a subset of training samples for each tree and a subset of splitting variables at each node of the tree. After the learning process, a final conclusion is drawn from the independent decision trees by using either a simple majority voting or a weighted majority voting strategy. In typical remote sensing applications, See5 and RF use samples extracted from remote sensing data as predictor variables to classify the samples into the target variable (e.g., class attributes in land cover mapping) [50]. In this study, RF was implemented using an add-on package in R software. See5 and RF in R software report the relative importance of input variables as attribute usage and mean decrease accuracy, respectively. Attribute usage shows how much each variable contributes to the rules [49], while mean decrease accuracy represents how much accuracy decreases when a variable is randomly permuted [51].

SVM is a supervised learning algorithm that divides training samples into separate categories by constructing hyperplanes in a multidimensional space, typically of a higher dimension than the original data, so that the samples become linearly separable. In its basic form, SVM assumes that the samples in multispectral data can be linearly separated in the input feature space. However, data points of different classes can overlap one another, leading to difficulty in linearly separating the samples. To solve this problem, SVM uses a set of mathematical functions, called kernels, to project data into a higher dimension [53]. Selection of a kernel function and its parameterization are crucial for successful implementation of SVM [54,55]. There are many kernel functions available, and radial basis functions (RBF) have been widely used for remote sensing applications [41,56,57]. In this study, the library for SVM (LIBSVM) software package [58] with an RBF kernel was adopted, and the parameters of the kernel were optimized through a grid-search algorithm in LIBSVM.
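The idea of an entropy-based binary split can be illustrated with a minimal sketch. Plain information gain is used here as a stand-in; it is not claimed to be the exact criterion implemented in See5, and the sample values of the interest field are hypothetical.

```python
import math
from collections import Counter

def entropy(labels):
    """Shannon entropy (bits) of a list of class labels."""
    n = len(labels)
    return -sum((k / n) * math.log2(k / n) for k in Counter(labels).values())

def information_gain(values, labels, threshold):
    """Entropy reduction obtained by splitting the samples at `threshold`."""
    left = [y for x, y in zip(values, labels) if x <= threshold]
    right = [y for x, y in zip(values, labels) if x > threshold]
    if not left or not right:
        return 0.0
    n = len(labels)
    children = len(left) / n * entropy(left) + len(right) / n * entropy(right)
    return entropy(labels) - children

def best_threshold(values, labels):
    """Try midpoints between sorted unique values; return the one with the highest gain."""
    candidates = sorted(set(values))
    midpoints = [(a + b) / 2 for a, b in zip(candidates, candidates[1:])]
    return max(midpoints, key=lambda t: information_gain(values, labels, t))

# Hypothetical IR1 TB Min.-Avg. values (K) for three CI and three non-CI samples.
ir1_min_minus_avg = [-12.0, -9.0, -8.0, -3.0, -2.0, -1.0]
labels = ["CI", "CI", "CI", "non-CI", "non-CI", "non-CI"]
split = best_threshold(ir1_min_minus_avg, labels)
gain = information_gain(ir1_min_minus_avg, labels, split)
```

In this toy case the two classes are perfectly separated, so the best split removes all of the one bit of class entropy. A split of this kind corresponds directly to an if-then rule on a single interest field.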

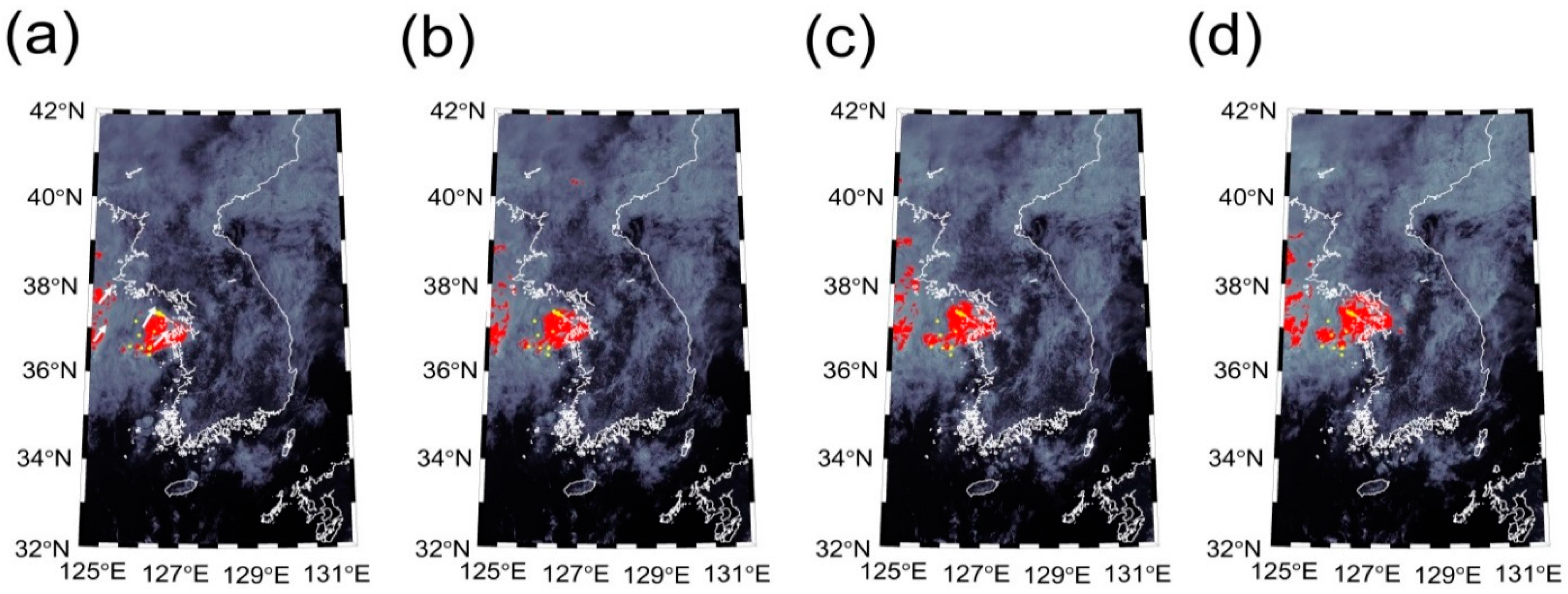

To evaluate the performance of the three machine learning models, the user’s accuracy, the producer’s accuracy, the overall accuracy, and the kappa coefficient were computed from a confusion matrix of the test dataset, a table that cross-tabulates classified and reference labels. Overall accuracy is derived by dividing the number of correctly classified samples by the total number of samples. User’s and producer’s accuracies show how well individual classes were classified. The producer’s accuracy (the complement of omission error) refers to the probability that a CI (or a non-CI) area is correctly classified as such, while the user’s accuracy (the complement of commission error) refers to the probability that a sample classified as CI (or non-CI) actually belongs to that class. The kappa coefficient, another criterion used for the assessment of classification results, measures the degree of agreement between classification and reference data while accounting for agreement occurring by chance. Moreover, the developed machine learning models were further validated using the four cases of CI (ID CI9–CI12 in Table 3), from which the probability of detection (POD), false alarm rate (FAR), and accuracy (ACC) were computed as follows [24]:

where H is the number of actual CI objects that were correctly classified as CI (i.e., hits), M is the number of CI objects that were incorrectly marked as non-CI (i.e., misses), FA is the number of non-CI objects that were incorrectly marked as CI (i.e., false alarms), and CN is the number of remaining objects that were correctly classified as non-CI (i.e., correct negatives). A cloud object is defined as a group of connected cloud pixels resulting from machine learning-based CI detection. As the TLDS lightning data used for validation contain the locations of lightning occurrences only, for each case day, the H, M, FA, and CN were counted from the CI detection results derived from the COMS MI images obtained 15–30, 30–45, 45–60, and 60–75 min before lightning occurrence based on cloud objects. In the MI images obtained at the nearest time of the lightning occurrence, the cloud objects including the position of lightning were regarded as the actual CI. Meanwhile, in the MI images obtained 15–75 min before the image acquired nearest to the time of the lightning occurrence, the distances from clouds to the location of lightning occurrence were calculated using hourly atmospheric motion vector products of COMS MI by assuming constant velocity and direction of cloud drift over 1 h. For each case day, atmospheric motion vectors of clouds were averaged to find a typical value of drifting velocity. In the MI images obtained 15–75 min before lightning occurrence, the cloud objects within a given distance from the location of lightning occurrence were regarded as the actual CI. Overall POD, FAR, and ACC were computed for each machine learning model using the H, M, FA, and CN of all case days.
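Both sets of scores can be sketched in a few lines of plain Python. The pixel-based metrics follow the definitions given in the text. For the object-based scores, the standard contingency-table forms POD = H / (H + M), FAR = FA / (H + FA), and ACC = (H + CN) / (H + M + FA + CN) are assumed here, and the counts are pooled over case days before the ratios are taken. All numbers below are invented for illustration and are not results of this study.

```python
def confusion_metrics(matrix):
    """matrix[i][j] = samples of reference class i that were classified as class j."""
    n = len(matrix)
    total = sum(sum(row) for row in matrix)
    diag = [matrix[i][i] for i in range(n)]
    row_tot = [sum(row) for row in matrix]                                # reference totals
    col_tot = [sum(matrix[i][j] for i in range(n)) for j in range(n)]     # classified totals
    overall = sum(diag) / total
    producers = [diag[i] / row_tot[i] for i in range(n)]
    users = [diag[j] / col_tot[j] for j in range(n)]
    # Agreement expected by chance from the marginal totals.
    expected = sum(r * c for r, c in zip(row_tot, col_tot)) / total ** 2
    kappa = (overall - expected) / (1 - expected)
    return overall, producers, users, kappa

def object_scores(cases):
    """cases: (H, M, FA, CN) tuples, one per case day; counts are pooled first."""
    h, m, fa, cn = (sum(col) for col in zip(*cases))
    pod = h / (h + m)
    far = fa / (h + fa)  # assumed form of FAR
    acc = (h + cn) / (h + m + fa + cn)
    return pod, far, acc

# Hypothetical confusion matrix (rows: reference CI, non-CI) and case-day counts.
overall, producers, users, kappa = confusion_metrics([[40, 10], [5, 45]])
pod, far, acc = object_scores([(8, 2, 4, 86), (6, 4, 2, 88)])
```

Pooling the counts before dividing weights each case day by its number of cloud objects, which differs from averaging the per-day scores.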

The lead time for the four case days, i.e., the time elapsed between the CI prediction and the beginning of actual CI, was calculated as a weighted average based on the H detected from the MI images obtained before lightning occurrence as follows:

where Ht is the number of H counted from the MI images obtained t minutes before lightning occurrence and n is the number of Ht. The lead time of each machine learning model was determined using Ht and n of all case days.
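One plausible reading of this weighted average is a hit-weighted mean of the lead times, sketched below. The normalization by the total number of hits and the use of a single representative lead time per 15 min bin are assumptions, since the exact form of the equation is not reproduced here. The hit counts are hypothetical.

```python
def weighted_lead_time(hits_by_lead):
    """hits_by_lead maps a representative lead time t (min) to the hit count Ht."""
    total_hits = sum(hits_by_lead.values())
    if total_hits == 0:
        return float("nan")  # no hits, so the lead time is undefined
    return sum(t * h for t, h in hits_by_lead.items()) / total_hits

# Hypothetical hit counts pooled over all case days.
hits = {15: 10, 30: 8, 45: 4, 60: 2}
lead_time_min = weighted_lead_time(hits)
```

With these counts, most hits occur at short lead times, so the weighted mean is pulled toward the lower end of the 15 to 60 min range.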