Integration of UAV-Based Photogrammetry and Terrestrial Laser Scanning for the Three-Dimensional Mapping and Monitoring of Open-Pit Mine Areas

Abstract

1. Introduction

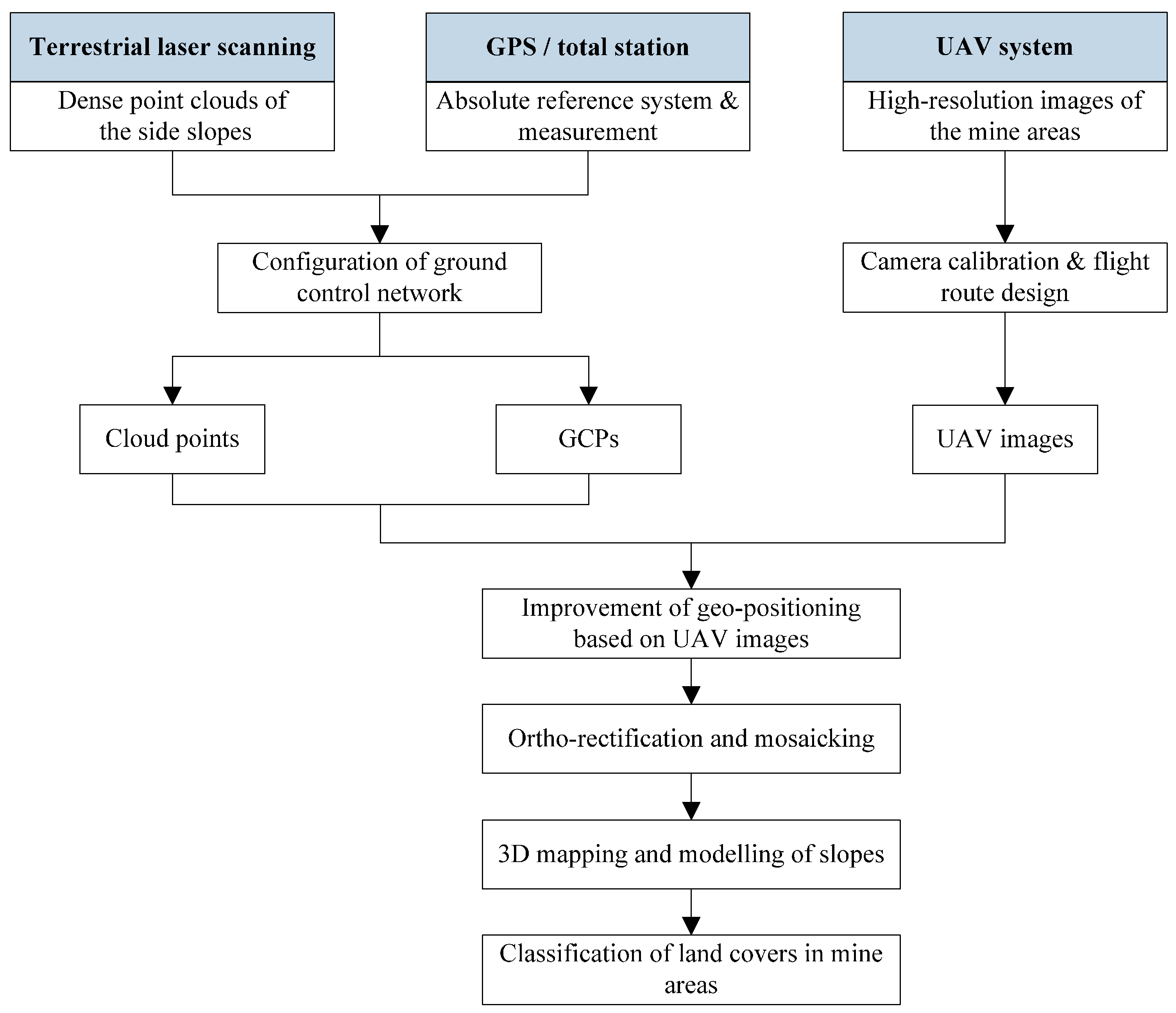

2. Methods

2.1. Materials and Data Acquisition

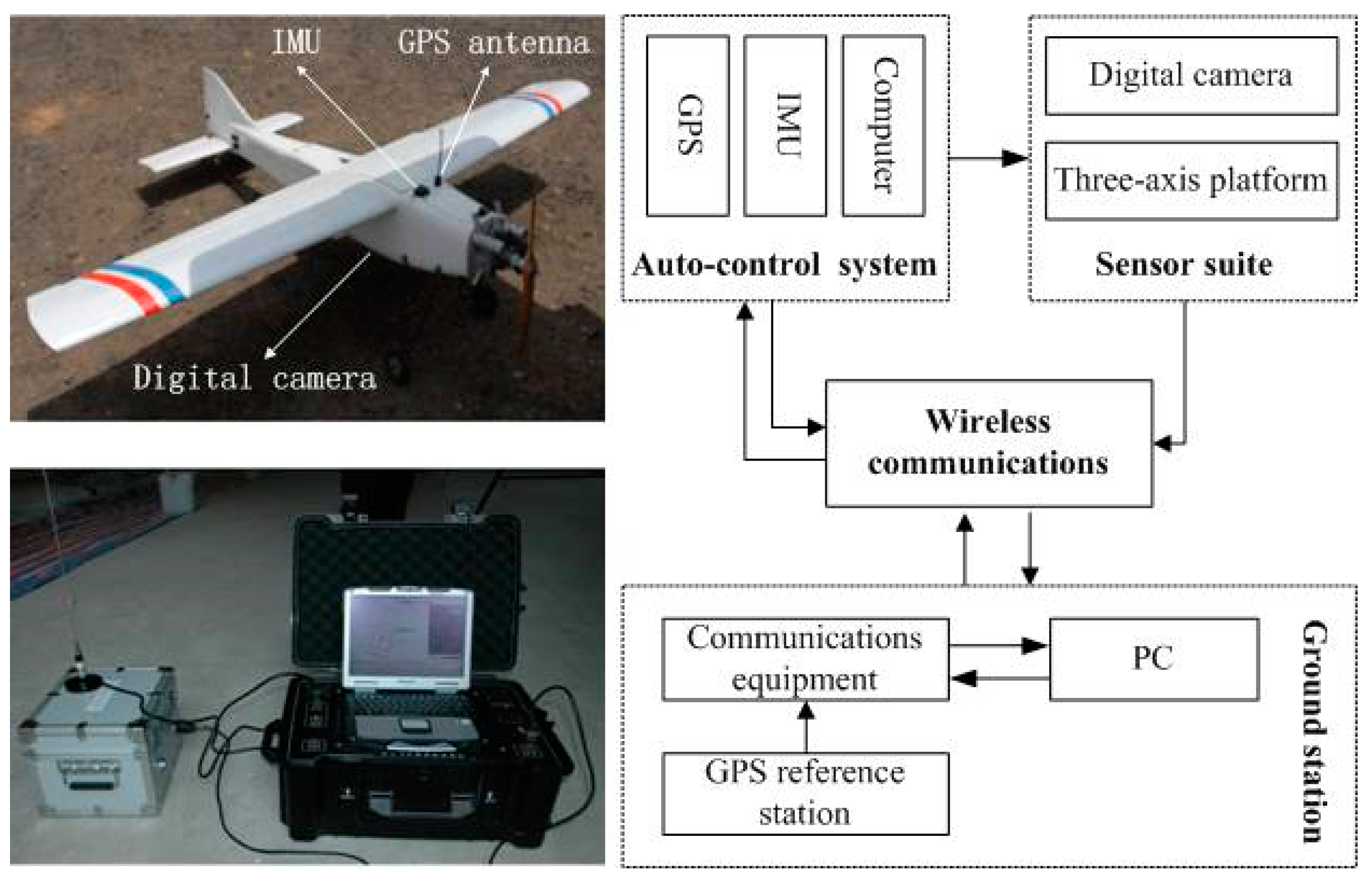

2.1.1. The UAV System, Sensors and the TLS System Used in the Experiment

| Item | Value |
|---|---|
| Length (m) | 1.8 |
| Wingspan (m) | 2.6 |
| Payload (kg) | 4 |
| Take-off weight (kg) | 14 |
| Endurance (h) | 1.8 |
| Flying height (m) | 300–6000 |
| Flying speed (km/h) | 80–120 |
| Capacity | Fuel |
| Flight mode | Manual, semi-autonomous and autonomous |
| Launch | Catapult, runway |
| Landing | Sliding, parachute |
| Sensor | Digital camera, video camera |

| Item | Value |
|---|---|
| Range | 2.5–2000 m |
| | 1400 m to 80% albedo (rock) |
| | 500 m to 10% albedo (coal) |
| Scan rate | 8800 points per second |
| Divergence | +0.25 mrad |
| Range accuracy | 10 mm to 200 m |
| | 20 mm to 1000 m |
| Angle accuracy | ±0.01° |
| Repeatability accuracy | 8 mm |

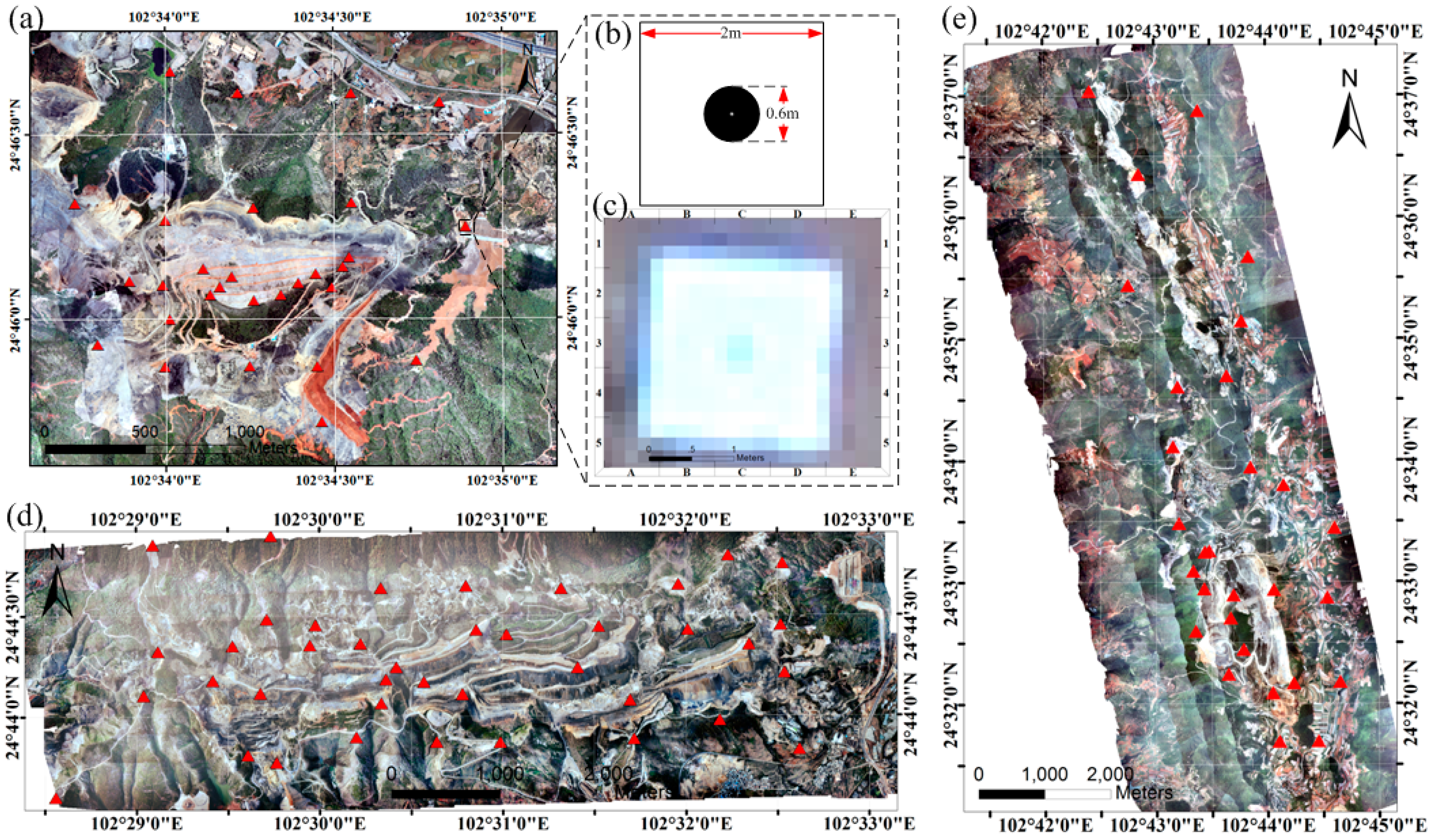

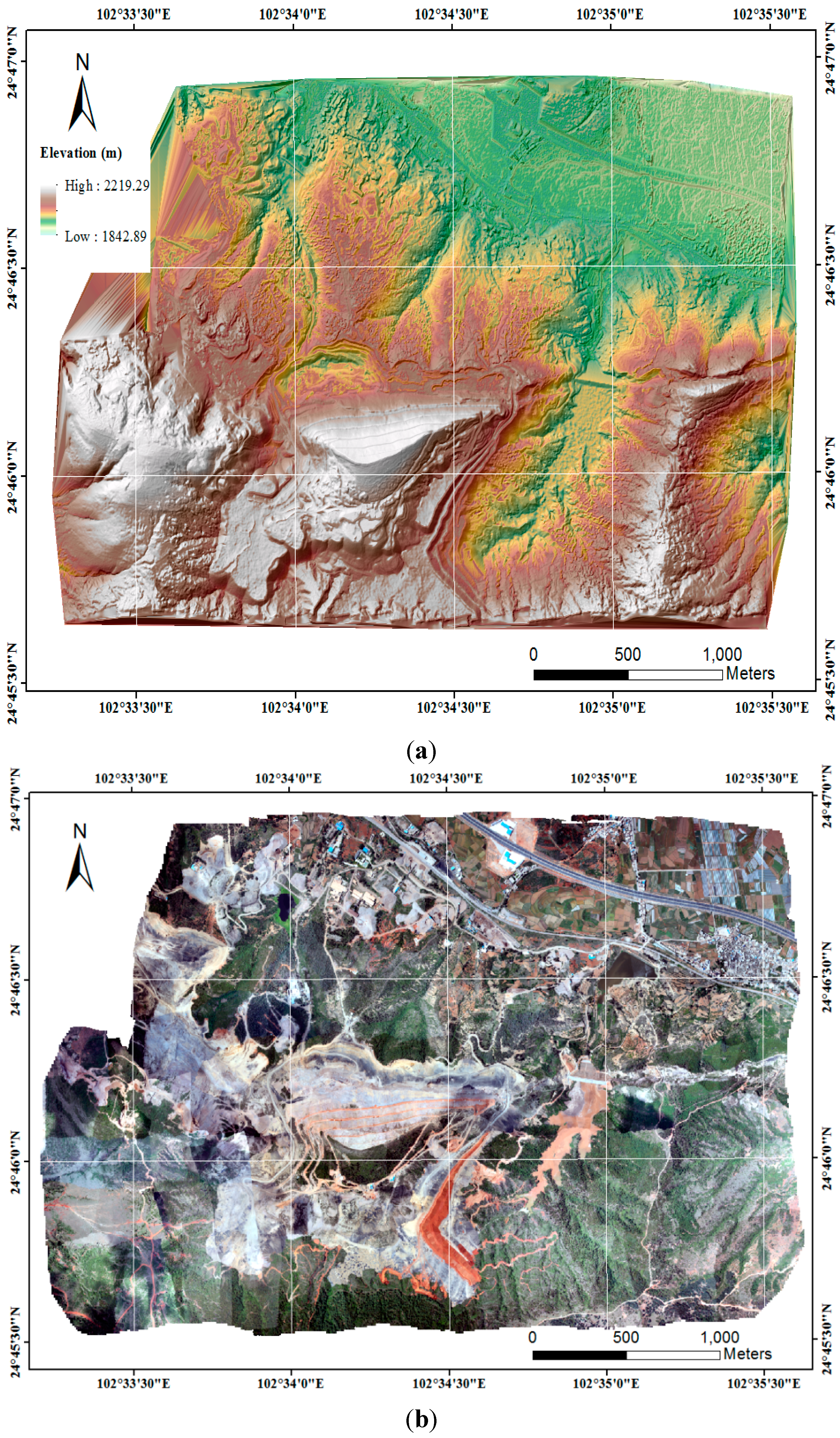

2.1.2. Study Area and Data Acquisition

| Area | Strips | Images | GCPs | Area (km²) | Consumed Time (min) | Average Flying Height (m) |
|---|---|---|---|---|---|---|
| Jianshan | 8 | 290 | 28 | 7.8 | 30 | 563.37 |
| Kunyang | 8 | 618 | 40 | 18 | 49 | 476.23 |
| Jinning | 8 | 780 | 29 | 43 | 64 | 563.37 |

| Item | Value | Deviation |
|---|---|---|
| Focal Length (mm) | 24.3704 | 0.0001 |
| Principal Point x0 (mm) | 0.2014 | 0.0001 |
| Principal Point y0 (mm) | 0.0638 | 0.0001 |
| Radial Distortion | k1: 7.80246e-09 | 2.7e-10 |
| | k2: −5.20000e-16 | −3.839e-17 |
| Decentering Distortion | p1: −1.10102e-07 | 8.6e-08 |
| | p2: 9.25639e-08 | 9.3e-09 |
| Affinity and Nonorthogonality | α: −5.24645e-05 | – |
| | β: −2.78373e-06 | – |

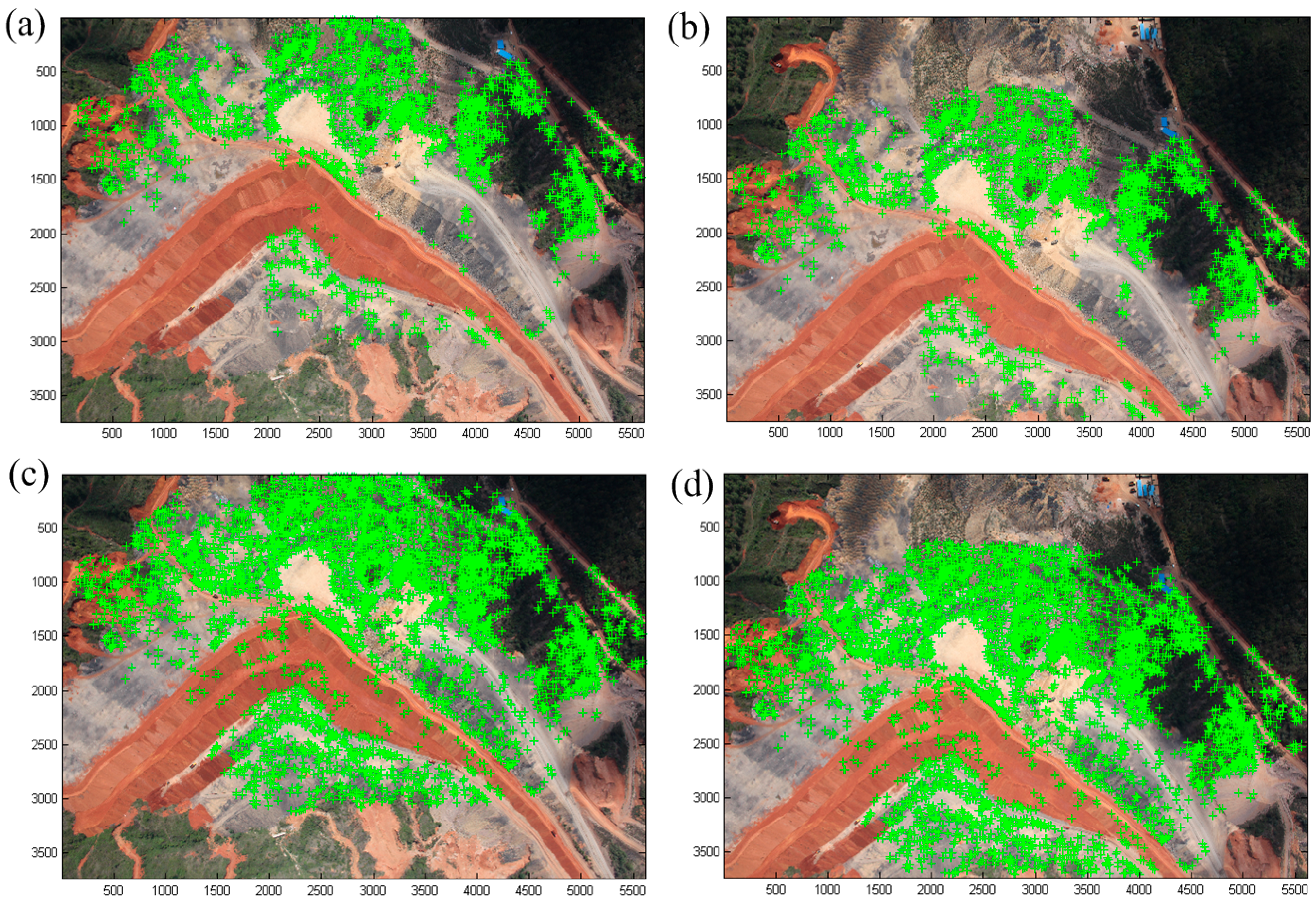
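
The calibration table above lists radial (k1, k2), decentering (p1, p2) and affinity/non-orthogonality (α, β) parameters. As an illustration only, the sketch below evaluates a commonly used Brown-type correction model with two affinity terms. The model equations and the coordinate units of the distortion coefficients are not stated in the text here, so both are assumptions, and `CameraCalibration` and `distortion_correction` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CameraCalibration:
    """Values copied from the calibration table (units as reported there)."""
    x0: float = 0.2014
    y0: float = 0.0638
    k1: float = 7.80246e-09
    k2: float = -5.20000e-16
    p1: float = -1.10102e-07
    p2: float = 9.25639e-08
    alpha: float = -5.24645e-05
    beta: float = -2.78373e-06


def distortion_correction(x, y, cal=CameraCalibration()):
    """Return (dx, dy) for an image point under the ASSUMED model.

    Assumed model (not confirmed by the source):
      dx = xb*(k1*r^2 + k2*r^4) + p1*(r^2 + 2*xb^2) + 2*p2*xb*yb + alpha*xb + beta*yb
      dy = yb*(k1*r^2 + k2*r^4) + p2*(r^2 + 2*yb^2) + 2*p1*xb*yb
    where xb = x - x0, yb = y - y0 and r^2 = xb^2 + yb^2.
    """
    xb = x - cal.x0
    yb = y - cal.y0
    r2 = xb * xb + yb * yb
    radial = cal.k1 * r2 + cal.k2 * r2 * r2
    dx = (xb * radial + cal.p1 * (r2 + 2 * xb * xb)
          + 2 * cal.p2 * xb * yb + cal.alpha * xb + cal.beta * yb)
    dy = yb * radial + cal.p2 * (r2 + 2 * yb * yb) + 2 * cal.p1 * xb * yb
    return dx, dy
```

Under this assumed form, the correction vanishes at the principal point and grows with radial distance, which is the usual behaviour of such models; the coordinates passed in must use the same units in which the coefficients were estimated.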

2.2. Image Matching and Extraction of Feature Point Cloud

2.3. Geo-Positioning Accuracy Improvement and 3D Textural Modelling

2.4. Classification of the Land Covers in the Mine Areas

3. Results and Discussion

3.1. Result of the Geo-Positioning and Accuracy Assessment

| Area | Scenario | Max. Error X (m) | Max. Error Y (m) | Max. Error Z (m) | Mean Error \|X\| (m) | Mean Error \|Y\| (m) | Mean Error \|Z\| (m) | RMSE X (m) | RMSE Y (m) | RMSE Z (m) |
|---|---|---|---|---|---|---|---|---|---|---|
| Jianshan | Scenario one | −4.4216 | 2.1685 | 11.6474 | 3.8868 | 1.7411 | 8.0782 | 3.9091 | 1.7597 | 8.5113 |
| Jianshan | Scenario two | −0.2586 | 0.1391 | 1.4753 | 0.1116 | 0.0842 | 0.4850 | 0.1407 | 0.0949 | 0.6380 |
| Jianshan | Scenario three | −0.2477 | −0.1488 | 1.6074 | 0.0974 | 0.0827 | 0.4183 | 0.1253 | 0.0933 | 0.6210 |
| Kunyang | Scenario one | −4.2221 | −7.0257 | −16.7015 | 1.4489 | 4.4386 | 6.6686 | 1.8331 | 4.6088 | 8.0069 |
| Kunyang | Scenario two | −0.2529 | 0.2839 | −1.4345 | 0.0990 | 0.1248 | 0.4875 | 0.1414 | 0.1447 | 0.7766 |
| Kunyang | Scenario three | −0.2250 | 0.2284 | −2.4785 | 0.1095 | 0.1262 | 0.5402 | 0.1378 | 0.1416 | 0.7080 |
| Jinning | Scenario one | 2.2202 | 2.3067 | −5.0525 | 0.4131 | 1.2843 | 1.4831 | 0.7088 | 1.4342 | 1.9025 |
| Jinning | Scenario two | −0.3473 | −0.8749 | 1.1805 | 0.1646 | 0.3344 | 0.6137 | 0.2153 | 0.4496 | 0.7813 |

| Test | Max. Error X (m) | Max. Error Y (m) | Max. Error Z (m) | Mean Error \|X\| (m) | Mean Error \|Y\| (m) | Mean Error \|Z\| (m) | RMSE X (m) | RMSE Y (m) | RMSE Z (m) |
|---|---|---|---|---|---|---|---|---|---|
| Test one | 0.563 | 0.741 | 1.760 | 0.4108 | 0.3647 | 1.4427 | 0.4234 | 0.4178 | 1.6589 |
| Test two | 0.227 | 0.376 | 1.507 | 0.1540 | 0.1953 | 1.3078 | 0.1634 | 0.2260 | 1.5662 |
| Test three | 0.163 | 0.372 | 1.472 | 0.1048 | 0.1448 | 1.0603 | 0.1108 | 0.1726 | 1.3209 |
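
The accuracy tables in this section report a maximum error, a mean absolute error and a root-mean-squared error per axis. A minimal sketch of how such statistics can be computed from check-point residuals is given below. The function name `error_statistics` and the sample residuals are hypothetical, and reporting the maximum error with its sign is an assumption based on the signed maxima in the tables.

```python
import math


def error_statistics(residuals):
    """Summarise signed check-point residuals (metres) for one axis.

    Returns (max_error, mean_abs_error, rmse). The maximum is the residual
    with the largest magnitude and keeps its sign (an assumption).
    """
    if not residuals:
        raise ValueError("at least one residual is required")
    n = len(residuals)
    max_error = max(residuals, key=abs)
    mean_abs_error = sum(abs(r) for r in residuals) / n
    rmse = math.sqrt(sum(r * r for r in residuals) / n)
    return max_error, mean_abs_error, rmse


# Hypothetical residuals for illustration, not data from the experiment.
example_dx = [0.10, -0.30, 0.20]
max_err, mean_abs, rmse = error_statistics(example_dx)
```

Because the RMSE squares each residual before averaging, it is never smaller than the mean absolute error, which is consistent with every row of the tables above.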

3.2. Results of the Digital Photogrammetric Products and the Accuracy Assessment

3.2.1. Results of the DSMs and DOMs for the Mine Areas

| Area | Max. Error X (m) | Max. Error Y (m) | Max. Error Z (m) | Mean Error \|X\| (m) | Mean Error \|Y\| (m) | Mean Error \|Z\| (m) | RMSE X (m) | RMSE Y (m) | RMSE Z (m) |
|---|---|---|---|---|---|---|---|---|---|
| Jianshan | 0.2923 | −0.2626 | 1.8293 | 0.1055 | 0.0773 | 0.5150 | 0.1334 | 0.1091 | 0.7245 |
| Kunyang | 0.4344 | −0.2529 | −1.6676 | 0.1152 | 0.1364 | 0.5510 | 0.1484 | 0.1545 | 0.7112 |
| Jinning | −0.8437 | −1.0807 | 1.4439 | 0.2382 | 0.4277 | 0.7142 | 0.3034 | 0.5104 | 0.8161 |

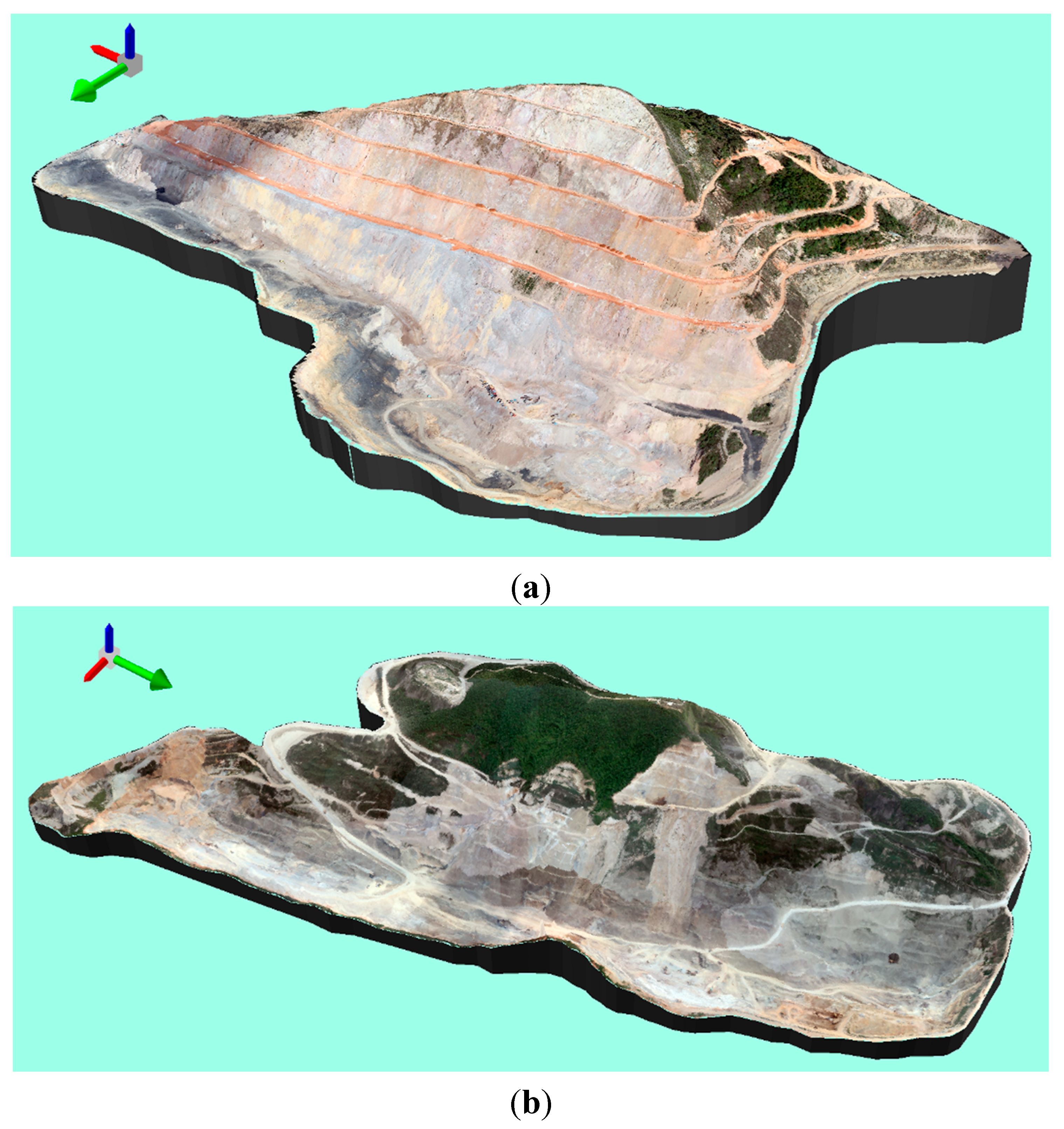

3.2.2. Results of the 3D Texture Models of the Side Slopes

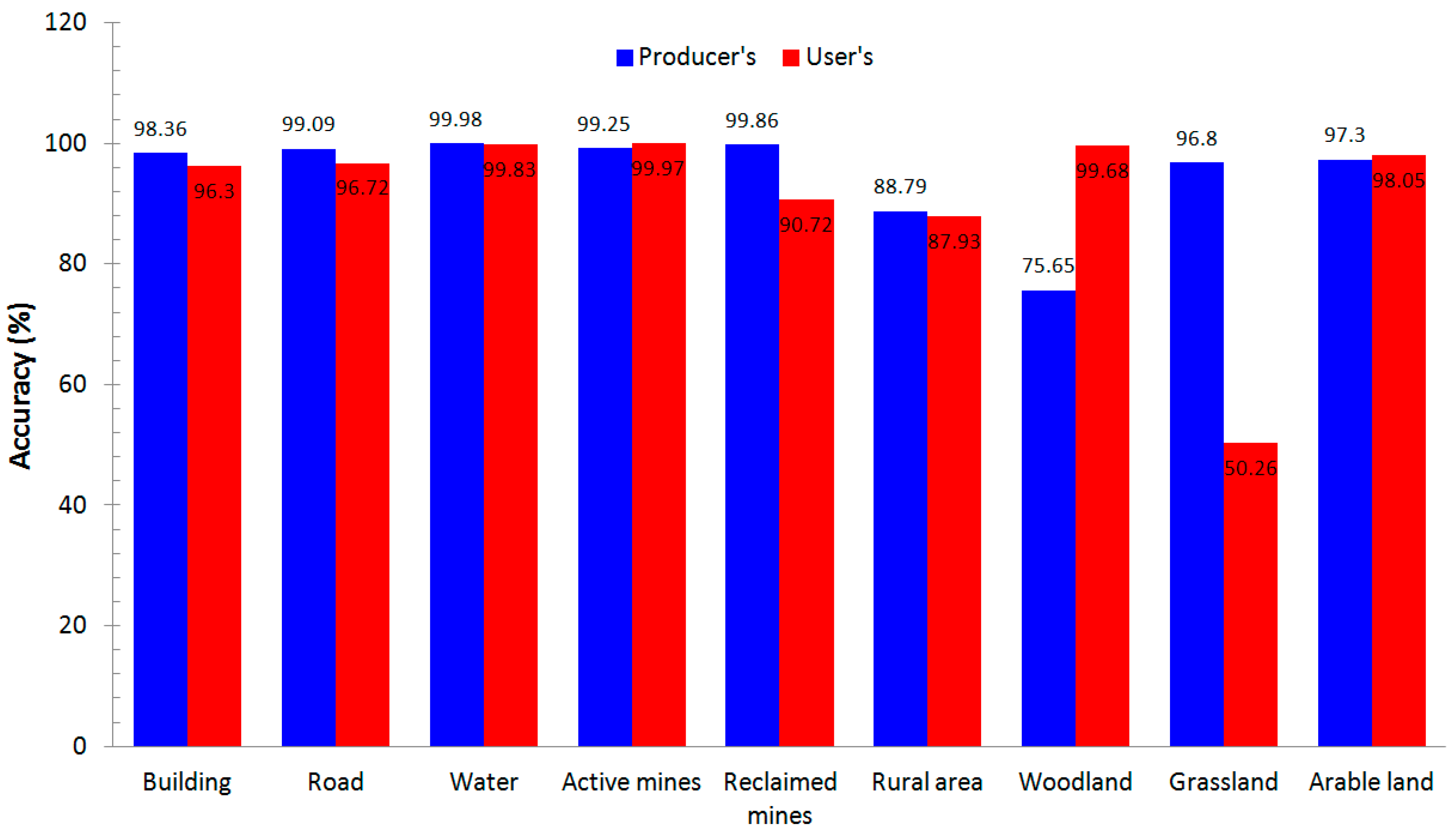

3.3. Results of the Image Classification and Accuracy Assessment

3.4. Discussion

- (1) Integrating 3D points extracted from the TLS point clouds with ground points surveyed by GPS as GCPs in the bundle adjustment improves the geo-positioning accuracy of UAV imagery in open-pit mine areas. In our experiment, with only the POS carried on the UAV, the geo-positioning accuracies of the UAV imagery were 4.29 m and 8.51 m in the planimetric and height directions, corresponding to 28.6 pixels and 56.7 pixels at the 0.15 m spatial resolution of the UAV imagery. With the POS, 19 GCPs from the GPS survey and 10 3D points extracted from the TLS point clouds, the accuracies improved to 0.16 m and 0.62 m in the planimetric and height directions, corresponding to 1.07 pixels and 4.13 pixels at the same 0.15 m resolution. Among existing studies on the geo-positioning accuracy of UAV imagery, Zhang et al. [16] used a total of 33 GCPs in urban areas and achieved an accuracy of 0.02 m in the planimetric direction and 0.03 m in the vertical direction, corresponding to 0.4 pixels and 0.6 pixels at the 0.05 m ground sampling distance (GSD) of the UAV imagery. Another result showed RMSEs of both planimetric position and height better than 0.2 m, corresponding to 1 pixel at the 0.2 m GSD. In rangeland areas, Laliberte et al. [10] obtained an accuracy of 1.5 to 2 pixels in both the planimetric and height directions. Laliberte et al. [65] reported geometric accuracy with an RMSE of 0.65 m (10.8 pixels) in a relatively flat area and 1.14 m (19 pixels) in an area with greater elevation differences, at a 6 cm GSD. In archaeological areas, Chiabrando et al. [7] achieved standard deviations of 0.04 m, −0.034 m and 0.038 m in the X-, Y- and Z-directions, respectively, corresponding to 1 pixel, 0.85 pixels and 0.95 pixels at the 0.04 m GSD. Comparing the geo-positioning accuracy of our proposed approach with these existing results leads to two observations. First, the planimetric accuracy obtained in mine areas is higher than that obtained in rangeland areas, while the height accuracy obtained in mine areas is lower. A possible reason is that, by introducing additional GCPs from the TLS point clouds, our approach uses more GCPs in the bundle adjustment of UAV imagery than were used in rangeland areas; however, because of the elevation changes in open-pit mine areas, the vertical accuracy is lower than in rangeland areas with flat topography. Second, both the planimetric and height accuracies obtained in mine areas are lower than those obtained in urban areas. A possible reason is that urban areas have more distinct ground features and flatter topography than open-pit mine areas, and that GCPs are easier to obtain there. Nevertheless, the geo-positioning accuracy achievable from UAV images with our proposed approach meets the demands of the subsequent applications in open-pit mine areas.

- (2) The contribution of 3D point clouds from the TLS to the improvement of the geo-positioning accuracy of UAV imagery is at a level comparable to that of GCPs surveyed by GPS. In our experiment in the side slope zone of the Jianshan area, with the aid of the POS and the GCPs surveyed by GPS, the geo-positioning accuracies based on UAV imagery were 0.11 m, 0.17 m and 1.32 m in the X-, Y- and Z-directions, respectively. With the aid of the POS and 3D point clouds from the TLS, the corresponding accuracies were 0.16 m, 0.23 m and 1.57 m in the X-, Y- and Z-directions, respectively. The result therefore shows that the geo-positioning accuracy based on 3D point clouds from the TLS is close to that based on GCPs from the GPS survey. It also shows the potential of using 3D point clouds from the TLS as GCPs in the bundle adjustment where a GPS survey is difficult to conduct, as in mountainous and remote mine areas. To the best of our knowledge, there is no similar report on the contribution of 3D point clouds obtained from TLS, as a supplement to GCPs, to the improvement of the geo-positioning accuracy of UAV imagery.

- (3) Using the improved geo-positioning accuracy of the UAV images, the generated DOMs and DSMs achieved accuracies of about 0.13 m, 0.11 m and 0.72 m in the X-, Y- and Z-directions, at an image resolution of approximately 0.15 m/pixel. In addition, the overall accuracy of the land cover classification in the mine areas was 90.67% using an object-based image analysis approach. Laliberte et al. [10] achieved an accuracy of 1.5 to 2 m for image mosaics with respect to the 15 cm spatial resolution of the orthophoto, and overall classification accuracies of 83% and 88% for the two image mosaics in the rangeland area. Using UAV imagery with a spatial resolution of 21.8 cm, Dunford et al. [70] obtained an overall accuracy of 63% for the classification of vegetation units in species mapping of a Mediterranean riparian forest. With respect to the land cover classification in the open-pit mine area, vegetation and exposed rock/soil can be classified with high accuracy using the rule-based classification method. However, the classification accuracy of grassland and woodland is significantly lower than that of the other land cover types because grassland is confused with woodland in the mine areas.

- (4) Our study demonstrates a practical framework for integrating UAV-based photogrammetry and TLS in open-pit mine areas. The framework includes UAV image and TLS point cloud acquisition, image and point cloud processing and integration, object-oriented classification, and three-dimensional (3D) mapping and monitoring of open-pit mine areas. UAV-based photogrammetry has been widely employed in many fields, and some work has been reported in mine areas [31,32,33]. Our experiments show that UAVs, although subject to flight-regulation approval, can be deployed quickly and repeatedly, fly at low altitude with less interference from clouds, and cost less than satellites and manned aircraft. The novelty of the proposed framework is the joint use of UAV-based photogrammetry and TLS: introducing additional input data from TLS as GCPs can provide more accurate and detailed UAV imagery for monitoring the mine areas. However, the proposed approach has several limitations. First, it is sometimes difficult to find characteristic ground features in open-pit mine areas, so man-made markers need to be established as GCPs. Second, because of limited battery duration, a UAV campaign needs more flight routes to cover a larger area; in addition, because of the limited payload capacity, only light sensors, and only a limited number of them, can be carried by UAVs. Third, because of the large volume of UAV imagery and TLS point clouds, high-performance parallel processing needs to be further developed.
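
The planimetric figures and pixel equivalents quoted in item (1) can be reproduced from the per-axis RMSE values in the geo-positioning table. The sketch below assumes that the planimetric RMSE is the root sum of squares of the X and Y RMSEs and that pixel equivalents are obtained by dividing by the 0.15 m resolution; the function names are hypothetical.

```python
import math

GSD_M = 0.15  # spatial resolution of the UAV imagery stated in the text (m/pixel)


def planimetric_rmse(rmse_x, rmse_y):
    """Combine X and Y RMSE (metres) into a single planimetric RMSE."""
    return math.hypot(rmse_x, rmse_y)


def to_pixels(error_m, gsd_m=GSD_M):
    """Express a ground error in metres as a number of image pixels."""
    return error_m / gsd_m


# Jianshan, scenario one (POS only): RMSE X, Y, Z from the table.
plan_pos_only = planimetric_rmse(3.9091, 1.7597)
height_pos_only = 8.5113

# Jianshan, scenario three (POS + GPS GCPs + TLS points).
plan_full = planimetric_rmse(0.1253, 0.0933)

print(round(plan_pos_only, 2), round(to_pixels(plan_pos_only), 1))
print(round(height_pos_only, 2), round(to_pixels(height_pos_only), 1))
print(round(plan_full, 2))
```

Note that the pixel equivalents quoted in the text appear to be derived from the metre values after rounding to two decimals (for example 0.16 m / 0.15 m ≈ 1.07 pixels), so converting the unrounded table values gives slightly different pixel figures.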

4. Conclusions

- (1) When the bundle adjustment was performed only with the support of the position and orientation system (POS) in the Jianshan area, the geo-positioning accuracies achieved from the UAV imagery were 3.91 m, 1.76 m and 8.51 m in the X-, Y- and Z-directions, respectively. When the bundle adjustment was performed with both the POS and the GCPs surveyed by GPS, the accuracies were 0.14 m, 0.09 m and 0.64 m in the X-, Y- and Z-directions, respectively. When the bundle adjustment was performed with the POS, the GCPs and the 3D point clouds from the TLS, the accuracies were 0.13 m, 0.09 m and 0.62 m in the X-, Y- and Z-directions, respectively.

- (2) In the test of the contribution of the 3D point clouds from the TLS to the accuracy improvement of the bundle block adjustment in the side slope zone of the Jianshan area, the geo-positioning accuracies achieved from the UAV imagery were 0.16 m, 0.23 m and 1.57 m in the X-, Y- and Z-directions, respectively, with the aid of the POS and the 3D point clouds from the TLS. With the aid of the POS and the GCPs surveyed by GPS, the accuracies were 0.11 m, 0.17 m and 1.32 m in the X-, Y- and Z-directions, respectively.

- (3) With the improved geo-positioning of the UAV images from the bundle adjustment, decimeter-level accuracy was achieved for the generated digital surface models (DSMs) and digital orthophoto maps (DOMs) in the study areas, and the overall accuracy of the land cover classification was 90.67%, based on an object-based image analysis approach in the mine areas.
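
To make the comparison in conclusion (2) concrete, the sketch below computes the per-axis RMSE gap between the POS + TLS configuration and the POS + GPS configuration, in metres and in pixels at the 0.15 m resolution. The RMSE values are copied from the side-slope test table; pairing the rows "Test two" and "Test three" with these two configurations is an inference from the matching values quoted in the text, and the helper name `rmse_gap` is hypothetical.

```python
GSD_M = 0.15  # spatial resolution of the UAV imagery stated in the text (m/pixel)

# RMSE values in metres; the row-to-configuration pairing is an assumption.
RMSE_POS_TLS = {"X": 0.1634, "Y": 0.2260, "Z": 1.5662}  # row "Test two"
RMSE_POS_GPS = {"X": 0.1108, "Y": 0.1726, "Z": 1.3209}  # row "Test three"


def rmse_gap(a, b):
    """Per-axis difference a - b in metres, rounded to 4 decimals."""
    return {axis: round(a[axis] - b[axis], 4) for axis in a}


gap_m = rmse_gap(RMSE_POS_TLS, RMSE_POS_GPS)
gap_px = {axis: round(value / GSD_M, 2) for axis, value in gap_m.items()}

for axis in ("X", "Y", "Z"):
    print(f"{axis}: {gap_m[axis]:.4f} m ({gap_px[axis]:.2f} px)")
```

Under these assumptions the planimetric gaps stay well below one pixel, while the height gap is larger; this is one way to read the statement that the two sources of control give comparable results.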

Acknowledgments

Author Contributions

Conflicts of Interest

References

- American Institute of Aeronautics and Astronautics (AIAA). Committee of Standards “Terminology for Unmanned Aerial Vehicles and Remotely Operated Aircraft”; AIAA: Reston, VA, USA, 2004.

- Zhou, G.Q. Geo-referencing of video flow from small low-cost civilian UAV. IEEE Trans. Autom. Sci. Eng. 2010, 7, 156–166.

- Turner, D.; Lucieer, A.; Watson, C. An automated technique for generating georectified mosaics from ultra-high resolution unmanned aerial vehicle (UAV) imagery, based on Structure from Motion (SfM) point clouds. Remote Sens. 2012, 4, 1392–1410.

- Blyenburgh, P.V. UAVs: An overview. Air Space Eur. 1999, 1, 43–47.

- Rango, A.; Laliberte, A.; Steele, C.; Herrick, J.E.; Bestelmeyer, B.; Schmugge, T.; Roanhorse, A.; Jenkins, V. Using unmanned aerial vehicles for rangelands: Current applications and future potentials. Environ. Pract. 2006, 8, 159–168.

- Vericat, D.; Brasington, J.; Wheaton, J.; Cowie, M. Accuracy assessment of aerial photographs acquired using lighter-than-air blimps: Low-cost tools for mapping river corridors. River. Res. Applic. 2009, 25, 985–1000.

- Chiabrando, F.; Nex, F.; Piatti, D.; Rinaudo, F. UAV and RPV systems for photogrammetric surveys in archaelogical areas: Two tests in the Piedmont region (Italy). J. Archaeol. Sci. 2011, 38, 697–710.

- Watts, A.C.; Ambrosia, V.G.; Hinkley, E.A. Unmanned aircraft systems in remote sensing and scientific research: Classification and considerations of use. Remote Sens. 2012, 4, 1671–1692.

- Nagai, M.; Chen, T.; Shibasaki, R.; Kumugai, H.; Ahmed, A. UAV-borne 3-D mapping system by multisensory integration. IEEE Trans. Geosci. Remote Sens. 2009, 47, 701–708.

- Laliberte, A.S.; Herrick, J.E.; Rango, A.; Winters, C. Acquisition, orthorectification, and object-based classification of unmanned aerial vehicle (UAV) imagery for rangeland monitoring. Photogramm. Eng. Remote Sens. 2010, 76, 661–672.

- Rango, A.; Laliberte, A.S. Impact of flight regulations on effective use of unmanned aircraft systems for natural resources applications. J. Appl. Remote Sens. 2010, 4, 043539.

- Peterson, D.L.; Brass, J.A.; Smith, W.H.; Langford, G.; Wegener, S.; Dunagan, S.; Hammer, P.; Snook, K. Platform options of free-flying satellites, UAVs or the International Space Station for remote sensing assessment of the littoral zone. Int. J. Remote Sens. 2003, 24, 2785–2804.

- Wang, J.Z.; Li, C.M. Acquisition of UAV images and the application in 3D city modeling. Proc. SPIE 2008.

- Eisenbeiss, H. UAV Photogrammetry. Ph.D. Thesis, University of Technology Dresden, Dresden, Germany, 2009.

- Laliberte, A.S.; Rango, A. Texture and scale in object-based analysis of subdecimeter resolution unmanned aerial vehicle (UAV) imagery. IEEE Trans. Geosci. Remote Sens. 2009, 47, 761–770.

- Zhang, Y.J.; Xiong, J.X.; Hao, L.J. Photogrammetric processing of low-altitude images acquired by unpiloted aerial vehicles. Photogramm. Rec. 2011, 26, 190–211.

- Przybilla, H.-J.; Wester-Ebbinghaus, W. Bildflug Mit Ferngelenktem Kleinflugzeug. In Bildmessung und Luftbildwesen; Zeitschrift fuer Photogrammetrie und Fernerkundung, Herbert Wichman Verlag: Karlsruhe, Germany, 1979; Volume 47, pp. 137–142.

- Herwitz, S.R.; Johnson, L.F.; Dunagan, S.E.; Higgins, R.G.; Sullivan, D.V.; Zheng, J.; Lobitz, B.M.; Leung, J.G.; Gallmeyer, B.A.; Aoyagi, M.; et al. Imaging from an unmanned aerial vehicle: Agricultural surveillance and decision support. Comput. Electron. Agric. 2004, 44, 49–61.

- Xiang, H.; Tian, L. Development of a low-cost agricultural remote sensing system based on an autonomous unmanned aerial vehicle (UAV). Biosyst. Eng. 2011, 108, 174–190.

- Wallace, L.; Lucieer, A.; Watson, C.; Turner, D. Development of a UAV-LiDAR system with application to forest inventory. Remote Sens. 2012, 4, 1519–1543.

- Watai, T.; Machida, T.; Ishizaki, N.; Inoue, G. A lightweight observation system for atmospheric carbon dioxide concentration using a small unmanned aerial vehicle. J. Atmos. Ocean. Technol. 2006, 23, 700–710.

- McGonigle, A.J.S.; Aiuppa, A.; Giudice, G.; Tamburello, G.; Hodson, A.J.; Gurrieri, S. Unmanned aerial vehicle measurements of volcanic carbon dioxide fluxes. Geophys. Res. Lett. 2008, 35, L06303.

- Hardin, P.J.; Jensen, R.R. Small-scale unmanned aerial vehicles in environmental remote sensing: Challenges and opportunities. GISci. Remote Sens. 2011, 48, 99–111.

- Khan, A.; Schaefer, D.; Tao, L.; Miller, D.J.; Sun, K.; Zondlo, M.A.; Harrison, W.A.; Roscoe, B.; Lary, D.J. Low power greenhouse gas sensors for unmanned aerial vehicles. Remote Sens. 2012, 4, 1355–1368.

- Zhang, Z.X.; Zhang, Y.J.; Ke, T.; Guo, D.H. Photogrammetry for first response in Wenchuan earthquake. Photogramm. Eng. Remote Sens. 2009, 75, 510–513.

- Zhou, G.Q. Near real-time orthorectification and mosaic of small UAV video flow for time-critical event response. IEEE Trans. Geosci. Remote Sens. 2009, 47, 739–747.

- Niethammer, U.; James, M.R.; Rothmund, S.; Travelletti, J.; Joswig, M. UAV-based remote sensing of the Super-Sauze landslide: Evaluation and results. Eng. Geol. 2012, 128, 2–11.

- Marzolff, I.; Poesen, J. The potential of 3D gully monitoring with GIS using high-resolution aerial photography and a digital photogrammetry system. Geomorphology 2009, 111, 48–60.

- Verhoeven, G.J.J.; Loenders, J.; Vermeulen, F.; Docter, R. Helikite aerial photography – A versatile means of unmanned, radio controlled, low-altitude aerial archaeology. Archaeol. Prospect. 2009, 16, 125–138.

- Eisenbeiss, H.; Sauerbier, M. Investigation of UAV systems and flight modes for photogrammetric applications. Photogramm. Rec. 2011, 26, 400–421.

- Salvini, R.; Riccucci, S.; Gullì, D.; Giovannini, R.; Vanneschi, C.; Francioni, M. Geological Application of UAV photogrammetry and terrestrial laser scanning in Marble Quarrying (Apuan Alps, Italy). In Engineering Geology for Society and Territory – Urban Geology, Sustainable Planning and Landscape Exploitation; Springer International Publishing: Cham, Switzerland, 2015; Volume 5, pp. 979–983.

- Shan, B.; Luo, X.; Liang, L. Application of UAV in open-pit mine disaster monitoring. Opencast Min. Technol. 2013, 6, 69–71.

- González-Aguilera, D.; Fernández-Hernández, J.; Mancera-Taboada, J.; Rodríguez-Gonzálvez, P.; Hernández-López, D.; Felipe-García, B.; Arias-Perez, B. 3D modelling and accuracy assessment of granite quarry using unmannend aerial vehicle. In Proceedings of the ISPRS Annals of Photogrammetry, Remote Sensing and Spatial Information Sciences, Melbourne, VIC, Australia, 25 August–1 September 2012; Volume I-3, pp. 37–42.

- McLeod, T.; Samson, C.; Labrie, M.; Shehata, K.; Mah, J.; Lai, P.; Wang, L.; Elder, J. Using video data acquired from an unmanned aerial vehicle to measure fracture orientation in an open-pit mine. Geomatica 2013, 67, 163–171.

- Harwin, S.; Lucieer, A. Assessing the accuracy of georeferenced point clouds produced via multi-view stereopsis from unmanned aerial vehicle (UAV) imagery. Remote Sens. 2012, 4, 1573–1599.

- Rosnell, T.; Honkavaara, E. Point cloud generation from aerial image data acquired by a quadrocopter type micro unmanned aerial vehicle and a digital still camera. Sensors 2012, 12, 453–480.

- Wang, J.P.; Gao, J.X.; Liu, C.; Wang, J. High precision slope deformation monitoring model based on the GPS/Pseudolites technology in open-pit mine. Min. Sci. Technol. (China) 2010, 20, 126–132.

- Lowe, D.G. Object recognition from local scale-invariant features. In Proceedings of the Seventh IEEE International Conference on Computer Vision, Kerkyra, Greece, 20–27 September 1999; Volume 2, pp. 1150–1157.

- Fischler, M.A.; Bolles, R.C. Random sample consensus: A paradigm for model fitting with applications to image analysis and automated cartography. Commun. ACM 1981, 24, 381–395.

- Kraus, K. Photogrammetry: Geometry from Images and Laser Scans; Walter de Gruyter: Goettingen, Germany, 2007.

- Fraser, C.S. Digital camera self-calibration. ISPRS J. Photogramm. Remote Sens. 1997, 52, 149–159.

- Skaloud, J. Optimizing Georeferencing of Airborne Survey Systems by INS/DGPS. Ph.D. Thesis, The University of Calgary, Calgary, Alberta, 1999.

- Kocaman, S. GPS and INS Integration with Kalman Filtering for Direct Georeferencing of Airborne Imagery. Ph.D. Thesis, Institute of Geodesy and Photogrammetry, ETH Hönggerberg, Zurich, Switzerland, 2003.

- Tong, X.H.; Hong, Z.H.; Liu, S.J.; Zhang, X.; Xie, H.; Li, Z.Y.; Yang, S.L.; Wang, W.; Bao, F. Building-damage detection using pre-and post-seismic high-resolution satellite stereo imagery: A case study of the May 2008 Wenchuan earthquake. ISPRS J. Photogramm. Remote Sens. 2012, 68, 13–27.

- Xiang, H.; Tian, L. Method for automatic georeferencing aerial remote sensing (RS) images from an unmanned aerial vehicle (UAV) platform. Biosyst. Eng. 2011, 108, 104–113.

- Lichti, D.; Gordon, S. Error propagation in directly georeferenced terrestrial laser scanner point clouds for cultural heritage recording. In Proceedings of the FIG Working Week, Athens, Greece, 22–27 May 2004.

- Reshetyuk, Y. Self-Calibration and Direct Georeferencing in Terrestrial Laser Scanners. Ph.D. Thesis, Royal Institute of Technology, Stockholm, Sweden, 2009.

- Harvey, B.R. Registration and transformation of multiple site terrestrial laser scanning. Geomat. Res. Aust. 2004, 80, 33–50.

- Wang, Y.; Wang, G. Integrated registration of range images from terrestrial LiDAR. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2008, XXXVII(Part B3b), 361–365.

- Hodge, R.A. Using simulated terrestrial laser scanning to analyse errors in high-resolution scan data of irregular surfaces. ISPRS J. Photogramm. Remote Sens. 2010, 65, 227–240.

- Zhang, Y.; Gong, L.; Yan, L. Research on error propagation of point cloud registration. In Proceedings of the 2012 IEEE International Conference on Computer Science and Automation Engineering (CSAE), Zhangjiajie, China, 25–27 May 2012; Volume 2, pp. 18–21.

- Lingua, A.; Marenchino, D.; Nex, F. Performance analysis of the SIFT operator for automatic feature extraction and matching in photogrammetric applications. Sensors 2009, 9, 3745–3766.

- Hartley, R.; Zisserman, A. Multiple View Geometry in Computer Vision, 2nd ed.; Cambridge University Press: New York, NY, USA, 2004.

- Pesci, A.; Fabris, M.; Conforti, D.; Loddo, F.; Baldi, P.; Anzidei, M. Integration of ground-based laser scanner and aerial digital photogrammetry for topographic modelling of Vesuvio volcano. J. Volcanol. Geotherm. Res. 2007, 162, 123–138.

- Baltsavias, E.P. A comparison between photogrammetry and laser scanning. ISPRS J. Photogramm. Remote Sens. 1999, 54, 83–94.

- Wang, J.; Di, K.; Li, R. Evaluation and improvement of geopositioning accuracy of IKONOS stereo imagery. J. Surv. Eng. 2005, 131, 35–42.

- Tong, X.H.; Liang, D.; Xu, G.S.; Zhang, S.L. Positional accuracy improvement: A comparative study in Shanghai, China. Int. J. Geogr. Inf. Sci. 2011, 25, 1147–1171.

- Tong, X.H.; Liu, S.J.; Weng, Q.H. Bias-corrected rational polynomial coefficients for high accuracy geo-positioning of QuickBird stereo imagery. ISPRS J. Photogramm. Remote Sens. 2010, 65, 218–226.

- Liu, S.J.; Fraser, C.S.; Zhang, C.S.; Ravanbakhsh, M.; Tong, X.H. Georeferencing performance of THEOS satellite imagery. Photogramm. Rec. 2011, 26, 250–262.

- Henry, J.B.; Malet, J.-P.; Maquaire, O.; Grussenmeyer, P. The use of small-format and low-altitude aerial photos for the realization of high-resolution DEMs in mountainous areas: Application to the Super-Sauze earthflow (Alpes-de-Haute-Provence, France). Earth Surf. Process. Landf. 2002, 27, 1339–1350.

- Guarnieri, A.; Remondino, F.; Vettore, A. Digital photogrammetry and TLS data fusion applied to cultural heritage 3D modelling. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2006, XXXVI(Part 5).

- Burnett, C.; Blaschke, T. A multi-scale segmentation/object relationship modeling methodology for landscape analysis. Ecol. Model. 2003, 168, 233–249.

- Yu, Q.; Gong, P.; Clinton, N.; Biging, G.; Kelly, M.; Schirokauer, D. Object-based detailed vegetation classification with airborne high spatial resolution remote sensing imagery. Photogramm. Eng. Remote Sens. 2006, 72, 799–811.

- Laliberte, A.S.; Rango, A. Image processing and classification procedures for analysis of sub-decimeter imagery acquired with an unmanned aircraft over arid rangelands. GISci. Remote Sens. 2011, 48, 4–23.

- Laliberte, A.S.; Winters, C.; Rango, A. UAS remote sensing missions for rangeland applications. Geocarto Int. 2011, 26, 141–156.

- Definiens. eCognition Developer 8.0 User Guide; Definiens AG.: Munich, Germany, 2009.

- Stumpf, A.; Kerle, N. Object-oriented mapping of landslides using random forests. Remote Sens. Environ. 2011, 115, 2564–2577.

- Congalton, R.G.; Green, K. Assessing the Accuracy of Remotely Sensed Data: Principles and Practices; CRC Press: Boca Raton, FL, USA, 2009.

- Tong, X.; Li, X.; Xu, X.; Xie, H.; Feng, T.; Sun, T.; Jin, Y.; Liu, X. A two-phase classification of urban vegetation using airborne LiDAR data and aerial photography. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2014.

- Dunford, R.; Michel, K.; Gagnage, M.; Piégay, H.; Trémelo, M.L. Potential and constraints of unmanned aerial vehicle technology for the characterization of Mediterranean riparian forest. Int. J. Remote Sens. 2009, 30, 4915–4935.

© 2015 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Tong, X.; Liu, X.; Chen, P.; Liu, S.; Luan, K.; Li, L.; Liu, S.; Liu, X.; Xie, H.; Jin, Y.; et al. Integration of UAV-Based Photogrammetry and Terrestrial Laser Scanning for the Three-Dimensional Mapping and Monitoring of Open-Pit Mine Areas. Remote Sens. 2015, 7, 6635-6662. https://doi.org/10.3390/rs70606635
