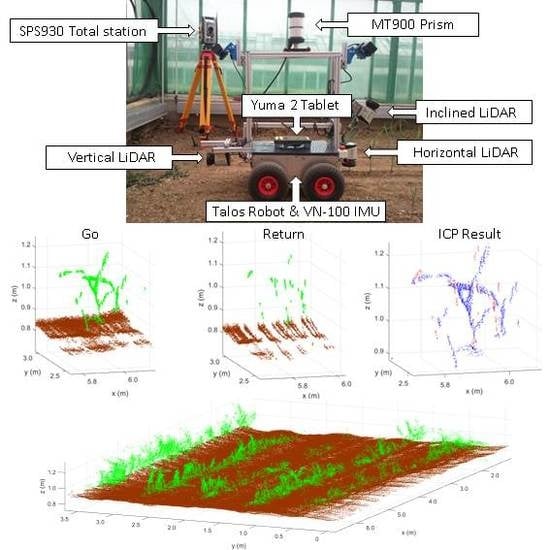

2.1. Hardware, Sensors and Configuration

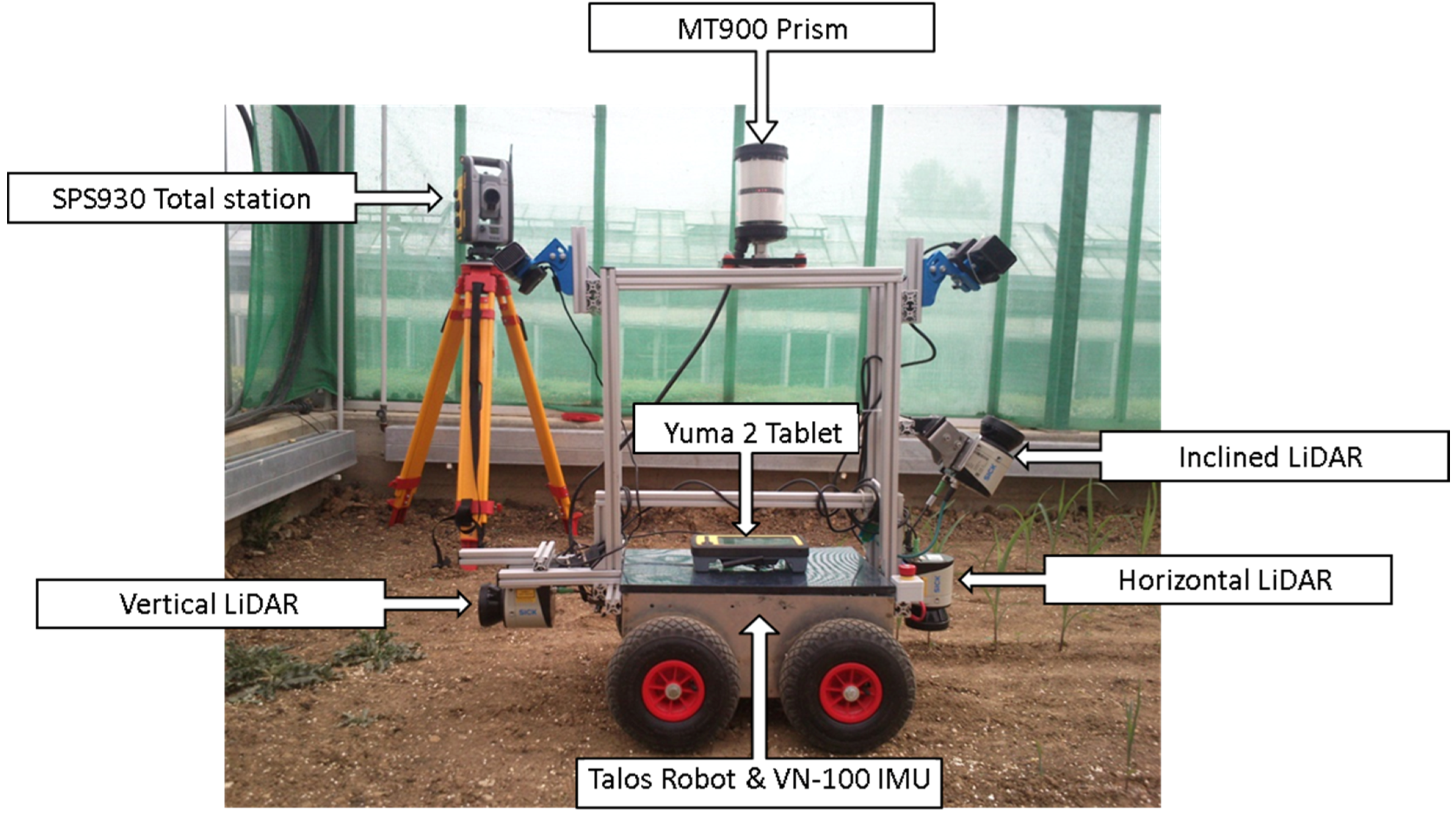

A small four-wheeled autonomous robot called “Talos”, developed at the University of Hohenheim, was used to carry the sensors between the maize crop rows. Several sensors were installed on this robot (see Figure 1): three LiDARs, an inertial measurement unit (IMU), and a prism that was tracked by a total station for georeferencing [26].

Figure 1. Equipment mounted on the “Talos” robot platform and the total station used.


The LiDAR sensor model chosen was the LMS 111 (SICK AG, Waldkirch, Germany). Its main characteristics are summarized in Table 1.

Table 1. LMS 111 technical data [27].


| Functional Data | General Data |
|---|---|
| Operating range: 0.5 m to 20 m | Laser class: 1 (IEC 60825-1) |
| Field of view/scanning angle: 270° | Enclosure rating: IP 67 |
| Scanning frequency: 25 Hz | Temperature range: −30 °C to +50 °C |
| Angular resolution: 0.5° | Light source: infrared (905 nm) |
| Systematic error: ±30 mm | Total weight: 1.1 kg |
| Statistical error: 12 mm | Light spot size at optics cover/at 18 m: 8 mm/300 mm |

Each LiDAR was installed with a different orientation and at a different location: horizontal, mounted at the front of the robot at a height of 0.2 m above ground level; push-broom, i.e., inclined at an angle of 30°, mounted at the front of the robot at a height of 0.58 m; and vertical, mounted at the back of the robot at a height of 0.2 m (see Figure 1).
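To make the three mounting geometries concrete, the following minimal sketch converts one 2D scan (range, beam angle) into 3D points in a robot frame. It assumes a right-handed robot frame (x forward, y left, z up, origin on the ground below the robot), treats the scan plane as the sensor's own x-y plane, and sets all horizontal mounting offsets to zero. The heights and the 30° inclination come from the text; the rotation conventions, the `MOUNTS` table and the function names are hypothetical.

```python
import numpy as np

def rot_y(angle_rad):
    """Rotation about the robot's y axis; a positive angle tilts the sensor x axis downward."""
    c, s = np.cos(angle_rad), np.sin(angle_rad)
    return np.array([[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]])

# Hypothetical mounting poses: (rotation sensor -> robot, sensor origin in robot frame).
# Heights and the 30 deg inclination follow the text; x/y offsets are set to 0 for simplicity.
MOUNTS = {
    "horizontal": (np.eye(3), np.array([0.0, 0.0, 0.20])),
    "inclined": (rot_y(np.deg2rad(30.0)), np.array([0.0, 0.0, 0.58])),
    # Sensor x axis turned straight down: the scan plane becomes the robot's y-z plane,
    # i.e., perpendicular to the direction of travel.
    "vertical": (rot_y(np.deg2rad(90.0)), np.array([0.0, 0.0, 0.20])),
}

# LMS 111: 270 deg field of view at 0.5 deg resolution -> 541 beams per scan
BEAM_ANGLES = np.deg2rad(np.arange(-135.0, 135.0 + 0.5, 0.5))

def scan_to_robot(ranges, angles, rotation, origin):
    """Convert one 2D scan (range, beam angle) into 3D points in the robot frame."""
    ranges = np.asarray(ranges, dtype=float)
    angles = np.asarray(angles, dtype=float)
    # The scan plane is the sensor's own x-y plane (z = 0 in the sensor frame)
    p_sensor = np.column_stack([ranges * np.cos(angles),
                                ranges * np.sin(angles),
                                np.zeros_like(ranges)])
    return p_sensor @ rotation.T + origin
```

Under these assumptions, the central beam of the inclined sensor reaches flat ground at a range of 0.58 / sin 30° = 1.16 m, whereas the central beam of the horizontal sensor never does.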

Two digital filters were activated in all LiDAR sensors to optimize the measured distance values: a fog filter, which makes the sensor less sensitive in the near range (up to approx. 4 m); and an N-pulse-to-1-pulse filter, which filters out the first reflected pulse when two pulses are reflected by two objects during a measurement [27].

To acquire the three-dimensional orientation of the robot during the field experiments, a VN-100 IMU (VectorNav, Dallas, TX, USA) was placed at the center of the robot. The sensor's accuracy was 2.0° RMS for heading in both static and dynamic mode, and 0.5° RMS and 1.0° RMS for pitch and roll in static and dynamic mode, respectively.
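As an illustration of how such orientation readings could be used, the sketch below builds a rotation matrix from yaw, pitch and roll. It assumes the Z-Y-X (yaw, then pitch, then roll) Euler sequence commonly used for attitude sensors; the section does not state which convention was applied, and the function name is hypothetical.

```python
import numpy as np

def rotation_from_ypr(yaw_deg, pitch_deg, roll_deg):
    """Body-to-level rotation matrix for a Z-Y-X (yaw, pitch, roll) Euler sequence."""
    y, p, r = np.deg2rad([yaw_deg, pitch_deg, roll_deg])
    Rz = np.array([[np.cos(y), -np.sin(y), 0.0],
                   [np.sin(y),  np.cos(y), 0.0],
                   [0.0, 0.0, 1.0]])
    Ry = np.array([[ np.cos(p), 0.0, np.sin(p)],
                   [0.0, 1.0, 0.0],
                   [-np.sin(p), 0.0, np.cos(p)]])
    Rx = np.array([[1.0, 0.0, 0.0],
                   [0.0, np.cos(r), -np.sin(r)],
                   [0.0, np.sin(r),  np.cos(r)]])
    # Apply roll first, then pitch, then yaw to a body-frame vector
    return Rz @ Ry @ Rx
```

For example, `rotation_from_ypr(90, 0, 0)` maps the robot's forward axis onto the y axis of the level frame; the result is always orthonormal, so its transpose gives the inverse rotation.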

To georeference the acquired LiDAR data, an SPS930 Universal Total Station (Trimble, Sunnyvale, CA, USA) was used, with a distance measurement accuracy of ±(4 mm + 2 ppm) in prism tracking mode. For this tracking mode, a Trimble MT900 Machine Target Prism was mounted on top of the robot, on the same vertical axis as the IMU, at a height of 1.07 m to always guarantee line of sight to the total station (see Figure 1). To ensure that all heights measured by the total station were positive, the total station software was configured with an instrument height of 2 m above the ground, whereas the instrument was actually located only 1.2 m above the ground. The total station data were sent to a Yuma 2 rugged tablet computer (Trimble, Sunnyvale, CA, USA), which was connected to the robot computer via a serial RS232 interface for continuous data exchange. Five fixed ground control points were used to set up the total station. In this setup, the total station position was chosen as the origin of the coordinate system. If a predefined geodetic coordinate reference system (e.g., WGS84) is required, the absolute coordinates of the fixed ground points are measured and entered as parameters during the total station setup; this variation does not affect the presented methodology.
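The two numerical details in this paragraph can be checked with a little arithmetic. The sketch below evaluates the ±(4 mm + 2 ppm) tracking accuracy at a given distance and removes the 0.8 m offset (2.0 m configured minus 1.2 m actual) from a reported height. It assumes that the configured instrument height enters the reported heights as a simple additive term; the constant and function names are hypothetical.

```python
# Values from the text
CONFIGURED_INSTRUMENT_HEIGHT_M = 2.0  # height entered in the total station software
ACTUAL_INSTRUMENT_HEIGHT_M = 1.2      # real height of the instrument above the ground
HEIGHT_OFFSET_M = CONFIGURED_INSTRUMENT_HEIGHT_M - ACTUAL_INSTRUMENT_HEIGHT_M  # 0.8 m

def tracking_accuracy_mm(distance_m, constant_mm=4.0, ppm=2.0):
    """Distance accuracy bound of +/-(constant + ppm * distance), in millimetres."""
    # 1 ppm of a distance given in metres is 1e-6 m per metre = 1e-3 mm per metre
    return constant_mm + ppm * distance_m * 1e-3

def corrected_height_m(z_reported_m):
    """Remove the artificial offset introduced by the configured instrument height
    (assumes the offset is purely additive)."""
    return z_reported_m - HEIGHT_OFFSET_M
```

At 100 m from the instrument, the distance-dependent term adds only 0.2 mm, giving a bound of about ±4.2 mm, so over field-plot distances the constant 4 mm term dominates.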

Sensor acquisition and recording were handled by an embedded computer equipped with an i3 quad-core 3.3 GHz processor, 4 GB of RAM and an SSD. The robot computer ran Linux (Ubuntu 14.04) and used the Robot Operating System (ROS Indigo) middleware. The sensors communicated with the robot computer via RS232 for the IMU and the Yuma 2, and via Ethernet for the LiDARs. All sensor data were time-stamped according to the computer system time and saved individually at their respective acquisition frequencies: 25, 15, and 40 Hz for the LiDARs, total station, and IMU, respectively.
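The paragraph only states that each stream was time-stamped with the computer clock and stored at its own rate. One common way to relate the 15 Hz total station and 40 Hz IMU samples to the 25 Hz LiDAR scans is linear interpolation at the scan timestamps; the sketch below assumes that approach (it is not confirmed as the authors' method) and unwraps the heading so that interpolation does not jump across ±180°. Function names are hypothetical.

```python
import numpy as np

def interpolate_columns(t_query, t_src, values):
    """Linearly interpolate every column of `values` (sampled at increasing t_src) at t_query.
    Note: np.interp clamps queries outside [t_src[0], t_src[-1]] to the end values."""
    values = np.asarray(values, dtype=float)
    return np.column_stack([np.interp(t_query, t_src, values[:, k])
                            for k in range(values.shape[1])])

def interpolate_heading_deg(t_query, t_src, heading_deg):
    """Interpolate a heading in degrees without jumping across +/-180 deg."""
    unwrapped = np.unwrap(np.deg2rad(heading_deg))
    heading = np.rad2deg(np.interp(t_query, t_src, unwrapped))
    # Map back to the interval [-180, 180)
    return (heading + 180.0) % 360.0 - 180.0
```

For example, prism positions logged at 15 Hz can be resampled at every 25 Hz LiDAR timestamp with `interpolate_columns(t_lidar, t_ts, xyz)`, giving one position per scan.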

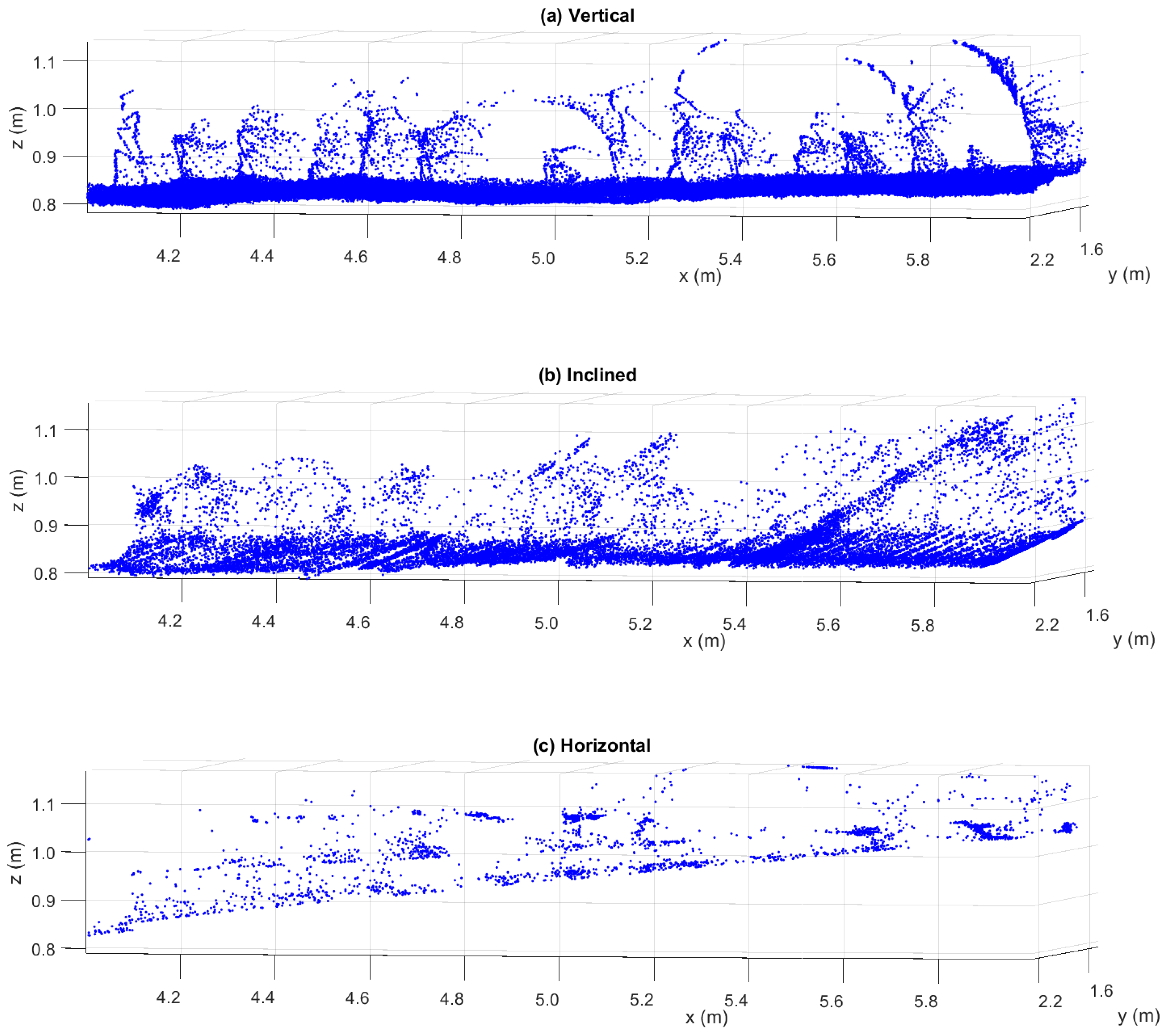

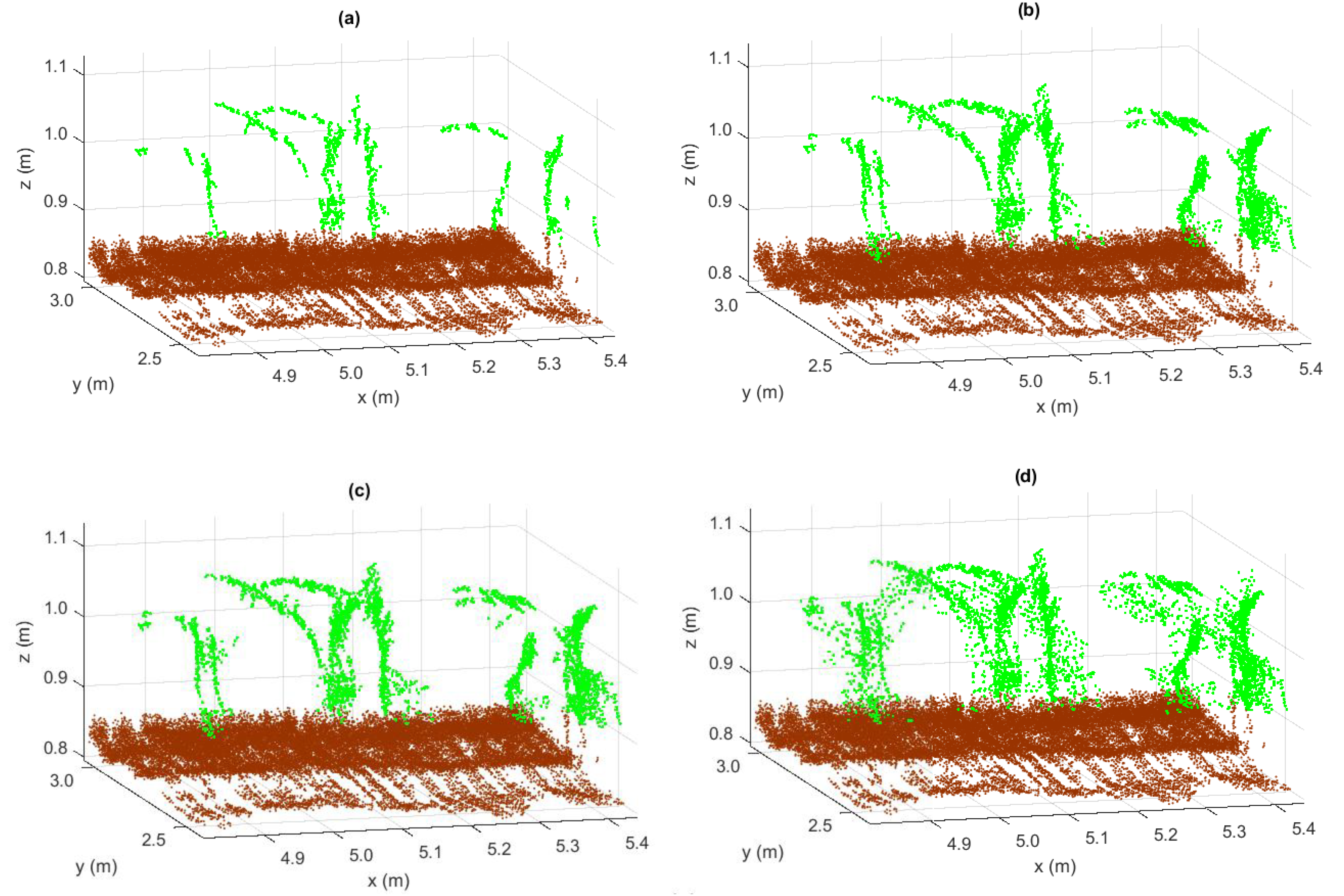

2.4. Point Cloud Overlapping

To develop the proposed methodology (i.e., georeferenced overlapping of point clouds for three-dimensional maize plant reconstruction), the processed data from each LiDAR orientation were evaluated individually (see Figure 5). This made it possible to determine which configurations were suitable for the goal of this research.

The characterization of the surroundings by the horizontal LiDAR orientation was directly affected by ground unevenness, because its scanned points were farther away than those of the other two LiDARs. On level ground, only a small part of each plant is detected: a single cross-section per plant at the LiDAR height. The soil is not detected, which hampers the noise removal procedure. If the robot pitches or rolls, or moves on sloping ground (e.g., the small downslope encountered by the “Talos” robot, see Figure 5c), the scan plane is no longer parallel to the ground plane. More incorrect plant detections are then obtained (not only at the LiDAR height), and the ground may be detected at larger distances. A horizontal LiDAR orientation could therefore be useful for row navigation of an autonomous vehicle, but not for plant reconstruction, as plant detections are limited. It was thus discarded for the purpose of the present study, which was based on the vertical and inclined LiDAR orientations.
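A short worked example of the slope argument: if a horizontal scan plane mounted at height h tilts downward by an angle θ relative to flat ground ahead, its forward beam meets the ground at a range of h / sin θ. The sketch assumes an idealized flat ground plane; the tilt angles are hypothetical, while the 0.2 m height and the 20 m maximum range come from the text and Table 1.

```python
import math

def ground_hit_distance(mount_height_m, pitch_down_deg):
    """Range at which the forward beam of a tilted 'horizontal' scan plane meets flat ground."""
    if pitch_down_deg <= 0.0:
        return math.inf  # level or tilted upward: the beam never reaches the ground
    return mount_height_m / math.sin(math.radians(pitch_down_deg))

def min_tilt_to_see_ground_deg(mount_height_m, max_range_m):
    """Smallest downward tilt that brings the ground inside the sensor's maximum range."""
    return math.degrees(math.asin(mount_height_m / max_range_m))
```

With h = 0.2 m, a downward tilt of 2° already places the ground about 5.73 m ahead, and any tilt above about 0.57° brings it within the 20 m operating range, so small pitch fluctuations are enough to add ground returns to a scan that was meant to cut through the plants.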

To evaluate the aerial point clouds of the selected LiDAR orientations (vertical and inclined), it was important to assess not only their shapes and resolutions but also the outlier effect at each orientation. According to a previous study: “An outlier is an observation that deviates so much from other observations. It can be caused by different sources and they are mainly measurements that do not belong to the local neighborhood and do not obey the local surface geometry” [32].

This effect is amplified when objects smaller than the laser beam footprint are detected. In these cases, instead of a clear detection in which the entire beam hits the object, the reflected pulse received by the LiDAR comes partly from the desired object and partly from an unwanted second target behind it, in proportions that depend on the shutter percentage (the fraction of the beam blocked by the first object).
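To see how easily maize organs can be smaller than the beam, the sketch below estimates the spot diameter at a given range from the two datasheet values in Table 1 (8 mm at the optics cover, 300 mm at 18 m). It assumes that the spot grows linearly between these two points, which is a simplification; the object widths used below are hypothetical.

```python
SPOT_AT_COVER_MM = 8.0   # Table 1: light spot size at the optics cover
SPOT_AT_18M_MM = 300.0   # Table 1: light spot size at 18 m

def footprint_mm(range_m):
    """Approximate spot diameter, assuming linear growth between the two datasheet values."""
    return SPOT_AT_COVER_MM + (SPOT_AT_18M_MM - SPOT_AT_COVER_MM) * range_m / 18.0

def is_partial_hit(object_width_mm, range_m):
    """True if an object narrower than the spot cannot block the whole beam."""
    return object_width_mm < footprint_mm(range_m)
```

Under this linear assumption, the spot is about 24 mm wide at 1 m, so a hypothetical 20 mm stem at that range would only partially block the beam, and part of the returned energy would come from whatever lies behind it.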

Figure 5. Raw point cloud data obtained with the (a) vertical, (b) inclined, and (c) horizontal LiDAR orientation while “Talos” was moving in the negative x-axis direction.


In this regard, Sanz-Cortiella et al. [33] evaluated the effect of partial blockages of the beam on the distance measured by the LiDAR. They concluded that the measured distance depends more on the blocked radiant power than on the blocked area of the beam, and that there is an area of influence whose extent depends on the shutter percentage. In their case, this area ranged from 1.5 to 2.5 m from the foreground target, so a second impact of the laser beam within that range would affect the distance reported by the sensor. When the second target was outside this area of influence, the sensor ignored it and returned the distance to the first target. Understanding this effect was therefore essential before overlapping the point clouds, in order to adapt the noise removal filter accordingly.

The vertical LiDAR orientation essentially provided a projection of the plants. Its resolution along the direction of travel depended strongly on the robot’s speed, so keeping the speed constant (through automatic control) was very important. The inclined orientation, in contrast, had a wider field of view, and each plant was detected more than once, at different beam angles and at different times; for this reason, the robot’s speed was less critical than for the vertical orientation. For the inclined LiDAR, however, ground roughness was highly important because detections were made at larger distances: an orientation error produces a position error that grows with range, so small inaccuracies in the coordinate transformations had a greater effect on the precision of the point cloud (see Figure 5).
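Both effects in this paragraph reduce to simple formulas: a fixed vertical scan plane samples the crop every v / f metres along the row, and an orientation error δ displaces a point measured at range d by about d · tan δ. The sketch below evaluates them; the 25 Hz scan rate is from Table 1, while the robot speed and the ranges used below are hypothetical because they are not given in this section.

```python
import math

SCAN_FREQUENCY_HZ = 25.0  # LMS 111 scanning frequency (Table 1)

def along_track_spacing_m(speed_m_s, scan_frequency_hz=SCAN_FREQUENCY_HZ):
    """Distance travelled between two consecutive scans of a fixed scan plane."""
    return speed_m_s / scan_frequency_hz

def orientation_error_offset_m(range_m, angle_error_deg):
    """Displacement of a measured point caused by an orientation error at a given range."""
    return range_m * math.tan(math.radians(angle_error_deg))
```

With a hypothetical speed of 0.5 m/s, consecutive vertical scans are 0.02 m apart, and doubling the speed doubles the gap. A 1.0° tilt error (the IMU's dynamic pitch/roll accuracy) displaces a point by about 9 mm at 0.5 m but by about 35 mm at 2 m, which illustrates why the longer-range inclined scans are more sensitive to ground roughness.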

Regarding the outlier effect, the vertical LiDAR orientation produced accumulated outliers, located mainly behind the plant relative to the LiDAR and aligned with the projection angle of the scan. The outlier shape remained constant because each plant was scanned only once. In contrast, the outlier shape obtained with the inclined orientation was not constant: each plant was detected at different scan angles and at different times, which required more exhaustive filtering (see Figure 5).

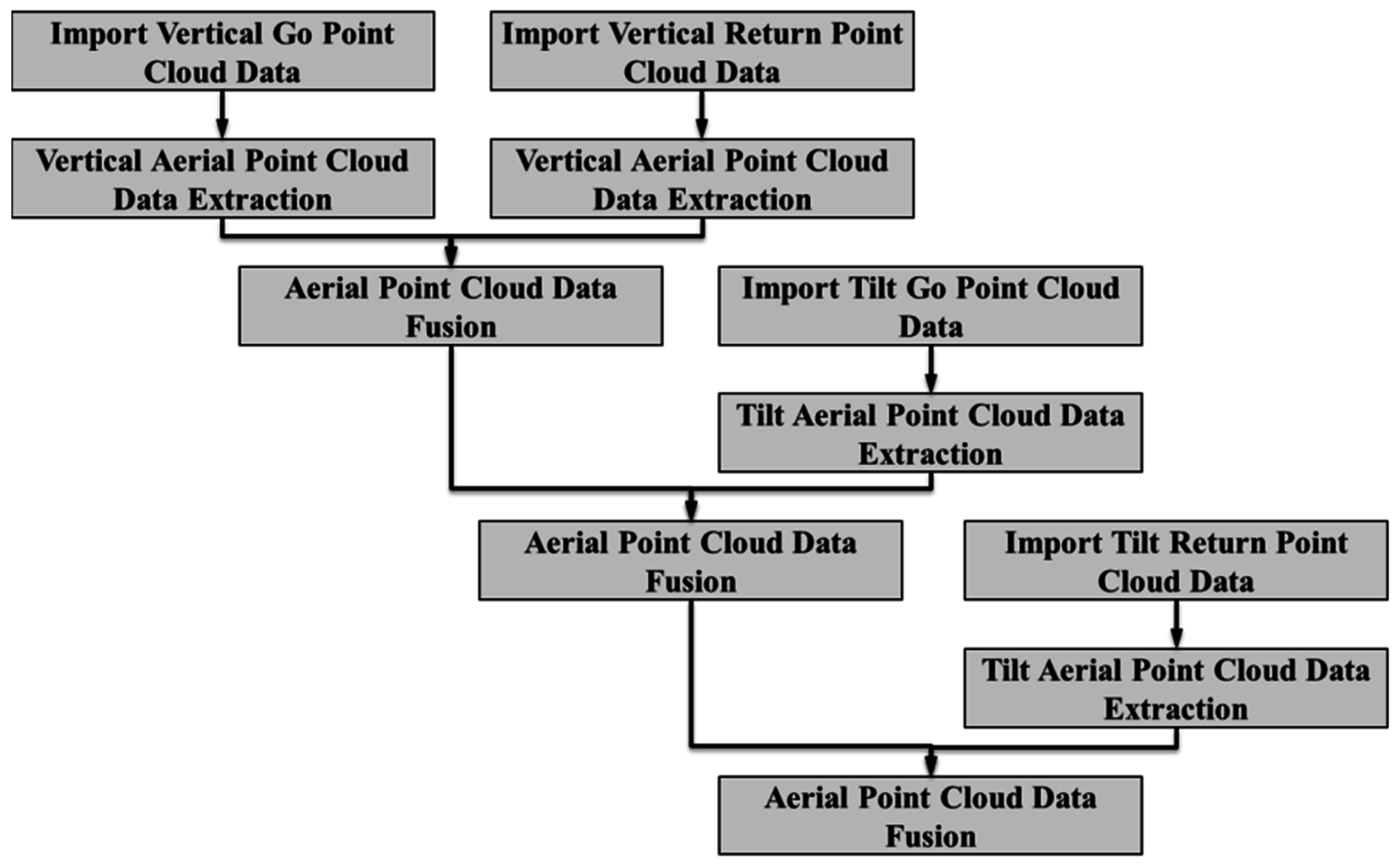

The 3D point cloud overlapping was performed for each path and crop row using both travel directions of the robot (go and return). Thus, after manually delimiting the distance, four different 3D point clouds were used for each row: vertical go, vertical return, inclined go, and inclined return.

The aerial point cloud was extracted using a sequence of pre-filters. First, points that did not provide new information were removed with a gridding filter, reducing the size of the point cloud. Then, the noise in the point cloud was reduced with a sparse outlier removal filter [34], which made it easier to apply the RANSAC algorithm used to separate aerial points from ground points. The applied pre-filters were:

1. Gridding filter: returns a downsampled point cloud using a box grid filter. GridStep specifies the size of a 3D box; points within the same box are merged into a single point in the output (see Table 3).
2. Noise removal filter: returns a filtered point cloud from which outliers have been removed. A point is considered an outlier if the average distance to its K nearest neighbors is above a threshold [34] (see Table 3). For the inclined LiDAR orientation, as mentioned earlier, a second noise removal filter had to be applied in series.
3. RANSAC plane fit: this function, developed by Peter Kovesi [35], uses the RANSAC algorithm to robustly fit a plane. It only requires the distance threshold between a data point and the fitted plane, which determines whether the point is an inlier. Plane detection, which in our case was equivalent to ground detection, was performed for every defined evaluation interval of vehicle advance (see Table 3).
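The gridding step can be sketched as a voxel (box grid) average: every point is assigned to the cubic box that contains it, and each occupied box is replaced by the centroid of its points. The NumPy version below is an illustrative re-implementation, not the tool used in the study. Table 3 lists GridStep as a volume, (3 × 3 × 3) × 10⁻⁹ m³, which corresponds to a box edge of 3 mm; the function names are hypothetical.

```python
import numpy as np

def edge_from_volume(volume_m3):
    """Edge length of a cubic box with the given volume."""
    return volume_m3 ** (1.0 / 3.0)

def grid_average(points, grid_step):
    """Merge all points falling in the same cubic box (edge = grid_step) into their centroid."""
    points = np.asarray(points, dtype=float)
    # Integer index of the box that contains each point
    keys = np.floor(points / grid_step).astype(np.int64)
    # Group points that share a box
    _, inverse, counts = np.unique(keys, axis=0, return_inverse=True, return_counts=True)
    inverse = inverse.reshape(-1)  # some NumPy versions return a 2D inverse when axis is set
    sums = np.zeros((counts.size, points.shape[1]))
    np.add.at(sums, inverse, points)
    return sums / counts[:, None]
```

Usage: `grid_average(cloud, edge_from_volume(27e-9))` merges points that fall within the same 3 mm box, which thins dense regions while preserving the overall shape of the plants.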

Table 3. Point cloud overlapping parameters.


| LiDAR Orient. | GridStep (m³) | Noise Removal I: Neighbors | Noise Removal I: Std. Threshold | Noise Removal II: Neighbors | Noise Removal II: Std. Threshold | RANSAC: Eval. Interval (m) | RANSAC: Threshold (m) |
|---|---|---|---|---|---|---|---|
| Vertical | (3 × 3 × 3) × 10⁻⁹ | 10 | 1 | - | - | 0.195 | 0.07 |
| Inclined | (3 × 3 × 3) × 10⁻⁹ | 5 | 0.3 | 10 | 1 | 0.195 | 0.07 |

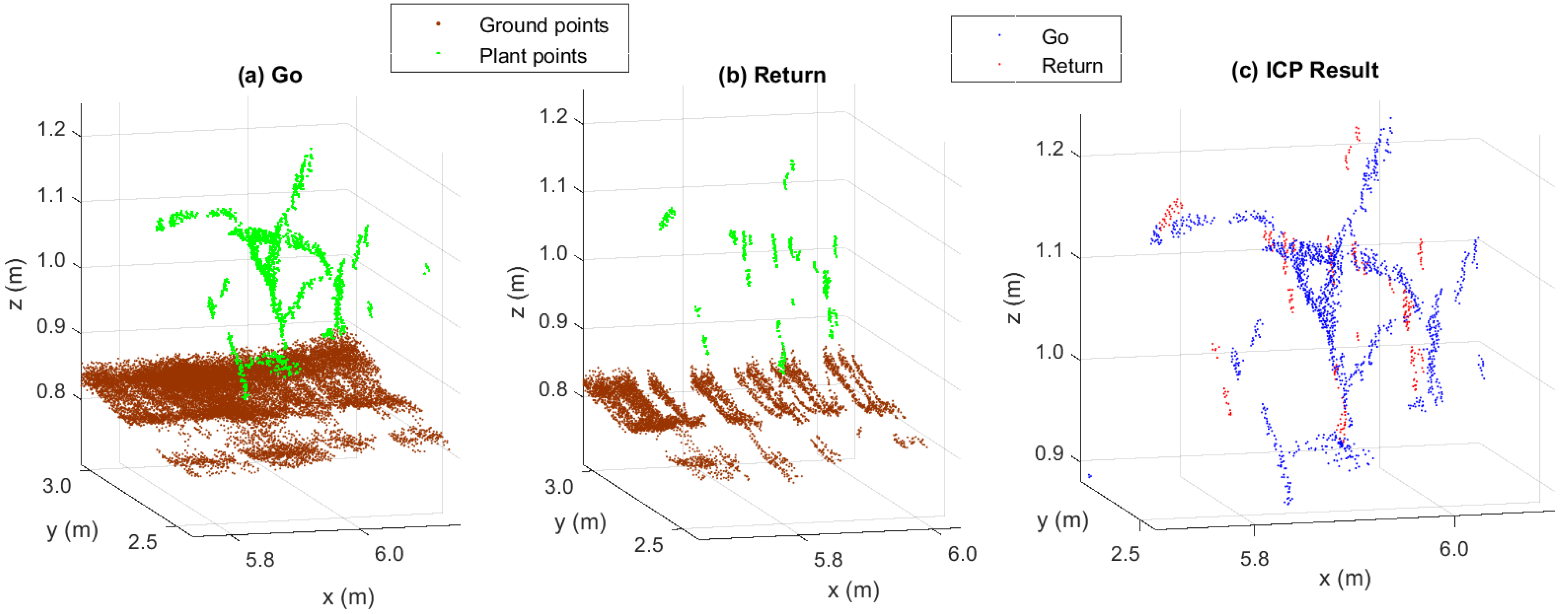

The point clouds were overlapped by serial fusion, using only the aerial points resulting from the previous process. First, the aerial vertical point clouds (go and return) were fused; then the aerial inclined point cloud from the go direction was added, and finally the aerial inclined point cloud from the return direction (see Figure 6). Each fusion required the following steps:

4. Iterative Closest Point (ICP): the ICP algorithm takes two point clouds as input and returns the rigid transformation (rotation matrix R and translation vector T) that best aligns the new point cloud with the previous reference point cloud, using a least-squares minimization criterion [36]. The initial reference point cloud was the one obtained with the vertical LiDAR orientation in the go direction.
5. Gridding filter: the same 3D box size as in the aerial point cloud extraction was used (see Table 3).
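The serial fusion can be sketched with a textbook point-to-point ICP: nearest-neighbor correspondences are found, the least-squares rigid transform for those pairs is computed via SVD (the Kabsch solution), and the process repeats until the mean squared error stops decreasing. After each alignment, the merged cloud is passed through the same box grid averaging. This is an illustrative NumPy sketch with brute-force neighbor search, suitable only for small clouds; it is not the implementation used in the study, and all function names are hypothetical.

```python
import numpy as np

def grid_average(points, grid_step):
    """Merge points that fall in the same cubic box (edge = grid_step) into their centroid."""
    keys = np.floor(points / grid_step).astype(np.int64)
    _, inv, counts = np.unique(keys, axis=0, return_inverse=True, return_counts=True)
    inv = inv.reshape(-1)
    sums = np.zeros((counts.size, points.shape[1]))
    np.add.at(sums, inv, points)
    return sums / counts[:, None]

def best_rigid_transform(src, dst):
    """Least-squares rotation R and translation T mapping paired src points onto dst (SVD)."""
    c_src, c_dst = src.mean(axis=0), dst.mean(axis=0)
    H = (src - c_src).T @ (dst - c_dst)
    U, _, Vt = np.linalg.svd(H)
    # Correct an improper rotation (reflection) if the determinant is negative
    D = np.diag([1.0, 1.0, np.sign(np.linalg.det(Vt.T @ U.T))])
    R = Vt.T @ D @ U.T
    return R, c_dst - R @ c_src

def icp(new, ref, max_iter=50, tol=1e-12):
    """Point-to-point ICP aligning `new` to `ref`; returns the cumulative R and T."""
    R_total, T_total = np.eye(3), np.zeros(3)
    current = np.array(new, dtype=float)
    prev_err = np.inf
    for _ in range(max_iter):
        # Brute-force nearest neighbours: O(N*M) memory, adequate for small demo clouds only
        d2 = ((current[:, None, :] - ref[None, :, :]) ** 2).sum(axis=2)
        nn = d2.argmin(axis=1)
        err = d2[np.arange(len(current)), nn].mean()
        if prev_err - err < tol:  # no further improvement
            break
        R, T = best_rigid_transform(current, ref[nn])
        current = current @ R.T + T
        R_total, T_total = R @ R_total, R @ T_total + T
        prev_err = err
    return R_total, T_total

def fuse_serially(clouds, grid_step):
    """Fuse clouds in order; the first one is the initial reference (e.g., vertical, go)."""
    fused = np.asarray(clouds[0], dtype=float)
    for cloud in clouds[1:]:
        R, T = icp(cloud, fused)
        aligned = np.asarray(cloud, dtype=float) @ R.T + T
        fused = grid_average(np.vstack([fused, aligned]), grid_step)
    return fused
```

Following the order described above, the call would be `fuse_serially([vertical_go, vertical_return, inclined_go, inclined_return], 0.003)`, where the four cloud names are placeholders. Because ICP only converges locally, this design relies on the georeferenced clouds already being roughly aligned.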

Figure 6. Overlapping process followed for each path and row.


Table 3 shows the parameter values chosen for the point cloud overlapping. To achieve a fine resolution, i.e., a detailed characterization of the plant impacts when merging points, a box grid filter edge length of 3 mm was selected. For ground removal, the RANSAC algorithm was applied over evaluation intervals of 1.5 times the theoretical distance between plants. This ensured that the algorithm adapted to the ground relief and that each interval contained maize plants rather than only ground detections, which would cause the algorithm to malfunction. To ensure complete ground detection, the distance threshold was set to 3.5 times the statistical LiDAR error.
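The interval-wise ground removal can be sketched as follows. The cloud is cut into slices of 0.195 m along the direction of travel (the value in Table 3, which equals 1.5 × 0.13 m, so the implied theoretical plant spacing is 0.13 m). A plane is fitted to each slice with a basic RANSAC loop using the 0.07 m inlier threshold, and the inliers are labeled as ground. This is a plain NumPy re-implementation of the general RANSAC idea, not Kovesi's function; travel along the x axis, the iteration count and the function names are assumptions.

```python
import numpy as np

def ransac_plane(points, threshold, iters=200, rng=None):
    """Boolean inlier mask of the plane with the most inliers found by RANSAC."""
    rng = np.random.default_rng(0) if rng is None else rng
    best = np.zeros(len(points), dtype=bool)
    if len(points) < 3:
        return best
    for _ in range(iters):
        p0, p1, p2 = points[rng.choice(len(points), 3, replace=False)]
        n = np.cross(p1 - p0, p2 - p0)
        norm = np.linalg.norm(n)
        if norm < 1e-12:  # collinear sample: no unique plane
            continue
        n = n / norm
        # Point-to-plane distance for every point in the slice
        inliers = np.abs((points - p0) @ n) < threshold
        if inliers.sum() > best.sum():
            best = inliers
    return best

def ground_mask_by_interval(points, interval=0.195, threshold=0.07, iters=200, seed=0):
    """Fit one ground plane per slice of vehicle advance (assumed to be along x)."""
    rng = np.random.default_rng(seed)
    x = points[:, 0]
    slice_id = np.floor((x - x.min()) / interval).astype(int)
    mask = np.zeros(len(points), dtype=bool)
    for s in np.unique(slice_id):
        idx = np.flatnonzero(slice_id == s)
        mask[idx] = ransac_plane(points[idx], threshold, iters, rng)
    return mask
```

The aerial points are then `points[~ground_mask_by_interval(points)]`. Fitting one plane per short slice lets the ground model follow gentle relief that a single global plane would miss.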

Regarding the noise removal filter parameters, the number of nearest neighbor points was used to adjust the sensitivity of the filter to noise: decreasing the number of nearest neighbors produced a more sensitive filter. The outlier threshold, in turn, sets how many standard deviations above the mean of the average neighbor distances (computed over all points) a point's average distance may lie before it is classified as an outlier; decreasing the outlier threshold therefore produces a more sensitive filter. The number of neighbors was evaluated while keeping the outlier threshold at its default value of one. This was done by trial and error, selecting the filter values that best reduced the point cloud noise without losing too much useful information.
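The outlier criterion described above can be written in a few lines. For each point, the mean distance to its K nearest neighbors is computed; a point is kept if that value does not exceed the global mean plus `std_ratio` standard deviations. This is a brute-force NumPy sketch for small clouds, not the filter implementation used in the study; the parameter names `k` and `std_ratio` are hypothetical stand-ins for the "Neighbors" and "Std. threshold" columns of Table 3.

```python
import numpy as np

def statistical_outlier_removal(points, k=10, std_ratio=1.0):
    """Keep points whose mean distance to their k nearest neighbours is
    <= mean + std_ratio * std of that quantity over the whole cloud."""
    points = np.asarray(points, dtype=float)
    d = np.sqrt(((points[:, None, :] - points[None, :, :]) ** 2).sum(axis=2))
    np.fill_diagonal(d, np.inf)  # exclude each point from its own neighbours
    knn = np.sort(d, axis=1)[:, :k]
    mean_knn = knn.mean(axis=1)
    cutoff = mean_knn.mean() + std_ratio * mean_knn.std()
    keep = mean_knn <= cutoff
    return points[keep], keep
```

Because the cutoff is mean + `std_ratio` · std, lowering `std_ratio` (e.g., from 1 to 0.3) can only lower the cutoff and therefore never keeps more points; this is the stricter behavior needed for the more dispersed inclined clouds.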

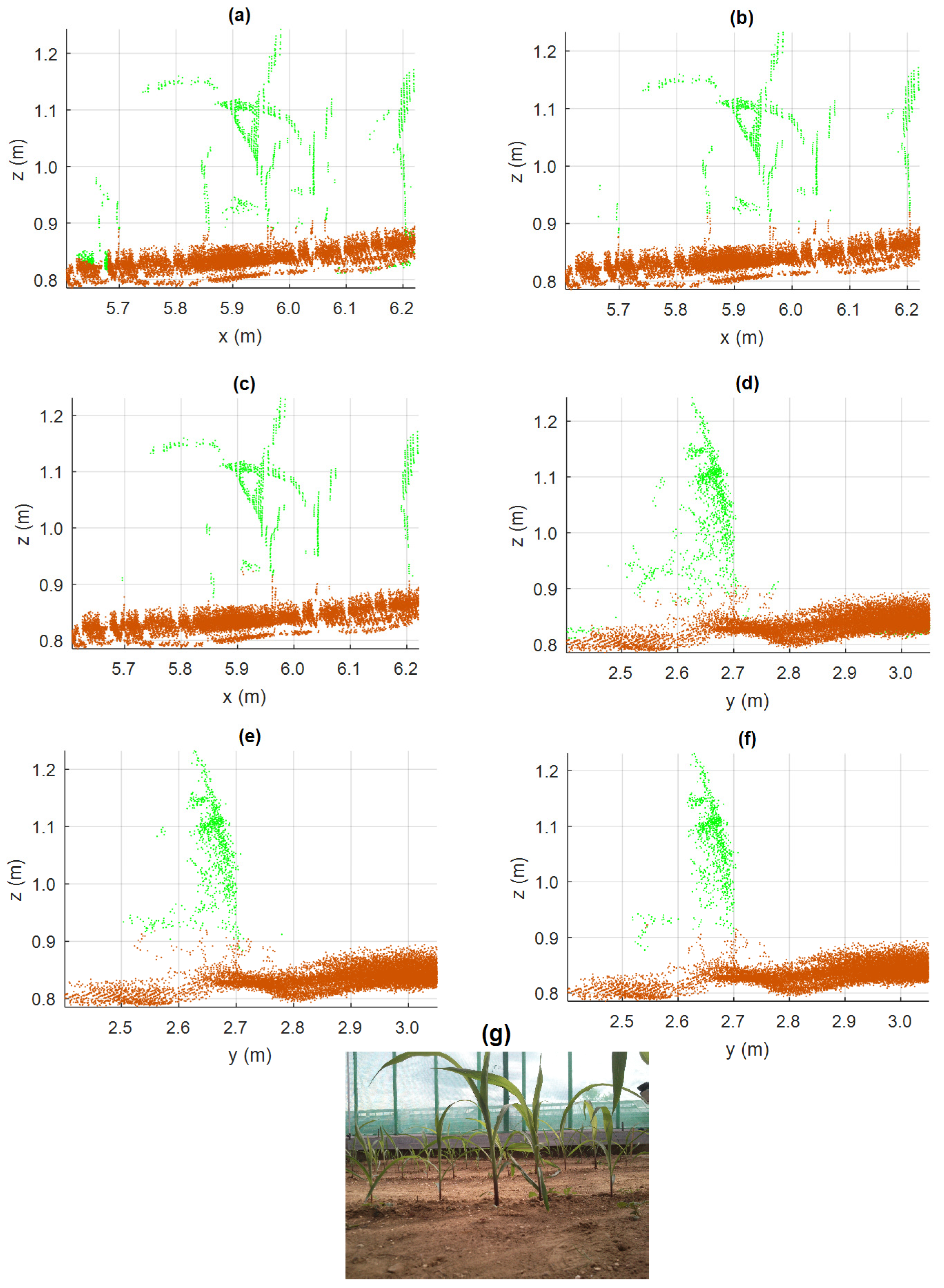

Figure 7 presents the front (XZ) and lateral (YZ) views from the noise removal filter evaluation for the vertical orientation, with five, 10, and 20 nearest neighbor points.

Figure 7 shows that increasing the number of neighbors removed more outliers but also eliminated useful information (see the maize plant removed on the left side of Figure 7c). For the vertical orientation, the number of neighbors was therefore set to 10. The inclined orientation, whose point cloud was more dispersed and less dense, required an additional noise removal filter applied beforehand; its parameters were chosen following the same approach as for the vertical orientation.

Figure 7. Plant reconstruction during the evaluation of the noise removal filter for the vertical orientation. (a–c) XZ view considering five, 10, and 20 nearest neighbor points. (d–f) YZ view considering five, 10, and 20 nearest neighbor points. (g) Image of the front view of the plants.
