Using UAV-Based Photogrammetry and Hyperspectral Imaging for Mapping Bark Beetle Damage at Tree-Level

Abstract

1. Introduction

2. Materials and Methods

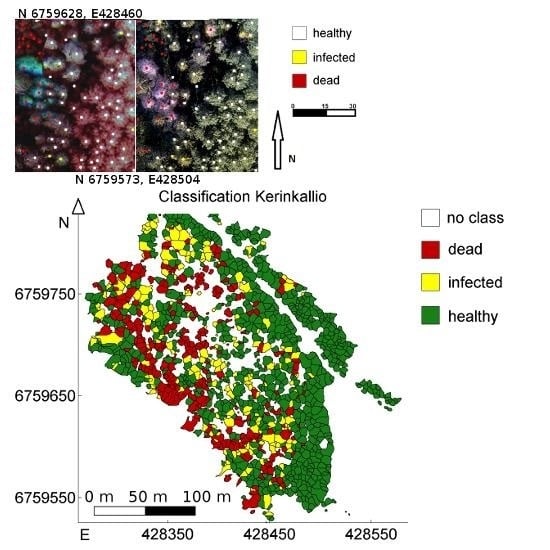

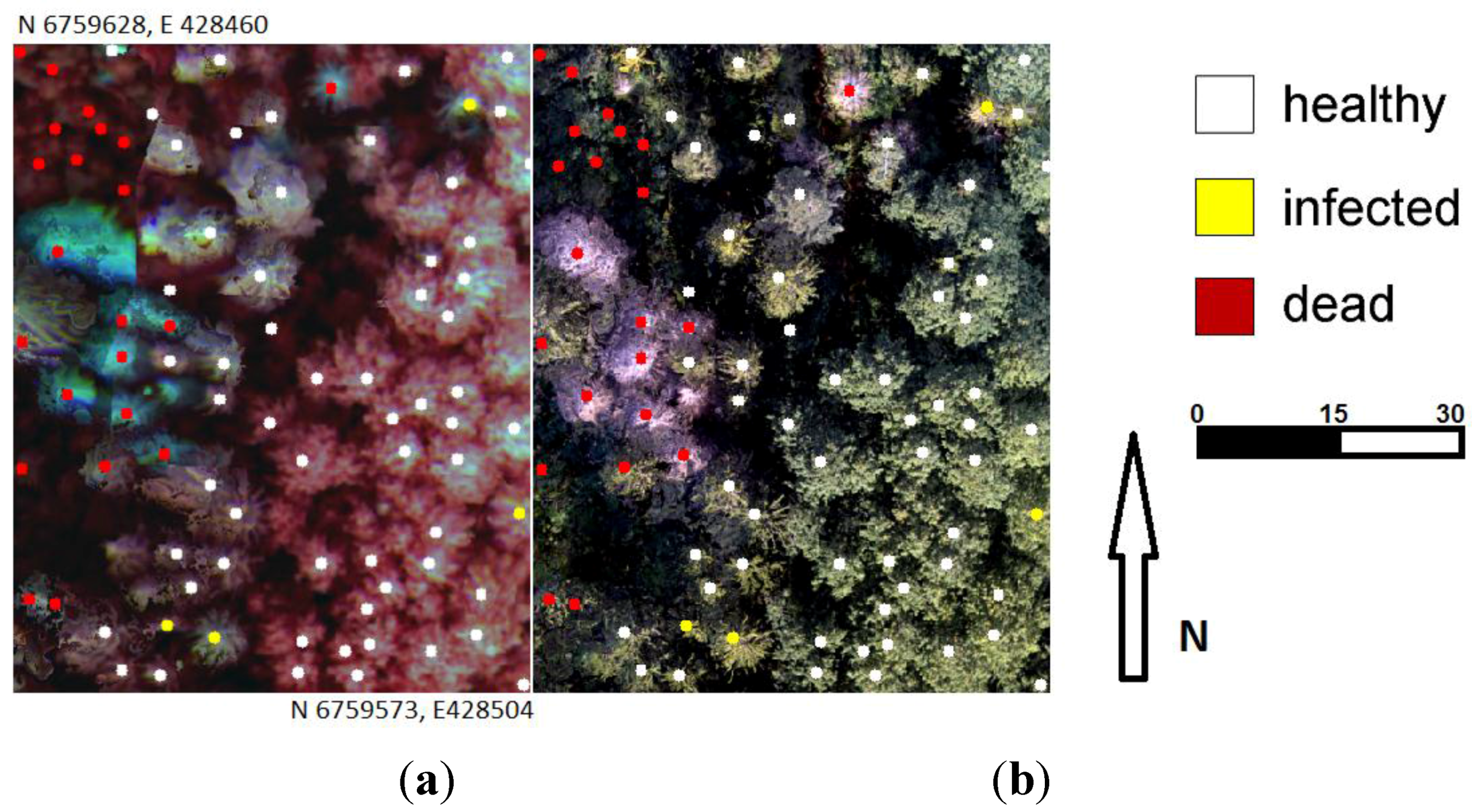

2.1. Test Area and Ground Truth

| Class (2013) | n | Dmean (cm) | Dmin (cm) | Dmax (cm) | Dsd (cm) | Hmean (m) | Hmin (m) | Hmax (m) | Hsd (m) |
|---|---|---|---|---|---|---|---|---|---|
| Healthy | 36 | 37.4 | 26.2 | 60.2 | 8.4 | 29.7 | 23.9 | 35.3 | 4.6 |
| Infested | 15 | 43.4 | 29.7 | 62.0 | 10.4 | 30.8 | 25.9 | 34.8 | 4.3 |
| Dead | 27 | 39.6 | 26.8 | 52.7 | 7.6 | 30.2 | 29.3 | 32.0 | 1.3 |

| Area | Healthy | Infested | Dead | Total |
|---|---|---|---|---|
| Mukkula | 26 | 4 | 9 | 39 |
| Kerinkallio | 10 | 11 | 18 | 39 |

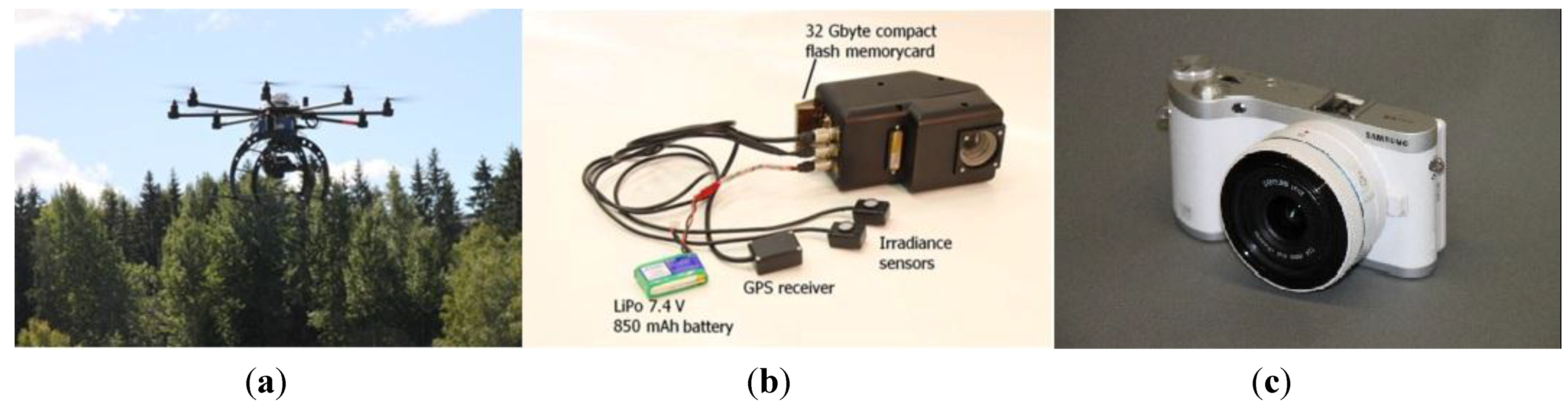

2.2. Remote Sensing Acquisition

| Area | Camera | GSD (cm) | Flying Alt. (m) | Time (UTC+3) | Solar Elevation (°) | Sun Azimuth (°) | Exposure (ms) | Overlap Forward; Side (%) |
|---|---|---|---|---|---|---|---|---|
| Mukkula | FPI | 9.0 | 55–90 | 10:29–10:35 a.m. | 31.88 | 130.06 | 6 | 55; 55 |
| Mukkula | RGB | 2.4 | 55–90 | 11:20–11:27 a.m. | 35.98 | 143.97 | | 70; 65 |
| Kerinkallio | FPI | 9.0 | 70–90 | 1:48–1:55 p.m. | 40.01 | 190.27 | 8 | 55; 55 |
| Kerinkallio | RGB | 2.4 | 70–90 | 1:10–1:17 p.m. | 40.35 | 178.05 | | 70; 65 |

| Band | L0 (nm) | FWHM (nm) | dt to First Exposure (s) | ds (Computed) to First Exposure (m) |
|---|---|---|---|---|
| 1 | 516.50 | 20.00 | 0.825 | 4.1 |
| 2 | 522.30 | 16.00 | 1.5 | 7.5 |
| 3 | 525.90 | 22.00 | 0.9 | 4.5 |
| 4 | 526.80 | 18.00 | 1.2 | 6.0 |
| 5 | 538.20 | 24.00 | 0.975 | 4.9 |
| 6 | 539.20 | 20.00 | 1.275 | 6.4 |
| 7 | 548.90 | 18.00 | 1.35 | 6.8 |
| 8 | 550.60 | 24.00 | 1.05 | 5.3 |
| 9 | 561.60 | 16.00 | 1.65 | 8.3 |
| 10 | 568.30 | 32.00 | 0.075 | 0.4 |
| 11 | 592.20 | 22.00 | 0.15 | 0.8 |
| 12 | 607.50 | 28.00 | 0.225 | 1.1 |
| 13 | 613.40 | 30.00 | 0.3 | 1.5 |
| 14 | 626.30 | 30.00 | 0.375 | 1.9 |
| 15 | 699.00 | 18.00 | 1.65 | 8.3 |
| 16 | 699.90 | 30.00 | 0.525 | 2.6 |
| 17 | 706.20 | 28.00 | 0.6 | 3.0 |
| 18 | 712.00 | 22.00 | 1.275 | 6.4 |
| 19 | 712.40 | 28.00 | 0.675 | 3.4 |
| 20 | 725.80 | 22.00 | 1.35 | 6.8 |
| 21 | 755.60 | 28.00 | 0.825 | 4.1 |
| 22 | 772.80 | 32.00 | 0.9 | 4.5 |
| 23 | 793.80 | 30.00 | 0.975 | 4.9 |
| 24 | 813.90 | 30.00 | 1.05 | 5.3 |
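The computed offsets ds in the band table follow from multiplying each band's time offset dt by a constant flying speed. The sketch below checks this relationship; the speed of 5 m/s is an assumption inferred from the tabulated values (for example, 1.5 s corresponds to 7.5 m), not a figure stated in this section. With that speed, every computed offset agrees with the tabulated one to within 0.05 m, which is consistent with rounding to one decimal.

```python
# Sketch: check that the tabulated along-track offsets ds equal v * dt.
# ASSUMPTION: a constant flying speed of 5 m/s, inferred from the table values.

DT_S = [0.825, 1.5, 0.9, 1.2, 0.975, 1.275, 1.35, 1.05, 1.65, 0.075, 0.15, 0.225,
        0.3, 0.375, 1.65, 0.525, 0.6, 1.275, 0.675, 1.35, 0.825, 0.9, 0.975, 1.05]
DS_M = [4.1, 7.5, 4.5, 6.0, 4.9, 6.4, 6.8, 5.3, 8.3, 0.4, 0.8, 1.1,
        1.5, 1.9, 8.3, 2.6, 3.0, 6.4, 3.4, 6.8, 4.1, 4.5, 4.9, 5.3]

ASSUMED_SPEED_M_S = 5.0  # hypothetical constant speed, not given in the text


def along_track_offset(dt_s: float, speed_m_s: float = ASSUMED_SPEED_M_S) -> float:
    """Distance flown between the first exposure and a later band's exposure (m)."""
    return dt_s * speed_m_s


def max_discrepancy(dts, dss, speed_m_s: float = ASSUMED_SPEED_M_S) -> float:
    """Largest absolute difference between computed and tabulated offsets (m)."""
    return max(abs(along_track_offset(dt, speed_m_s) - ds) for dt, ds in zip(dts, dss))


if __name__ == "__main__":
    print(f"max |v*dt - ds| = {max_discrepancy(DT_S, DS_M):.3f} m")
```

Because the FPI camera records bands sequentially, offsets of several metres between bands of one image block are why the band images need separate orientation before they can be combined.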

2.3. The Workflow for Analysis

- System corrections of the images using laboratory calibrations, spectral smile correction, and dark signal corrections

- Determination of image orientations

- Use of dense matching methods to create a three-dimensional (3D) geometric model of the object

- Calculation of a radiometric imaging model to transform the digital numbers (DNs) to reflectance

- Calculation of the reflectance output products: spectral image mosaics and bidirectional reflectance factor (BRF) data

- Identification of individual trees

- Spectral feature extraction for each tree

- The final classification
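The radiometric step in the workflow above transforms DNs to reflectance. A common, simple form of such a transformation is the empirical line method: for each band, a straight line is fitted between the DNs of reference targets and their known reflectances. The sketch below shows only that linear fit; the radiometric imaging model used in this study may contain further terms (for example, for illumination changes or directional effects), so this is a simplified stand-in rather than the authors' model, and the panel values in the example are hypothetical.

```python
# Minimal empirical-line sketch: per-band linear DN -> reflectance model.
# ASSUMPTION: a purely linear model per band; the study's model may be more complex.
from typing import Sequence, Tuple


def fit_empirical_line(dns: Sequence[float],
                       reflectances: Sequence[float]) -> Tuple[float, float]:
    """Least-squares fit of reflectance = gain * DN + offset for one band."""
    if len(dns) != len(reflectances) or len(dns) < 2:
        raise ValueError("need at least two (DN, reflectance) pairs")
    n = len(dns)
    mean_dn = sum(dns) / n
    mean_r = sum(reflectances) / n
    sxx = sum((x - mean_dn) ** 2 for x in dns)
    if sxx == 0:
        raise ValueError("reference DNs must not all be equal")
    sxy = sum((x - mean_dn) * (y - mean_r) for x, y in zip(dns, reflectances))
    gain = sxy / sxx
    offset = mean_r - gain * mean_dn
    return gain, offset


def dn_to_reflectance(dn: float, gain: float, offset: float) -> float:
    """Apply the fitted linear model to a single DN."""
    return gain * dn + offset


if __name__ == "__main__":
    # Hypothetical dark and bright panel measurements for one band.
    panel_dns = [1200.0, 5200.0]
    panel_refl = [0.10, 0.50]
    g, o = fit_empirical_line(panel_dns, panel_refl)
    print(g, o, dn_to_reflectance(3200.0, g, o))
```

With more than two panels the same function gives the least-squares line, which reduces the influence of noise in any single panel measurement.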

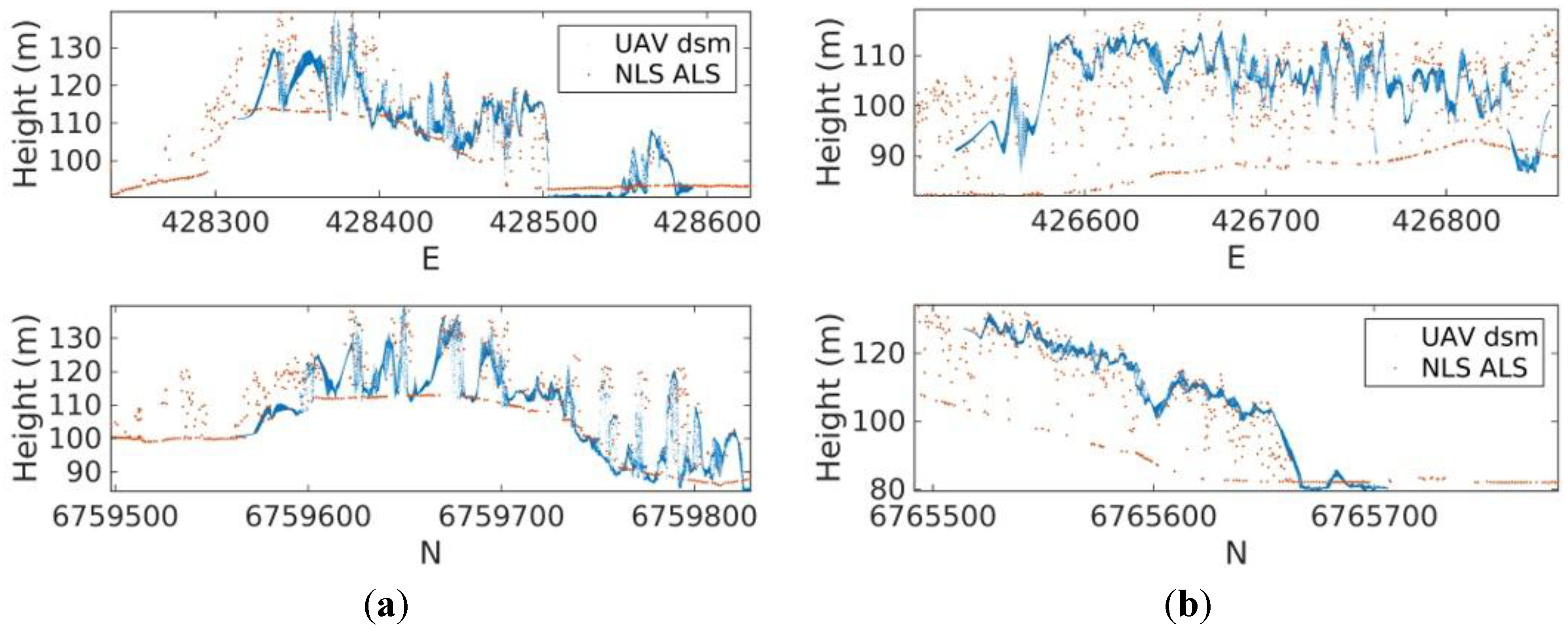

2.4. Geometric Processing

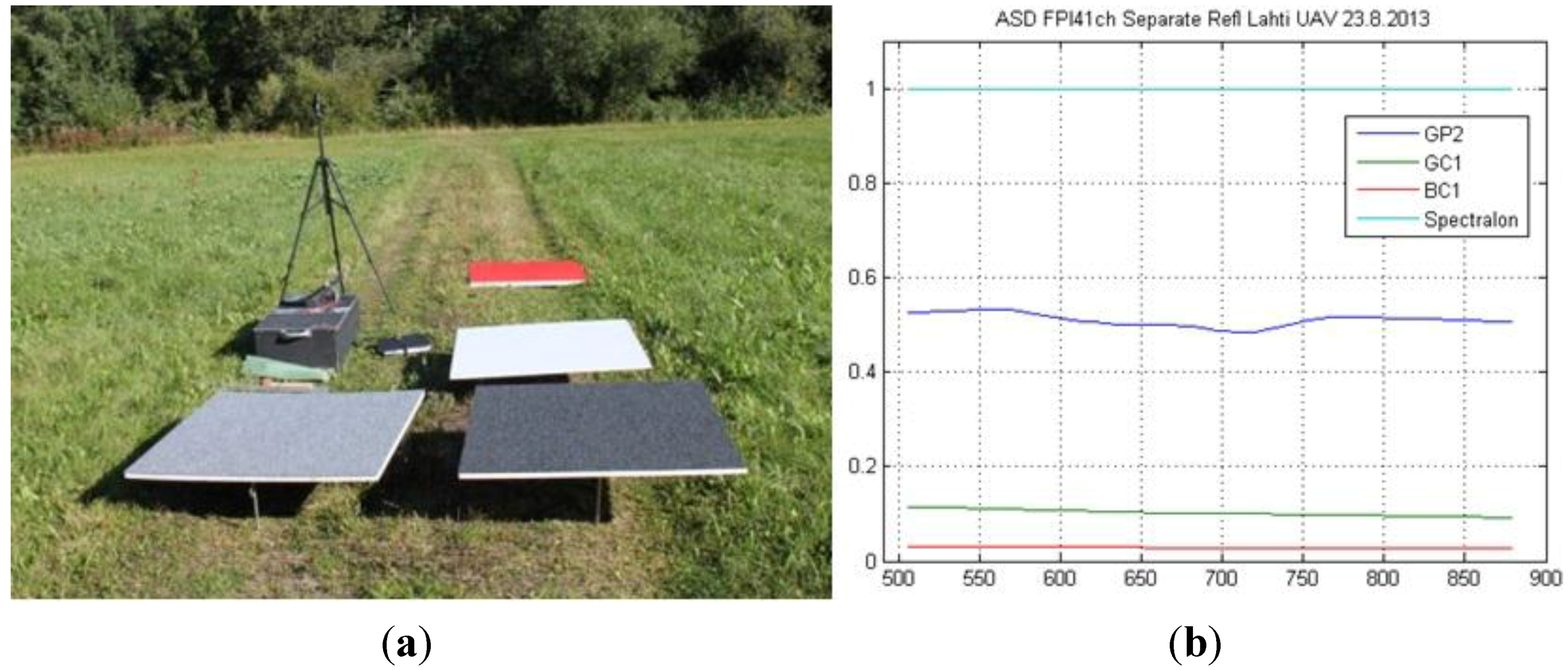

2.5. Radiometric Processing and Mosaic Generation

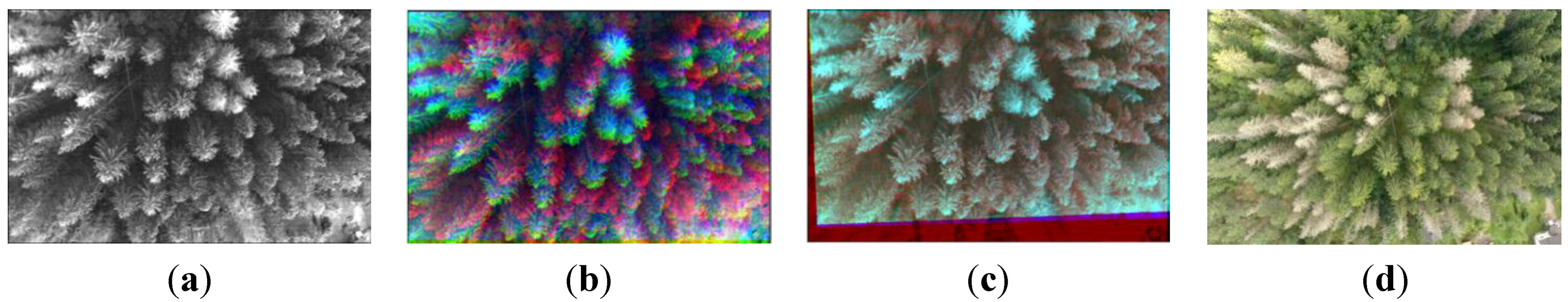

2.6. Individual Tree Detection

2.7. Spectrum and Feature Extraction

- The original 22-band spectra.

- Three different normalized channel ratios (indices) were computed using the reflectance (R) of two bands with wavelengths λ1 and λ2.
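A normalized channel ratio of two bands is commonly defined as (R(λ1) − R(λ2)) / (R(λ1) + R(λ2)). The sketch below assumes that form; which three band pairs the study used is not stated in this section, so the wavelengths and reflectances in the example are hypothetical. Bands are selected by the nearest centre wavelength.

```python
# Sketch of a normalized channel ratio computed from a per-tree spectrum.
# ASSUMPTION: the index has the normalized-difference form; band pairs are hypothetical.
from typing import Dict


def nearest_band(spectrum: Dict[float, float], wavelength_nm: float) -> float:
    """Reflectance of the band whose centre wavelength is closest to wavelength_nm."""
    key = min(spectrum, key=lambda wl: abs(wl - wavelength_nm))
    return spectrum[key]


def normalized_ratio(spectrum: Dict[float, float], wl1: float, wl2: float) -> float:
    """(R(wl1) - R(wl2)) / (R(wl1) + R(wl2)); lies in [-1, 1] for non-negative R."""
    r1 = nearest_band(spectrum, wl1)
    r2 = nearest_band(spectrum, wl2)
    denom = r1 + r2
    if denom == 0:
        raise ValueError("reflectance sum is zero")
    return (r1 - r2) / denom


if __name__ == "__main__":
    # Hypothetical mean reflectances of one tree crown at three FPI band centres.
    tree = {550.60: 0.08, 699.00: 0.05, 793.80: 0.40}
    print(round(normalized_ratio(tree, 793.8, 699.0), 3))
```

Normalizing by the sum makes the index less sensitive to overall brightness differences between crowns, for example between sunlit and partly shaded trees.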

2.8. Classification

3. Results

3.1. Geometric Processing

| Area | N Images | Flying Height (m) | Tie Points | Reprojection Error (pix) | GPS RMSE X; Y; Z (m) | Point Density (points/m²) |
|---|---|---|---|---|---|---|
| Kerinkallio | 357 | 90 | 75,008 | 0.505 | 0.989; 0.900; 0.875 | 423.91 |
| Mukkula | 291 | 90 | 76,700 | 0.353 | 1.031; 1.946; 0.386 | 70.64 |

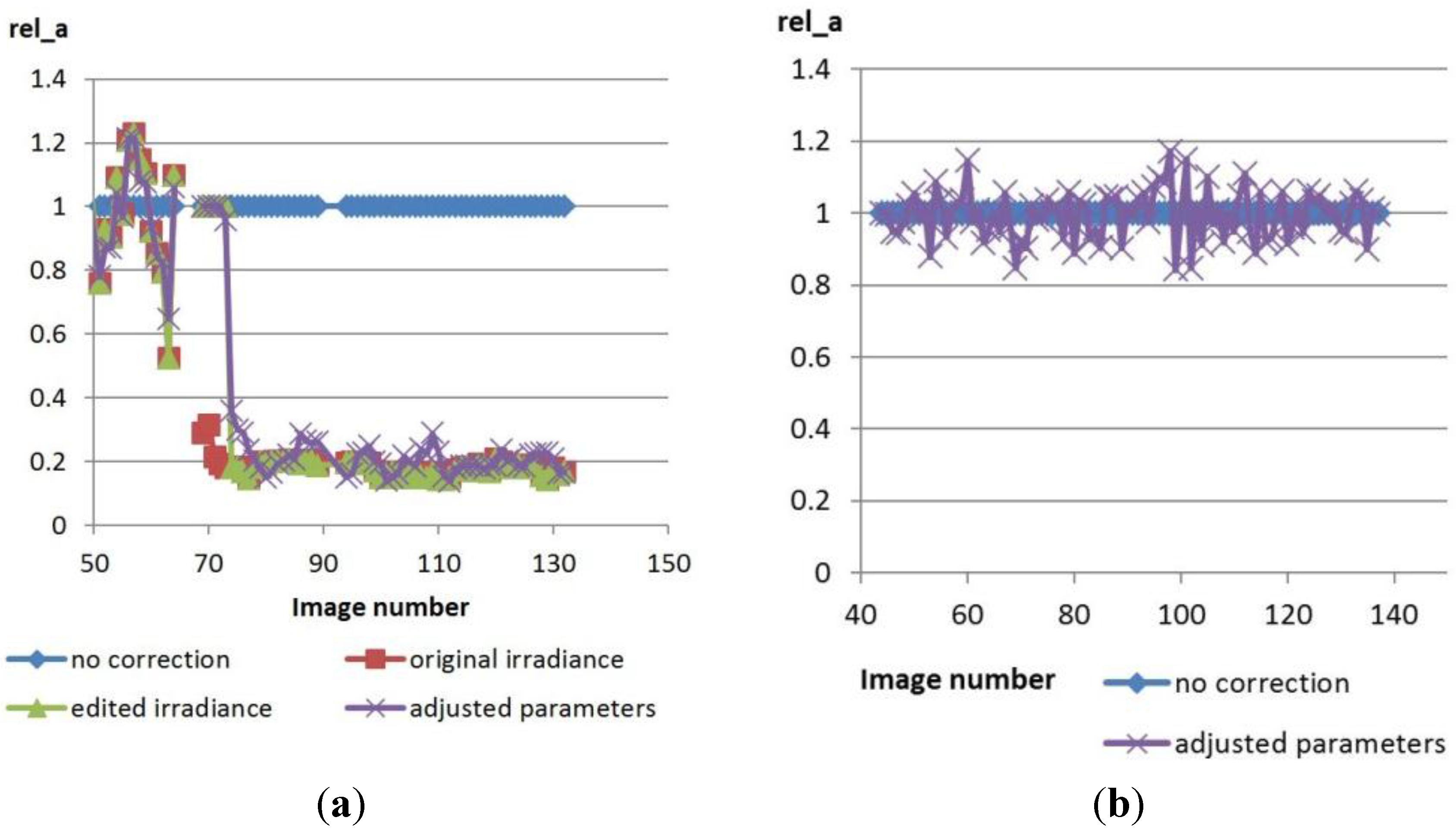

3.2. Radiometric Processing

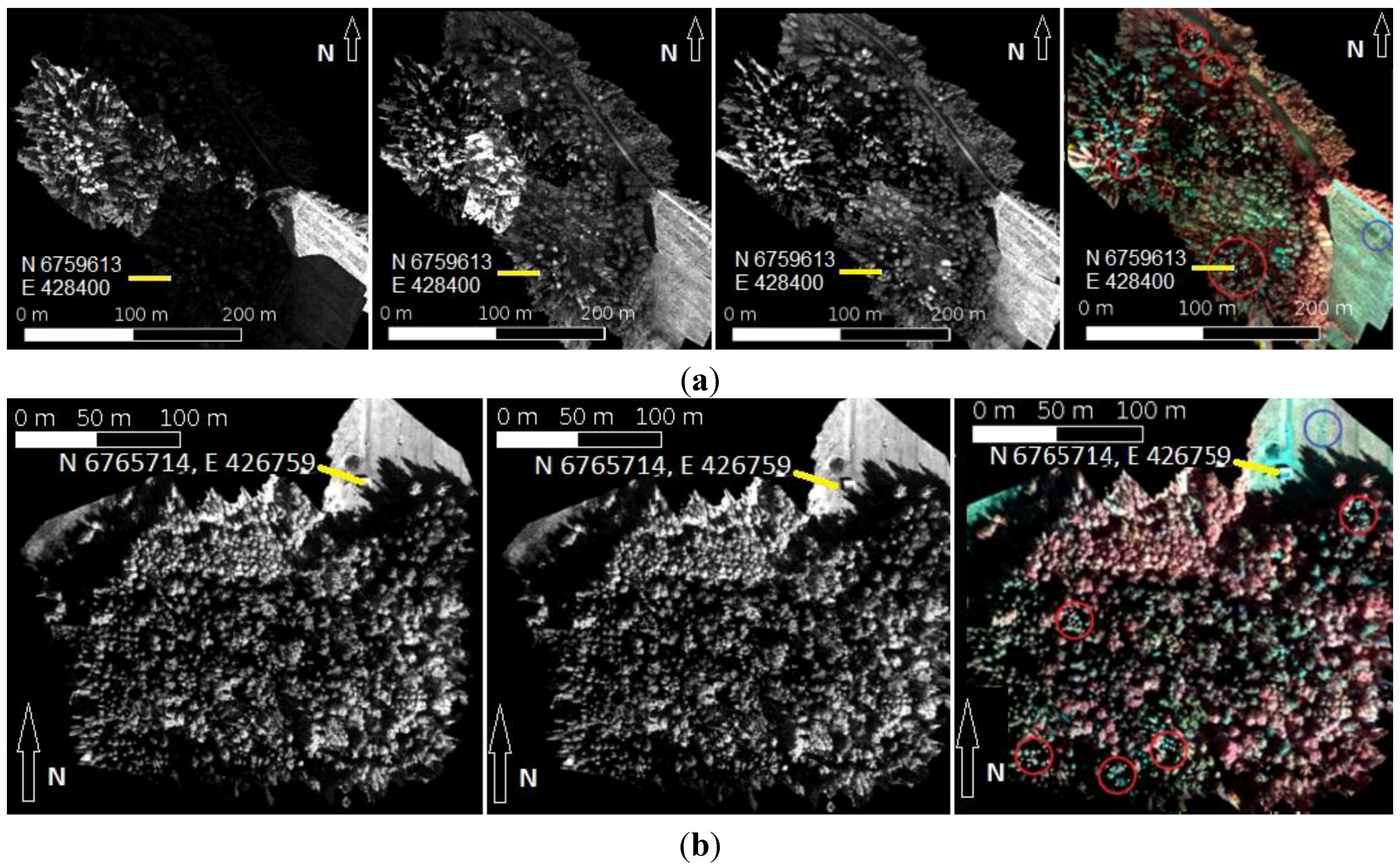

3.3. Individual Tree Detection

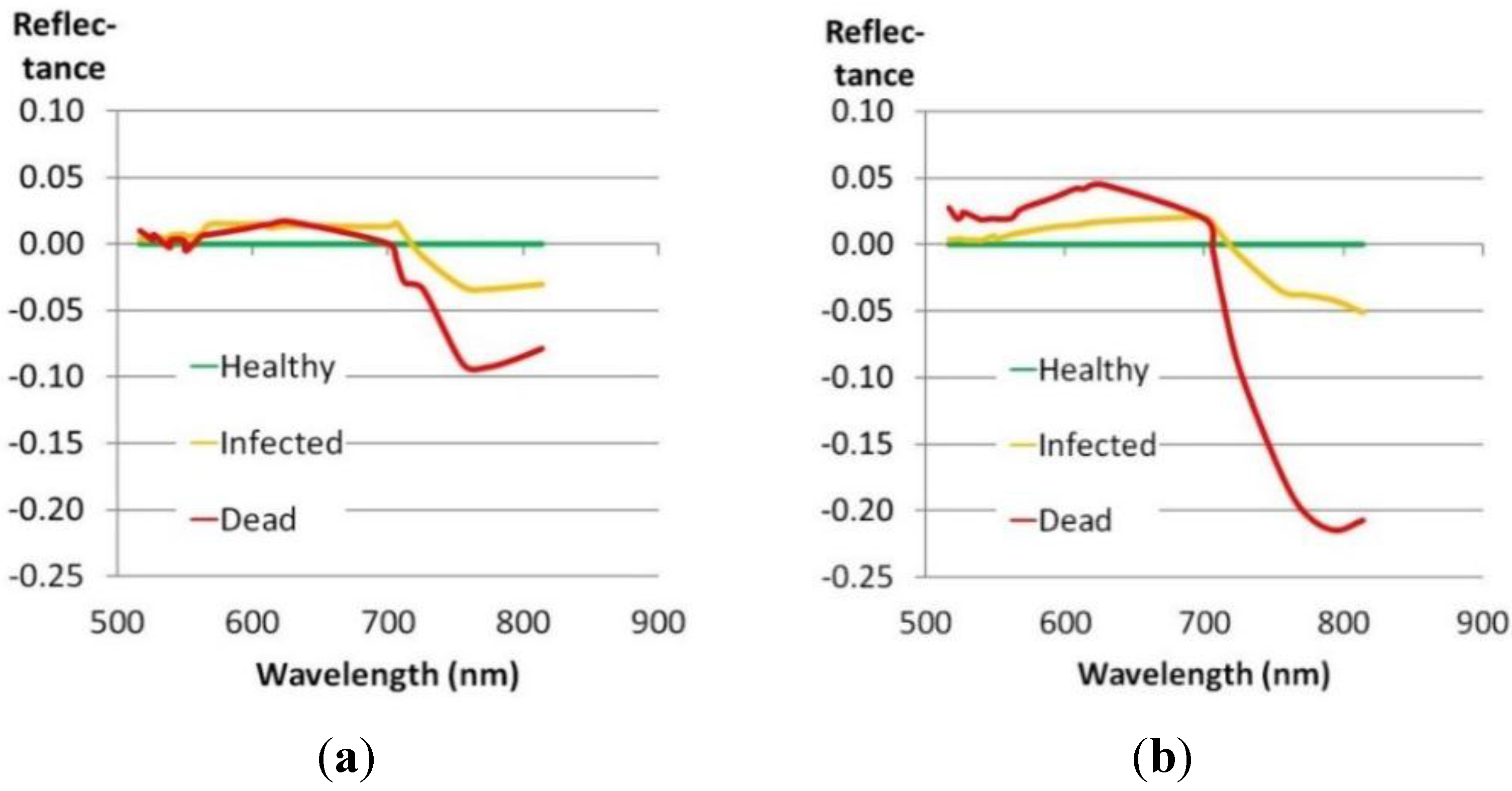

3.4. Spectral Data of Trees

3.5. Classification Results

| Features | Area | N | k | Number of Classes | Overall Accuracy (%) | Kappa | Producer’s Accuracy, Healthy (%) | Producer’s Accuracy, Infested (%) | Producer’s Accuracy, Dead (%) |
|---|---|---|---|---|---|---|---|---|---|
| Spectrum | both | 78 | 4 | 3 | 75.64 | 0.31 | 77.78 | 46.67 | 88.89 |
| Indices | both | 78 | 4 | 3 | 75.64 | 0.60 | 86.11 | 33.33 | 85.19 |
| Spectrum | Kerinkallio | 39 | 3 | 3 | 71.79 | 0.56 | 50.00 | 63.64 | 88.89 |
| Indices | Kerinkallio | 39 | 3 | 3 | 69.23 | 0.53 | 50.00 | 54.55 | 88.89 |
| Spectrum | Mukkula | 39 | 3 | 3 | 79.49 | 0.55 | 88.46 | 0.00 | 88.89 |
| Indices | Mukkula | 39 | 3 | 3 | 89.74 | 0.79 | 96.15 | 50.00 | 88.89 |

| Features | Area | N | k | Number of Classes | Overall Accuracy (%) | Kappa | Producer’s Accuracy, Healthy (%) | Producer’s Accuracy, Infested (%) | Producer’s Accuracy, Dead (%) |
|---|---|---|---|---|---|---|---|---|---|
| Spectrum | both | 78 | 4 | 2 | 90.48 | 0.81 | 91.67 | - | 88.89 |
| Indices | both | 78 | 4 | 2 | 90.48 | 0.80 | 94.44 | - | 85.19 |
| Spectrum | Kerinkallio | 39 | 3 | 2 | 89.29 | 0.77 | 90.00 | - | 88.89 |
| Indices | Kerinkallio | 39 | 3 | 2 | 85.71 | 0.70 | 90.00 | - | 83.33 |
| Spectrum | Mukkula | 39 | 3 | 2 | 91.43 | 0.78 | 92.31 | - | 88.89 |
| Indices | Mukkula | 39 | 3 | 2 | 94.29 | 0.85 | 96.15 | - | 88.89 |
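The accuracy measures in the classification tables can all be derived from a confusion matrix. In the two-class case each producer's accuracy fixes its off-diagonal cell, so the "Spectrum, both" two-class row implies that 33 of the 36 healthy trees and 24 of the 27 dead trees were classified correctly; this in turn implies that the two-class figures are computed over the 63 healthy and dead trees. The sketch below computes overall accuracy, Cohen's kappa (the unweighted form; whether the study used a weighted variant is not stated here) and producer's accuracies from such a matrix.

```python
# Sketch: overall accuracy, unweighted Cohen's kappa and producer's accuracies
# from a confusion matrix. cm[i][j] = trees of reference class i classified as j.
from typing import List, Sequence, Tuple


def accuracy_metrics(cm: Sequence[Sequence[int]]) -> Tuple[float, float, List[float]]:
    k = len(cm)
    n = sum(sum(row) for row in cm)
    diag = sum(cm[i][i] for i in range(k))
    row_tot = [sum(cm[i]) for i in range(k)]                        # reference counts
    col_tot = [sum(cm[i][j] for i in range(k)) for j in range(k)]   # predicted counts
    p_o = diag / n                                                  # observed agreement
    p_e = sum(r * c for r, c in zip(row_tot, col_tot)) / n ** 2     # chance agreement
    kappa = (p_o - p_e) / (1 - p_e)
    producers = [cm[i][i] / row_tot[i] for i in range(k)]           # per-class recall
    return p_o, kappa, producers


if __name__ == "__main__":
    # Matrix implied by the "Spectrum, both" two-class row (healthy, dead).
    oa, kappa, pa = accuracy_metrics([[33, 3], [3, 24]])
    print(round(100 * oa, 2), round(kappa, 2), [round(100 * p, 2) for p in pa])
    # → 90.48 0.81 [91.67, 88.89]
```

The reproduced values match the tabulated overall accuracy, kappa and producer's accuracies for that row, which is a quick consistency check on the table.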

4. Discussion

4.1. Monitoring Infestation of Ips typographus

4.2. Geometric Performance

4.3. Radiometric Aspects

4.4. Individual Tree Detection

4.5. Classification

4.6. Outlook

5. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest


© 2015 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution license (http://creativecommons.org/licenses/by/4.0/).


Näsi, R.; Honkavaara, E.; Lyytikäinen-Saarenmaa, P.; Blomqvist, M.; Litkey, P.; Hakala, T.; Viljanen, N.; Kantola, T.; Tanhuanpää, T.; Holopainen, M. Using UAV-Based Photogrammetry and Hyperspectral Imaging for Mapping Bark Beetle Damage at Tree-Level. Remote Sens. 2015, 7, 15467-15493. https://doi.org/10.3390/rs71115467
