The second dataset was collected by the AVIRIS sensor over an area in Salinas Valley, California, with a spatial resolution of 3.7 m. The image comprises 512 × 217 pixels with 204 bands (after 20 water absorption bands are removed). It mainly contains vegetables, bare soils, and vineyard fields. The ground truth map contains 16 classes. With the same sensor, another scene was collected over northwest Indiana's Indian Pines test site in 1992. The scene consists of 145 × 145 pixels with a spatial resolution of 20 m. There are also 16 different land-cover classes.

3.1. Parameter Tuning

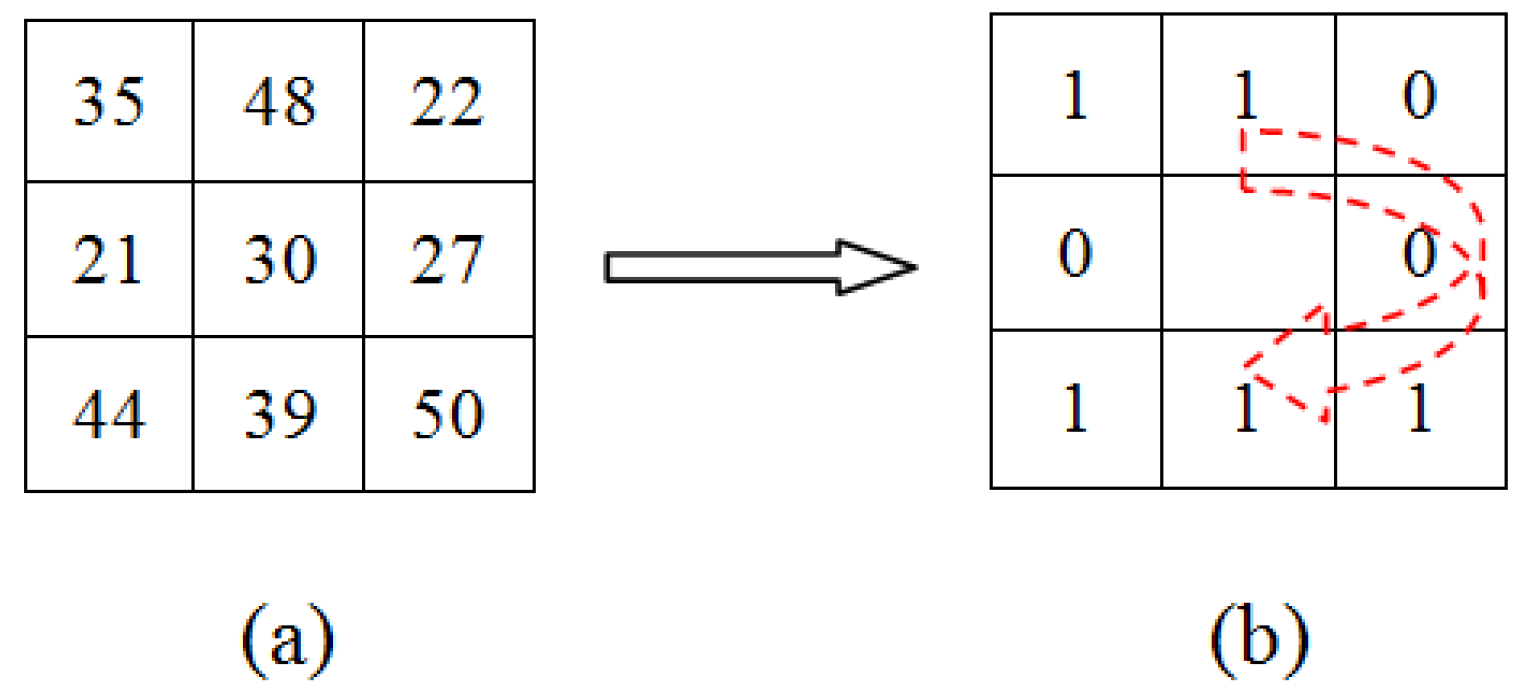

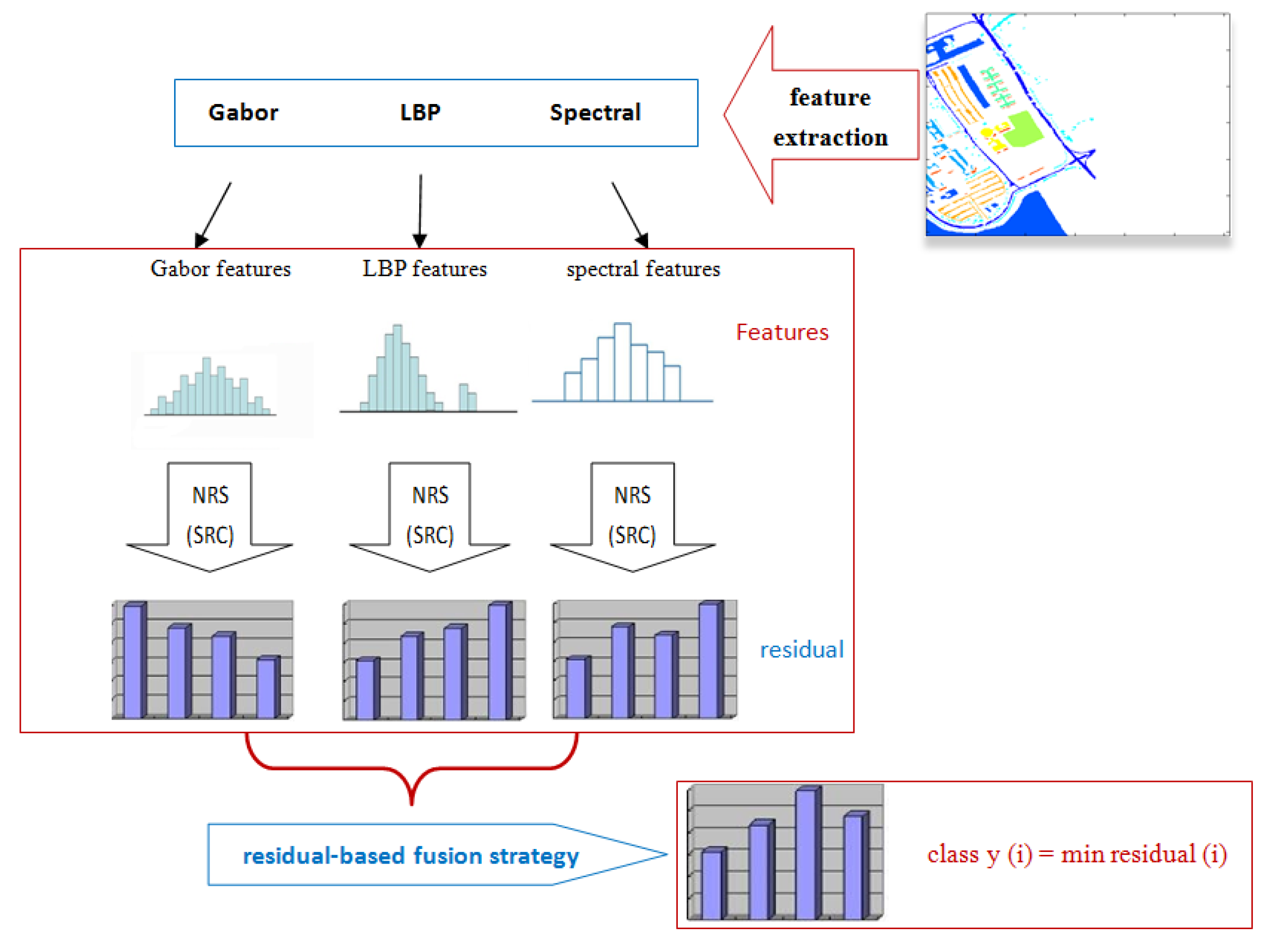

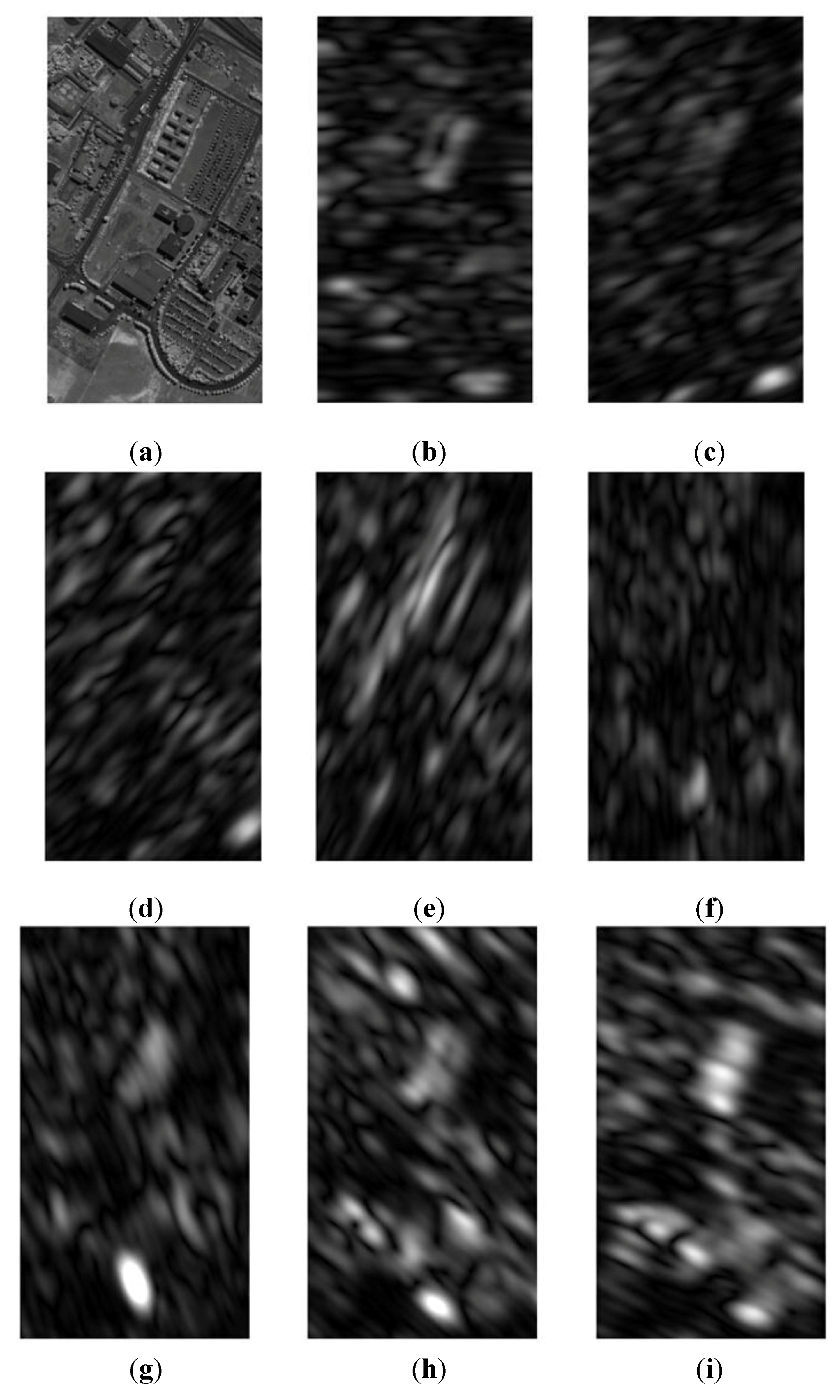

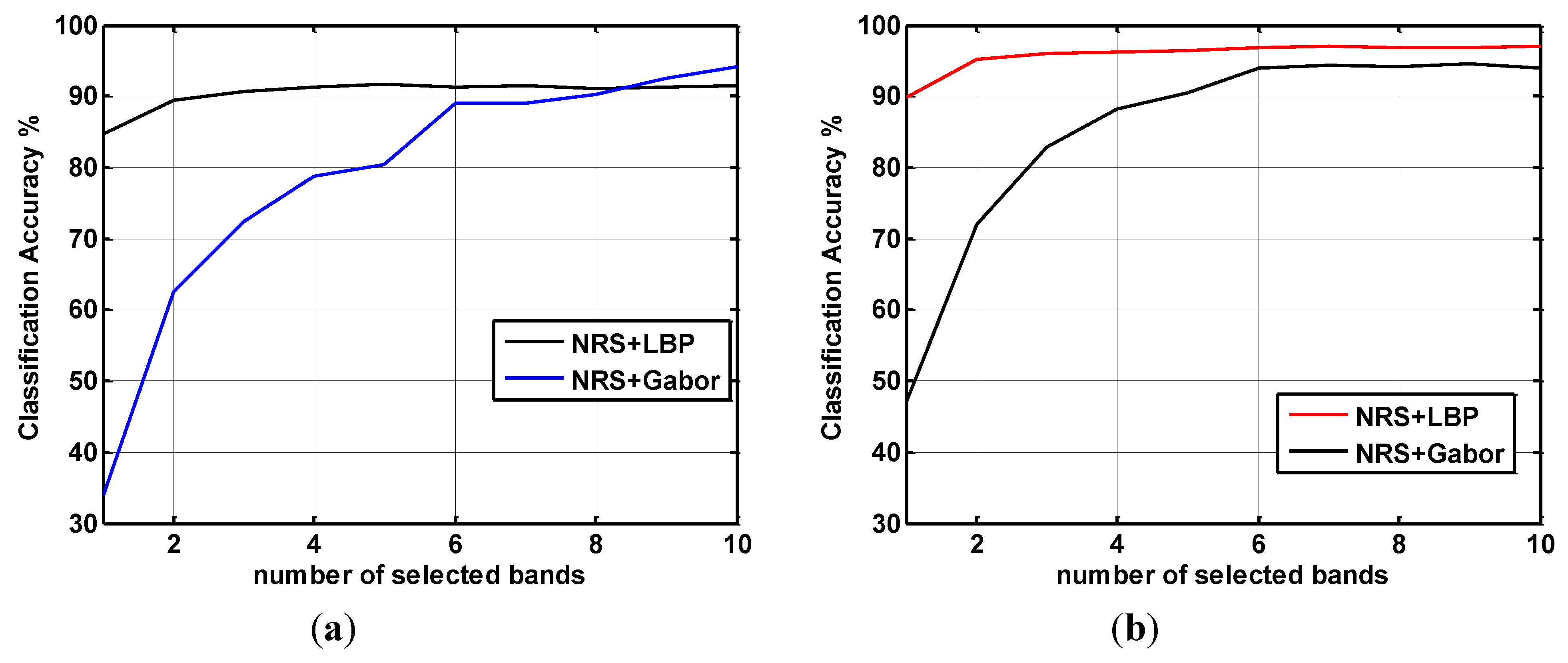

Several significant parameters of the proposed classification framework are tuned with the leave-one-out cross-validation (LOOCV) strategy based on the available training samples. For Gabor filtering, all eight orientations shown in Figure 3 are used in this work according to [47], the parameter bw in Equation (4) is set to five, and 10 bands are used for the Gabor filter according to our empirical study shown in Figure 4. Note that selecting more bands may not significantly improve classification accuracy, but it does increase computational cost because of the resulting higher feature dimensionality. Thus, only three bands are selected for the LBP operator and 10 bands for the Gabor filter.

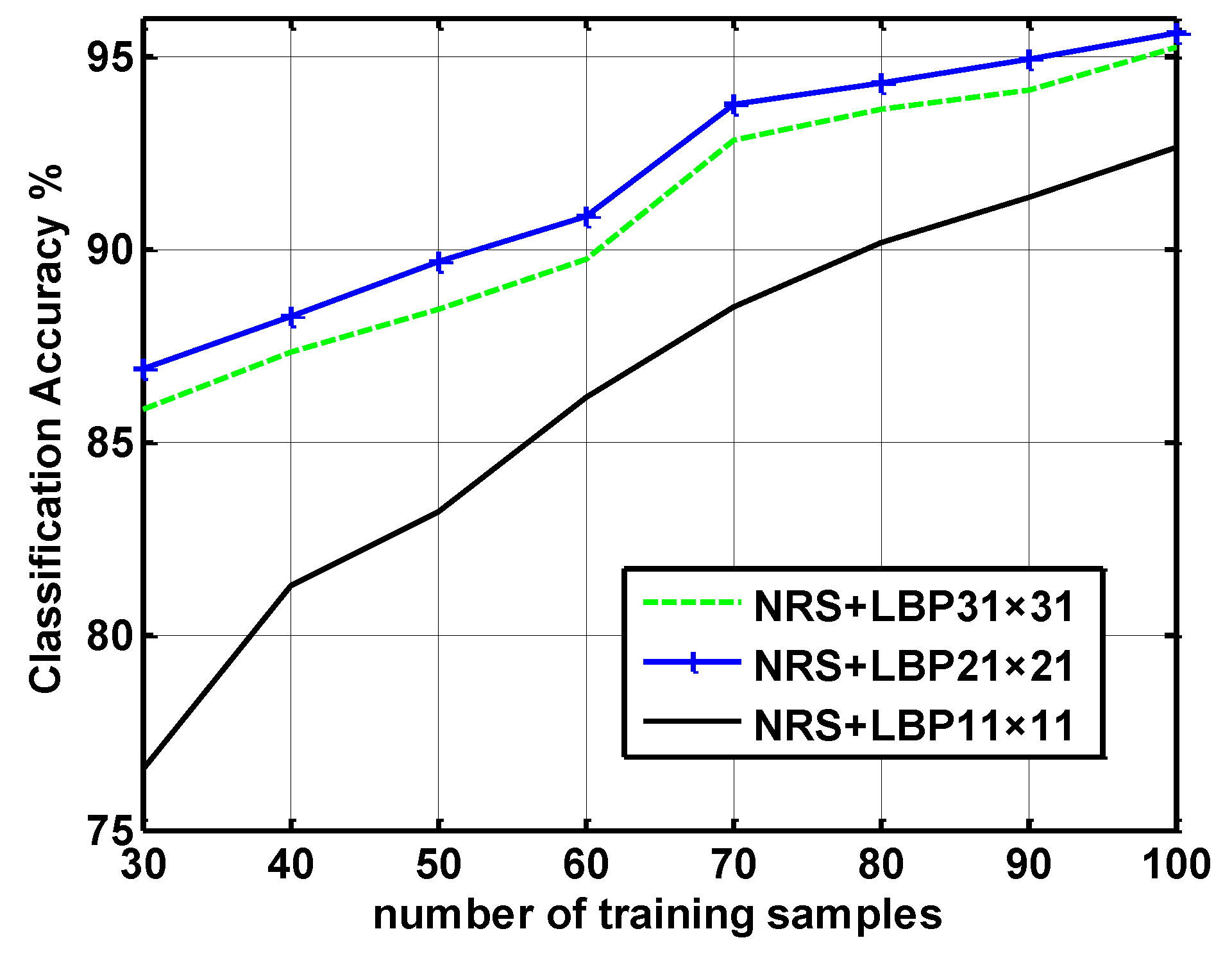

Figure 5 further illustrates the effect of patch size on the LBP. Classification accuracy peaks at a patch size of 21 × 21 for the University of Pavia data.

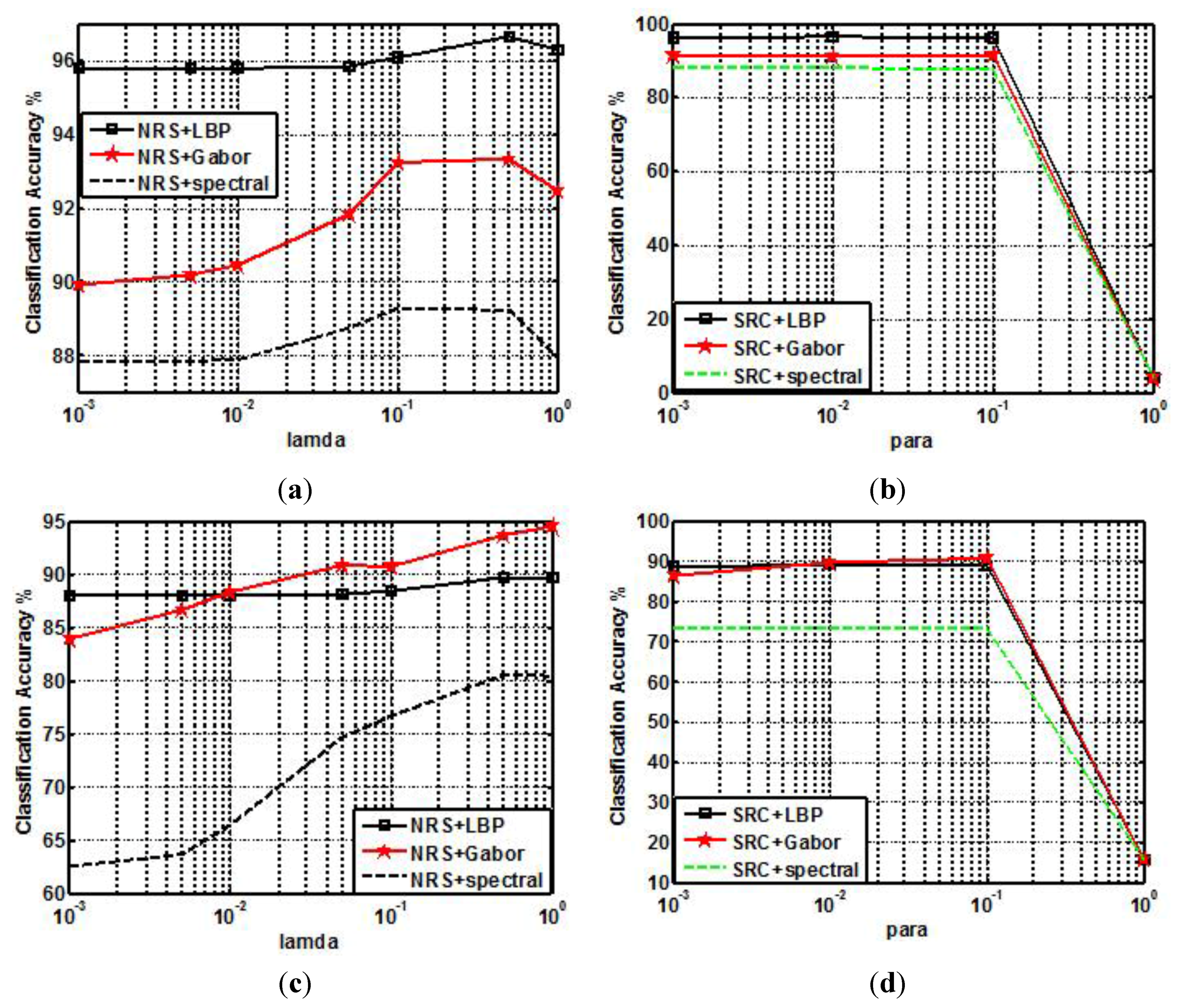

To estimate an optimal λ, Figure 6 shows accuracy at different values of λ for the NRS and SRC classifiers. The number of training samples per class is 30 for the Salinas dataset and 60 for the University of Pavia dataset. The optimal value of λ differs between datasets, as shown in Figure 6. For the Salinas data, the optimal value is 0.5 for NRS and 0.1 for SRC; for the University of Pavia data, it is 1 for NRS and 0.1 for SRC.
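To make the role of λ concrete, the following is a minimal sketch of a sweep over λ for an NRS-style classifier. It assumes the commonly used NRS formulation (a distance-weighted Tikhonov regularizer solved per class, with the label given by the smallest residual); the function names, the toy data, and the candidate λ values are illustrative assumptions, not the authors' implementation.

```python
import numpy as np

def nrs_residuals(X_by_class, y, lam):
    """Return one reconstruction residual per class for the test vector y.

    X_by_class: list of (d, n_l) arrays holding each class's training samples as columns.
    lam: regularization parameter (lambda in the text).
    """
    residuals = []
    for X in X_by_class:
        # Assumed regularizer: training samples far from y are penalized more.
        gamma = np.linalg.norm(X - y[:, None], axis=0)
        G = X.T @ X + lam * np.diag(gamma ** 2)
        # Least-squares solve stays well defined even if G is singular.
        alpha = np.linalg.lstsq(G, X.T @ y, rcond=None)[0]
        residuals.append(np.linalg.norm(y - X @ alpha))
    return np.array(residuals)

def nrs_predict(X_by_class, y, lam):
    """Assign y to the class with the smallest residual."""
    return int(np.argmin(nrs_residuals(X_by_class, y, lam)))

# Hypothetical toy data: two classes with orthogonal mean signatures.
rng = np.random.default_rng(0)
d, n = 20, 5
m0 = np.r_[np.ones(10), np.zeros(10)]
m1 = np.r_[np.zeros(10), np.ones(10)]
X_by_class = [m[:, None] + 0.05 * rng.standard_normal((d, n)) for m in (m0, m1)]
y = m0 + 0.05 * rng.standard_normal(d)  # test sample drawn from class 0

# Sweep a few candidate values of lambda, as in a parameter study.
for lam in (0.01, 0.1, 0.5, 1.0):
    print(lam, nrs_predict(X_by_class, y, lam))
```

In a real study, the sweep would report accuracy over held-out samples for each λ rather than a single prediction.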

Figure 3. (a) Band 95 of the University of Pavia dataset. (b)–(i) Eight Gabor feature images corresponding to eight orientations of the band.

Another three important parameters are w1, w2 and w3. Table 3, Table 4, Table 5 and Table 6 report classification accuracy versus the values of w1 and w2. It is interesting to observe that the Gabor features provide better performance in the University of Pavia dataset, whereas the LBP features need higher weights in the Salinas dataset, which explains the high classification accuracy of LBP-NRS in Figure 6a,b. As shown in Table 3, Table 4, Table 5 and Table 6, the best values of (w1, w2, w3) are (0.2, 0.3, 0.5), (0.6, 0.1, 0.3), (0.2, 0.7, 0.1), and (0.6 or 0.7, 0.1, 0.3 or 0.2), respectively. The weight of each residual indicates the importance of the corresponding feature. For example, in Table 3, as Gabor features perform well in the University of Pavia dataset, their weight is 0.5, much higher than the other two; in Table 4 for the Salinas dataset, where the spectral signatures actually provide the highest classification accuracy, the corresponding weight is 0.6, larger than the others.
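The weight search behind these tables can be sketched as a grid search over (w1, w2) with w3 = 1 − w1 − w2, which is what the triangular layout of the tables suggests. The sketch below assumes that per-class residuals from the three features are already available as arrays, that w1, w2 and w3 weight the spectral, LBP and Gabor residuals respectively (inferred from the discussion above), and that the fused residual is a plain weighted sum; the function names and the synthetic residuals are hypothetical.

```python
import numpy as np

def fuse_and_classify(r_spec, r_lbp, r_gabor, w1, w2, w3):
    """Label each sample by the class with the smallest weighted residual.

    Each r_* array has shape (n_samples, n_classes).
    """
    fused = w1 * r_spec + w2 * r_lbp + w3 * r_gabor
    return np.argmin(fused, axis=1)

def grid_search_weights(r_spec, r_lbp, r_gabor, labels, steps=10):
    """Try every (w1, w2) on a grid with w1 + w2 <= 1 and w3 = 1 - w1 - w2."""
    best_acc, best_w = -1.0, None
    for i in range(steps + 1):
        for j in range(steps + 1 - i):
            # Integer grid indices avoid floating-point drift in the weights.
            w = (i / steps, j / steps, (steps - i - j) / steps)
            pred = fuse_and_classify(r_spec, r_lbp, r_gabor, *w)
            acc = float(np.mean(pred == labels))
            if acc > best_acc:  # ties keep the first weights encountered
                best_acc, best_w = acc, w
    return best_acc, best_w

# Synthetic residuals (assumption): the true class gets a lower residual on average.
rng = np.random.default_rng(0)
labels = rng.integers(0, 3, size=200)

def noisy_residuals(noise):
    r = 1.0 + noise * rng.random((200, 3))
    r[np.arange(200), labels] -= 0.5
    return r

r_spec, r_lbp, r_gabor = noisy_residuals(1.0), noisy_residuals(1.5), noisy_residuals(0.8)
best_acc, best_w = grid_search_weights(r_spec, r_lbp, r_gabor, labels)
print(best_acc, best_w)
```

Because the grid contains the corners (1, 0, 0), (0, 1, 0) and (0, 0, 1), the fused result on the tuning samples can never be worse than the best single-feature classifier.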

Figure 4. Classification accuracy versus the number of selected bands for: (a) University of Pavia dataset (60 training samples per class); (b) Salinas dataset (30 training samples per class).

Figure 5. Impact of patch size on LBP generation using the University of Pavia dataset.

3.2. Classification Performance

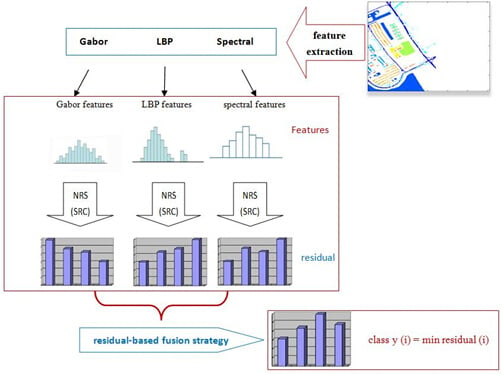

The effectiveness of the proposed RF-NRS classifier is evaluated by comparison with the original NRS [3], Gabor-NRS [16], and LBP-NRS; similarly, RF-SRC is compared with the original SRC [6], Gabor-SRC, and LBP-SRC. For the University of Pavia dataset, the number of training samples per class is varied from 30 to 100. For the Salinas Valley dataset, the number of training samples per class is varied from 10 to 30. All the samples are selected randomly to avoid bias.
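The random per-class selection described above can be sketched as follows. This is an illustrative helper, not the authors' code: the function name, the fixed seed, and the assumption that `labels` holds only labeled pixels (background already removed) are all hypothetical.

```python
import numpy as np

def split_per_class(labels, n_train, seed=0):
    """Randomly pick n_train samples per class for training; the rest are for testing.

    labels: 1-D array of class labels for the labeled pixels.
    Returns (train_idx, test_idx) as sorted index arrays.
    """
    rng = np.random.default_rng(seed)
    picked = []
    for c in np.unique(labels):
        idx = np.flatnonzero(labels == c)
        # Classes with fewer than n_train samples contribute all of them.
        picked.append(rng.choice(idx, size=min(n_train, idx.size), replace=False))
    train_idx = np.sort(np.concatenate(picked))
    test_idx = np.setdiff1d(np.arange(labels.size), train_idx)
    return train_idx, test_idx

# Toy example: three classes with 50 labeled pixels each, 30 training samples per class.
labels = np.repeat([1, 2, 3], 50)
train_idx, test_idx = split_per_class(labels, n_train=30)
print(train_idx.size, test_idx.size)
```

Repeating the split with different seeds and averaging the accuracy is a common way to reduce the influence of any single random draw.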

Figure 6. Classification accuracy (%) on two datasets using different parameters for λ: (a) and (b) Salinas dataset with 30 training samples per class, and (c) and (d) University of Pavia dataset with 60 training samples per class.

Table 3. Classification accuracy (%) versus the values of w1 and w2 for RF-NRS in University of Pavia dataset with 60 training samples per class.

| w2 \ w1 | 0 | 0.1 | 0.2 | 0.3 | 0.4 | 0.5 | 0.6 | 0.7 | 0.8 | 0.9 | 1 |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 0 | 0.8257 | 0.9637 | 0.9796 | 0.9833 | 0.9835 | 0.9823 | 0.9795 | 0.9755 | 0.9667 | 0.9464 | 0.9067 |
| 0.1 | 0.9312 | 0.9813 | 0.9874 | 0.9884 | 0.9878 | 0.9855 | 0.9816 | 0.9761 | 0.9595 | 0.9248 | - |
| 0.2 | 0.9596 | 0.9872 | 0.9915 | 0.9912 | 0.9899 | 0.9874 | 0.9831 | 0.9724 | 0.9394 | - | - |
| 0.3 | 0.9697 | 0.989 | 0.9923 | 0.9922 | 0.9908 | 0.9873 | 0.981 | 0.9536 | - | - | - |
| 0.4 | 0.973 | 0.9888 | 0.9919 | 0.9921 | 0.9904 | 0.9856 | 0.9666 | - | - | - | - |
| 0.5 | 0.9725 | 0.9869 | 0.9908 | 0.9911 | 0.9881 | 0.9753 | - | - | - | - | - |
| 0.6 | 0.9701 | 0.9839 | 0.9878 | 0.9877 | 0.9794 | - | - | - | - | - | - |
| 0.7 | 0.9667 | 0.9797 | 0.9829 | 0.9784 | - | - | - | - | - | - | - |
| 0.8 | 0.9625 | 0.9736 | 0.973 | - | - | - | - | - | - | - | - |
| 0.9 | 0.9552 | 0.9626 | - | - | - | - | - | - | - | - | - |
| 1 | 0.9428 | - | - | - | - | - | - | - | - | - | - |

Table 4. Classification accuracy (%) versus the values of w1 and w2 for RF-NRS in Salinas dataset with 30 training samples per class.

| w2 \ w1 | 0 | 0.1 | 0.2 | 0.3 | 0.4 | 0.5 | 0.6 | 0.7 | 0.8 | 0.9 | 1 |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 0 | 0.8759 | 0.9652 | 0.9795 | 0.9839 | 0.9852 | 0.9853 | 0.9856 | 0.9852 | 0.9837 | 0.9787 | 0.9667 |
| 0.1 | 0.9006 | 0.969 | 0.9808 | 0.9848 | 0.9856 | 0.9858 | 0.9859 | 0.9849 | 0.9817 | 0.9755 | - |
| 0.2 | 0.9174 | 0.969 | 0.9795 | 0.9832 | 0.9846 | 0.9855 | 0.9848 | 0.9827 | 0.9785 | - | - |
| 0.3 | 0.9301 | 0.969 | 0.9775 | 0.9812 | 0.9831 | 0.9836 | 0.982 | 0.9792 | - | - | - |
| 0.4 | 0.9374 | 0.9685 | 0.9759 | 0.9794 | 0.981 | 0.9811 | 0.9792 | - | - | - | - |
| 0.5 | 0.9407 | 0.967 | 0.9739 | 0.9773 | 0.9785 | 0.9779 | - | - | - | - | - |
| 0.6 | 0.941 | 0.9652 | 0.9718 | 0.9749 | 0.9756 | - | - | - | - | - | - |
| 0.7 | 0.9401 | 0.9633 | 0.9699 | 0.9716 | - | - | - | - | - | - | - |
| 0.8 | 0.9382 | 0.9609 | 0.967 | - | - | - | - | - | - | - | - |
| 0.9 | 0.9365 | 0.9575 | - | - | - | - | - | - | - | - | - |
| 1 | 0.9332 | - | - | - | - | - | - | - | - | - | - |

Table 5. Classification accuracy (%) versus the values of w1 and w2 for RF-SRC in University of Pavia dataset with 60 training samples per class.

| w2 \ w1 | 0 | 0.1 | 0.2 | 0.3 | 0.4 | 0.5 | 0.6 | 0.7 | 0.8 | 0.9 | 1 |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 0 | 0.7359 | 0.8297 | 0.8895 | 0.9269 | 0.9495 | 0.962 | 0.9676 | 0.9628 | 0.9491 | 0.9278 | 0.901 |
| 0.1 | 0.7581 | 0.8486 | 0.9072 | 0.941 | 0.9607 | 0.9702 | 0.971 | 0.9605 | 0.94 | 0.9146 | - |
| 0.2 | 0.7773 | 0.8678 | 0.923 | 0.9537 | 0.9694 | 0.9757 | 0.9708 | 0.9537 | 0.9246 | - | - |
| 0.3 | 0.7951 | 0.8868 | 0.9383 | 0.9645 | 0.9765 | 0.9771 | 0.965 | 0.9387 | - | - | - |
| 0.4 | 0.8117 | 0.9045 | 0.9523 | 0.974 | 0.9803 | 0.9722 | 0.9531 | - | - | - | - |
| 0.5 | 0.8286 | 0.9238 | 0.965 | 0.9811 | 0.9771 | 0.9612 | - | - | - | - | - |
| 0.6 | 0.8457 | 0.942 | 0.9753 | 0.981 | 0.9667 | - | - | - | - | - | - |
| 0.7 | 0.864 | 0.9587 | 0.9814 | 0.9712 | - | - | - | - | - | - | - |
| 0.8 | 0.8854 | 0.9701 | 0.9745 | - | - | - | - | - | - | - | - |
| 0.9 | 0.9068 | 0.9678 | - | - | - | - | - | - | - | - | - |
| 1 | 0.9215 | - | - | - | - | - | - | - | - | - | - |

To validate the effectiveness of the proposed RF-NRS and RF-SRC, the original NRS, SRC, LBP-NRS, LBP-SRC, Gabor-NRS, and Gabor-SRC are compared. Figure 7 illustrates the classification accuracy (%) of these methods versus the number of training samples per class for the two experimental datasets. The accuracy of all the classifiers increases with more training samples, and the classifiers using both spectral and spatial information are much better than those using spectral information alone. For instance, in Figure 7d, the classification accuracy of the original SRC is at least 23% lower than that of RF-SRC with 60 training samples per class. Furthermore, the proposed RF-based classifiers consistently outperform LBP-NRS, Gabor-NRS, LBP-SRC, and Gabor-SRC. Taking the Salinas Valley dataset as an example, the accuracy difference between RF-NRS and LBP-NRS is approximately 2%. When the number of training samples per class is 30 for the University of Pavia dataset, the accuracy of RF-NRS is 6% higher than that of the others, indicating that the proposed method is more robust in SSS situations. For the Salinas dataset, Figure 7 shows that the classification accuracy of RF-SRC is 1% higher than that of LBP-SRC. For the University of Pavia dataset, RF-SRC clearly outperforms the others, with an improvement of approximately 4%.

Table 6. Classification accuracy (%) versus the values of w1 and w2 for RF-SRC in the Salinas dataset with 30 training samples per class.

| w2 \ w1 | 0 | 0.1 | 0.2 | 0.3 | 0.4 | 0.5 | 0.6 | 0.7 | 0.8 | 0.9 | 1 |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 0 | 0.8853 | 0.9387 | 0.958 | 0.9671 | 0.9724 | 0.9753 | 0.9766 | 0.977 | 0.9766 | 0.9737 | 0.9622 |
| 0.1 | 0.888 | 0.9417 | 0.9603 | 0.9691 | 0.9736 | 0.9761 | 0.9771 | 0.9771 | 0.9756 | 0.9702 | - |
| 0.2 | 0.8901 | 0.9444 | 0.9619 | 0.9698 | 0.974 | 0.976 | 0.9763 | 0.9753 | 0.9717 | - | - |
| 0.3 | 0.8928 | 0.947 | 0.9635 | 0.9708 | 0.9743 | 0.9751 | 0.975 | 0.9717 | - | - | - |
| 0.4 | 0.8956 | 0.9502 | 0.9649 | 0.9709 | 0.9734 | 0.9741 | 0.9716 | - | - | - | - |
| 0.5 | 0.8987 | 0.9539 | 0.9665 | 0.9717 | 0.9726 | 0.9711 | - | - | - | - | - |
| 0.6 | 0.902 | 0.9575 | 0.9679 | 0.9709 | 0.97 | - | - | - | - | - | - |
| 0.7 | 0.9066 | 0.9605 | 0.9685 | 0.969 | - | - | - | - | - | - | - |
| 0.8 | 0.911 | 0.9622 | 0.9664 | - | - | - | - | - | - | - | - |
| 0.9 | 0.9161 | 0.9597 | - | - | - | - | - | - | - | - | - |
| 1 | 0.9144 | - | - | - | - | - | - | - | - | - | - |

Figure 7. Classification accuracy (%) versus different numbers of training samples per class for two experimental datasets: (a) and (b) Salinas Valley dataset; (c) and (d) University of Pavia dataset.

The classification accuracy for each class and the overall accuracy (OA) are listed in Table 7 and Table 8 for the two datasets. For the Salinas dataset, 30 samples are randomly selected from each class for training and the rest are used for testing, while for the University of Pavia dataset, the number of training samples is 60 per class. As can be seen in Table 7 and Table 8, using spatial features enhances classification accuracy. For example, in Table 7, accuracy is increased by approximately 8% with the integration of the LBP feature, and Table 8 shows that the Gabor feature brings about a 9% improvement.
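For reference, the per-class accuracy and OA reported in these tables can be computed from true and predicted labels as in the short sketch below. The function name and the toy labels are illustrative assumptions.

```python
import numpy as np

def per_class_and_overall_accuracy(y_true, y_pred):
    """Return ({class: accuracy in %}, overall accuracy in %)."""
    y_true = np.asarray(y_true)
    y_pred = np.asarray(y_pred)
    per_class = {
        int(c): float(np.mean(y_pred[y_true == c] == c)) * 100.0
        for c in np.unique(y_true)
    }
    # OA is the fraction of all test samples that are labeled correctly.
    overall = float(np.mean(y_pred == y_true)) * 100.0
    return per_class, overall

# Toy example: class 1 has four test samples, class 2 has two.
y_true = [1, 1, 1, 1, 2, 2]
y_pred = [1, 1, 1, 2, 2, 2]
per_class, overall = per_class_and_overall_accuracy(y_true, y_pred)
print(per_class, round(overall, 2))
```

Because OA pools all test samples, it is weighted by class size and is not the mean of the per-class accuracies. This is why large classes with low accuracy, such as classes 8 and 15 in Table 7, pull the OA of the spectral-only classifiers down noticeably.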

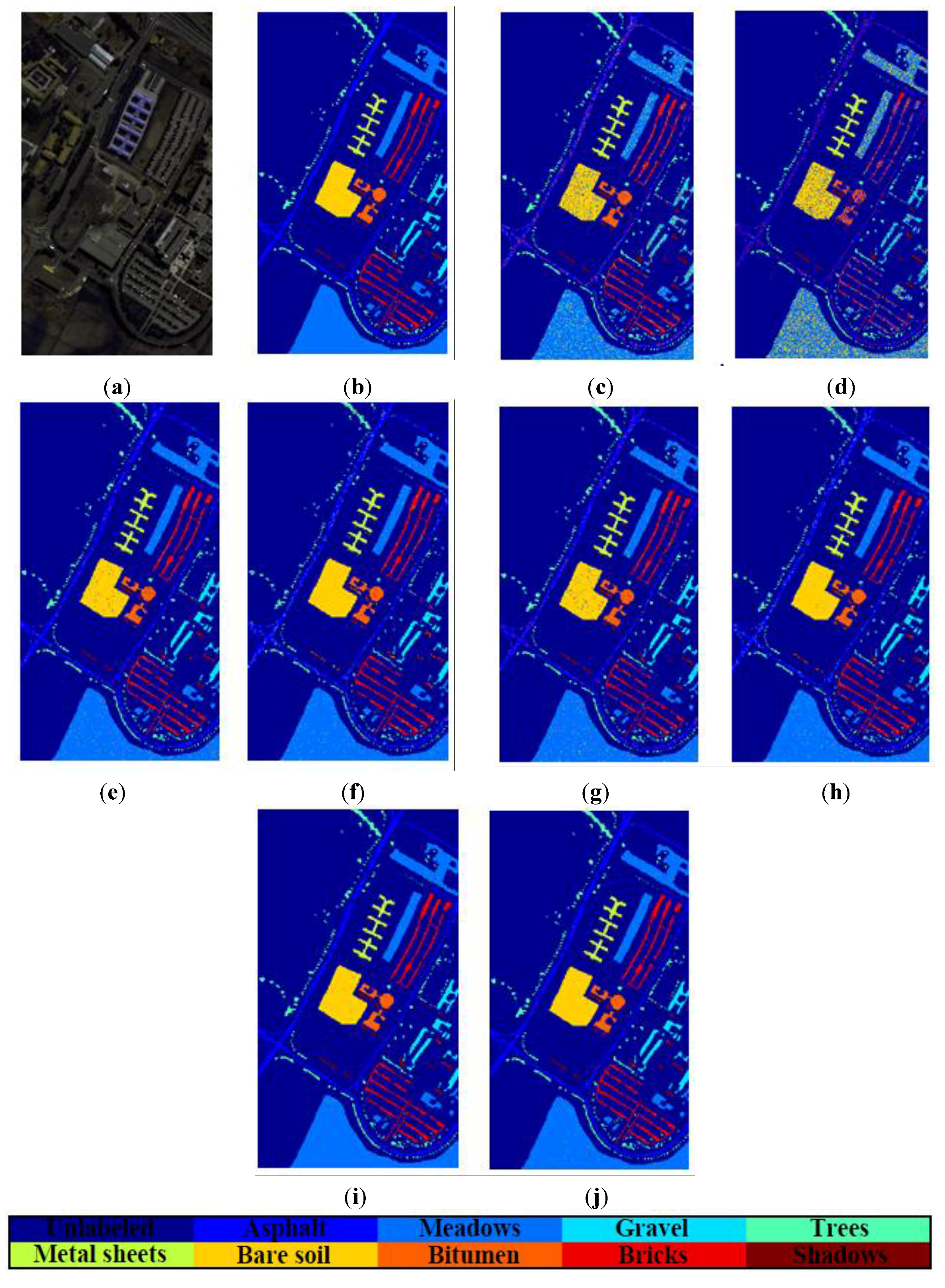

Figure 8 and Figure 9 show the thematic maps of these hyperspectral datasets. Clearly, the classification maps of the proposed residual-fusion methods are less noisy and more accurate, which is consistent with the results listed in Table 7 and Table 8.

Table 7. Classification accuracy (%) for the Salinas dataset.

| Class | Train | Test | LBP-NRS | Gabor-NRS | NRS | RF-NRS | LBP-SRC | Gabor-SRC | SRC | RF-SRC | LBP-SVM | Gabor-SVM |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 30 | 1979 | 100 | 98.95 | 99.15 | 100 | 99.8 | 99.05 | 99.35 | 100 | 100 | 99.24 |
| 2 | 30 | 3696 | 97.05 | 96.78 | 99.6 | 99.46 | 96.51 | 94.66 | 92.38 | 99.49 | 94.53 | 96.19 |
| 3 | 30 | 1946 | 100 | 99.85 | 98.89 | 100 | 100 | 92.76 | 90.08 | 100 | 99.69 | 99.49 |
| 4 | 30 | 1364 | 99.28 | 98.13 | 98.49 | 100 | 98.28 | 98.06 | 99.71 | 99.71 | 95.53 | 97.73 |
| 5 | 30 | 2648 | 95.29 | 97.76 | 97.35 | 98.17 | 96.12 | 98.32 | 96.83 | 97.61 | 95.96 | 99.06 |
| 6 | 30 | 3929 | 97.17 | 99.97 | 99.42 | 100 | 97.17 | 99.77 | 99.77 | 99.95 | 97.23 | 99.72 |
| 7 | 30 | 3549 | 97.15 | 99.86 | 99.44 | 100 | 96.45 | 99.19 | 99.5 | 99.97 | 97.21 | 99.86 |
| 8 | 30 | 11241 | 93.43 | 78.12 | 69.96 | 94.37 | 92.5 | 79.5 | 79.9 | 91.53 | 93.34 | 77.36 |
| 9 | 30 | 6173 | 99.21 | 98.05 | 96.94 | 99.61 | 98.31 | 97.45 | 99.58 | 99.71 | 98.64 | 98.67 |
| 10 | 30 | 3248 | 99.39 | 98.23 | 94.87 | 99.97 | 99.33 | 96.77 | 91.76 | 99.66 | 99.38 | 97.14 |
| 11 | 30 | 1038 | 100 | 98.22 | 96.63 | 99.72 | 99.81 | 97.94 | 99.25 | 99.63 | 100 | 98.17 |
| 12 | 30 | 1897 | 96.42 | 100 | 100 | 100 | 95.9 | 100 | 98.13 | 99.95 | 97 | 100 |
| 13 | 30 | 886 | 92.9 | 97.16 | 99.67 | 99.34 | 94.21 | 95.74 | 97.16 | 97.6 | 99.23 | 98.87 |
| 14 | 30 | 1040 | 97.48 | 98.88 | 92.06 | 99.91 | 95.33 | 98.04 | 93.18 | 98.6 | 96.83 | 96.83 |
| 15 | 30 | 7238 | 94.85 | 84.3 | 73.39 | 96.31 | 94.7 | 79.55 | 60.61 | 94.7 | 92.05 | 84.84 |
| 16 | 30 | 1777 | 100 | 99.67 | 98.17 | 100 | 99.72 | 98.78 | 98.28 | 99.17 | 99.72 | 99.04 |
| OA | - | - | 96.67 | 92.45 | 88.87 | 98.14 | 96.22 | 91.44 | 88.53 | 97.2 | 96.01 | 92.29 |

Table 8. Classification accuracy (%) for the University of Pavia dataset.

| Class | Train | Test | LBP-NRS | Gabor-NRS | NRS | RF-NRS | LBP-SRC | Gabor-SRC | SRC | RF-SRC | LBP-SVM | Gabor-SVM |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 60 | 6571 | 83.19 | 91.31 | 80.77 | 99.53 | 83.12 | 86.08 | 76.34 | 98.91 | 79.97 | 80.5 |
| 2 | 60 | 18589 | 92.43 | 94.01 | 84.74 | 98.66 | 91.59 | 93.05 | 66.68 | 94.98 | 89.92 | 90.55 |
| 3 | 60 | 2039 | 94.33 | 96.9 | 86.71 | 99.71 | 95.09 | 94.24 | 74.18 | 99.29 | 90.73 | 90.34 |
| 4 | 60 | 3004 | 73.89 | 96.87 | 98.4 | 99.45 | 72.32 | 96.61 | 94.39 | 99.35 | 81.95 | 94.49 |
| 5 | 60 | 1285 | 95.32 | 100 | 99.93 | 100 | 94.28 | 100 | 99.55 | 99.93 | 94.05 | 100 |
| 6 | 60 | 4969 | 97.97 | 96.9 | 82.04 | 99.56 | 96.82 | 94.59 | 83.18 | 99.54 | 98.49 | 95.32 |
| 7 | 60 | 1270 | 97.89 | 94.89 | 93.68 | 99.92 | 98.35 | 94.14 | 72.93 | 98.65 | 97.89 | 94.45 |
| 8 | 60 | 3622 | 94.62 | 91.09 | 76.75 | 99.76 | 95.06 | 86.23 | 60.97 | 98.32 | 93.06 | 87.78 |
| 9 | 60 | 887 | 83.42 | 95.67 | 99.68 | 99.47 | 83.53 | 94.19 | 100 | 98.1 | 79.82 | 95.09 |
| OA | - | - | 90.67 | 94.28 | 85.28 | 99.2 | 90.1 | 92.15 | 73.95 | 97.28 | 89.28 | 90.08 |

Figure 8. Thematic maps for the University of Pavia dataset with 60 training samples per class; (a) Pseudo-color Image; (b) Ground truth Map; (c) NRS; (d) SRC; (e) NRS + Gabor; (f) NRS + LBP; (g) SRC + Gabor; (h) SRC + LBP; (i) RF-NRS; (j) RF-SRC.

Figure 9. Thematic maps for the Salinas dataset with 30 training samples per class; (a) Pseudo-color Image; (b) Ground truth Map; (c) NRS; (d) SRC; (e) NRS + Gabor; (f) NRS + LBP; (g) SRC + Gabor; (h) SRC + LBP; (i) RF-NRS; (j) RF-SRC.

Classification results from the aforementioned classifiers using the Indian Pines data are shown in Table 9 with the same number of training and testing samples as in [48]. It is apparent that the proposed RF-NRS and RF-SRC still provide superior performance, which further affirms that classification accuracy can be greatly improved by fusing two complementary spatial features (i.e., Gabor features and LBP features).

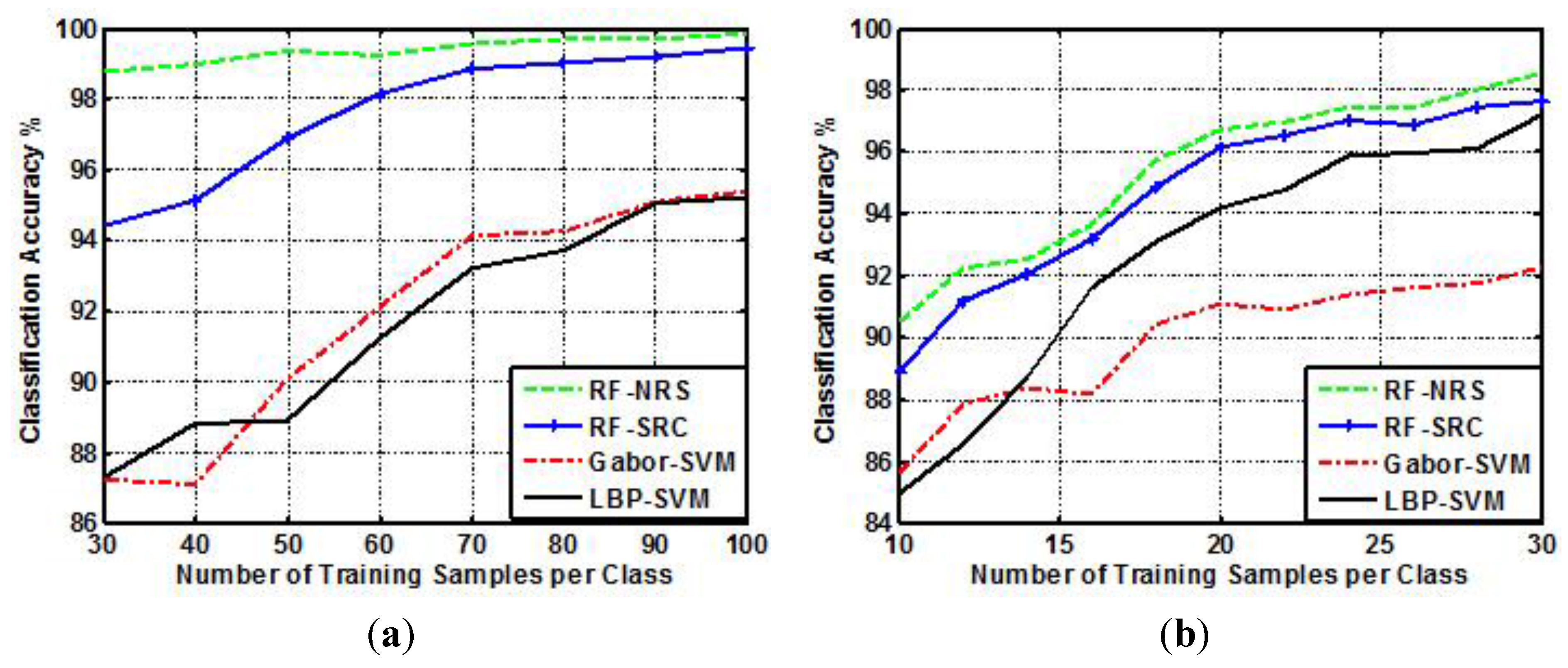

In Figure 10, the classification results of the proposed methods are compared with those of SVM using spatial features, i.e., LBP-SVM [49] and Gabor-SVM [50]. As can be seen, the classification accuracy of RF-NRS is more than 1% higher than that of RF-SRC. This is because the weight coefficients computed in SRC are sparse, which makes SRC prone to misclassifying a testing sample when the number of training samples is limited or the quality of training samples is poor. The proposed RF-NRS and RF-SRC achieve higher classification accuracy (by at least 4%) than Gabor-SVM and LBP-SVM, especially in the University of Pavia dataset. Moreover, as the number of training samples increases, the proposed RF-NRS and RF-SRC still outperform Gabor-SVM and LBP-SVM. This also illustrates that the residual-based fusion methods provide more solid and robust performance in SSS situations (e.g., 30 training samples per class).

Table 9. Classification accuracy (%) for the Indian Pines dataset.

| Class | Train | Test | LBP-NRS | Gabor-NRS | RF-NRS | LBP-SRC | Gabor-SRC | RF-SRC | LBP-SVM | Gabor-SVM |
|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 15 | 39 | 100 | 96.3 | 90.74 | 100 | 96.3 | 100 | 100 | 94.87 |
| 2 | 50 | 1384 | 87.95 | 80.06 | 92.68 | 88.21 | 71.69 | 91 | 86.63 | 77.96 |
| 3 | 50 | 784 | 97.72 | 90.65 | 98.56 | 96.88 | 81.77 | 98.44 | 98.47 | 88.61 |
| 4 | 50 | 184 | 100 | 98.72 | 99.15 | 98.72 | 95.3 | 99.15 | 99.46 | 100 |
| 5 | 50 | 447 | 97.18 | 96.58 | 98.99 | 97.18 | 93.96 | 97.79 | 97.99 | 94.85 |
| 6 | 50 | 697 | 97.59 | 96.92 | 97.99 | 96.92 | 93.04 | 98.8 | 96.7 | 97.56 |
| 7 | 15 | 11 | 100 | 100 | 100 | 100 | 100 | 100 | 100 | 100 |
| 8 | 50 | 439 | 100 | 100 | 100 | 100 | 100 | 100 | 100 | 100 |
| 9 | 15 | 5 | 100 | 100 | 100 | 100 | 100 | 100 | 100 | 100 |
| 10 | 50 | 918 | 86.88 | 85.12 | 90.39 | 88.02 | 82.54 | 88.33 | 86.93 | 75.49 |
| 11 | 50 | 2418 | 89.67 | 82.46 | 94.04 | 89.26 | 78.08 | 88.53 | 86.35 | 73.9 |
| 12 | 50 | 574 | 91.21 | 93.16 | 97.72 | 91.53 | 94.3 | 95.93 | 90.6 | 90.43 |
| 13 | 50 | 162 | 99.06 | 98.11 | 100 | 98.58 | 98.58 | 99.53 | 100 | 99.38 |
| 14 | 50 | 1244 | 96.99 | 93.66 | 99 | 97.76 | 95.29 | 99.69 | 97.51 | 96.78 |
| 15 | 50 | 330 | 99.21 | 96.32 | 100 | 99.47 | 95.53 | 100 | 99.39 | 98.18 |
| 16 | 50 | 45 | 100 | 100 | 100 | 100 | 100 | 100 | 100 | 100 |
| OA | - | - | 93.16 | 89.12 | 96.04 | 93.23 | 85.73 | 94.31 | 92.08 | 85.43 |

Figure 10. Classification accuracy (%) versus the number of training samples per class for: (a) University of Pavia dataset; (b) Salinas dataset.