Removal of Optically Thick Clouds from Multi-Spectral Satellite Images Using Multi-Frequency SAR Data

Abstract

1. Introduction

2. Removal of Optically Thick Clouds

2.1. Substitution Techniques

2.2. Interpolation Techniques

3. The Need for More Generic Data Restoration Strategies

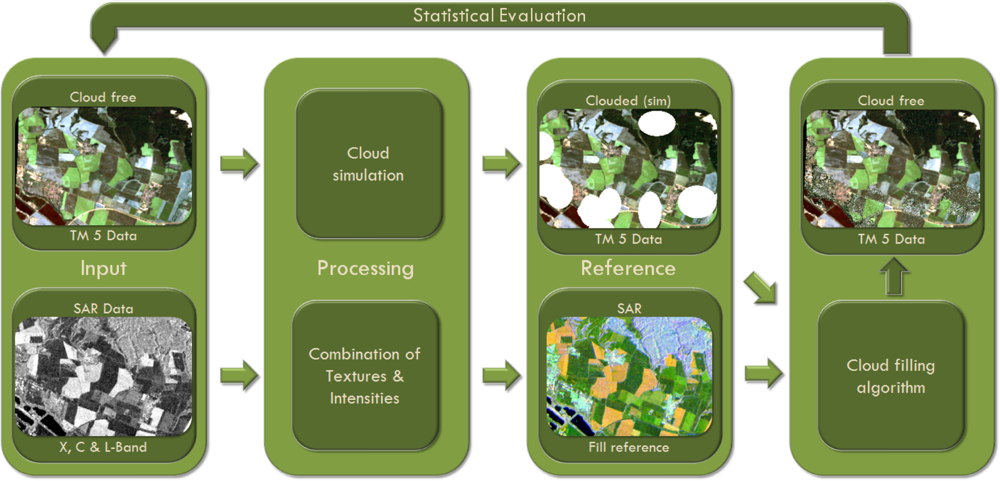

4. Data and Methods

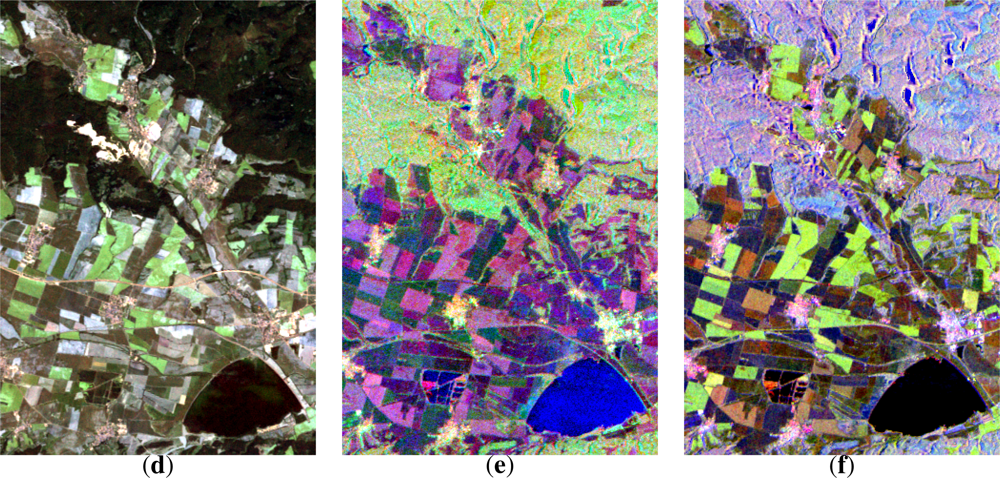

4.1. Investigation Area and Data

4.2. Methodological Considerations and Requirements

- Implementation of a generic pixel-based cloud removal algorithm as a step towards compensating for the drawbacks of substitution and interpolation techniques. The algorithm is supposed to be:

  - sensor independent,

  - based on physical interrelations,

  - independent of the land cover,

  - methodologically simple, and

  - capable of generating reproducible outputs;

- Establishment of a framework for the statistical evaluation of the performance of the cloud cover removal algorithm;

- Evaluation of the potential of mono-temporal, multi-frequency SAR imagery to serve as the data basis for the restoration of a multi-spectral image.

4.3. Cloud Simulation

- cloud cover fraction (%),

- mean cloud size (m),

- spatial distribution ().
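The three parameters above can be illustrated with a small synthetic cloud mask generator. This is a minimal sketch, not the simulation used in the study: the function name `simulate_cloud_mask`, the circular cloud shape, the exponentially distributed radii, the 30 m pixel size, and the uniformly random placement (standing in for the spatial distribution parameter) are all assumptions made for illustration.

```python
import random


def simulate_cloud_mask(rows, cols, cover_fraction, mean_cloud_size_m,
                        pixel_size_m=30.0, seed=0):
    """Return a rows x cols list of booleans, True where a pixel is clouded.

    Hypothetical illustration: circular clouds are dropped at uniformly
    random positions until the requested cover fraction is reached.
    """
    if not 0.0 <= cover_fraction < 1.0:
        raise ValueError("cover_fraction must be in [0, 1)")
    if mean_cloud_size_m <= 0 or pixel_size_m <= 0:
        raise ValueError("sizes must be positive")

    rng = random.Random(seed)
    mask = [[False] * cols for _ in range(rows)]
    covered = 0
    target = cover_fraction * rows * cols
    # Mean cloud diameter in metres converted to a mean radius in pixels.
    mean_radius_px = mean_cloud_size_m / (2.0 * pixel_size_m)

    while covered < target:
        centre_r = rng.randrange(rows)
        centre_c = rng.randrange(cols)
        # Assumed size distribution: exponential with the requested mean.
        radius = rng.expovariate(1.0 / mean_radius_px)
        reach = int(radius)
        for r in range(max(0, centre_r - reach), min(rows, centre_r + reach + 1)):
            for c in range(max(0, centre_c - reach), min(cols, centre_c + reach + 1)):
                inside = (r - centre_r) ** 2 + (c - centre_c) ** 2 <= radius ** 2
                if inside and not mask[r][c]:
                    mask[r][c] = True
                    covered += 1
    return mask
```

Because the last cloud is placed whole, the resulting cover fraction can slightly exceed the requested value; a fixed `seed` keeps the mask reproducible between runs.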

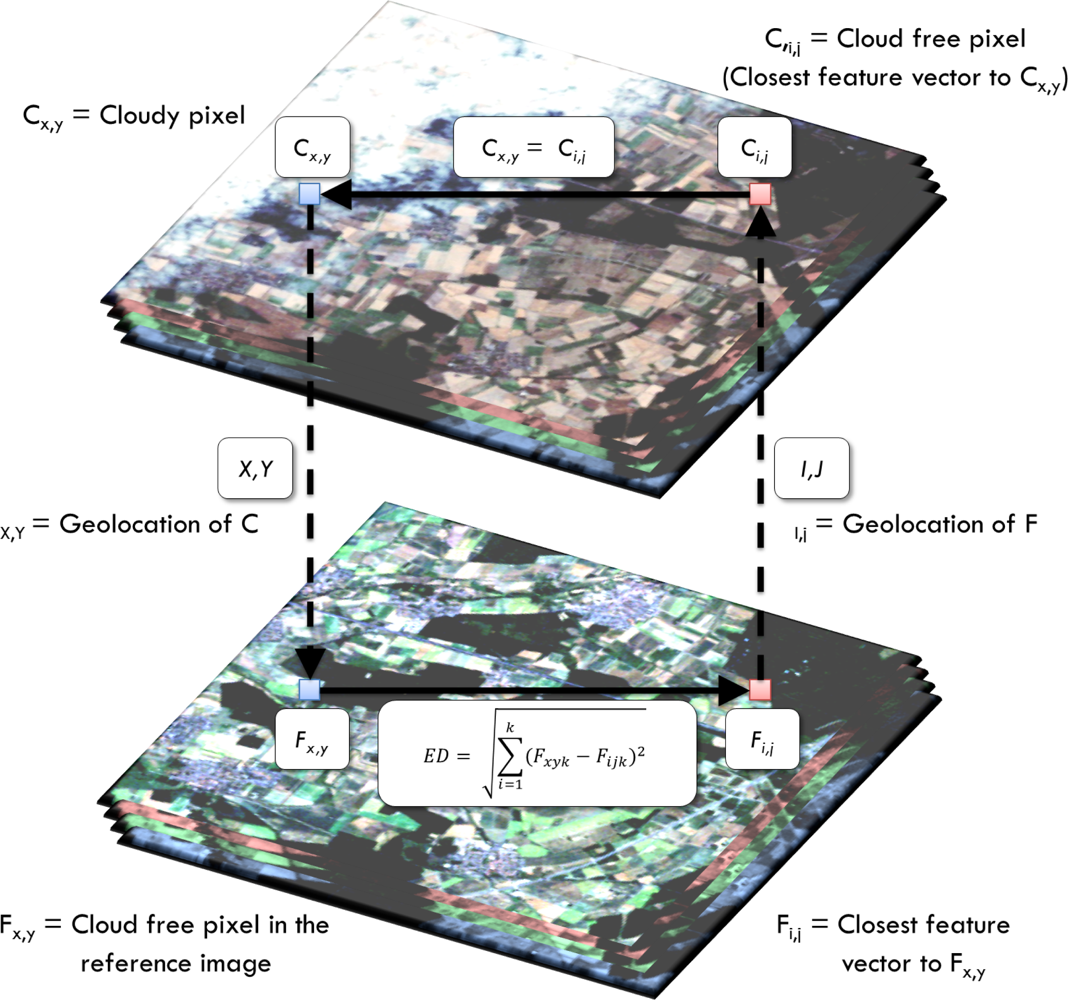

4.4. Image Restoration Algorithm
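The algorithm description itself is not reproduced in this outline. As a hedged illustration of what a generic pixel-based restoration can look like, the sketch below replaces each clouded pixel with the multi-spectral values of the cloud-free pixel whose fill-image feature vector (for example, SAR backscatter) is closest. The function name `restore_clouded_pixels`, the flat pixel lists, and the squared Euclidean distance are assumptions for illustration and should not be read as the authors' implementation.

```python
def restore_clouded_pixels(target, fill, cloud_mask):
    """Fill clouded pixels of `target` using similarity in `fill` feature space.

    target:     list of pixels, each a list of multi-spectral band values
    fill:       list of feature vectors for the same pixels (e.g., SAR bands)
    cloud_mask: list of booleans, True where the target pixel is clouded
    """
    clear = [i for i, clouded in enumerate(cloud_mask) if not clouded]
    if not clear:
        raise ValueError("no cloud-free pixels available as donors")

    restored = [list(pixel) for pixel in target]
    for i, clouded in enumerate(cloud_mask):
        if not clouded:
            continue
        # Donor = cloud-free pixel with the smallest squared Euclidean
        # distance to pixel i in the fill-image feature space.
        donor = min(
            clear,
            key=lambda j: sum((a - b) ** 2 for a, b in zip(fill[i], fill[j])),
        )
        restored[i] = list(target[donor])
    return restored
```

The exhaustive donor search is quadratic in the number of pixels, which is acceptable for a toy example but would need a spatial index or similar structure for full scenes.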

4.5. Statistical Quality Assessment

Mean Bias

Loss/Gain of Image Content

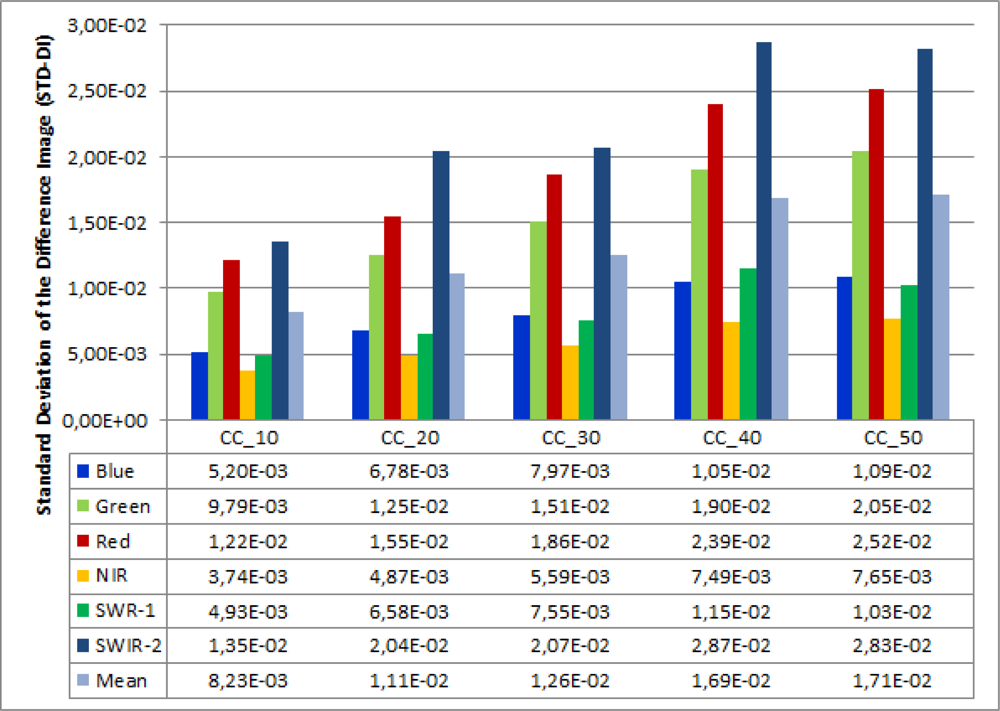

Image of Difference
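The difference-based measures named in this section (mean bias, loss or gain of image content, and the image of difference) can be illustrated together for a single band. The definitions below follow a convention common in the image fusion quality literature and are an assumption here, since the formulas are not reproduced in this outline; the function name `difference_statistics` and the dictionary keys are hypothetical.

```python
import statistics


def difference_statistics(reference, restored):
    """Per-band difference measures between a reference and a restored band.

    Both inputs are equally long sequences of pixel values for one band.
    """
    diff = [a - b for a, b in zip(reference, restored)]
    return {
        # Mean bias: mean of the pixel-wise difference image.
        "mean_bias": statistics.fmean(diff),
        # Loss (> 0) or gain (< 0) of image content: difference of variances.
        "variance_difference": (
            statistics.pvariance(reference) - statistics.pvariance(restored)
        ),
        # Spread of the difference image around its mean.
        "std_of_difference": statistics.pstdev(diff),
    }
```

For a perfect restoration all three values are zero; a constant offset between the two bands shows up only in the mean bias.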

Structural Similarity
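Assuming that the CC rows of the ranking table denote a correlation coefficient between the reference band and the restored band, structural similarity can be sketched with the Pearson correlation. The function name `correlation_coefficient` is hypothetical, and the exact measure used in the study may differ.

```python
import math


def correlation_coefficient(reference, restored):
    """Pearson correlation between two equally long sequences of pixel values."""
    n = len(reference)
    mean_ref = sum(reference) / n
    mean_res = sum(restored) / n
    covariance = sum(
        (a - mean_ref) * (b - mean_res) for a, b in zip(reference, restored)
    )
    var_ref = sum((a - mean_ref) ** 2 for a in reference)
    var_res = sum((b - mean_res) ** 2 for b in restored)
    if var_ref == 0 or var_res == 0:
        raise ValueError("correlation is undefined for a constant band")
    return covariance / math.sqrt(var_ref * var_res)
```

A value close to 1 indicates that the spatial structure of the reference band is preserved, regardless of any constant offset or gain between the two bands.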

Spectral Fidelity
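The SAM values reported in the tables refer to the spectral angle between a reference spectrum and a restored spectrum. A minimal sketch of that angle for a single pixel, with the hypothetical function name `spectral_angle`, could look as follows; how the per-pixel angles are aggregated over the image is not specified here.

```python
import math


def spectral_angle(reference_pixel, restored_pixel):
    """Angle in radians between two spectra given as sequences of band values."""
    dot = sum(a * b for a, b in zip(reference_pixel, restored_pixel))
    norm_ref = math.sqrt(sum(a * a for a in reference_pixel))
    norm_res = math.sqrt(sum(b * b for b in restored_pixel))
    if norm_ref == 0 or norm_res == 0:
        raise ValueError("spectral angle is undefined for an all-zero spectrum")
    # Clamp to [-1, 1] to guard against floating-point rounding.
    cos_angle = max(-1.0, min(1.0, dot / (norm_ref * norm_res)))
    return math.acos(cos_angle)
```

The angle is insensitive to a common scaling of all bands, so it captures changes in spectral shape rather than in overall brightness.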

4.6. Design of the Performance Evaluation

4.6.1. Proof of Concept

- Cloud removal based on a perfect fill image.

- Cloud removal based on a multispectral image.

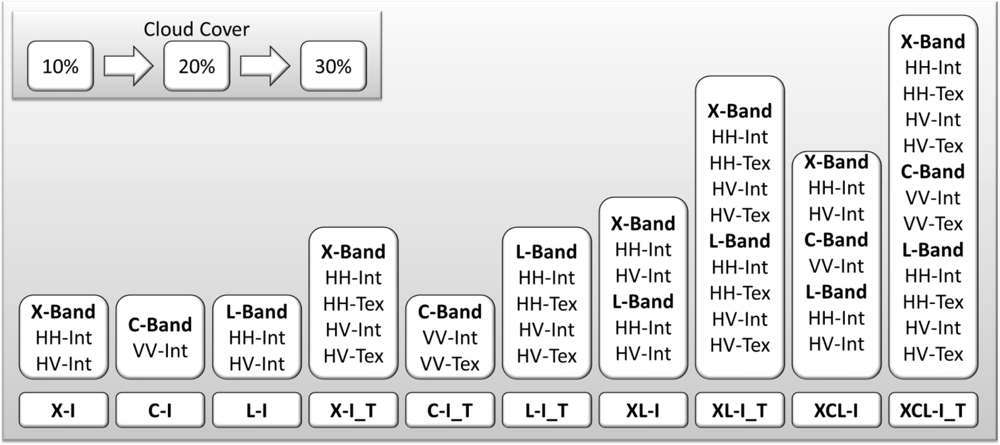

4.6.2. Experimental Setup of the Multi-Frequency SAR Analysis

5. Results

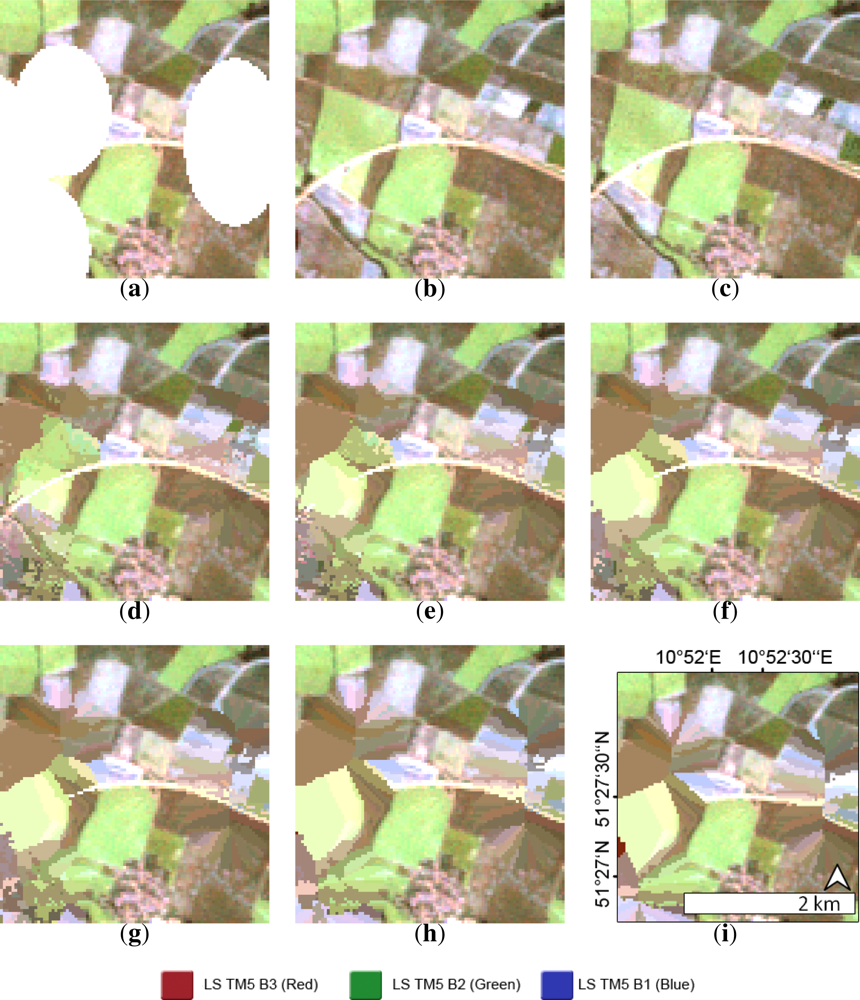

5.1. Results of the Proof of Concept

5.1.1. Sensitivity to the Distance Weighting

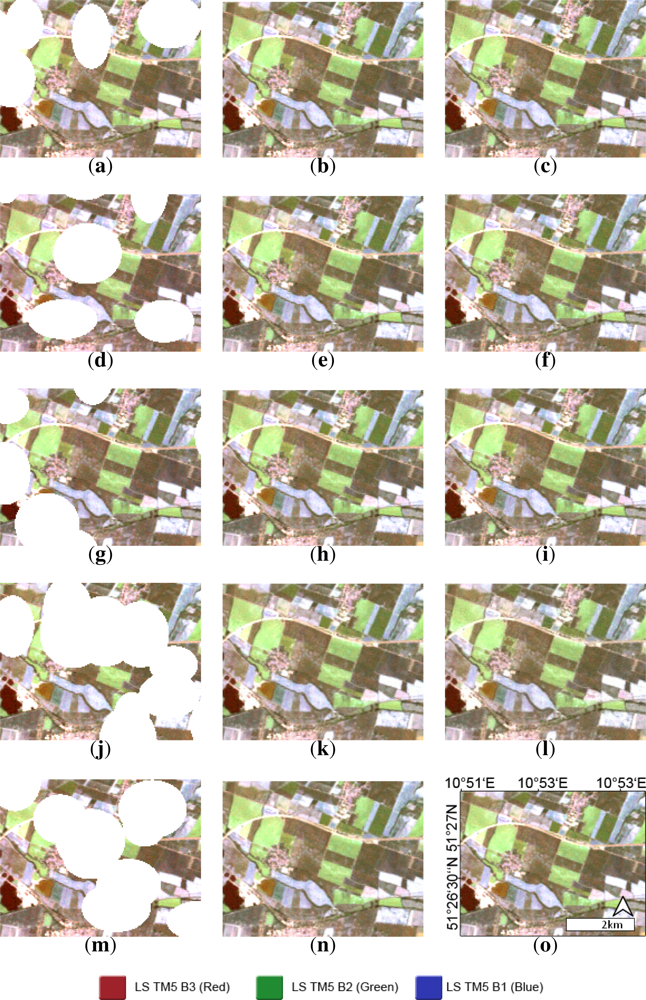

5.1.2. Sensitivity to the Amount of Cloud Cover

- The integration of an inverse distance weighting (IDW) function causes a loss of image restoration quality. IDW is therefore omitted from further image restoration steps.

- The cloud removal algorithm is capable of compensating for a high percentage of cloud cover, provided that the quality of the data source is sufficient.

- The set of statistical measures is not sufficient to completely describe the image quality. Therefore, a visual comparison is mandatory.
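For readers unfamiliar with inverse distance weighting, the generic form of the weights is shown below. This is only a sketch of the standard technique: the function name `idw_weights` and the default exponent of 2 are assumptions, and how the weighting was integrated into the restoration algorithm is not described in this outline.

```python
def idw_weights(distances, power=2.0):
    """Normalized inverse distance weights for strictly positive distances."""
    if any(d <= 0 for d in distances):
        raise ValueError("distances must be strictly positive")
    raw = [1.0 / d ** power for d in distances]
    total = sum(raw)
    # Weights sum to 1; closer candidates receive larger weights.
    return [w / total for w in raw]
```

With distances of 1 and 2 pixels and the default exponent, the closer candidate receives four times the weight of the farther one.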

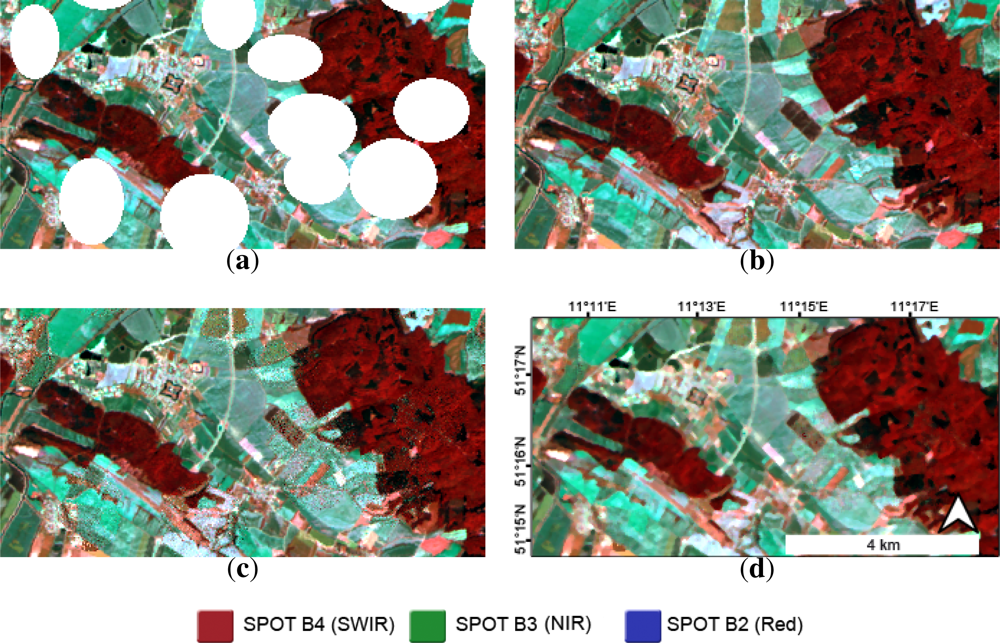

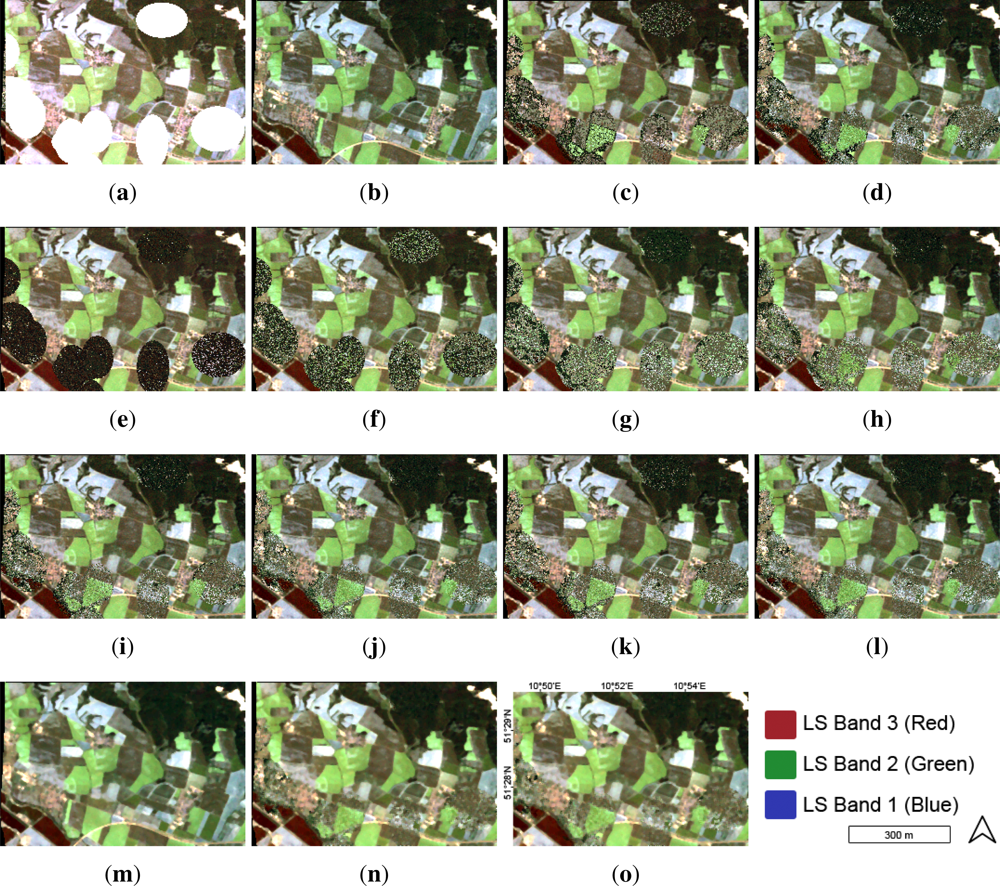

5.1.3. Cloud Removal by Means of a Multi-Spectral Reference Image

- The integration of a real dataset in the algorithm enables the removal of cloud cover from multi-spectral images and yields meaningful image content in the restored image.

- The visual appearance of the restored areas is affected by clutter noise. The origin of this noise needs further investigation. Spatial averaging appears to help compensate for this effect.

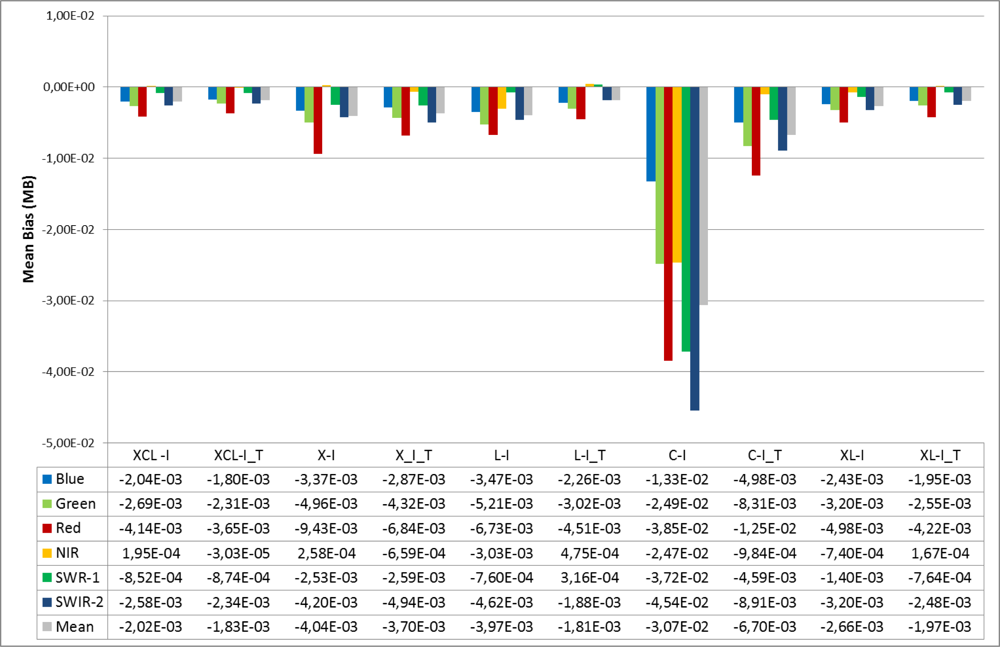

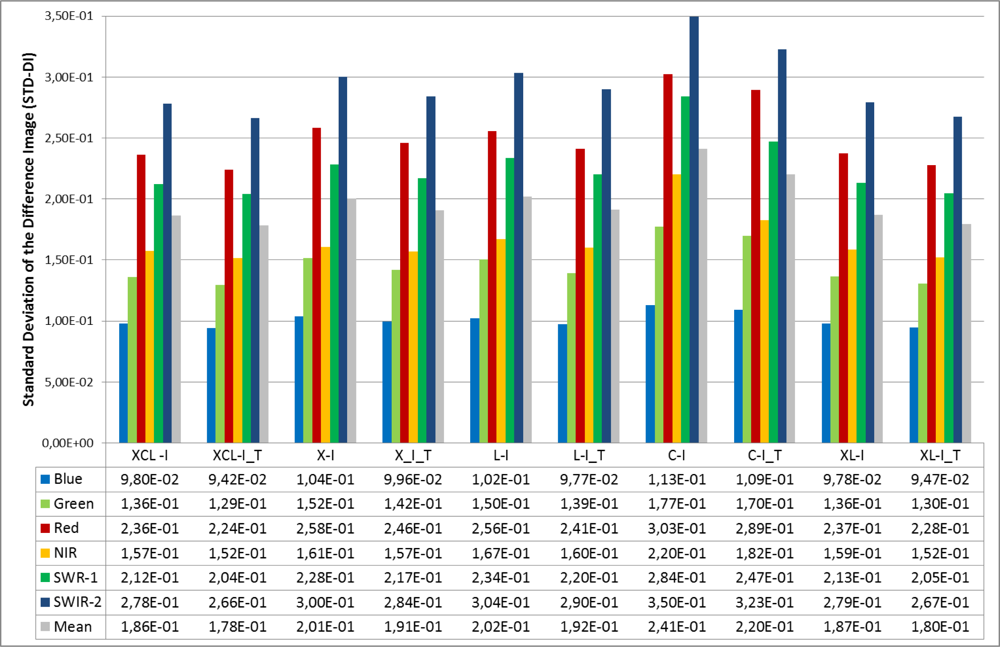

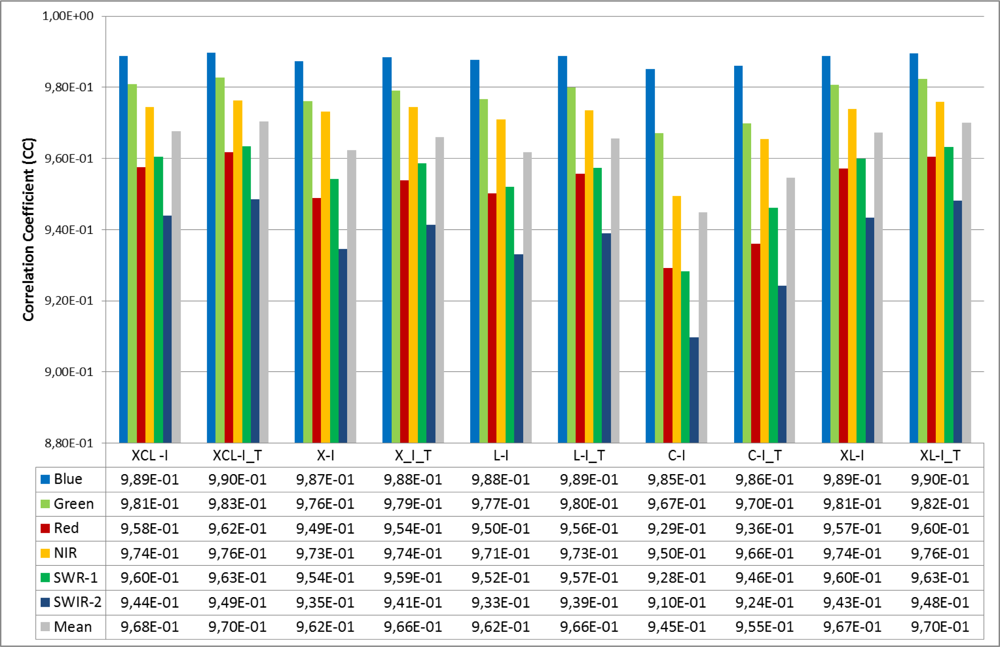

5.2. Findings of the Multi-Frequency SAR Analysis

- The combination of more than one SAR frequency increases the restoration quality.

- The restoration with inclusion of the derived texture measures outperforms the frequency combinations without texture.

- Among the mono-frequency SAR data, L-Band is the most suitable for image restoration. Among the multi-frequency SAR data, the frequency combination XCL achieves the best overall statistical ranking.

- Due to the poor data quality of the ERS image used, C-Band data is excluded from any interpretation of the study results.

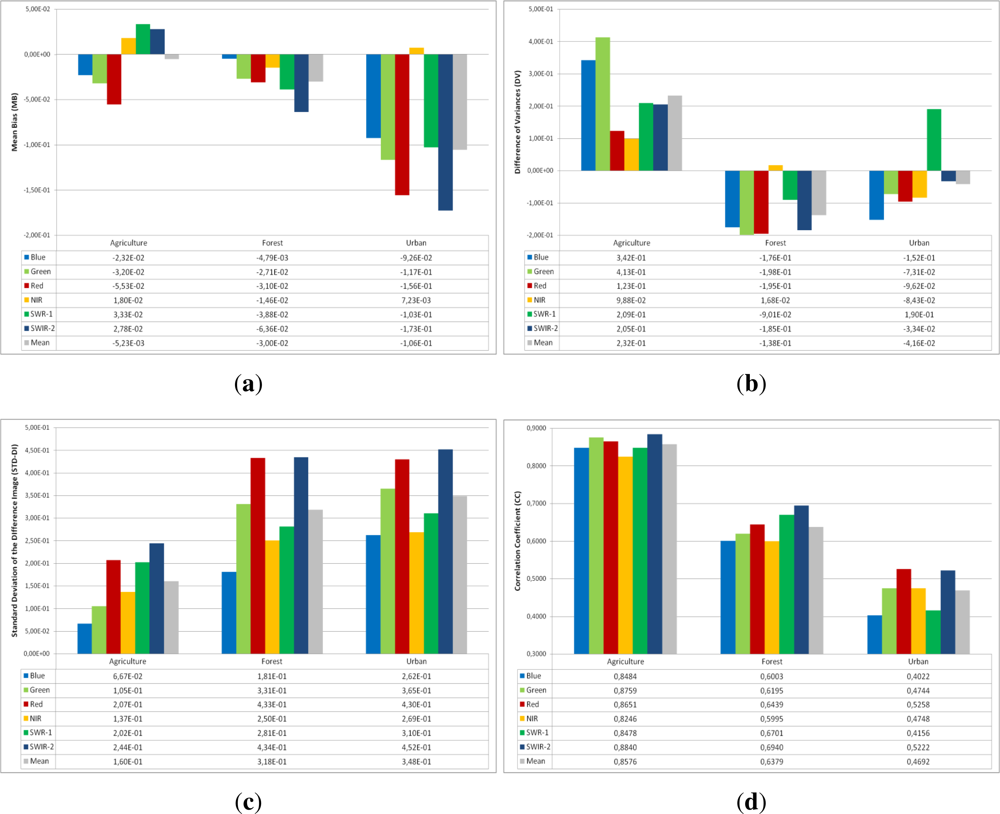

5.3. Sensitivity to Land Cover Types

- Urban areas are restored with the lowest quality. Forest and agricultural areas are restored with similar quality, with some differences depending on which aspect of the restoration is considered.

- The statistics can be regarded as a verification of the theoretical expectation that more homogeneous land cover classes achieve better restoration results than heterogeneous ones.

6. Discussion

6.1. Methodology

6.2. Is Cloud Removal Feasible with SAR Data?

7. Conclusions and Outlook

Acknowledgments

Conflict of Interest

The authors declare no conflict of interest.

| Property | Landsat TM 5 | SPOT 4 |
|---|---|---|
| Acquisition Date | 2010-07-08 | 2010-07-09 |
| Original Resolution | 30 m | 20 m |
| Final Resolution | 30 m | 30 m |
| Spectral Bands (μm) | Blue (0.45–0.52) | |
| | Green (0.52–0.60) | Green (0.50–0.59) |
| | Red (0.63–0.69) | Red (0.61–0.68) |
| | NIR (0.76–0.90) | NIR (0.78–0.89) |
| | SWIR-1 (1.55–1.75) | SWIR (1.58–1.75) |
| | Thermal (10.4–12.5) | |
| | SWIR-2 (2.08–2.35) | |

| Property | TSX | ERS | ALOS |
|---|---|---|---|
| Acquisition Date | 2010-07-10 | 2010-06-09 | 2010-07-09 |
| Acquisition Mode | SM * | IM ** | FBD *** |
| Polarization State | HH/HV | VV | HH/HV |
| Incidence Angle | 22.13° | 23.18° | 38.74° |
| Band Name | X-Band | C-Band | L-Band |
| Frequency | 9.6 GHz | 5.3 GHz | 1.27 GHz |
| Wavelength | 3 cm | 5.6 cm | 23.6 cm |
| Pass Direction | Descending | Ascending | Ascending |
| Looking Direction | Right | Right | Right |
| R+/A++ Resolution | 4.22 m/4.39 m | 20.01 m/19.85 m | 15.2 m/12.53 m |
| R/A ML+++-Factor | 12/12 | 1/5 | 2/8 |
| Original Resolution | 1.1 +/2.4 ++ m | 3.9 +/7.9 ++ m | 9.3 +/3.1 ++ m |
| Final Resolution | 30 m | 30 m | 30 m |

| | CC 10% | CC 20% | CC 30% | CC 40% | CC 50% |
|---|---|---|---|---|---|
| SAM | 0.4535 | 0.4539 | 0.4545 | 0.4551 | 0.4567 |

| | XCL-I | XCL-I_T | X-I | X-I_T | L-I | L-I_T | C-I | C-I_T | XL-I | XL-I_T |
|---|---|---|---|---|---|---|---|---|---|---|
| SAM | 0.496 | 0.495 | 0.497 | 0.497 | 0.499 | 0.497 | 0.506 | 0.501 | 0.496 | 0.495 |

| | X-I | X-I_T | C-I | C-I_T | L-I | L-I_T | XL-I | XL-I_T | XCL-I | XCL-I_T |
|---|---|---|---|---|---|---|---|---|---|---|
| MB_Red | 7 | 6 | 10 | 9 | 8 | 4 | 5 | 2 | 3 | 1 |
| MB_Green | 7 | 6 | 10 | 9 | 8 | 4 | 5 | 2 | 3 | 1 |
| MB_Blue | 8 | 7 | 10 | 9 | 6 | 4 | 5 | 3 | 2 | 1 |
| MB_NIR | 4 | 6 | 10 | 8 | 9 | 5 | 7 | 2 | 3 | 1 |
| MB_SWIR-1 | 7 | 8 | 10 | 9 | 2 | 1 | 6 | 3 | 4 | 5 |
| MB_SWIR-2 | 6 | 8 | 10 | 9 | 7 | 1 | 5 | 3 | 4 | 2 |
| MB_Mean | 8 | 6 | 10 | 9 | 7 | 1 | 5 | 3 | 4 | 2 |
| DV_Red | 8 | 6 | 10 | 9 | 5 | 4 | 7 | 2 | 3 | 1 |
| DV_Green | 8 | 6 | 10 | 9 | 7 | 4 | 5 | 3 | 1 | 2 |
| DV_Blue | 9 | 7 | 10 | 8 | 1 | 5 | 4 | 6 | 2 | 3 |
| DV_NIR | 8 | 7 | 10 | 6 | 9 | 1 | 2 | 3 | 5 | 4 |
| DV_SWIR-1 | 2 | 6 | 10 | 9 | 8 | 7 | 1 | 4 | 5 | 3 |
| DV_SWIR-2 | 7 | 6 | 10 | 9 | 8 | 1 | 3 | 5 | 2 | 4 |
| DV_Mean | 8 | 6 | 10 | 9 | 7 | 4 | 5 | 3 | 1 | 2 |
| STD-DI_Red | 8 | 6 | 10 | 9 | 7 | 3 | 4 | 2 | 5 | 1 |
| STD-DI_Green | 8 | 6 | 10 | 9 | 7 | 5 | 4 | 2 | 3 | 1 |
| STD-DI_Blue | 8 | 6 | 10 | 9 | 7 | 5 | 4 | 2 | 3 | 1 |
| STD-DI_NIR | 7 | 3 | 10 | 9 | 8 | 6 | 5 | 2 | 4 | 1 |
| STD-DI_SWIR-1 | 7 | 5 | 10 | 9 | 8 | 6 | 4 | 2 | 3 | 1 |
| STD-DI_SWIR-2 | 7 | 5 | 10 | 9 | 8 | 6 | 4 | 2 | 3 | 1 |
| STD-DI_Mean | 7 | 5 | 10 | 9 | 8 | 6 | 4 | 2 | 3 | 1 |
| CC_Red | 8 | 6 | 10 | 9 | 7 | 3 | 4 | 2 | 5 | 1 |
| CC_Green | 8 | 6 | 10 | 9 | 7 | 5 | 4 | 2 | 3 | 1 |
| CC_Blue | 8 | 6 | 10 | 9 | 7 | 5 | 4 | 2 | 3 | 1 |
| CC_NIR | 7 | 3 | 10 | 9 | 8 | 6 | 5 | 2 | 4 | 1 |
| CC_SWIR-1 | 7 | 5 | 10 | 9 | 8 | 6 | 4 | 2 | 3 | 1 |
| CC_SWIR-2 | 7 | 5 | 10 | 9 | 8 | 6 | 4 | 2 | 3 | 1 |
| CC_Mean | 7 | 5 | 10 | 9 | 8 | 6 | 4 | 2 | 3 | 1 |
| SAM | 7 | 5 | 10 | 9 | 8 | 6 | 4 | 2 | 3 | 1 |
| Mean | 7.17241 | 5.7931 | 10 | 8.82759 | 7.10345 | 4.34483 | 4.37931 | 2.55172 | 3.2069 | 1.62069 |
| Final Rank | 8 | 6 | 10 | 9 | 7 | 4 | 5 | 2 | 3 | 1 |
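The Final Rank row of the ranking table is consistent with simply ordering the frequency combinations by their mean rank, lowest first. The sketch below reproduces that step from the Mean row; the names `MEAN_RANKS` and `final_ranks` are hypothetical, and ties (which do not occur in this table) are not handled.

```python
# Mean rank per frequency combination, copied from the "Mean" row of the table.
MEAN_RANKS = {
    "X-I": 7.17241, "X-I_T": 5.7931, "C-I": 10.0, "C-I_T": 8.82759,
    "L-I": 7.10345, "L-I_T": 4.34483, "XL-I": 4.37931, "XL-I_T": 2.55172,
    "XCL-I": 3.2069, "XCL-I_T": 1.62069,
}


def final_ranks(mean_ranks):
    """Assign rank 1 to the lowest mean rank, rank 2 to the next, and so on."""
    ordered = sorted(mean_ranks, key=mean_ranks.get)
    return {name: position for position, name in enumerate(ordered, start=1)}


if __name__ == "__main__":
    for name, rank in final_ranks(MEAN_RANKS).items():
        print(f"{name}: {rank}")
```

Ranking the averaged ranks rather than the raw metric values gives every measure and band the same influence on the final order, irrespective of its units or value range.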

| | Agriculture | Forest | Urban |
|---|---|---|---|
| SAM | 0.0917 | 0.1100 | 0.1558 |

© 2013 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution license (http://creativecommons.org/licenses/by/3.0/).

Eckardt, R.; Berger, C.; Thiel, C.; Schmullius, C. Removal of Optically Thick Clouds from Multi-Spectral Satellite Images Using Multi-Frequency SAR Data. Remote Sens. 2013, 5, 2973-3006. https://doi.org/10.3390/rs5062973
