Exploring the Potential for Automatic Extraction of Vegetation Phenological Metrics from Traffic Webcams

Abstract

1. Introduction

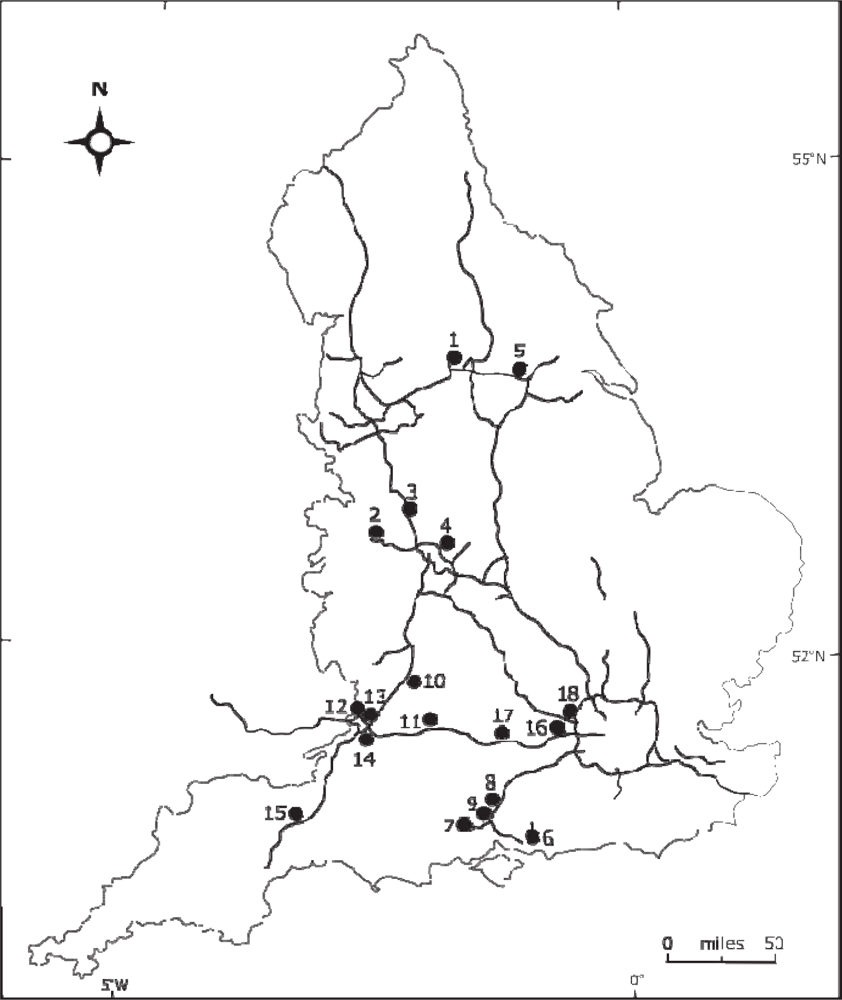

2. The Camera Network

3. Methods

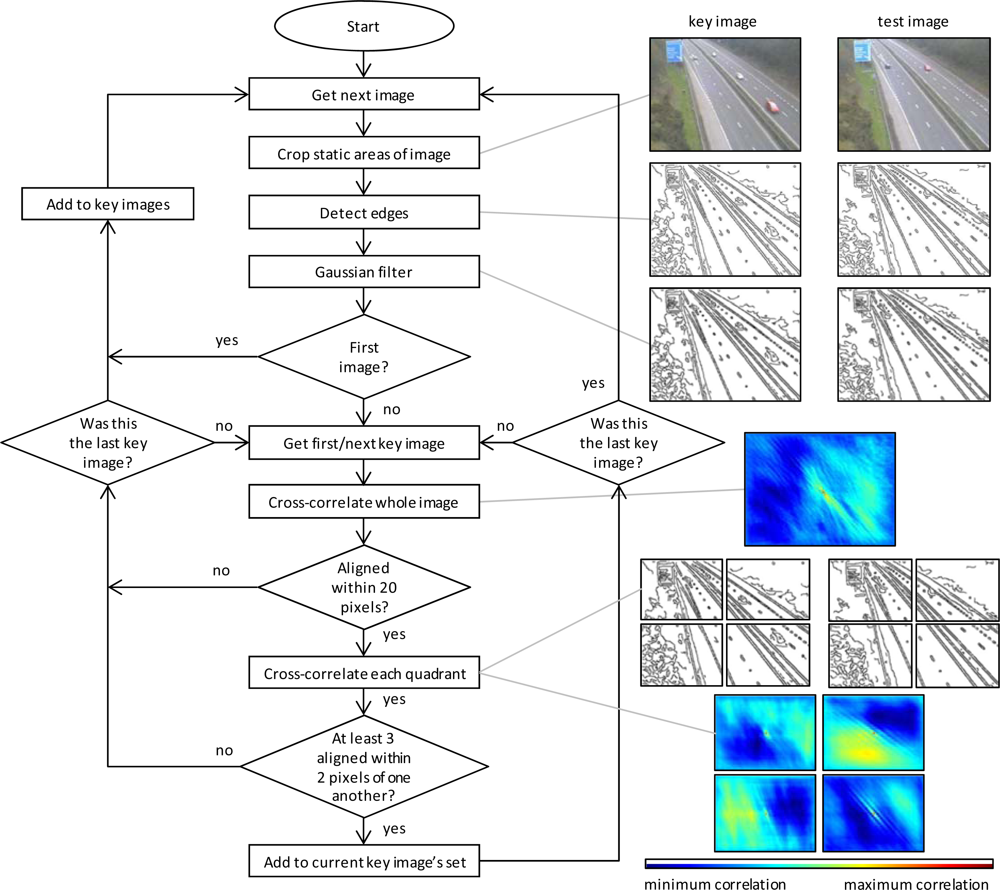

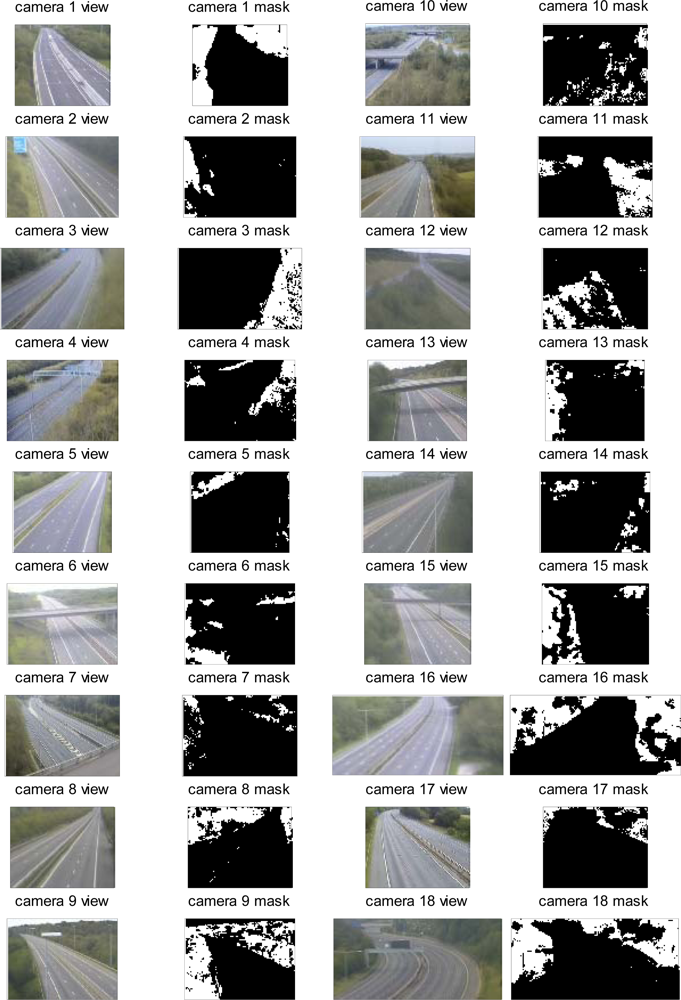

3.1. Image Pre-Processing

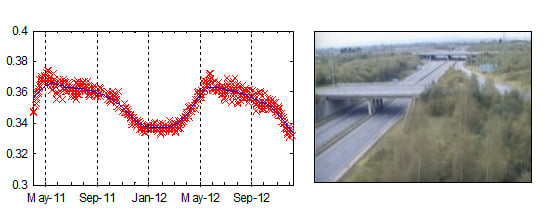

3.2. Determination of the Greenness of Pixels Containing Vegetation over Time

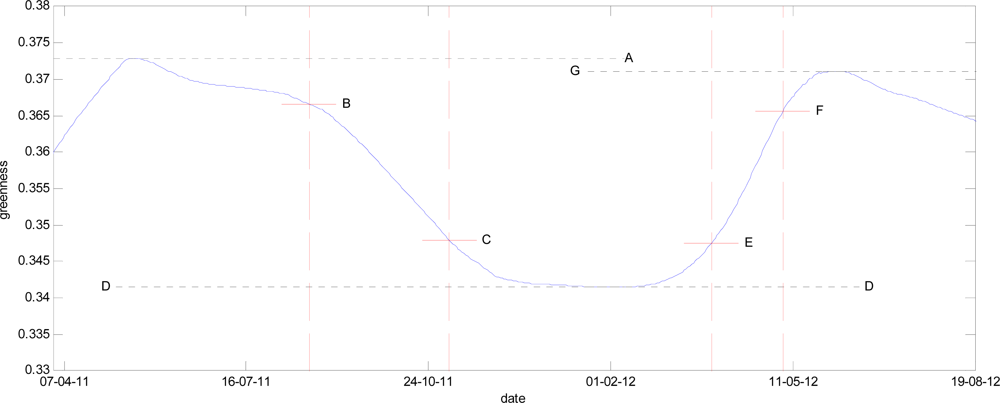

3.3. Extraction of Phenological Metrics

4. Results

4.1. Assessment of Network Usability

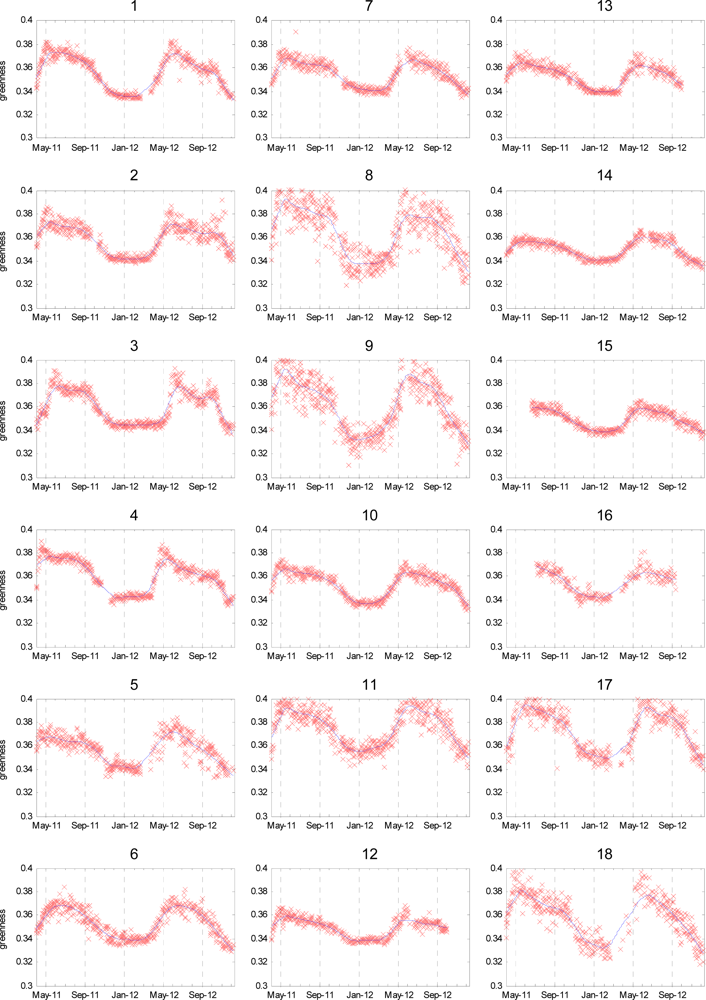

4.2. Extracted Time-Series Plots

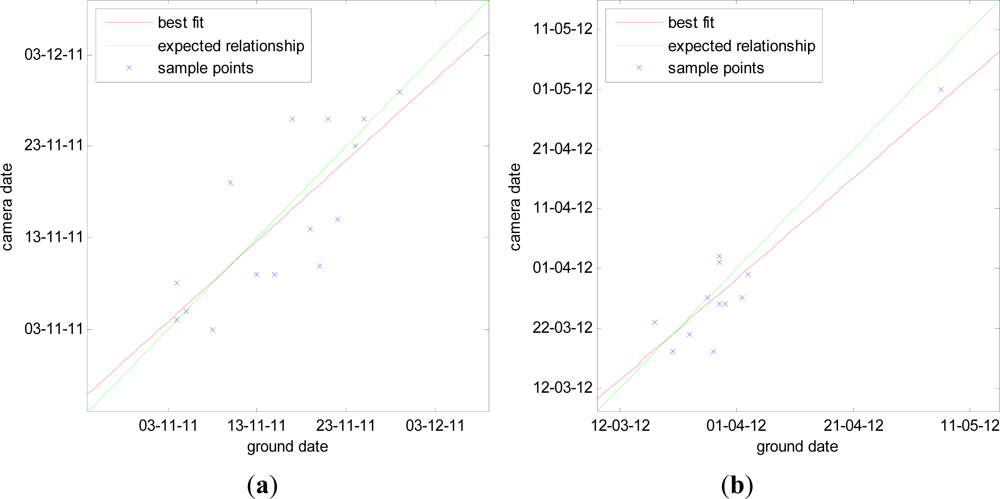

4.3. Computed Phenological Dates

5. Discussion

6. Conclusions

Acknowledgments

Conflict of Interest

The authors declare no conflict of interest.


| No. | Latitude | Longitude | Elevation (m) | Direction | Vegetation Types |
|---|---|---|---|---|---|
| 1 | 53.78°N | 1.57°W | 40 | SW | Dense mixed hedgerow including hawthorn, with sycamore or maple, and oak trees. |
| 2 | 52.69°N | 2.50°W | 132 | ESE | Dense mature hedgerow 15 m high, with some coniferous trees. |
| 3 | 52.83°N | 2.15°W | 98 | NW | Dense mixed hedgerow 15 m high, with some hawthorn and ash trees. |
| 4 | 52.51°N | 1.76°W | 105 | W | Dense mixed hedgerow including hawthorn and blackthorn bushes. |
| 5 | 53.61°N | 0.98°W | 6 | NE | Mixed stand of trees containing sycamore, gorse, hawthorn, and conifer species. |
| 6 | 50.91°N | 1.00°W | 69 | S | Mixed stand of trees, all deciduous. |
| 7 | 50.96°N | 1.42°W | 83 | E | Dense mixed stand of conifer, ash, gorse and Scots pine. |
| 8 | 50.96°N | 1.40°W | 69 | E | Dense mixed stand of gorse, ash, sycamore, and hawthorn. |
| 9 | 51.08°N | 1.29°W | 51 | NE | Dense mixed hedgerow in front of a tree line containing ash, conifer, oak, birch, gorse, sycamore. |
| 10 | 51.86°N | 2.17°W | 46 | NEN | Junction intersection: mixed level vegetation with individual trees including conifers, silver birch, and buckthorn. |
| 11 | 51.51°N | 2.08°W | 71 | E | Dense mixed stand of gorse, ash, sycamore, and hawthorn. |
| 12 | 51.57°N | 2.59°W | 9 | SE | Individual mature trees, including silver birch. |
| 13 | 51.49°N | 2.55°W | 44 | SW | Mixed scattered trees including blackthorn, ash and hawthorn. |
| 14 | 51.51°N | 2.66°W | 8 | NE | Dense mixed hedgerow ∼12–15 m high including hawthorn and blackthorn bushes. |
| 15 | 50.98°N | 3.14°W | 70 | WSW | Dense stands of mixed trees, containing willow, and maple. |
| 16 | 51.50°N | 0.70°W | 24 | NE | Dense stands of mixed trees, containing poplar, ash, and elder. |
| 17 | 51.46°N | 1.09°W | 46 | SE | Dense mixed trees including blackthorn, gorse and ash. |
| 18 | 51.54°N | 0.51°W | 45 | NE | Mixed trees including blackthorn, gorse and ash. |

| No. | Vegetation Coverage on Image (%) | RMS Error on Fit to Time Series (× 10⁻³) | Valid Time-Points Present (% of Whole Sequence) |
|---|---|---|---|
| 1 | 32.3 | 3.96 | 84.8 |
| 2 | 7.5 | 5.27 | 87.9 |
| 3 | 21.7 | 5.19 | 88.0 |
| 4 | 11.4 | 4.49 | 81.1 |
| 5 | 6.6 | 5.63 | 75.4 |
| 6 | 11.5 | 4.38 | 84.3 |
| 7 | 6.1 | 3.72 | 87.7 |
| 8 | 19.2 | 10.82 | 89.2 |
| 9 | 34.8 | 10.28 | 90.5 |
| 10 | 12.1 | 3.46 | 85.6 |
| 11 | 21.7 | 7.11 | 87.2 |
| 12 | 18.6 | 3.39 | 80.3 |
| 13 | 15.3 | 3.24 | 84.1 |
| 14 | 7.0 | 2.41 | 85.3 |
| 15 | 20.3 | 2.72 | 74.5 |
| 16 | 30.1 | 4.18 | 46.6 |
| 17 | 8.3 | 7.44 | 76.6 |
| 18 | 27.4 | 8.20 | 66.7 |

| Camera No. | Start of Senescence (2011 Season) | End of Senescence (2011 Season) | Start of Green-up (2012 Season) | End of Green-up (2012 Season) |
|---|---|---|---|---|
| 1 | 28-Aug | 08-Nov | 14-Mar | 09-May |
| 2 | 19-Aug | 04-Nov | 27-Mar | 05-May |
| 3 | 07-Sep | 05-Nov | 01-May | 01-Jun |
| 4 | 25-Aug | 05-Nov | 18-Mar | 22-Apr |
| 5 | 30-Aug | 09-Nov | 02-Mar | 28-Apr |
| 6 | 17-Aug | 03-Nov | 26-Mar | 09-May |
| 7 | 26-Jul | 26-Nov | 03-Apr | 16-May |
| 8 | 07-Aug | 15-Nov | 02-Apr | 09-May |
| 9 | 26-Jun | 10-Nov | 23-Mar | 10-May |
| 10 | 25-Aug | 26-Nov | 21-Mar | 02-May |
| 11 | 16-Aug | 09-Nov | 27-Mar | 12-May |
| 12 | 30-Jul | 14-Nov | 26-Mar | 30-Apr |
| 13 | 12-Aug | 29-Nov | 18-Mar | 27-Apr |
| 14 | 08-Sep | 26-Nov | 23-Mar | 02-May |
| 15 | 21-Aug | 07-Nov | 01-Mar | 02-May |
| 16 | 30-Aug | 20-Nov | 18-Mar | 03-May |
| 17 | 18-Aug | 19-Nov | 31-Mar | 22-May |
| 18 | 13-Jul | 23-Nov | 06-Mar | 11-May |
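The tabulated dates can be turned into transition lengths by simple date arithmetic. The following is a minimal sketch rather than part of the authors' processing chain: it assumes the senescence dates fall in calendar year 2011 and the green-up dates in 2012 (as the season labels suggest), uses only three rows copied from the table above, and the names `ROWS`, `parse` and `durations` are hypothetical.

```python
from datetime import date

# Month abbreviations as they appear in the table (avoids locale-dependent parsing).
MONTHS = {"Jan": 1, "Feb": 2, "Mar": 3, "Apr": 4, "May": 5, "Jun": 6,
          "Jul": 7, "Aug": 8, "Sep": 9, "Oct": 10, "Nov": 11, "Dec": 12}

# Three rows copied from the table above:
# camera no. -> (start of senescence, end of senescence,
#                start of green-up, end of green-up)
ROWS = {
    1: ("28-Aug", "08-Nov", "14-Mar", "09-May"),
    3: ("07-Sep", "05-Nov", "01-May", "01-Jun"),
    9: ("26-Jun", "10-Nov", "23-Mar", "10-May"),
}

def parse(day_month, year):
    """Convert a 'DD-Mon' string to a date in the given year."""
    day, month = day_month.split("-")
    return date(year, MONTHS[month], int(day))

def durations(row):
    """Return (senescence length, green-up length) in days.

    Assumption: senescence dates are in 2011 and green-up dates in 2012.
    """
    start_sen, end_sen, start_green, end_green = row
    senescence = (parse(end_sen, 2011) - parse(start_sen, 2011)).days
    green_up = (parse(end_green, 2012) - parse(start_green, 2012)).days
    return senescence, green_up

for camera, row in ROWS.items():
    senescence, green_up = durations(row)
    print(f"camera {camera}: senescence {senescence} d, green-up {green_up} d")
```

Under these assumptions, camera 9 shows a senescence period of 137 days against 72 days for camera 1, which illustrates how widely the extracted start-of-senescence dates vary between cameras.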

© 2013 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution license (http://creativecommons.org/licenses/by/3.0/).

Morris, D.E.; Boyd, D.S.; Crowe, J.A.; Johnson, C.S.; Smith, K.L. Exploring the Potential for Automatic Extraction of Vegetation Phenological Metrics from Traffic Webcams. Remote Sens. 2013, 5, 2200-2218. https://doi.org/10.3390/rs5052200