Building Extraction from High Resolution Space Images in High Density Residential Areas in the Great Cairo Region

Abstract

1. Introduction

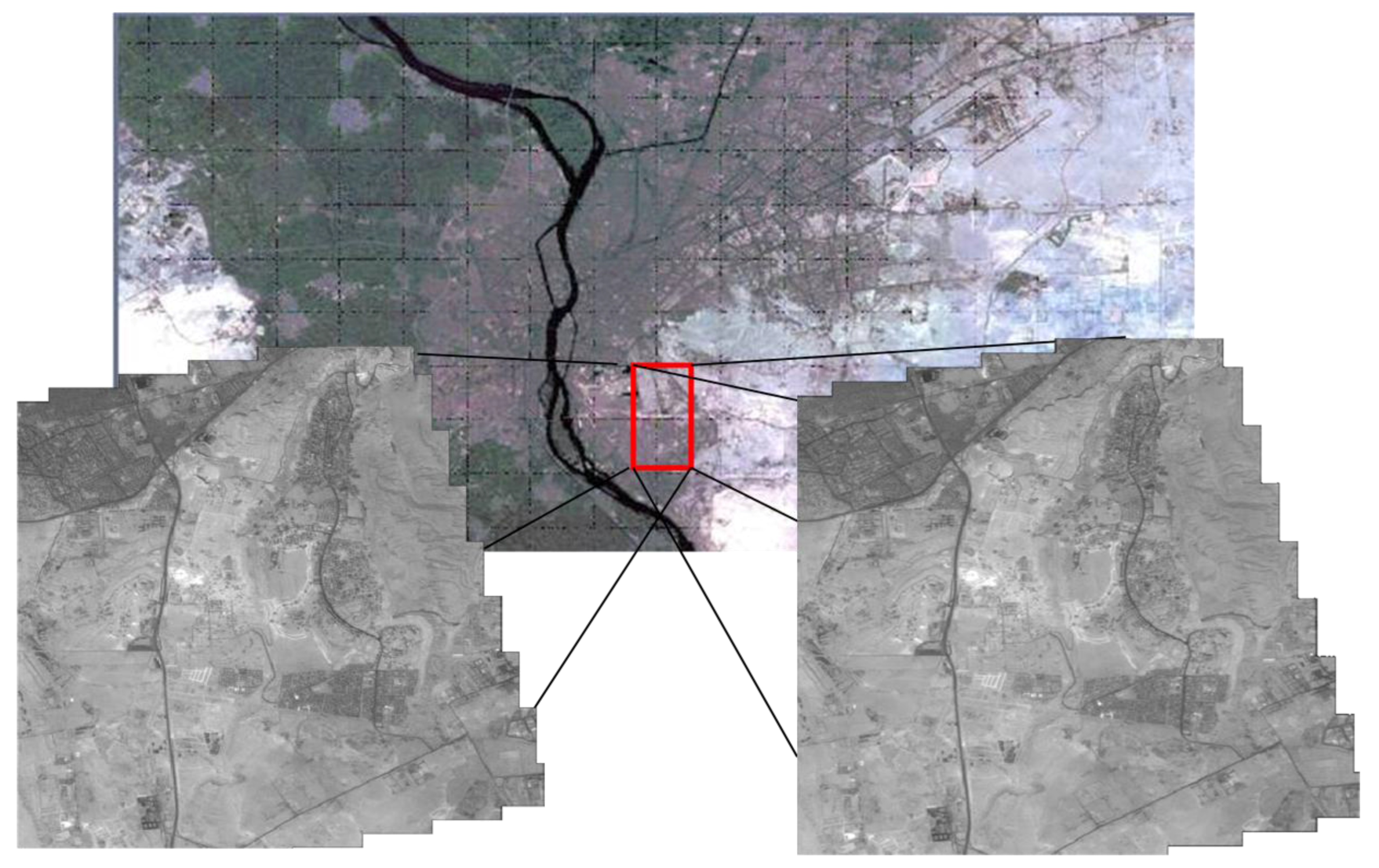

2. Study Area and Used Data

3. Methodology

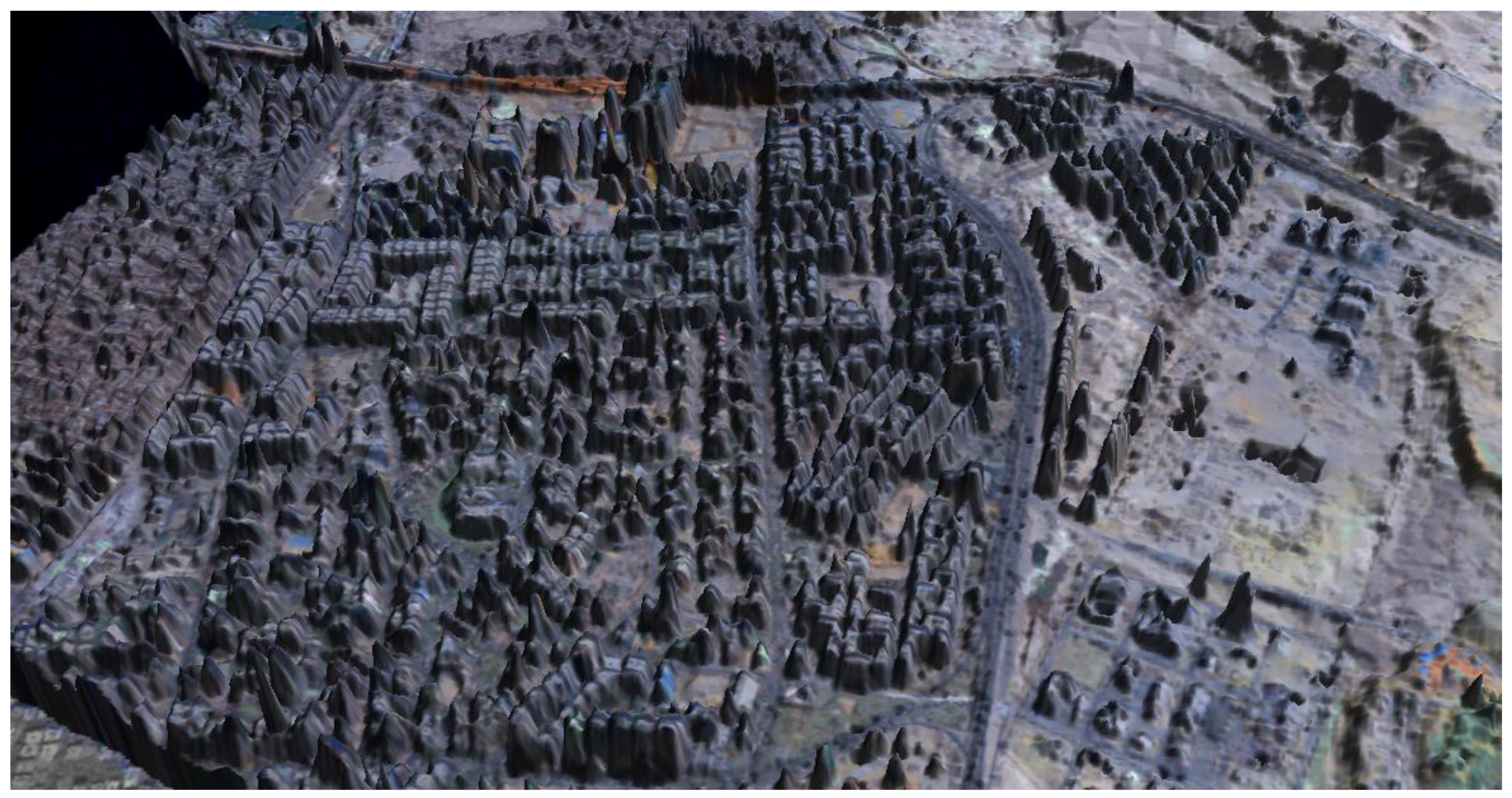

3.1. Digital Surface Model and Orthoimage Creation

3.2. Building Detection Using Image Classification and DSM

4. Results and Analysis

4.1. Accuracy Assessment of Building Extraction

- True Positive (TP): Both the automated and manual methods classified the area as building.

- True Negative (TN): Both the automated and manual methods classified the area as non-building.

- False Positive (FP): Only the automated method classified the area as building.

- False Negative (FN): Only the manual classification classified the area as building.

- Branching Factor: FP/TP

- Miss Factor: FN/TP

- Building Detection Percentage: 100 × TP/(TP + FN)
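The four outcome categories and three summary measures above can be sketched in Python. This is a minimal illustration, assuming each evaluated area (for instance, a building footprint or a pixel) has been reduced to a pair of boolean labels: one from the automated method and one from the manual classification. The names `Confusion`, `confusion_counts`, and the metric functions are hypothetical and not taken from the paper.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass
class Confusion:
    tp: int = 0  # both methods labelled the area "building"
    tn: int = 0  # both methods labelled the area "non-building"
    fp: int = 0  # only the automated method labelled it "building"
    fn: int = 0  # only the manual classification labelled it "building"


def confusion_counts(automated: Sequence[bool], manual: Sequence[bool]) -> Confusion:
    """Tally agreement between automated and manual labels, area by area (hypothetical helper)."""
    if len(automated) != len(manual):
        raise ValueError("label sequences must have the same length")
    c = Confusion()
    for auto, ref in zip(automated, manual):
        if auto and ref:
            c.tp += 1
        elif not auto and not ref:
            c.tn += 1
        elif auto:
            c.fp += 1
        else:
            c.fn += 1
    return c


def branching_factor(c: Confusion) -> float:
    # FP/TP: false detections per correct detection (raises ZeroDivisionError if TP == 0)
    return c.fp / c.tp


def miss_factor(c: Confusion) -> float:
    # FN/TP: missed buildings per correct detection
    return c.fn / c.tp


def detection_percentage(c: Confusion) -> float:
    # 100 * TP / (TP + FN): share of reference buildings that were detected
    return 100.0 * c.tp / (c.tp + c.fn)


# Toy example: five areas, automated labels vs. manual reference labels
auto = [True, True, False, True, False]
ref = [True, False, False, True, True]
counts = confusion_counts(auto, ref)
```

Note that none of the three measures uses TN, so only building-positive outcomes drive the assessment.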

| Block (site) | TP | FP | FN | Total Buildings (TP + FN) | Miss Factor | Branching Factor (%) | Building Detection (%) |
|---|---|---|---|---|---|---|---|
| Block 1 | 42 | 6 | 12 | 54 | 0.14 | 14.3 | 77.8 |
| Block 2 | 173 | 12 | 36 | 209 | 0.20 | 6.9 | 82.8 |
| Block 3 | 158 | 7 | 23 | 181 | 0.15 | 4.4 | 87.3 |
| Average | | | | | 0.16 | 8.54 | 82.6 |
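As a consistency check, the per-block measures can be recomputed from the TP, FP, and FN counts listed in the table. The sketch below assumes, based on the listed values, that the Branching Factor column reports FP/TP scaled by 100 (for Block 1, 6/42 ≈ 0.143 appears as 14.3). Recomputing reproduces the Total Buildings, Branching Factor, and Building Detection columns and the 82.6% average detection rate. The miss factors for Blocks 2 and 3 agree to within 0.01, whereas for Block 1 the recomputed FN/TP = 12/42 ≈ 0.29 differs from the listed 0.14. The function name `block_metrics` is hypothetical.

```python
# (TP, FP, FN) building counts per block, as listed in the table above
blocks = {
    "Block 1": (42, 6, 12),
    "Block 2": (173, 12, 36),
    "Block 3": (158, 7, 23),
}


def block_metrics(tp: int, fp: int, fn: int) -> dict:
    """Recompute the table's derived columns from raw counts (hypothetical helper)."""
    total = tp + fn  # buildings present in the manual reference
    return {
        "total": total,
        "miss_factor": fn / tp,
        "branching_pct": 100.0 * fp / tp,  # assumption: column is FP/TP x 100
        "detection_pct": 100.0 * tp / total,
    }


rows = {name: block_metrics(*counts) for name, counts in blocks.items()}
avg_detection = sum(r["detection_pct"] for r in rows.values()) / len(rows)

for name, r in rows.items():
    print(f"{name}: total={r['total']}, miss={r['miss_factor']:.2f}, "
          f"branching={r['branching_pct']:.1f}%, detection={r['detection_pct']:.1f}%")
print(f"Average detection: {avg_detection:.1f}%")
```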

4.2. Assessing the Positional Accuracy of Detected Buildings

| Building No. | Height from Ground Survey (m) | Height Extracted from Image Model (m) | Building Height Error (m) |
|---|---|---|---|
| 1 | 29.88 | 29.02 | −0.86 |
| 2 | 27.38 | 30.08 | −2.70 |
| 3 | 32.27 | 32.84 | −0.57 |
| 4 | 20.14 | 21.29 | −1.15 |
| 5 | 20.15 | 21.3 | −1.15 |
| 6 | 15.21 | 15.68 | −0.47 |
| 7 | 15.47 | 15.15 | 0.31 |
| 8 | 16.77 | 17.66 | −0.90 |
| 9 | 16.93 | 17.67 | −0.74 |
| 10 | 15.79 | 16.86 | −1.07 |
| 11 | 15.50 | 16.21 | −0.71 |
| 12 | 15.61 | 16.4 | −0.79 |
| 13 | 15.24 | 16.92 | −1.68 |
| 14 | 29.89 | 31.03 | −1.14 |
| 15 | 30.54 | 32 | −1.46 |
| 16 | 30.77 | 32.36 | −1.59 |
| 17 | 31.02 | 33.57 | −2.55 |
| Max absolute error | | | 2.7 |
| RMSE | | | 1.33 |
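The two summary rows can be reproduced from the listed per-building errors with a short sketch. RMSE is the square root of the mean squared error over the 17 check buildings, so it depends only on error magnitudes. The listed errors appear to follow a Ground − Image convention: recomputing that difference from the two height columns matches the listed error to within 0.01 m for every building except Building 1 (29.88 − 29.02 = +0.86 against the listed −0.86), a sign difference that does not affect either the maximum absolute error or the RMSE. The helper name `rmse` is illustrative.

```python
import math

# Building height errors (m) for the 17 check buildings, as listed in the table above
errors = [-0.86, -2.70, -0.57, -1.15, -1.15, -0.47, 0.31, -0.90, -0.74,
          -1.07, -0.71, -0.79, -1.68, -1.14, -1.46, -1.59, -2.55]


def rmse(values: list[float]) -> float:
    """Root-mean-square of the errors: sqrt(sum(e^2) / n)."""
    return math.sqrt(sum(v * v for v in values) / len(values))


max_abs = max(abs(e) for e in errors)
print(f"Max |error| = {max_abs:.2f} m, RMSE = {rmse(errors):.2f} m")
```

Running this prints a maximum absolute error of 2.70 m and an RMSE of 1.33 m, matching the table.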

5. Summary and Conclusions

© 2011 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution license (http://creativecommons.org/licenses/by/3.0/).


Shaker, I.F.; Abd-Elrahman, A.; Abdel-Gawad, A.K.; Sherief, M.A. Building Extraction from High Resolution Space Images in High Density Residential Areas in the Great Cairo Region. Remote Sens. 2011, 3, 781-791. https://doi.org/10.3390/rs3040781
