Multispectral UAS Data Accuracy for Different Radiometric Calibration Methods

Abstract

1. Introduction

2. Materials and Methods

2.1. UAS Platform

2.2. UAS Data Collection

2.3. UAS Data Processing

2.3.1. Method A: One-Point Calibration (Manufacturer Method)

2.3.2. Method B: One-Point Calibration plus Sunshine Sensor (Manufacturer-Recommended Method)

2.3.3. Method C: Pre-Calibration Using the Simplified Empirical Line Calibration

2.3.4. Method D: One-Point Calibration plus Sunshine Sensor plus Post-Calibration

2.3.5. Method E: Post-Calibration Using the Simplified Empirical Line Calibration

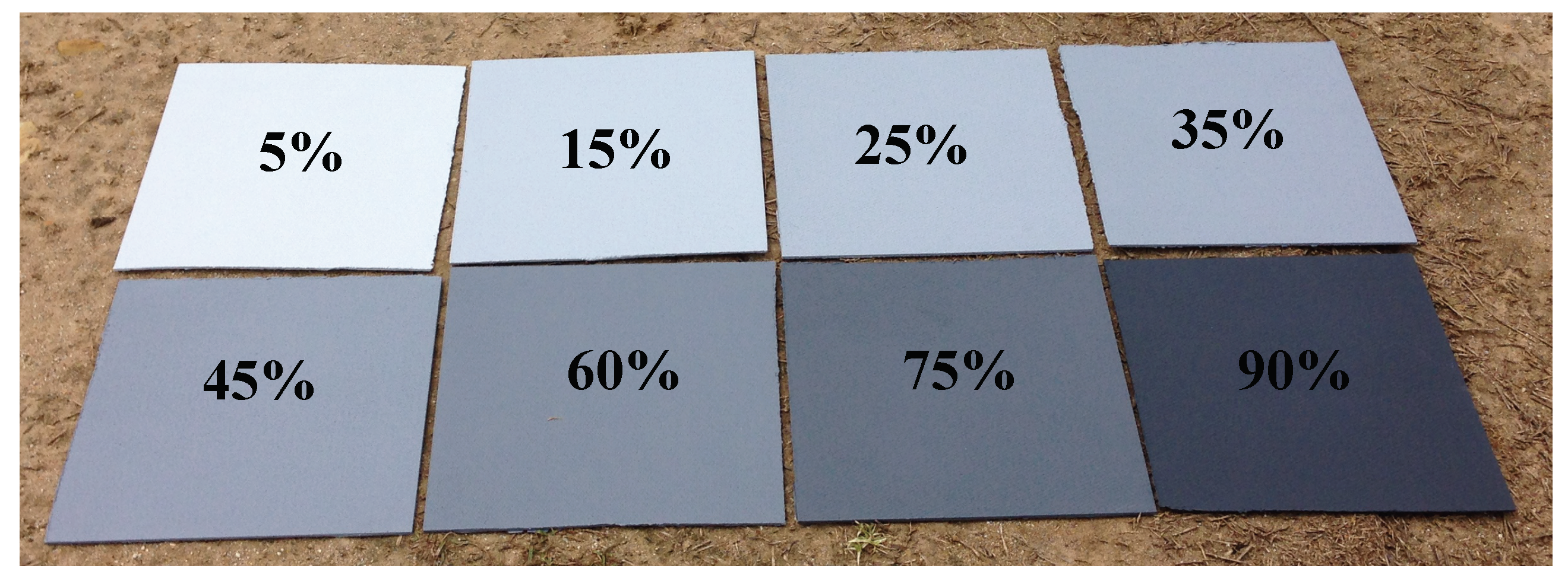

2.4. Grey Gradient Calibration Panel

2.5. Calibration Equations

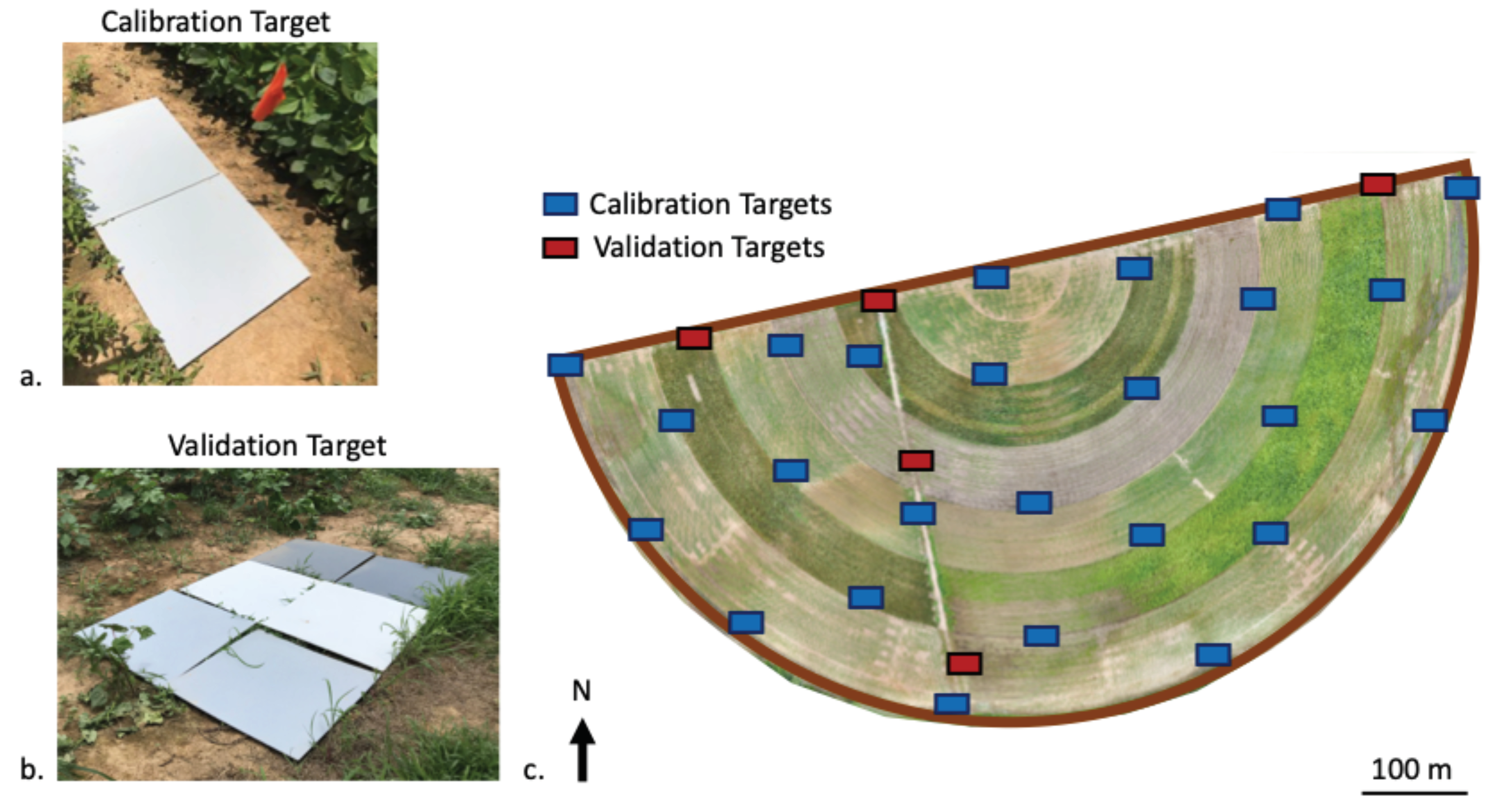
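As a rough illustration of the kind of equation this section covers, the sketch below fits a generic empirical line: a least-squares straight line that maps raw digital numbers (DN) observed over calibration targets to their known reflectance, then applies it to other pixels. It is not the authors' calibration equations and not necessarily the simplified variant named in Methods C and E. The function names and the DN values are hypothetical, and the 5%, 25%, and 75% reflectance levels are assumed from the column labels of the results table.

```python
import numpy as np


def fit_empirical_line(panel_dn, panel_reflectance):
    """Fit reflectance = gain * DN + offset by ordinary least squares."""
    dn = np.asarray(panel_dn, dtype=float)
    refl = np.asarray(panel_reflectance, dtype=float)
    gain, offset = np.polyfit(dn, refl, deg=1)
    return float(gain), float(offset)


def apply_empirical_line(dn, gain, offset):
    """Convert raw digital numbers to reflectance with a fitted line."""
    return gain * np.asarray(dn, dtype=float) + offset


# Assumed nominal panel reflectances (5%, 25%, 75%) for a single band.
panel_reflectance = [0.05, 0.25, 0.75]
# Hypothetical mean DN extracted over each panel; illustrative only.
panel_dn = [2000.0, 10000.0, 30000.0]

gain, offset = fit_empirical_line(panel_dn, panel_reflectance)

# Apply the fitted line to two hypothetical image pixels.
calibrated = apply_empirical_line([4000.0, 20000.0], gain, offset)
```

One line is fitted per camera band, so in practice the fit would be repeated for the green, red, red-edge, and NIR images.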

2.6. Data Collection

2.7. Raw Image Calibration with Method C

2.8. Data Analysis

2.8.1. Objective 1: Compare the Accuracy of the Different Radiometric Calibration Methods

2.8.2. Objective 2: Quantify the Radiometric Error Associated with Each Calibration Method

2.8.3. Objective 3: Quantify the Accuracy of Vegetation Indices
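The vegetation indices evaluated under this objective are not listed in this section. As a minimal sketch, the example below computes the normalized difference vegetation index, NDVI = (NIR − Red) / (NIR + Red), from calibrated reflectance. The choice of NDVI as the example, the function name, and the input values are assumptions for illustration only.

```python
import numpy as np


def ndvi(nir, red):
    """Return (NIR - Red) / (NIR + Red); NaN where the denominator is zero."""
    nir = np.asarray(nir, dtype=float)
    red = np.asarray(red, dtype=float)
    denom = nir + red
    out = np.full(denom.shape, np.nan)
    # Divide only where the denominator is non-zero; other cells stay NaN.
    np.divide(nir - red, denom, out=out, where=denom != 0)
    return out


# Hypothetical reflectance values for three pixels.
nir_reflectance = [0.5, 0.4, 0.0]
red_reflectance = [0.1, 0.4, 0.0]
result = ndvi(nir_reflectance, red_reflectance)
```

Because the index is a ratio of band reflectances, calibration errors in the red and NIR bands both carry into the result, which is why index accuracy is assessed separately from per-band accuracy.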

3. Results

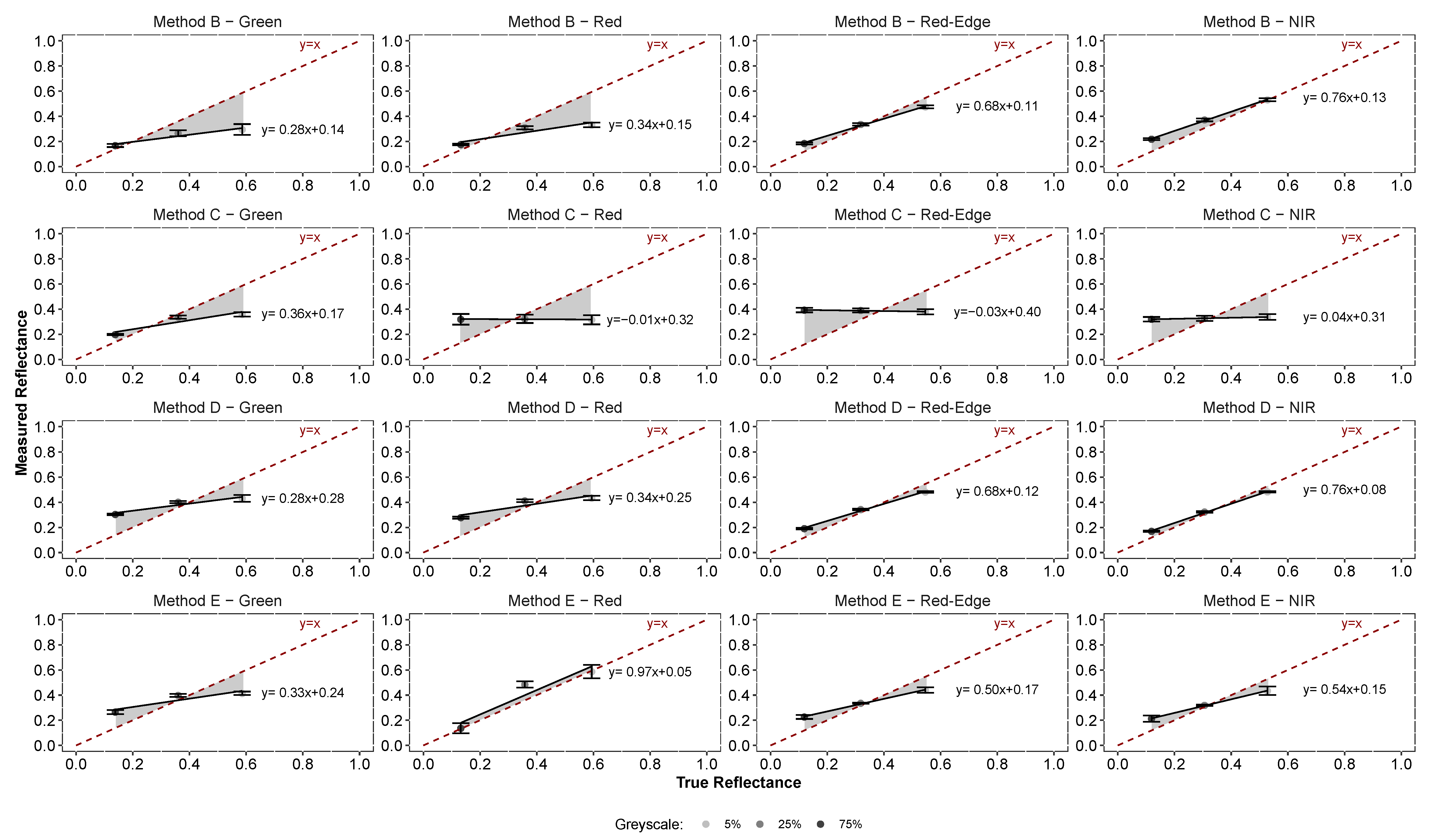

3.1. Objective 1: Compare the Performance of the Different Radiometric Calibration Methods

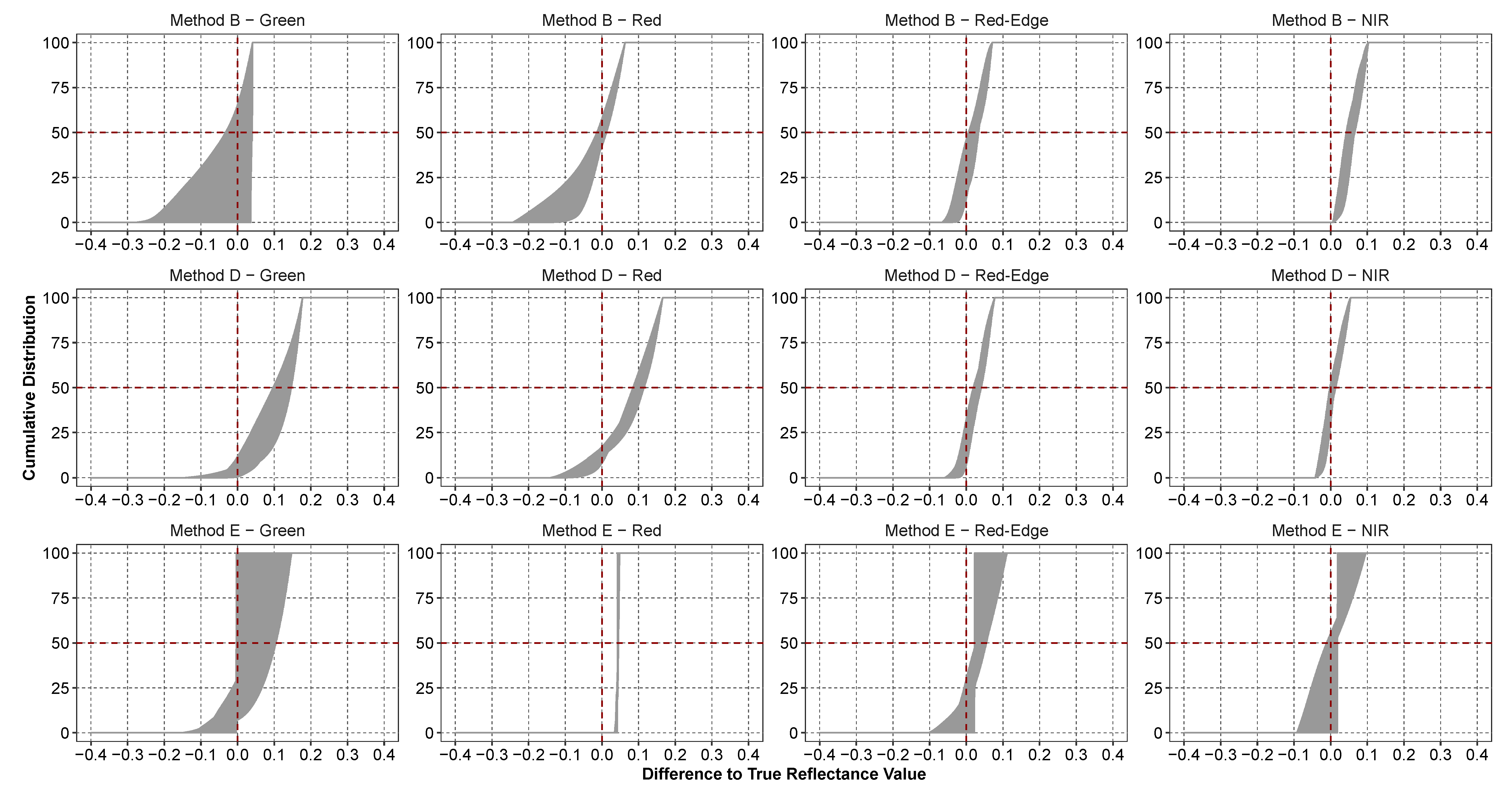

3.2. Objective 2: Quantify the Radiometric Error Associated with Each Calibration Method

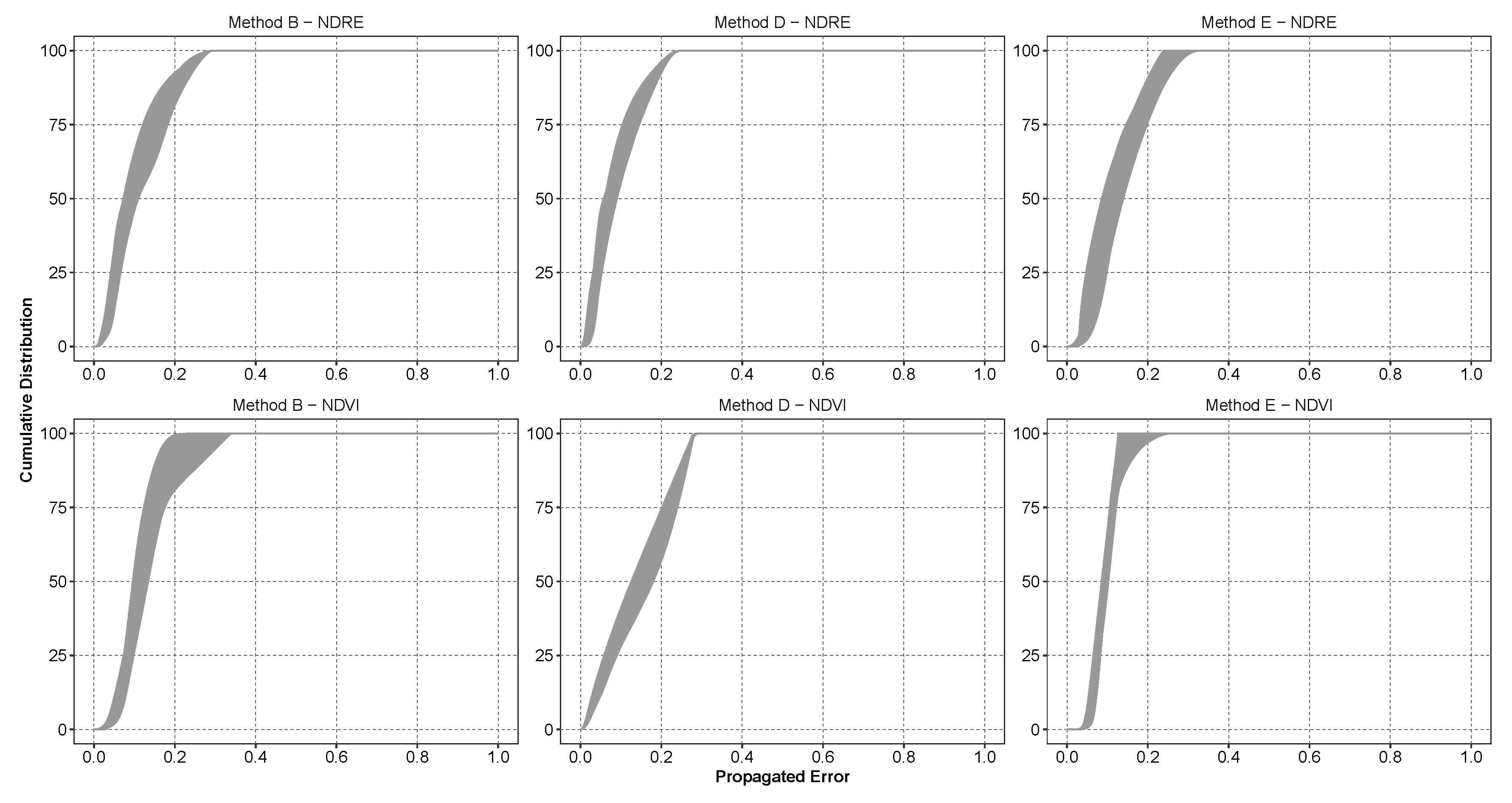

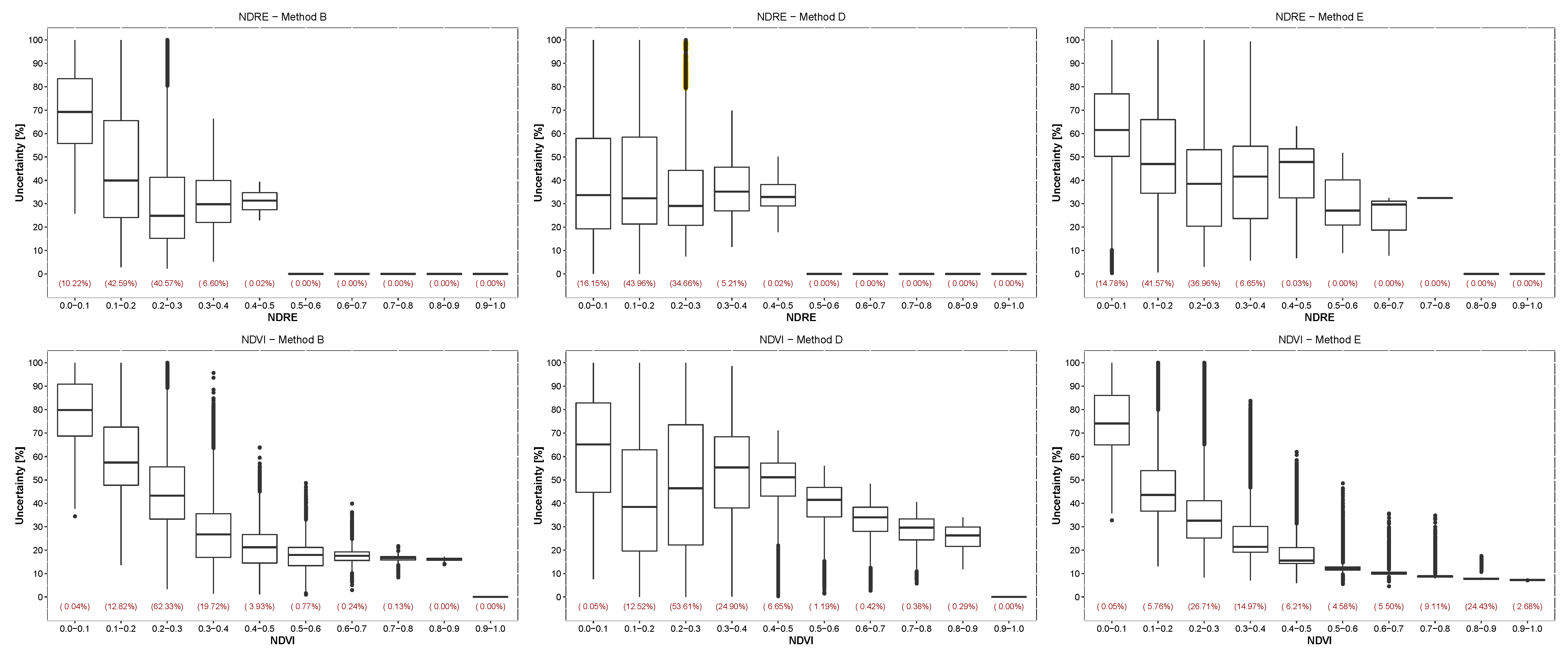

3.3. Objective 3: Quantify the Accuracy of Vegetation Indices

4. Discussion

5. Conclusions

Author Contributions

Funding

Conflicts of Interest


| Aircraft | | Horizontal Accuracy | |
|---|---|---|---|
| Weight: | 1100 g | RTK Built-In: | Yes, activated |
| Size: | 110 cm | w/o GCP: | 3–5 cm |
| Wind Resistance: | 12 m/s | w/GCP: | 3–5 cm |

| Camera | | Band Definition | |
|---|---|---|---|
| Weight: | 72 g | Green: | [480–520] nm |
| Dimensions: | 59 × 41 × 28 mm | Red: | [640–680] nm |
| Image Resolution: | 1280 × 960 pixels | Red-Edge: | [730–740] nm |
| HFOV/VFOV/DFOV: | 61.9°/48.5°/73.7° | NIR: | [770–810] nm |

| Date | Start Time | End Time | Air Temperature | Humidity | Wind Speed | Cloud Cover |
|---|---|---|---|---|---|---|
| 19 July | 12:08 | 12:36 | 32.8 °C | 66% | 0.0 m/s | Partly Cloudy |
| 2 August | 10:53 | 11:15 | 26.1 °C | 75% | 2.6 m/s | Mostly Cloudy |
| 15 August | 12:04 | 12:40 | 31.1 °C | 74% | 2.6 m/s | Partly Cloudy |
| 22 August | 10:45 | 11:09 | 31.1 °C | 70% | 1.0 m/s | Clear |
| 29 August | 10:37 | 11:00 | 26.7 °C | 68% | 1.5 m/s | Clear |
| 5 September | 11:27 | 11:51 | 29.4 °C | 66% | 0.0 m/s | Clear |
| 19 September | 10:18 | 10:43 | 28.9 °C | 73% | 0.0 m/s | Clear |

| Camera Band | Regression Equation | Goodness of Fit (r) |
|---|---|---|
| Green | | 0.967 |
| Red | | 0.998 |
| Red-Edge | | 0.960 |
| NIR | | 0.996 |

| Radiometric Calibration | Green 5% | Green 25% | Green 75% | Green All | Red 5% | Red 25% | Red 75% | Red All | Red-Edge 5% | Red-Edge 25% | Red-Edge 75% | Red-Edge All | NIR 5% | NIR 25% | NIR 75% | NIR All | All |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Method A | 0.310 | 0.241 | 0.187 | 0.364 | 0.267 | 0.293 | 0.210 | 0.376 | 0.485 | 0.375 | 0.235 | 0.549 | 16,383 | 12,169 | 8441 | 18,477 | 15,616 |
| Method B | 0.310 | 0.113 | 0.042 | 0.278 | 0.266 | 0.058 | 0.046 | 0.231 | 0.077 | 0.030 | 0.068 | 0.089 | 0.029 | 0.071 | 0.101 | 0.106 | 0.327 |
| Method C | 0.231 | 0.040 | 0.062 | 0.203 | 0.293 | 0.089 | 0.214 | 0.312 | 0.174 | 0.082 | 0.277 | 0.282 | 0.198 | 0.056 | 0.206 | 0.243 | 0.445 |
| Method D | 0.168 | 0.047 | 0.167 | 0.202 | 0.165 | 0.060 | 0.147 | 0.192 | 0.066 | 0.030 | 0.074 | 0.087 | 0.047 | 0.024 | 0.053 | 0.062 | 0.252 |
| Method E | 0.175 | 0.047 | 0.134 | 0.189 | 0.132 | 0.140 | 0.098 | 0.181 | 0.117 | 0.020 | 0.114 | 0.138 | 0.125 | 0.018 | 0.112 | 0.141 | 0.277 |

| | Green | Red | Red-Edge | NIR |
|---|---|---|---|---|
| Method B | (0.82) | (0.69) | (0.99) | (0.99) |
| Method C | (0.69) | (0.54) | (0.82) | (0.98) |
| Method D | (0.82) | (0.69) | (0.99) | (0.99) |
| Method E | (0.65) | (0.81) | (0.99) | (0.99) |

| | Green [%] | Red [%] | Red-Edge [%] | NIR [%] |
|---|---|---|---|---|
| Method B | 0–4 | 3–17 | 70–88 | 63–87 |
| Method D | 2–29 | 3–25 | 68–86 | 67–94 |
| Method E | 2–15 | 29–58 | 65–90 | 54–100 |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Poncet, A.M.; Knappenberger, T.; Brodbeck, C.; Fogle, M., Jr.; Shaw, J.N.; Ortiz, B.V. Multispectral UAS Data Accuracy for Different Radiometric Calibration Methods. Remote Sens. 2019, 11, 1917. https://doi.org/10.3390/rs11161917
