1. Introduction

With the development of imaging techniques, the number of remote sensing (RS) images collected by earth observation (EO) satellites has grown dramatically. Finding specific regions in a large number of RS images has become a basic step for many RS applications [1]. Therefore, developing rapid and accurate retrieval methods that return content according to users’ demands has recently drawn increasing attention. In the past, the metadata of EO products (e.g., acquisition time, longitude, and latitude) was the key clue for content retrieval [2]. However, such text-based retrieval cannot handle increasingly complex tasks, since the information in the metadata is limited. Thus, content-based image retrieval (CBIR) was introduced into the RS community. For a query image, CBIR uses a series of image processing techniques, such as feature extraction and similarity calculation, to retrieve the most similar target images from the image archive. Any approach that organizes the image archive according to the images’ contents can be regarded as a CBIR method [3]. Many successful CBIR methods have been presented, such as [4,5,6].

As an important application of CBIR, remote sensing image retrieval (RSIR) is developed within the CBIR framework. A basic flowchart of an RSIR method [7] is displayed in Figure 1; it includes two indispensable blocks. The first block is feature learning/extraction, which maps the query and target images into a feature space. The second block is similarity matching, which uses a proper measure to weigh the similarities between the query and target images. The retrieval results of a query q are then obtained by ordering the targets according to these similarities. The main goal of the feature learning/extraction block is to obtain a useful feature representation of RS images, while the primary goal of the similarity matching block is to measure the resemblance between RS images efficiently. Based on this framework, RSIR can be defined as follows. Assume that the RS image archive contains N images $\mathcal{X} = \{x_1, x_2, \ldots, x_N\}$. For a query image q, its retrieval results $R(q)$ can be described as

$$R(q) = \mathrm{sort}\big(D(q, \mathcal{X})\big),$$

where $D(q, \mathcal{X}) = \{\delta(q, x_1), \delta(q, x_2), \ldots, \delta(q, x_N)\}$ indicates the distances between q and the target images, which can be calculated in the feature space using a proper similarity metric, and $\mathrm{sort}(\cdot)$ denotes the ranking process.

Although the basic RSIR system exhibited in Figure 1 is simple, its implementation is not as easy as it appears. RS scenes have many specific characteristics that increase the difficulty of RSIR [8,9,10,11]. For example, the objects within one RS scene are diverse in type and huge in volume, the same objects may appear at different scales, and a single scene may contain various thematic classes. Figure 2 shows an example RS image, which contains at least five kinds of objects, including “Freeway”, “Vehicle”, “Tree”, “Building”, and “Parking lot”. In addition, the same object (e.g., “Vehicle”) appears at different scales. These properties make RSIR an open and challenging task.

As displayed in Figure 1, the first and crucial step of an RSIR method is feature learning/extraction. An ideal RS image representation should not only capture the complex contents of the RS image, but also reflect the multi-scale characteristics of diverse objects [12]. Traditional features for RS images are usually handcrafted, including low-level and mid-level features. Common low-level features include the texture feature obtained from the gray-level co-occurrence matrix (GLCM) [13], the sub-band energy of the discrete wavelet transform [14], and the homogeneous texture feature obtained by Gabor filters [15]. Typical mid-level features are the Bag-of-Words (BOW) [16] feature and its extensions. Recently, with the development of deep learning, especially the convolutional neural network (CNN) [17,18], the RS image processing field has changed greatly. A growing number of successful applications [19,20,21,22] show that the features learned by CNNs describe RS images more effectively. These kinds of features are called high-level features or deep features.

Although the aforementioned features contribute positively to RSIR, their drawbacks are also distinct. Low-level and mid-level features yield stable, but not sufficiently satisfactory, RSIR performance [1]. Deep features achieve impressive RSIR performance; nevertheless, learning them is expensive, since most CNNs are supervised models that require a tremendous number of labeled samples. Therefore, it is necessary to develop an effective and cheap deep feature learning/extraction method for RSIR.

In this paper, we present a new deep feature learning method for RSIR. Unlike most existing CNN-based methods, our deep feature learning method is an unsupervised model. It is developed within the BOW framework, so we name it deep bag of words (DBOW). Like the BOW feature, the DBOW feature is obtained in two steps: (i) learning the deep descriptors, and (ii) encoding the DBOW feature. In step (i), to reduce the difficulty of deep descriptor learning, we divide an RS image into image patches. On the one hand, the sampled patches are less complex and more compact, which makes it easier to explore the diverse contents of the RS image. On the other hand, the multi-scale property can be fully captured by adjusting the size of the patches, which is a simple operation. Moreover, we propose a deep convolutional auto-encoder (DCAE) model to obtain discriminative features from those patches, which are regarded as the deep descriptors of the RS image. In step (ii), the k-means algorithm is used to cluster all of the deep descriptors to obtain the codebook. Then, the DBOW feature of an RS image is acquired by counting the frequency of each codeword. Finally, the retrieval results are obtained according to the distances between DBOW features.

The main contributions of our work can be summarized as follows:

Taking the complex contents and multiple semantic classes of RS images into account, we use image patches rather than the whole RS image to learn the descriptors. This helps capture the information of diverse objects.

Considering the multi-scale characteristic, we adopt two patch generation schemes to generate two kinds of patches with different scales. In addition, the two kinds of patches capture the complex contents within the RS image in a complementary manner.

To deeply mine the inner information of image patches and obtain effective representations, we develop an unsupervised deep learning model that learns discriminative descriptors instead of relying on handcrafted features.

Experiments are conducted on different RS image data sets, which cover different resolutions and different types of RS images. The encouraging results show that the deep feature obtained by our method is effective for RSIR.

Before describing our RSIR method, we want to clarify two points. The first is the difference between RS image classification and RSIR, which are two hot research topics in the RS community. Image classification aims at grouping images according to common characteristics, while RSIR focuses on finding similar images in the archive according to their contents. Take the three RS images exhibited in Figure 3 as an example. All of them are labeled “Agricultural” in the UCMerced archive [23,24]. The goal of image classification is to develop a classifier that assigns them to the same group. In contrast, the goal of RSIR is to find the most similar ones. In other words, Figure 3b, rather than Figure 3c, should be ranked higher if Figure 3a is the query. In short, the biggest difference between image classification and image retrieval is that images within the same class may not be similar.

The second point is the difference between our retrieval method and another image retrieval approach proposed in [25], which was developed on the basis of a BOW-based CNN model. Although both methods use BOW and the deep neural network paradigm to complete retrieval, there are still many differences between them.

First, the retrieval scenario is different. The method proposed in [25] aims at text-based image retrieval, where the original query is text (e.g., “Daisy Flower”). In addition, to obtain accurate retrieval results, the authors expand the input using click-through data, so the final query is a combination of the text and the clicked images. Our method focuses on image-based retrieval, i.e., the input is only an image without any text information.

Second, the units for BOW construction are different. The basic units for constructing the BOW feature in [25] are text words. For example, the BOW feature of a “puppy dog” image is {“small dog”, “white dog”, “dog chihuahua”}, and the values of the different elements can be represented by the inverse document frequency (IDF) score [26]. The basic units for constructing the BOW feature in our method are visual words, i.e., image patches. Since we use a deep neural network to learn the representation of the patches, we name the final BOW feature DBOW.

Third, the framework is different. In [25], the BOW features are used to train the CNN model. As mentioned above, when a user inputs a query text, a set of images is obtained according to the click-through data. The CNN model then ranks these images based on their similarity relationships, which are determined by BOW and high-level visual features simultaneously. In our method, the CNN model is used to generate the DBOW features. We use the DCAE model to learn latent features from the image patches, and the learned deep features are then integrated to construct the DBOW features. The retrieval results of a query image are determined by a proper distance metric (e.g., L1-norm) in the feature space.

Apart from the differences discussed above, there is one more important point: the deep neural network in [25] must be trained in a supervised manner, while our DCAE model can be trained in an unsupervised manner.

The rest of this paper is organized as follows. Section 2 reviews the published literature related to RSIR. Section 3 presents the proposed deep feature learning method, including the preliminaries, deep descriptor learning, and DBOW feature generation. Section 4 displays and discusses the experimental results obtained on different RS data sets. Section 5 provides the conclusions.

3. Methodology

Similar to the basic RSIR pipeline, the framework of RSIR based on the proposed feature learning method is shown in Figure 4. When a user inputs a query image q, its DBOW feature is learned by our feature learning method. Then, the similarities between the query and the target RS images are calculated in the feature space to obtain the retrieval results $R(q)$. To ensure that the obtained feature can capture the complex contents of RS images, our feature learning algorithm is divided into two parts: “Patch-Based Deep Descriptor Learning” and “Deep Bag-of-Words Generation”. The first part aims at finding effective image descriptors with the help of deep learning, while the second part focuses on integrating all deep descriptors to generate the final DBOW feature.

3.1. Preliminaries

To describe our feature learning method more clearly, we first introduce some preliminaries in this part.

3.1.1. Convolutional Auto-Encoder

Convolutional Auto-Encoder (CAE) [46] is a popular and effective unsupervised feature learning technique developed from the Auto-Encoder (AE). Similar to AE, CAE is a hierarchical feature extractor that aims at discovering the inner information of images. Unlike AE, CAE uses two-dimensional convolution and can therefore preserve the images’ structure (e.g., neighborhood relationships and spatial locality) during feature learning, which helps obtain more discriminative features. A CAE model usually consists of an input layer, a hidden layer, and an output layer [9]. The transformation from the input data to the hidden layer is called “encode”, while the procedure from the hidden layer to the output data is called “decode”. The encode stage mines the inner information of the input data, and the decode stage reconstructs the input data. The framework of a common CAE is exhibited in Figure 5.

The basic operations within the encode stage are convolution, nonlinearity, and pooling, which aim at extracting detailed information, increasing the nonlinear capacity, and avoiding overfitting, respectively [46]. Let I denote the input image, and assume that I is a mono-channel image. The encode process of CAE can then be formulated as

$$h^k = \sigma\left(I \otimes W^k + b^k\right),$$

where $h^k$ indicates the k-th feature map in the hidden layer, $\{W^k, b^k\}$ are the network parameters related to the k-th feature map, ⊗ is the convolution, and $\sigma(\cdot)$ denotes the activation function. The modules within the decode stage are unpooling and transposed convolution, which focus on up-sampling and reconstruction, respectively. The decode process can be formulated as

$$\hat{I} = \sigma\left(\sum_{k \in H} h^k \otimes \tilde{W}^k + c\right),$$

where H is the group of feature maps within the hidden layer, $\tilde{W}^k$ is the transposition of $W^k$, c is the bias, and $\hat{I}$ denotes the reconstructed data. To update the parameters of CAE, the mean-squared error loss function is usually adopted:

$$E(\theta) = \frac{1}{2N} \sum_{i=1}^{N} \left\| I_i - \hat{I}_i \right\|^2,$$

where $\mathcal{I} = \{I_1, I_2, \ldots, I_N\}$ indicates the set of training samples consisting of N images, $\hat{\mathcal{I}} = \{\hat{I}_1, \hat{I}_2, \ldots, \hat{I}_N\}$ is the set of reconstructed samples corresponding to the training set, and θ denotes the parameters of CAE.
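The encode, decode, and loss equations can be made concrete with a small NumPy sketch. It is illustrative only and not the authors' implementation: it assumes a single mono-channel input, a sigmoid for σ(·), "valid" cross-correlation (what CNN libraries call convolution) for ⊗ in the encoder, and realizes the transposed kernel $\tilde{W}^k$ as a full correlation with the flipped kernel. Pooling, unpooling, and training are omitted, and all function names and sizes are hypothetical.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def correlate_valid(x, w):
    """2-D cross-correlation in 'valid' mode (what CNNs call convolution)."""
    windows = sliding_window_view(x, w.shape)  # (H-kh+1, W-kw+1, kh, kw)
    return np.einsum("ijkl,kl->ij", windows, w)


def correlate_full(x, w):
    """2-D cross-correlation in 'full' mode: zero-pad by kernel size - 1."""
    kh, kw = w.shape
    padded = np.pad(x, ((kh - 1, kh - 1), (kw - 1, kw - 1)))
    return correlate_valid(padded, w)


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def cae_encode(image, kernels, biases):
    """h^k = sigma(I (x) W^k + b^k) for each kernel; returns (K, H', W')."""
    return np.stack([sigmoid(correlate_valid(image, w) + b)
                     for w, b in zip(kernels, biases)])


def cae_decode(feature_maps, kernels, c):
    """I_hat = sigma(sum_k h^k (x) W~^k + c).

    Full correlation with the flipped kernel is the adjoint (transpose) of
    the encoder's valid correlation, so the output has the input's size.
    """
    total = sum(correlate_full(h, w[::-1, ::-1])
                for h, w in zip(feature_maps, kernels))
    return sigmoid(total + c)


def mse_loss(images, reconstructions):
    """E(theta) = 1/(2N) * sum_i ||I_i - I_hat_i||^2 over N images."""
    n = len(images)
    return sum(np.sum((a - b) ** 2)
               for a, b in zip(images, reconstructions)) / (2 * n)


rng = np.random.default_rng(0)
image = rng.random((28, 28))                  # hypothetical 28 x 28 patch
kernels = rng.normal(scale=0.1, size=(4, 5, 5))
h = cae_encode(image, kernels, biases=np.zeros(4))
recon = cae_decode(h, kernels, c=0.0)
print(h.shape, recon.shape)  # (4, 24, 24) (28, 28)
```

A quick sanity check of the "transposition" is that the inner product of $I \otimes W$ with any array Y of the encoder's output size equals the inner product of I with the full correlation of Y by the flipped kernel.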

3.1.2. Bag-of-Words Feature

The BOW feature [47] is a popular histogram-based feature representation in the RS image processing community, and its usefulness has been demonstrated by many successful applications [23,48]. The usual steps for generating the BOW feature are: (i) collect the key points of the images; and (ii) transform the key points into histogram-based features. In step (i), the SIFT descriptor is often used to find and describe the key points. In step (ii), researchers usually apply the k-means algorithm to construct a codebook, and the BOW feature is then obtained by assigning each key point to its nearest codeword and counting the occurrences of each codeword. The performance of the BOW feature is affected by several factors, such as the type of key points and the codebook size. Among these factors, key point extraction is the foundation and directly restricts the behavior of the BOW feature.

Although the conventional BOW feature has achieved success in different RS applications, its performance is often limited by the complex contents of RS images. In this paper, to capture the diverse information of RS images, the DCAE is used to learn deep descriptors for RS images, from which more effective DBOW features are then generated.

3.2. Patch Based Deep Descriptor Learning

Due to the specific properties of RS image (e.g., contents are diverse in type and large in volume, the scale of different objects is different, etc.), we propose a patch based deep descriptor learning method to extract the effective image descriptors for DBOW. The framework of the proposed descriptor learning method is shown in

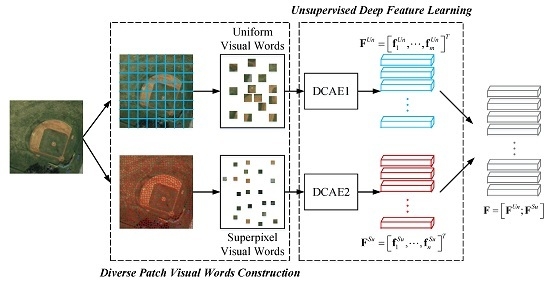

Figure 6. It consists of two parts, i.e., diverse visual words construction and unsupervised deep feature learning. The visual words of an RS image are generated by different region sampling schemes, in which both the diversity of objects and the multi-scale characteristic are taken into account. The unsupervised feature learning is accomplished by DCAE models, which are strong enough to learn the effective latent features from the patches. Finally, to grasp the information from the global aspect, we stack the learned deep features corresponding to different patches together to construct the deep descriptor.

3.2.1. Diverse Patch Visual Words Construction

To mine the RS image’s information comprehensively, we use different region sampling schemes to generate diverse types of visual words.

First, the uniform grid-sampling scheme [49] is introduced. This scheme divides the RS image into a number of regular rectangular regions of the same size, so that the RS image is represented by a set of uniform image patches, which we call uniform visual words for short. In general, the size and spacing of the grid directly influence the uniform visual words. In this paper, we divide the RS image into a series of non-overlapping patches, so only the grid size needs to be considered in the following experiments.
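Non-overlapping grid sampling amounts to a reshape. The sketch below is a minimal NumPy illustration; the patch size used in the paper is not restated here, so the 32-pixel patches and 256 × 256 example input are hypothetical, and dropping border pixels that do not fill a complete patch is an assumption rather than a detail given in the text.

```python
import numpy as np


def grid_patches(image, patch_size):
    """Split an (H, W) or (H, W, C) image into non-overlapping square patches.

    Border rows/columns that do not fill a whole patch are discarded
    (an assumption; padding the image would be an alternative).
    """
    rows = image.shape[0] // patch_size
    cols = image.shape[1] // patch_size
    cropped = image[:rows * patch_size, :cols * patch_size]
    # (rows, p, cols, p, ...) -> (rows, cols, p, p, ...) -> (rows*cols, p, p, ...)
    patches = cropped.reshape(rows, patch_size, cols, patch_size, *image.shape[2:])
    patches = np.swapaxes(patches, 1, 2)
    return patches.reshape(rows * cols, patch_size, patch_size, *image.shape[2:])


img = np.arange(256 * 256).reshape(256, 256)   # hypothetical 256 x 256 image
uniform_words = grid_patches(img, 32)
print(uniform_words.shape)  # (64, 32, 32)
```

Patches are returned in row-major order, so patch 1 is the block immediately to the right of patch 0.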

Second, the superpixel technique is used to generate another set of visual words, which we call superpixel visual words. A superpixel is an over-segmented image region obtained under certain constraints [50], such as pixel value and location. Desirable superpixels adhere well to image boundaries and group similar pixels together accurately. These advantages are beneficial for exploring the complicated objects within RS images. Thus, choosing a good superpixel algorithm is important for superpixel visual word construction.

In this paper, the simple non-iterative clustering (SNIC) [51] algorithm is chosen to generate the superpixels. SNIC begins by initializing the superpixel centroids, which is done by sampling pixels on the image plane. Then, a priority queue and an online update scheme are used to update the initial superpixels according to the distances between the superpixels and their 4- or 8-connected pixels. To accurately weigh the distances between pixels and centroids, each pixel is described by a 5-dimensional vector $[\mathbf{x}, \mathbf{c}] = [x, y, l, a, b]$, where $\mathbf{x} = [x, y]$ indicates the pixel’s location and $\mathbf{c} = [l, a, b]$ denotes the pixel value in the CIELAB color space. The distance between pixels j and k is formulated as

$$d_{j,k} = \sqrt{\frac{\left\| \mathbf{x}_j - \mathbf{x}_k \right\|_2^2}{s} + \frac{\left\| \mathbf{c}_j - \mathbf{c}_k \right\|_2^2}{m}},$$

where s and m are the parameters for normalizing the spatial and color distances. The parameter s is determined by the expected number of superpixels K according to $s = \sqrt{N_p / K}$, where $N_p$ indicates the number of pixels in an image. The parameter m should be provided by users. SNIC is an improved version of the successful simple linear iterative clustering (SLIC) algorithm [52]. Compared with SLIC, SNIC achieves higher performance in less time. More details can be found in the original literature [51].
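The SNIC distance and the spatial normalizer s can be written directly from the formula above. This is only a sketch of the distance computation, not of SNIC's priority-queue region growing; it assumes the pixel values have already been converted to CIELAB, and the function names, image size, and superpixel count are hypothetical.

```python
import numpy as np


def snic_s(num_pixels, num_superpixels):
    """Spatial normalizer s = sqrt(N_p / K)."""
    return np.sqrt(num_pixels / num_superpixels)


def snic_distance(p, q, s, m):
    """d_{j,k} between two 5-D pixel vectors [x, y, l, a, b].

    The first two entries are image-plane coordinates, the last three are
    CIELAB values; s and m normalize the spatial and color terms.
    """
    p = np.asarray(p, dtype=float)
    q = np.asarray(q, dtype=float)
    spatial = np.sum((p[:2] - q[:2]) ** 2) / s
    color = np.sum((p[2:] - q[2:]) ** 2) / m
    return np.sqrt(spatial + color)


s = snic_s(256 * 256, 1024)  # hypothetical image size and superpixel count
print(s)  # 8.0
```

A larger m down-weights color differences relative to spatial proximity, which tends to produce more compact superpixels.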

The shape of superpixels is irregular, and their size is not strictly uniform. To learn their latent features with DCAE, we need to unify the shape and size of the superpixels. Here, a simple normalization scheme is used. First, we record the image-plane coordinates of all pixels within a superpixel. Then, the maxima and minima along the x-axis and y-axis are selected and recorded as $x_{\max}$, $x_{\min}$, $y_{\max}$, and $y_{\min}$. Next, a rectangular region is fixed whose four vertices are $(x_{\min}, y_{\min})$, $(x_{\max}, y_{\min})$, $(x_{\min}, y_{\max})$, and $(x_{\max}, y_{\max})$. Finally, all of the rectangular regions are resized to the same size. The set of normalized superpixels forms the superpixel visual words.
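The bounding-rectangle normalization can be sketched as follows, assuming a superpixel label map (one integer label per pixel, as produced by any superpixel algorithm). The text does not specify the interpolation method or the output size, so nearest-neighbor resizing and the toy sizes below are assumptions, and the function name is hypothetical. As in the described scheme, the rectangle may also contain pixels of neighboring superpixels.

```python
import numpy as np


def normalize_superpixel(image, labels, label, out_size):
    """Crop the bounding rectangle of one superpixel and resize it.

    The rectangle spans rows y_min..y_max and columns x_min..x_max of the
    pixels whose label equals `label`; resizing is nearest-neighbor.
    """
    ys, xs = np.nonzero(labels == label)
    y_min, y_max = ys.min(), ys.max()
    x_min, x_max = xs.min(), xs.max()
    region = image[y_min:y_max + 1, x_min:x_max + 1]
    # Map each output row/column back to a source row/column in the region
    rows = np.arange(out_size) * region.shape[0] // out_size
    cols = np.arange(out_size) * region.shape[1] // out_size
    return region[rows[:, None], cols[None, :]]


image = np.arange(100).reshape(10, 10)   # toy single-channel image
labels = np.zeros((10, 10), dtype=int)
labels[2:5, 3:9] = 1                      # a 3 x 6 rectangular "superpixel"
patch = normalize_superpixel(image, labels, label=1, out_size=4)
```

Because indexing uses only the first two axes, the same function also works for (H, W, C) color images.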

3.2.2. Unsupervised Deep Feature Learning

Once the visual words of an image are obtained, the next step is to learn their latent features using the CAE. To mine deep latent features from the visual words, we extend the single CAE to a DCAE in this work. The framework of the DCAE is shown in Figure 7. Note that we require the different kinds of visual words to have the same size. In other words, the superpixels are normalized to the same size as the uniform visual words.

The DCAE model transforms the input image I into a latent feature vector through l encode layers. Symmetrically, there are also l decode layers to reconstruct the input image. As with CAE, we use the mean-squared error loss function to update the weights of our DCAE. The number of encode/decode layers l can be chosen according to the size of the input data. Here, to speed up DCAE training and ensure the convergence of the network, we initialize the parameters of the DCAE in a greedy, layer-wise fashion [9,53]. In other words, l CAEs are pre-trained to initialize the parameters.

As shown in Figure 6, there are two sets of visual words for an RS image I. Thus, we adopt two DCAE models to extract their latent features separately. Assume that there are m patches in the uniform visual words and n patches in the superpixel visual words. Through the two DCAEs, we obtain the latent features $F^u = \{\mathbf{f}^u_1, \mathbf{f}^u_2, \ldots, \mathbf{f}^u_m\}$ and $F^s = \{\mathbf{f}^s_1, \mathbf{f}^s_2, \ldots, \mathbf{f}^s_n\}$, where $\mathbf{f}^u_i$ corresponds to the i-th patch within the uniform visual words and $\mathbf{f}^s_j$ relates to the j-th patch within the superpixel visual words. After that, the two sets of latent features are stacked together to construct the deep descriptor $\mathcal{D}_I = [\mathbf{f}^u_1, \ldots, \mathbf{f}^u_m, \mathbf{f}^s_1, \ldots, \mathbf{f}^s_n]$ for I.
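Stacking the two sets of latent features is a row-wise concatenation. The sketch below assumes that both DCAEs output latent vectors of the same length (required for the pooled k-means in Section 3.3, though not stated explicitly); the function name and sizes are hypothetical.

```python
import numpy as np


def build_deep_descriptor(uniform_features, superpixel_features):
    """Stack F^u (m x dim) and F^s (n x dim) into an (m + n) x dim matrix."""
    if uniform_features.shape[1] != superpixel_features.shape[1]:
        raise ValueError("both DCAEs must output features of the same length")
    return np.vstack([uniform_features, superpixel_features])


# Hypothetical sizes: 64 grid patches, 30 superpixels, 16-D latent features
descriptor = build_deep_descriptor(np.ones((64, 16)), np.zeros((30, 16)))
print(descriptor.shape)  # (94, 16)
```

Since n depends on how many superpixels an image yields, the number of rows differs from image to image, which is why a fixed-length encoding is still needed.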

3.3. Deep Bag-of-Words Generation

Suppose there are N RS images $\mathcal{X} = \{x_1, x_2, \ldots, x_N\}$ in the archive. After the deep descriptor learning procedure discussed in Section 3.2, we obtain N deep descriptors $\{\mathcal{D}_{x_1}, \mathcal{D}_{x_2}, \ldots, \mathcal{D}_{x_N}\}$. Since different RS images yield different numbers of patches, the deep descriptors differ in size, which makes it hard to calculate the similarities between RS images directly from their deep descriptors. Consequently, we follow the BOW feature to transform the deep descriptors into a histogram-based feature, and name the final representation DBOW. The pipeline of DBOW generation is exhibited in Figure 8.

As with the BOW feature, a codebook is constructed first. Here, we pool all of the deep descriptors and use the k-means algorithm to group them into k clusters. The cluster centers are regarded as the codebook. Then, the deep descriptors learned from each RS image are mapped onto the codebook and quantized by assigning each latent feature the label of its nearest codeword. Finally, the DBOW feature of the RS image is represented by the histogram of the codewords.
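The codebook construction and histogram encoding can be sketched in NumPy as follows. This is a plain Lloyd's k-means written for illustration (a library implementation such as scikit-learn's `KMeans` would normally be used); the function names, random initialization, iteration count, descriptor sizes, and the use of raw counts rather than normalized frequencies are assumptions, not details from the text.

```python
import numpy as np


def assign(points, centers):
    """Index of the nearest center (squared Euclidean) for each row."""
    d2 = ((points[:, None, :] - centers[None, :, :]) ** 2).sum(axis=2)
    return d2.argmin(axis=1)


def kmeans(points, k, iters=50, seed=0):
    """Lloyd's k-means over all pooled deep descriptors; returns (k, dim)."""
    rng = np.random.default_rng(seed)
    centers = points[rng.choice(len(points), size=k, replace=False)].astype(float)
    for _ in range(iters):
        labels = assign(points, centers)
        for j in range(k):
            members = points[labels == j]
            if len(members):  # keep the old center if a cluster empties
                centers[j] = members.mean(axis=0)
    return centers


def dbow_histogram(descriptor, codebook):
    """Count how many latent features of one image fall on each codeword."""
    labels = assign(descriptor, codebook)
    return np.bincount(labels, minlength=len(codebook)).astype(float)


rng = np.random.default_rng(1)
descriptors = [rng.random((50, 8)), rng.random((70, 8))]  # hypothetical sizes
codebook = kmeans(np.vstack(descriptors), k=5)            # the paper uses k = 1500
features = np.stack([dbow_histogram(d, codebook) for d in descriptors])
```

The broadcasted distance matrix in `assign` is fine for a toy example but memory-hungry for k = 1500 and many descriptors; dividing each histogram by its sum is an optional design choice when images contribute different numbers of patches.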

3.4. RSIR Formulation

Once the DBOW features of the RS images are obtained, the retrieval results can be computed with a proper histogram-based distance metric, such as the L1-norm distance, L2-norm distance, or cosine distance. In more detail, for the query q and the target RS images $\mathcal{X} = \{x_1, x_2, \ldots, x_N\}$, the retrieval process can be formulated as $R(q) = \mathrm{sort}\big(D(q, \mathcal{X})\big)$, where $D(q, \mathcal{X}) = \{\delta(q, x_1), \ldots, \delta(q, x_N)\}$ indicates the distances between q and the target images, which can be calculated using the DBOW features $\mathbf{h}_q$ and $\mathbf{h}_{x_i}$. For example, if the L1-norm distance is selected to measure the similarities between images, $\delta(q, x_i)$ is defined as

$$\delta(q, x_i) = \sum_{j=1}^{d} \left| h_q^j - h_{x_i}^j \right|,$$

where d indicates the dimension of the DBOW features, $h_q^j$ is the j-th element of $\mathbf{h}_q$, and $h_{x_i}^j$ is the j-th element of $\mathbf{h}_{x_i}$.
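The retrieval step $R(q)$ with the L1-norm distance reduces to an argsort. The sketch below is a minimal NumPy illustration; the function names and the toy histograms are hypothetical, and ties are broken by archive order via a stable sort, which is an assumption.

```python
import numpy as np


def l1_distances(query_hist, target_hists):
    """delta(q, x_i) = sum_j |h_q^j - h_{x_i}^j| for every target row."""
    return np.abs(target_hists - query_hist[None, :]).sum(axis=1)


def retrieve(query_hist, target_hists, top=20):
    """R(q): indices of the `top` targets in ascending order of L1 distance."""
    dists = l1_distances(query_hist, target_hists)
    order = np.argsort(dists, kind="stable")  # smallest distance = most similar
    return order[:top], dists[order[:top]]


query = np.array([1.0, 0.0, 0.0])
targets = np.array([[0.0, 1.0, 0.0], [1.0, 0.0, 0.0], [1.0, 1.0, 0.0]])
indices, distances = retrieve(query, targets, top=2)
```

Any of the other histogram metrics can be swapped in by replacing `l1_distances`, as long as smaller values mean more similar images.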

4. Experiment and Discussion

All of the experiments are conducted on an HP Z840 workstation (Hewlett-Packard, Palo Alto, CA, USA) with a GeForce GTX Titan X GPU (NVIDIA, Santa Clara, CA, USA), an Intel Xeon E5-2630 CPU (Intel, Santa Clara, CA, USA), and 64 GB of RAM.

4.1. Experiment Settings

4.1.1. Test Dataset Introduction

To comprehensively verify the effectiveness of our method, we apply it to four published data sets. The first data set is the UCMerced archive [23,24] (http://vision.ucmerced.edu/datasets/landuse.html). It contains 21 land-use/land-cover (LULC) categories with 100 high-resolution aerial images each. The pixel resolution of these images is 0.3 m, and their size is

. We refer to the UCMerced archive as ImgDB1 for short; some examples are exhibited in Figure 9. The second data set is a satellite optical RS image data set [45] (https://sites.google.com/site/xutanghomepage/downloads). It contains a total of 3000 RS images with a ground sample distance (GSD) of 0.5 m, manually labeled into 20 land-cover categories with 150 images per category. The size of these RS images is also

. We refer to this archive as ImgDB2 for convenience; some examples are displayed in Figure 10. The third data set used to test our method is a 12-class Google image data set [48,54,55] (http://www.lmars.whu.edu.cn/prof_web/zhongyanfei/e-code.html). Each land-use category contains 200 RS images with a spatial resolution of 2 m. The size of the images in this archive is

. We refer to the Google image data set as ImgDB3 for short; some examples from different categories are shown in Figure 11. The last data set is NWPU-RESISC45 (http://www.escience.cn/people/JunweiHan/NWPU-RESISC45.html), which was proposed in [56]. NWPU-RESISC45 is a large-scale RS image archive consisting of 31,500 images in 45 semantic classes. All of the images are collected from Google Earth and cover more than 100 countries. The spatial resolution of the images varies from 30 m to 0.2 m, and the size of each image is

. We refer to NWPU-RESISC45 as ImgDB4 for convenience; some examples are displayed in Figure 12.

4.1.2. Experimental Settings

As displayed in Figure 4, the RSIR pipeline in this paper consists of feature learning and similarity matching. For feature learning, the proposed DBOW features are learned from each RS image. Then, similarity matching is performed using a simple distance metric.

Several parameters of our unsupervised feature learning method must be set in advance. In the visual word construction stage, the size of the uniform and superpixel visual words

is optimally set to be

for ImgDB1, ImgDB2, and ImgDB3, and

for ImgDB4. In the descriptor learning stage, the configurations of our DCAE model are listed in

Table 1. The number of convolution kernels

is optimally set to 40, 36, 36, and 32 for ImgDB1, ImgDB2, ImgDB3, and ImgDB4, respectively. In addition, 80% of the images are randomly selected from the archive to train the DCAE model. In the DBOW generation stage, the size of the codebook

k is set to 1500 in all of the following experiments. The influence of the different parameters is discussed in

Section 4.5.

Note that the parameters mentioned above are empirical and should be adjusted for different image archives. In this paper, we tune these parameters through fivefold cross-validation on the training set. In addition, to speed up tuning, the parameters are determined in two steps. First, only half of the images within the archive are used to find a proper range for the parameters. Second, the whole training set (80% of the images in the archive) is used to determine the optimal parameter values.

4.1.3. Assessment Criteria

In this paper, retrieval precision and recall are adopted as the assessment criteria. For a query image q, assume that the number of retrieved RS images is $N_r$, the number of correct retrieval results is $N_c$, and the number of correct target images within the archive is $N_t$. Retrieval precision is defined as $\mathrm{precision} = N_c / N_r$, while retrieval recall is defined as $\mathrm{recall} = N_c / N_t$. Note that “correct” means that the retrieved image belongs to the same semantic class as the query q. To provide objective numerical results, every image in the data set is used as a query, and the displayed results are averages over all queries. A good RSIR method ranks the most similar target images in the top positions. Thus, unless otherwise stated, only the top 20 retrieval results are used to compute the assessment criteria in the following experiments.
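The two criteria and their averaging over queries can be sketched in a few lines of Python. The function names and the example labels are hypothetical, and whether the query itself is counted among the retrieved or relevant images is not specified in the text, so `num_relevant` is simply passed in as $N_t$.

```python
def precision_recall(retrieved_labels, query_label, num_relevant):
    """Precision = N_c / N_r and recall = N_c / N_t for a single query."""
    n_r = len(retrieved_labels)
    n_c = sum(1 for label in retrieved_labels if label == query_label)
    return n_c / n_r, n_c / num_relevant


def average_over_queries(pairs):
    """Mean precision and mean recall over a list of (precision, recall)."""
    precisions, recalls = zip(*pairs)
    return sum(precisions) / len(pairs), sum(recalls) / len(pairs)


# Hypothetical top-4 list for a query of class "a" with 100 relevant images
print(precision_recall(["a", "a", "b", "a"], "a", num_relevant=100))  # (0.75, 0.03)
```

With the top 20 results, $N_r = 20$ for every query, so precision is the fraction of correct images among those 20.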

4.2. Distance Metric Selection

Once the DBOW features of the RS images are obtained, a distance metric must be chosen to measure the similarities between images, so the performance of the selected metric is important for the RSIR task. To find a proper metric for our DBOW features, we select eight common histogram-based distance metrics and study their retrieval performance: L1-norm, intersection, Bhattacharyya, chi-square, cosine, correlation, L2-norm, and inner product. For two RS images $x_a$ and $x_b$ with DBOW features $\mathbf{h}_a$ and $\mathbf{h}_b$ of dimension d, the definitions of the different histogram-based distance metrics are

The retrieval results on the different RS image archives, computed on the top 20 retrieved images, are shown in Table 2. The results show that L1-norm, intersection, Bhattacharyya, and chi-square perform better than the other four distance metrics, and that the best distance metric for our DBOW feature is the L1-norm distance. In more detail, the weakest distance metric is the inner product, which means that the inner product is improper for weighing the similarities between images in the DBOW feature space. L2-norm performs better than the inner product, but its performance does not reach a satisfactory level. Cosine and correlation perform similarly, and both are better than the inner product and L2-norm. Bhattacharyya and chi-square outperform the metrics mentioned above and behave similarly to each other. Intersection performs better than Bhattacharyya and chi-square and comes close to L1-norm, but L1-norm still slightly outperforms it. This indicates that the L1-norm distance is the most suitable of the eight metrics for DBOW features. Therefore, the L1-norm distance is used as the distance metric in the following experiments.

4.3. Feature Structure

Before providing the numerical assessment, we first study the structure of our DBOW features to visually estimate whether they are discriminative enough for RSIR tasks. We use the t-distributed stochastic neighbor embedding (t-SNE) algorithm [57] to reduce the features’ dimension, and then display the structure of the features in a 2-dimensional space. Besides the DBOW features, we also show the structure of four other common features for reference: the homogeneous texture (HT) features [15] obtained by Gabor filters, the color histogram (CH) features [45], the SIFT-based BOW (S-BOW) features [23], and the deep network (DN) features extracted using the pre-trained Overfeat net [58]. The DN features are the outputs of the seventh and eighth fully connected layers, so we denote them DN-7 and DN-8. The dimensions of the six types of features are 1500 (DBOW/S-BOW), 60 (HT), 384 (CH), and 4096 (DN-7/8), respectively. For t-SNE, the L1-norm distance is used for the histogram-based features (i.e., DBOW, CH, and S-BOW), and the Euclidean distance is used for the others. The visual results on the different RS image archives are shown in Figure 13, Figure 14, Figure 15 and Figure 16.

The figures show that the clusters derived from the low-level features (HT and CH) are mixed together (Figure 13a–b, Figure 14a–b, Figure 15a–b, and Figure 16a–b), so these features cannot provide enough discriminative information for RSIR. The mid-level features (S-BOW) perform better and lead to an initial separation among diverse classes (Figure 13c, Figure 14c, Figure 15c, and Figure 16c). However, the information provided by S-BOW features is not adequate to complete the RSIR task satisfactorily. The structure of the deep features (DN-7 and DN-8) is clearer than that of the low- and mid-level features (Figure 13d–e, Figure 14d–e, Figure 15d–e, and Figure 16d–e). Although the derived clusters become separable, the relative distances between different clusters are not distinct enough, which limits their performance on RSIR. Unlike the other five kinds of features, our DBOW features yield clearly separable clusters (Figure 13f, Figure 14f, Figure 15f, and Figure 16f). In addition, the relative distances between different clusters are distinct, which is beneficial for effective content retrieval. These encouraging results indicate that the DBOW feature is useful for the RSIR task, which the following experiments also confirm.

4.4. Retrieval Performance

The following RSIR methods are selected to objectively assess the performance of our DBOW feature on RSIR:

The RSIR approach based on the fuzzy similarity measure [41]. To explore the useful information in RS images, the images are first segmented into different regions. The regions are then represented by fuzzy descriptors, which aim to overcome the influence of uncertainty and blurry boundaries between segmented regions. Finally, the similarity between RS images is converted into the resemblance between fuzzy vectors, which is computed by the region-based fuzzy matching (RFM) measure [41]. We denote this method RSIR-RFM for convenience.

The RSIR method presented in [23]. S-BOW features are adopted to represent the RS images, and the L1-norm distance is used to measure the similarities between them. The retrieval results are obtained by ranking the distances. Here, the dimension of the S-BOW features is 1500. We denote this method RSIR-SBOW for short.

The RSIR method based on hash features [12]. The successful kernel-based hashing method [59] is used to encode the RS images, and the similarities between images are then obtained by the Hamming distance in the hash space. Here, we set the hash code length to 16 bits and denote this method RSIR-Hash for short.

The RSIR method based on deep features extracted by pre-trained CNNs [60]. The Overfeat net [58], pre-trained on the ImageNet dataset [61], is selected to extract the features of the RS images. The outputs of the seventh and eighth fully connected layers are regarded as the deep features, and the Euclidean distance is used to measure the similarities between them. The RSIR methods based on these two deep features are denoted RSIR-DN7 and RSIR-DN8, respectively, and both deep features have 4096 dimensions.
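The similarity-matching step shared by the RSIR-SBOW, RSIR-Hash, and RSIR-DN7/DN8 baselines can be sketched as an exhaustive linear scan with a pluggable distance: L1 for S-BOW histograms, Hamming for binary codes, and Euclidean for deep features. This is a minimal illustration under stated assumptions, not the baselines' original code: the function names are hypothetical, hash codes are assumed to be stored as non-negative Python ints (e.g., below 2**16 for 16-bit codes), and neither the kernel-based hashing of [59] nor the RFM measure is reproduced.

```python
from typing import Any, Callable, List, Sequence


def l1_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """RSIR-SBOW style: L1-norm distance between histogram features."""
    return sum(abs(x - y) for x, y in zip(a, b))


def euclidean_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """RSIR-DN7/DN8 style: Euclidean distance between deep features."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) ** 0.5


def hamming_distance(a: int, b: int) -> int:
    """RSIR-Hash style: XOR two non-negative binary codes and count differing bits."""
    return bin(a ^ b).count("1")


def linear_scan(query: Any, archive: List[Any],
                distance: Callable[[Any, Any], float], top_k: int) -> List[int]:
    """Score every target against the query, sort ascending, return top_k indices.

    Ties are broken by archive index so the ranking is deterministic.
    """
    order = sorted(range(len(archive)), key=lambda i: (distance(query, archive[i]), i))
    return order[:top_k]
```

For example, `linear_scan(0b0000, [0b0111, 0b0001, 0b0011], hamming_distance, 3)` ranks the codes by 1, 2, and 3 differing bits and returns `[1, 2, 0]`.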

The results for the four archives, computed on the top 20 retrieved images, are shown in Figure 17, where our RSIR method is denoted RSIR-DBOW. Note that the parameters of the compared methods are set according to the original literature. Figure 17 shows that our RSIR method clearly outperforms the other methods.
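The precision and recall values counted on the top k retrieved images can be computed per query and then averaged over all queries. The sketch below assumes that an image is relevant when it shares the query's class label and that the number of relevant images in the archive is known; whether the query itself is counted among the results is a convention this sketch leaves to the caller. The function names are hypothetical.

```python
from typing import List, Sequence, Tuple


def precision_recall_at_k(ranked_labels: Sequence[str], query_label: str,
                          num_relevant: int, k: int) -> Tuple[float, float]:
    """Precision@k and recall@k for a single query.

    ranked_labels: class labels of the retrieved images, best match first.
    num_relevant: number of archive images sharing the query's class (assumed known).
    """
    hits = sum(1 for label in ranked_labels[:k] if label == query_label)
    return hits / k, hits / num_relevant


def mean_precision_recall(results: List[Tuple[Sequence[str], str, int]],
                          k: int) -> Tuple[float, float]:
    """Average precision@k and recall@k over (ranked_labels, query_label, num_relevant) tuples."""
    pairs = [precision_recall_at_k(r, q, n, k) for r, q, n in results]
    mean_p = sum(p for p, _ in pairs) / len(pairs)
    mean_r = sum(r for _, r in pairs) / len(pairs)
    return mean_p, mean_r
```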

For ImgDB1, RSIR-RFM performs the weakest among the six RSIR methods. This indicates that region-based representations cannot capture the complex contents of RS images, which limits their contribution to RSIR. RSIR-SBOW and RSIR-Hash perform better than RSIR-RFM, and their performance is similar. The reason is that both use S-BOW features to represent the RS images, so the information they capture from the images is similar. Owing to their strong capacity for information mining, the features extracted by the pre-trained deep CNNs can describe the RS images clearly, which leads to stronger RSIR performance. Compared with the three methods above, RSIR-DN7 and RSIR-DN8 perform considerably better. Interestingly, RSIR-DN7 and RSIR-DN8 perform almost identically, which suggests that the last two fully connected layers have similar capacity for feature learning. Although the deep feature based methods achieve positive results, they still perform worse than our RSIR-DBOW. Moreover, there is a distinct performance gap between our RSIR-DBOW and the other methods, and the gap widens as the number of retrieved images increases. This indicates that the representations learned by our feature learning method can fully explore the complex contents of RS images, which benefits RSIR. Compared with the other methods, the largest improvements in retrieval precision obtained by our method are 43.75% (RSIR-RFM), 29.66% (RSIR-SBOW), 29.30% (RSIR-Hash), 12.46% (RSIR-DN7), and 12.34% (RSIR-DN8). For retrieval recall, the largest improvements are 8.75% (RSIR-RFM), 5.93% (RSIR-SBOW), 5.86% (RSIR-Hash), 2.49% (RSIR-DN7), and 2.47% (RSIR-DN8).

Similar results are observed for the other three image archives, i.e., our RSIR-DBOW achieves the best performance among all the compared methods. For ImgDB2, the largest improvements in retrieval precision/recall obtained by our method are 49.93%/6.66% (RSIR-RFM), 29.13%/3.88% (RSIR-SBOW), 28.93%/3.86% (RSIR-Hash), 19.28%/2.57% (RSIR-DN7), and 19.29%/2.58% (RSIR-DN8). For ImgDB3, the largest improvements in retrieval precision/recall are 51.95%/5.19% (RSIR-RFM), 39.35%/3.93% (RSIR-SBOW), 40.28%/4.03% (RSIR-Hash), 22.66%/2.27% (RSIR-DN7), and 23.06%/2.31% (RSIR-DN8). For ImgDB4, the largest improvements in retrieval precision/recall are 56.25%/1.61% (RSIR-RFM), 45.13%/1.29% (RSIR-SBOW), 47.66%/1.36% (RSIR-Hash), 21.61%/0.62% (RSIR-DN7), and 22.68%/0.65% (RSIR-DN8).
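The recall improvements reported for the four archives are much smaller than the precision improvements, and the ratio follows from the definitions. With k retrieved images and N relevant images per query, precision@k = hits/k and recall@k = hits/N, so recall@k = precision@k × k/N. The short check below treats the reported improvements as absolute differences and assumes that every image of the query's class counts as relevant. Under those assumptions it recovers the N implied by each archive's RSIR-RFM figures, roughly 100, 150, 200, and 700. The helper name is hypothetical.

```python
K = 20  # number of retrieved images used for the reported results

# Largest improvements over RSIR-RFM as reported in the text: (precision, recall), in percent.
reported_gains = {
    "ImgDB1": (43.75, 8.75),
    "ImgDB2": (49.93, 6.66),
    "ImgDB3": (51.95, 5.19),
    "ImgDB4": (56.25, 1.61),
}


def implied_relevant_count(precision_gain: float, recall_gain: float, k: int = K) -> float:
    """If recall@k = precision@k * k / N for every query, then N = k * dP / dR."""
    return k * precision_gain / recall_gain


for archive, (dp, dr) in reported_gains.items():
    print(f"{archive}: about {implied_relevant_count(dp, dr):.0f} relevant images per query")
```

Small deviations from round numbers come from the two-decimal rounding of the reported recall values.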

To further study the retrieval performance of our method, we plot recall–precision curves to observe the retrieval behavior as the number of retrieved images increases. The results of the compared methods are also included for reference. The results are shown in Figure 18. The curves show that our method achieves the best performance on all four data sets, which again illustrates the effectiveness of our RSIR method.
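A recall–precision curve for a single query can be traced by walking down its ranked result list and recording (recall, precision) after each retrieved image; averaging these points over all queries at each cutoff gives an archive-level curve. This is a minimal sketch assuming binary relevance per retrieved image and a known number of relevant images; the function name is hypothetical.

```python
from typing import List, Sequence, Tuple


def recall_precision_curve(relevance: Sequence[bool],
                           num_relevant: int) -> List[Tuple[float, float]]:
    """(recall, precision) after each retrieved image for one query.

    relevance[i] is True if the i-th ranked image belongs to the query's class.
    """
    curve: List[Tuple[float, float]] = []
    hits = 0
    for rank, is_relevant in enumerate(relevance, start=1):
        hits += int(is_relevant)
        curve.append((hits / num_relevant, hits / rank))
    return curve
```

Recall can only grow with the cutoff, while precision may drop, which is why a method whose curve stays higher at every recall level is the stronger retriever.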

The retrieval performance across the different semantic categories is summarized in Table 3, Table 4, Table 5 and Table 6, computed using the top 20 retrieved images. These tables show that our method performs best in most of the semantic categories. Moreover, our RSIR method considerably improves the retrieval performance for many categories on which the other approaches perform unsatisfactorily. For example, the retrieval precision of each compared method for "Dense Residential" in ImgDB1 is below 0.5, whereas our method achieves 0.9595. Furthermore, ImgDB4 is a large-scale RS image data set in terms of both the number of scene classes and the total number of images, and our method still achieves superior performance on it, which demonstrates that our unsupervised feature learning method is useful for RSIR.

4.5. Retrieval Example

Apart from the numerical assessment, we provide some retrieval examples in this section. Four queries are randomly selected from the different RS image archives, and the different RSIR methods are used to obtain the retrieval results. Due to space limitations, only the top 10 retrieved images are shown in Figure 19, Figure 20, Figure 21 and Figure 22. Incorrect results are tagged in red, and the number of correct retrieved images within the top 35 results is provided for reference. These visual examples show that our method achieves the best retrieval performance among the compared approaches, which again demonstrates its effectiveness.

4.6. Parameter Analysis

As mentioned in Section 4.1.2, there are four parameters that directly affect the performance of our DBOW, including the size of uniform and superpixel visual words

, the number of convolution kernels

and the percentage of training set

for DCAE training, and the size of codebook

k for DBOW generation. In this section, we study the influence of these four parameters in detail by varying their values. The size of visual words

is tuned from

to

with the interval of

, the number of convolution kernels

is changed from 24 to 40 with the interval of 4, the percentage of training set

is varied from 50% to 90% with the interval of 10%, and the size of codebook

k is set to 50, 150, 500, 1000, 1500, and 1500, respectively. The results for the four archives, computed using the top 20 retrieved images, are shown in Figure 23, Figure 24, Figure 25 and Figure 26.

For , the performance of our method fluctuates within an acceptable range, and the peak values are reached when (ImgDB1, ImgDB2, and ImgDB3) and (ImgDB4). For , the overall performance of our method rises as increases. For the different archives, the highest retrieval performance appears at for ImgDB1, for ImgDB2 and ImgDB3, and for ImgDB4. For , more training samples lead to more accurate retrieval results. Nevertheless, more training samples also result in longer training time. In addition, the performance improves only slightly when for all the data sets. Thus, we set in this paper. For k, the performance of our method clearly improves as the value of k increases. However, the computational complexity increases dramatically when . Therefore, we set in this paper to balance the performance and the computational complexity.
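The trade-off for the codebook size k can be seen in a generic bag-of-words encoding step: every local descriptor is assigned to its nearest codeword, and the image is represented by the normalized k-bin histogram of assignments. Because each assignment compares a descriptor with all k codewords, encoding n descriptors of dimension d costs O(n·k·d), and the histogram itself has k dimensions. This is a minimal sketch of standard BOW quantization, assuming the descriptors and the codebook are already available as lists of floats; it is not the DBOW pipeline itself, which derives its descriptors from the trained DCAE models, and the function names are hypothetical.

```python
from typing import List, Sequence


def nearest_codeword(descriptor: Sequence[float], codebook: List[Sequence[float]]) -> int:
    """Index of the codeword with the smallest squared Euclidean distance."""
    best_idx, best_dist = 0, float("inf")
    for idx, word in enumerate(codebook):  # k comparisons per descriptor
        d = sum((x - y) ** 2 for x, y in zip(descriptor, word))
        if d < best_dist:
            best_idx, best_dist = idx, d
    return best_idx


def bow_histogram(descriptors: List[Sequence[float]],
                  codebook: List[Sequence[float]]) -> List[float]:
    """Quantize descriptors and return an L1-normalized k-bin histogram."""
    k = len(codebook)
    counts = [0] * k
    for desc in descriptors:
        counts[nearest_codeword(desc, codebook)] += 1
    total = sum(counts)
    # An image with no descriptors gets an all-zero histogram instead of dividing by zero.
    return [c / total for c in counts] if total else [0.0] * k
```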

4.7. Computational Cost

Apart from the average retrieval precision and recall, the computational cost is another important assessment criterion for RSIR methods. In this section, we discuss the computational cost of our method. As mentioned in Section 3, the RSIR method proposed in this paper consists of two parts: DBOW feature learning and similarity matching. Therefore, we study the computational cost of each of these two parts.

The process of DBOW feature learning can be split into offline training and online feature learning. The offline training process is time-consuming. Taking ImgDB1 as an example, when the proportion of the training set equals 80% (more than 600,000 image patches with size of ), we need almost 40 min to train our DCAE models and generate the codebook using the different kinds of visual words. After the models are trained, online feature extraction is fast, requiring only about 300 ms per RS image.

For similarity matching, common distance metrics are used to measure the similarities between the query and target images, and an exhaustive linear-scan search is used to obtain the retrieval results. The time cost of this part depends directly on the size of the image archive and the dimension of the image features. Here, we further study the computational cost of similarity matching by changing the size of the target archive. The time costs of the compared methods are also reported for reference. The experimental results are shown in Table 7, which shows that RSIR-RFM is the most time-consuming method. The reason is that the feature used in the RFM measure is a discrete distribution rather than a single vector. RSIR-Hash is the fastest method, since hash codes are binary features and the similarities between them can be computed with bit operations. For the other RSIR methods, the time cost of similarity matching is proportional to the feature dimension.