An Image Fusion Method Based on Image Segmentation for High-Resolution Remotely-Sensed Imagery

Abstract

1. Introduction

2. Methodologies

2.1. Image Segmentation

2.2. Elimination of Over- and Under-Segmented Regions

2.3. Identification of MPs

2.4. Fusion of MPs Using Improved Spectral Values

3. Experiments

3.1. Datasets

3.2. Fusion Methods for Comparison and Evaluation Criteria

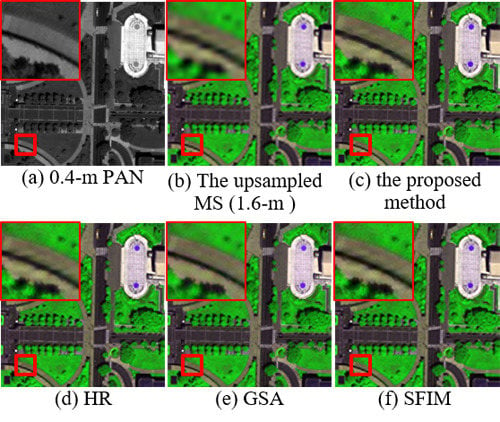

3.3. Results and Analysis

3.4. Analysis of the Determination of the Thresholds Involved

4. Discussion

5. Conclusions

Author Contributions

Acknowledgments

Conflicts of Interest

| Dataset | Scale | TC | TV | NMP | NMP (without Excluding Some Regions) | NMP (Using Automatically Determined TC) |
|---|---|---|---|---|---|---|
| WV-2 | degraded | 0.07 | 0.1 | 25,700 | 90,286 | 10,709 |
| WV-2 | original | 0.07 | 0.2 | 895,243 | 954,407 | 881,755 |
| WV-3 | degraded | 0.08 | 0.07 | 10,740 | 92,320 | 5,110 |
| WV-3 | original | 0.08 | 0.18 | 825,574 | 980,506 | 834,269 |
| GE-1 | degraded | 0.09 | 0.07 | 21,152 | 76,162 | 16,686 |
| GE-1 | original | 0.06 | 0.065 | 748,213 | 1,063,620 | 482,849 |

Degraded scale: RASE, ERGAS, SAM, Q2n, SCC. Original scale: Dλ, DS, QNR.

| Image | Method | RASE | ERGAS | SAM | Q2n | SCC | Dλ | DS | QNR | Time (s) |
|---|---|---|---|---|---|---|---|---|---|---|
| WV-2 | HR-E | 14.31 | 3.16 | 4.54 | 0.9316 | 0.8815 | 0.0082 | 0.0233 | 0.9688 | 34.08 |
| WV-2 | HR-E-A | 14.37 | 3.17 | 4.57 | 0.9314 | 0.8798 | 0.0081 | 0.0232 | 0.9689 | 32.94 |
| WV-2 | HR-E-NE | 14.45 | 3.18 | 4.57 | 0.930 | 0.880 | 0.0082 | 0.0230 | 0.9690 | 29.21 |
| WV-2 | HR | 14.41 | 3.18 | 4.59 | 0.931 | 0.878 | 0.0078 | 0.026 | 0.966 | 0.61 |
| WV-2 | GSA | 14.60 | 3.36 | 5.22 | 0.927 | 0.8819 | 0.014 | 0.045 | 0.942 | 4.59 |
| WV-2 | SFIM | 14.97 | 3.48 | 5.06 | 0.908 | 0.865 | 0.026 | 0.050 | 0.925 | 0.68 |
| WV-2 | GLP-SDM | 15.01 | 3.45 | 5.06 | 0.910 | 0.871 | 0.035 | 0.058 | 0.909 | 1.73 |
| WV-2 | AWLP | 14.88 | 3.44 | 5.06 | 0.916 | 0.866 | 0.031 | 0.052 | 0.918 | 3.04 |
| WV-2 | ATWT | 15.12 | 3.59 | 5.35 | 0.911 | 0.857 | 0.040 | 0.060 | 0.902 | 2.37 |
| WV-2 | EXP | 21.53 | 5.26 | 5.06 | 0.790 | 0.617 | 0.000 | 0.036 | 0.964 | - |
| WV-3 | HR-E | 15.35 | 3.244 | 4.91 | 0.9187 | 0.874 | 0.0085 | 0.0427 | 0.9491 | 36.32 |
| WV-3 | HR-E-A | 15.36 | 3.247 | 4.92 | 0.9185 | 0.873 | 0.0086 | 0.0427 | 0.9491 | 38.34 |
| WV-3 | HR-E-NE | 15.80 | 3.330 | 5.00 | 0.9147 | 0.867 | 0.0091 | 0.0423 | 0.9490 | 31.53 |
| WV-3 | HR | 15.38 | 3.249 | 4.93 | 0.9182 | 0.873 | 0.0088 | 0.045 | 0.946 | 0.61 |
| WV-3 | GSA | 17.03 | 3.79 | 6.42 | 0.900 | 0.807 | 0.026 | 0.063 | 0.912 | 4.71 |
| WV-3 | SFIM | 16.03 | 3.58 | 5.58 | 0.872 | 0.839 | 0.053 | 0.075 | 0.876 | 0.67 |
| WV-3 | GLP-SDM | 16.00 | 3.57 | 5.58 | 0.872 | 0.847 | 0.067 | 0.089 | 0.850 | 1.80 |
| WV-3 | AWLP | 17.26 | 3.92 | 5.58 | 0.847 | 0.838 | 0.086 | 0.101 | 0.821 | 3.19 |
| WV-3 | ATWT | 17.79 | 4.31 | 6.45 | 0.792 | 0.810 | 0.107 | 0.113 | 0.792 | 2.40 |
| WV-3 | EXP | 21.34 | 4.92 | 5.58 | 0.769 | 0.616 | 0.000 | 0.071 | 0.929 | - |
| GE-1 | HR-E | 9.25 | 1.96 | 3.15 | 0.9126 | 0.864 | 0.0160 | 0.029 | 0.9551 | 42.24 |
| GE-1 | HR-E-A | 9.26 | 1.96 | 3.16 | 0.9126 | 0.864 | 0.0157 | 0.030 | 0.9552 | 27.70 |
| GE-1 | HR-E-NE | 9.31 | 1.97 | 3.16 | 0.911 | 0.863 | 0.0178 | 0.028 | 0.9547 | 31.73 |
| GE-1 | HR | 9.29 | 1.97 | 3.18 | 0.9122 | 0.863 | 0.0164 | 0.031 | 0.953 | 0.34 |
| GE-1 | GSA | 9.25 | 2.26 | 3.21 | 0.902 | 0.868 | 0.023 | 0.054 | 0.924 | 3.17 |
| GE-1 | SFIM | 9.75 | 2.21 | 3.16 | 0.898 | 0.849 | 0.024 | 0.051 | 0.925 | 0.35 |
| GE-1 | GLP-SDM | 10.90 | 2.38 | 3.16 | 0.897 | 0.854 | 0.028 | 0.059 | 0.915 | 1.16 |
| GE-1 | AWLP | 9.25 | 2.12 | 3.16 | 0.911 | 0.862 | 0.022 | 0.052 | 0.927 | 2.78 |
| GE-1 | ATWT | 8.62 | 1.98 | 2.99 | 0.915 | 0.874 | 0.025 | 0.054 | 0.922 | 2.31 |
| GE-1 | EXP | 12.84 | 3.43 | 3.16 | 0.788 | 0.669 | 0.000 | 0.038 | 0.962 | - |
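The Dλ, DS, and QNR columns in the table above appear to be linked by the usual no-reference quality definition, QNR = (1 − Dλ)^α · (1 − DS)^β. The sketch below recomputes QNR for a few rows under the assumption α = β = 1; the source does not state these exponents, and the function name `qnr` is illustrative only. Because the tabulated inputs are rounded, the check uses a small tolerance rather than exact equality.

```python
def qnr(d_lambda: float, d_s: float, alpha: float = 1.0, beta: float = 1.0) -> float:
    """Quality with No Reference from spectral (d_lambda) and spatial (d_s) distortion.

    alpha and beta default to 1, which is an assumption, not a value from the source.
    """
    if not (0.0 <= d_lambda <= 1.0 and 0.0 <= d_s <= 1.0):
        raise ValueError("distortion indices are expected to lie in [0, 1]")
    return (1.0 - d_lambda) ** alpha * (1.0 - d_s) ** beta


# (image, method) -> (D_lambda, D_S, QNR as reported in the table)
reported = {
    ("WV-2", "HR-E"): (0.0082, 0.0233, 0.9688),
    ("WV-2", "HR"): (0.0078, 0.026, 0.966),
    ("WV-3", "GSA"): (0.026, 0.063, 0.912),
    ("GE-1", "EXP"): (0.000, 0.038, 0.962),
}

for (image, method), (d_lambda, d_s, table_qnr) in reported.items():
    value = qnr(d_lambda, d_s)
    # Inputs are rounded to 3-4 decimals, so compare with a tolerance.
    agrees = abs(value - table_qnr) < 1.5e-3
    print(f"{image} {method}: recomputed {value:.4f}, reported {table_qnr}, agrees={agrees}")
```

Under this assumption a higher QNR means lower combined distortion, so the ideal value is 1, reached only when both Dλ and DS are 0.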

| Parameter | Range | Step |
|---|---|---|
| TV | [0.1, 0.5] | 0.5 |
| TM | [0.2, 0.9] | 0.1 |
| TA | [10, 100] | 10 |

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Li, H.; Jing, L.; Tang, Y.; Wang, L. An Image Fusion Method Based on Image Segmentation for High-Resolution Remotely-Sensed Imagery. Remote Sens. 2018, 10, 790. https://doi.org/10.3390/rs10050790