1. Introduction

Wetlands, including coastal wetlands, are a vital part of the ecosystem and perform important functions, including water quality protection, flood mitigation, water and soil conservation, and climate regulation [1,2,3]. Vegetation, as the main component of wetlands [4], plays a crucial role in carbon sequestration, shoreline protection, and the provision of wildlife habitat [5,6,7]. Unfortunately, large areas of natural vegetation have been degraded or lost as a result of external factors such as climate, invasion by exotic plant species, and human activity [8,9]. In recent years, with the development of coastal areas in China, human activity has exerted increasing pressure on wetland vegetation and has caused serious degradation. To manage and protect these wetlands, detailed vegetation mapping is required; accordingly, approaches for the quick and accurate classification of coastal vegetation types are needed. However, most research on coastal wetland mapping has focused on land-use/cover-type classification, while the vegetation patterns within wetlands have received limited attention.

Interest in wetland vegetation has been growing since the Ramsar Convention of 1971. Traditionally, fieldwork has been the most commonly used method of investigating vegetation types; however, it requires a great deal of manpower, material resources, and time. Remote sensing, in contrast, offers a simple and efficient means of obtaining data. Initially, most remote sensing studies of wetland change focused on land use/cover type and did not assess changes in vegetation, but recent years have seen a gradual shift towards including them. For example, Tan et al. [10] classified wetland vegetation types in Yancheng, China, using the Normalized Difference Vegetation Index (NDVI) derived from Landsat7 TM images. Liu and Xu, meanwhile, used the NDVI to study the ecological character of vegetation in the north Jiangsu shoal of east China [11]. These studies, however, applied spectral data to classify individual vegetation communities without considering mixed vegetation communities. In reality, such mixed communities may occur at the boundaries between different vegetation types. In moderate spatial resolution satellite images, several vegetation communities may fall within a single mixed pixel, which makes them difficult to distinguish from neighboring vegetation types using spectral information alone. With the advent of high spatial resolution satellites, more spatial information can be obtained, especially texture, which is closely related to the internal structure of objects. Some studies have demonstrated that texture improves vegetation classification accuracy [12,13]. The Grey Level Co-occurrence Matrix (GLCM) is a commonly used texture analysis method and has proven effective in vegetation classification [14,15]. Given the popularity of GLCM in vegetation classification, some researchers [16,17] have attempted to classify wetland vegetation using GLCM texture. Berberoğlu et al. [18] studied the land-use/cover change dynamics of a Mediterranean coastal wetland by extracting vegetation from Landsat TM images using GLCM. Elsewhere, Arzandeh and Wang [19] used GLCM texture measurements to classify wetland and other land cover types; their results showed that GLCM texture analysis of Radarsat images improved wetland classification accuracy. We were therefore interested in whether wetland vegetation types, including mixed vegetation, can be classified accurately from the spectral and texture features of high spatial resolution images. At the beginning of this research, we extracted texture features using GLCM; however, this approach is sensitive to changes in grey values only in specific directions. Its results depend on the parameter settings (distance and angle) and on the texture features selected, and the combination that gives optimal performance cannot be identified without extensive and complicated analysis [20]. Because wetland vegetation is not yet well understood, the contribution of texture to classification may be unsatisfactory when the GLCM algorithm is used alone, and further texture analysis methods are required to improve coastal wetland vegetation classification.
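The dependence of GLCM on its distance and angle parameters can be illustrated with a minimal pure-Python sketch. It is not the implementation used in this study: the function names (`glcm`, `contrast`), the choice of a symmetric, normalised matrix, and the toy striped image are assumptions made only for illustration.

```python
import math

def glcm(image, distance, angle_deg, levels):
    """Symmetric, normalised grey-level co-occurrence matrix for one offset.

    `image` is a list of rows of integer grey levels in [0, levels).
    Angle 0 pairs each pixel with the one to its right; 90 with the one above.
    """
    theta = math.radians(angle_deg)
    d_row = -int(round(distance * math.sin(theta)))
    d_col = int(round(distance * math.cos(theta)))
    rows, cols = len(image), len(image[0])
    counts = [[0] * levels for _ in range(levels)]
    for r in range(rows):
        for c in range(cols):
            r2, c2 = r + d_row, c + d_col
            if 0 <= r2 < rows and 0 <= c2 < cols:
                i, j = image[r][c], image[r2][c2]
                counts[i][j] += 1
                counts[j][i] += 1  # count each pair in both directions
    total = sum(map(sum, counts))
    if total == 0:
        raise ValueError("offset is larger than the image")
    return [[v / total for v in row] for row in counts]

def contrast(p):
    """GLCM contrast: co-occurrence probabilities weighted by (i - j)^2."""
    n = len(p)
    return sum(p[i][j] * (i - j) ** 2 for i in range(n) for j in range(n))

# Toy image with horizontal stripes of grey levels 0 and 3 (illustrative only).
stripes = [
    [0, 0, 0, 0],
    [3, 3, 3, 3],
    [0, 0, 0, 0],
    [3, 3, 3, 3],
]

contrast_along = contrast(glcm(stripes, distance=1, angle_deg=0, levels=4))
contrast_across = contrast(glcm(stripes, distance=1, angle_deg=90, levels=4))
print(contrast_along, contrast_across)
```

For the same image, the contrast is zero along the stripes and large across them, so a texture measure computed for a single angle may miss structure that is visible in another direction.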

Local Binary Pattern (LBP) is a simple and effective texture operator that is widely used in the field of computer vision, especially face recognition and target detection. LBP is a neighborhood operation that analyzes the grey value changes between a pixel and its neighbors, rather than following one pixel in a particular direction. The greyscale invariance, a characteristic of LBP, can effectively reduce the influence of illumination. Chowdhury et al. [

21] used Co-occurrence of Binary Pattern, an improved version of LBP, to classify dense and sparse grasses, obtaining 92.72% classification accuracy. Musci et al. [

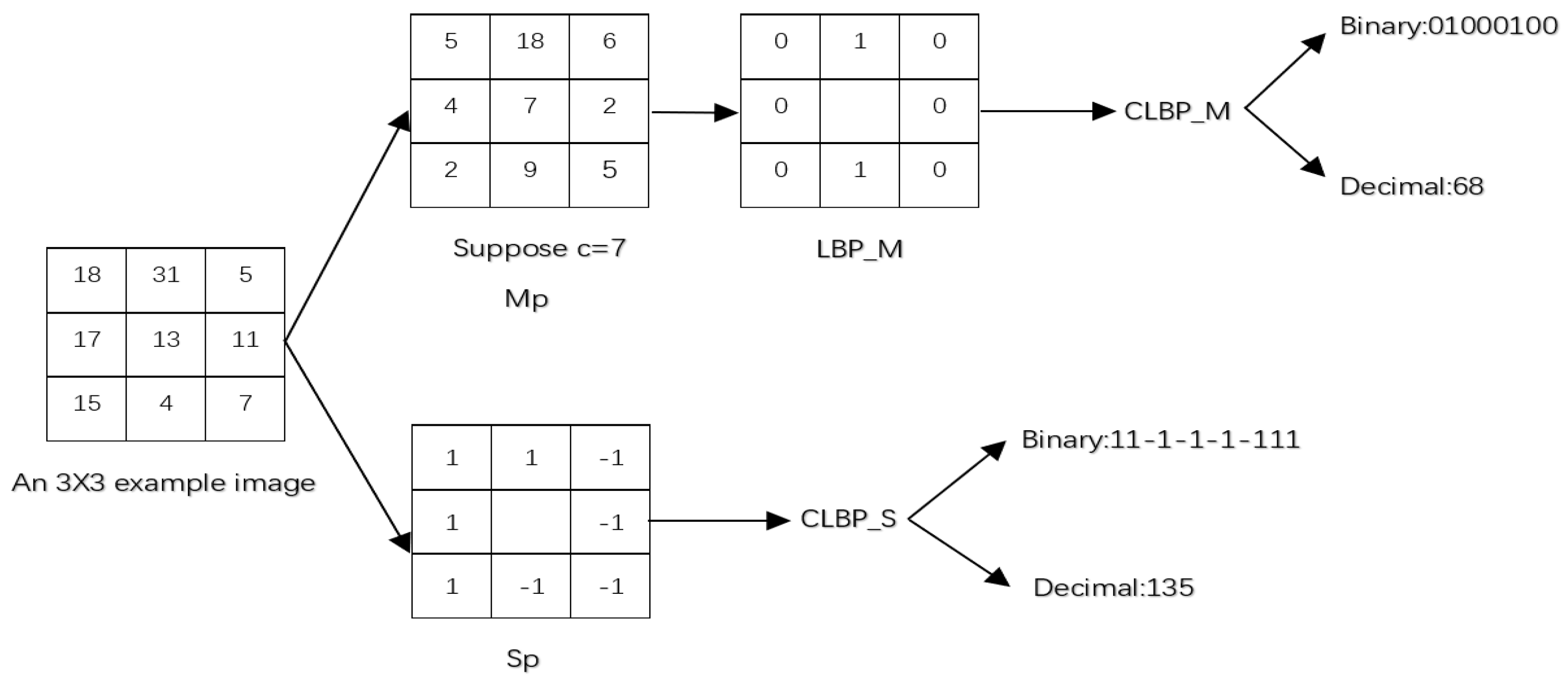

22], for their part, evaluated LBP performance in land-use/cover object-based classification of IKONOS-2 and Quickbird-2 satellite images, with the results showing that the LBP Kappa index was higher than GLCM. Since the advent of LBP, many LBP improvement algorithms have been proposed, one of which, Completed Local Binary Patterns (CLBP), takes two aspects of signs and magnitudes into account. These differences are broken down into two basic constituents, named CLBP_sign (CLBP_s) and CLBP_magnitude (CLBP_m). CLBP_s and LBP are, in fact, the same thing, just under different names. Basic constituent features can be combined through joint distributions to improve classification results. Singh et al. [

23] compared CLBP to LBP in facial expression recognition, their results showing that CLBP outperformed LBP. Some other studies have also shown that the average recognition efficiency of the proposed method, using CLBP, is better than with LBP. Thus far, CLBP has not been applied in coastal wetland vegetation classification using high spatial resolution satellite images.
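The sign and magnitude decomposition described above can be sketched in a few lines of pure Python. This is an illustrative sketch under stated assumptions, not the implementation used in this study: it assumes eight neighbors at radius 1, an arbitrary clockwise bit order, and the mean magnitude over the image as the threshold for the magnitude code. The names `NEIGHBOURS`, `local_differences`, `encode`, and `clbp_codes` are hypothetical.

```python
# Offsets of the 8 neighbours at radius 1, in a fixed clockwise order
# starting at the top-left (the order is an arbitrary choice in this sketch).
NEIGHBOURS = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]

def local_differences(image):
    """Map each interior pixel (r, c) to its list of differences g_p - g_c."""
    rows, cols = len(image), len(image[0])
    return {
        (r, c): [image[r + dr][c + dc] - image[r][c] for dr, dc in NEIGHBOURS]
        for r in range(1, rows - 1)
        for c in range(1, cols - 1)
    }

def encode(bits):
    """Pack a list of 0/1 values into an integer code, bit p weighted by 2^p."""
    return sum(bit << p for p, bit in enumerate(bits))

def clbp_codes(image):
    """Return (sign codes, magnitude codes) for the interior pixels.

    The sign code thresholds each difference at zero, which is the classic
    LBP code. The magnitude code thresholds |difference| at the mean
    magnitude over the whole image (an assumption of this sketch).
    """
    diffs = local_differences(image)
    magnitudes = [abs(d) for ds in diffs.values() for d in ds]
    threshold = sum(magnitudes) / len(magnitudes)
    sign_codes = {
        k: encode([1 if d >= 0 else 0 for d in ds]) for k, ds in diffs.items()
    }
    magnitude_codes = {
        k: encode([1 if abs(d) >= threshold else 0 for d in ds])
        for k, ds in diffs.items()
    }
    return sign_codes, magnitude_codes

# Toy 3x3 patch (illustrative values only); its single interior pixel is 6.
patch = [
    [5, 9, 1],
    [4, 6, 7],
    [2, 8, 3],
]
sign_codes, magnitude_codes = clbp_codes(patch)

# Adding a constant brightness offset leaves both codes unchanged.
brighter = [[v + 100 for v in row] for row in patch]
assert clbp_codes(brighter) == (sign_codes, magnitude_codes)
```

In practice, the codes of all pixels in a region would be summarized as histograms, and the sign and magnitude histograms could be concatenated or combined as a joint distribution before classification.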

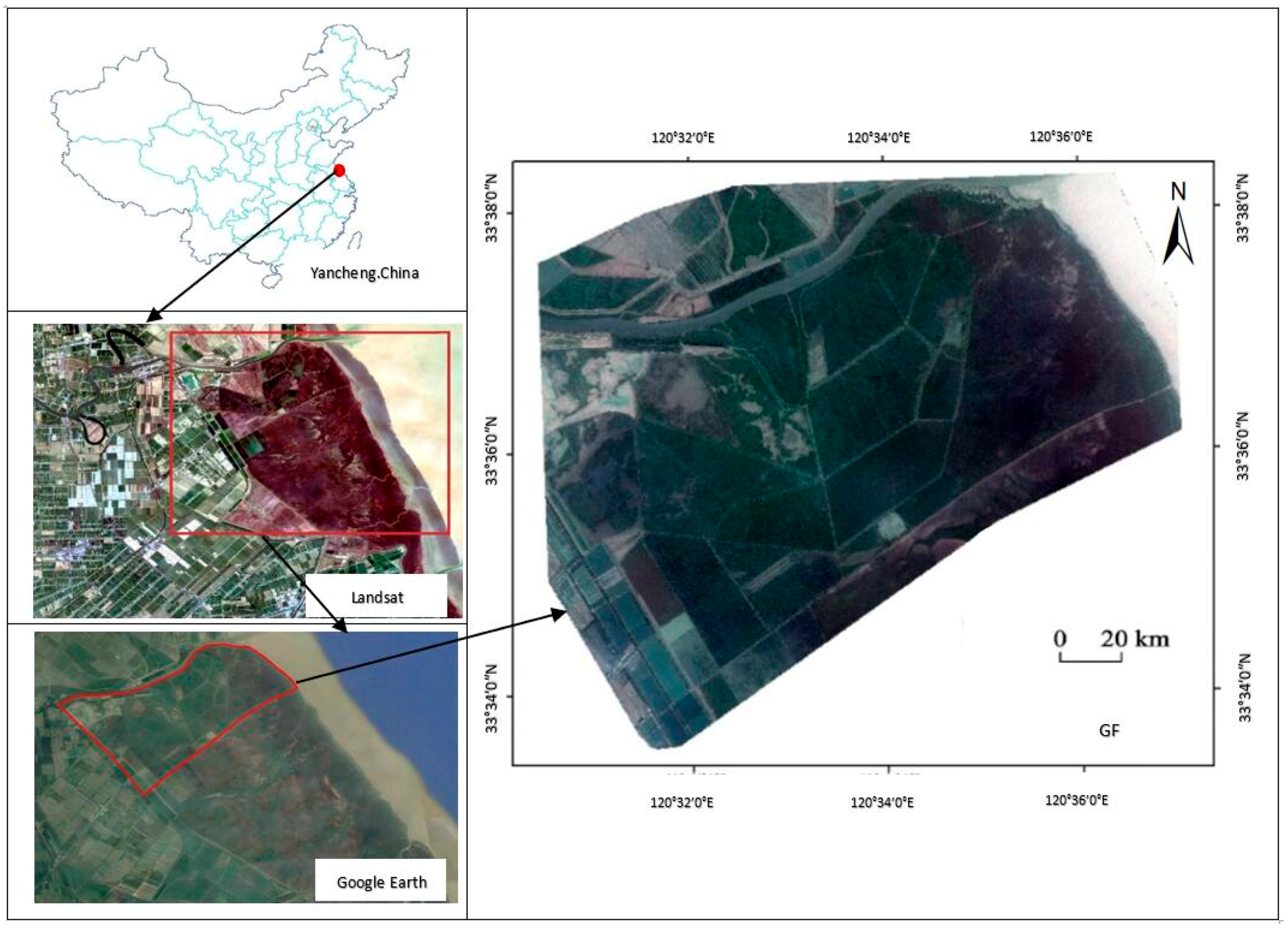

Although CLBP has been shown to be superior to LBP in texture analysis, its performance in classifying wetland vegetation from high spatial resolution images has not been examined, and doing so was our aim. Accordingly, a classification based on spectral features and on texture features extracted by CLBP was applied for the first time, via a case study of the largest tidal flat wetland in Jiangsu Province, China. The accuracy of the CLBP-based classification was also evaluated by comparison with GLCM.

4. Conclusions

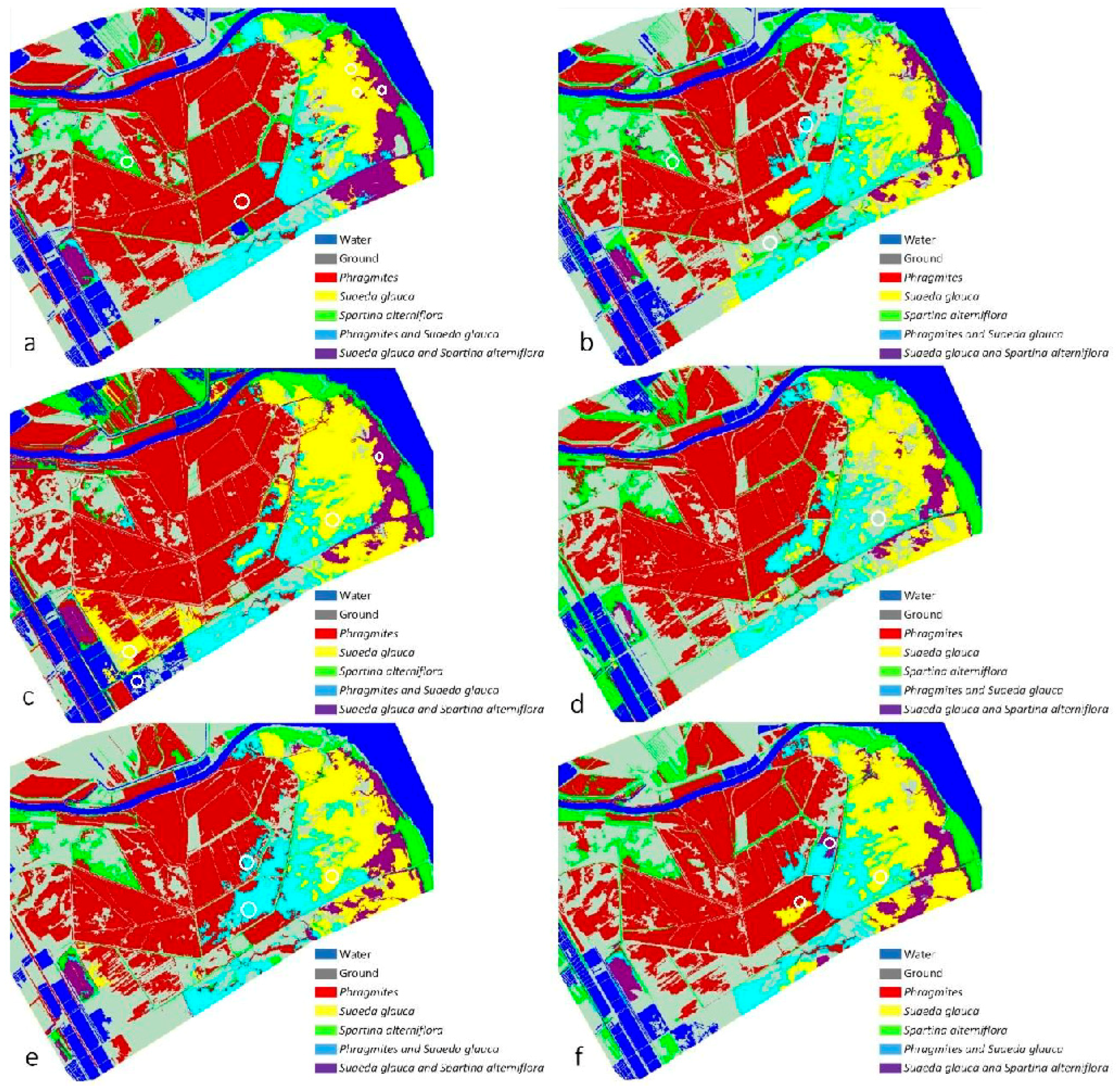

This study assessed the performance of CLBP texture features for classifying coastal wetland vegetation from high spatial resolution imagery. The experimental results showed that both CLBP and GLCM texture features effectively improved classification accuracy. When spectral information was combined with texture features, the overall accuracy with CLBP was slightly better than with GLCM. When the individual vegetation classes are compared, however, CLBP gives better classification accuracy for Spartina alterniflora than GLCM. Thus, CLBP measures could be more suitable for extracting fine detail and regular, grainy textures.

Among the various CLBP features, CLBP_m gave a more effective improvement in vegetation classification, although the overall classification accuracy, which also includes water and ground areas, may differ slightly. However, joint features, such as CLBP_s/m and CLBP_s/m/c, showed no clear advantage over CLBP_m for the mixed and complex vegetation classes.

In short, the study demonstrates that CLBP, an efficient and simple texture feature extraction method usually used in face recognition, can be applied to high spatial resolution Pléiades satellite images to classify coastal wetland vegetation. Our results indicate that CLBP is a promising method for the classification of remote sensing images, especially for coastal wetland vegetation. Further research should focus on mixed vegetation classification, by refining the algorithm and by attempting to combine CLBP with GLCM.