Multiscale Geoscene Segmentation for Extracting Urban Functional Zones from VHR Satellite Images

Abstract

1. Introduction

1.1. Background

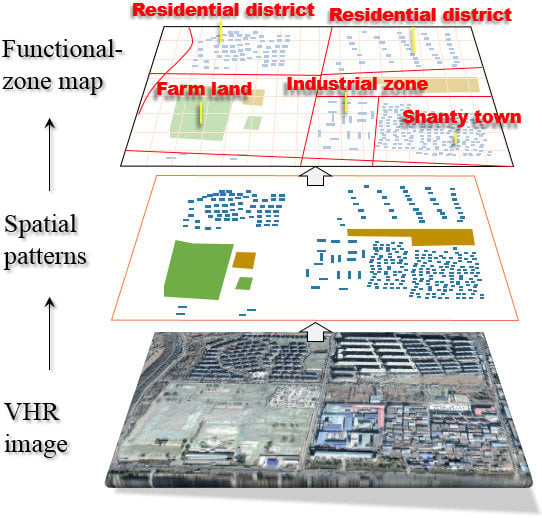

1.2. Geoscene: Representation of Urban Functional Zones

1.3. Technical Issues

- Feature representation: Features are the basic cues for segmenting and recognizing functional zones, and they can be divided into three levels: low, middle, and high. Low-level features, such as spectral, geometrical, and textural image features, are widely used in image analysis [11], but they are weak at characterizing functional zones, which are usually composed of diverse objects with varying characteristics [12]. Middle-level features, including object semantics [4,8], visual elements [7], and bag-of-visual-words (BOVW) representations [13], represent functional zones more effectively than low-level features [7], but they ignore the spatial and contextual information of objects, which leads to inaccurate recognition results. To resolve this issue, Hu et al. (2015) extracted high-level features using a convolutional neural network (CNN) [10]; such features capture contextual information and were more robust than visual features in recognizing functional zones [14,16]. Zhang et al. (2017) questioned the relevance of deep-learning features, stating that these features rarely have geographic meaning and offer limited interpretability [4]. In addition, deep-learning features depend on the size and shape of the convolution window. More recently, spatial-pattern features that measure the spatial distribution of objects have been proposed to characterize functional zones, and they produce satisfactory classification results [4]. However, these spatial-pattern features are measured on road blocks, which are not necessarily suitable units for zone segmentation [36]. Accordingly, the use of spatial-pattern features for functional-zone segmentation needs further study.

- Segmentation method: Functional-zone segmentation aims to divide an image spatially into non-overlapping patches, each representing one functional zone. It is the first and most fundamental step of functional-zone analysis. Existing segmentation methods can be sorted into three types: region-based, edge-based, and graph-based [9,17,18]. Among them, a region-based method named multiresolution segmentation (MRS) has been reported to outperform the others and is widely used in geographic object-based image analysis (GEOBIA) [18,37]. MRS aggregates neighboring pixels into homogeneous objects by considering their spectral and shape heterogeneities, and it is designed for different kinds of geographic objects that must be segmented at multiple scales [38]. However, neither MRS nor other segmentation techniques can extract functional zones: they target homogeneous objects with consistent visual cues, whereas functional zones contain substantial discontinuities in visual cues and are therefore split into many smaller segments. Functional-zone segmentation thus remains an open problem and is the focus of this study.

- Scale parameter: Scale is an important segmentation parameter because it determines how much heterogeneity a segmented functional zone may tolerate. It influences segmentation results [18] and controls segment sizes. Existing segmentations use either a single fixed scale or multiple scales [19,20,21,37]. Multiple scales are more applicable to functional-zone segmentation, because functional zones differ in size and heterogeneity and therefore call for different scale parameters. Accordingly, selecting appropriate scales for functional-zone segmentation is one of the key issues addressed in this study.

- Result evaluation: Evaluation measures the accuracy of segmentation results and verifies the effectiveness of segmentation methods. Existing studies consider two kinds of evaluation approaches, i.e., supervised and unsupervised [39,40,41]. Supervised evaluation requires ground-truth boundaries of functional zones and measures segmentation errors as the differences between the ground truth and the segmentation results [19]. However, ground truths of functional zones are rarely available, so traditional supervised evaluation is not applicable here. Unsupervised evaluation, on the other hand, rests on the idea that good segments are internally homogeneous and distinct from their neighbors [40]; because functional zones are typically heterogeneous internally, this criterion is ineffective for them. Accordingly, neither approach suits the evaluation of functional-zone segmentation, and a new evaluation method needs to be developed.
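The MRS merging decision and the role of the scale parameter described above can be sketched in a few lines. The following is a minimal, spectral-only sketch that assumes the commonly cited heterogeneity criterion of Baatz and Schäpe [18]: the cost of merging two segments is the weighted increase of size-weighted standard deviation per band, and a merge is accepted only while that cost stays below the squared scale parameter. The shape term is omitted (equivalent to a shape weight of 0), and `region_merge_1d` is a hypothetical helper that merges the globally cheapest adjacent pair on a 1-D toy signal, a simplification of the local best-fitting heuristics used by MRS implementations.

```python
from statistics import pstdev


def color_heterogeneity_increase(seg1, seg2, band_weights):
    """Increase in spectral heterogeneity caused by merging seg1 and seg2.

    Each segment is a list of per-band pixel-value lists. The heterogeneity
    of a segment in one band is n * sigma (pixel count times population
    standard deviation); the increase is weighted per band and summed.
    """
    n1, n2 = len(seg1[0]), len(seg2[0])
    delta = 0.0
    for w, b1, b2 in zip(band_weights, seg1, seg2):
        merged = b1 + b2
        delta += w * ((n1 + n2) * pstdev(merged) - (n1 * pstdev(b1) + n2 * pstdev(b2)))
    return delta


def region_merge_1d(values, scale):
    """Toy single-band region merging along a 1-D signal (hypothetical helper).

    Repeatedly merges the adjacent pair with the smallest heterogeneity
    increase while that increase stays below scale ** 2.
    """
    segs = [[[v]] for v in values]  # one single-band, one-pixel segment per value
    while len(segs) > 1:
        costs = [color_heterogeneity_increase(segs[k], segs[k + 1], [1.0])
                 for k in range(len(segs) - 1)]
        k = min(range(len(costs)), key=costs.__getitem__)
        if costs[k] >= scale ** 2:  # cheapest merge exceeds the tolerated heterogeneity
            break
        segs[k] = [segs[k][0] + segs[k + 1][0]]
        del segs[k + 1]
    return segs


print(len(region_merge_1d([10, 10, 10, 20, 20, 20], scale=1)))  # 2
print(len(region_merge_1d([10, 10, 10, 20, 20, 20], scale=6)))  # 1
```

With the toy signal `[10, 10, 10, 20, 20, 20]`, the last possible merge costs 6 × 5 = 30, so a scale of 1 stops at two segments while a scale of 6 (threshold 36) merges everything into one: a larger scale tolerates more heterogeneity and yields fewer, larger segments.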

2. Methodology

2.1. Spatial-Pattern Features

2.1.1. Spatial-Pattern Features of Neighboring Objects

2.1.2. Spatial-Pattern Features of Disjoint Objects

2.2. Geoscene Segmentation

2.2.1. Aggregation

2.2.2. Expanding

2.2.3. Overlaying

2.3. Multiscale Segmentation

2.4. Segmentation Evaluation

3. Experimental Verification

3.1. Study Area and Data Sets

- A VHR satellite image: A WorldView-2 image (Figure 10a), acquired on 10 July 2010, is used to extract object and spatial-pattern features for generating functional zones.

- Road lines: 74 main-road lines (Figure 10a), freely available from the national geographic database, are used to constrain geoscene segmentation.

- POIs: 116,201 POIs (Figure 10b), sorted into six classes (commercial services, public services, scenic spots, residential buildings, education institutes, and companies), are used to evaluate segmentation results.
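The list above states only that POIs are used to evaluate segmentation results; the evaluation measure itself is not given in this section. As a purely hypothetical illustration of how POIs can describe a segment, the sketch below assumes each POI has already been assigned to the segment containing it (a point-in-polygon overlay done beforehand) and summarizes each segment by the share of its dominant POI class and the Shannon entropy of its class mix. Neither measure should be read as the paper's evaluation criterion, and the function names are hypothetical.

```python
import math
from collections import Counter, defaultdict


def poi_composition(poi_segment_ids, poi_classes):
    """Count POI classes per segment; each POI is assumed already assigned to a segment."""
    composition = defaultdict(Counter)
    for seg, cls in zip(poi_segment_ids, poi_classes):
        composition[seg][cls] += 1
    return composition


def purity_and_entropy(counts):
    """Share of the dominant POI class and Shannon entropy (bits) of the class mix."""
    n = sum(counts.values())
    probs = [c / n for c in counts.values()]
    return max(probs), sum(-p * math.log2(p) for p in probs)


segments = ["A", "A", "A", "B"]
classes = ["residential buildings", "residential buildings",
           "commercial services", "education institutes"]
for seg, counts in sorted(poi_composition(segments, classes).items()):
    purity, entropy = purity_and_entropy(counts)
    print(seg, round(purity, 3), round(entropy, 3))
```

A segment dominated by one POI class has purity near 1 and entropy near 0, whereas a mixed segment scores lower purity and higher entropy.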

3.2. Multiscale Geoscene Segmentation Results

3.3. Importance of Spatial Patterns in Geoscene Segmentations

3.4. Process of Urban-Functional-Zone Mapping

4. Case Studies for Different Cities

4.1. Study Areas and Data Sets

4.2. Mapping Results of Urban Functional Zones

- For Putian, scale = 100, and = 0.8.

- For Zhuhai, scale = 120, and = 0.6.

5. Discussion

5.1. Comparing Functional Zonings among the Study Areas

5.2. A Comparison between This Study and Existing Urban-Functional-Zone Analyses

5.3. A Comparison between Geoscene-Based Image Analysis and GEOBIA

5.4. Potential Applications of Multiscale Geoscene Segmentation

6. Conclusions and Future Prospect

Acknowledgments

Author Contributions

Conflicts of Interest

Appendix A

| Algorithm A1: Geoscene segmentation |
|---|
| Input: Land-cover objects // generated by GEOBIA |
| Output: Geoscene unit boundaries |
| Step 1: Aggregation |
| for to do // L refers to the number of levels (also the number of object classes) |
| while do // is a variable for controlling the iteration process |
| for each object cluster at level : do // initially, each cluster contains one object |
| for each neighbor of in the relationship graph: do |
| (Equation (1)) |
| Select the with the smallest . |
| if // is a manually set parameter |
| then |
| Merge and |
| Update the relationship graph at level |
| else |
| continue |
| Step 2: Expanding |
| for to do |
| for each object cluster at level : do |
| for each neighbor of in the relationship graph: do |
| for each object, , located between and do |
| Calculate and (Equation (5)) |
| if > |
| then |
| Expand with |
| else |
| Expand with |
| Step 3: Overlaying |
| for to do |
| Overlay the object clusters at level and by using spatial union |
| Output overlaying results as geoscene boundaries |
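Algorithm A1 lost its symbols in extraction, and Equations (1) and (5) are not reproduced in this section, so the following is only a minimal Python sketch of the three-step control flow under explicit assumptions: (a) each object has a class label (its level), a numeric feature vector, and an entry in a symmetric relationship graph linking spatially close objects regardless of class; (b) the Equation (1) measure is replaced by the Euclidean distance between cluster mean features, compared against a hypothetical `threshold`; (c) the Equation (5) comparison is replaced by the share of an object's graph neighbors that belong to each candidate cluster; (d) the spatial union is computed on object labels rather than polygons. All function names are hypothetical.

```python
import math
from collections import defaultdict


def centroid(vectors):
    """Component-wise mean of equal-length feature vectors."""
    return [sum(col) / len(vectors) for col in zip(*vectors)]


def aggregate(classes, feats, graph, level, threshold):
    """Step 1: greedily merge neighbouring clusters of one object class (level)."""
    owner = {o: o for o, c in classes.items() if c == level}  # object -> cluster id
    clusters = {o: {o} for o in owner}                          # cluster id -> objects
    changed = True
    while changed:  # plays the role of the iteration-control variable
        changed = False
        for cid in list(clusters):
            if cid not in clusters:  # absorbed earlier in this sweep
                continue
            nbrs = {owner[n] for m in clusters[cid] for n in graph[m]
                    if n in owner and owner[n] != cid}
            if not nbrs:
                continue
            here = centroid([feats[m] for m in clusters[cid]])
            # Hypothetical stand-in for Equation (1): distance between mean features
            dists = {c: math.dist(here, centroid([feats[m] for m in clusters[c]]))
                     for c in nbrs}
            best = min(dists, key=dists.get)
            if dists[best] < threshold:
                for m in clusters.pop(best):
                    owner[m] = cid
                    clusters[cid].add(m)
                changed = True
    return clusters


def expand(clusters, graph):
    """Step 2: attach each object lying between two or more clusters to one of them."""
    owner = {m: cid for cid, ms in clusters.items() for m in ms}
    grown = {cid: set(ms) for cid, ms in clusters.items()}
    for o, nbrs in graph.items():
        if o in owner:
            continue
        touching = sorted({owner[n] for n in nbrs if n in owner})
        if len(touching) < 2:  # not "between" clusters
            continue
        # Hypothetical stand-in for Equation (5): share of o's neighbours in each cluster
        score = {c: sum(owner.get(n) == c for n in nbrs) / len(nbrs) for c in touching}
        grown[max(touching, key=score.get)].add(o)  # ties go to the smaller cluster id
    return grown


def overlay(objects, levels):
    """Step 3: union overlay; objects with the same cluster at every level form one geoscene."""
    labels = {o: {} for o in objects}
    for lvl, clusters in levels.items():
        for cid, members in clusters.items():
            for m in members:
                labels[m][lvl] = cid
    scenes = defaultdict(set)
    for o, lab in labels.items():
        scenes[tuple(sorted(lab.items()))].add(o)
    return list(scenes.values())


def geoscene_segmentation(classes, feats, graph, threshold):
    levels = {lvl: expand(aggregate(classes, feats, graph, lvl, threshold), graph)
              for lvl in sorted(set(classes.values()))}
    return overlay(classes, levels)


classes = {0: "b", 1: "b", 2: "t", 3: "b", 4: "b"}
feats = {0: [0.0], 1: [0.1], 2: [5.0], 3: [9.0], 4: [9.1]}
graph = {0: {1}, 1: {0, 2}, 2: {1, 3}, 3: {2, 4}, 4: {3}}
print(sorted(sorted(s) for s in geoscene_segmentation(classes, feats, graph, 1.0)))
```

On this toy input the two pairs of similar "b" objects aggregate into two clusters, the "t" object between them is attached to the first cluster during expanding, and the overlay with the "t" level then separates it again, giving `[[0, 1], [2], [3, 4]]`.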

References

1. Luck, M.; Wu, J. A gradient analysis of urban landscape pattern: A case study from the Phoenix metropolitan region, Arizona, USA. Landsc. Ecol. 2002, 17, 327–339.
2. Matsuoka, R.H.; Kaplan, R. People needs in the urban landscape: Analysis of landscape and urban planning contributions. Landsc. Urban Plan. 2008, 84, 7–19.
3. Yuan, N.J.; Zheng, Y.; Xie, X.; Wang, Y.; Zheng, K.; Xiong, H. Discovering urban functional zones using latent activity trajectories. IEEE Trans. Knowl. Data Eng. 2015, 27, 712–725.
4. Zhang, X.; Du, S.; Wang, Q. Hierarchical semantic cognition for urban functional zones with VHR satellite images and POI data. ISPRS J. Photogramm. 2017, 132, 170–184.
5. Wu, J.; Hobbs, R.J. (Eds.) Key Topics in Landscape Ecology; Cambridge University Press: New York, NY, USA, 2007; ISBN 13 9780521616447.
6. Hu, T.; Yang, J.; Li, X.; Gong, P. Mapping urban land use by using Landsat images and open social data. Remote Sens. 2016, 8, 151.
7. Cheng, G.; Han, J.; Guo, L.; Liu, Z.; Bu, S.; Ren, J. Effective and efficient midlevel visual elements-oriented land-use classification using VHR remote sensing images. IEEE Trans. Geosci. Remote Sens. 2015, 53, 4238–4249.
8. Zhang, X.; Du, S.; Wang, Y.C. Semantic classification of heterogeneous urban scenes using intrascene feature similarity and interscene semantic dependency. IEEE J. Sel. Top. Appl. 2015, 8, 2005–2014.
9. Carreira, J.; Caseiro, R.; Batista, J.; Sminchisescu, C. Semantic segmentation with second-order pooling. In Proceedings of the Computer Vision—ECCV, Florence, Italy, 7–13 October 2012; Springer: Berlin/Heidelberg, Germany, 2012; pp. 430–443.
10. Hu, F.; Xia, G.S.; Hu, J.; Zhang, L. Transferring deep convolutional neural networks for the scene classification of high-resolution remote sensing imagery. Remote Sens. 2015, 7, 14680–14707.
11. Rhinane, H.; Hilali, A.; Berrada, A. Detecting slums from SPOT data in Casablanca Morocco using an object based approach. J. Geogr. Inf. Syst. 2011, 3, 217–224.
12. Tang, H.; Maitre, H.; Boujemaa, N.; Jiang, W. On the relevance of linear discriminative features. Inf. Sci. 2010, 180, 3422–3433.
13. Cheng, G.; Guo, L.; Zhao, T.; Han, J.; Li, H.; Fang, J. Automatic landslide detection from remote-sensing imagery using a scene classification method based on BoVW and pLSA. Int. J. Remote Sens. 2013, 34, 45–59.
14. Zou, Q.; Ni, L.; Zhang, T.; Wang, Q. Deep learning based feature selection for remote sensing scene classification. IEEE Geosci. Remote Sens. 2015, 12, 2321–2325.
15. Cheng, G.; Han, J.; Lu, X. Remote Sensing Image Scene Classification: Benchmark and State of the Art. Proc. IEEE 2017, 105, 1865–1883.
16. Zhou, B.; Lapedriza, A.; Xiao, J.; Torralba, A.; Oliva, A. Learning deep features for scene recognition using places database. In Advances in Neural Information Processing Systems, Proceedings of the 27th International Conference on Neural Information Processing Systems; Montreal, QC, Canada, 8–13 December 2014; MIT Press: Cambridge, MA, USA, 2014; pp. 487–495.
17. Teney, D.; Brown, M.; Kit, D.; Hall, P. Learning similarity metrics for dynamic scene segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 2084–2093.
18. Baatz, M.; Schäpe, A. Multiresolution Segmentation—An optimization approach for high quality multi-scale image segmentation. In Proceedings of the AGIT-Symposium XII, Salzburg, Austria, 5–7 July 2000; pp. 12–23.
19. Hay, G.J.; Blaschke, T.; Marceau, D.J.; Bouchard, A. A comparison of three image-object methods for the multiscale analysis of landscape structure. ISPRS J. Photogramm. 2003, 57, 327–345.
20. Meinel, G.; Neubert, M. A comparison of segmentation programs for high resolution remote sensing data. Int. Arch. Photogr. Remote Sens. 2004, 35, 1097–1105.
21. Silveira, M.; Nascimento, J.C.; Marques, J.S.; Marçal, A.R.; Mendonça, T.; Yamauchi, S.; Maeda, J.; Rozeira, J. Comparison of segmentation methods for melanoma diagnosis in dermoscopy images. IEEE J. Sel. Top. Signal Process. 2009, 3, 35–45.
22. Pertuz, S.; Garcia, M.A.; Puig, D. Focus-aided scene segmentation. Comput. Vis. Image Underst. 2015, 133, 66–75.
23. Wang, J.Z.; Li, J.; Gray, R.M.; Wiederhold, G. Unsupervised multiresolution segmentation for images with low depth of field. IEEE Trans. Pattern Anal. Mach. Intell. 2001, 23, 85–90.
24. Heiden, U.; Heldens, W.; Roessner, S.; Segl, K.; Esch, T.; Mueller, A. Urban structure type characterization using hyperspectral remote sensing and height information. Landsc. Urban Plan. 2012, 105, 361–375.
25. Zhang, X.; Du, S. A linear Dirichlet mixture model for decomposing scenes: Application to analyzing urban functional zonings. Remote Sens. Environ. 2015, 169, 37–49.
26. Voltersen, M.; Berger, C.; Hese, S.; Schmullius, C. Object-based land cover mapping and comprehensive feature calculation for an automated derivation of urban structure types at block level. Remote Sens. Environ. 2014, 154, 192–201.
27. Kirchhoff, T.; Trepl, L.; Vicenzotti, V. What is landscape ecology? An analysis and evaluation of six different conceptions. Landsc. Res. 2013, 38, 33–51.
28. Nielsen, M.M.; Ahlqvist, O. Classification of different urban categories corresponding to the strategic spatial level of urban planning and management using a SPOT4 scene. J. Spat. Sci. 2015, 60, 99–117.
29. Bhaskaran, S.; Paramananda, S.; Ramnarayan, M. Per-pixel and object-oriented classification methods for mapping urban features using Ikonos satellite data. Appl. Geogr. 2010, 30, 650–665.
30. Myint, S.W.; Gober, P.; Brazel, A.; Grossman-Clarke, S.; Weng, Q. Per-pixel vs. object-based classification of urban land cover extraction using high spatial resolution imagery. Remote Sens. Environ. 2011, 115, 1145–1161.
31. Yu, Q.; Gong, P.; Clinton, N.; Biging, G.; Kelly, M.; Schirokauer, D. Object-based detailed vegetation classification with airborne high spatial resolution remote sensing imagery. Photogramm. Eng. Remote Sens. 2006, 72, 799–811.
32. Huang, X.; Yuan, W.; Li, J.; Zhang, L. A new building extraction postprocessing framework for high-spatial-resolution remote-sensing imagery. IEEE J. Sel. Top. Appl. 2017, 10, 654–668.
33. Grinias, I.; Panagiotakis, C.; Tziritas, G. MRF-based segmentation and unsupervised classification for building and road detection in peri-urban areas of high-resolution satellite images. ISPRS J. Photogramm. 2016, 122, 145–166.
34. Benedek, C.; Descombes, X.; Zerubia, J. Building development monitoring in multitemporal remotely sensed image pairs with stochastic birth-death dynamics. IEEE Trans. Pattern Anal. Mach. Intell. 2012, 34, 33–50.
35. Cheng, G.; Han, J. A survey on object detection in optical remote sensing images. ISPRS J. Photogramm. 2016, 117, 11–28.
36. Zhou, W.; Cadenasso, M.L.; Schwarz, K.; Pickett, S.T. Quantifying spatial heterogeneity in urban landscapes: Integrating visual interpretation and object-based classification. Remote Sens. 2014, 6, 3369–3386.
37. Zhang, X.; Du, S. Learning selfhood scales for urban land cover mapping with very-high-resolution VHR images. Remote Sens. Environ. 2016, 178, 172–190.
38. Belgiu, M.; Draguţ, L. Comparing supervised and unsupervised multiresolution segmentation approaches for extracting buildings from very high resolution imagery. ISPRS J. Photogramm. 2014, 96, 67–75.
39. Clinton, N.; Holt, A.; Scarborough, J.; Yan, L.; Gong, P. Accuracy assessment measures for object-based image segmentation goodness. Photogramm. Eng. Remote Sens. 2010, 76, 289–299.
40. Drăguţ, L.; Tiede, D.; Levick, S.R. ESP: A tool to estimate scale parameter for multiresolution image segmentation of remotely sensed data. Int. J. Geogr. Inf. Sci. 2010, 24, 859–871.
41. Witharana, C.; Civco, D.L. Optimizing multi-resolution segmentation scale using empirical methods: Exploring the sensitivity of the supervised discrepancy measure euclidean distance 2 (ED2). ISPRS J. Photogramm. 2014, 87, 108–121.
42. Hay, G.J.; Castilla, G. Geographic Object-Based Image Analysis (GEOBIA): A new name for a new discipline. In Object-Based Image Analysis; Springer: Berlin/Heidelberg, Germany, 2008; pp. 75–89. ISBN 13 9783540770572.
43. Suykens, J.A.; Vandewalle, J. Least squares support vector machine classifiers. Neural Process. Lett. 1999, 9, 293–300.
44. Benz, U.C.; Hofmann, P.; Willhauck, G.; Lingenfelder, I.; Heynen, M. Multi-resolution, object-oriented fuzzy analysis of remote sensing data for GIS-ready information. ISPRS J. Photogramm. 2004, 58, 239–258.
45. Blaschke, T.; Hay, G.J. Object-oriented image analysis and scale-space: Theory and methods for modeling and evaluating multiscale landscape structure. Int. Arch. Photogramm. 2001, 34, 22–29.
46. Wang, F.; Hall, G.B. Fuzzy representation of geographical boundaries in GIS. Int. J. Geogr. Inf. Syst. 1996, 10, 573–590.
47. Yao, Y.; Li, X.; Liu, X.; Liu, P.; Liang, Z.; Zhang, J.; Mai, K. Sensing spatial distribution of urban land use by integrating points-of-interest and Google Word2Vec model. Int. J. Geogr. Inf. Sci. 2016, 31, 1–24.
48. Drăguţ, L.; Csillik, O.; Eisank, C.; Tiede, D. Automated parameterisation for multi-scale image segmentation on multiple layers. ISPRS J. Photogramm. 2014, 88, 119–127.
49. Liu, X.; He, J.; Yao, Y.; Zhang, J.; Liang, H.; Wang, H.; Hong, Y. Classifying urban land use by integrating remote sensing and social media data. Int. J. Geogr. Inf. Sci. 2017, 31, 1675–1696.
50. Weinberger, K.Q.; Saul, L.K. Distance metric learning for large margin nearest neighbor classification. J. Mach. Learn. Res. 2009, 10, 207–244.
51. Montanges, A.P.; Moser, G.; Taubenböck, H.; Wurm, M.; Tuia, D. Classification of urban structural types with multisource data and structured models. In Proceedings of the Urban Remote Sensing Event (JURSE), IEEE, Lausanne, Switzerland, 30 March–1 April 2015; pp. 1–4.
52. Duro, D.C.; Franklin, S.E.; Dubé, M.G. A comparison of pixel-based and object-based image analysis with selected machine learning algorithms for the classification of agricultural landscapes using SPOT-5 HRG imagery. Remote Sens. Environ. 2012, 118, 259–272.
53. Peña-Barragán, J.M.; Ngugi, M.K.; Plant, R.E.; Six, J. Object-based crop identification using multiple vegetation indices, textural features and crop phenology. Remote Sens. Environ. 2011, 115, 1301–1316.
54. Borgström, S.; Elmqvist, T.; Angelstam, P.; Alfsen-Norodom, C. Scale mismatches in management of urban landscapes. Ecol. Soc. 2006, 11, 16.
55. Wen, D.; Huang, X.; Zhang, L.; Benediktsson, J.A. A novel automatic change detection method for urban high-resolution remotely sensed imagery based on multiindex scene representation. IEEE Trans. Geosci. Remote 2016, 54, 609–625.
56. Zhao, W.; Du, S. Scene classification using multi-scale deeply described visual words. Int. J. Remote Sens. 2016, 37, 4119–4131.
57. Garrigues, S.; Allard, D.; Baret, F.; Weiss, M. Quantifying spatial heterogeneity at the landscape scale using variogram models. Remote Sens. Environ. 2006, 103, 81–96.
58. Sweeney, K.E.; Roering, J.J.; Ellis, C. Experimental evidence for hillslope control of landscape scale. Science 2015, 349, 51–53.
59. Vihervaara, P.; Mononen, L.; Auvinen, A.P.; Virkkala, R.; Lü, Y.; Pippuri, I.; Valkama, J. How to integrate remotely sensed data and biodiversity for ecosystem assessments at landscape scale. Landsc. Ecol. 2015, 30, 501–516.
60. Boutell, M.R.; Luo, J.; Shen, X.; Brown, C.M. Learning multi-label scene classification. Pattern Recognit. 2004, 37, 1757–1771.
61. Farabet, C.; Couprie, C.; Najman, L.; LeCun, Y. Learning hierarchical features for scene labeling. IEEE Trans. Pattern Anal. Mach. Intell. 2013, 35, 1915–1929.
62. Tao, D.; Cheng, J.; Lin, X.; Yu, J. Local structure preserving discriminative projections for RGB-D sensor-based scene classification. Inf. Sci. 2015, 320, 383–394.
63. Bosch, A.; Zisserman, A.; Muñoz, X. Scene classification via pLSA. In Proceedings of the Computer Vision—ECCV 2006, Graz, Austria, 7–13 May 2006; pp. 517–530.
64. Blaschke, T. Object based image analysis for remote sensing. ISPRS J. Photogramm. 2010, 65, 2–16.
65. Laliberte, A.S.; Rango, A.; Havstad, K.M.; Paris, J.F.; Beck, R.F.; McNeely, R.; Gonzalez, A.L. Object-oriented image analysis for mapping shrub encroachment from 1937 to 2003 in southern New Mexico. Remote Sens. Environ. 2004, 93, 198–210.
66. Powers, R.P.; Hay, G.J.; Chen, G. How wetland type and area differ through scale: A GEOBIA case study in Alberta's Boreal Plains. Remote Sens. Environ. 2012, 117, 135–145.
67. Stow, D.; Hamada, Y.; Coulter, L.; Anguelova, Z. Monitoring shrubland habitat changes through object-based change identification with airborne multispectral imagery. Remote Sens. Environ. 2008, 112, 1051–1061.
68. Brassel, K.E.; Weibel, R. A review and conceptual framework of automated map generalization. Int. J. Geogr. Inf. Syst. 1988, 2, 229–244.
69. Lamonaca, A.; Corona, P.; Barbati, A. Exploring forest structural complexity by multi-scale segmentation of VHR imagery. Remote Sens. Environ. 2008, 112, 2839–2849.
70. Tang, H.; Shen, L.; Qi, Y.; Chen, Y.; Shu, Y.; Li, J.; Clausi, D.A. A multiscale latent Dirichlet allocation model for object-oriented clustering of VHR panchromatic satellite images. IEEE Trans. Geosci. Remote Sens. 2013, 51, 1680–1692.
71. Wang, J.F.; Zhang, T.L.; Fu, B.J. A measure of spatial stratified heterogeneity. Ecol. Indic. 2016, 67, 250–256.
72. Plexida, S.G.; Sfougaris, A.I.; Ispikoudis, I.P.; Papanastasis, V.P. Selecting landscape metrics as indicators of spatial heterogeneity—A comparison among Greek landscapes. Int. J. Appl. Earth Obs. 2014, 26, 26–35.
73. Waclaw, B.; Bozic, I.; Pittman, M.E.; Hruban, R.H.; Vogelstein, B.; Nowak, M.A. A spatial model predicts that dispersal and cell turnover limit intratumour heterogeneity. Nature 2015, 525, 261–264.
74. Davies, K.F.; Chesson, P.; Harrison, S.; Inouye, B.D.; Melbourne, B.A.; Rice, K.J. Spatial heterogeneity explains the scale dependence of the native-exotic diversity relationship. Ecology 2005, 86, 1602–1610.
75. Regulska, E.; Kołaczkowska, E. Landscape patch pattern effect on relationships between soil properties and earthworm assemblages: A comparison of two farmlands of different spatial structure. Pol. J. Ecol. 2015, 63, 549–558.
76. Zhou, W.; Pickett, S.T.; Cadenasso, M.L. Shifting concepts of urban spatial heterogeneity and their implications for sustainability. Landsc. Ecol. 2017, 32, 15–30.
77. Forman, R.T. Land Mosaics: The Ecology of Landscapes and Regions; Cambridge University: Cambridge, UK, 1995; pp. 42–46.
78. Naveh, Z.; Lieberman, A.S. Landscape Ecology: Theory and Application; Springer: New York, NY, USA, 2013; pp. 157–163. ISBN 13 9780387940595.
79. Malavasi, M.; Carboni, M.; Cutini, M.; Carranza, M.L.; Acosta, A.T. Landscape fragmentation, land-use legacy and propagule pressure promote plant invasion on coastal dunes: A patch-based approach. Landsc. Ecol. 2014, 29, 1541–1550.
80. Mairota, P.; Cafarelli, B.; Boccaccio, L.; Leronni, V.; Labadessa, R.; Kosmidou, V.; Nagendra, H. Using landscape structure to develop quantitative baselines for protected area monitoring. Ecol. Indic. 2013, 33, 82–95.
81. Seidl, R.; Lexer, M.J.; Jäger, D.; Hönninger, K. Evaluating the accuracy and generality of a hybrid patch model. Tree Physiol. 2005, 25, 939–951.
82. Burnett, C.; Blaschke, T. A multi-scale segmentation/object relationship modelling methodology for landscape analysis. Ecol. Model. 2003, 168, 233–249.
83. Hay, G.J.; Marceau, D.J. Multiscale object-specific analysis (MOSA): An integrative approach for multiscale landscape analysis. In Remote Sensing Image Analysis: Including the Spatial Domain; Springer: Berlin, Germany, 2004; pp. 71–92.
84. Clark, B.J.F.; Pellikka, P.K.E. Landscape analysis using multiscale segmentation and object orientated classification. Recent Adv. Remote Sens. Geoinf. Process. Land Degrad. Assess. 2009, 8, 323–341.
85. Fu, B.; Liang, D.; Nan, L. Landscape ecology: Coupling of pattern, process, and scale. Chin. Geogr. Sci. 2011, 21, 385–391.
86. Wu, J. Key concepts and research topics in landscape ecology revisited: 30 years after the Allerton Park workshop. Landsc. Ecol. 2013, 28, 1–11.

| Geographic Entities | Image Units | References |
|---|---|---|
| Separated trees/stones | Pixels | [29,30] |
| Woods/buildings | Objects | [31,32,33,34,35] |
| Forest ecological system/urban functional zones | Geoscenes | |

| Terminologies | Meaning |
|---|---|
| Functional zones | Basic units of city planning and management. They are spatial aggregations of diverse geographic objects with regular patterns, and their functional categories are semantically abstracted from the objects’ land uses. |
| Geoscenes | Spatially continuous, non-overlapping regions in remote sensing images, each composed of diverse objects. Each geoscene exhibits similar spatial patterns throughout, in which objects of the same category have similar object and pattern characteristics. |
| Geoscene-based image analysis (GEOSBIA) | An image analysis strategy that uses geoscenes as basic units to analyze functional zones. It mainly includes geoscene segmentation, feature extraction, and classification. It differs substantially from per-pixel and object-based image analyses in terms of features, categories, segmentations, classifications, and applications. |
| Geoscene segmentation | A strategy for partitioning an image into geoscenes by considering both object and spatial-pattern features. |
| Geoscene segmentation scale | An important parameter that controls geoscene segmentation results and represents the largest tolerable heterogeneity of segmented geoscenes. |
| Spatial-pattern features | Features that measure the spatial arrangement of objects; in this study they are used to characterize functional zones. |

| Types | Names | Meanings |
|---|---|---|
| Spectral | (Mean spectrum) | Average spectrum of an object |
| | (Std. dev) | Gray-level standard deviation within an object |
| | (Skewness) | Skewness of the spectral histogram |
| Textural | (GLDV) | Gray-level difference vector: the sums of the gray-level co-occurrence matrix (GLCM) entries along each diagonal, i.e., for each gray-level difference |
| | (Homogeneity) | Homogeneity derived from the GLCM |
| | (Dissimilarity) | Dissimilarity (heterogeneity) derived from the GLCM |
| | (Entropy) | Information entropy derived from the GLCM |
| | (Correlation) | Correlation of pixel pairs derived from the GLCM |
| Geometrical | (Area) | Number of pixels within the image object |
| | (Length/width) | Length-to-width ratio of the object’s minimum bounding rectangle (MBR) |
| | (Main direction) | Derived from the eigenvectors of the covariance matrix of the object’s pixel coordinates |
| | (Shape index) | Ratio of the perimeter to four times the square root of the area |
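The object features listed in the table can be computed from an object's pixels. The sketch below is a minimal, standard-library illustration that assumes a single band already quantized to integer gray levels, a boolean object mask of the same shape, and a GLCM built from horizontal neighbor pairs (offset (0, 1)) lying entirely inside the mask; the offset, the quantization, and the function names are assumptions, and only a subset of the features (mean, standard deviation, skewness, the GLCM measures, area, and shape index) is shown.

```python
import math
from collections import Counter


def spectral_features(values):
    """Mean, population standard deviation, and skewness of an object's gray values."""
    n = len(values)
    mu = sum(values) / n
    sd = math.sqrt(sum((v - mu) ** 2 for v in values) / n)
    skew = sum((v - mu) ** 3 for v in values) / n / sd ** 3 if sd > 0 else 0.0
    return {"mean": mu, "std": sd, "skewness": skew}


def glcm(gray, mask, levels):
    """Symmetric, normalized GLCM for offset (0, 1); both pixels must lie in the mask."""
    counts = Counter()
    for r, row in enumerate(gray):
        for c in range(len(row) - 1):
            if mask[r][c] and mask[r][c + 1]:
                a, b = row[c], row[c + 1]
                counts[a, b] += 1
                counts[b, a] += 1  # count both directions -> symmetric matrix
    total = sum(counts.values()) or 1
    return [[counts[i, j] / total for j in range(levels)] for i in range(levels)]


def texture_features(P):
    """Homogeneity, dissimilarity, entropy, correlation, and GLDV of a normalized GLCM."""
    L = len(P)
    cells = [(i, j, P[i][j]) for i in range(L) for j in range(L)]
    mu_i = sum(i * p for i, _, p in cells)
    mu_j = sum(j * p for _, j, p in cells)
    sd_i = math.sqrt(sum((i - mu_i) ** 2 * p for i, _, p in cells))
    sd_j = math.sqrt(sum((j - mu_j) ** 2 * p for _, j, p in cells))
    cov = sum((i - mu_i) * (j - mu_j) * p for i, j, p in cells)
    gldv = [0.0] * L  # gldv[k] = total probability of gray-level difference |i - j| = k
    for i, j, p in cells:
        gldv[abs(i - j)] += p
    return {
        "homogeneity": sum(p / (1 + (i - j) ** 2) for i, j, p in cells),
        "dissimilarity": sum(p * abs(i - j) for i, j, p in cells),
        "entropy": -sum(p * math.log(p) for _, _, p in cells if p > 0),
        # a constant texture has zero variance; 1.0 is a common convention there
        "correlation": cov / (sd_i * sd_j) if sd_i * sd_j > 0 else 1.0,
        "gldv": gldv,
    }


def geometric_features(mask):
    """Area in pixels and shape index = perimeter / (4 * sqrt(area))."""
    rows, cols = len(mask), len(mask[0])

    def inside(r, c):
        return 0 <= r < rows and 0 <= c < cols and mask[r][c]

    area = sum(map(sum, mask))
    # count pixel edges that border a non-object pixel or the image edge
    perimeter = sum(1 for r in range(rows) for c in range(cols) if mask[r][c]
                    for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1))
                    if not inside(r + dr, c + dc))
    return {"area": area, "shape_index": perimeter / (4 * math.sqrt(area))}
```

For the 4 × 4 patch `[[0, 0, 1, 1], [0, 0, 1, 1], [2, 2, 3, 3], [2, 2, 3, 3]]` with a full mask, these functions give a mean of 1.5, a GLCM dissimilarity of 1/3, and a shape index of 1.0, the value a square object takes under this definition.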

| Scales | 70 | 90 | 110 | 130 | 150 |
|---|---|---|---|---|---|
| Number of geoscenes | 1614 | 1067 | 830 | 703 | 218 |

| Functions | Co | Re | Sh | In | Pa | Ca | Total | User’s Accuracy |
|---|---|---|---|---|---|---|---|---|
| Co | 109 | 11 | 0 | 2 | 10 | 0 | 132 | 82.6% |
| Re | 8 | 250 | 1 | 3 | 2 | 5 | 269 | 92.9% |
| Sh | 0 | 1 | 12 | 0 | 0 | 0 | 13 | 92.3% |
| In | 3 | 0 | 1 | 21 | 0 | 0 | 25 | 84.0% |
| Pa | 0 | 4 | 0 | 0 | 118 | 1 | 123 | 95.9% |
| Ca | 0 | 20 | 0 | 1 | 2 | 118 | 141 | 83.7% |
| Total | 120 | 286 | 14 | 27 | 132 | 124 | 703 | |
| Producer’s accuracy | 90.8% | 87.4% | 85.7% | 77.8% | 89.4% | 95.2% | | OA = 89.3% |
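User’s, producer’s, and overall accuracies follow directly from the cell counts, so recomputing them is a quick consistency check on the table. The sketch below assumes, as the table layout implies, that rows hold classified labels (user’s accuracy per row) and columns hold reference labels (producer’s accuracy per column); the function name `accuracy_report` is hypothetical.

```python
def accuracy_report(matrix, labels):
    """User's, producer's, and overall accuracy from a confusion matrix.

    Rows are classified labels and columns are reference labels.
    """
    row_totals = [sum(row) for row in matrix]
    col_totals = [sum(col) for col in zip(*matrix)]
    diag = [matrix[k][k] for k in range(len(matrix))]
    users = {lab: d / t for lab, d, t in zip(labels, diag, row_totals)}
    producers = {lab: d / t for lab, d, t in zip(labels, diag, col_totals)}
    overall = sum(diag) / sum(row_totals)
    return row_totals, col_totals, users, producers, overall


labels = ["Co", "Re", "Sh", "In", "Pa", "Ca"]
matrix = [
    [109, 11, 0, 2, 10, 0],
    [8, 250, 1, 3, 2, 5],
    [0, 1, 12, 0, 0, 0],
    [3, 0, 1, 21, 0, 0],
    [0, 4, 0, 0, 118, 1],
    [0, 20, 0, 1, 2, 118],
]
rows, cols, ua, pa, oa = accuracy_report(matrix, labels)
print(f"OA = {oa:.1%}")  # OA = 89.3%
```

The row totals it returns are the sums of each row’s cells, and the overall accuracy is the diagonal sum (628) divided by the 703 evaluated units.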

| Image | Location | Data Source | Resolution | Acquisition Date | Area (km²) |
|---|---|---|---|---|---|
| Figure 18a | Putian | QuickBird | 0.61 m | 17 June 2010 | 7.5 |
| Figure 18b | Zhuhai | QuickBird | 0.61 m | 23 June 2010 | 27.9 |

| Study Areas | Beijing Area (km²) | Beijing Proportion | Putian Area (km²) | Putian Proportion | Zhuhai Area (km²) | Zhuhai Proportion |
|---|---|---|---|---|---|---|
| Commercial zones | 11.7 | 17.4% | 1.2 | 16.0% | 7.8 | 31.5% |
| Residential districts | 22.1 | 32.9% | 2.9 | 38.7% | 4.7 | 16.8% |
| Shanty towns | 3.0 | 4.5% | 1.3 | 17.3% | 1.7 | 6.1% |
| Campuses | 10.4 | 15.5% | 0.4 | 5.3% | 0.9 | 6.8% |
| Parks and greenbelt | 15.3 | 22.8% | 1.5 | 20.0% | 9.7 | 34.8% |
| Industrial zones | 4.6 | 6.9% | 0.2 | 2.7% | 3.1 | 4.0% |
| Total | 67.1 | 100% | 7.5 | 100% | 27.9 | 100% |

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Zhang, X.; Du, S.; Wang, Q.; Zhou, W. Multiscale Geoscene Segmentation for Extracting Urban Functional Zones from VHR Satellite Images. Remote Sens. 2018, 10, 281. https://doi.org/10.3390/rs10020281