Big Data Integration in Remote Sensing across a Distributed Metadata-Based Spatial Infrastructure

Abstract

1. Introduction

2. Background on Architectures for Remote Sensing Data Integration

2.1. Distributed Integration of Remote Sensing Data

- (1) The data warehouse (DW)-based integration model copies all data from each heterogeneous database system into a new, shared database system, giving users a unified data access interface. However, because the data of every independent, heterogeneous database system are duplicated into the new system, this approach produces substantial data redundancy and requires considerably more storage space.

- (2) The federated database system (FDBS)-based integration model preserves the autonomy of each database system and links the independent systems into a database federation, which then provides data retrieval services to users. However, this model cannot solve the problems of database heterogeneity or system scalability [12].

- (3) The middleware-based integration model places a middleware layer between the data layer and the application layer. The middleware provides a unified data access interface to upper-layer users and manages the underlying database systems centrally. It not only shields the heterogeneity of the individual database systems behind a unified data access mechanism, but also improves query concurrency and reduces response time. Therefore, in this paper, we adopt the middleware-based integration model to realize distributed remote sensing data integration.
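The middleware pattern in item (3) can be sketched in a few lines of Python. This is a minimal, hypothetical illustration, not the system built in this paper: the two adapter functions stand in for heterogeneous sub-center databases that use their own field names, the `Record` schema and every name below are invented for the example, and a thread pool stands in for whatever concurrency mechanism a real middleware would use.

```python
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass(frozen=True)
class Record:
    """Unified record handed to upper-layer users (hypothetical schema)."""
    scene_id: str
    satellite: str


# An adapter hides one heterogeneous backend behind a common signature:
# satellite name in, unified Records out.
Adapter = Callable[[str], List[Record]]


def relational_adapter(satellite: str) -> List[Record]:
    # Stand-in for a sub-center whose rows use upper-case column names.
    rows = [{"SCENE": "LC8-001", "SAT": "Landsat 8"}]
    return [Record(r["SCENE"], r["SAT"]) for r in rows if r["SAT"] == satellite]


def xml_adapter(satellite: str) -> List[Record]:
    # Stand-in for a sub-center exposing customized XML-style keys.
    items = [
        {"sceneId": "HJ1A-042", "satelliteId": "HJ-1A"},
        {"sceneId": "LC8-777", "satelliteId": "Landsat 8"},
    ]
    return [Record(i["sceneId"], i["satelliteId"]) for i in items
            if i["satelliteId"] == satellite]


class Middleware:
    """Single query entry point that fans out to all adapters concurrently."""

    def __init__(self, adapters: Dict[str, Adapter]) -> None:
        self.adapters = adapters

    def query(self, satellite: str) -> List[Record]:
        workers = max(1, len(self.adapters))
        with ThreadPoolExecutor(max_workers=workers) as pool:
            futures = [pool.submit(adapter, satellite)
                       for adapter in self.adapters.values()]
            results: List[Record] = []
            for future in futures:
                results.extend(future.result())
        return results


mw = Middleware({"sub_center_a": relational_adapter, "sub_center_b": xml_adapter})
print(sorted(r.scene_id for r in mw.query("Landsat 8")))  # ['LC8-001', 'LC8-777']
```

Adding a sub-center in this sketch only means registering another adapter; callers of `query` are unaffected, which is the kind of decoupling the middleware model is meant to provide.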

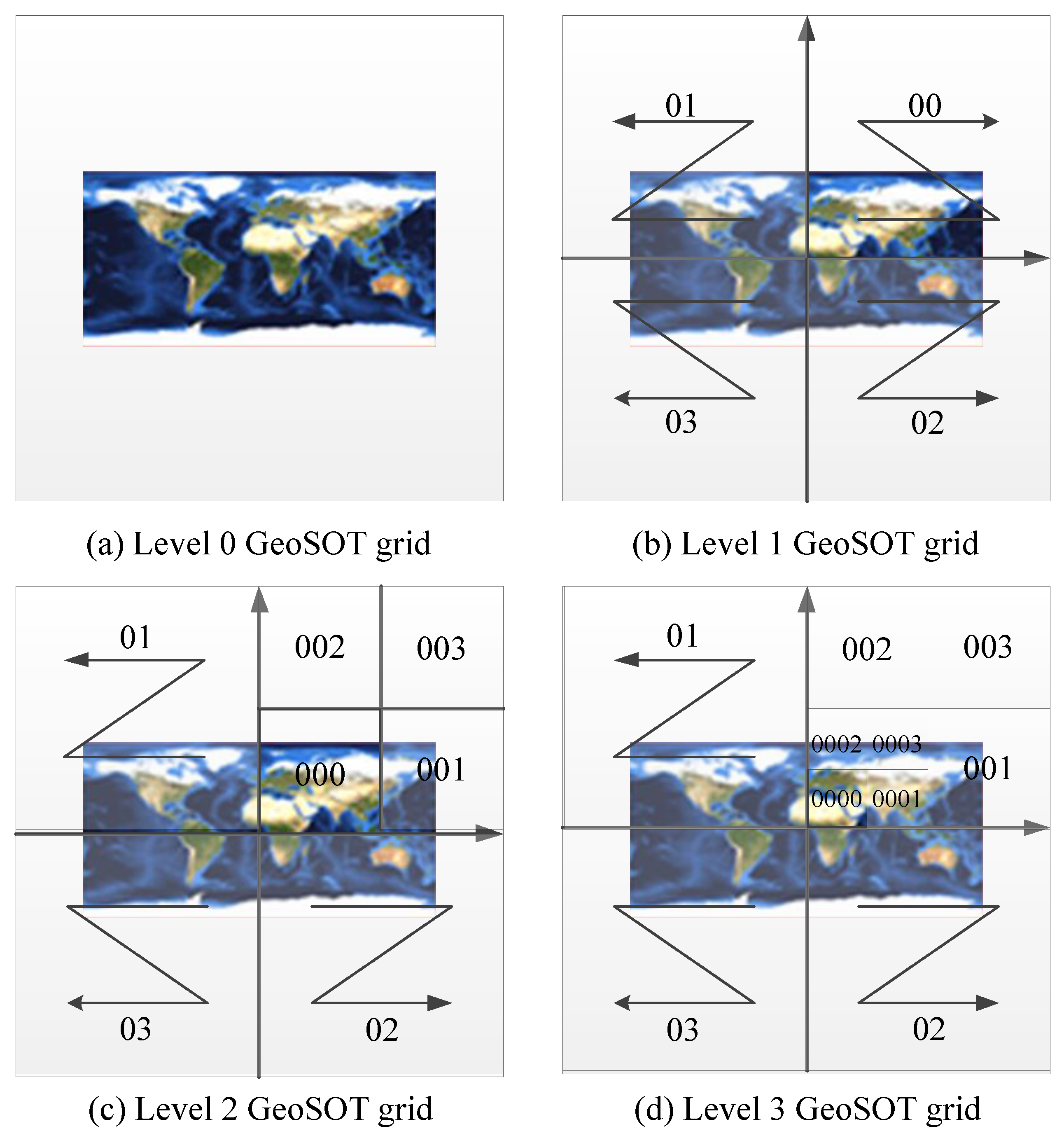

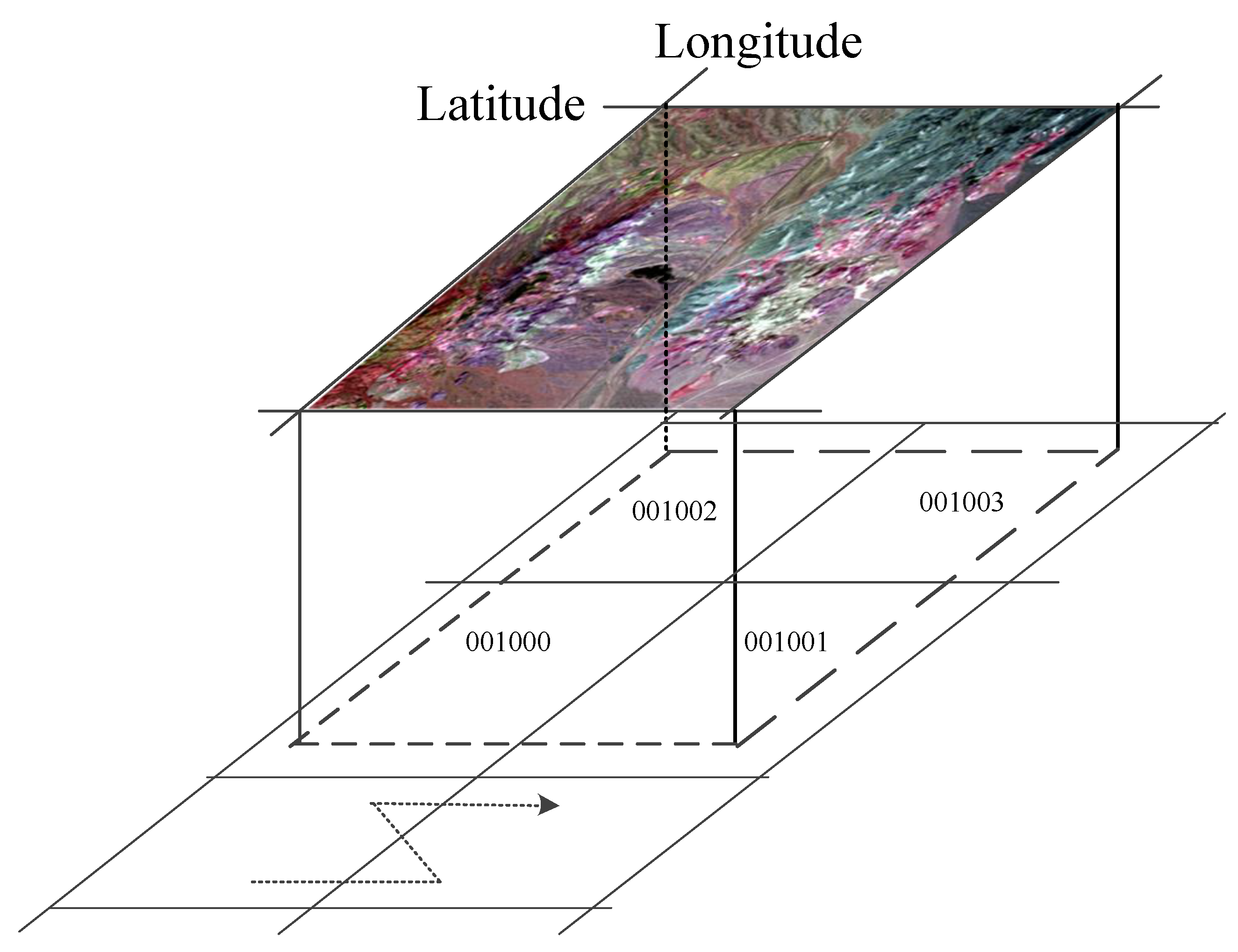

2.2. Spatial Organization of Remote Sensing Data

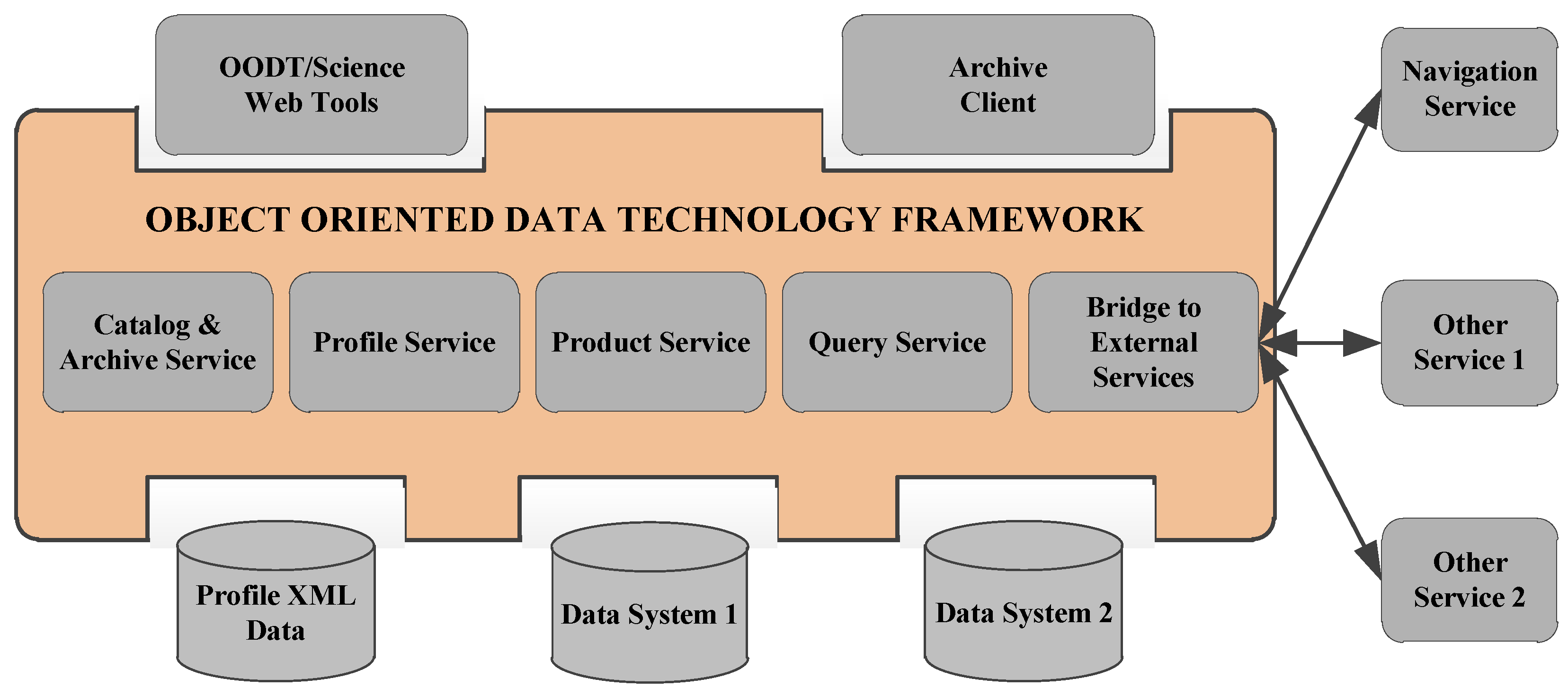

2.3. OODT: A Data Integration Framework

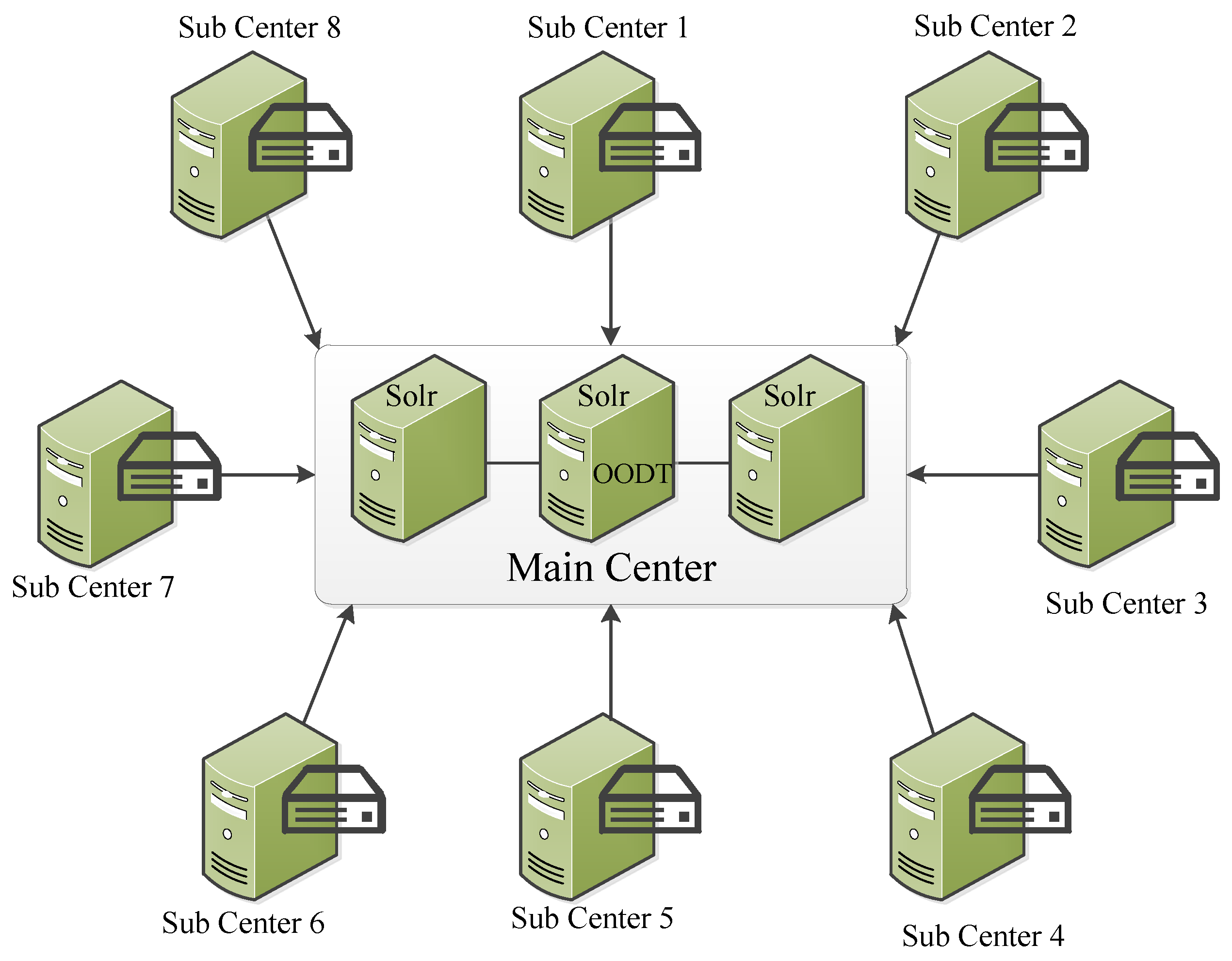

3. Distributed Integration of Multi-Source Remote Sensing Data

3.1. The ISO 19115-Based Metadata Transformation

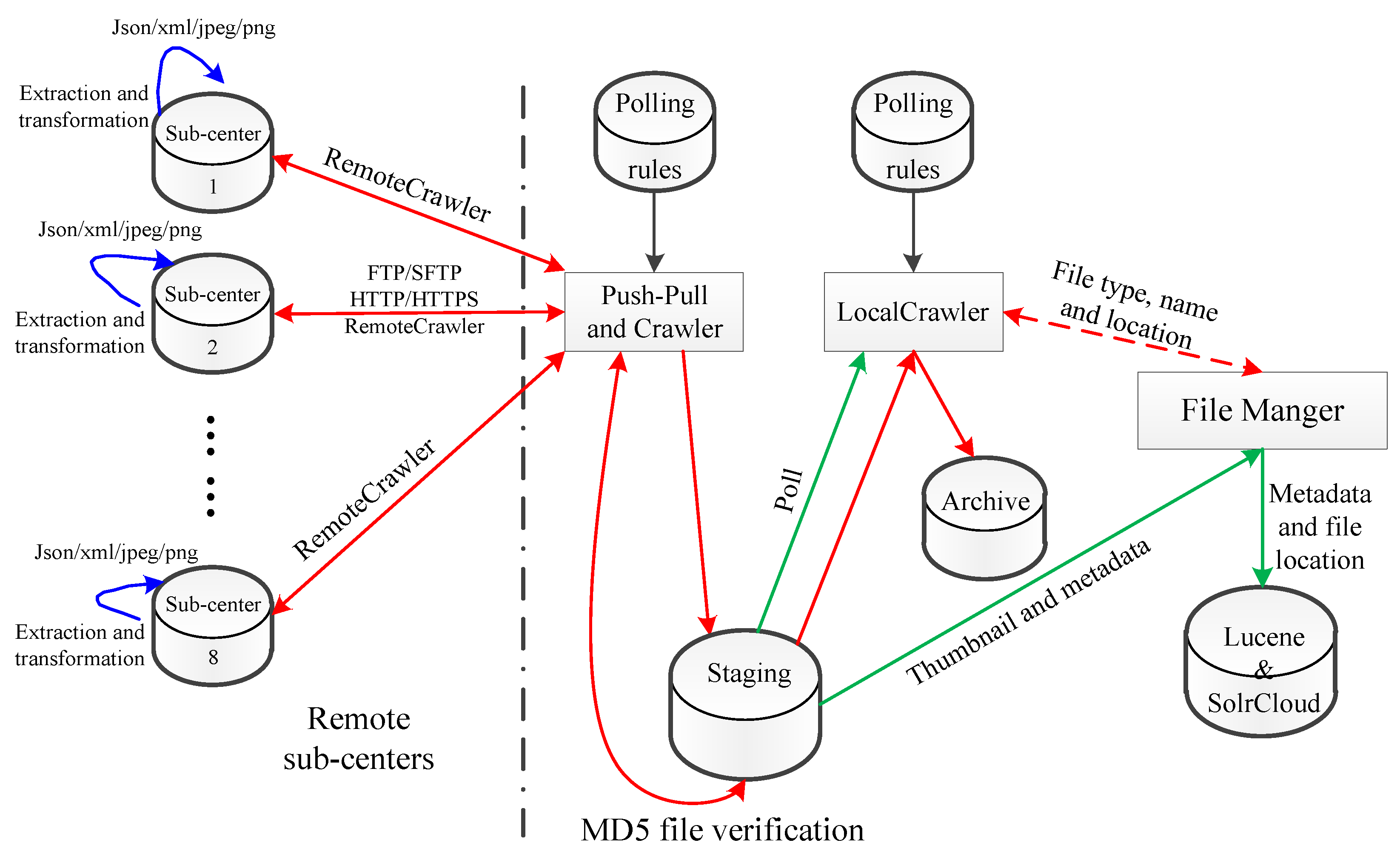

3.2. Distributed Multi-Source Remote Sensing Data Integration

4. Spatial Organization and Management of Remote Sensing Data

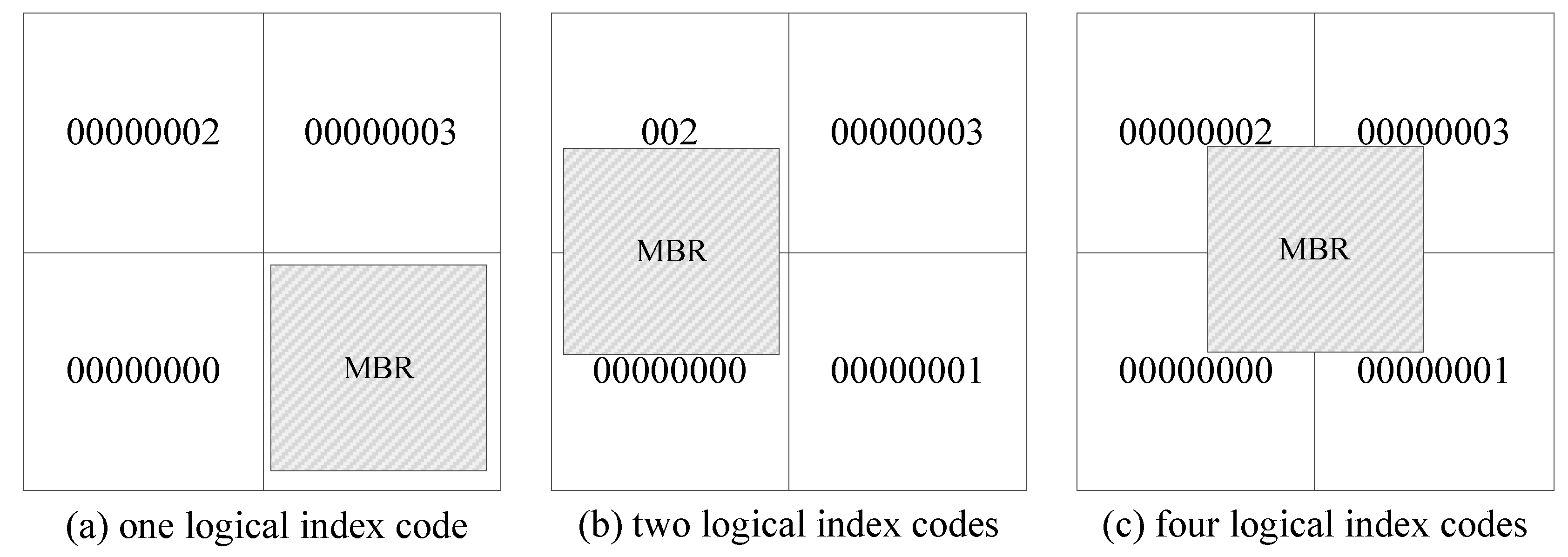

4.1. LSI Organization Model of Multi-Source Remote Sensing Data

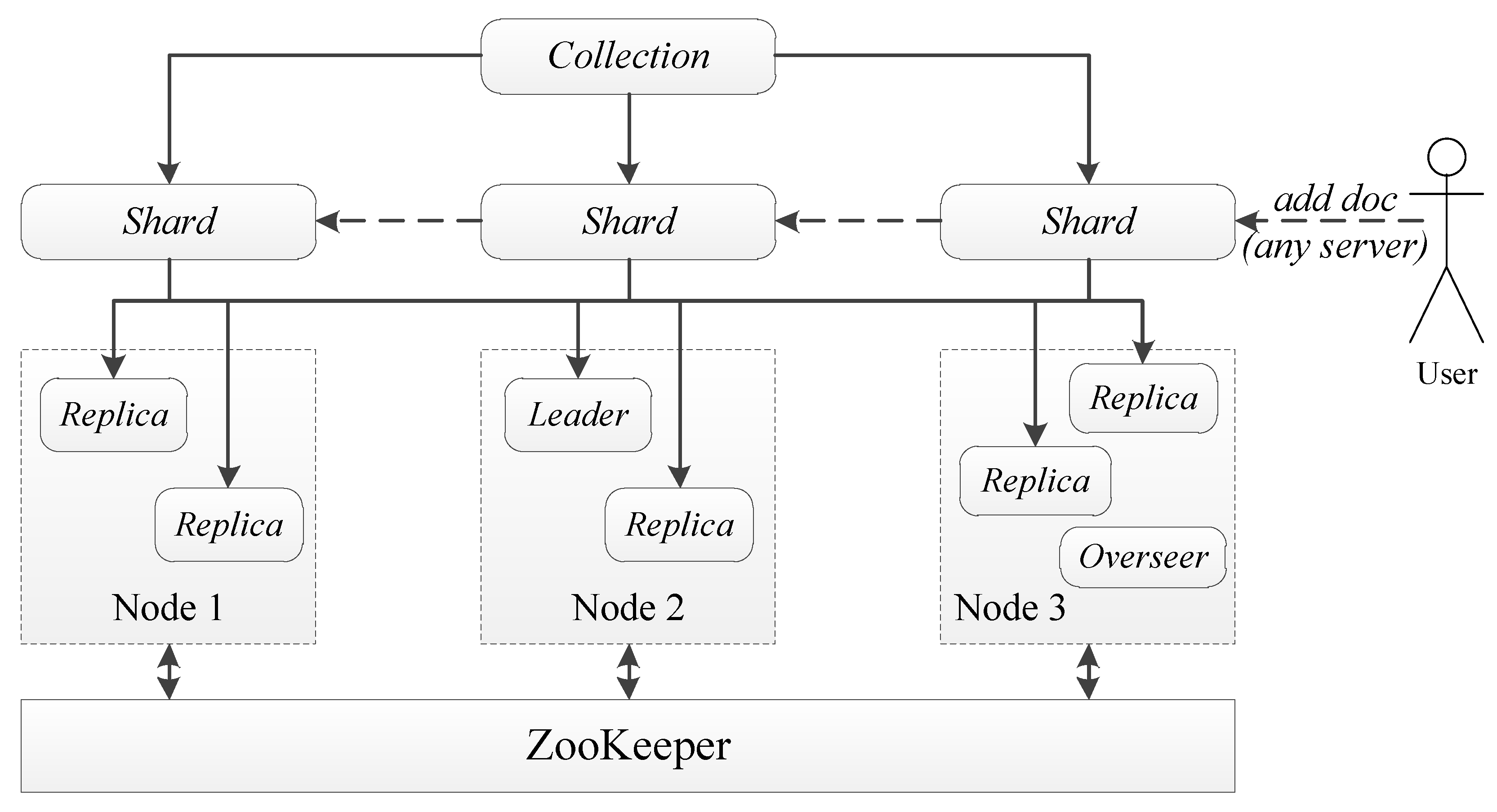

4.2. Full-Text Index of Multi-Sourced Remote Sensing Metadata

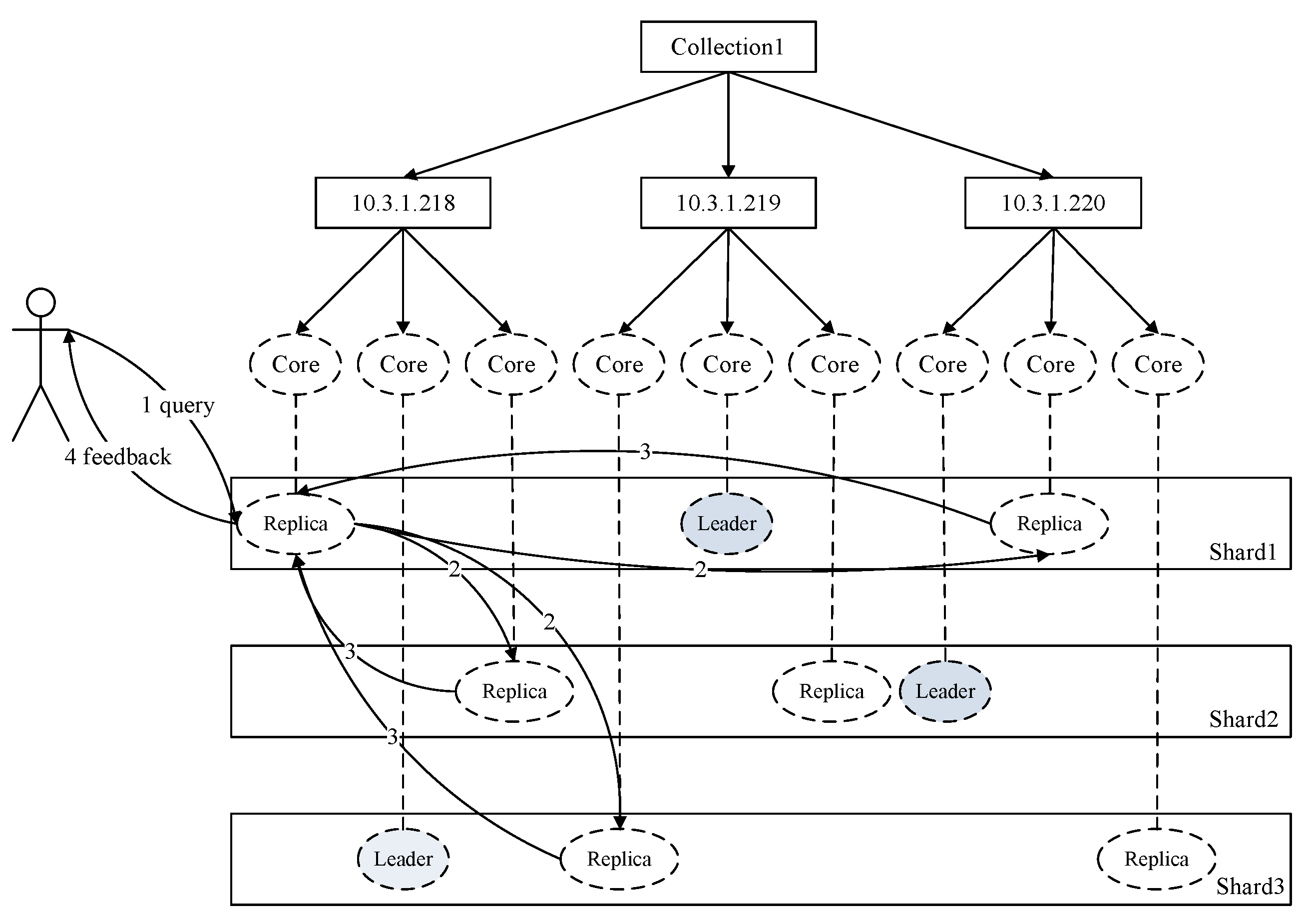

4.3. Distributed Data Retrieval

5. Experiment and Analysis

5.1. Distributed Data Integration Experiment

5.2. LSI Model-Based Metadata Retrieval Experiment

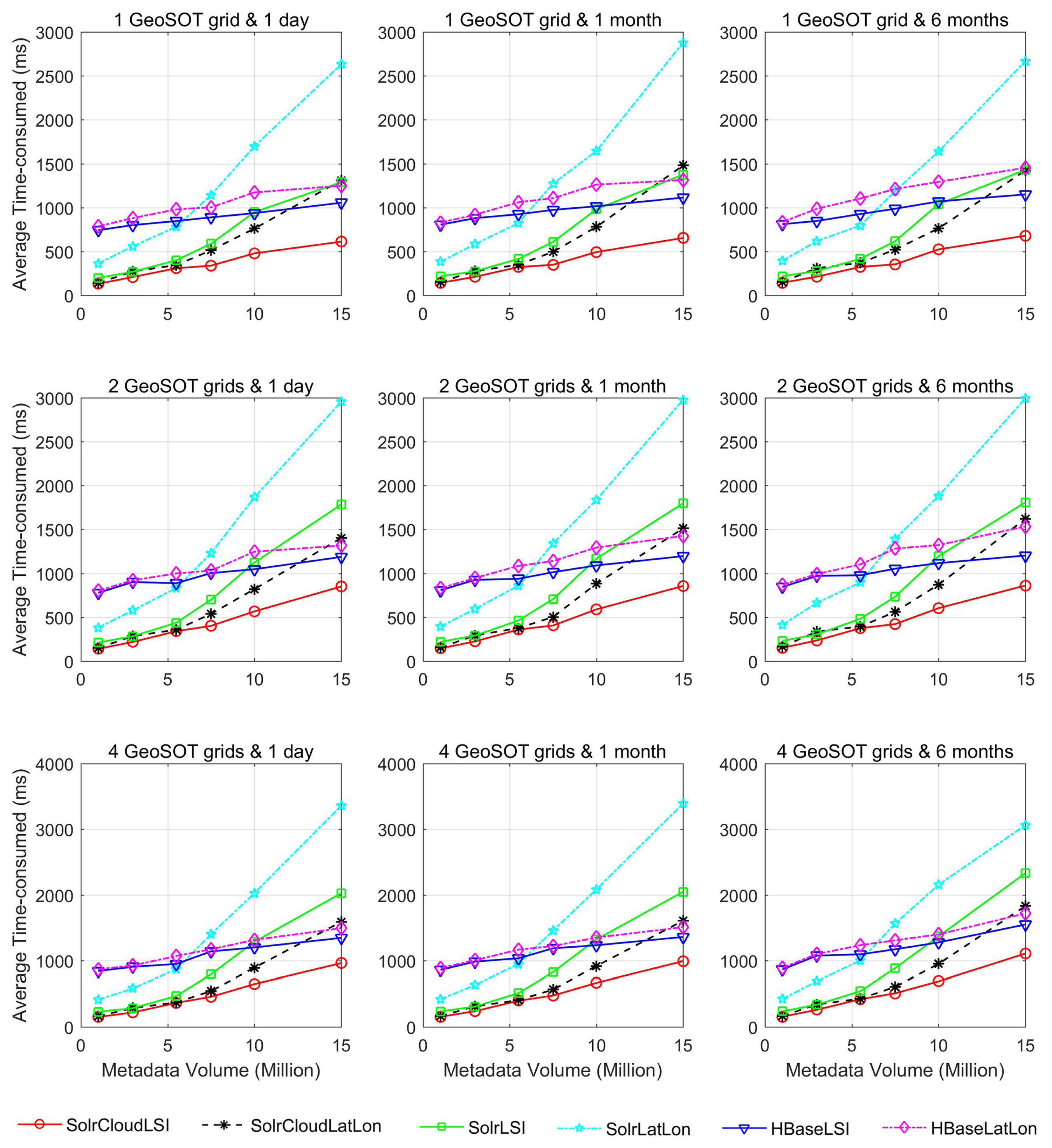

5.3. Comparative Experiments and Analysis

5.3.1. Comparative Experiments

- (1) To demonstrate the advantages of the proposed LSI model, longitude and latitude were used directly in a full-text search, with all other parameters identical to those of the LSI model-based experiments. For brevity, the LSI model-based metadata retrieval method is referred to as SolrCloudLSI, and the longitude- and latitude-based retrieval method as SolrCloudLatLon.

- (2) To demonstrate the big data management and retrieval capabilities of SolrCloud, we built a single-node Solr environment in a new virtual machine with the same configuration as the SolrCloud nodes. This comparison comprised two experiments on the single Solr node, LSI model-based data retrieval and longitude- and latitude-based data retrieval, both using the same query parameters as the LSI model-based retrieval experiments. These are referred to as SolrLSI and SolrLatLon, respectively.

- (3) To show the advantages of the proposed data management scheme over existing schemes, we chose HBase as the comparison object [45]. As a column-oriented key-value data store, HBase has been widely adopted because of its lineage with Hadoop and HDFS [46,47]. We therefore carried out both LSI model-based and longitude- and latitude-based data retrieval experiments on an HBase cluster. The cluster consisted of one NameNode and two DataNodes, each configured like the SolrCloud nodes with 2 virtual processor cores and 4 GB of RAM. Hadoop 2.7.3, HBase 0.98.4, and Java 1.7.0 were installed on the NameNode and on both DataNodes. The query parameters and metadata volume were the same as in the experiments above. The LSI model-based and the longitude- and latitude-based retrieval in the HBase cluster are referred to as HBaseLSI and HBaseLatLon, respectively.
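The difference between the LSI-based and the longitude/latitude-based retrieval compared above can be illustrated in memory. The sketch below is a simplified, hypothetical stand-in and not the paper's implementation: footprints are bounding boxes, a single-level one-degree equal-angle grid replaces the multi-level GeoSOT codes, and plain Python dictionaries replace SolrCloud, Solr and HBase. In the sketch, grid-code retrieval turns a spatial query into exact term lookups in an inverted index, whereas the lat/lon variant evaluates numeric range conditions on every footprint.

```python
import math
from collections import defaultdict
from typing import Dict, Iterable, Set, Tuple

BBox = Tuple[float, float, float, float]  # (west, south, east, north) in degrees


def cell_codes(bbox: BBox, size: float = 1.0) -> Set[str]:
    """Codes of the fixed-size lat/lon cells a box touches.

    Simplified stand-in for GeoSOT codes; this is NOT the GeoSOT encoding.
    """
    west, south, east, north = bbox
    cols = range(math.floor((west + 180) / size), math.floor((east + 180) / size) + 1)
    rows = range(math.floor((south + 90) / size), math.floor((north + 90) / size) + 1)
    return {f"{r}_{c}" for r in rows for c in cols}


def build_grid_index(records: Dict[str, BBox]) -> Dict[str, Set[str]]:
    """Inverted index: cell code -> IDs of records whose footprint touches it."""
    index: Dict[str, Set[str]] = defaultdict(set)
    for rid, bbox in records.items():
        for code in cell_codes(bbox):
            index[code].add(rid)
    return dict(index)


def query_by_grid(index: Dict[str, Set[str]], codes: Iterable[str]) -> Set[str]:
    """LSI-style retrieval: exact term lookups, union of posting lists."""
    hits: Set[str] = set()
    for code in codes:
        hits |= index.get(code, set())
    return hits


def query_by_latlon(records: Dict[str, BBox], q: BBox) -> Set[str]:
    """LatLon-style retrieval: numeric range test against every footprint."""
    qw, qs, qe, qn = q
    return {rid for rid, (w, s, e, n) in records.items()
            if w <= qe and e >= qw and s <= qn and n >= qs}


records = {"A": (116.2, 39.6, 116.8, 40.3), "B": (120.1, 30.1, 120.9, 30.8)}
index = build_grid_index(records)
q = (116.85, 39.1, 116.95, 39.5)
print(query_by_grid(index, cell_codes(q)))  # {'A'}: same cell, coarse candidate
print(query_by_latlon(records, q))          # set(): footprints do not overlap
```

The example also exposes the trade-off of grid codes in this simplified form: they select candidates at cell granularity, so a record sharing a cell with the query is returned even when its footprint does not overlap the query box, and a finer grid or an exact-geometry refinement step would be needed to filter it out.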

5.3.2. Results Analysis

6. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

References

- Li, X.; Wang, L. On the study of fusion techniques for bad geological remote sensing image. J. Ambient Intell. Humaniz. Comput. 2015, 6, 141–149.

- Jeansoulin, R. Review of Forty Years of Technological Changes in Geomatics toward the Big Data Paradigm. ISPRS Int. J. Geo-Inf. 2016, 5, 155.

- Lowe, D.; Mitchell, A. Status Report on NASA’s Earth Observing Data and Information System (EOSDIS). In Proceedings of the 42nd Meeting of the Working Group on Information Systems & Services, Frascati, Italy, 19–22 September 2016.

- China’s FY Satellite Data Center. Available online: http://satellite.cma.gov.cn/portalsite/default.aspx (accessed on 25 August 2017).

- China Center for Resources Satellite Data and Application. Available online: http://www.cresda.com/CN/sjfw/zxsj/index.shtml (accessed on 25 August 2017).

- Yan, J.; Wang, L. Suitability evaluation for products generation from multisource remote sensing data. Remote Sens. 2016, 8, 995.

- Dou, M.; Chen, J.; Chen, D.; Chen, X.; Deng, Z.; Zhang, X.; Xu, K.; Wang, J. Modeling and simulation for natural disaster contingency planning driven by high-resolution remote sensing images. Future Gener. Comput. Syst. 2014, 37, 367–377.

- Lü, X.; Cheng, C.; Gong, J.; Guan, L. Review of data storage and management technologies for massive remote sensing data. Sci. China Technol. Sci. 2011, 54, 3220–3232.

- Wu, D.; Zhu, L.; Xu, X.; Sakr, S.; Sun, D.; Lu, Q. Building pipelines for heterogeneous execution environments for big data processing. IEEE Softw. 2016, 33, 60–67.

- Nagi, K. Bringing search engines to the cloud using open source components. In Proceedings of the 7th International Joint Conference on Knowledge Discovery, Knowledge Engineering and Knowledge Management (IC3K), Lisbon, Portugal, 12–14 November 2015; pp. 116–126.

- Yang, C.; Huang, Q.; Li, Z.; Liu, K.; Hu, F. Big Data and cloud computing: Innovation opportunities and challenges. Int. J. Digit. Earth 2017, 10, 13–53.

- Luo, X.; Wang, M.; Dai, G. A Novel Technique to Compute the Revisit Time of Satellites and Its Application in Remote Sensing Satellite Optimization Design. Int. J. Aerosp. Eng. 2017, 2017, 6469439.

- Wang, W.; De, S.; Cassar, G.; Moessner, K. An experimental study on geospatial indexing for sensor service discovery. Expert Syst. Appl. 2015, 42, 3528–3538.

- He, Z.; Wu, C.; Liu, G.; Zheng, Z.; Tian, Y. Decomposition tree: A spatio-temporal indexing method for movement big data. Clust. Comput. 2015, 18, 1481–1492.

- Leptoukh, G. NASA remote sensing data in earth sciences: Processing, archiving, distribution, applications at the GES DISC. In Proceedings of the 31st International Symposium of Remote Sensing of Environment, Saint Petersburg, Russia, 20–24 June 2005.

- Geohash. Available online: https://en.wikipedia.org/wiki/Geohash (accessed on 25 August 2017).

- Zhe, Y.; Weixin, Z.; Dong, C.; Wei, Z.; Chengqi, C. A fast UAV image stitching method on GeoSOT. In Proceedings of the 2015 IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Milan, Italy, 26–31 July 2015; pp. 1785–1788.

- Dev, D.; Patgiri, R. Performance evaluation of HDFS in big data management. In Proceedings of the 2014 International Conference on High Performance Computing and Applications (ICHPCA), Bhubaneswar, India, 22–24 December 2014; pp. 1–7.

- Mitchell, A.; Ramapriyan, H.; Lowe, D. Evolution of web services in EOSDIS: Search and order metadata registry (ECHO). In Proceedings of the 2009 IEEE International Geoscience and Remote Sensing Symposium, Cape Town, South Africa, 12–17 July 2009; pp. 371–374.

- OODT. Available online: http://oodt.apache.org/ (accessed on 25 January 2017).

- Mattmann, C.A.; Crichton, D.J.; Medvidovic, N.; Hughes, S. A software architecture-based framework for highly distributed and data intensive scientific applications. In Proceedings of the 28th International Conference on Software Engineering, Shanghai, China, 20–28 May 2006; pp. 721–730.

- Mattmann, C.A.; Freeborn, D.; Crichton, D.; Foster, B.; Hart, A.; Woollard, D.; Hardman, S.; Ramirez, P.; Kelly, S.; Chang, A.Y.; et al. A reusable process control system framework for the Orbiting Carbon Observatory and NPP Sounder PEATE missions. In Proceedings of the 2009 Third IEEE International Conference on Space Mission Challenges for Information Technology, Pasadena, CA, USA, 19–23 July 2009; pp. 165–172.

- Di, L.; Moe, K.; Zyl, T.L.V. Earth observation sensor web: An overview. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2010, 3, 415–417.

- Reuter, D.C.; Richardson, C.M.; Pellerano, F.A.; Irons, J.R.; Allen, R.G.; Anderson, M.; Jhabvala, M.D.; Lunsford, A.W.; Montanaro, M.; Smith, R.L. The Thermal Infrared Sensor (TIRS) on Landsat 8: Design overview and pre-launch characterization. Remote Sens. 2015, 7, 1135–1153.

- Wei, Y.; Di, L.; Zhao, B.; Liao, G.; Chen, A. Transformation of HDF-EOS metadata from the ECS model to ISO 19115-based XML. Comput. Geosci. 2007, 33, 238–247.

- Khandelwal, S.; Goyal, R. Effect of vegetation and urbanization over land surface temperature: Case study of Jaipur city. In Proceedings of the EARSeL Symposium, Paris, France, 31 May–3 June 2010; pp. 177–183.

- Mahaxay, M.; Arunpraparut, W.; Trisurat, Y.; Tangtham, N. MODIS: An alternative for updating land use and land cover in large river basin. Thai J. For. 2014, 33, 34–47.

- Zhong, B.; Zhang, Y.; Du, T.; Yang, A.; Lv, W.; Liu, Q. Cross-calibration of HJ-1/CCD over a desert site using Landsat ETM+ imagery and ASTER GDEM product. IEEE Trans. Geosci. Remote Sens. 2014, 52, 7247–7263.

- Devarakonda, R.; Palanisamy, G.; Wilson, B.E.; Green, J.M. Mercury: Reusable metadata management, data discovery and access system. Earth Sci. Inf. 2010, 3, 87–94.

- Chen, N.; Hu, C. A sharable and interoperable meta-model for atmospheric satellite sensors and observations. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2012, 5, 1519–1530.

- Yue, P.; Gong, J.; Di, L. Augmenting geospatial data provenance through metadata tracking in geospatial service chaining. Comput. Geosci. 2010, 36, 270–281.

- Gilman, J.A.; Shum, D. Making metadata better with CMR and MMT. In Proceedings of the Federation of Earth Science Information Partners 2016 Summer Meeting, Durham, NC, USA, 19–22 July 2016.

- Burgess, A.B.; Mattmann, C.A. Automatically classifying and interpreting polar datasets with Apache Tika. In Proceedings of the 2014 IEEE 15th International Conference on Information Reuse and Integration (IEEE IRI 2014), Redwood City, CA, USA, 13–15 August 2014; pp. 863–867.

- Cheng, C.; Ren, F.; Pu, G. Introduction for the Subdivision and Organization of Spatial Information; Science Press: Beijing, China, 2012.

- Lu, N.; Cheng, C.; Jin, A.; Ma, H. An index and retrieval method of spatial data based on GeoSOT global discrete grid system. In Proceedings of the 2013 IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Melbourne, Australia, 21–26 July 2013; pp. 4519–4522.

- Yan, J.; Chengqi, C. Dynamic representation method of target in remote sensed images based on global subdivision grid. In Proceedings of the 2014 IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Quebec City, QC, Canada, 13–18 July 2014; pp. 3097–3100.

- Happ, P.; Ferreira, R.S.; Bentes, C.; Costa, G.; Feitosa, R.Q. Multiresolution segmentation: A parallel approach for high resolution image segmentation in multicore architectures. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2010, 38, C7.

- Wang, L.; Cheng, C.; Wu, S.; Wu, F.; Teng, W. Massive remote sensing image data management based on HBase and GeoSOT. In Proceedings of the 2015 IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Milan, Italy, 26–31 July 2015; pp. 4558–4561.

- Vyverman, M.; De Baets, B.; Fack, V.; Dawyndt, P. Prospects and limitations of full-text index structures in genome analysis. Nucleic Acids Res. 2012, 40, 6993–7015.

- SolrCloud. Available online: https://cwiki.apache.org/confluence/display/solr/SolrCloud (accessed on 25 January 2017).

- Singh, S.; Liu, Y.; Khan, M. Exploring cloud monitoring data using search cluster and semantic media wiki. In Proceedings of the 2015 IEEE 12th International Conference on Ubiquitous Intelligence and Computing and 2015 IEEE 12th International Conference on Autonomic and Trusted Computing and 2015 IEEE 15th International Conference on Scalable Computing and Communications and Its Associated Workshops (UIC-ATC-ScalCom), Beijing, China, 10–14 August 2015; pp. 901–908.

- Kassela, E.; Konstantinou, I.; Koziris, N. A generic architecture for scalable and highly available content serving applications in the cloud. In Proceedings of the 2015 IEEE Fourth Symposium on Network Cloud Computing and Applications (NCCA), Munich, Germany, 11–12 June 2015; pp. 83–90.

- Bai, J. Feasibility analysis of big log data real time search based on HBase and Elasticsearch. In Proceedings of the 2013 Ninth International Conference on Natural Computation (ICNC), Shenyang, China, 23–25 July 2013; pp. 1166–1170.

- Baldoni, R.; Damore, F.; Mecella, M.; Ucci, D. A software architecture for progressive scanning of on-line communities. In Proceedings of the 2014 IEEE 34th International Conference on Distributed Computing Systems Workshops (ICDCSW), Madrid, Spain, 30 June–3 July 2014; pp. 207–212.

- Rathore, M.M.U.; Paul, A.; Ahmad, A.; Chen, B.W.; Huang, B.; Ji, W. Real-time big data analytical architecture for remote sensing application. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2015, 8, 4610–4621.

- Giachetta, R. A framework for processing large scale geospatial and remote sensing data in MapReduce environment. Comput. Graph. 2015, 49, 37–46.

- Wang, Y.; Liu, Z.; Liao, H.; Li, C. Improving the performance of GIS polygon overlay computation with MapReduce for spatial big data processing. Clust. Comput. 2015, 18, 507–516.

- Wei, Y.; Qiu, J.; Karimi, H.R. Reliable output feedback control of discrete-time fuzzy affine systems with actuator faults. IEEE Trans. Circuits Syst. I Regul. Pap. 2017, 64, 170–181.

- Wei, Y.; Qiu, J.; Karimi, H.R.; Wang, M. Model reduction for continuous-time Markovian jump systems with incomplete statistics of mode information. Int. J. Syst. Sci. 2014, 45, 1496–1507.

- Wei, Y.; Qiu, J.; Karimi, H.R.; Wang, M. New results on H∞ dynamic output feedback control for Markovian jump systems with time-varying delay and defective mode information. Optim. Control Appl. Methods 2014, 35, 656–675.

- Wei, Y.; Qiu, J.; Karimi, H.R.; Wang, M. Filtering design for two-dimensional Markovian jump systems with state-delays and deficient mode information. Inf. Sci. 2014, 269, 316–331.

- Wei, Y.; Wang, M.; Qiu, J. New approach to delay-dependent H∞ filtering for discrete-time Markovian jump systems with time-varying delay and incomplete transition descriptions. IET Control Theory Appl. 2013, 7, 684–696.

| Categories | ISO Metadata | HDF-EOS Metadata | CCRSDA Metadata |
|---|---|---|---|
| Metadata information | Creation | FILE_DATE | - |
|  | LastRevision | - | - |
| Image information | MD_Identifier | LOCALGRANULEID | - |
|  | TimePeriod_beginPosition | RangeBeginningDate + RangeBeginningTime | imagingStartTime |
|  | TimePeriod_endPosition | RangeEndingDate + RangeEndingTime | imagingStopTime |
|  | Platform | AssociatedPlatformShortName | satelliteId |
|  | Instrument | AssociatedInstrumentShortName | - |
|  | Sensor | AssociatedSensorShortName | sensorId |
|  | Datacenter | PROCESSINGCENTER | - |
|  | recStationId | STATION_ID | recStationId |
|  | spatialResolution | NADIRDATARESOLUTION | pixelSpacing |
|  | westBoundLongitude | WESTBOUNDINGCOORDINATE | productUpperLeftLong |
|  | eastBoundLongitude | EASTBOUNDINGCOORDINATE | productUpperRightLong |
|  | southBoundLatitude | SOUTHBOUNDINGCOORDINATE | productLowerLeftLat |
|  | northBoundLatitude | NORTHBOUNDINGCOORDINATE | productUpperLeftLat |
|  | centerLongitude | - | sceneCenterLong |
|  | centerLatitude | - | sceneCenterLat |
|  | scenePath | WRS_PATH | scenePath |
|  | sceneRow | WRS_ROW | sceneRow |
|  | referenceSystemIdentifier | PROJECTION_PARAMETERS | earthModel + mapProjection |
|  | cloudCoverPercentage | - | cloudCoverPercentage |
|  | imageQualityCode | - | overallQuality |
|  | processingLevel | DATA_TYPE | productLevel |
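The mapping table can be applied mechanically once each source record has been parsed into flat key/value pairs. The Python sketch below assumes that parsing step has already happened (no HDF-EOS or XML parsing is shown), covers only a few rows of the table, and joins composite fields such as RangeBeginningDate + RangeBeginningTime with a `T` separator, which is an assumption rather than something the table specifies. ISO element names use the standard ISO 19115 spelling (e.g. `westBoundLongitude`); the function name `to_iso` and all sample values are hypothetical.

```python
from typing import Dict, List, Optional, Union

# A subset of the mapping table: ISO element -> source key(s).
# A list means the ISO value is assembled from several source fields.
Mapping = Dict[str, Union[str, List[str]]]

HDF_EOS_TO_ISO: Mapping = {
    "MD_Identifier": "LOCALGRANULEID",
    "TimePeriod_beginPosition": ["RangeBeginningDate", "RangeBeginningTime"],
    "Platform": "AssociatedPlatformShortName",
    "westBoundLongitude": "WESTBOUNDINGCOORDINATE",
    "processingLevel": "DATA_TYPE",
}

CCRSDA_TO_ISO: Mapping = {
    "TimePeriod_beginPosition": "imagingStartTime",
    "Platform": "satelliteId",
    "westBoundLongitude": "productUpperLeftLong",
    "processingLevel": "productLevel",
}


def to_iso(record: Dict[str, str], mapping: Mapping,
           joiner: str = "T") -> Dict[str, Optional[str]]:
    """Rename source fields to ISO names; None marks an unavailable value."""
    iso: Dict[str, Optional[str]] = {}
    for iso_field, source in mapping.items():
        if isinstance(source, list):
            # Composite value, e.g. date + time -> one timestamp string (assumed format).
            parts = [record[key] for key in source if key in record]
            iso[iso_field] = joiner.join(parts) if len(parts) == len(source) else None
        else:
            iso[iso_field] = record.get(source)
    return iso


# Hypothetical, already-parsed HDF-EOS granule metadata.
granule = {
    "LOCALGRANULEID": "LC8-SCENE-0001",
    "RangeBeginningDate": "2013-04-11",
    "RangeBeginningTime": "02:55:10",
    "AssociatedPlatformShortName": "Landsat-8",
    "WESTBOUNDINGCOORDINATE": "115.92",
    "DATA_TYPE": "L1T",
}
print(to_iso(granule, HDF_EOS_TO_ISO)["TimePeriod_beginPosition"])  # 2013-04-11T02:55:10
```

Fields marked "-" in the table simply have no entry in the corresponding mapping, so they are left out of the output instead of being filled with a guessed value.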

| Index Type | Field | Field Type | Indexed |
|---|---|---|---|
| static | ID | string | true |
|  | CAS.ProductId | string | true |
|  | CAS.ProductName | string | true |
|  | CAS.ProductTypeName | string | true |
|  | CAS.ProductTypeId | string | true |
|  | CAS.ProductReceivedTime | date | true |
|  | CAS.ProductTransferStatus | string | true |
|  | CAS.ReferenceOriginal | string | true |
|  | CAS.ReferenceDatastore | string | true |
|  | CAS.ReferenceFileSize | long | true |
|  | CAS.ReferenceMimeType | string | true |
| dynamic | * | string | true |
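The static/dynamic split of the index fields can be mimicked when building the document that gets indexed. In the sketch below, a few of the static fields are coerced to their declared types, and every other metadata key falls under the dynamic `*` rule and is stored as a string. It is a hypothetical illustration only: `build_index_doc` is not an OODT or Solr API, the product and metadata dictionaries are assumed inputs, and nothing is sent to Solr.

```python
from typing import Any, Callable, Dict

# A subset of the static fields and their declared index types
# ("long" maps to Python int here; "string" maps to str).
STATIC_FIELDS: Dict[str, Callable[[Any], Any]] = {
    "ID": str,
    "CAS.ProductId": str,
    "CAS.ProductName": str,
    "CAS.ReferenceFileSize": int,
}


def build_index_doc(product: Dict[str, Any], metadata: Dict[str, Any]) -> Dict[str, Any]:
    """Assemble one flat index document from product info and ISO metadata."""
    doc: Dict[str, Any] = {}
    for field, cast in STATIC_FIELDS.items():
        if field in product:
            doc[field] = cast(product[field])
    # Every remaining key matches the dynamic "*" field and is stored as a string.
    for key, value in metadata.items():
        if key not in STATIC_FIELDS:
            doc[key] = str(value)
    return doc


doc = build_index_doc(
    {"ID": "p-001", "CAS.ProductName": "scene.tif", "CAS.ReferenceFileSize": "1048576"},
    {"Platform": "HJ-1A", "cloudCoverPercentage": 12.5},
)
print(doc)
```

Keeping the typed fields in one small table such as `STATIC_FIELDS` mirrors the schema, so adding a typed field is a one-line change while unforeseen metadata keys are still indexed through the catch-all rule.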

| Sub-Center | Satellite | Data Type | Volume of Images | Image Format |
|---|---|---|---|---|
| 1 | Landsat 8 | OLI_TIRS | 310 | GeoTIFF |
| 2 | HJ-1A | HSI | 350 | HDF5 |
| 2 | CBERS-1/2 | CCD | 270 | GeoTIFF |
| 3 | Landsat 7 | ETM+ | 450 | GeoTIFF |
| 4 | Landsat 1-5 | MSS | 260 | GeoTIFF |
| 5 | HJ-1A/B | CCD | 710 | GeoTIFF |
| 6 | Landsat 5 | TM | 430 | GeoTIFF |
| 7 | FY-3A/B | VIRR | 450 | HDF5 |
| 8 | ASTER | L1T | 150 | HDF4 |

| Satellite | Data Type | Volume of Images Stored in Sub-Center | Volume of Images Integrated by Main Center | Average Transfer Rate (MB/s) |
|---|---|---|---|---|
| Landsat 8 | OLI_TIRS | 310 | 310 | 9.8 |
| HJ-1A | HSI | 350 | 350 | 10.1 |
| CBERS-1/2 | CCD | 270 | 270 | 11.7 |
| Landsat 7 | ETM+ | 450 | 450 | 10.5 |
| Landsat 1-5 | MSS | 260 | 260 | 12.8 |
| HJ-1A/B | CCD | 710 | 710 | 9.9 |
| Landsat 5 | TM | 430 | 430 | 13.8 |
| FY-3A/B | VIRR | 450 | 450 | 11.2 |
| ASTER | L1T | 150 | 150 | 10.8 |
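The integration results in the table above can be summarized with simple arithmetic. The snippet below copies the table's values, checks that every sub-center's images were fully integrated, and computes the unweighted mean of the per-satellite average transfer rates. The table does not state the unit of the volume columns, so the totals are left unitless, and the unweighted mean is only indicative because it ignores how much data each sub-center transferred.

```python
# (satellite, volume stored in sub-center, volume integrated by main center,
#  average transfer rate in MB/s), copied from the table above
ROWS = [
    ("Landsat 8", 310, 310, 9.8),
    ("HJ-1A", 350, 350, 10.1),
    ("CBERS-1/2", 270, 270, 11.7),
    ("Landsat 7", 450, 450, 10.5),
    ("Landsat 1-5", 260, 260, 12.8),
    ("HJ-1A/B", 710, 710, 9.9),
    ("Landsat 5", 430, 430, 13.8),
    ("FY-3A/B", 450, 450, 11.2),
    ("ASTER", 150, 150, 10.8),
]

total_stored = sum(stored for _, stored, _, _ in ROWS)
total_integrated = sum(integrated for _, _, integrated, _ in ROWS)
complete = all(stored == integrated for _, stored, integrated, _ in ROWS)
mean_rate = sum(rate for *_, rate in ROWS) / len(ROWS)  # unweighted mean
fastest = max(ROWS, key=lambda row: row[3])[0]

print(total_stored, total_integrated, complete)  # 3380 3380 True
print(f"{mean_rate:.2f} MB/s, fastest: {fastest}")  # 11.18 MB/s, fastest: Landsat 5
```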

| Satellite | Data Type | Volume of Metadata | Metadata Format |
|---|---|---|---|
| Landsat 8 | OLI_TIRS | 896,981 | HDF-EOS |
| HJ-1A | HSI | 85,072 | Customized XML |
| CBERS-1/2 | CCD | 889,685 | Customized XML |
| Landsat 7 | ETM+ | 2,246,823 | HDF-EOS |
| Landsat 1-5 | MSS | 1,306,579 | HDF-EOS |
| HJ-1A/B | CCD | 2,210,352 | Customized XML |
| Landsat 5 | TM | 2,351,899 | HDF-EOS |
| FY-3A/B | VIRR | 2,343,288 | Customized HDF5-FY |
| ASTER | L1T | 2,951,298 | HDF-EOS |
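Summing the metadata volumes per format shows how the transformation workload is distributed across source formats. The snippet below only re-aggregates the values in the table: 15,281,977 records in total, of which 9,753,580 (nearly two thirds) are HDF-EOS. The total is in line with the 15-million upper end of the metadata volumes used in the retrieval timing table.

```python
from collections import Counter

# (satellite, data type, number of metadata records, metadata format),
# copied from the table above
METADATA = [
    ("Landsat 8", "OLI_TIRS", 896_981, "HDF-EOS"),
    ("HJ-1A", "HSI", 85_072, "Customized XML"),
    ("CBERS-1/2", "CCD", 889_685, "Customized XML"),
    ("Landsat 7", "ETM+", 2_246_823, "HDF-EOS"),
    ("Landsat 1-5", "MSS", 1_306_579, "HDF-EOS"),
    ("HJ-1A/B", "CCD", 2_210_352, "Customized XML"),
    ("Landsat 5", "TM", 2_351_899, "HDF-EOS"),
    ("FY-3A/B", "VIRR", 2_343_288, "Customized HDF5-FY"),
    ("ASTER", "L1T", 2_951_298, "HDF-EOS"),
]

by_format = Counter()
for _, _, count, fmt in METADATA:
    by_format[fmt] += count

total = sum(by_format.values())
for fmt, count in by_format.most_common():
    # Records per format and their share of all metadata records.
    print(f"{fmt:<20} {count:>10,} ({count / total:.1%})")
print(f"{'Total':<20} {total:>10,}")
```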

| Metadata Volume (Million) | Spatial Parameters | Query Time Frame: 1 Day | Query Time Frame: 1 Month | Query Time Frame: 6 Months |
|---|---|---|---|---|
| 1 | 1 GeoSOT Grid | 133 ms | 144 ms | 145 ms |
|  | 2 GeoSOT Grids | 139 ms | 144 ms | 151 ms |
|  | 4 GeoSOT Grids | 151 ms | 154 ms | 155 ms |
| 3 | 1 GeoSOT Grid | 211 ms | 213 ms | 215 ms |
|  | 2 GeoSOT Grids | 218 ms | 224 ms | 235 ms |
|  | 4 GeoSOT Grids | 220 ms | 239 ms | 261 ms |
| 5.5 | 1 GeoSOT Grid | 310 ms | 324 ms | 325 ms |
|  | 2 GeoSOT Grids | 340 ms | 359 ms | 375 ms |
|  | 4 GeoSOT Grids | 365 ms | 398 ms | 421 ms |
| 7.5 | 1 GeoSOT Grid | 340 ms | 350 ms | 355 ms |
|  | 2 GeoSOT Grids | 401 ms | 405 ms | 421 ms |
|  | 4 GeoSOT Grids | 457 ms | 476 ms | 510 ms |
| 10 | 1 GeoSOT Grid | 480 ms | 495 ms | 525 ms |
|  | 2 GeoSOT Grids | 566 ms | 589 ms | 603 ms |
|  | 4 GeoSOT Grids | 650 ms | 668 ms | 691 ms |
| 15 | 1 GeoSOT Grid | 613 ms | 655 ms | 681 ms |
|  | 2 GeoSOT Grids | 850 ms | 856 ms | 861 ms |
|  | 4 GeoSOT Grids | 965 ms | 994 ms | 1110 ms |
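The query-time table can also be read as a scaling test. The snippet below copies the 1-day column and compares the growth in query time with the 15-fold growth in metadata volume (from 1 to 15 million records). It only re-expresses the reported numbers and assumes nothing about the cluster beyond them; the helper name `column` is hypothetical.

```python
from typing import List

# 1-day query times (ms) for 1, 2 and 4 GeoSOT grids, keyed by metadata
# volume in millions, copied from the table above.
ONE_DAY_MS = {
    1: (133, 139, 151),
    3: (211, 218, 220),
    5.5: (310, 340, 365),
    7.5: (340, 401, 457),
    10: (480, 566, 650),
    15: (613, 850, 965),
}
GRIDS = (1, 2, 4)

smallest, largest = min(ONE_DAY_MS), max(ONE_DAY_MS)
volume_ratio = largest / smallest  # 15.0


def column(i: int) -> List[int]:
    """Query times for grid setting i, ordered by increasing metadata volume."""
    return [ONE_DAY_MS[v][i] for v in sorted(ONE_DAY_MS)]


ratios = {}
for i, grids in enumerate(GRIDS):
    col = column(i)
    ratios[grids] = col[-1] / col[0]
    increasing = all(a < b for a, b in zip(col, col[1:]))
    print(f"{grids} grid(s): x{ratios[grids]:.2f} time for "
          f"x{volume_ratio:.0f} data, strictly increasing: {increasing}")
```

For the 1-day frame, query time grows by a factor of about 4.61, 6.12, and 6.39 for 1, 2, and 4 grids while the metadata volume grows 15-fold, i.e., sublinearly over the measured range.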

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Fan, J.; Yan, J.; Ma, Y.; Wang, L. Big Data Integration in Remote Sensing across a Distributed Metadata-Based Spatial Infrastructure. Remote Sens. 2018, 10, 7. https://doi.org/10.3390/rs10010007
