1. Introduction

In recent years, solar energy from rooftop photovoltaic (PV) systems has grown rapidly in the United States (US), thanks to decreasing installation costs, improved module efficiencies, innovative business models, and favorable policy support [1,2,3]. A large body of research has studied various aspects of rooftop solar PV installations, including but not limited to their design, siting, potential, environmental benefits, and performance [4,5,6]. However, solar installers, a pivotal driving force behind the proliferation of rooftop PV systems [7], have received relatively little attention. Have they done a proper job of installing rooftop systems? Which installers follow the best installation practices? What are the opportunities to improve the performance of PV installers? These questions are of particular importance to the solar installation industry and to policymakers seeking to pave the road ahead for solar energy [4]. In this paper, we aim to shed some light on these questions. Specifically, we benchmark the performance of PV installations and analyze the link between installation performance and installer characteristics.

Solar capacity build-up and electricity generation are the two immediate outcomes of PV installation. Both are widely-used measures to assess PV system performance by solar installers and policymakers. For a solar installer, its installed capacity is an important indicator of its customer base and competitiveness in the market. For policymakers, capacity is used to design renewable energy quota policies and solar incentive policies in many regions such as mainland China, Hong Kong, and California [

8,

9,

10]. The electricity generation is the total amount of electricity generated by the system in reality. Real-time electricity generation is a complex physical process and hinges on a number of factors. Some are environmental factors exogenous to the installers [

11], including but not limited to the solar irradiance, the ambient temperature, the humidity, and the wind speed [

12,

13,

14]. The other factors pertain to the installation process, such as choice of module technology, mounting rack, and orientation of the system [

15,

16,

17]. In this paper, we would like to employ an approach to assess the PV installation performance based on environmental factors, capacity, and electricity generation simultaneously. To this end, our empirical strategy draws on data envelopment analysis (DEA). DEA is a non-parametric mathematical programming approach that is particularly tailored for the evaluation of multi-input multi-output processes. In recent years, DEA has been demonstrated as a powerful tool to evaluate the performance of electricity generation facilities, such as hydro power plants, thermal power plants, and wind farms [

18,

19,

20,

21]. However, its application to solar PV systems is still a nascent research topic [

22,

23,

24]. In this study, we incorporate the major determinants of PV installation into consideration and map the PV installation as a multi-input multi-output process as in literature [

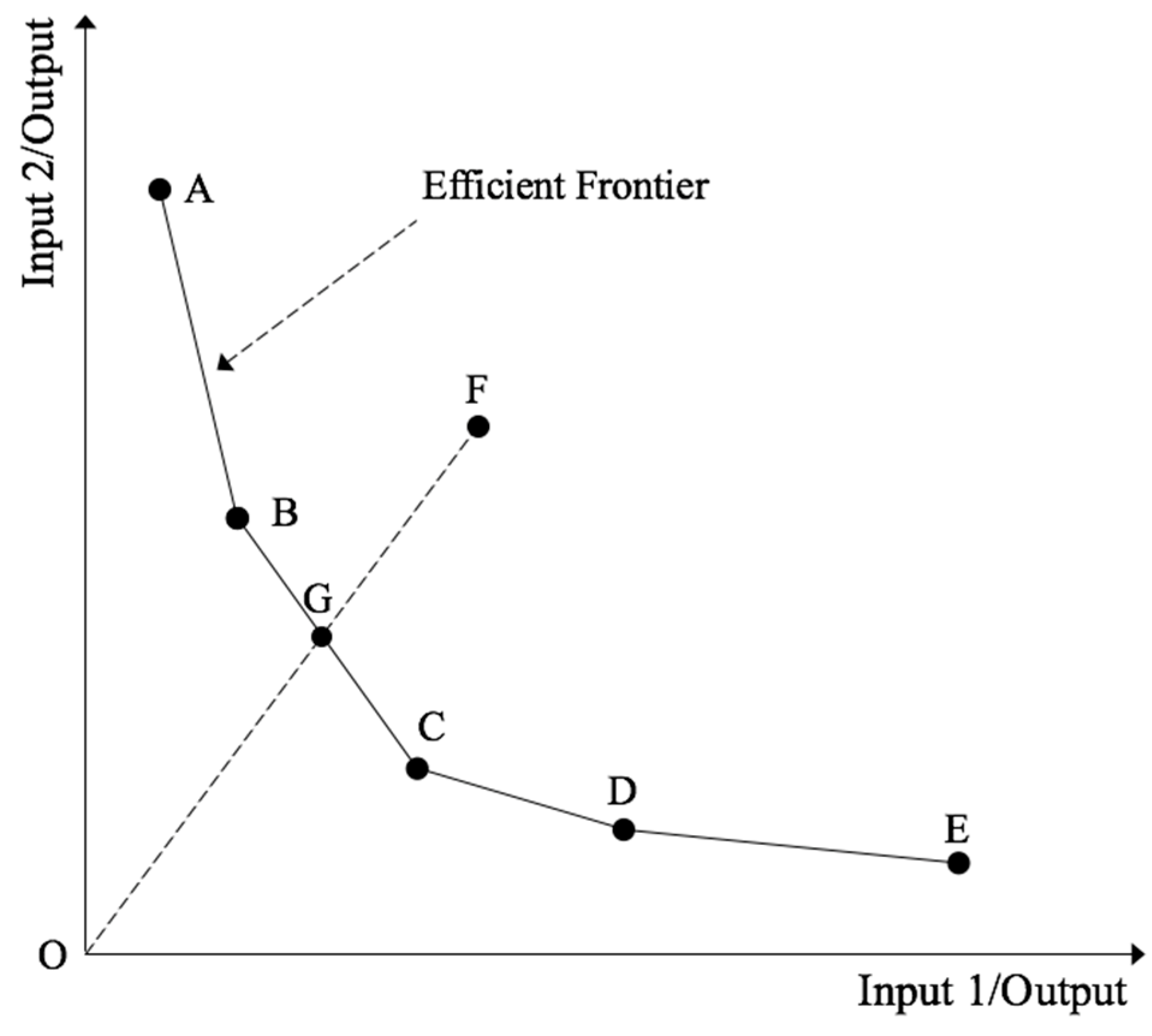

23]. The model allows us to go beyond the conventional output-to-input ratio analysis which typically employs a single output such as capacity and a single input such as cost. Existing DEA study has examined the performance of solar installations and found diverse efficiencies among the systems [

23]. However, it is unclear what causes the divergence in performance. This paper analyzes the determinant factors of PV system performance through the installer perspective. Specifically, this paper focuses on analyzing the relationship between installer characteristics and PV system performance.
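As background for the DEA approach described above, the block below gives the standard input-oriented CCR (constant returns to scale) envelopment model. It is an illustration only; the specification adopted in this study, including which variables serve as inputs and outputs, is the one described in Section 3 and may differ. The symbols are introduced for this sketch: each of the n PV systems is a decision-making unit j with inputs x_{ij} (i = 1, ..., m) and outputs y_{rj} (r = 1, ..., s), and o indexes the system being evaluated.

```latex
\begin{aligned}
\theta_o^{*} = \min_{\theta,\,\lambda}\ & \theta \\
\text{s.t.}\ & \sum_{j=1}^{n} \lambda_j x_{ij} \le \theta\, x_{io}, && i = 1,\dots,m,\\
& \sum_{j=1}^{n} \lambda_j y_{rj} \ge y_{ro}, && r = 1,\dots,s,\\
& \lambda_j \ge 0, && j = 1,\dots,n.
\end{aligned}
```

A system is rated efficient when \theta_o^{*} = 1; a value below 1 means that a nonnegative combination of peer systems produces at least the same outputs using only a fraction \theta_o^{*} of its inputs. With a single input and a single output, the optimum reduces to \theta_o^{*} = (y_o/x_o) / \max_j (y_j/x_j), i.e., the conventional output-to-input ratio normalized by the best performer, which is why DEA can be read as a multi-input multi-output generalization of ratio analysis.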

Among all US states, California leads in both installed solar capacity and solar generation. At the end of November 2015, the state had a total solar electricity generating capacity of 9976 MW, of which 87% came from solar PV and the remaining 13% from solar thermal [25]. In 2014, California also became the first state to generate more than 5% of its annual utility-scale electricity from utility-scale solar power [26]. The rapid development of solar energy in California has been supported by various policy instruments [27]. The most important include renewable portfolio standards [28], tax credits [29], cash incentives [30], and net energy metering [31]. These policies target various entities in the solar value chain. At the same time, the development of solar PV energy has fostered a booming solar installation industry in California [7].

In this study, we apply the DEA method to evaluate a dataset of 1035 rooftop PV systems mounted by 213 installers in California. The data are obtained from the California Solar Initiative (CSI), the most prominent solar incentive program in the state [32]. We find that performance varies widely across PV systems installed by different installers. Throughout the paper, we refer to systems installed in a vertically integrated manner (e.g., the installer is also the manufacturer of the modules) as vertically integrated systems, and to all other systems as independent systems. Note that independent systems include systems mounted by vertically integrated installers with modules from a separate manufacturer. We find that vertically integrated PV systems are, on average, significantly more efficient than independent systems. This finding supports the case for vertical integration in the solar installation industry. We also find that the size of a PV installer, defined as the number of installations completed, does not necessarily translate into better installation performance. Instead, some of the largest installers in the market perform far below the industry average. Furthermore, the geographic diversification of a PV installer’s operations is significantly and negatively associated with the installer’s installation performance. Finally, PV systems installed by subsidiaries of oil companies such as BP and Chevron are very inefficient, which might relate to these companies’ withdrawal from the solar business. We discuss the reasons behind our empirical findings in detail and elaborate on the implications for policymakers.

The remainder of the paper is structured as follows. Section 2 describes the solar installation industry in California. Section 3 presents the empirical methods. Section 4 describes the data sample. Section 5 summarizes the results. Section 6 concludes with further discussion and policy implications.

2. Solar Installation Industry in California

The solar installation industry sits downstream in the solar energy value chain and bridges module manufacturers and end customers. A typical installer’s service includes the following components: system design, site condition assessment, system installation, interconnection to the power grid, system activation and testing, and, finally, routine maintenance. Beyond these basic functions, installers may also integrate other functions, such as financing, into their service [7].

To take a closer look at the installation industry, we extract and compile data from the CSI working dataset [33]. The CSI is the most prominent solar incentive program in the state and is administered by the California Public Utilities Commission (CPUC). The CSI collects and maintains the most comprehensive and detailed database on solar energy deployment in the state. As of January 2016, the database reports data on 173,690 systems with a total capacity of 2600 MW.

Table 1 documents a preliminary analysis of the installers. We identify 2965 installers in the database. For ease of presentation, we classify installers into five bins, ranging from small installers with no more than 5 systems to giant installers with more than 5000 systems. The results indicate that most installers are small, private companies or organizations serving their local area. In the dataset, 1849 installers, representing 62.36% of all installers in the industry, have mounted no more than 5 systems each. Collectively, these 1849 installers have developed a total of 3568 systems, which account for only 2.05% of all installations and 4.29% of total capacity in the dataset. In contrast, the five biggest installers in terms of number of systems developed have completed 56,730 installations, accounting for almost one third of all installations but only 17.98% of total capacity. For the largest installers, the ratio of their share of capacity to their share of installations is less than one, meaning that their capacity per installation is below the industry average. Therefore, if the largest installers perform better than smaller installers, their outperformance is not likely to be caused by economies of scale in developing a single PV project. We also observe a sharp contrast in the geographical distribution of operations. The smallest installers on average do business in 1.25 counties and 1.64 ZIP code areas, while the biggest installers on average have operations spread over 39.60 counties and 712.80 ZIP code areas. In sum, Table 1 indicates that installers are very diverse in terms of business scale and geographical outreach.
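The shares quoted above can be checked with a few lines of arithmetic. The sketch below recomputes the installation shares from the reported counts and takes the capacity shares as reported, since per-bin capacity totals are not listed in the text; the variable names are illustrative.

```python
# Counts reported in the text for the CSI working dataset (January 2016).
TOTAL_INSTALLERS = 2965
TOTAL_SYSTEMS = 173_690


def pct(part: float, whole: float) -> float:
    """Return `part` as a percentage of `whole`."""
    return 100.0 * part / whole


# Smallest bin: installers with no more than 5 systems.
small_installer_share = pct(1849, TOTAL_INSTALLERS)  # % of installers
small_system_share = pct(3568, TOTAL_SYSTEMS)  # % of installations
small_capacity_share = 4.29  # % of capacity, as reported

# Five largest installers by number of systems.
top5_system_share = pct(56_730, TOTAL_SYSTEMS)
top5_capacity_share = 17.98  # % of capacity, as reported

# Capacity share divided by installation share: a value below 1 means the
# bin's average system is smaller than the industry-wide average system.
small_ratio = small_capacity_share / small_system_share
top5_ratio = top5_capacity_share / top5_system_share

print(f"small: {small_installer_share:.2f}% of installers, "
      f"{small_system_share:.2f}% of systems, ratio {small_ratio:.2f}")
print(f"top five: {top5_system_share:.2f}% of systems, ratio {top5_ratio:.2f}")
```

Running it gives an installation share of about 32.66% for the five largest installers with a capacity-to-installation ratio of about 0.55, and a ratio of about 2.09 for the smallest bin, consistent with the statement that the largest installers build smaller-than-average systems.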

4. Data

We collect data on rooftop PV systems from two CSI datasets: the CSI working dataset and the CSI measured production dataset [33]. The working dataset reports the characteristics of all solar PV installations that have been approved for grid connection within the territories of the three investor-owned utilities (i.e., Pacific Gas and Electric, San Diego Gas and Electric, and Southern California Edison). For each solar installation, we observe the location (city, county, and ZIP code), the capacity, the application date, the installer name, the model and manufacturer of the PV modules, and the number of modules. For a subset of the PV installations in the working dataset, CSI reports monthly production data in the measured production dataset. Under CSI rules, incentives for large systems are based on actual performance; therefore, the electricity generation of eligible systems is metered and reported to CSI monthly. By January 2016, a total of 157,802 system-month observations had been entered into the production dataset. For the purpose of our study, we extract the total cost and monthly production of the PV systems from the production dataset. To protect the privacy of system owners, CSI has removed the owners’ names and addresses from the dataset. However, the working and production data can be matched through each system’s CSI application number. We have attached the matched CSI data as supplementary material.
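The matching step described above amounts to an inner join on the CSI application number. The minimal sketch below assumes both datasets have been loaded as lists of dictionaries; the field names (`application_number`, `installer`, `capacity_kw`, `month`, `kwh`) and the sample records are hypothetical and do not reflect the actual CSI column names.

```python
from typing import Dict, List


def match_by_application(working: List[dict], production: List[dict]) -> List[dict]:
    """Inner-join monthly production records to system attributes.

    Both inputs are keyed by the hypothetical field "application_number".
    """
    # Index the working data so each production record is matched in O(1).
    systems: Dict[str, dict] = {row["application_number"]: row for row in working}
    matched = []
    for record in production:
        system = systems.get(record["application_number"])
        if system is None:
            continue  # production record with no counterpart in the working data
        matched.append({**system, **record})
    return matched


working = [
    {"application_number": "APP-1", "installer": "Installer A", "capacity_kw": 100.0},
    {"application_number": "APP-2", "installer": "Installer B", "capacity_kw": 50.0},
]
production = [
    {"application_number": "APP-1", "month": "2013-01", "kwh": 12000.0},
    {"application_number": "APP-3", "month": "2013-01", "kwh": 8000.0},
]
rows = match_by_application(working, production)  # only APP-1 survives the join
```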

To assess the performance of the solar systems, we need to restrict the sample to a specific time period and measure the performance of all systems during that period. Because weather conditions are seasonal, PV performance should be measured over at least one year. Furthermore, a careful examination of the production data shows that the production of different systems may not be recorded on the same date: some systems are measured on the first day of each month, while many others are measured midway through the month. Given these two considerations, we restrict our production data to the period from 1 January 2013 to 31 December 2013. This period retains an adequate number of systems; extending it into 2012 or 2014 would force us to trim the sample significantly. We obtain a final sample of 1035 PV installations, whose geographical distribution is plotted in Figure 2, similar to [23]. The figure shows that the majority of the commercial systems are located along the coast and around densely populated areas such as the Bay Area, Los Angeles, and San Diego. There are few installations near the eastern and northern state borders.
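One way to implement the restriction to calendar year 2013 is sketched below. It assumes, as a simplification not stated in the text, that each meter reading has already been attributed to a calendar month and that a system is kept only if it reports all twelve months of 2013; the record layout and system identifiers are hypothetical.

```python
from collections import defaultdict
from typing import Dict, Iterable, Set, Tuple

# Hypothetical record layout: (application_number, year, month, production_kwh).
Record = Tuple[str, int, int, float]


def systems_with_full_year(records: Iterable[Record], year: int = 2013) -> Set[str]:
    """Return the application numbers that report all 12 months of `year`."""
    months_seen: Dict[str, Set[int]] = defaultdict(set)
    for app, rec_year, month, _kwh in records:
        if rec_year == year:
            months_seen[app].add(month)
    return {app for app, months in months_seen.items() if len(months) == 12}


records = (
    [("A", 2013, m, 100.0) for m in range(1, 13)]    # complete 2013
    + [("B", 2013, m, 100.0) for m in range(1, 12)]  # December missing
    + [("C", 2012, m, 100.0) for m in range(1, 13)]  # wrong year
)
print(systems_with_full_year(records))  # {'A'}
```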

Moreover, a PV system’s performance depends on its technology type (e.g., monocrystalline, polycrystalline, or thin-film). Conversion efficiency ranges from 13% for certain thin-film modules to more than 20% for high-performance monocrystalline modules [39]. It would therefore be of interest to know the technology type of each system. However, CSI does not report technology information, so we do not know the share of each technology in our sample. Nevertheless, there is evidence that monocrystalline modules together with building-integrated PV have accounted for over 80% of installations in the California residential sector since 2010 ([39], p. 34). In addition, the choice of technology is a decision made by the system owner or installer. Therefore, technology choice can be regarded as an endogenous factor in the assessment of installer performance.

We collect the solar irradiance data compiled by the National Renewable Energy Laboratory (NREL). Since no downloadable irradiance data are available after 2010, we use the solar irradiation data for 1998–2009 [40]. The data provide the monthly average daily solar irradiation at a 10-km resolution for the United States. We convert the irradiation data to irradiance as specified by the model. The data also give the ZIP code of each 10 × 10 km grid cell, so we assign irradiance to each PV system by matching ZIP codes.
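The ZIP-code matching and the irradiation-to-irradiance conversion can be sketched as follows. The text does not spell out the conversion, so this sketch assumes one simple convention: a daily irradiation H in kWh/m²/day corresponds to a 24-hour mean irradiance of 1000·H/24 W/m². It also assumes that when several grid cells share a ZIP code, their values are averaged. The cell values, ZIP codes, and application numbers are hypothetical.

```python
from collections import defaultdict
from statistics import mean
from typing import Dict, List, Tuple


def mean_irradiance_w_m2(daily_irradiation_kwh_m2: float) -> float:
    """Convert average daily irradiation (kWh/m^2/day) to 24-hour mean irradiance (W/m^2)."""
    return daily_irradiation_kwh_m2 * 1000.0 / 24.0


def irradiance_by_zip(cells: List[Tuple[str, float]]) -> Dict[str, float]:
    """Average the grid cells sharing a ZIP code, then convert to irradiance."""
    by_zip: Dict[str, List[float]] = defaultdict(list)
    for zip_code, irradiation in cells:
        by_zip[zip_code].append(irradiation)
    return {z: mean_irradiance_w_m2(mean(values)) for z, values in by_zip.items()}


# Hypothetical cell values (kWh/m^2/day) and system-to-ZIP assignments.
cells = [("94103", 5.9), ("94103", 6.1), ("92101", 4.8)]
zip_table = irradiance_by_zip(cells)
systems = {"APP-1": "94103", "APP-2": "92101"}
# Systems whose ZIP code has no grid cell are left unassigned.
system_irradiance = {app: zip_table[z] for app, z in systems.items() if z in zip_table}
```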

We obtain monthly average temperature data from California weather stations in the National Climatic Data Center database maintained by the National Oceanic and Atmospheric Administration (NOAA) [41]. The data include the coordinates of each weather station. We approximate each solar installation’s coordinates based on its ZIP code. Then, for each installation, we derive the ambient temperature by inverse distance weighting interpolation [42]. The interpolation selects the three nearest weather stations and uses the inverse squared distances between the installation and these stations as weights to calculate a weighted average temperature for the installation.
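The interpolation described above can be sketched as follows. The text does not state how distances are measured, so this sketch assumes great-circle (haversine) distances on a spherical Earth of radius 6371 km; the station coordinates and temperatures in the example are hypothetical. The parameters `k=3` and `power=2.0` correspond to the three nearest stations and the inverse squared distance weights described in the text.

```python
import math
from typing import List, Tuple

Station = Tuple[float, float, float]  # (latitude, longitude, monthly mean temperature)


def haversine_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in kilometres on a spherical Earth."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * 6371.0 * math.asin(math.sqrt(a))


def idw_temperature(lat: float, lon: float, stations: List[Station],
                    k: int = 3, power: float = 2.0) -> float:
    """Inverse-distance-weighted temperature from the k nearest stations."""
    nearest = sorted(
        (haversine_km(lat, lon, s_lat, s_lon), temp) for s_lat, s_lon, temp in stations
    )[:k]
    for dist, temp in nearest:
        if dist == 0.0:
            return temp  # installation coincides with a station; avoid division by zero
    weights = [1.0 / dist ** power for dist, _ in nearest]
    return sum(w * temp for w, (_, temp) in zip(weights, nearest)) / sum(weights)


stations = [(0.0, 1.0, 10.0), (0.0, -1.0, 20.0), (1.0, 0.0, 30.0), (5.0, 5.0, 100.0)]
estimate = idw_temperature(0.0, 0.0, stations)
```

In the example, the three nearest stations are equidistant from the installation, so the estimate is their plain average (20.0), and the distant fourth station is ignored.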

Since we assess the performance in year 2013, all monthly data are aggregated or averaged to yield the annual measure.
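The annual aggregation can be sketched as below. Which variables are summed and which are averaged is an assumption of this sketch: production, an additive quantity, is summed over the twelve months, while temperature and irradiance are averaged with each month weighted by its number of days in 2013. An unweighted mean of the twelve monthly values would be an equally simple alternative. The function name and example values are hypothetical.

```python
import calendar
from typing import Dict, List, Sequence


def annual_measures(
    production_kwh: Sequence[float],
    temperature_c: Sequence[float],
    irradiance_w_m2: Sequence[float],
    year: int = 2013,
) -> Dict[str, float]:
    """Collapse twelve monthly values into annual measures for one system."""
    if not (len(production_kwh) == len(temperature_c) == len(irradiance_w_m2) == 12):
        raise ValueError("expected twelve monthly values per series")
    # calendar.monthrange returns (weekday of day 1, number of days in month).
    days: List[int] = [calendar.monthrange(year, m)[1] for m in range(1, 13)]
    total_days = sum(days)  # 365 for 2013

    def day_weighted_mean(values: Sequence[float]) -> float:
        return sum(d * v for d, v in zip(days, values)) / total_days

    return {
        "production_kwh": float(sum(production_kwh)),  # energy is additive: sum
        "temperature_c": day_weighted_mean(temperature_c),  # intensive: average
        "irradiance_w_m2": day_weighted_mean(irradiance_w_m2),
    }


annual = annual_measures([30_000.0] * 12, [15.0] * 12, [220.0] * 12)
```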

Table 2 shows the distribution of PV systems by installer. A total of 213 installers are present in the sample.

Table 3 shows the top 25 installers in the sample. Collectively, the top 25 installers build about 63% of all systems in the sample (655 out of 1035), and the top five installers build around one third (344 out of 1035).

Table 4 shows descriptive statistics for these variables. On average, the PV installations in the sample have a capacity of 215.15 kW, 1041.06 modules, a cost of $1381.89 thousand, and electricity generation of 402.98 MWh. The largest system in the sample has a capacity of 1523.26 kW, whereas the smallest has only 1.33 kW.

6. Discussion and Conclusions

The solar installation industry plays an important role in the rapid expansion of rooftop solar energy. Understanding how installers perform in building up solar capacity and generating solar electricity is critical for the future development of solar energy. Through the analysis of a large sample of rooftop PV installations, we have obtained the following key findings about rooftop solar installations in California:

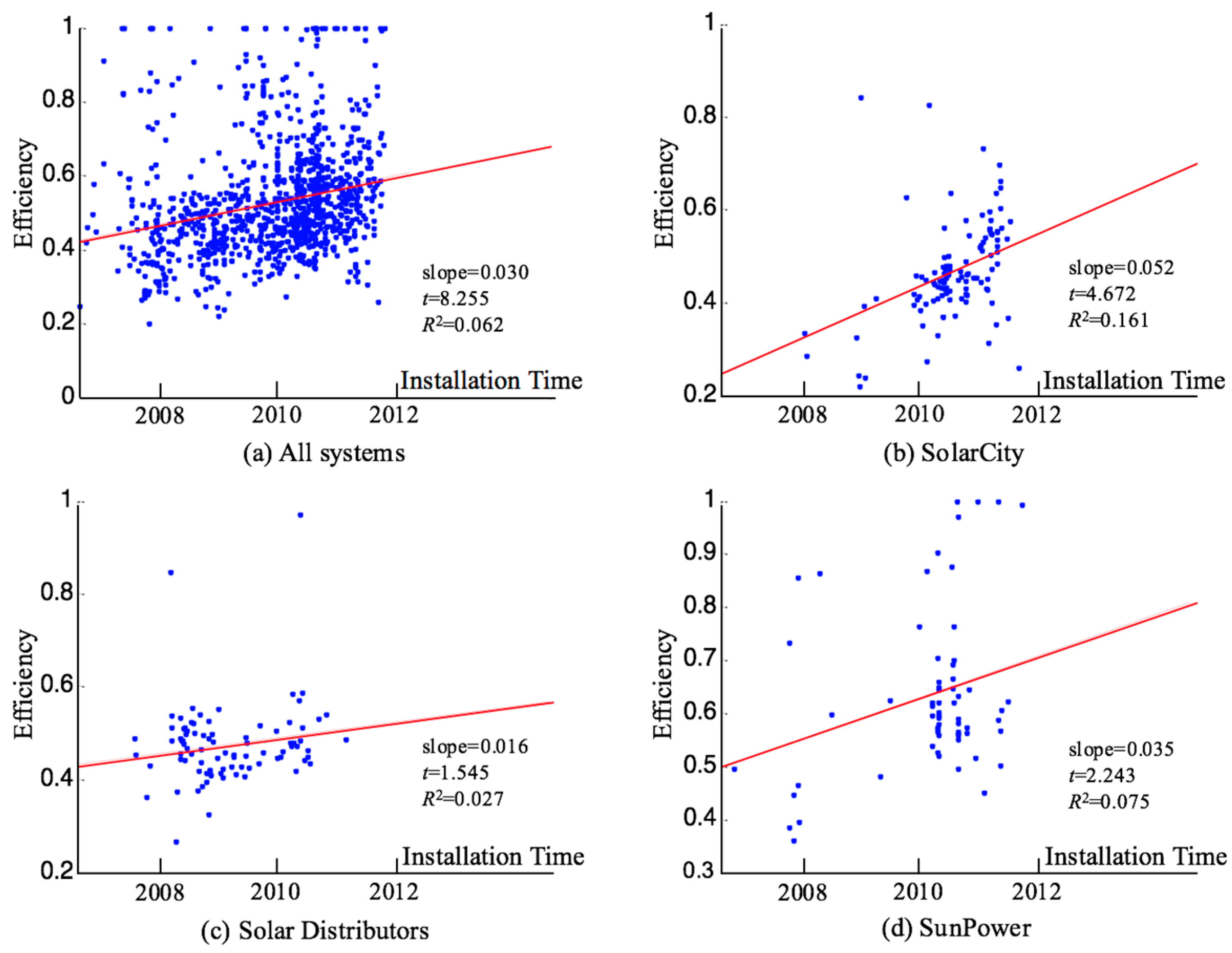

Larger installers on average do not develop rooftop PV systems in a more efficient way than smaller installers. In fact, some of the largest installers display significantly worse installation performance than the industry average.

Geographic diversification of an installer’s operations is significantly and negatively associated with performance of the installations.

Solar subsidiaries of oil companies like BP and Chevron have inferior installation performance.

PV systems installed in the vertically integrated way are significantly more efficient than other systems in the sample.

The findings of this study have significant implications for the solar installation industry and policymakers. For instance, the advantage of vertically integrated systems highlights the benefits of vertical integration in the solar installation industry. In fact, some recent deals show that major installers have started to seek opportunities to expand vertically along the supply chain. In 2014, SolarCity acquired the solar module manufacturer Silevo. The acquisition marked SolarCity’s transition from an independent installer to a vertically integrated firm with operations spanning manufacturing and installation. In the same year, Sunrun, originally a solar developer and financier, bought the residential solar installation division of REC Solar to extend its control over the solar supply chain. NRG Home Solar, the solar subsidiary of the energy giant NRG and a major installer in the US, stated that “vertical integration is the winning model” [52]. Several reasons may motivate vertical integration. First, vertical integration gives a solar installer more market power. As our analysis of CSI data shows, the installation market is highly fragmented, with many small, local installers. According to an interview with participants in the solar installation industry [45], the market for small residential rooftop systems has no barriers to entry, and even a one-person installer can handle the job. For commercial projects, the main barrier is capital rather than technology. In such a fragmented market with low barriers to entry, vertical integration can increase an installer’s market power. Second, for manufacturers entrenched in the module market, where cut-throat competition has driven some companies out of business, vertical integration into the installation business provides a stepping stone into a rapidly growing market. Hence, expansion into the downstream installation business is a very plausible move. We expect that consolidation of the solar industry through vertical integration of manufacturing and installation will improve the industry-wide efficiency of rooftop solar installations. Policymakers should accommodate the trend toward vertical integration in the installation industry and try to remove regulatory and policy barriers to the industry’s vertical consolidation.

The underperformance of some large and geographically diversified installers should alert both the installers themselves and policymakers. In the past, large and geographically diversified installers such as SolarCity prioritized market growth in their competitive strategy and, consequently, may not have paid sufficient attention to installation efficiency. This growth-oriented strategy has brought them a rapidly growing market share but has also cost them dearly, since maintaining a high growth rate requires significant investments in infrastructure, sales, and administration. The trend has started to change, as installers undergo a strategic pivot from growth to cash generation. For example, in a recent shareholder letter [53], SolarCity made the following statement regarding the company’s strategy: “going forward we are focusing our strategy on cost reductions and cash flow. Though we expect our deployments to grow in 2016 we are not targeting the same growth rates that have gotten us to our current scale going forward. Specifically, it is our goal to achieve positive cash flow by 2016 year-end.” We expect other major installers to follow suit, and such a shift in focus will lead large and geographically diversified installers to make greater efforts to improve installation efficiency. Policymakers may play a more proactive role in facilitating the pivot from growth to efficiency. One potential policy instrument is to make installers’ historical installation performance more transparent and visible to customers. While the raw data on solar PV installations have been published through the CSI, the data in their current format remain opaque to the general public. Enhancing the visibility of installation performance data to customers can put more peer pressure on installers to improve installation performance. In addition, the current CSI program assigns incentives based on system size, customer class, and performance and installation factors. Policymakers may explore ways of introducing installer factors, such as capacity installed and solar electricity generated relative to cost and weather conditions, into incentive design.