2.1. Two-Stage Structure of R&BD Performance Measure

As Guan and Wang [17], Hsu and Hsueh [8], and Liu and Lu [18] noted, R&D performance evaluation focuses on how efficiently R&D inputs (researchers, budget, etc.) are allocated to secure R&D outputs (patents, papers, etc.). In addition, as Jeon and Lee [11] noted, R&BD focuses on how well the R&D outputs are commercialized, for example through profit or royalty generation (hereafter referred to as the BD outcome). Accordingly, the R&D inputs, R&D outputs, and BD outcomes are correlated. Moreover, from the performance evaluation perspective, fewer R&D inputs and higher R&D outputs are the preferred means of improving R&D performance, while fewer R&D outputs and higher BD outcomes are the preferred means of improving BD performance. In this respect, although allocating more R&D inputs can result in more R&D outputs, it will worsen R&D performance. Likewise, more R&D outputs can result in higher BD outcomes, but will worsen BD performance.

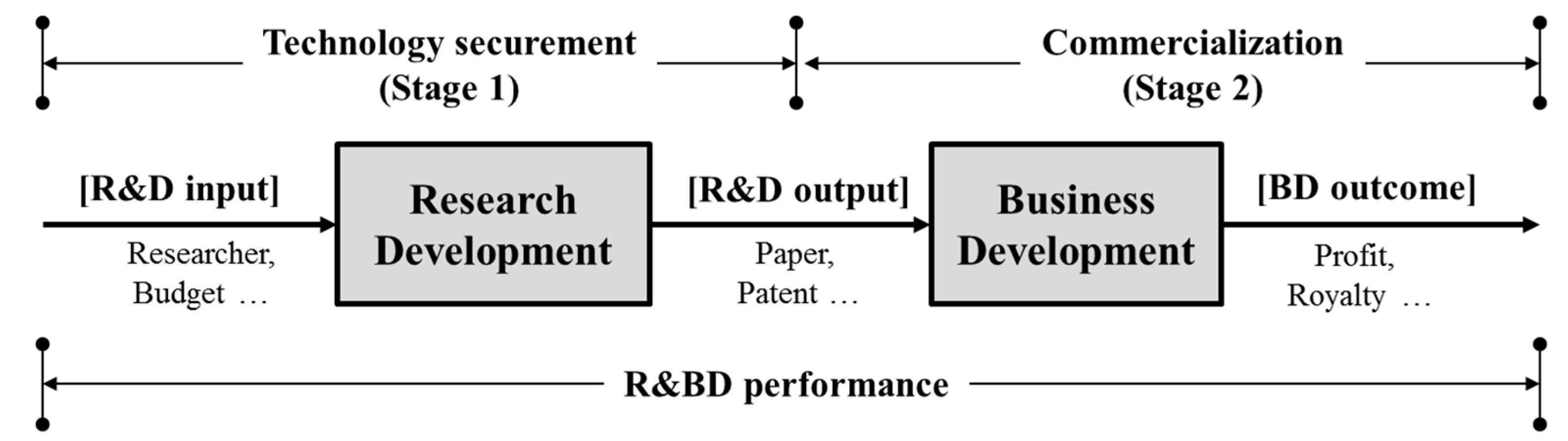

This study constructed an R&BD diagram, as shown in Figure 1, as a conceptual framework for interpreting the R&BD performance evaluation. As Figure 1 describes, R&BD focuses on the upstream technology securement and downstream commercialization sub-processes from the performance perspective. Specifically, the upstream technology securement sub-process from the R&D inputs to the R&D outputs (i.e., intermediate outputs in terms of the whole R&BD process) is the first stage (stage one). The stage one operation is related to such activities as researching, developing, learning by doing, or importing, and it is linked with the second stage (stage two), that is, the downstream commercialization sub-process from the R&D outputs to the BD outcomes. The stage two operation supports such economic activities as marketing and manufacturing. Note that the two sub-processes are related, rather than independent, since they are connected by the R&D outputs. This means that the intermediate R&D outputs have a double identity in R&BD: they are the outputs of the first sub-process and the inputs of the second sub-process (Guan and Chen [19]). In this sense, the integrated conceptual framework not only leads us to assign primary importance to the upstream indigenous R&D performance, but also reminds us to attach particular importance to the downstream commercialization performance in the economic sense.

Based on Figure 1, we argue that R&BD performance aims to increase R&D outputs at lower R&D inputs, and at the same time, increase BD outcomes for the given R&D outputs. Thus, the performance in stage one is evaluated by how efficiently the researchers or budgets are utilized to secure new technologies such as papers and patents, and the performance in stage two is evaluated by how well the new technologies from the technology securement are commercialized. In other words, stage one deals with the performance issue of R&D inputs and intermediate R&D outputs for the securement of technology, and stage two deals with the performance issue of intermediate R&D outputs and BD outcomes for commercialization.

R&BD concentrates on the transformation performance from the R&D inputs directly to the BD outcomes. Note that technology securement is only an upstream sub-process, so a better performance in technology securement alone cannot guarantee a better performance in R&BD. The R&BD performance can improve only when both sub-processes work well. Thus, a performance measure model must simultaneously describe the inclusive relationship between the R&BD process and the two sub-processes, as well as the relationship between the two sub-processes.

The term performance is used in a variety of ways. To evaluate R&BD performance, this study utilized DEA, which was initially developed by Charnes, Cooper, and Rhodes [4]. DEA and the stochastic frontier are two popular approaches to the evaluation of technical efficiency. DEA is a linear programming methodology that evaluates the relative efficiencies of DMUs that use a set of inputs to produce a set of outputs. The technique envelops the observed production possibilities to obtain an empirical frontier, and measures efficiency as the distance to the frontier. The primary advantage of this approach is its non-parametric nature: it does not require the strict distributional assumptions about the data and errors, such as normality, that parametric analysis imposes. DEA can handle multiple inputs and outputs, and does not need a predefined functional relationship between inputs and outputs.

Nevertheless, econometricians have argued that the approach produces biased estimates in the presence of measurement error and other statistical noise [20]. The principal reasons for economists' skepticism regarding the traditional standard DEA are as follows. Traditional standard DEA is a non-parametric method; no production, cost, or profit function is estimated from the data. This precludes evaluating marginal products, partial elasticities, marginal costs, or elasticities of substitution from a fitted model. Consequently, one cannot derive the usual conclusions about the technology that are possible from a parametric functional form. Most importantly, being non-statistical in nature, the linear programming (LP) solution of a DEA problem produces no standard errors and leaves no room for hypothesis testing. In traditional standard DEA, any deviation from the frontier is treated as inefficiency, and there is no provision for random shocks. By contrast, the far more popular stochastic frontier model explicitly allows the frontier to move up or down because of random shocks. Additionally, a parametric frontier yields elasticities and other measures of the technology that are useful for marginal analysis. As mentioned in Ray [21], the lack of standard errors for the DEA efficiency measures stems from the stochastic properties of inequality-constrained estimators not being well established in the econometric literature.

However, several lines of research address these weaknesses of the DEA model. Banker [22] conceptualized a convex and monotonic non-parametric frontier with a one-sided disturbance term, and showed that the DEA estimator converges in distribution with the maximum likelihood estimators. Simar [23] and Simar and Wilson [24,25] combined bootstrapping with DEA to generate empirical distributions of the efficiency measures of individual firms. This has generated a lot of interest in the profession, and one may expect the DEA model to incorporate the bootstrapping option in the near future. Simar and Wilson [26] described a data-generating process (DGP) that is logically consistent with the regression of non-parametric DEA efficiency estimates on some covariates in a second stage. They then proposed single and double bootstrap procedures; both permit valid inference, and the double bootstrap procedure improves statistical efficiency in the second-stage regression.

Whether traditional standard DEA yields realistic and accurate efficiency estimates may therefore remain controversial among researchers of parametric and non-parametric methods. Nevertheless, due to its advantages, DEA has been theoretically extended and very widely applied in various fields, including education (Ahn [27]; Beasley [28]), aviation (Schefczyk [29]), health care (Pina and Torres [30]), and inventory and manufacturing (Park et al. [31]; Park et al. [32]; Park et al. [33]). In addition, as mentioned earlier, quite a number of studies utilize the DEA model in evaluating R&D and R&BD performance. For more details on the DEA model, refer to Charnes et al. [4].
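The frontier idea described above is easiest to see in the special case of one input and one output, where the CCR linear program has a closed-form solution. The following is a minimal sketch under that assumption only; the function name and the data are hypothetical, and the general multi-input, multi-output case requires an LP solver rather than this shortcut.

```python
def ccr_efficiency_1x1(inputs, outputs):
    """CCR efficiency scores for DMUs with ONE input and ONE output.

    Under constant returns to scale the frontier is the ray through the
    origin with the largest output/input ratio, so each score is the DMU's
    own ratio divided by the best observed ratio.
    """
    ratios = [y / x for x, y in zip(inputs, outputs)]
    best = max(ratios)
    return [r / best for r in ratios]


# Hypothetical data: R&D budget (input) and patent count (output) for 3 DMUs.
budget = [10.0, 20.0, 40.0]
patents = [5.0, 8.0, 10.0]
scores = ccr_efficiency_1x1(budget, patents)
```

A score of 1 marks a DMU on the empirical frontier; a score below 1 is the proportion to which the input could be scaled down while still producing the observed output.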

However, the traditional standard DEA model treats each DMU as a black box, and cannot incorporate multiple sub-processes into an integrated measurement framework. Measuring each sub-process separately and independently with traditional standard DEA destroys the integrity of, and the linkage between, the sub-process performances. Thus, this study utilized the network DEA, which takes intermediate factors into account when evaluating performance. The network DEA model is an extension of the traditional standard DEA model in which each DMU is composed of two or more sub-DMUs (or internal processes) connected in series, as shown in Figure 1. The objective of the network DEA model is to evaluate the relative performance of each DMU and each of its sub-DMUs. In particular, this study applied the two-stage relational network DEA model introduced by Kao and Hwang [34] to evaluate R&BD performance. The main reason for applying this model is that it measures the technical performance of the R&BD process and of the two sub-processes in an integrated analytical framework, in the sense that the overall and sub-process performances are estimated simultaneously. In Figure 1, the R&D input and BD outcome are regarded as the input and output, respectively, and the R&D output is treated as an intermediate factor. For the performance evaluation, we assumed that all of the R&D inputs are positively related to R&D output production, and that all of the R&D outputs are positively related to BD outcome generation. The R&BD performance of DMU $k$, which has $m$ R&D inputs $x_i$ ($i = 1, 2, \ldots, m$), $t$ R&D outputs $l_q$ ($q = 1, 2, \ldots, t$), and $s$ BD outcomes $y_r$ ($r = 1, 2, \ldots, s$), is measured based on how efficiently the R&D inputs are used to produce the BD outcomes and, at the same time, how efficiently the R&D inputs are used to provide R&D outputs of high quality and quantity, and how efficiently the R&D outputs contribute to commercialization. Here, $u_r$ is the weight given to the $r$-th BD outcome, $v_i$ is the weight given to the $i$-th R&D input, $w_q$ is the weight given to the $q$-th R&D output, and $n$ is the number of DMUs.

Based on the two-stage relational network DEA model, the R&BD performance evaluation is calculated by Model (1), where $s_j^{*}$, $s_j^{(C1)*}$, and $s_j^{(C2)*}$ are the slack variables associated with the second, third, and fourth constraints, respectively. Model (1) is based on the input-oriented CCR (Charnes, Cooper, and Rhodes) model in DEA. As in Kao and Hwang's [34] two-stage relational network DEA model, the same weights were assigned to the R&D output variables, $l_q$, in both stages. The second constraint represents the R&BD performance of the $j$-th DMU. The third and fourth constraints represent the performance in the technology securement and commercialization stages, respectively. As in the two-stage relational network DEA model, the performance decomposition principle can be verified: the sum of the third and fourth constraints equals the second constraint, that is, $s_k^{*} = s_k^{(C1)*} + s_k^{(C2)*}$. Note that we utilized this performance decomposition principle to analyze the influences of technology securement and commercialization on R&BD performance. Let $v_i^{*}$, $u_r^{*}$, and $w_q^{*}$ be the optimal weights obtained from Model (1). The performance of the $k$-th DMU in R&BD, in the technology securement stage, and in the commercialization stage can then be expressed as Models (2)–(4), respectively.
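The displayed forms of Models (1)–(4) do not appear in this text. The following is a reconstruction rather than the authors' typeset equations: it assumes the standard multiplier form of Kao and Hwang's [34] model, follows the constraint order described above, and introduces a small positive lower bound $\varepsilon$ on the weights as an assumption.

```latex
% Model (1): reconstructed multiplier form (assumption, see lead-in)
\begin{aligned}
E_k^{*} = \max \quad & \sum_{r=1}^{s} u_r y_{rk} \\
\text{s.t.} \quad
 & \sum_{i=1}^{m} v_i x_{ik} = 1, \\
 & \sum_{r=1}^{s} u_r y_{rj} - \sum_{i=1}^{m} v_i x_{ij} + s_j = 0,
   && j = 1, \ldots, n, \\
 & \sum_{q=1}^{t} w_q l_{qj} - \sum_{i=1}^{m} v_i x_{ij} + s_j^{(C1)} = 0,
   && j = 1, \ldots, n, \\
 & \sum_{r=1}^{s} u_r y_{rj} - \sum_{q=1}^{t} w_q l_{qj} + s_j^{(C2)} = 0,
   && j = 1, \ldots, n, \\
 & u_r, v_i, w_q \ge \varepsilon, \qquad s_j, s_j^{(C1)}, s_j^{(C2)} \ge 0 .
\end{aligned}

% Models (2)-(4): overall, technology securement, commercialization
E_k^{*} = \frac{\sum_{r} u_r^{*} y_{rk}}{\sum_{i} v_i^{*} x_{ik}}, \qquad
E_k^{(C1)*} = \frac{\sum_{q} w_q^{*} l_{qk}}{\sum_{i} v_i^{*} x_{ik}}, \qquad
E_k^{(C2)*} = \frac{\sum_{r} u_r^{*} y_{rk}}{\sum_{q} w_q^{*} l_{qk}} .

% Consequences of the normalization \sum_i v_i^* x_{ik} = 1
s_k^{*} = 1 - E_k^{*}, \qquad
s_k^{(C1)*} = 1 - E_k^{(C1)*}, \qquad
s_k^{(C2)*} = E_k^{(C1)*} - E_k^{*} .
```

Under this reconstruction, adding the third and fourth constraints term by term cancels the $w_q l_{qj}$ terms and reproduces the second constraint with $s_j = s_j^{(C1)} + s_j^{(C2)}$, while the ratios in Models (3) and (4) multiply to the ratio in Model (2).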

If the performance score $E_k^{(C1)*}$ is 1, the DMU is considered to have achieved best-practice status in the technology securement stage. If the performance score $E_k^{(C2)*}$ is 1, the DMU is said to have obtained best-practice status in the commercialization stage. By contrast, $E_k^{(C1)*} < 1$ and $E_k^{(C2)*} < 1$ indicate underperformance in the technology securement and commercialization stages, respectively. Based on the performance decomposition principle in the two-stage relational network DEA model, we obtained two equations, $E_k^{*} = E_k^{(C1)*} \times E_k^{(C2)*}$ and $s_k^{*} = s_k^{(C1)*} + s_k^{(C2)*}$. The first signifies that the product of Models (3) and (4) equals Model (2), and the second signifies that the sum of the third and fourth constraints equals the second constraint. From Models (2)–(4), we substituted the corresponding expressions for $s_k^{*}$, $s_k^{(C1)*}$, and $s_k^{(C2)*}$, respectively. Since $s_k^{*} = s_k^{(C1)*} + s_k^{(C2)*}$, the underperformance of the $k$-th DMU can be distributed to the technology securement and commercialization stages in the proportions $s_k^{(C1)*}/s_k^{*}$ and $s_k^{(C2)*}/s_k^{*}$, respectively. Denoting these proportions by $IA^{(C1)}$ and $IA^{(C2)}$, we have $IA^{(C1)} = s_k^{(C1)*}/s_k^{*}$ and $IA^{(C2)} = s_k^{(C2)*}/s_k^{*}$ for any underperforming DMU ($s_k^{*} > 0$), so that $IA^{(C1)} + IA^{(C2)} = 1$.

The performance proportions are relative values, which can be utilized as evaluation ratings for the underperformance of R&D inputs in technology securement and the underperformance of R&D outputs in commercialization. For example, using the proportion $(1 - IA^{(C1)})$, we can determine how well the R&D inputs contribute to R&BD performance in terms of technology securement, and likewise, using the proportion $(1 - IA^{(C2)})$, we can determine how well the R&D outputs contribute to R&BD performance in terms of commercialization. A proportion that is close to 1 indicates a significant contribution to R&BD performance. In other words, since R&BD performance is calculated as the product of the performances in the technology securement and commercialization stages, it is more effective to improve R&BD performance by focusing on the process that has the lower contribution.
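The decomposition and the proportions can be illustrated numerically in the simplest setting. The sketch below assumes one R&D input, one R&D output, and one BD outcome per DMU, in which case the two-stage relational model reduces to ratios against the best observed ratio in each stage. It also assumes the normalization in which the weighted input of the evaluated DMU equals 1, so that the slacks are 1 − E(C1) and E(C1) − E. All names and data are hypothetical and are not taken from Table 1.

```python
def two_stage_decomposition(x_in, l_mid, y_out):
    """Stage scores, overall score, and slack proportions per DMU.

    Assumes ONE R&D input (x_in), ONE R&D output (l_mid), and ONE BD
    outcome (y_out) per DMU, so each stage score is a ratio relative to
    the best observed ratio in that stage.
    """
    best_c1 = max(l / x for x, l in zip(x_in, l_mid))   # best R&D output per input
    best_c2 = max(y / l for l, y in zip(l_mid, y_out))  # best BD outcome per R&D output
    rows = []
    for x, l, y in zip(x_in, l_mid, y_out):
        e_c1 = (l / x) / best_c1      # technology securement stage
        e_c2 = (y / l) / best_c2      # commercialization stage
        e = e_c1 * e_c2               # overall score is the product
        s_c1 = 1.0 - e_c1             # stage-one slack (assumes weighted input = 1)
        s_c2 = e_c1 - e               # stage-two slack under the same assumption
        s = s_c1 + s_c2               # equals 1 - e
        if s > 1e-12:
            ia_c1, ia_c2 = s_c1 / s, s_c2 / s
        else:
            ia_c1 = ia_c2 = None      # best-practice DMU: nothing to apportion
        rows.append({"E": e, "E_C1": e_c1, "E_C2": e_c2,
                     "IA_C1": ia_c1, "IA_C2": ia_c2})
    return rows


# Hypothetical data for three DMUs.
x_in = [10.0, 10.0, 20.0]
l_mid = [10.0, 5.0, 10.0]
y_out = [10.0, 5.0, 5.0]
rows = two_stage_decomposition(x_in, l_mid, y_out)
```

For the third DMU in this made-up data set, two thirds of the underperformance falls on technology securement, so $(1 - IA^{(C1)})$ is the smaller of the two contributions and that stage would be the first candidate for improvement.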

2.2. R&BD Performance Improvement

Since the approach of this study is based on DEA, we can provide information on how the underperforming DMUs can improve their performance in each stage. For a simple illustration of this approach, we calculated the R&BD performance and performance improvement using the sample data shown in Table 1. The sample data cover seven DMUs, each of which consumes two R&D inputs and yields one R&D output and one BD outcome. The R&BD performance results obtained by the proposed method, including $E_j^{(C1)}$, $E_j^{(C2)}$, $IA^{(C1)}$, and $IA^{(C2)}$, are summarized starting from the sixth column of Table 1. The R&BD performance is the product of the performances in the technology securement stage ($E_j^{(C1)}$) and the commercialization stage ($E_j^{(C2)}$). Only two DMUs, A and B, are best-practice DMUs in the technology securement stage, and two others, A and C, are best-practice DMUs in the commercialization stage. We see that DMU A, which has a relatively high performance in both stages, also achieved the highest R&BD performance. Although DMUs B and C are best-practice DMUs in the technology securement and commercialization stages, respectively, they cannot be best-practice DMUs in R&BD performance, because they have a relatively low performance in the commercialization and technology securement stages, respectively.

For example, DMU C has a relatively lower performance in the technology securement stage. However, it can achieve best-practice status by either (a) increasing its R&D output; or (b) decreasing its R&D inputs. If DMU C chooses to improve its performance by (a), it might no longer be a best-practice DMU in the commercialization stage because the R&D output, which is the input of the commercialization stage, has increased. Note that the R&D inputs and BD outcome are linked by the R&D output. Thus, in order to improve the performance in both stages, we focused on reducing the R&D inputs and increasing the BD outcome while maintaining the R&D output. In other words, we can calculate how much R&D inputs and BD outcome need to improve by using the R&D output given by Model (7), which is the envelopment-type version of Model (1). is obtained by , where is the q-th optimal weights for the BD outcome calculated in Model (1), is the efficiency score, and , , and are the dual variables.
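The idea of holding the R&D output fixed while reducing the R&D input and raising the BD outcome can be sketched for the single-input, single-output-per-stage case. This is not the envelopment Model (7) itself: it is a simplified illustration under constant returns to scale, with hypothetical names and data, in which the targets are read directly off the best observed ratio in each stage.

```python
def improvement_targets(x_in, l_mid, y_out, k):
    """Input reduction and outcome increase for DMU k, R&D output held fixed.

    Assumes one R&D input, one R&D output, and one BD outcome per DMU.
    Returns (reduction in x, increase in y) that would place DMU k on the
    frontier of both stages without changing its R&D output.
    """
    best_c1 = max(l / x for x, l in zip(x_in, l_mid))   # best R&D output per input
    best_c2 = max(y / l for l, y in zip(l_mid, y_out))  # best BD outcome per R&D output
    x_target = l_mid[k] / best_c1    # smallest input that supports the fixed output
    y_target = l_mid[k] * best_c2    # largest outcome the fixed output can support
    return x_in[k] - x_target, y_target - y_out[k]


# Hypothetical data for three DMUs; evaluate the third one (index 2).
x_in = [10.0, 10.0, 20.0]
l_mid = [10.0, 5.0, 10.0]
y_out = [10.0, 5.0, 5.0]
dx, dy = improvement_targets(x_in, l_mid, y_out, 2)
```

Because the R&D output is untouched, improving stage one in this way does not degrade the stage-two ratio, which is the concern raised for option (a) above.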

Through Model (7), we can obtain guidelines on how much the R&D inputs have to decrease or the BD outcomes have to increase in order to improve the performance of underperforming DMUs; the adjustment amounts indicate the reductions in inputs and the increases in outputs, respectively, that are needed. If the inefficient DMU G reduces each of its R&D inputs (X1 and X2) by 40, and increases its BD outcome (Q2) by 20, it can achieve best-practice status in R&BD performance with a score of 1. As we mentioned in Section 2.1, $(1 - IA^{(C1)})$ and $(1 - IA^{(C2)})$ are relative values that contribute to the R&BD performance, and it is more effective to improve the R&BD performance by preferentially improving the performance of the process with the smaller value. Consider DMU G, for which $(1 - IA^{(C1)})$ is smaller than $(1 - IA^{(C2)})$ in Table 1.

Table 2 shows the sensitivity analysis for DMU G, in which either the BD outcome is held fixed while the R&D inputs decrease in steps of 10, or the R&D inputs are held fixed while the BD outcome increases in steps of 10. We found no further performance improvement beyond the point where the R&D inputs have decreased by 40 and the BD outcome has increased by 20 from their existing values. This is consistent with the above-mentioned result that DMU G reduces its R&D inputs by 40 and increases its BD outcome by 20 to achieve best-practice status in R&BD performance. In addition, we saw that the R&BD performance improvement is greater when the R&D inputs improve than when the BD outcome improves, which suggests that it is more effective to improve R&BD performance by preferentially improving R&D input performance.
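A sensitivity analysis of this kind can be sketched by re-scoring one DMU after stepwise changes to its input and outcome. The example below again assumes one R&D input, one R&D output, and one BD outcome per DMU, and uses hypothetical data rather than the values in Tables 1 and 2; it is meant only to show why the score stops improving once the DMU reaches the frontier of a stage.

```python
def score_after_change(x_in, l_mid, y_out, k, dx=0.0, dy=0.0):
    """Overall two-stage score of DMU k after cutting its input by dx and
    raising its BD outcome by dy (R&D output held fixed).

    Assumes one input, one intermediate, and one outcome per DMU. The
    modified DMU stays in the reference set, so its score is capped at 1.
    """
    x_new = list(x_in)
    y_new = list(y_out)
    x_new[k] = x_in[k] - dx
    y_new[k] = y_out[k] + dy
    if x_new[k] <= 0:
        raise ValueError("input reduction must leave a positive input")
    best_c1 = max(l / x for x, l in zip(x_new, l_mid))
    best_c2 = max(y / l for l, y in zip(l_mid, y_new))
    e_c1 = (l_mid[k] / x_new[k]) / best_c1
    e_c2 = (y_new[k] / l_mid[k]) / best_c2
    return e_c1 * e_c2


# Hypothetical data; sweep the third DMU (index 2) in steps of 5.
x_in = [10.0, 10.0, 20.0]
l_mid = [10.0, 5.0, 10.0]
y_out = [10.0, 5.0, 5.0]
input_sweep = [score_after_change(x_in, l_mid, y_out, 2, dx=d) for d in (0, 5, 10, 15)]
joint = score_after_change(x_in, l_mid, y_out, 2, dx=10, dy=5)
```

In this made-up example the score rises as the input is cut and then stays flat once the stage-one frontier is reached; only a simultaneous increase in the BD outcome lifts the overall score to 1.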