Analysis of Unmanned Aerial System-Based CIR Images in Forestry—A New Perspective to Monitor Pest Infestation Levels

Abstract

1. Introduction

2. Methods

2.1. UAS Sensor Platform

| Technical Feature | Microdrones MD4-200 |
|---|---|
| Payload | <200 g |
| Estimated flight time | ~30 min |
| Recommended flight altitude | <80 m |
| Autonomous GPS flight mode | Yes |
| Radio up-/downlink | Yes |
| Ground control | Field control center |
| Camera system (modified) | IXUS 100 IS |

2.2. Field Survey

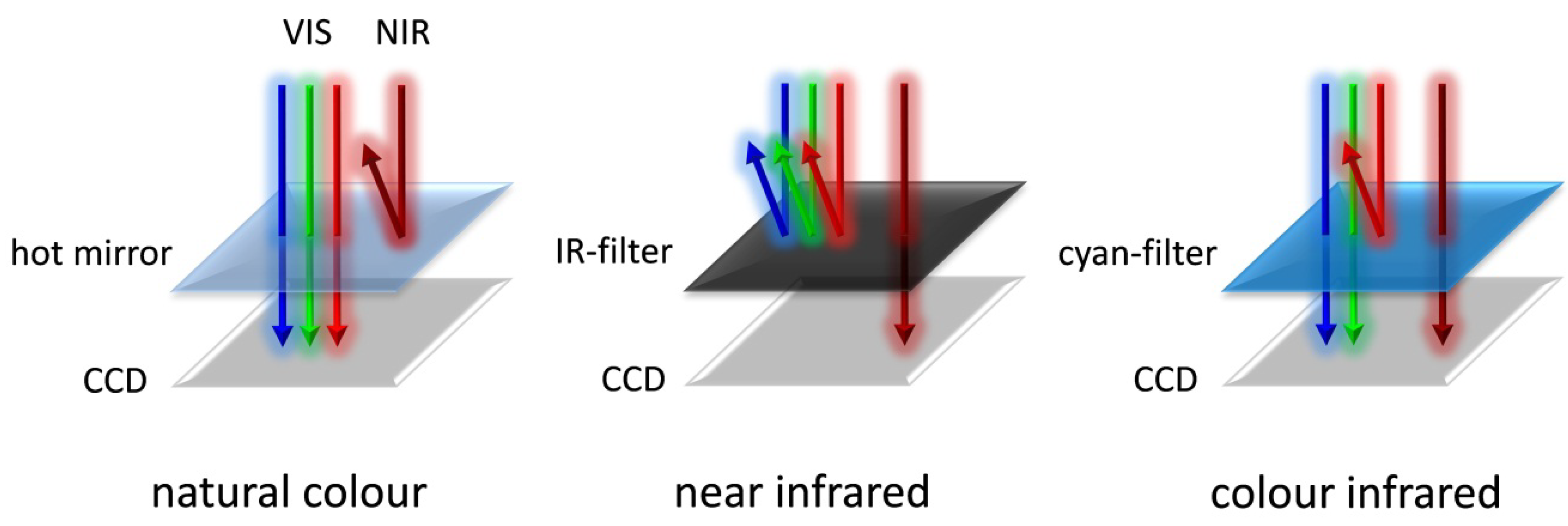

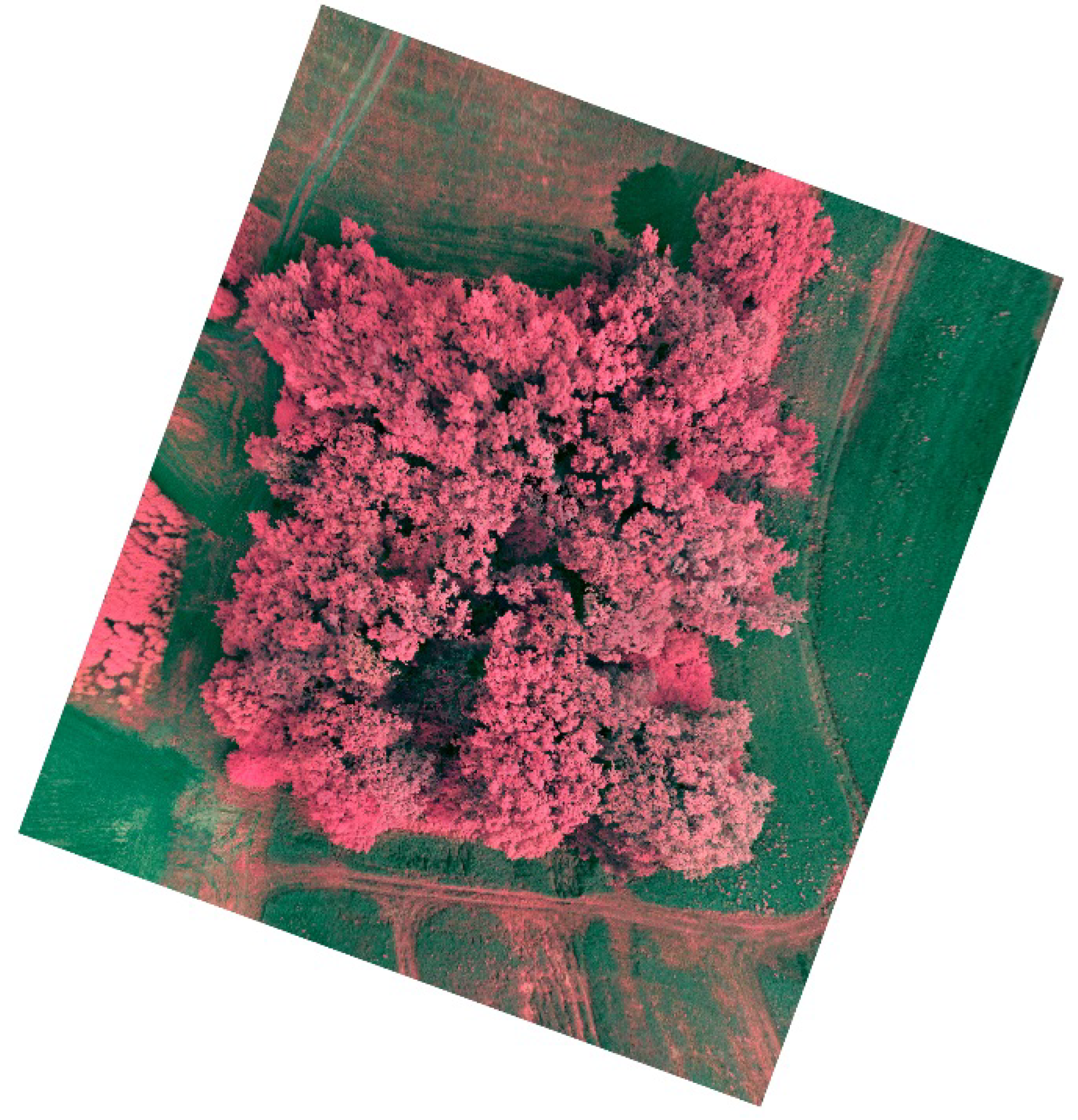

2.3. Sensor Technique and Data Processing

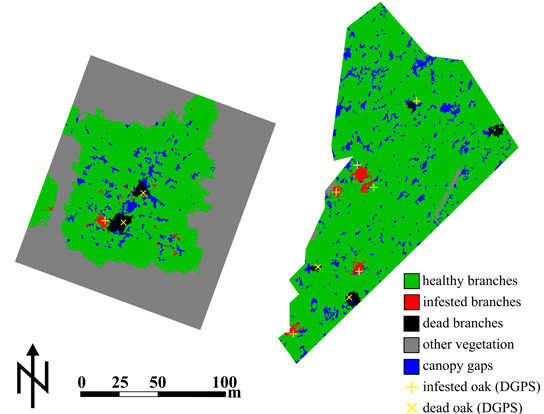

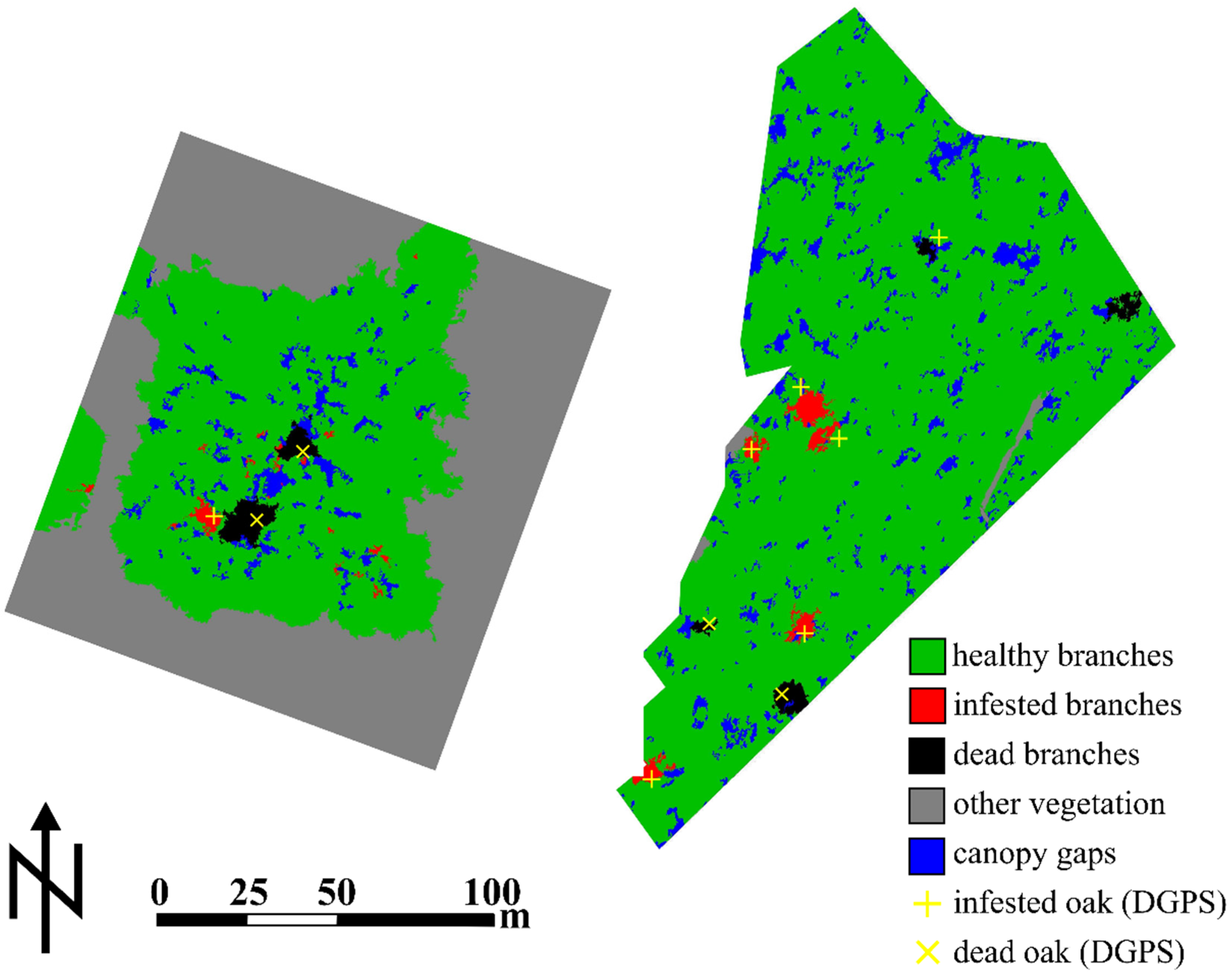

2.4. Image Analysis and Classification

| Feature group | Feature |
|---|---|
| Customized | NDVImod: ([Mean NIR] − [Mean Blue])/([Mean NIR] + [Mean Blue]) |
| Layer values | HSI transformation intensity (R = NIR, G = green, B = blue) |
| | HSI transformation hue (R = NIR, G = green, B = blue) |
| | HSI transformation saturation (R = NIR, G = green, B = blue) |
| | Mean NIR |
| | Mean green |
| | Mean blue |
| | Mean brightness |
| | Standard deviation NIR |
| | Standard deviation green |
| | Standard deviation blue |
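The features above are computed per image object from the pixel values of the three CIR bands. Because the modified camera delivers NIR in the channel that normally holds red (R = NIR), NDVImod substitutes blue for the red band of the usual NDVI. The sketch below is a minimal Python/NumPy version under stated assumptions: the function names and input layout are hypothetical, brightness is taken as the unweighted mean of the three layer means, standard deviations use `ddof=0`, and the HSI transformation uses the common textbook formula applied to the object's layer means. The software used in the study may define these features differently.

```python
import math

import numpy as np


def hsi(r, g, b):
    """Textbook RGB -> HSI conversion (assumed form, not the study's software).

    Here R = NIR, G = green, B = blue, as in the feature table. Hue is
    returned in degrees [0, 360); saturation is unitless in [0, 1];
    intensity is on the scale of the input values.
    """
    total = r + g + b
    intensity = total / 3.0
    saturation = 0.0 if total == 0 else 1.0 - 3.0 * min(r, g, b) / total
    num = 0.5 * ((r - g) + (r - b))
    # (r-g)^2 + (r-b)(g-b) is never negative, so the square root is safe
    den = math.sqrt((r - g) ** 2 + (r - b) * (g - b))
    if den == 0:
        hue = 0.0  # grey object: hue undefined, set to 0 by convention
    else:
        theta = math.degrees(math.acos(max(-1.0, min(1.0, num / den))))
        hue = theta if b <= g else 360.0 - theta
    return {"hsi_hue": hue, "hsi_saturation": saturation, "hsi_intensity": intensity}


def object_features(nir, green, blue):
    """Compute the tabulated features for one image object.

    nir, green, blue: pixel values of a single segment in each band
    (hypothetical input layout: 1-D sequences of equal length).
    """
    nir, green, blue = (np.asarray(x, dtype=float) for x in (nir, green, blue))
    m_nir, m_green, m_blue = nir.mean(), green.mean(), blue.mean()
    denom = m_nir + m_blue
    feats = {
        # NDVImod: blue takes the place of red in the NDVI formula
        "ndvi_mod": (m_nir - m_blue) / denom if denom != 0 else float("nan"),
        "mean_nir": m_nir,
        "mean_green": m_green,
        "mean_blue": m_blue,
        # Assumption: brightness = unweighted mean of the three layer means
        "brightness": (m_nir + m_green + m_blue) / 3.0,
        # Population standard deviation (ddof=0) of the object's pixels
        "std_nir": nir.std(),
        "std_green": green.std(),
        "std_blue": blue.std(),
    }
    # Assumption: HSI is applied to the object's layer means, not per pixel
    feats.update(hsi(m_nir, m_green, m_blue))
    return feats
```

For an object with NIR pixels `[180, 200]`, green `[90, 110]` and blue `[40, 60]`, the layer means are 190, 100 and 50, so NDVImod is 140/240 ≈ 0.583.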

3. Results

| Study Site A (rows: classification; columns: reference) | Healthy | Infested | Dead | Other Vegetation | Canopy Gaps | Sum |
|---|---|---|---|---|---|---|
| Healthy | 47 | 5 | 4 | 5 | 1 | 62 |
| Infested | 1 | 11 | 0 | 0 | 0 | 12 |
| Dead | 1 | 0 | 30 | 0 | 0 | 31 |
| Other vegetation | 1 | 0 | 0 | 44 | 0 | 45 |
| Canopy gaps | 5 | 0 | 8 | 0 | 44 | 57 |
| Unclassified | 0 | 0 | 0 | 0 | 0 | 0 |
| Sum | 55 | 16 | 42 | 49 | 45 | 207 |
| Producer’s accuracy (%) | 85.5 | 68.8 | 71.4 | 89.8 | 97.8 | |
| User’s accuracy (%) | 75.8 | 91.7 | 96.8 | 97.8 | 77.2 | |
| Overall accuracy (%) | 85.0 | | | | | |
| KIA per class | 0.79 | 0.67 | 0.66 | 0.87 | 0.97 | |
| KIA (overall) | 0.81 | | | | | |
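The accuracy rows follow directly from the error matrix: producer’s accuracy divides each diagonal count by its reference (column) total, user’s accuracy divides it by its classified (row) total, and overall accuracy divides the sum of the diagonal by the number of samples. A minimal NumPy sketch recomputing them for Study Site A is given below; the function and variable names are illustrative. The derived rows of the Study Site A table (for example producer’s accuracy 85.5 for healthy, user’s accuracy 96.8 for dead, overall accuracy 85.0) are reproduced only with a count of 1 in the cell classified as dead but referenced as healthy, giving a healthy reference total of 55 and 207 samples, so that value is used here.

```python
import numpy as np

CLASSES = ["healthy", "infested", "dead", "other vegetation", "canopy gaps"]

# Study Site A error matrix: rows = classification, columns = reference.
# The dead-row / healthy-column cell is 1 (see the note above).
SITE_A = np.array([
    [47, 5, 4, 5, 1],
    [1, 11, 0, 0, 0],
    [1, 0, 30, 0, 0],
    [1, 0, 0, 44, 0],
    [5, 0, 8, 0, 44],
])


def accuracies(m):
    """Return producer's, user's (per class, %) and overall accuracy (%)."""
    m = np.asarray(m, dtype=float)
    diag = np.diag(m)
    producers = 100 * diag / m.sum(axis=0)  # correct / reference (column) total
    users = 100 * diag / m.sum(axis=1)      # correct / classified (row) total
    overall = 100 * diag.sum() / m.sum()    # all correct / all samples
    return producers, users, overall


pa, ua, oa = accuracies(SITE_A)
for name, p, u in zip(CLASSES, pa, ua):
    print(f"{name:17s} PA {p:5.1f}  UA {u:5.1f}")
print(f"overall accuracy {oa:.1f}")
```

Rounded to one decimal, the printed values match the producer’s, user’s and overall accuracy rows of the Study Site A table.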

| Study Site B (rows: classification; columns: reference) | Healthy | Infested | Dead | Other Vegetation | Canopy Gaps | Sum |
|---|---|---|---|---|---|---|
| Healthy | 49 | 6 | 8 | 1 | 11 | 75 |
| Infested | 3 | 30 | 0 | 0 | 0 | 33 |
| Dead | 1 | 0 | 44 | 0 | 4 | 49 |
| Other vegetation | 1 | 0 | 1 | 28 | 0 | 30 |
| Canopy gaps | 1 | 0 | 3 | 0 | 37 | 41 |
| Unclassified | 0 | 0 | 0 | 0 | 0 | 0 |
| Sum | 55 | 36 | 56 | 29 | 52 | 228 |
| Producer’s accuracy (%) | 89.1 | 83.3 | 78.6 | 96.6 | 71.2 | |
| User’s accuracy (%) | 65.3 | 90.9 | 89.8 | 93.3 | 90.2 | |
| Overall accuracy (%) | 82.5 | | | | | |
| KIA per class | 0.84 | 0.81 | 0.73 | 0.96 | 0.65 | |
| KIA (overall) | 0.78 | | | | | |
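The kappa index of agreement (KIA) corrects the observed agreement p_o for the agreement p_e expected by chance from the row and column totals, κ = (p_o − p_e)/(1 − p_e). For the per-class values, a conditional kappa computed against the reference (column) totals, κ_i = (N·n_ii − n_i+·n_+i)/(N·n_+i − n_i+·n_+i), reproduces the tabulated Study Site B figures; this form is inferred by recomputing the table, not a documented statement of how the study’s software computes them. The names below are illustrative.

```python
import numpy as np

# Study Site B error matrix: rows = classification, columns = reference.
SITE_B = np.array([
    [49, 6, 8, 1, 11],
    [3, 30, 0, 0, 0],
    [1, 0, 44, 0, 4],
    [1, 0, 1, 28, 0],
    [1, 0, 3, 0, 37],
])


def kappa(m):
    """Overall kappa: observed agreement corrected for chance agreement."""
    m = np.asarray(m, dtype=float)
    n = m.sum()
    rows, cols = m.sum(axis=1), m.sum(axis=0)
    p_o = np.trace(m) / n                 # observed agreement
    p_e = (rows * cols).sum() / n**2      # agreement expected by chance
    return (p_o - p_e) / (1 - p_e)


def kappa_per_class(m):
    """Conditional kappa per class, normalised by reference (column) totals."""
    m = np.asarray(m, dtype=float)
    n = m.sum()
    rows, cols = m.sum(axis=1), m.sum(axis=0)
    diag = np.diag(m)
    return (n * diag - rows * cols) / (n * cols - rows * cols)


print(round(kappa(SITE_B), 2))
print(np.round(kappa_per_class(SITE_B), 2))
```

Running it prints 0.78 and `[0.84 0.81 0.73 0.96 0.65]`, matching the KIA rows of the Study Site B table.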

4. Discussion

5. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest


© 2015 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution license (http://creativecommons.org/licenses/by/4.0/).

Lehmann, J.R.K.; Nieberding, F.; Prinz, T.; Knoth, C. Analysis of Unmanned Aerial System-Based CIR Images in Forestry—A New Perspective to Monitor Pest Infestation Levels. Forests 2015, 6, 594-612. https://doi.org/10.3390/f6030594