Monitoring Post Disturbance Forest Regeneration with Hierarchical Object-Based Image Analysis

Abstract

1. Introduction

- (a) Evaluation of SOIT in hyperspatial image segmentation;

- (b) Development of a hierarchical OBIA classification approach; and

- (c) Assessment of accuracy of the classification.

2. Experimental Section

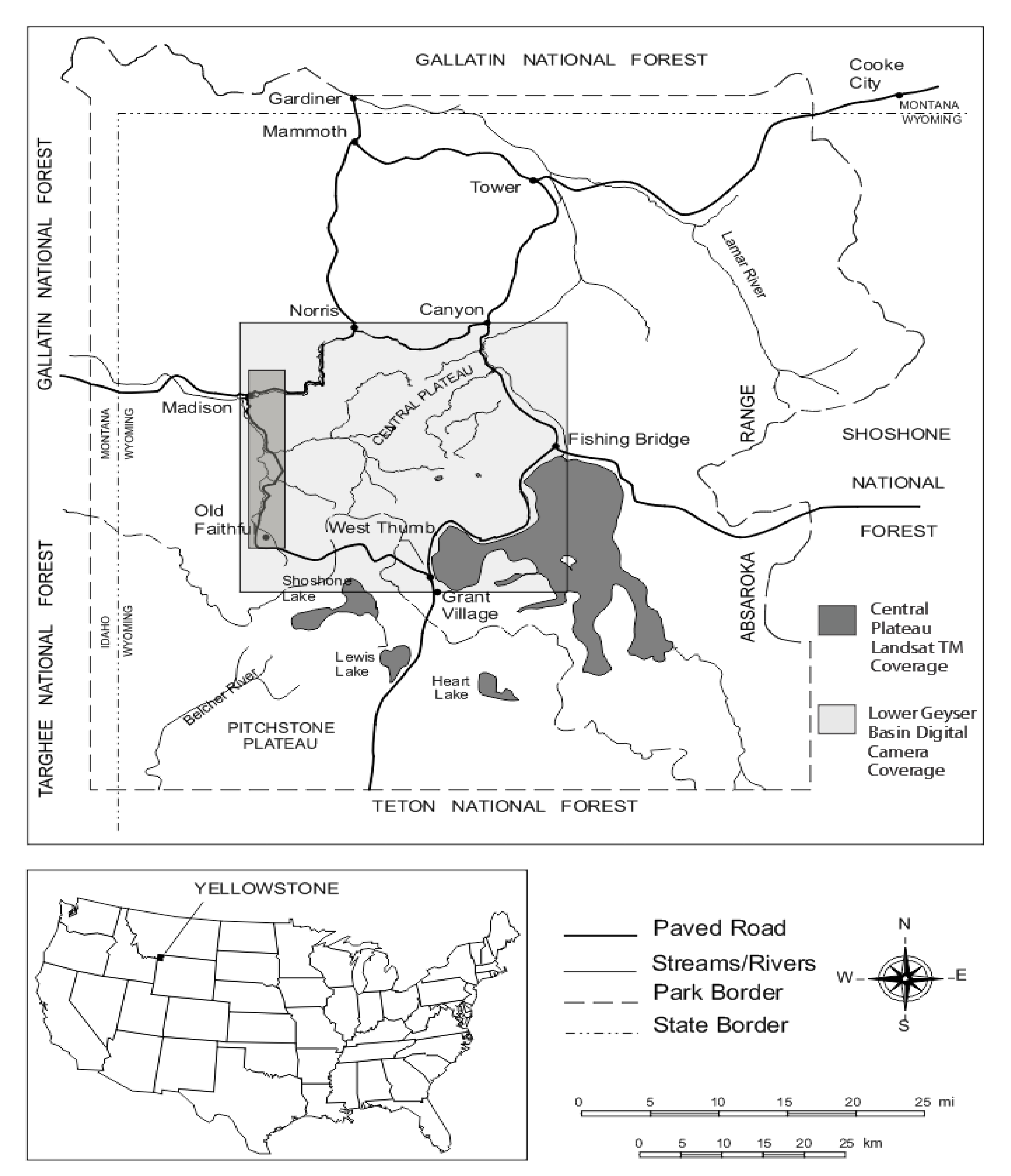

2.1. Study Area

2.2. Field Data

2.3. Remotely Sensed Data

2.4. Object-Based Image Analysis (OBIA) Approach

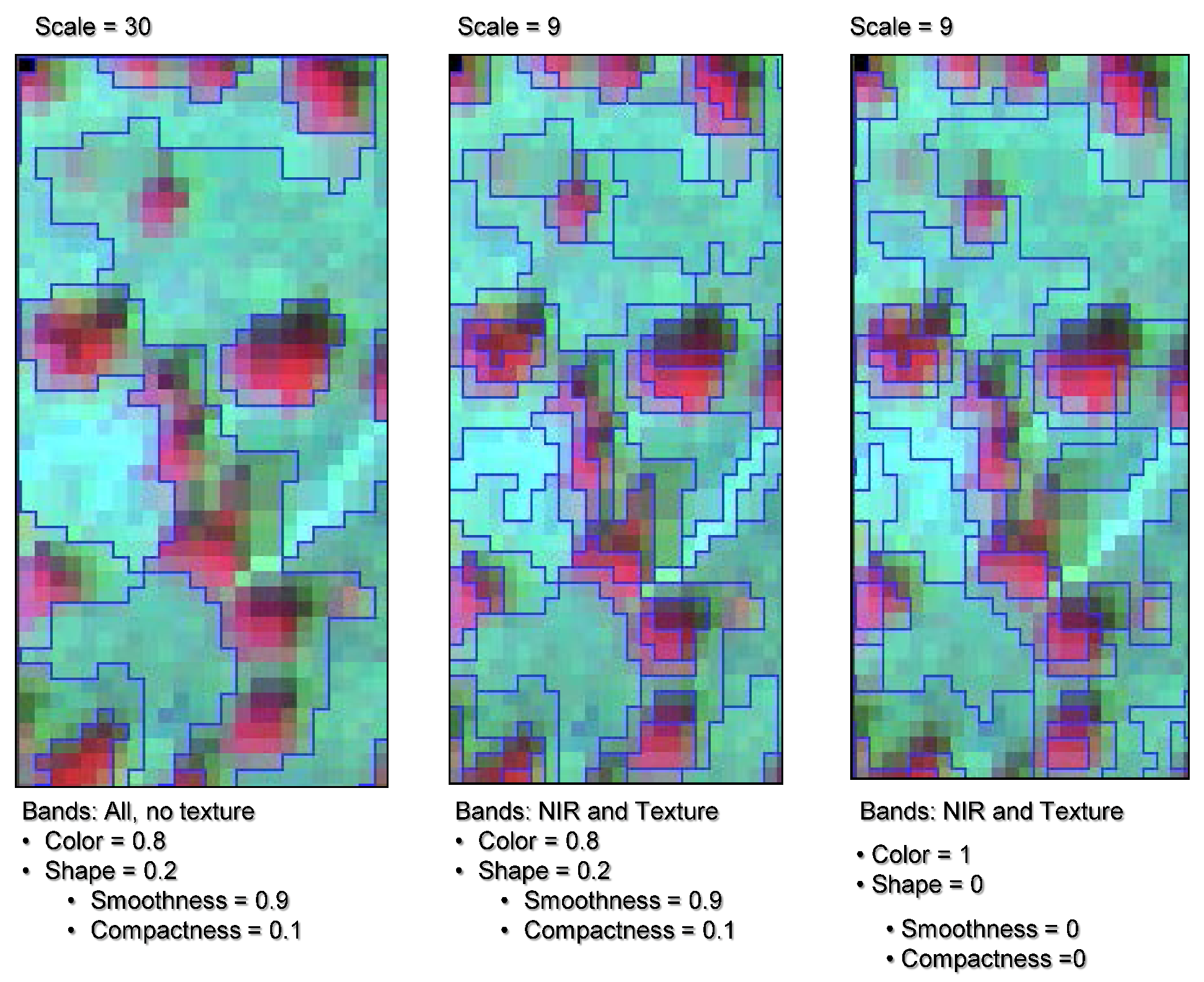

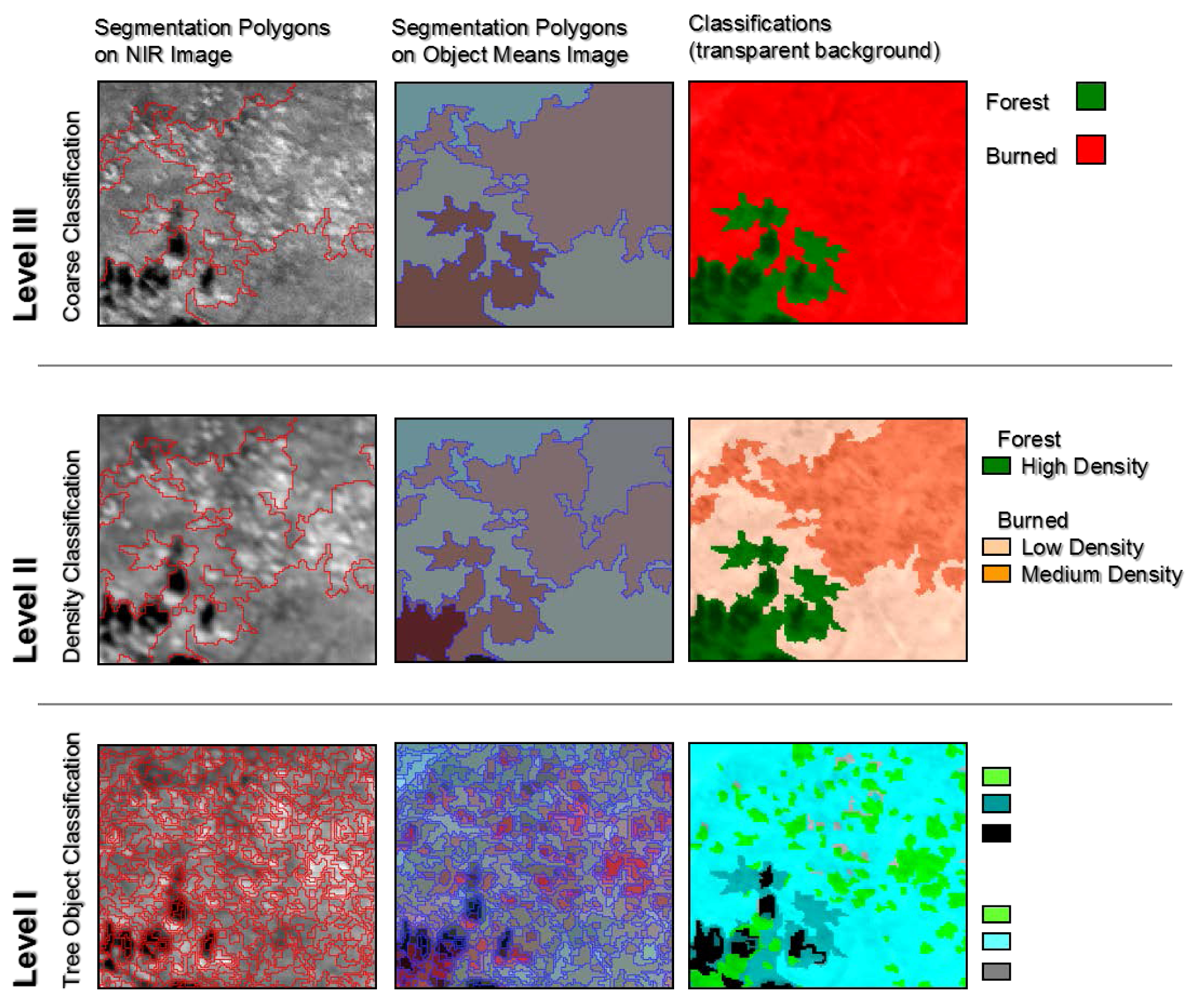

2.4.1. Hierarchical Segmentation

| Segmentation Level | Scale Parameter | Number of Objects | Average Object Size (m²) | Average Neighborhood (segments) |
|---|---|---|---|---|
| III | 200 | 84 | 160,000 | 4.4 |
| II | 100 | 317 | 4,245 | 4.71 |
| I | 9 | 39,665 | 33.92 | 5.36 |

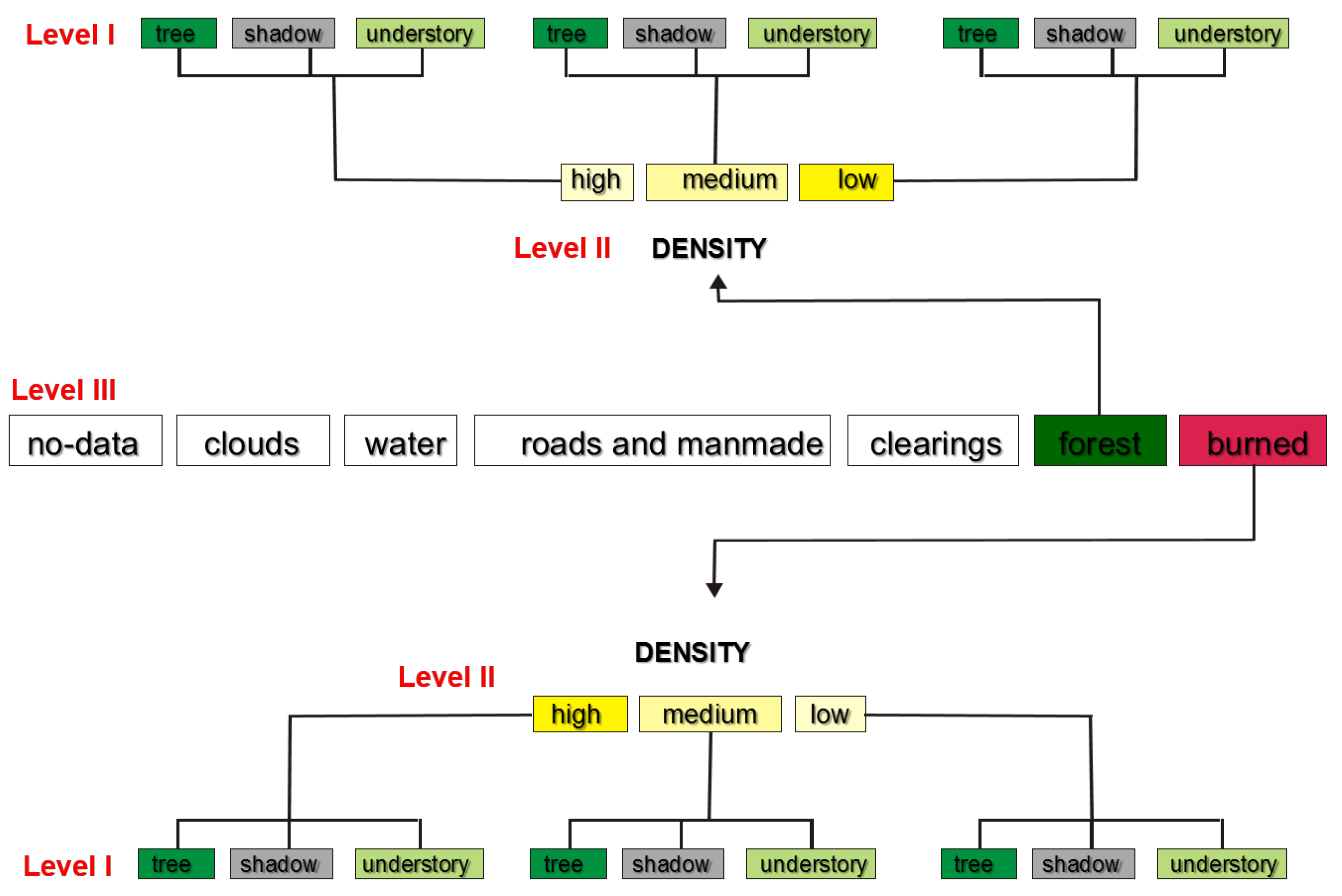

2.4.2. Hierarchical Classification

2.5. Classification Assessment

3. Results and Discussion

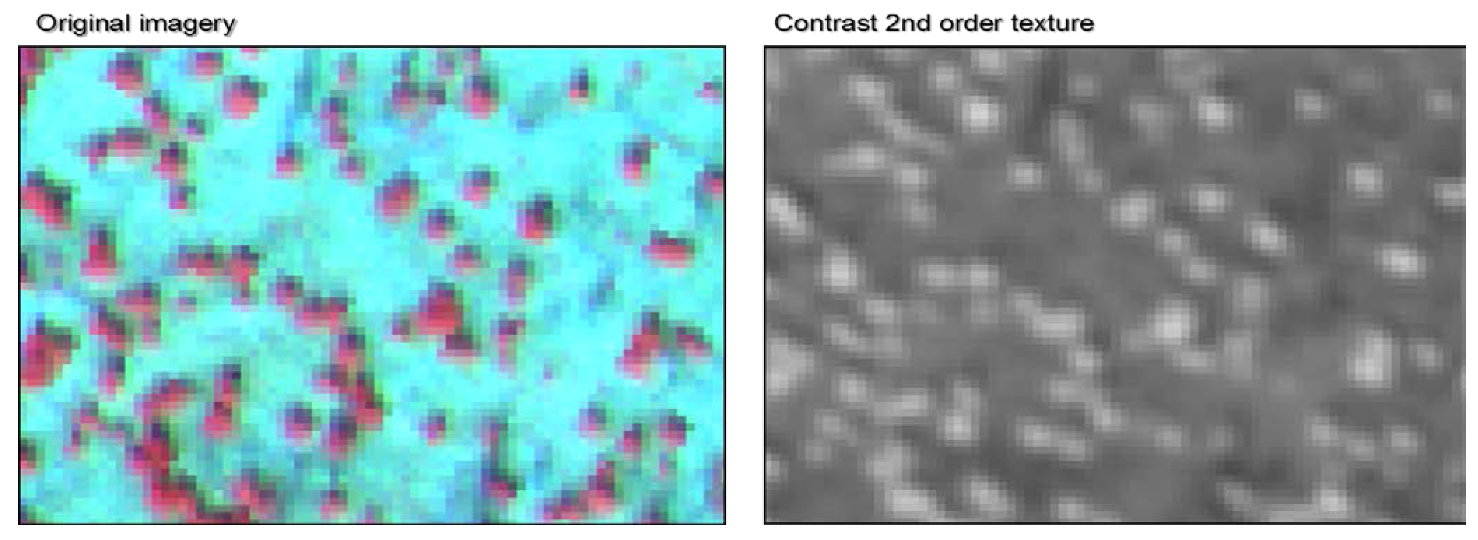

3.1. Application of Image Texture

| | Mean Contrast Texture: Uniform object (i.e., image boundary) | Mean Contrast Texture: Non-uniform object (i.e., seedlings) |
|---|---|---|
| fine | 0.9435 | 0.1090 |
| | 0.9050 | 0.1910 |
| | 0.8148 | 0.4211 |
| coarse | 0.6739 | 0.6725 |

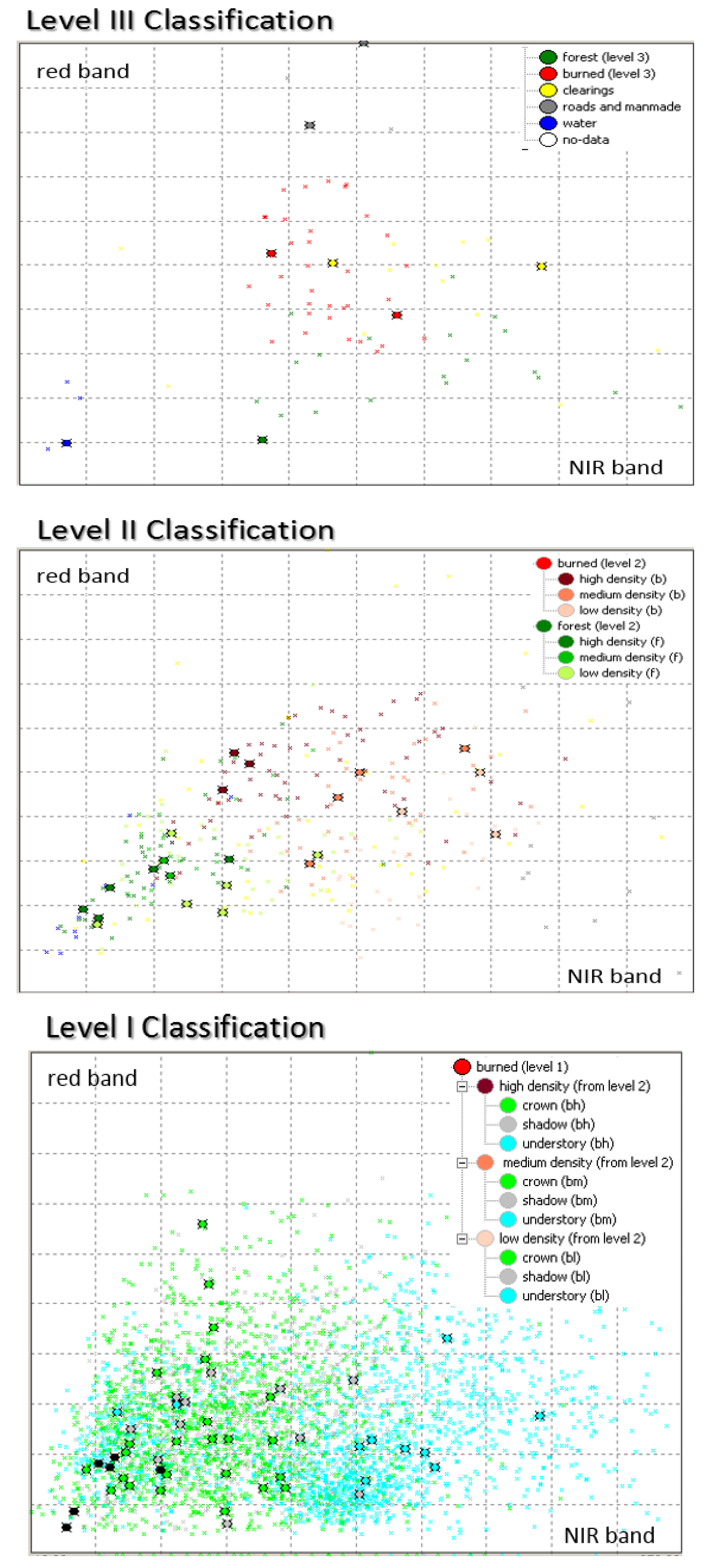

3.2. Hierarchical Classification

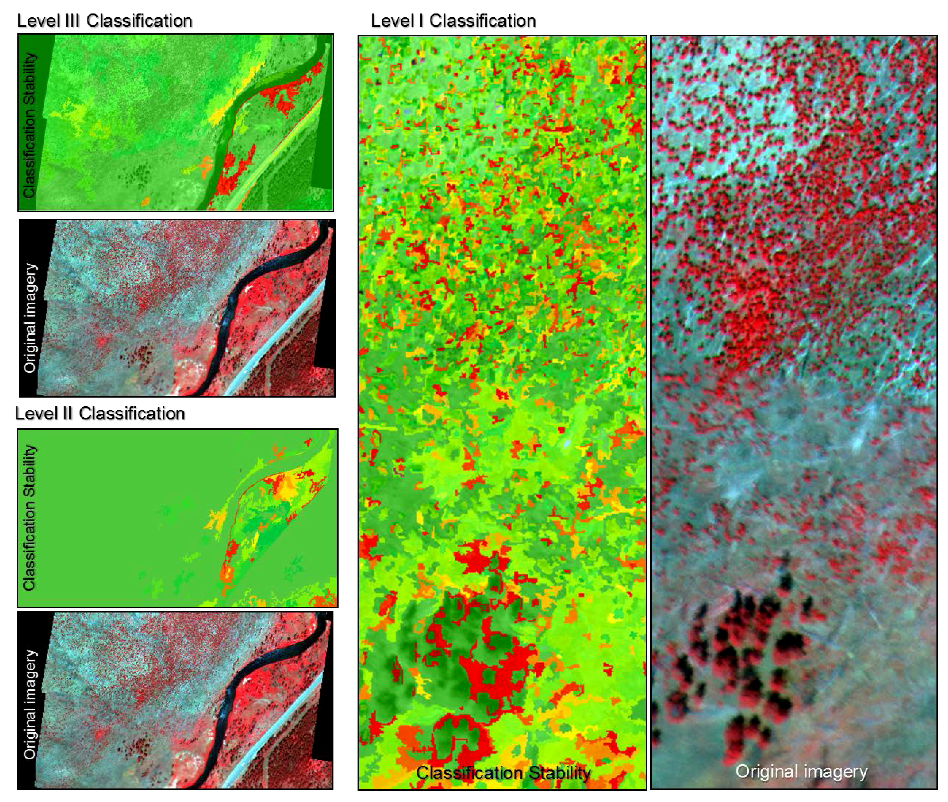

3.3. Class Separability and Classification Stability Assessments

| Classification Data | Reference Data: Forest | Reference Data: Burned | Reference Data: Clearing | Total | Producer’s Accuracy |
|---|---|---|---|---|---|
| Forest | 17 | 3 | 0 | 20 | 85.0% |
| Burned | 3 | 57 | 2 | 62 | 91.9% |
| Clearing | 0 | 2 | 8 | 10 | 80.0% |
| Total | 20 | 62 | 10 | 92 | |
| User’s Accuracy | 85.0% | 91.9% | 80.0% | | |
| Overall Accuracy | 89.1% | | | | |
| Khat | 0.78 | | | | |
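The summary statistics in an error matrix of this kind can be recomputed from the cell counts alone. The following is a minimal sketch, not the authors' implementation: the function name `accuracy_summary` and the dictionary keys are hypothetical, and Khat is assumed to be the standard kappa estimate derived from the observed agreement and the chance agreement implied by the row and column totals. Class accuracies are reported per row and per column rather than under a producer's or user's label.

```python
def accuracy_summary(matrix):
    """Summarize a square error matrix given as a list of rows.

    Rows are assumed to hold the classified classes and columns the
    reference classes, in the same class order.
    """
    k = len(matrix)
    n = sum(sum(row) for row in matrix)
    row_totals = [sum(row) for row in matrix]
    col_totals = [sum(matrix[i][j] for i in range(k)) for j in range(k)]
    diagonal = [matrix[i][i] for i in range(k)]

    # Observed agreement: share of samples on the main diagonal.
    overall = sum(diagonal) / n
    # Chance agreement expected from the marginal totals.
    chance = sum(r * c for r, c in zip(row_totals, col_totals)) / n ** 2
    khat = (overall - chance) / (1 - chance)

    return {
        "overall": overall,
        "khat": khat,
        "row_accuracy": [d / t for d, t in zip(diagonal, row_totals)],
        "column_accuracy": [d / t for d, t in zip(diagonal, col_totals)],
    }


# Cell counts from the Forest / Burned / Clearing error matrix.
matrix = [
    [17, 3, 0],
    [3, 57, 2],
    [0, 2, 8],
]
summary = accuracy_summary(matrix)
print(round(summary["overall"] * 100, 1))  # 89.1
print(round(summary["khat"], 2))           # 0.78
```

For this matrix the sketch reproduces the reported overall accuracy of 89.1% and Khat of 0.78, which suggests the tabulated values follow the standard definitions.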

| Classification Data | Reference Data: Low | Reference Data: Medium | Reference Data: High | Total | Producer’s Accuracy |
|---|---|---|---|---|---|
| Low | 15 | 2 | 1 | 18 | 83.3% |
| Medium | 3 | 19 | 0 | 22 | 86.4% |
| High | 2 | 1 | 19 | 22 | 86.4% |
| Total | 20 | 22 | 20 | 62 | |
| User’s Accuracy | 75.0% | 86.4% | 95.0% | | |
| Overall Accuracy | 85.5% | | | | |
| Khat | 0.78 | | | | |

| Classification Data | Reference Data: Tree | Reference Data: Shadow | Reference Data: Understory | Total | Producer’s Accuracy |
|---|---|---|---|---|---|
| Tree | 149 | 38 | 13 | 200 | 74.5% |
| Shadow | 39 | 149 | 12 | 200 | 74.5% |
| Understory | 12 | 13 | 175 | 200 | 87.5% |
| Total | 200 | 200 | 200 | 600 | |
| User’s Accuracy | 74.5% | 74.5% | 87.5% | | |
| Overall Accuracy | 78.8% | | | | |
| Khat | 0.68 | | | | |

4. Conclusions

Acknowledgments

Conflicts of Interest


© 2013 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution license (http://creativecommons.org/licenses/by/3.0/).

Moskal, L.M.; Jakubauskas, M.E. Monitoring Post Disturbance Forest Regeneration with Hierarchical Object-Based Image Analysis. Forests 2013, 4, 808-829. https://doi.org/10.3390/f4040808
