Fusion of Multiple Pyroelectric Characteristics for Human Body Identification

Abstract

1. Introduction

- Reductions in both the number of measurements and the sampling frequency required for human motion state estimation.

- Reductions in hardware cost, power consumption, privacy infringement, computational complexity, communication overhead, and network data throughput.

- Reductions in system deployment time and in application and location restrictions (e.g., long-range or crowded scenes).

- Its performance is independent of illumination, and it is robust to changes in background color.

- Its angular-rate sensitivity range is about 0.1 rad/s to 3 rad/s [5,6], which covers most human walking speeds at distances of about 2–10 m. Combined with a low-cost Fresnel lens array, it can achieve a better field of view (FOV). Thus, compared with traditional video systems, distributed wireless pyroelectric sensor networks can provide better spatial coverage and reduce both deployment time and deployment location restrictions.

- Different Fresnel lenses and signal-modulation masks capture more pyroelectric infrared information about the human target.

- The four sensors are installed at different heights, so each collects pyroelectric infrared information from a different part of the human body.

- The effective data are obtained by fusing the multichannel signals collected from the four sensor modules.

- Extracting different pyroelectric infrared features of the human target with different algorithms helps establish separate target-identification model databases.
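The angular-rate range quoted in the list above (0.1–3, read as rad/s) can be sanity-checked with simple geometry: a person walking at speed v perpendicular to the line of sight at distance d moves at roughly ω ≈ v/d rad/s at closest approach. The sketch below assumes typical walking speeds of 1.0–1.5 m/s, which is an assumption and not a figure from this paper, and checks whether the resulting rates fall inside the band at 2 m and 10 m.

```python
# Sensor angular-rate band quoted in the text (rad/s).
OMEGA_MIN, OMEGA_MAX = 0.1, 3.0


def angular_rate(speed_m_s: float, distance_m: float) -> float:
    """Approximate angular rate (rad/s) of a walker crossing perpendicular
    to the sensor's line of sight, evaluated at closest approach (v / d)."""
    if distance_m <= 0:
        raise ValueError("distance must be positive")
    return speed_m_s / distance_m


def in_band(omega: float) -> bool:
    """True if the angular rate lies within the sensor's sensitivity band."""
    return OMEGA_MIN <= omega <= OMEGA_MAX


# Assumed typical walking speeds (m/s) at the ends of the 2-10 m range.
for v in (1.0, 1.5):
    for d in (2.0, 10.0):
        w = angular_rate(v, d)
        print(f"v={v} m/s, d={d} m -> {w:.2f} rad/s, in band: {in_band(w)}")
```

Very slow walkers at the far end of the range (e.g. 0.5 m/s at 10 m gives 0.05 rad/s) drop below the band, which is consistent with the text's claim covering "most" walking speeds rather than all.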

2. Related Work

3. Sensor Modules and Deployment

3.1. PIR Sensor Module

| Parameter | Value |
|---|---|
| IR receiving electrode | 0.7 × 2.4 mm, 4 elements |
| Sensitivity | ≥4300 V/W |
| Detectivity (D*) | 1.6 × 10⁸ cm·Hz^(1/2)/W |
| Supply voltage | 3–15 V |
| Operating temperature | −30 to 70 °C |
| Offset voltage | 0.3–1.2 V |
| FOV | 150° |
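The detectivity and sensitivity figures in the sensor table can be turned into more intuitive quantities using the standard definition D* = sqrt(A·Δf)/NEP, where A is the detector area in cm², Δf the noise bandwidth in Hz and NEP the noise-equivalent power in W. The sketch below assumes that "0.7 × 2.4 mm" is the size of a single element and uses a 1 Hz bandwidth; both are assumptions for illustration, not values stated in the paper.

```python
import math

D_STAR = 1.6e8          # specific detectivity, cm*Hz^(1/2)/W (table value)
RESPONSIVITY = 4300.0   # V/W, lower bound of the table's sensitivity


def nep_watts(d_star: float, area_cm2: float, bandwidth_hz: float) -> float:
    """Noise-equivalent power from D* = sqrt(A * df) / NEP."""
    return math.sqrt(area_cm2 * bandwidth_hz) / d_star


# Assumption: 0.7 mm x 2.4 mm is the area of one element (0.07 cm x 0.24 cm).
element_area_cm2 = 0.07 * 0.24
nep = nep_watts(D_STAR, element_area_cm2, bandwidth_hz=1.0)
print(f"NEP ~ {nep:.2e} W in a 1 Hz bandwidth")

# Signal voltage for 1 uW of incident power at the minimum responsivity.
print(f"Output for 1 uW incident: {RESPONSIVITY * 1e-6 * 1e3:.1f} mV")
```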

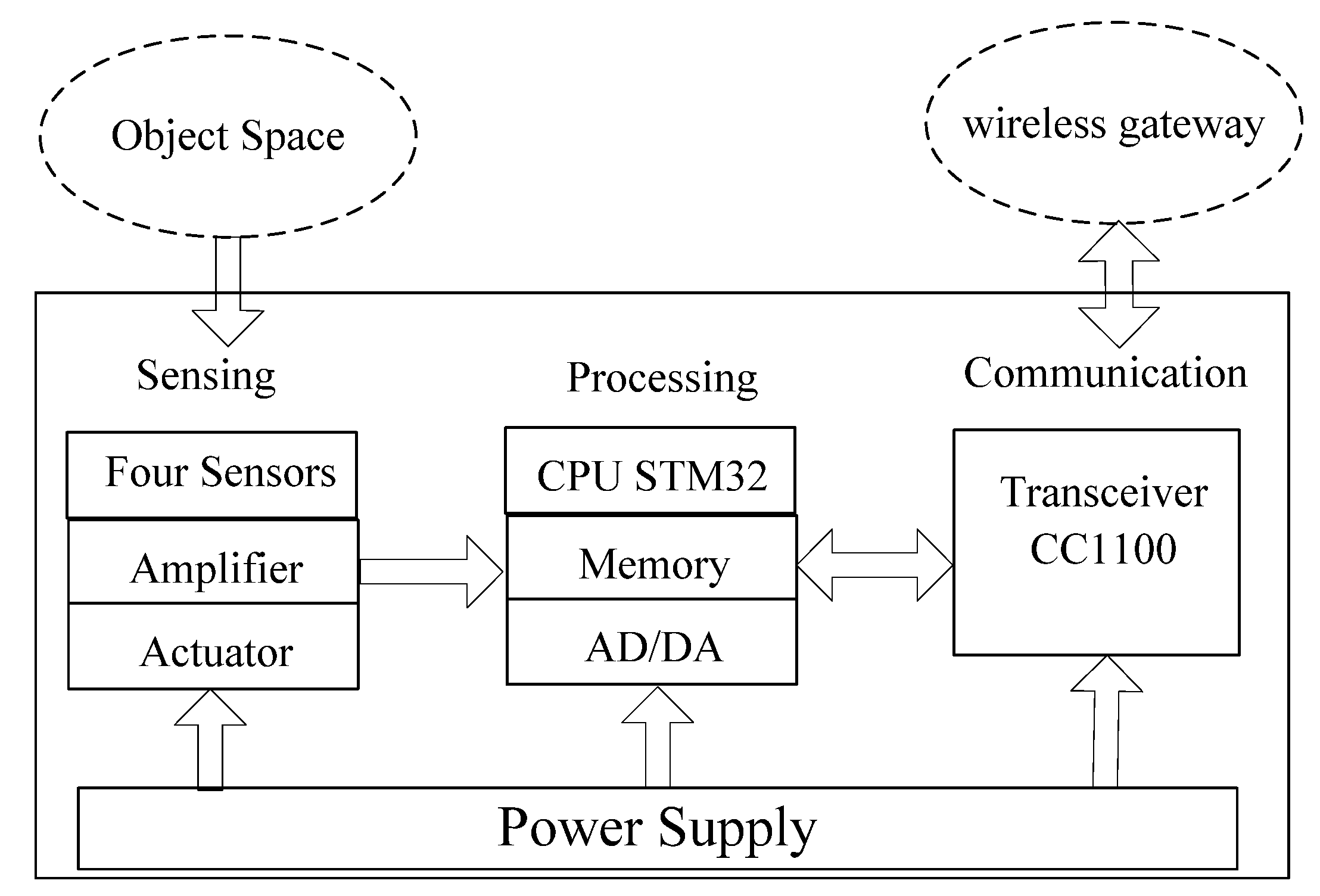

3.2. PIR Sensor Node

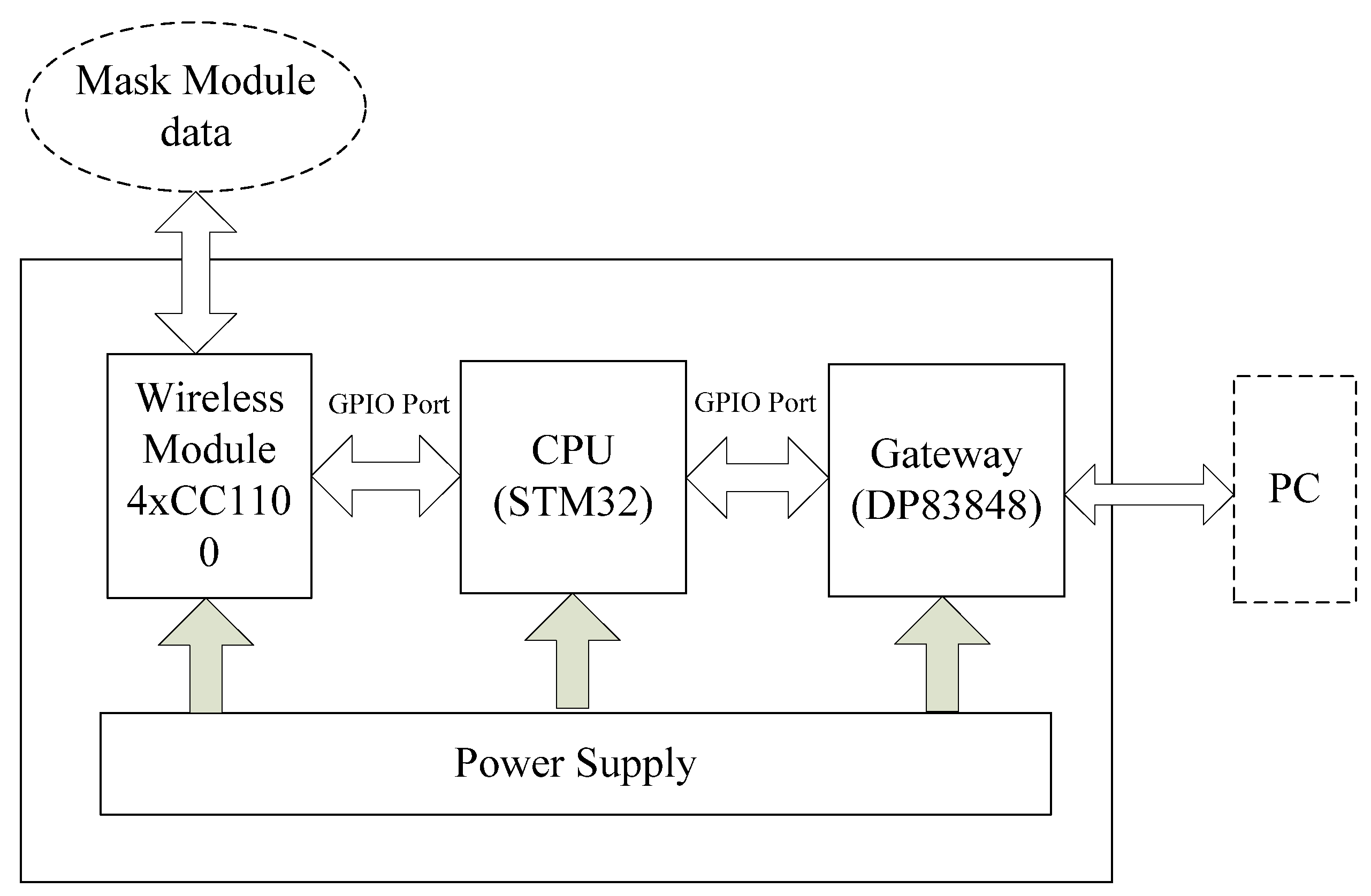

3.3. Gateway Module

4. Target Recognition System

4.1. System Architecture

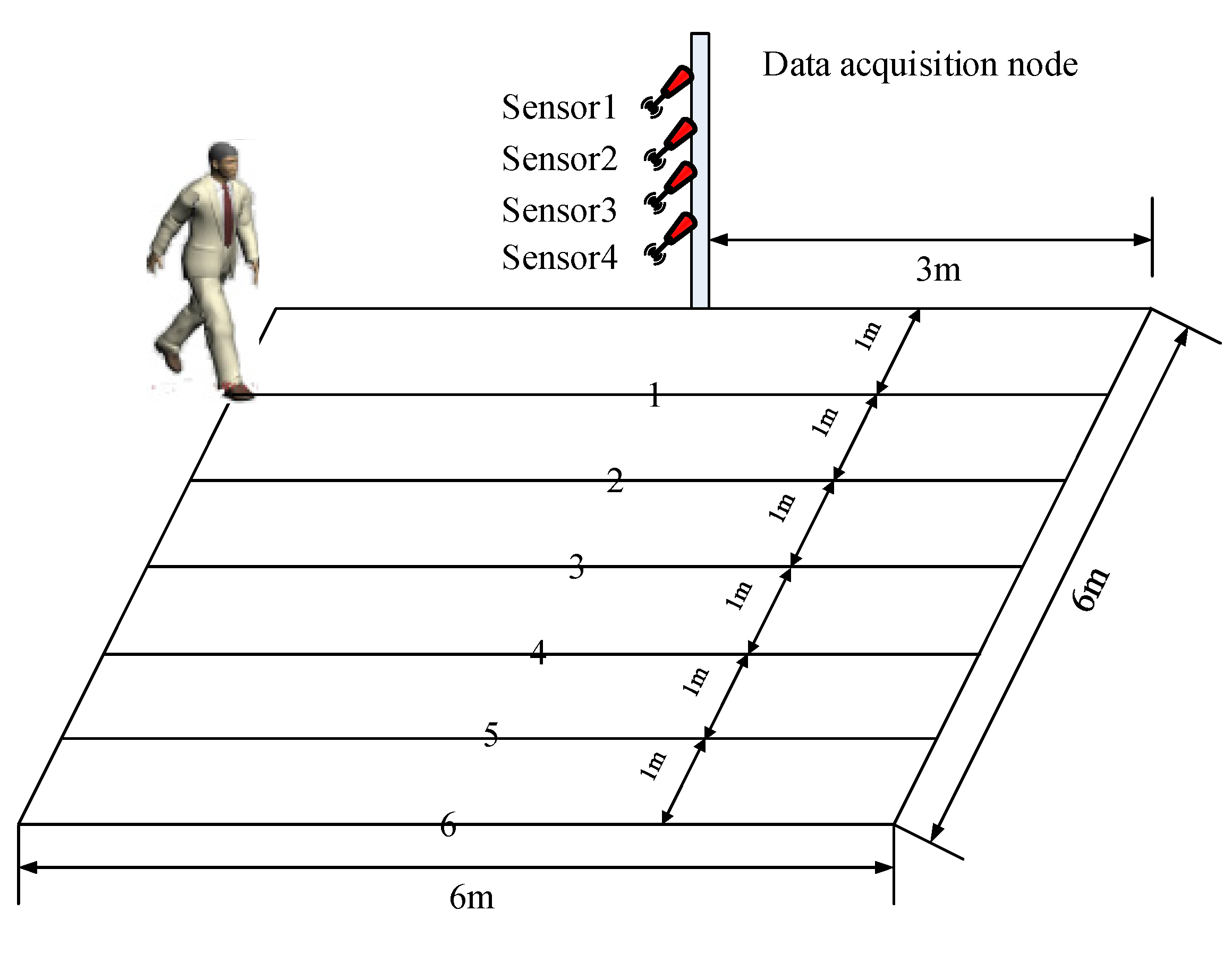

4.2. Experimental Program

| Subject | Sex | Height (cm) | Step length (cm) |
|---|---|---|---|
| a | male | 175 | 50 |
| b | male | 167 | 40 |
| c | female | 160 | 40 |
| d | male | 182 | 60 |
| e | male | 172 | 50 |
| f | female | 158 | 37 |
| g | female | 162 | 40 |
| h | male | 179 | 56 |
| i | male | 173 | 50 |
| j | female | 171 | 52 |

5. Algorithm Descriptions

5.1. Feature Extraction

5.1.1. FFT + PCA (Fast Fourier Transform and Principal Component Analysis)

- Standardize the observation matrix X to obtain matrix Y;

- Compute Z, the covariance matrix of Y;
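The two listed steps are the opening of a standard PCA. The NumPy sketch below completes them under stated assumptions: each row of X is the FFT magnitude spectrum of one PIR signal (truncated to n_bins bins), the remaining steps are the usual eigendecomposition of Z and projection onto the k leading eigenvectors, and the signals are synthetic. The function names `fft_features` and `pca`, and the values of `n_bins` and `k`, are hypothetical and not taken from the paper.

```python
import numpy as np


def fft_features(signals: np.ndarray, n_bins: int) -> np.ndarray:
    """Magnitude spectrum of each row (one PIR signal per row), first n_bins bins."""
    spectrum = np.abs(np.fft.rfft(signals, axis=1))
    return spectrum[:, :n_bins]


def pca(X: np.ndarray, k: int) -> np.ndarray:
    """Project observation matrix X (samples x features) onto k principal components."""
    # Step 1: standardize X column-wise to obtain Y (zero mean, unit variance).
    std = X.std(axis=0)
    std[std == 0] = 1.0                       # guard against constant columns
    Y = (X - X.mean(axis=0)) / std
    # Step 2: Z is the covariance matrix of Y (features x features).
    Z = np.cov(Y, rowvar=False)
    # Step 3: eigendecomposition; eigh suits the symmetric matrix Z.
    eigvals, eigvecs = np.linalg.eigh(Z)
    order = np.argsort(eigvals)[::-1][:k]     # indices of the k largest eigenvalues
    # Step 4: project the standardized data onto the leading eigenvectors.
    return Y @ eigvecs[:, order]


rng = np.random.default_rng(0)
t = np.arange(400) / 100.0                    # hypothetical 4 s window at 100 Hz
signals = np.array([np.sin(2 * np.pi * f * t) + 0.1 * rng.standard_normal(t.size)
                    for f in rng.uniform(0.5, 5.0, size=30)])
features = pca(fft_features(signals, n_bins=40), k=3)
print(features.shape)  # (30, 3)
```

Each row of `features` is a compact descriptor of one walking sample that could then be passed to a classifier.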

5.1.2. STFT (Short-Time Fourier Transform)
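No description of the STFT step survives in this version. As a generic illustration only, the sketch below computes a Hann-windowed STFT magnitude in NumPy and averages it over time to give one feature vector per signal. The sampling rate, window length, hop size, the synthetic 2 Hz test tone and the time-averaging are all assumptions, not the authors' settings.

```python
import numpy as np


def stft_magnitude(x: np.ndarray, win_len: int, hop: int) -> np.ndarray:
    """Return |STFT| with shape (n_frames, win_len // 2 + 1) using a Hann window."""
    if x.size < win_len:
        raise ValueError("signal shorter than window")
    window = np.hanning(win_len)
    n_frames = 1 + (x.size - win_len) // hop
    frames = np.stack([x[i * hop:i * hop + win_len] * window for i in range(n_frames)])
    return np.abs(np.fft.rfft(frames, axis=1))


fs = 100.0                                  # hypothetical sampling rate (Hz)
t = np.arange(400) / fs                     # 4 s of samples
x = np.sin(2 * np.pi * 2.0 * t)             # synthetic 2 Hz component
S = stft_magnitude(x, win_len=100, hop=25)  # 1 Hz frequency resolution
feature = S.mean(axis=0)                    # time-averaged spectrum as a feature vector
peak_hz = np.argmax(feature) * fs / 100
print(S.shape, peak_hz)
```

Unlike a single FFT over the whole record, the frame-by-frame matrix `S` keeps how the spectrum changes as the person crosses the sensor's field of view; averaging discards that, so other summaries of `S` may be preferable.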

5.1.3. WT (Wavelet Transform)
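As an illustration of a wavelet-transform feature, the NumPy sketch below performs a multi-level discrete wavelet transform and uses the relative energy of each sub-band as the feature vector. The Haar wavelet, the decomposition level and the energy features are assumptions chosen for brevity; the paper's wavelet family and parameters are not stated in this text.

```python
from __future__ import annotations

import numpy as np


def haar_dwt(x: np.ndarray) -> tuple[np.ndarray, np.ndarray]:
    """One level of the orthonormal Haar DWT: (approximation, detail)."""
    if x.size % 2:
        x = np.append(x, x[-1])               # pad odd length by repeating the last sample
    even, odd = x[0::2], x[1::2]
    return (even + odd) / np.sqrt(2), (even - odd) / np.sqrt(2)


def wavedec_haar(x: np.ndarray, level: int) -> list[np.ndarray]:
    """Multi-level decomposition returned as [cA_level, cD_level, ..., cD_1].
    Only the approximation is split again at each level."""
    coeffs = []
    approx = np.asarray(x, dtype=float)
    for _ in range(level):
        approx, detail = haar_dwt(approx)
        coeffs.insert(0, detail)
    coeffs.insert(0, approx)
    return coeffs


def subband_energies(coeffs: list[np.ndarray]) -> np.ndarray:
    """Relative energy of each sub-band, a common wavelet feature vector."""
    e = np.array([np.sum(c ** 2) for c in coeffs])
    return e / e.sum()


rng = np.random.default_rng(1)
x = np.sin(2 * np.pi * np.arange(256) / 32) + 0.2 * rng.standard_normal(256)
coeffs = wavedec_haar(x, level=4)
print([c.size for c in coeffs])   # [16, 16, 32, 64, 128]
print(subband_energies(coeffs).round(3))
```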

5.1.4. WPT (Wavelet Packet Transform)
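The wavelet packet transform differs from the plain DWT in that the detail (high-frequency) branches are decomposed as well, giving 2^level equal-width terminal nodes. The sketch below shows this with the Haar wavelet in NumPy and uses relative node energies as features; the wavelet, level and energy features are assumptions, not the authors' stated configuration.

```python
from __future__ import annotations

import numpy as np


def haar_split(x: np.ndarray) -> tuple[np.ndarray, np.ndarray]:
    """One orthonormal Haar analysis step (input length must be even)."""
    even, odd = x[0::2], x[1::2]
    return (even + odd) / np.sqrt(2), (even - odd) / np.sqrt(2)


def wpt_haar(x: np.ndarray, level: int) -> list[np.ndarray]:
    """Full wavelet packet tree: every node, including detail nodes, is split.
    Returns the 2**level terminal nodes in natural (not frequency) order."""
    if x.size % (2 ** level):
        raise ValueError("length must be divisible by 2**level")
    nodes = [np.asarray(x, dtype=float)]
    for _ in range(level):
        nodes = [half for node in nodes for half in haar_split(node)]
    return nodes


def node_energies(nodes: list[np.ndarray]) -> np.ndarray:
    """Relative energy of each terminal node, used as a feature vector."""
    e = np.array([np.sum(n ** 2) for n in nodes])
    return e / e.sum()


rng = np.random.default_rng(2)
x = rng.standard_normal(256)
nodes = wpt_haar(x, level=3)
print(len(nodes), nodes[0].size)   # 8 32
print(node_energies(nodes).round(3))
```

Because the Haar steps are orthonormal, the node energies sum to the signal energy, so the feature vector describes how that energy is spread across frequency bands.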

5.2. Feature Fusion and Recognition

5.2.1. FCEM (Fuzzy Comprehensive Evaluation Method)
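The details of the fusion step are not given in this version, so the sketch below assumes the textbook weighted-average fuzzy comprehensive evaluation. A weight vector W over m evaluation factors (here, hypothetically, the four sensor channels) is composed with a membership matrix R whose row i gives factor i's membership degrees for each candidate subject: B = W·R. The subject with the largest component of B is then chosen (maximum-membership principle). Other composition operators, such as max–min, are also used in FCE, and the weights and memberships below are made-up numbers.

```python
import numpy as np


def fuzzy_comprehensive_evaluation(W: np.ndarray, R: np.ndarray) -> np.ndarray:
    """Weighted-average composition B = W . R, normalized to sum to 1.

    W: weights of the m evaluation factors, shape (m,), summing to 1.
    R: membership matrix, shape (m, n); row i holds factor i's membership
       degrees for the n candidate identities.
    """
    W = np.asarray(W, dtype=float)
    R = np.asarray(R, dtype=float)
    if not np.isclose(W.sum(), 1.0):
        raise ValueError("weights must sum to 1")
    B = W @ R
    return B / B.sum()


# Hypothetical example: four sensor channels (factors) scoring three subjects.
W = np.array([0.3, 0.3, 0.2, 0.2])
R = np.array([[0.6, 0.3, 0.1],
              [0.5, 0.4, 0.1],
              [0.2, 0.7, 0.1],
              [0.7, 0.2, 0.1]])
B = fuzzy_comprehensive_evaluation(W, R)
print(B.round(3), "-> subject", int(np.argmax(B)))   # maximum-membership principle
```

Note that channel 3 alone would favor subject 1, but the weighted evidence of the other channels makes subject 0 the fused decision.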

5.2.2. SVM (Support Vector Machine)
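The kernel and training settings of the SVM are not given in this text. As an assumption, the sketch below uses a linear soft-margin SVM trained by full-batch subgradient descent on the regularized hinge loss, extended to several subjects by one-vs-rest. The data are three synthetic Gaussian clusters standing in for three subjects. In practice a library solver with a kernel (for example scikit-learn's `sklearn.svm.SVC`, which wraps LIBSVM) would typically be used instead.

```python
import numpy as np


def train_linear_svm(X, y, lam=0.01, lr=0.05, epochs=500):
    """Binary soft-margin linear SVM trained by subgradient descent on
    lam/2 * ||w||^2 + mean(max(0, 1 - y * (X @ w + b))). Labels y are +1/-1."""
    n, d = X.shape
    w, b = np.zeros(d), 0.0
    for _ in range(epochs):
        margins = y * (X @ w + b)
        active = margins < 1                    # samples with non-zero hinge loss
        ya, Xa = y[active], X[active]
        grad_w = lam * w - (ya[:, None] * Xa).sum(axis=0) / n
        grad_b = -ya.sum() / n
        w = w - lr * grad_w
        b = b - lr * grad_b
    return w, b


def train_one_vs_rest(X, labels, **kw):
    """Train one binary SVM per class (that class = +1, all others = -1)."""
    classes = np.unique(labels)
    models = [train_linear_svm(X, np.where(labels == c, 1.0, -1.0), **kw)
              for c in classes]
    return classes, models


def predict(classes, models, X):
    """Pick the class whose SVM gives the largest decision value."""
    scores = np.column_stack([X @ w + b for w, b in models])
    return classes[np.argmax(scores, axis=1)]


rng = np.random.default_rng(3)
centers = np.array([[0.0, 0.0], [5.0, 0.0], [0.0, 5.0]])   # three hypothetical subjects
X = np.vstack([c + 0.5 * rng.standard_normal((40, 2)) for c in centers])
labels = np.repeat(np.arange(3), 40)
classes, models = train_one_vs_rest(X, labels)
acc = np.mean(predict(classes, models, X) == labels)
print(f"training accuracy: {acc:.2f}")
```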

6. Experiments and Results Analysis

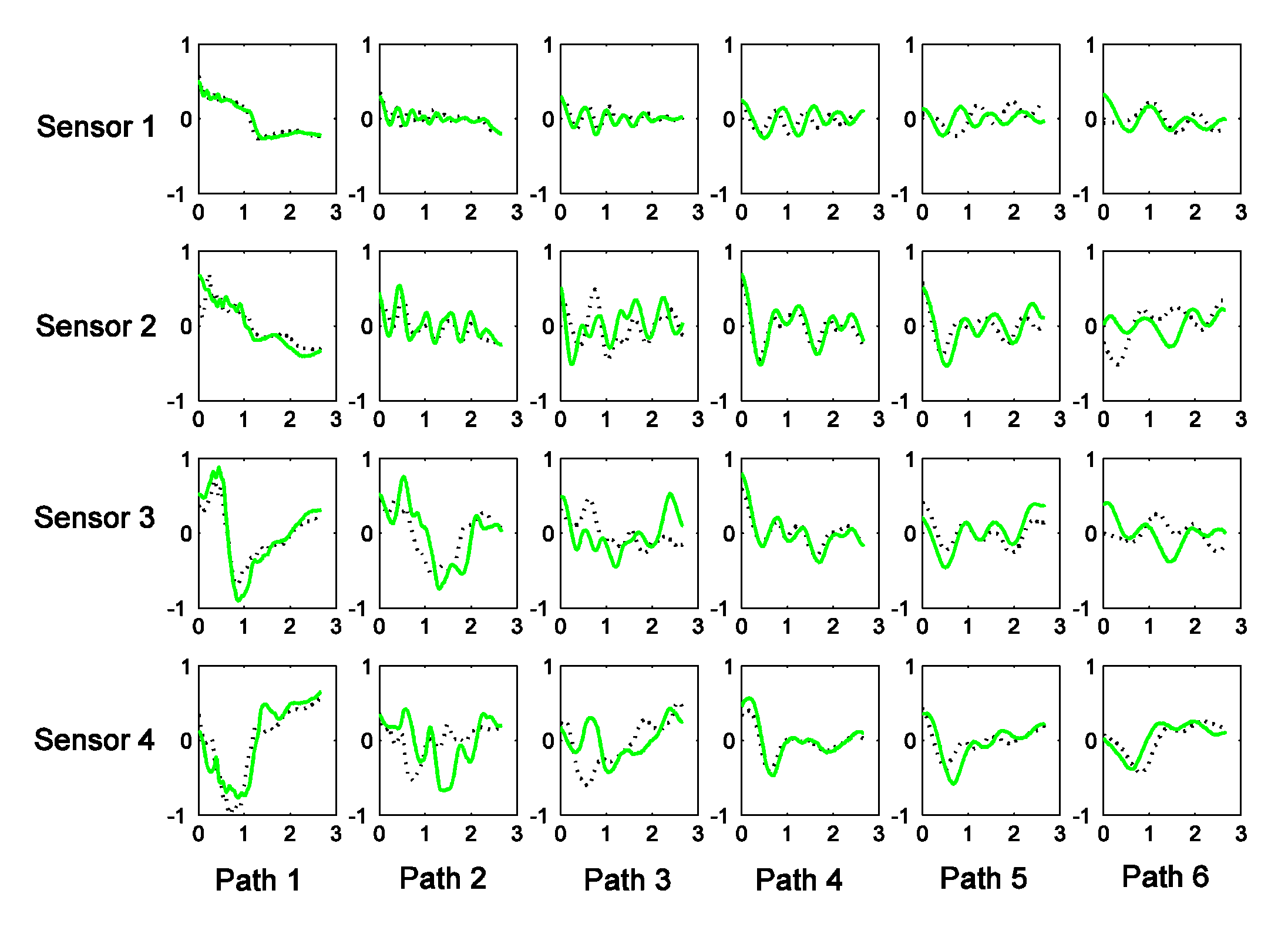

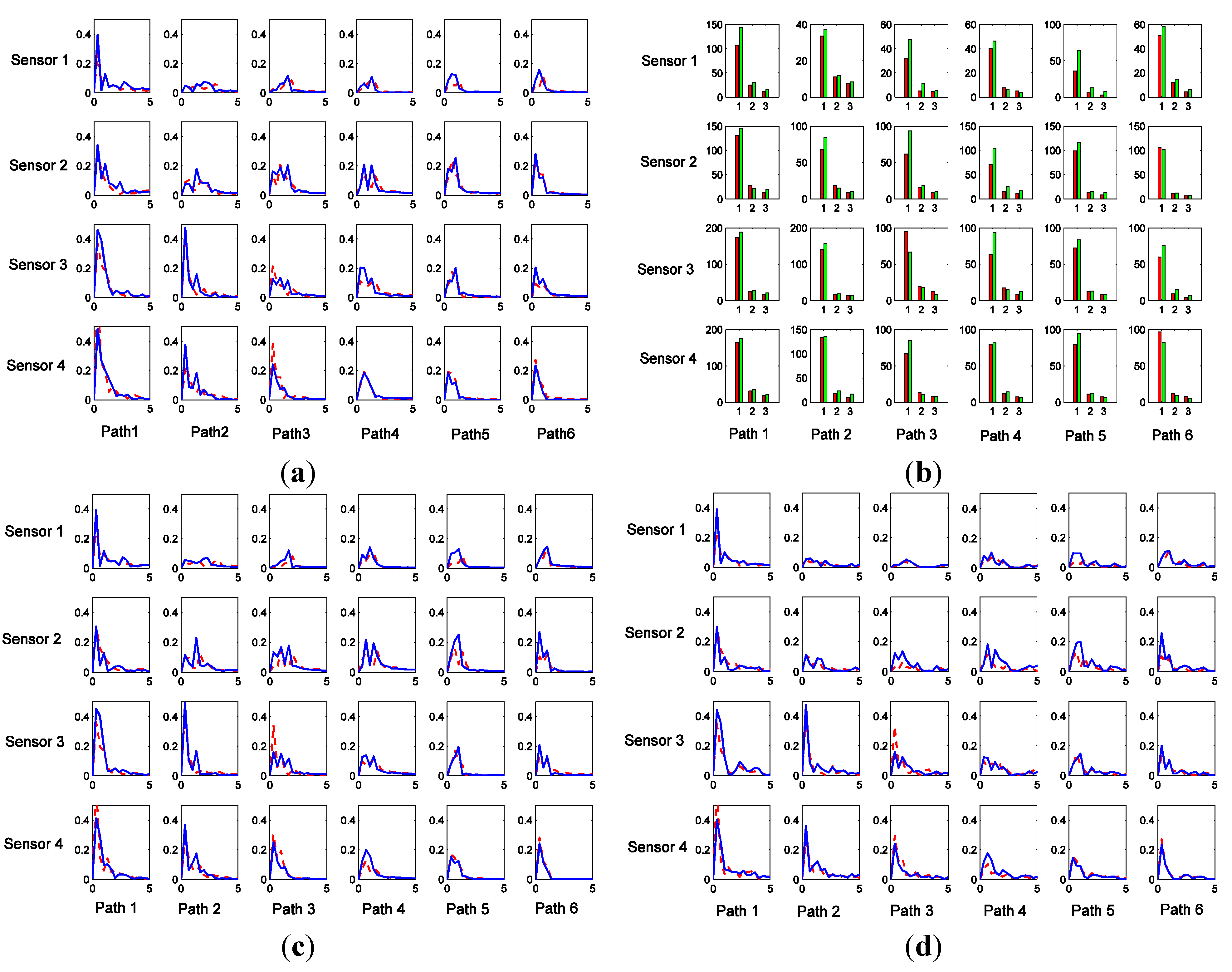

6.1. Feature Extraction

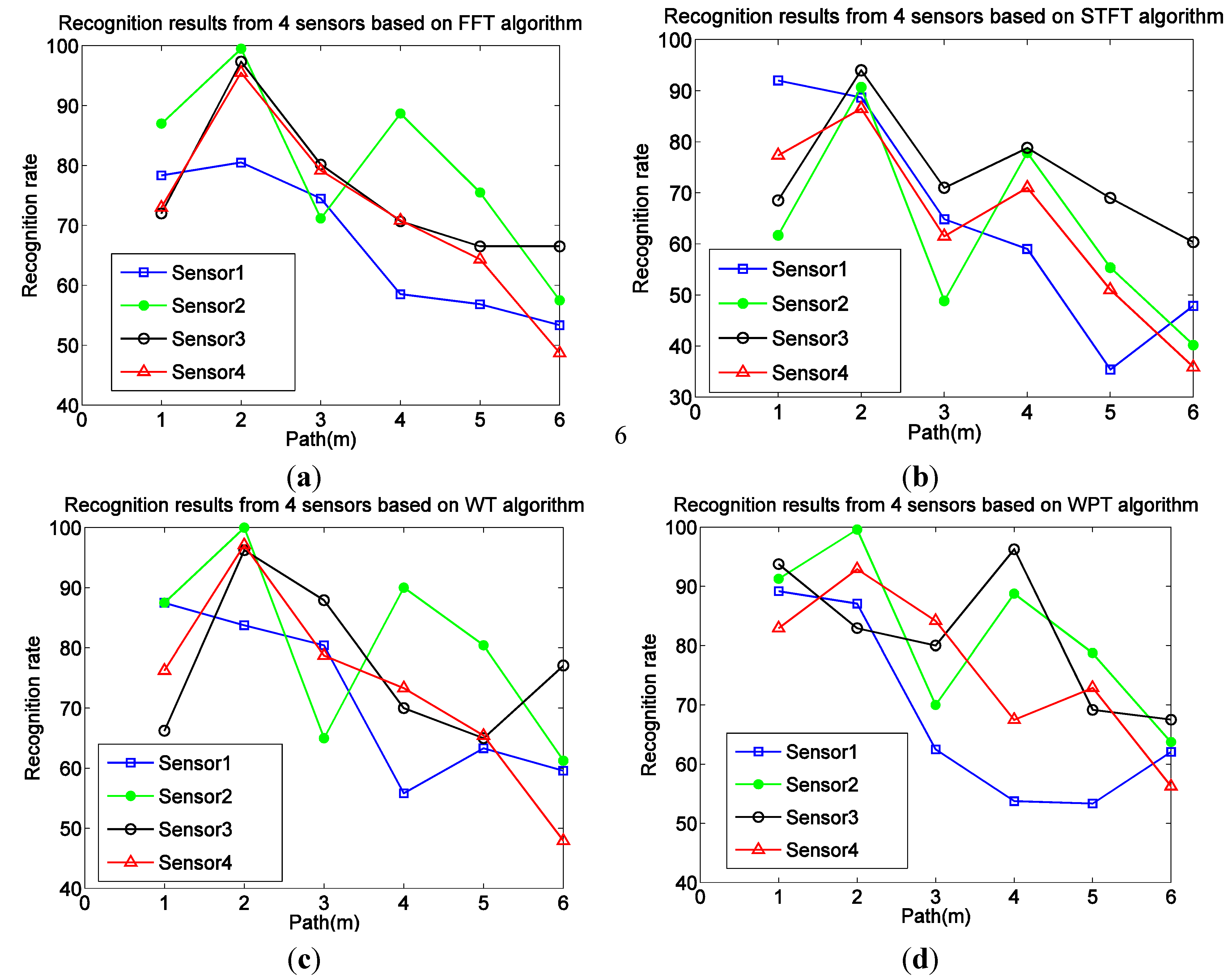

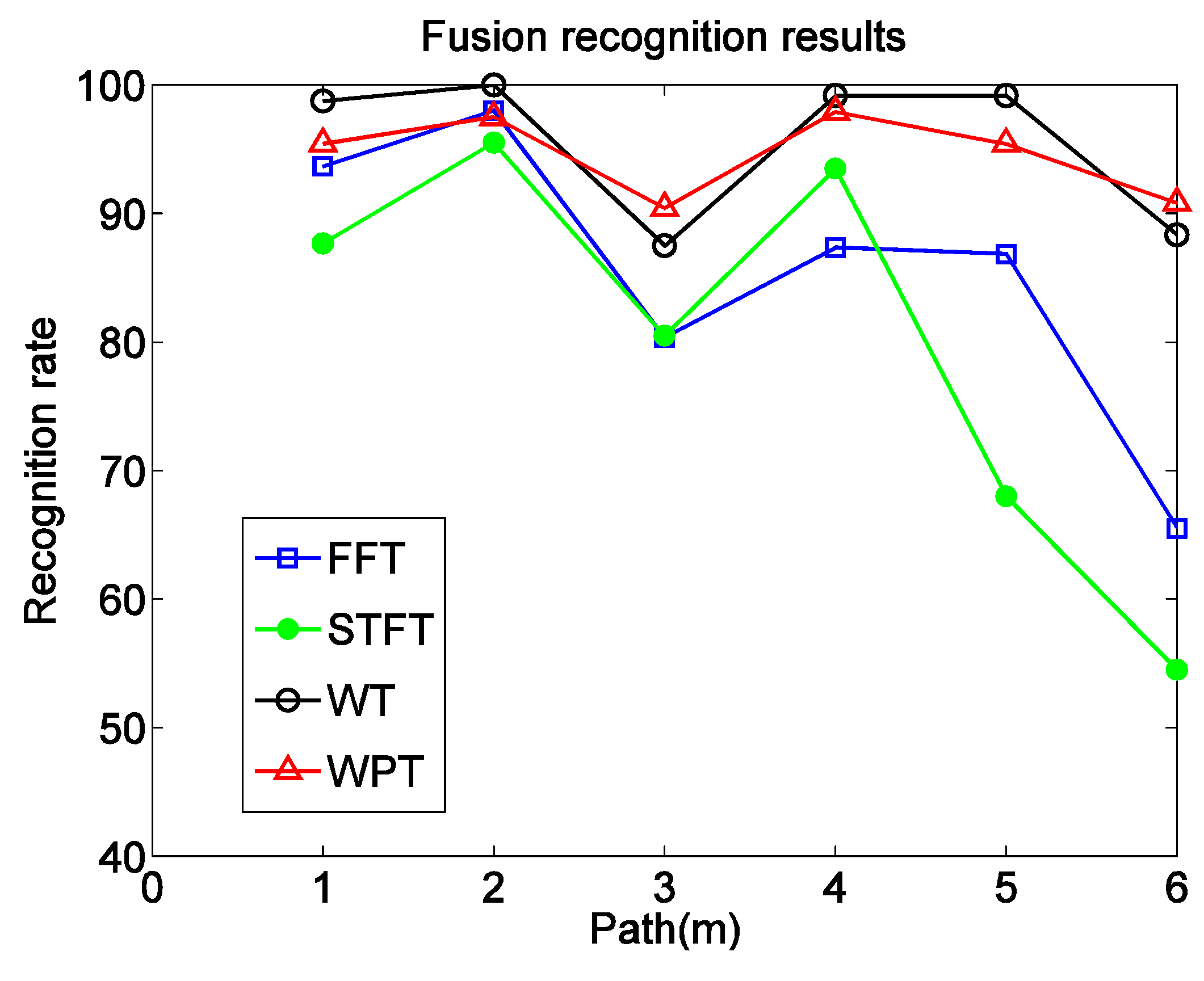

6.2. Comparison of Different Algorithms’ Recognition Rates

| Algorithm | Path 1 (%) | Path 2 (%) | Path 3 (%) | Path 4 (%) | Path 5 (%) | Path 6 (%) | Computing time (s) |
|---|---|---|---|---|---|---|---|
| FFT + PCA + SVM + FCE | 83.5 | 89.6 | 76.3 | 86.0 | 77.7 | 65.0 | 10.1 |
| STFT + SVM + FCE | 87.6 | 95.5 | 80.5 | 93.5 | 68.0 | 54.5 | 262.8 |
| WT + SVM + FCE | 98.7 | 100 | 87.5 | 99.1 | 99.1 | 88.3 | 56.2 |
| WPT + SVM + FCE | 95.4 | 97.5 | 90.4 | 97.9 | 95.4 | 90.8 | 25.0 |

7. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

References

1. Hong, L.; Ze, Y.; Zha, H.; Zou, Y.; Zhang, L. Robust human tracking based on multi-cue integration and mean-shift. Pattern Recognit. Lett. 2009, 30, 827–837.

2. Fan, J.; Xu, W.; Wu, Y.; Gong, Y. Human tracking using convolutional neural networks. IEEE Trans. Neural Netw. 2010, 21, 1610–1623.

3. Kang, S.J.; Samoilov, V.B.; Yoon, Y.S. Low-frequency response of pyroelectric sensors. IEEE Trans. Ultrason. Ferroelect. Freq. Contr. 1998, 45, 1255–1260.

4. Hussain, T.M.; Baig, A.M.; Saddawi, T.N.; Ahmed, S.A. Infrared pyroelectric sensor for detection of vehicular traffic using digital signal processing techniques. IEEE Trans. Veh. Technol. 1995, 44, 683–689.

5. Hossain, A.; Rashid, M.H. Pyroelectric detectors and their applications. IEEE Trans. Ind. Applic. 1991, 27, 824–829.

6. Sekmen, A.S.; Wilkes, M.; Kawamura, K. An application of passive human-robot interaction: Human tracking based on attention distraction. IEEE Trans. Syst. Man Cybern. A 2002, 32, 248–259.

7. Hao, Q.; Brady, D.J.; Guenther, B.D.; Burchett, J.B.; Shankar, M.; Feller, S. Human tracking with wireless distributed pyroelectric sensors. IEEE Sens. J. 2006, 6, 1683–1696.

8. Moghavvemi, M.; Seng, L.C. Pyroelectric infrared sensor for intruder detection. In Proceedings of 2004 IEEE Region 10 Conference (TENCON 2004), Newark, NJ, USA, 21–24 November 2004; pp. 656–659.

9. Bai, Y.W.; Ku, Y.T. Automatic room light intensity detection and control using a microprocessor and light sensors. IEEE Trans. Consum. Electron. 2008, 54, 1173–1176.

10. Rajgarhia, A.; Stann, F.; Heidemann, J. Privacy-sensitive monitoring with a mix of IR sensors and cameras. In Proceedings of the Second International Workshop on Sensor and Actor Network Protocols and Applications, Boston, MA, USA, 22 August 2004; pp. 21–29.

11. Tao, S.; Kudo, M.; Nonaka, H.; Toyama, J. Person authentication and activities analysis in an office environment using a sensor network. Constr. Ambient Intell. 2012, 277, 119–127.

12. Bai, Y.-W.; Teng, H. Enhancement of the sensing distance of an embedded surveillance system with video streaming recording triggered by an infrared sensor circuit. In Proceedings of SICE Annual Conference, Tokyo, Japan, 20–2 August 2008; pp. 1657–1662.

13. Hao, Q.; Hu, F.; Xiao, Y. Multiple human tracking and identification with wireless distributed pyroelectric sensor systems. IEEE Sens. J. 2009, 3, 428–439.

14. Shankar, M.; Burchett, J.B.; Hao, Q.; Guenther, B.D.; Brady, D.J. Human-tracking systems using pyroelectric infrared detectors. Opt. Eng. 2006, 45, 106401.

15. Kim, H.H.; Ha, K.N.; Lee, S.; Lee, K.C. Resident location recognition algorithm using a Bayesian classifier in the PIR sensor-based indoor location-aware system. IEEE Trans. Syst. Man Cybern. C Appl. Rev. Arch. 2000, 39, 2410–2459.

16. Luo, X.; Shen, B.; Guo, X.; Luo, G.; Wang, G. Human tracking using ceiling pyroelectric infrared sensors. In Proceedings of 7th IEEE International Conference on Control and Automation, Christchurch, New Zealand, 9–11 December 2009; pp. 1716–1721.

17. Lu, J.; Gong, J.; Hao, Q.; Hu, F. Space encoding based compressive multiple human tracking with distributed binary pyroelectric infrared sensor networks. In Proceedings of IEEE Conference on Multiple Sensor Fusion and Integration, Hamburg, Germany, 13–15 September 2012; pp. 180–185.

18. Fang, J.-S.; Hao, Q.; Brady, D.J.; Shankar, M.; Guenther, B.D.; Pitsianis, N.P.; Hsu, K.Y. Path-dependent human identification using a pyroelectric infrared sensor and Fresnel lens arrays. Opt. Exp. 2006, 14, 609–624.

19. Fang, J.-S.; Hao, Q.; Brady, D.J.; Guenther, B.D.; Hsu, K.Y. Realtime human identification using a pyroelectric infrared detector array and hidden Markov models. Opt. Exp. 2006, 14, 6643–6658.

20. Zhou, X.; Hao, Q.; Fei, H. 1-bit walker recognition with distributed binary pyroelectric sensors. In Proceedings of 2010 IEEE Conference on Multisensor Fusion and Integration for Intelligent Systems, Salt Lake City, UT, USA, 5–7 September 2010; pp. 168–173.

21. Sun, Q.; Hu, F.; Hao, Q. Context Awareness Emergence for Distributed Binary Pyroelectric Sensors. In Proceedings of 2010 IEEE Conference on Multisensor Fusion and Integration for Intelligent Systems, Salt Lake City, UT, USA, 5–7 September 2010; pp. 162–167.

22. Hu, F.; Sun, Q.; Hao, Q. Mobile Targets Region-of-Interest via Distributed Pyroelectric Sensor Network: Towards a Robust, Real-time Context Reasoning. In Proceedings of 2010 IEEE Conference on Sensors, Kona, HI, USA, 1–4 November 2010; pp. 1832–1836.

23. Sun, Q.; Hu, F.; Hao, Q. Mobile Target Scenario Recognition via Low-cost Pyroelectric Sensing System: Toward a Context-Enhanced Accurate Identification. IEEE Trans. Syst. Man Cybern. Syst. 2014, 44, 375–384.

24. Sun, Q.; Lu, J.; Wu, Y.; Qiao, H.; Huang, X. Non-informative Hierarchical Bayesian Inference for Non-negative Matrix Factorization. Signal Process. 2015, 108, 309–321.

25. Chen, J.; Pi, D.; Liu, Z. An Insensitivity Fuzzy C-means Clustering Algorithm Based on Penalty Factor. J. Softw. 2013, 8, 2379–2384.

26. Su, X.; Jiang, W.; Xu, J.; Xu, P.; Deng, Y. A New Fuzzy Risk Analysis Method Based on Generalized Fuzzy Numbers. J. Softw. 2011, 6, 1755–1762.

27. Gumus, E.; Kilic, N.; Sertbas, A.; Ucan, O.N. Evaluation of face recognition techniques using PCA, wavelets and SVM. Expert Syst. Appl. 2010, 37, 6404–6408.

28. Jiang, Y.; Tang, B.; Qin, Y.; Liu, W. Feature extraction method of wind turbine based on adaptive Morlet wavelet and SVD. Renew. Energy 2011, 36, 2146–2153.

29. Kadambe, S.; Murray, R.; Boudreaux-Bartels, G.F. Wavelet transform-based QRS complex detector. IEEE Trans. Biomed. Eng. 1999, 46, 838–848.

30. Sun, Z.; Chang, C. Structural Damage Assessment Based on Wavelet Packet Transform. J. Struct. Eng. 2002, 128, 1354–1361.

31. Guo, L.; Gao, J.; Yang, J.; Kang, J. Criticality evaluation of petrochemical equipment based on fuzzy comprehensive evaluation and a BP neural network. J. Loss Prev. Process Ind. 2009, 22, 469–476.

32. Qian, H.; Mao, Y.; Xiang, W.; Wang, Z. Recognition of human activities using SVM multi-class classifier. Pattern Recognit. Lett. 2010, 31, 100–111.

© 2014 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution license (http://creativecommons.org/licenses/by/4.0/).


Zhou, W.; Xiong, J.; Li, F.; Jiang, N.; Zhao, N. Fusion of Multiple Pyroelectric Characteristics for Human Body Identification. Algorithms 2014, 7, 685-702. https://doi.org/10.3390/a7040685
