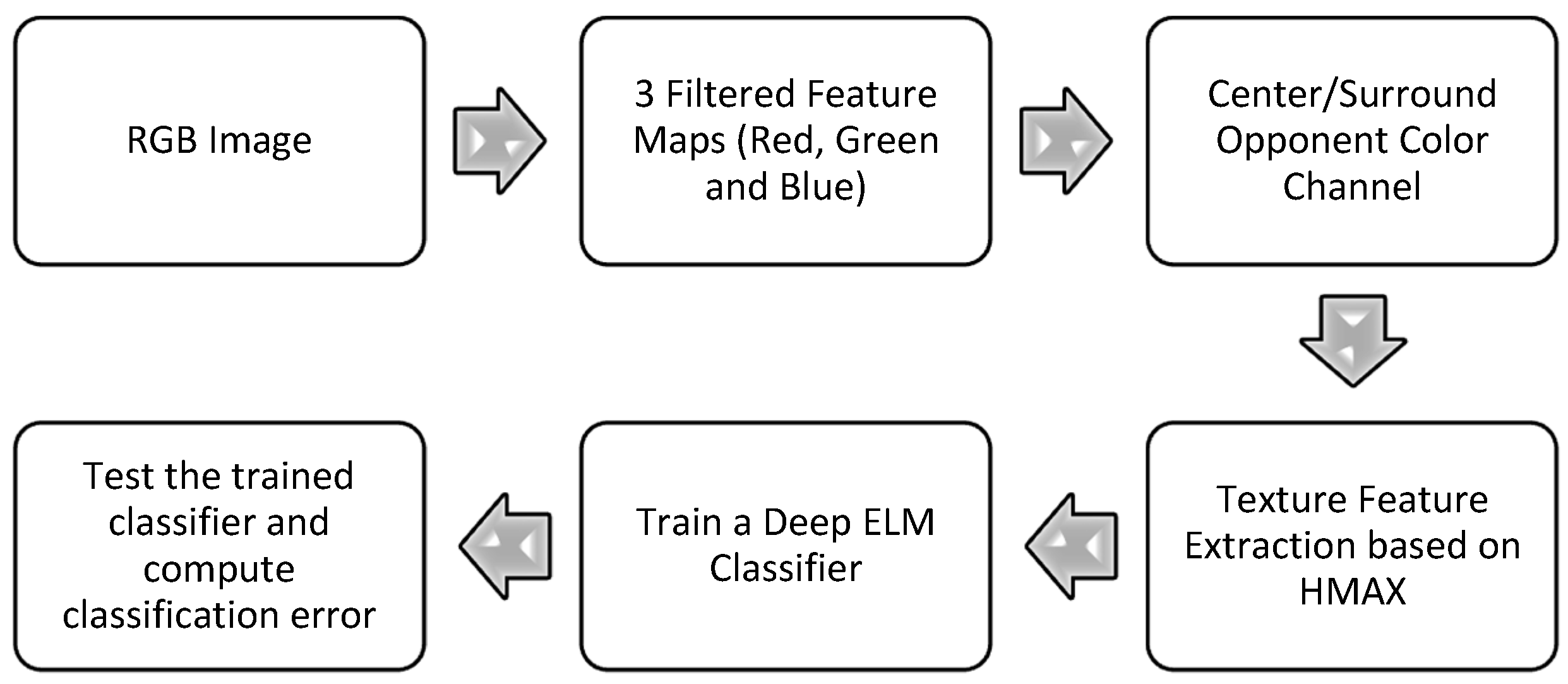

The overall flow of the proposed algorithm is summarized in Figure 1. A fabric texture image is fed to the proposed fabric weave pattern recognition and classification system in RGB (red-green-blue) color format. Three color filters are applied to each input image to separate its color channels. These three channels, namely red, green, and blue, are then combined into three opponent color channels: red-green (RG), yellow-blue (YB), and white-black (WB). The opponent color channels are inspired by human vision, as proposed by the classical opponent color channel theory [16]. The opponent color channel features are then fed to the feature descriptor, where they are processed further to extract the fabric weave pattern and yarn color features. The two main parts of our model are the feature descriptor and the weave pattern classifier, which are described in detail in the following sub-sections.
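As a minimal illustration of how three RGB channels can be combined into opponent channels, the sketch below uses a common textbook set of linear combinations. These particular weights, and the function name `opponent_channels`, are assumptions made for illustration; the descriptor used in this work (described in [17]) may weight or filter the channels differently.

```python
import numpy as np


def opponent_channels(rgb):
    """Split an H x W x 3 RGB image into RG, YB and WB opponent channels.

    The linear combinations below are an assumed, commonly used choice;
    they are not taken from the paper.
    """
    rgb = np.asarray(rgb, dtype=np.float64)
    r, g, b = rgb[..., 0], rgb[..., 1], rgb[..., 2]
    rg = r - g                # red-green opponency
    yb = (r + g) / 2.0 - b    # yellow-blue (yellow taken as the mean of R and G)
    wb = (r + g + b) / 3.0    # white-black (achromatic intensity)
    return rg, yb, wb


# Example: one pure-red pixel and one mid-gray pixel.
image = np.array([[[1.0, 0.0, 0.0], [0.5, 0.5, 0.5]]])
rg, yb, wb = opponent_channels(image)
```

A gray pixel yields zero in both chromatic channels under this choice, which is the property that makes opponent channels useful for separating color from intensity.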

2.1. Biologically-Inspired Deep ELM-Based Pattern Recognition Network Design (D-ELM)

In most conventional multi-layer implementations of the extreme learning machine (ELM), the computational complexity, the memory requirement, and the number of hidden layers that must be active simultaneously are all very high. To reduce these costs and the computation time while maintaining good classification accuracy, we propose a different approach. In conventional deep ELM structures, the ELM is first trained with the training dataset itself as the desired target, without the use of class labels. The input weights of the ELM are then obtained by transposing the trained output weights. The hidden-layer activations are trained repeatedly in the same way, before the final large hidden layer is trained as the classifier output [26]. The deep ELM network we propose in this paper differs significantly from a conventional one in three ways. First, the input weights are left untrained in the multiple ELM modules that are combined to create the deep ELM network. Second, each module auto-encodes its input rather than the hidden-layer responses. Third, each module’s output weights are trained using labeled data. We describe the proposed method in detail below.

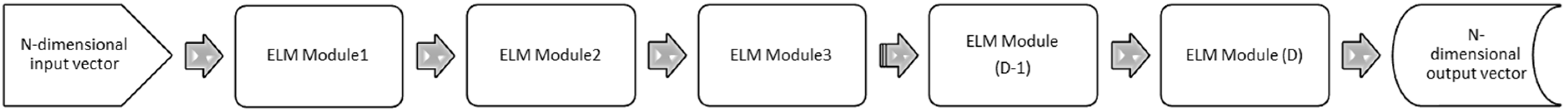

In our model, we create a multi-layered deep ELM network by cascading standard ELM modules (each a three-layer model with an input layer, a single hidden layer, and an output layer), with each ELM module receiving its input from the preceding module, as shown in Figure 2. The output of each ELM module therefore acts as the input of the following module. Thus, a D-module deep ELM can be implemented by adding identical ELM modules D times.

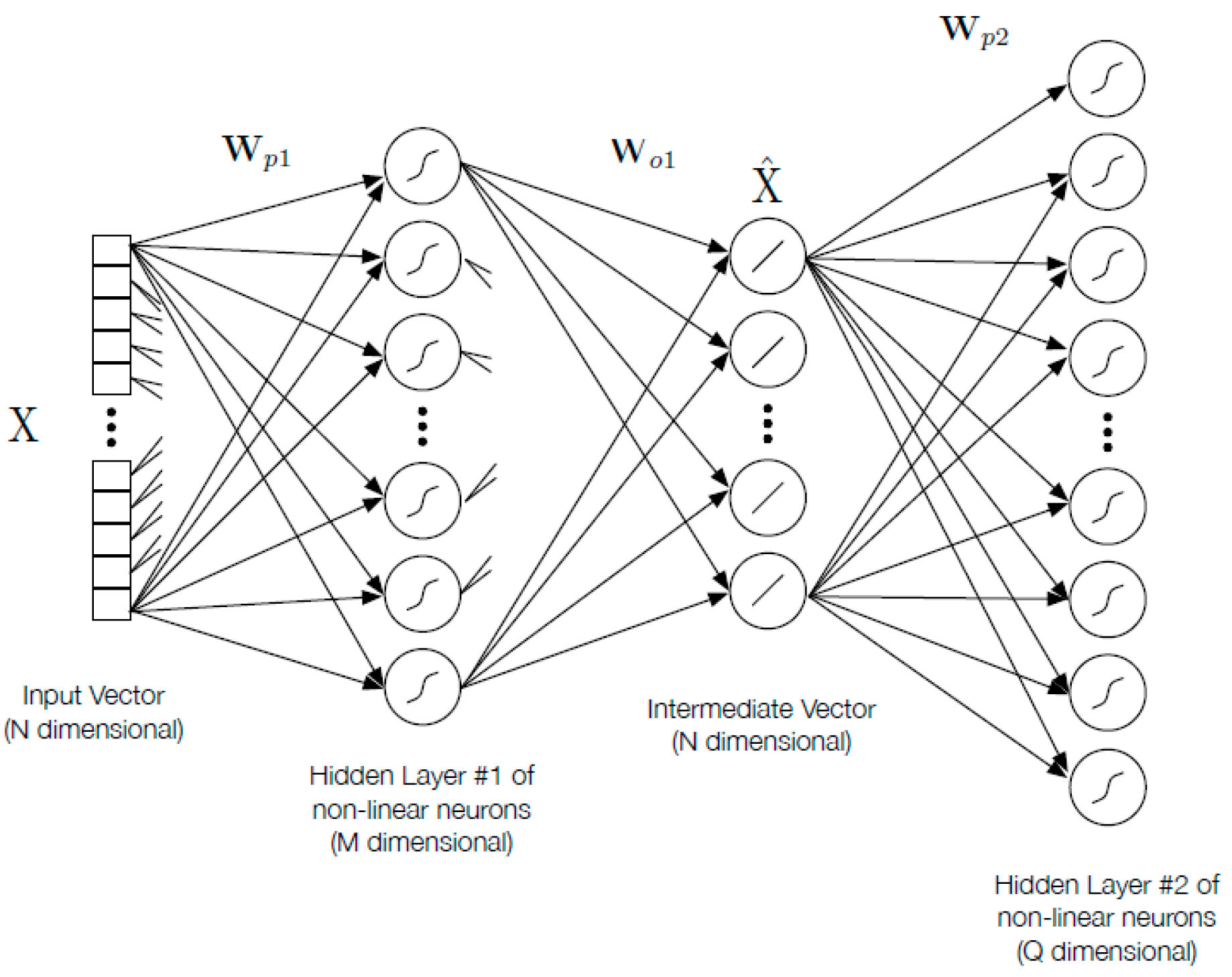

We describe the proposed network by following the flow of an N-dimensional input vector through it. As described earlier, the input to the deep ELM-based classifier is the feature representation matrix produced by the color HMAX-based feature descriptor for the training and testing images in the dataset. Our deep ELM network is a multi-layer variant of the conventional ELM model, with multiple hidden layers. The training or test vector $X \in \mathbb{R}^{N \times 1}$ is fed to the input layer. The input layer is connected to the hidden layer of the first ELM module (of size M), as shown in Figure 3, by an input weight matrix $W_{p1} \in \mathbb{R}^{M \times N}$, so that the hidden-layer activation is $H_1 = W_{p1} X \in \mathbb{R}^{M \times 1}$. The output of the ith hidden unit is obtained with the logistic sigmoid function:

$$f(H_1)_i = \frac{1}{1 + \exp(-H_{1,i})}$$

The approximation of the input, $\hat{X} \in \mathbb{R}^{N \times 1}$, is produced by multiplying the hidden-layer responses by the output weight matrix $W_{O1}$. Autoencoding is performed by training the output weights using K training vectors. A matrix $A \in \mathbb{R}^{M \times K}$ is defined in which each column holds the hidden-layer output $f(H_1)$ at one training point. Then, to minimize the mean squared error, we solve for $W_{O1} \in \mathbb{R}^{N \times M}$ using the training data $Y \in \mathbb{R}^{N \times K}$, in which each column holds one training vector:

$$W_{O1} = \arg\min_{W} \left\lVert Y - W A \right\rVert^2$$

As in the supervised training of extreme learning machines conducted elsewhere [23,28,37], and with the original training set images as the target $Y$, we find the solution of the following set of NM linear equations in the NM unknown elements of $W_{O1}$:

$$Y A^{\top} = W_{O1} \left( A A^{\top} + c I \right)$$

where c is the regularization parameter, which can be optimized as a hyperparameter, and I is the identity matrix of order M.
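The training of one auto-encoding module can be sketched in a few lines of NumPy. This is a minimal sketch under stated assumptions, not the authors' implementation: it assumes the standard regularized least-squares (ridge) form for the output weights, draws the untrained input weights from a standard normal distribution, and uses hypothetical names (`train_output_weights`, `W_p1`, `W_O1`) and toy sizes.

```python
import numpy as np


def sigmoid(h):
    """Logistic sigmoid applied element-wise."""
    return 1.0 / (1.0 + np.exp(-h))


def train_output_weights(W_p, X, Y, c=1e-2):
    """Solve Y A^T = W_O (A A^T + c I) for W_O (assumed ridge form).

    W_p: (M, N) untrained input weights
    X:   (N, K) training inputs, one column per training vector
    Y:   (P, K) training targets, one column per training vector
    """
    A = sigmoid(W_p @ X)                      # (M, K) hidden-layer outputs
    G = A @ A.T + c * np.eye(A.shape[0])      # (M, M), symmetric
    # W_O G = Y A^T is equivalent to G W_O^T = A Y^T because G is symmetric.
    return np.linalg.solve(G, A @ Y.T).T      # (P, M)


# Toy auto-encoding example: the target is the input itself.
rng = np.random.default_rng(0)
N, M, K = 8, 32, 200
X = rng.random((N, K))
W_p1 = rng.standard_normal((M, N))            # assumed random initialization
W_O1 = train_output_weights(W_p1, X, X)
X_hat = W_O1 @ sigmoid(W_p1 @ X)              # auto-encoded inputs, (N, K)
```

Solving the linear system with `np.linalg.solve` avoids forming an explicit matrix inverse, which is usually both faster and numerically safer for this kind of problem.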

An input instance $X$ is converted into a new vector $\hat{X}$ using this trained weight matrix $W_{O1}$ as follows:

$$\hat{X} = W_{O1} f(W_{p1} X)$$

which is the auto-encoded form of the input data.

The weight matrix $W_{p2} \in \mathbb{R}^{Q \times N}$ is used to construct the next module of the proposed network: the second hidden layer, with input $H_2 = W_{p2} \hat{X} \in \mathbb{R}^{Q \times 1}$, is connected to the auto-encoded input $\hat{X}$, where Q need not equal the size of the first hidden layer, M. The output weights of the second module, $W_{O2}$, are then trained in the same way as those of the first module, producing a new auto-encoded response:

$$\hat{X}_2 = W_{O2} f(W_{p2} \hat{X})$$

A D-module deep ELM network can be formed by repeating the process explained above for each of the D modules.
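The module-by-module procedure can be sketched as a simple loop. The code below is an illustrative sketch only: the function names, the standard normal initialization of the untrained input weights, and the ridge solution for the output weights are assumptions, and the hidden-layer sizes are arbitrary.

```python
import numpy as np


def sigmoid(h):
    """Logistic sigmoid applied element-wise."""
    return 1.0 / (1.0 + np.exp(-h))


def train_deep_elm(X, Y, hidden_sizes, c=1e-2, seed=0):
    """Train a cascade of auto-encoding ELM modules.

    X: (N, K) inputs, Y: (P, K) targets, hidden_sizes: one width per module.
    Returns a list of (W_p, W_O) pairs, one per module.
    """
    rng = np.random.default_rng(seed)
    modules = []
    Z = X                                                # input to the current module
    for M in hidden_sizes:
        W_p = rng.standard_normal((M, Z.shape[0]))       # untrained input weights
        A = sigmoid(W_p @ Z)                             # hidden-layer outputs
        G = A @ A.T + c * np.eye(M)
        W_O = np.linalg.solve(G, A @ Y.T).T              # trained output weights
        modules.append((W_p, W_O))
        Z = W_O @ A                                      # response fed to the next module
    return modules


def forward(modules, X):
    """Propagate inputs through every trained module in order."""
    Z = X
    for W_p, W_O in modules:
        Z = W_O @ sigmoid(W_p @ Z)
    return Z


# Toy example with three modules of different hidden sizes.
rng = np.random.default_rng(0)
X = rng.random((6, 100))
modules = train_deep_elm(X, X, hidden_sizes=[20, 30, 25])
output = forward(modules, X)
```

Each module only needs its own hidden layer in memory while it is being trained, which is the property the cascade is designed to exploit.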

The sequential steps of the training procedure are explained above. After training is complete, the two weight matrices $W_{O1} \in \mathbb{R}^{N \times M}$ and $W_{p2} \in \mathbb{R}^{Q \times N}$ are combined into a single weight matrix $W_{h12} \in \mathbb{R}^{Q \times M}$ that connects the first two hidden layers:

$$W_{h12} = W_{p2} W_{O1}$$

Similarly, the same combination can be repeated for the subsequent modules, because the input layers and the output layers of an ELM module are linear.
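The effect of merging two linear layers can be checked numerically. In the sketch below, the merged matrix is taken to be the product of the second module's input weights and the first module's output weights, which is an assumption consistent with the stated size Q⨯M; all matrices are random stand-ins rather than trained weights, and the variable names are hypothetical.

```python
import numpy as np


def sigmoid(h):
    """Logistic sigmoid applied element-wise."""
    return 1.0 / (1.0 + np.exp(-h))


rng = np.random.default_rng(1)
N, M, Q = 5, 12, 9
X = rng.random((N, 1))
W_p1 = rng.standard_normal((M, N))   # first module, input weights
W_O1 = rng.standard_normal((N, M))   # stand-in for trained output weights
W_p2 = rng.standard_normal((Q, N))   # second module, input weights

# Two-step path: decode to the auto-encoded input, then project again.
H2_two_step = W_p2 @ (W_O1 @ sigmoid(W_p1 @ X))

# Merged path: one matrix between the first and second hidden layers.
W_h12 = W_p2 @ W_O1                  # (Q, M)
H2_merged = W_h12 @ sigmoid(W_p1 @ X)
```

Both paths give the same second-layer activation, so after training the intermediate N-dimensional layer does not need to be evaluated explicitly.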

We classify the dataset images by embedding the class labels as integers in the first column of the training image vectors, so that each row carries the class label of the feature vector to which it belongs. The addition of label pixels is shown in Table 1, where the N features of each image are represented as attribute 1, attribute 2, through attribute N, and the class category of the image is represented as the “target” class. The first column of Table 1 gives the class to which the features of that row (a particular input image) belong. The resulting training dataset is used as the target matrix, Y, to train every auto-encoding ELM module. The first module receives only the image as input. Hence, the labeled training images are contained in matrix Y, but the input to the network’s first module, X, consists of the images only. In the classification step, the elements of the prediction vector correspond to the class labels.
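The label-embedding layout can be illustrated with a short sketch. The helper names are hypothetical, and two details are assumptions not stated in the text: class labels are taken to be zero-based integers, and a predicted label is decoded by rounding the first element of the prediction to the nearest valid class.

```python
import numpy as np


def build_target_matrix(features, labels):
    """Prepend integer class labels as the first column of the feature rows.

    features: (K, N) array, one row of N attributes per image
    labels:   (K,) integer class label per image
    Returns a (K, N + 1) array laid out as [target, attribute 1, ..., attribute N].
    """
    features = np.asarray(features, dtype=np.float64)
    labels = np.asarray(labels, dtype=np.float64).reshape(-1, 1)
    return np.hstack([labels, features])


def decode_label(prediction_row, n_classes):
    """Round the label entry of a predicted row to the nearest valid class.

    Rounding and clipping are an assumed decoding rule, chosen for illustration.
    """
    return int(np.clip(np.rint(prediction_row[0]), 0, n_classes - 1))


# Two images with two attributes each, belonging to classes 2 and 0.
Y_rows = build_target_matrix([[0.1, 0.2], [0.3, 0.4]], [2, 0])
predicted_class = decode_label(np.array([1.7, 0.12, 0.18]), n_classes=3)
```

If the network is written with one training vector per column, as in the equations of this section, the transpose of this row-wise matrix would be used as the target.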

The deep extreme learning machine (ELM) algorithm proposed in our study reduces the training time of the network relative to a single ELM with hidden layers of similar size, and can also improve the classification accuracy relative to other single-module ELM methods.

2.3. Brief Description of Color HMAX Based Feature Descriptor (Opponent Color Channels)

The biologically-inspired HMAX model [39] was extended to include color cues for recognition from color images, and significantly improved performance was reported on several color image datasets [40,41,42]. We implemented this extension of the HMAX model to recognize and classify fabric weave patterns and yarn color [17]. The main difference in the extension was the incorporation of the pattern’s color information in the feature descriptor using opponent color channels. The two main parts of this model are the feature descriptor and the classifier. The feature descriptor is based on the fusion of the opponent color channels and the HMAX model, and the classifier is implemented using a deep ELM. The descriptor extracts the object texture and object color information from the image, while the classifier in the later stage uses this information to perform the regression and the classification. To improve the recognition accuracy of the previously developed algorithm [17], we replaced its SVM classifier with the ELM-based classifier in this paper.

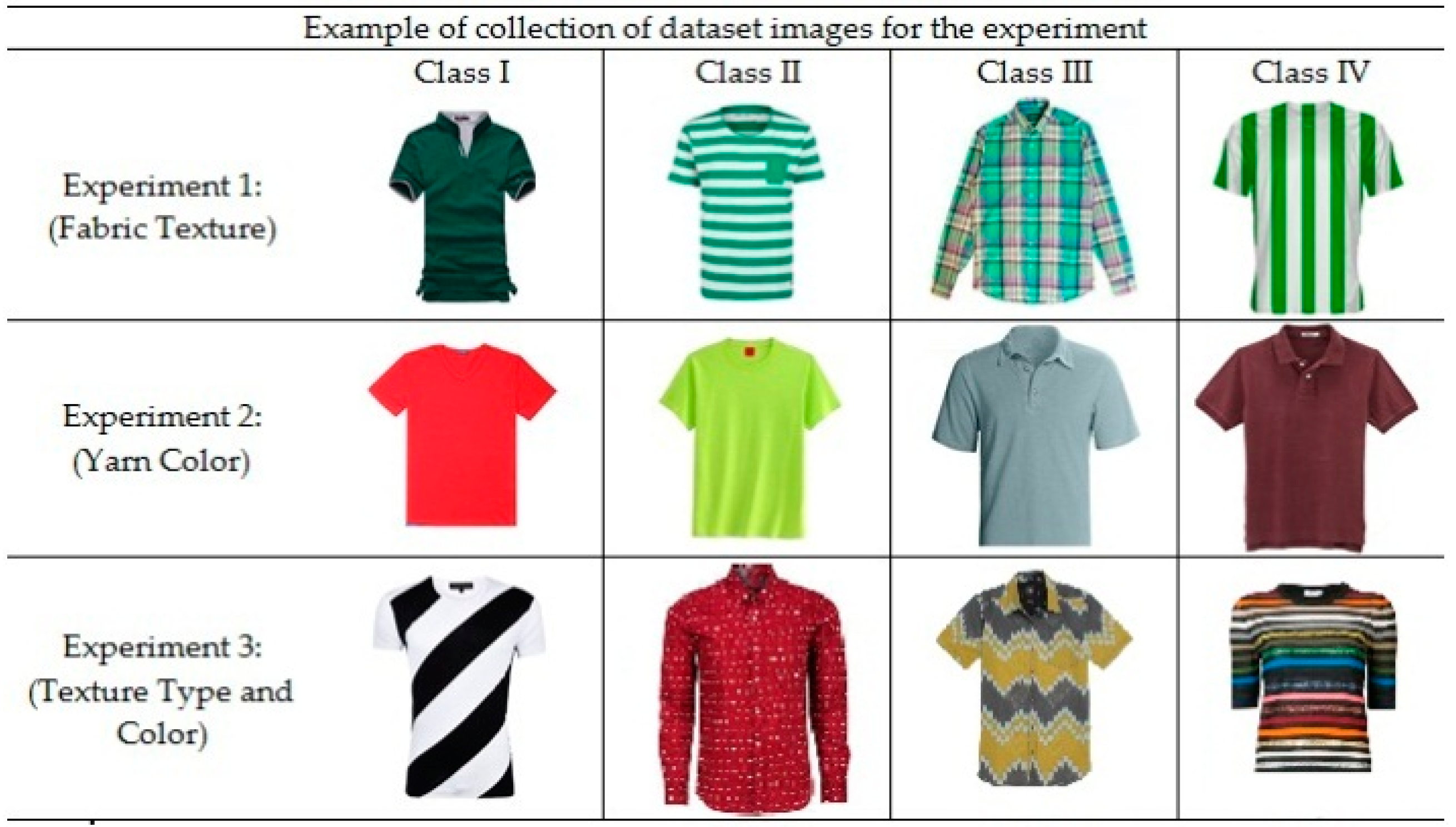

Processing starts with an input RGB image (see Figure 1). The processing architecture comprises two stages, namely, the feature descriptor stage and the classification stage. In stage I, the individual red, green, and blue color channels are combined into three pairs of opponent color channels, namely, the red-green, yellow-blue, and white-black opponent color channels, using a Gabor filter. The opponent color channels are integrated with the HMAX model so that the pattern features and color features can be processed simultaneously. The extracted features, which correspond to the pattern and color, are then fed to the deep neural network-based classification stage. In the classification stage (stage II), we first train the classifier on the features of the training image set, and then test the trained classifier with the testing image set.

The process of filtering the RGB image into individual color channels, combining these channels into opponent color channels, and integrating them with the HMAX model is explained in detail in [17], as the feature descriptor stage is the same as the implementation in [17]. We further improved the earlier proposed model by replacing the classifier stage with a neural network-based classifier. Next, we briefly describe the method used to construct our proposed deep extreme learning network as a weave pattern and yarn color classifier.

The architecture and hierarchy of the layers of the HMAX model and their functionality are discussed in detail elsewhere [39,43,44,45,46,47]. The color HMAX implementation for fabric texture recognition and classification was also achieved in our earlier study [17].