The probability of failing at or before a given length of time is then given by F(t) = 1 − S(t). The probability of failure in a very small increment of time, ∆t, is then f(t) = ∆F(t)/∆t. F(t) is often referred to as the cumulative distribution function (cdf) and its derivative, f(t), the probability density function (pdf).

The probability of failure can also be expressed through the hazard function. This function gives the rate of failure at time t, given that the specimen survives up to time t,

$$h(t) = \lim_{\Delta t \to 0} \frac{P(t \le T < t + \Delta t \mid T \ge t)}{\Delta t}$$

where | T ≥ t reads "given that T is greater than or equal to t". As such, the hazard rate is a conditional probability of failure. A conditional probability is defined as P(A|B) = P(A and B)/P(B), where, in terms of the hazard rate, P(B) is the probability of surviving a length of time t and so equals S(t). P(A and B) is then the probability of failing in the small increment of time ∆t beyond t, which, per unit of time, is the pdf at time t. Thus

$$h(t) = \frac{f(t)}{S(t)}$$
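As a minimal numerical sketch of how f(t), S(t) and the hazard rate relate, the Python snippet below uses an exponential failure time distribution with an arbitrary rate of 0.01 per hour. The rate and all function names are illustrative assumptions, not values or notation from the dataset. The pdf is approximated by ∆F(t)/∆t, and the ratio f(t)/S(t) should then return the constant rate at every t.

```python
import math

RATE = 0.01  # assumed failure rate per hour, for illustration only


def survivor(t):
    """S(t) for an exponential failure time distribution."""
    return math.exp(-RATE * t)


def cdf(t):
    """F(t) = 1 - S(t)."""
    return 1.0 - survivor(t)


def pdf(t, dt=1e-4):
    """f(t) approximated by the finite difference [F(t + dt) - F(t)] / dt."""
    return (cdf(t + dt) - cdf(t)) / dt


def hazard(t):
    """h(t) = f(t) / S(t): failure rate conditional on survival to t."""
    return pdf(t) / survivor(t)


if __name__ == "__main__":
    for t in (10.0, 100.0, 500.0):
        print(t, round(hazard(t), 5))
```

For distributions other than the exponential the hazard rate varies with t, but the same ratio applies.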

Approaches to estimating the survivor function generally fall under three headings: parametric, non-parametric and semi-parametric. The assumption behind the parametric approach is that the form of the survivor function can be captured through a small number of parameters. For example, if failure times at a fixed test condition are normally distributed, then the survivor function is fully defined through two parameters: the mean and the standard deviation. In contrast, the non-parametric approach is model (parameter) free and as such makes no assumptions about how failure times are distributed. The semi-parametric approach combines these two approaches, for example, by specifying a baseline hazard function at a particular test condition non-parametrically and then using a few parameters to model how this baseline function changes with the test conditions.

3.1.1. Non-Parametric Estimation

The starting point for many non-parametric techniques is to partition time into j = 1 to k equal intervals, with k being as large as practically possible. If n equals the number of specimens placed on test at the same test condition and dj the number of specimens failing during the jth interval, then Kaplan and Meier [33] proposed the following estimator of the survivor function (for uncensored data) that has as its basis the binomial distribution

$$\hat{S}_{KM}(t) = \prod_{j:\, t_j \le t} \left(1 - \frac{d_j}{r_j}\right)$$

where dj is the number of failures in time interval j and rj is the number of specimens still at risk (not yet failed) at the start of that interval, so that r1 = n. This estimator is also referred to as the product-limit estimator, as these authors originally justified it through its properties as k tends to infinity, that is, as the time interval tends to zero.

Nelson [34] and Aalen [35] proposed the following non-parametric estimator of the cumulative hazard function

$$\hat{H}_{NA}(t) = \sum_{j:\, t_j \le t} \frac{d_j}{r_j}$$

where rj is the total number of specimens at risk (or not yet failed) just prior to time tj. The Fleming-Harrington [36] estimator of the survivor function is, from Equations (7e) and (8b),

$$\hat{S}_{FH}(t) = \exp\left[-\hat{H}_{NA}(t)\right]$$

The above are of course estimates (designated by the hat symbol) of the survivor function that would be obtained if the same calculations were applied to the population or to a very large sample. The standard deviation of each estimator provides a way to quantify the possible size of the difference between the true or population survivor function, S(t), and that calculated from a small sample or a randomly selected subset of the population. The standard deviations of these estimators are in turn estimated by

In large samples, these estimates are unbiased and the Nelson-Aalen estimator is then also approximately normally distributed.
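The estimators described above can be sketched in a few lines of Python for uncensored data. The function name and the failure times in the usage note are hypothetical. The standard errors use Greenwood's formula for the Kaplan-Meier estimator and the sum of dj/rj² for the Nelson-Aalen estimator; these are commonly used forms, assumed here, and may differ from the expressions used in this paper.

```python
import math


def nonparametric_estimates(failure_times):
    """Kaplan-Meier, Nelson-Aalen and Fleming-Harrington estimates (no censoring).

    Returns one tuple per distinct failure time:
    (t, S_KM, H_NA, S_FH, se_S_KM, se_H_NA).
    """
    ordered = sorted(failure_times)
    n = len(ordered)
    s_km, h_na = 1.0, 0.0
    greenwood_sum, var_na = 0.0, 0.0
    results = []
    i = 0
    while i < n:
        t = ordered[i]
        d = ordered.count(t)   # number failing at time t
        r = n - i              # number at risk just prior to t
        s_km *= 1.0 - d / r    # product-limit update
        h_na += d / r          # cumulative hazard update
        var_na += d / r ** 2   # assumed Nelson-Aalen variance term
        if r > d:
            greenwood_sum += d / (r * (r - d))
            se_km = s_km * math.sqrt(greenwood_sum)
        else:
            se_km = 0.0        # S_KM is zero here; Greenwood's term is undefined
        results.append((t, s_km, h_na, math.exp(-h_na), se_km, math.sqrt(var_na)))
        i += d
    return results
```

Calling `nonparametric_estimates([120, 180, 250, 310, 400])` on these made-up failure times gives a Kaplan-Meier estimate of 0.8 after the first failure. The Fleming-Harrington estimate is never below the Kaplan-Meier estimate, because exp(−x) ≥ 1 − x.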

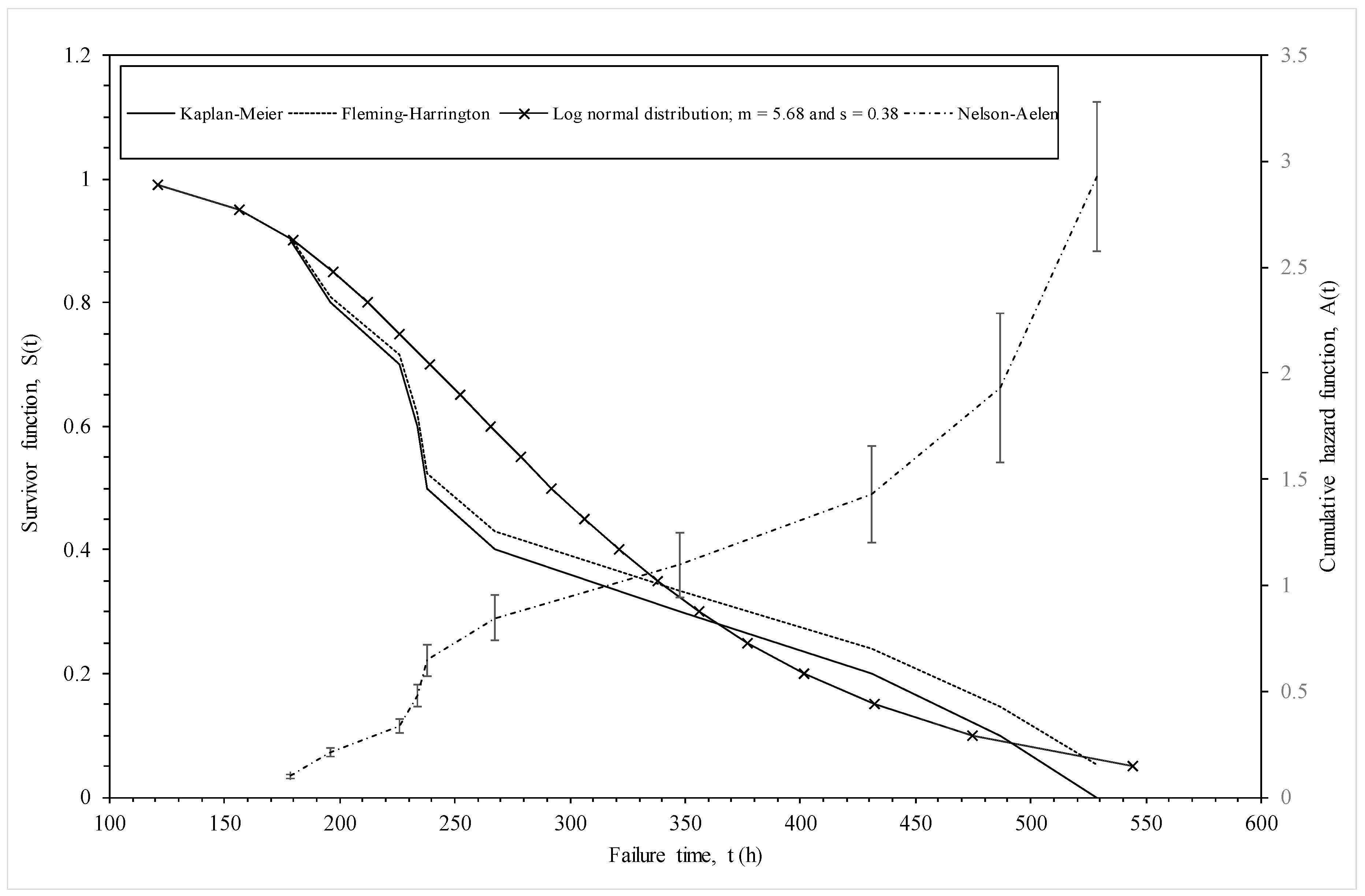

As an illustration, Figure 2 compares these non-parametric estimators of the survivor function for the 1Cr-1Mo-0.25V specimens in the NIMS dataset tested at 823 K and 294 MPa. At low times to failure the above two estimators provide very similar values for the survivor function, but they start to diverge at around 250 h, with the Fleming-Harrington estimator exceeding the Kaplan-Meier estimator. The Nelson-Aalen estimator of the cumulative hazard function is shown on the right hand side vertical axis. The error bars associated with the estimated cumulative hazard function, which are made equal to one standard deviation, are also shown. As can be seen, the standard error increases quite dramatically with the time to failure, making the estimates at high survival probabilities quite unreliable in a sample this small.

3.1.2. Parametric Estimation

Any distribution defined for t ∈ (0, ∞) can be used to specify parametric survivor and hazard rate functions. A good transformation for visualising many commonly used parametric distributions is the log transformation of failure time, Y = ln(T), with y ∈ (−∞, ∞). Then, a whole family of distributions for Y opens up by introducing location (via parameter μ) and scale (via parameter b) changes of the form

$$Y = \mu + bZ$$

where, like T and Y, Z is a random (but standardised) variable, z ∈ (−∞, ∞). To prevent the occurrence of a degenerate distribution for large values of k1 and/or k2, the following re-parameterisation is used

$$Y = \mu + \sigma W$$

where σ = b/δ and where W is therefore another standardised random variable defined as W = δZ.

By specifying a very general distribution for Z, it is possible to identify many of the familiar failure time distributions used in failure time analysis. Prentice [37] and Kalbfleisch and Prentice [38], for example, defined the probability density function for Z as

$$f(z) = \frac{\Gamma(k_1 + k_2)}{\Gamma(k_1)\,\Gamma(k_2)} \left(\frac{k_1}{k_2}\right)^{k_1} e^{k_1 z} \left(1 + \frac{k_1}{k_2} e^{z}\right)^{-(k_1 + k_2)}$$

where Z is said to be distributed as the logarithm of an F random variable with 2k1 and 2k2 degrees of freedom. T is described as following a four parameter generalised F distribution, T ~ GENF(μ, σ, k1, k2). Γ(k) is the gamma function at k. Appendix A to this paper also shows that the pdf of this generalised F distribution can be re-parameterised as a function of time

$$f(t) = \frac{\beta}{t}\,\frac{\Gamma(k_1 + k_2)}{\Gamma(k_1)\,\Gamma(k_2)} \left(\frac{k_1}{k_2}\right)^{k_1} (\lambda t)^{\beta k_1} \left[1 + \frac{k_1}{k_2} (\lambda t)^{\beta}\right]^{-(k_1 + k_2)}$$

where λ = exp(−μ) and β = 1/(δσ) = 1/b. Except under some restricted values for k1 and k2, there is no closed form expression for the survivor and hazard functions, but they are related to the incomplete beta function, and Appendix A shows how they can be computed using percentiles from the F distribution. Equation (9d) is however degenerate when k1 = k2 = ∞, and then a different specification of the pdf must be used (see Appendix A).
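As a hedged sketch, the density of the logarithm of an F variate with 2k1 and 2k2 degrees of freedom can be evaluated in plain Python as below. The formula is the standard log-F density obtained from the F density by a change of variable. The function names are hypothetical, and the scale is entered through b (with ln T = μ + bZ) rather than σ, so that no assumption about δ is needed.

```python
import math


def log_f_pdf(z, k1, k2):
    """Density of Z = ln(F), where F has an F distribution on (2*k1, 2*k2) d.o.f."""
    log_norm = math.lgamma(k1 + k2) - math.lgamma(k1) - math.lgamma(k2)
    ratio = k1 / k2
    log_density = (log_norm + k1 * math.log(ratio) + k1 * z
                   - (k1 + k2) * math.log1p(ratio * math.exp(z)))
    return math.exp(log_density)


def genf_pdf(t, mu, b, k1, k2):
    """Density of T when ln(T) = mu + b*Z, using dz/dt = 1/(b*t)."""
    z = (math.log(t) - mu) / b
    return log_f_pdf(z, k1, k2) / (b * t)
```

With k1 = k2 = 1, `log_f_pdf` reduces to the standard logistic density exp(z)/[1 + exp(z)]², which matches the log-logistic special case for T. Working on the log scale with `math.lgamma` avoids overflow in the gamma functions when k1 or k2 is large, although the limiting cases k1 = ∞ or k2 = ∞ still need their own expressions.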

Particular values for these parameters define important sub families within the GENF family and these sub families are summarised in Figure 3. It can be seen that some of these distributions are commonly used within engineering. When k2 = ∞, failure times have a Generalised Gamma distribution, T ~ GENG(μ, σ, k1). There are then three well known two parameter distributions within this Generalised Gamma family. T is gamma distributed, T ~ GAM(μ, σ, k1), when k2 = ∞ and σ = 1. T is log normally distributed, T ~ LOGNOR(μ, σ), when k2 = k1 = ∞; and T is Weibull distributed, T ~ WEIB(μ, σ), when k2 = ∞ and k1 = 1. In turn, the Weibull distribution collapses to the exponential distribution when k2 = ∞, k1 = 1 and σ = 1. The family, T ~ BURR(μ, σ, k1), is obtained when either k1 = 1 (Burr III) or k2 = 1 (Burr XII). Then when k2 = k1 = 1, T has a log-logistic distribution, T ~ LOGLOGIS(μ, σ), and when k2 = k1 = 1 = σ the log-logistic distribution collapses to the logistic distribution, T ~ LOGIS(μ). The form and characteristics of all these special cases are further described in Appendix A.

Evans [32] has shown how the parameters of these distributions can be estimated using maximum likelihood procedures. An alternative semi-parametric approach is to use the least-squares procedure in conjunction with a probability plot. The procedure here is to linearise a plot of t against S(t) by finding suitable transformations of S(t) and possibly t. A least squares best fit line to the data on such a plot then yields estimates of the parameters μ (given by the intercept of the best fit line) and σ (the slope of the best fit line). However, as seen in Figure 1, the non-parametric estimate of F(t) is a step function increasing by an amount 1/n at each recorded failure time. Plotting at the bottom (top) of the steps would lead to the best fit line being above (below) the plotted points and so lead to a bias in the resulting parameter estimates. A reasonable compromise plotting position is the mid-point of the jump

$$\hat{F}(t_i) = \frac{i - 0.5}{n}$$

where i indexes the ordered failure times (i = 1 for the smallest failure time, i = 2 for the next smallest, all the way up to n for the largest failure time), with t1 being the smallest failure time and tn the largest. From Appendix A to this paper, the log of the pth percentile for t is given by

$$\ln(t_p) = \mu + \sigma\, w_{k_1,k_2,p}$$

where wk1,k2,p is the pth quantile of the standardised variable W, obtained from the pth quantile of an F distribution with (2k1, 2k2) degrees of freedom. Percentiles of the F distribution are tabulated at the back of many well known engineering statistics text books (they can also be found in Excel using the FINV function). Using the non-parametric estimate in Equation (4a) for p in Equation (10b) allows values for wk1,k2,p to be computed. Thus, when wk1,k2,p is plotted against the ordered values of ln(t), ln(ti), the data points will scatter around a straight line provided the data have a generalised F distribution with the given values for k1 and k2.
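The plotting positions and standardised quantiles can be sketched for the two special cases that have simple closed forms. This is a minimal illustration using only the Python standard library. The function names are hypothetical, and the mid-point rule (i − 0.5)/n is assumed as the plotting position. For general k1 and k2 the quantile would instead come from F distribution percentiles, which are not available in the standard library.

```python
import math
from statistics import NormalDist


def midpoint_positions(n):
    """Mid-point plotting positions p_i = (i - 0.5) / n for i = 1..n."""
    return [(i - 0.5) / n for i in range(1, n + 1)]


def weibull_w(p):
    """Standardised quantile when k1 = 1 and k2 = infinity: ln(-ln(1 - p))."""
    return math.log(-math.log(1.0 - p))


def lognormal_w(p):
    """Standardised quantile when k1 = k2 = infinity: standard normal quantile."""
    return NormalDist().inv_cdf(p)


if __name__ == "__main__":
    for p in midpoint_positions(10):
        print(round(p, 2), round(weibull_w(p), 4), round(lognormal_w(p), 4))
```

Either column of quantiles can then be paired with the ordered values of ln(ti) to form the probability plot.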

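The generality of Equation (10b) is clearly seen by considering the special case of k2 = ∞ and k1 = 1, which is the Weibull distribution, whose survivor function is shown in Appendix A of this paper to be

$$S(t) = \exp\left[-(\lambda t)^{\beta}\right]$$

This can be linearised as

$$\ln(t) = \mu + \sigma \ln\{-\ln[S(t)]\}$$

with μ = −ln(λ) and σ = 1/β. Then, replacing S(t) with the non-parametric estimator Ŝ(ti) and t by its ordered value ti gives

$$\ln(t_i) = \mu + \sigma \ln\{-\ln[\hat{S}(t_i)]\}$$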

Equations (11c) and (10b) imply that wk1,k2,p collapses to ln{−ln[S(t)]} when k2 = ∞ and k1 = 1.
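A least squares fit on a Weibull probability plot can be sketched as follows. The function names are hypothetical, the mid-point plotting position is assumed for the survivor function estimate, and ln(ti) is placed on the vertical axis so that the intercept estimates μ and the slope estimates σ.

```python
import math


def least_squares(x, y):
    """Ordinary least squares for y = intercept + slope * x; also returns R^2."""
    n = len(x)
    x_bar, y_bar = sum(x) / n, sum(y) / n
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    syy = sum((yi - y_bar) ** 2 for yi in y)
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar
    r_squared = sxy ** 2 / (sxx * syy)
    return intercept, slope, r_squared


def weibull_plot_fit(failure_times):
    """Fit ln(t_i) = mu + sigma * ln{-ln[S_hat(t_i)]} by least squares."""
    ordered = sorted(failure_times)
    n = len(ordered)
    # Horizontal axis: transformed survivor estimate, S_hat(t_i) = 1 - (i - 0.5)/n
    x = [math.log(-math.log(1.0 - (i - 0.5) / n)) for i in range(1, n + 1)]
    # Vertical axis: ordered log failure times
    y = [math.log(t) for t in ordered]
    return least_squares(x, y)
```

Failure times that lie exactly on Weibull quantiles return the generating μ and σ with R² equal to one. Real data will scatter around the line, and R² then gives a rough measure of how well the Weibull assumption holds.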

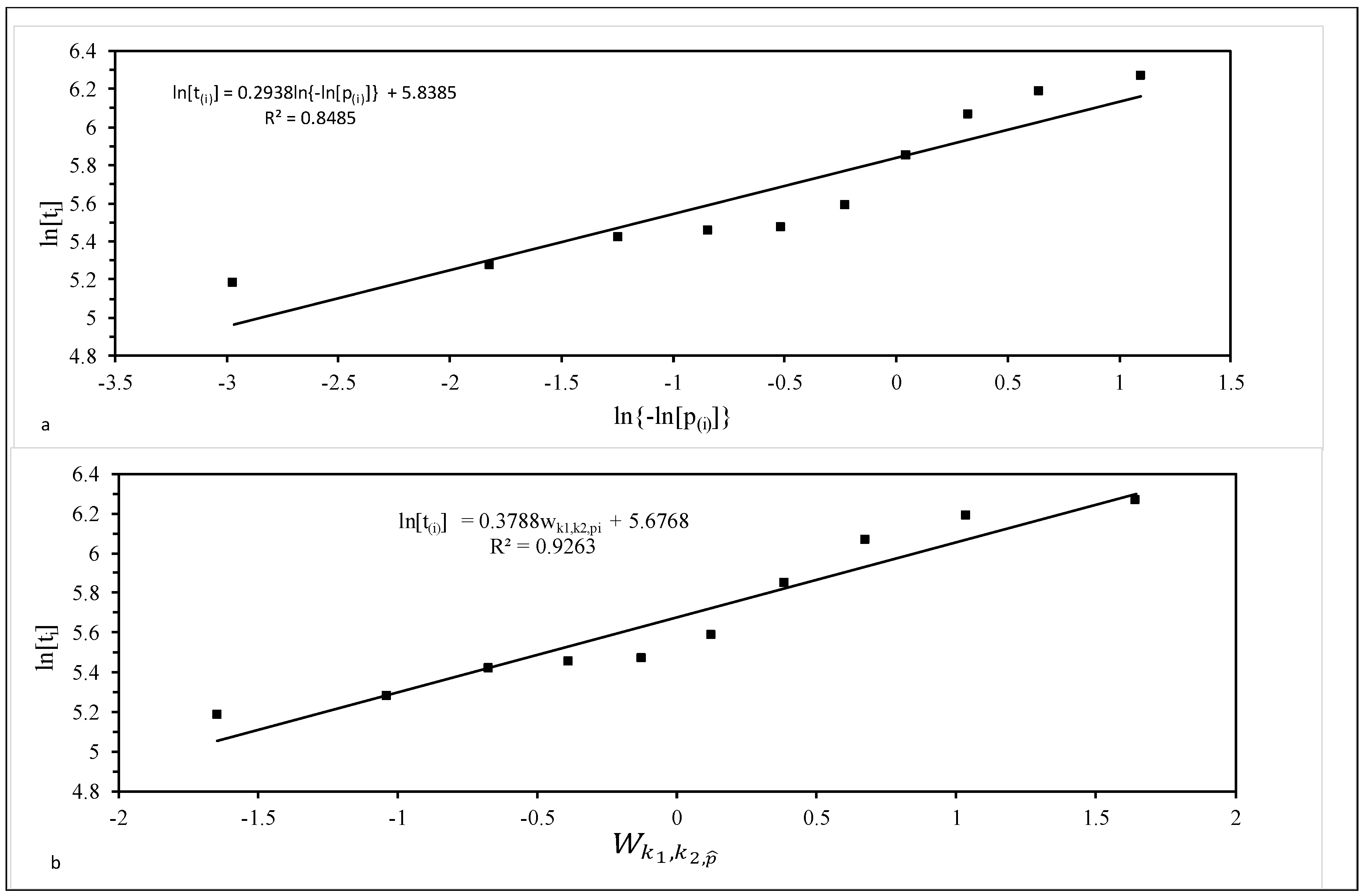

As an illustration, Figure 4a is a plot of ln(ti) against ln{−ln[Ŝ(ti)]} for the ten 1Cr-1Mo-0.25V specimens tested at 823 K and 294 MPa. The best fit line obtained using the least squares technique is also shown. The slope of this best fit line is σ = 0.2938, with intercept μ = 5.8385. This implies β = 1/σ = 3.4 and λ = exp(−5.8385) = 0.0029. However, with a coefficient of determination (R²) of just 85%, the Weibull distribution is unlikely to be the best description of this sample of failure times.
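The conversion from the fitted line to the Weibull parameters can be checked with a short calculation. The slope and intercept are the values reported above. The variable and function names are hypothetical, and hours are assumed as the unit of time.

```python
import math

sigma_hat = 0.2938  # slope of the best fit line, as reported in the text
mu_hat = 5.8385     # intercept of the best fit line, as reported in the text

beta_hat = 1.0 / sigma_hat      # Weibull shape parameter, beta = 1/sigma
lambda_hat = math.exp(-mu_hat)  # Weibull parameter, lambda = exp(-mu)


def weibull_survivor(t):
    """S(t) = exp[-(lambda * t)^beta] with the fitted parameters."""
    return math.exp(-((lambda_hat * t) ** beta_hat))


if __name__ == "__main__":
    print(round(beta_hat, 1), round(lambda_hat, 4))
```

At t = exp(μ) = 1/λ the fitted survivor function equals exp(−1), whatever the value of β.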

The R² value was then computed for each combination of p = 0 to 2 and q = 0 to 1 (both in increments of 0.1), where

As such, this range covered all the distributions shown in Figure 3. It was found that R² was maximised when p = q = 0, i.e., when k1 = k2 = ∞. It therefore appears that, within the generalised F distribution family, it is the log normal distribution that best describes the specimens tested at 823 K and 294 MPa. This is consistent with the findings by Evans [32].

Figure 4b plots ln(ti) against wk1,k2,p when k1 = k2 = ∞, so that the variable on the horizontal axis is essentially a standard normal variate. The R² value is 93% and so much higher than in the Weibull case. The slope of this best fit line is σ = 0.3788 and can be interpreted as an estimate of the standard deviation of the log times to failure. The intercept is μ = 5.6768 and can be interpreted as an estimate of the mean of the log times to failure at the stated test conditions. The survivor function associated with this log normal distribution (using these parameter estimates) is shown in Figure 2. It tends to lie above the non-parametric estimators at intermediate failure times, but below them at the higher failure times.
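The fitted log normal survivor function can be evaluated directly from these two estimates. The sketch below uses only the Python standard library. The function and constant names are hypothetical, and hours are assumed as the unit of time.

```python
import math
from statistics import NormalDist

MU_HAT = 5.6768     # estimated mean of the log failure times (from the text)
SIGMA_HAT = 0.3788  # estimated standard deviation of the log failure times


def lognormal_survivor(t, mu=MU_HAT, sigma=SIGMA_HAT):
    """S(t) = 1 - Phi((ln t - mu) / sigma) for log normally distributed T."""
    return 1.0 - NormalDist().cdf((math.log(t) - mu) / sigma)


if __name__ == "__main__":
    for t in (150.0, 250.0, 400.0, 600.0):
        print(t, round(lognormal_survivor(t), 3))
```

The median failure time under this fit is exp(μ), roughly 292 h, at which point the survivor function equals 0.5.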