This section presents the application of the QMMP+ to forecast the water demand of the Barcelona drinking water network and the obtained performance results.

Application and Study Case

The water demand from the Barcelona drinking water network is used as a case study in this paper. This network is managed by Aguas de Barcelona SA (AGBAR), which supplies drinking water to Barcelona and its metropolitan area. The main water sources are the rivers Ter and Llobregat.

Figure 5 shows the general topology of the network, which has 88 main water consumption sectors. Currently, there are four water treatment plants: the Abrera and Sant Joan Despí plants, which extract water from the Llobregat river; the Cardedeu plant, which extracts water from the Ter river; and the Besòs plant, which treats underground water from the Besòs river aquifer. There are also several underground sources (wells) that can provide water through pumping.

This network has 4645 km of pipes supplying water from the sources to 23 municipalities over an area of 424 km², satisfying the water demand of approximately 3 million people and providing a total flow of around 7 m³/s.

For the MPC control, a prediction horizon of 24 h is sufficient to operate with a good balance between accuracy and performance. The MPC operates hourly and is fed by a forecasting model with the current water demand and its estimate for the next 24 h. The QMMP+ approach is used to provide this 24-h-ahead water demand forecast.

To assess the performance of the proposed approach, hourly time series are generated from representative flowmeter measurements (out of a total of 88) of the Barcelona network for the year 2012. The selection criterion is to consider only complete time series with regular data and few outliers according to the modified Thomson Tau ($\tau$) method. The $\tau$ part of its name is given by the statistical expression $\tau = \frac{t\,(n-1)}{\sqrt{n}\,\sqrt{n-2+t^{2}}}$, where $t$ is the Student's value and $n$ the total number of elements.

The time series, associated with different urban area sectors, are identified by alphanumeric codes in the water demand database: p10007, p10015, p10017, p10026, p10095, p10109 and p10025. According to the Thomson Tau test at the chosen significance level, these sectors contain fewer than 70 outliers, with the exception of Sector p10025, which has an irregular data segment that produces more outliers. Briefly, the Thomson Tau test detects potential outliers using the Student's t-test, labeling a data point as an outlier when its distance from the mean is larger than about two standard deviations.
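The outlier screening described above can be sketched in Python as follows. This is a minimal illustration and not the authors' implementation: the function names, the default significance level of 0.05, and the use of the normal quantile as a large-sample stand-in for the Student's t critical value are all assumptions made here.

```python
import math
from statistics import NormalDist, mean, stdev


def thompson_tau(n, alpha=0.05):
    """Rejection threshold tau for a sample of size n.

    Assumption: the Student's t critical value is approximated by the
    normal quantile, which is reasonable only for large n (e.g., a year
    of hourly data).
    """
    t = NormalDist().inv_cdf(1 - alpha / 2)
    return t * (n - 1) / (math.sqrt(n) * math.sqrt(n - 2 + t * t))


def find_outliers(values, alpha=0.05):
    """Iteratively remove the most extreme point while it exceeds tau * s."""
    data = list(values)
    outliers = []
    while len(data) > 2:
        m, s = mean(data), stdev(data)
        if s == 0:
            break
        candidate = max(data, key=lambda x: abs(x - m))
        if abs(candidate - m) > thompson_tau(len(data), alpha) * s:
            outliers.append(candidate)
            data.remove(candidate)
        else:
            break
    return outliers
```

For large samples the threshold is close to 2, which matches the "two standard deviations" description of the test.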

We relabel the selected sensor sectors from 1 to 7, in the order listed, to simplify the legend in the tables of results. All the time series are normalized to the [0,1] interval. The forecast accuracy of the QMMP+ is measured and compared with well-known forecasting models. For ARIMA [26], the structures are estimated with R's autoarima function, the structure coefficients are optimized using MATLAB's estimate function (Matlab R2017a, MathWorks Inc., Natick, MA, USA), and the test is also implemented in MATLAB with the forecast function. The Double Seasonal Holt Winters (DSHW) model [25] is fitted with the dshw function available in the R forecast package. The RBF Neural Network [27] is implemented with the MATLAB Neural Network Toolbox, which is used to learn the neural network's weights. MATLAB is also used to implement k-means and the silhouette coefficient to identify the qualitative patterns.
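As an illustration of the normalization step, a minimal Python sketch is given below. The text only states that the series are normalized to the [0,1] interval, so the use of min-max scaling and the function name are assumptions.

```python
def normalize_unit_interval(series):
    """Min-max scale a sequence of numbers to the [0, 1] interval.

    Assumption: min-max scaling is used; a constant series maps to zeros.
    """
    lo, hi = min(series), max(series)
    if hi == lo:
        return [0.0 for _ in series]
    return [(x - lo) / (hi - lo) for x in series]
```

For example, `normalize_unit_interval([2.0, 4.0, 6.0])` returns `[0.0, 0.5, 1.0]`.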

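All methods are tuned and trained using a training set taken from the data. The remaining data is used as the validation set to measure the forecasting accuracy 24 steps (hours) ahead using the Mean Absolute Error (MAE), Root Mean Squared Error (RMSE) and Mean Absolute Percentage Error (MAPE), defined as

$$\mathrm{MAE} = \frac{1}{h}\sum_{i=1}^{h}\left|y_{n+i}-\hat{y}_{n+i}\right|,$$

$$\mathrm{RMSE} = \sqrt{\frac{1}{h}\sum_{i=1}^{h}\left(y_{n+i}-\hat{y}_{n+i}\right)^{2}},$$

$$\mathrm{MAPE} = \frac{100}{h}\sum_{i=1}^{h}\left|\frac{y_{n+i}-\hat{y}_{n+i}}{y_{n+i}}\right|,$$

where $n$ is the size of the training set, $h$ is the forecasting horizon, $\hat{y}_{n+i}$ is the forecast of the observation $y_{n+i}$, and $y_{n+1}$ is the first element of the validation set.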
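The three error measures can be sketched in Python as below. This is an illustrative sketch using the standard textbook definitions over one forecast window; the function and argument names are assumptions, and `actual` is assumed to contain no zeros when computing the percentage error.

```python
import math


def mae(actual, forecast):
    """Mean Absolute Error over one forecast window."""
    return sum(abs(a - f) for a, f in zip(actual, forecast)) / len(actual)


def rmse(actual, forecast):
    """Root Mean Squared Error over one forecast window."""
    return math.sqrt(
        sum((a - f) ** 2 for a, f in zip(actual, forecast)) / len(actual)
    )


def mape(actual, forecast):
    """Mean Absolute Percentage Error (in percent); actual values must be nonzero."""
    return 100.0 * sum(
        abs((a - f) / a) for a, f in zip(actual, forecast)
    ) / len(actual)
```

With `actual = [2.0, 4.0]` and `forecast = [1.0, 5.0]`, both the MAE and the RMSE equal 1.0 and the MAPE equals 37.5.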

We also report precision as the variance of all the independent forecasting residuals. These are stored in a vector $r$ whose size equals the number of individual forecasts (the number of forecasts multiplied by the horizon $h$). Each residual is defined as the difference between the real and forecasted values,

$$r_{j} = y_{t+i}-\hat{y}_{t+i},$$

where the index $j$ results from mapping the prediction time indexes at all the different times $t$ and horizon steps $i$ to positions of the vector $r$. Once we have $r$, the variance of the individual residuals is computed by

$$\sigma^{2} = E\left[\left(r-E[r]\right)^{2}\right],$$

where $E$ is the statistical expectation.
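A minimal Python sketch of this precision measure follows. The row-major mapping `j = t * h + i` (with zero-based `t` and `i`), the function names, and the use of the population variance as the estimate of the expectation are assumptions made for illustration, not details confirmed by the text.

```python
from statistics import pvariance


def residual_vector(actual_windows, forecast_windows):
    """Flatten per-forecast residuals into a single vector.

    Each window holds the h values of one forecast. The position
    j = t * h + i is an assumed mapping of forecast t and step i.
    """
    horizon = len(actual_windows[0])
    residuals = [0.0] * (len(actual_windows) * horizon)
    for t, (actual, forecast) in enumerate(zip(actual_windows, forecast_windows)):
        for i in range(horizon):
            j = t * horizon + i
            residuals[j] = actual[i] - forecast[i]
    return residuals


def residual_variance(residuals):
    """Variance of the residuals, E[(r - E[r])^2], as a population variance."""
    return pvariance(residuals)
```

For two forecasts of horizon 2 with real values `[[1.0, 2.0], [3.0, 4.0]]` and forecasts `[[1.0, 1.0], [3.0, 5.0]]`, the residual vector is `[0.0, 1.0, 0.0, -1.0]` and its variance is 0.5.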

The distribution patterns are clustered using k-means. Each distribution (or class) is represented by its normalized centroid. The number of classes is chosen by maximizing the silhouette coefficient. To achieve this, k-means is executed with different numbers of classes k, from 2 to 7. The silhouette coefficient for each time series is reported in Figure 6, which indicates the value of k that maximizes the separability of the qualitative patterns for the studied time series. The centroids obtained with k-means represent the average pattern of each demand pattern class and are used as the initial modes or prototypes.
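The selection of the number of classes can be sketched as follows. This is a self-contained illustration in plain Python, not the MATLAB code used in the study: the function names, the random initialization with a fixed seed, and the tie-breaking rule (keep the smallest k) are assumptions.

```python
import math
import random


def distance(a, b):
    """Euclidean distance between two equal-length vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def assign(points, centroids):
    """Label each point with the index of its nearest centroid."""
    return [min(range(len(centroids)), key=lambda c: distance(p, centroids[c]))
            for p in points]


def kmeans(points, k, iterations=100, seed=0):
    """Plain Lloyd's k-means; initial centroids are k randomly sampled points."""
    centroids = [list(p) for p in random.Random(seed).sample(points, k)]
    labels = assign(points, centroids)
    for _ in range(iterations):
        for c in range(k):
            members = [p for p, l in zip(points, labels) if l == c]
            if members:  # keep the previous centroid if a cluster empties
                centroids[c] = [sum(col) / len(members) for col in zip(*members)]
        new_labels = assign(points, centroids)
        if new_labels == labels:
            break
        labels = new_labels
    return labels, centroids


def silhouette(points, labels):
    """Mean silhouette coefficient; singleton clusters score 0."""
    clusters = {}
    for p, l in zip(points, labels):
        clusters.setdefault(l, []).append(p)
    if len(clusters) < 2:
        return -1.0
    scores = []
    for p, l in zip(points, labels):
        own = clusters[l]
        if len(own) == 1:
            scores.append(0.0)
            continue
        a = sum(distance(p, q) for q in own) / (len(own) - 1)
        b = min(sum(distance(p, q) for q in other) / len(other)
                for m, other in clusters.items() if m != l)
        scores.append(0.0 if max(a, b) == 0 else (b - a) / max(a, b))
    return sum(scores) / len(scores)


def choose_k(points, candidates=range(2, 8)):
    """Return the k (and its score) with the highest mean silhouette."""
    best_k, best_score = None, -math.inf
    for k in candidates:
        labels, _ = kmeans(points, k)
        score = silhouette(points, labels)
        if score > best_score:  # strict: ties keep the smaller k
            best_k, best_score = k, score
    return best_k, best_score
```

In practice each point would be one normalized daily demand profile (24 values), and the centroids returned for the selected k would serve as the initial prototypes.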

The training set is used to learn the NNME parameters, and the validation set measures its performance for different values of its tuning parameters, including m. We optimize Equation (13) over the tested values of these parameters. For the MA adaptive pattern, we test a set of lag values.

Table 1 presents the SARIMA structures and the specific polynomial lags associated with each component of the model. Each model passed the Ljung–Box test once it was optimized.

Table 1 also reports the best

m and

for NNME, and the best

lag for MA for each time series. The initial distribution of consumption patterns are presented in

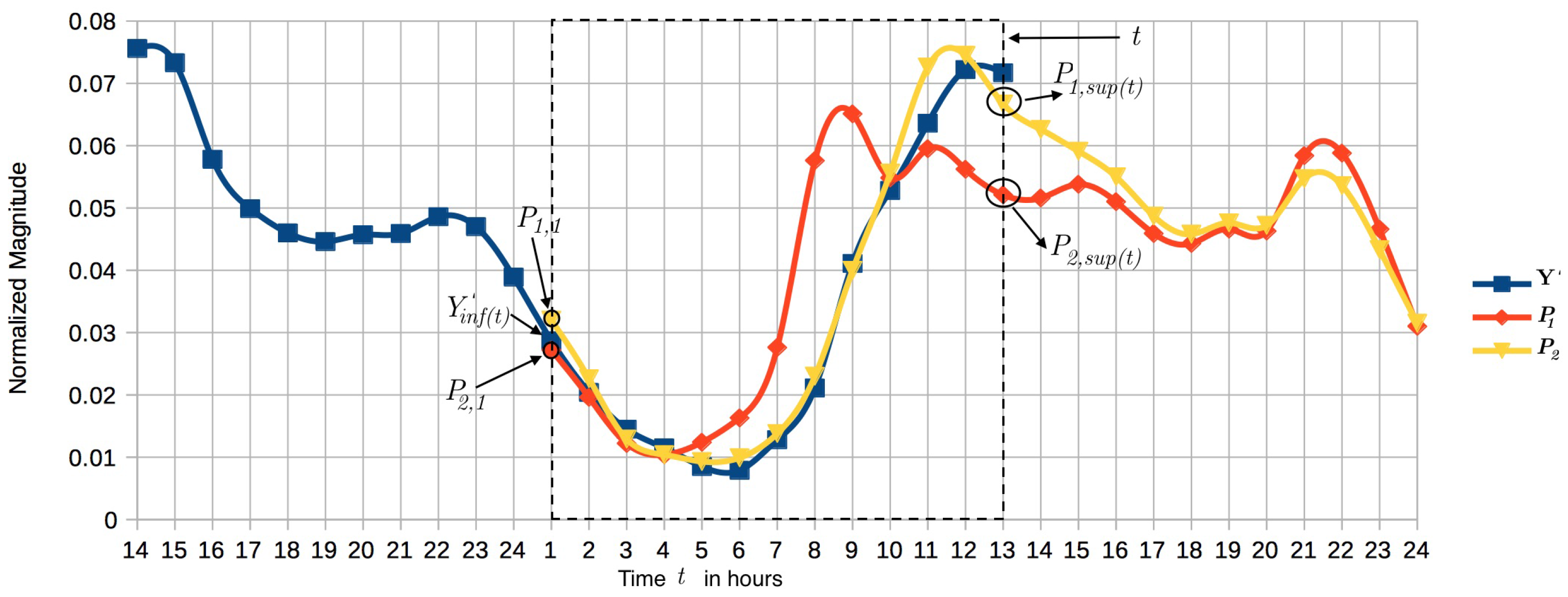

Figure 7 and

Figure 8 , where the blue line with squares represents the holiday pattern, and the orange line with rhombi the weekday pattern.

For the calendar model, we classify the patterns into two classes (holidays and weekdays) associated with the 2012 Catalan activity calendar [30], in order to perform the mode prediction.
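The calendar-based mode assignment can be illustrated with the short Python sketch below. The holiday set is a hypothetical, incomplete example rather than the calendar in [30], and treating weekends as part of the holiday class is an assumption made here.

```python
from datetime import date

# Hypothetical, incomplete holiday list used only for illustration.
HOLIDAYS_2012 = {date(2012, 1, 1), date(2012, 9, 11), date(2012, 12, 25)}


def calendar_mode(day, holidays=HOLIDAYS_2012):
    """Return the qualitative mode ("holiday" or "weekday") for a given date.

    Assumption: Saturdays and Sundays belong to the holiday class.
    """
    if day in holidays or day.weekday() >= 5:
        return "holiday"
    return "weekday"
```

The predicted mode for the next day then selects which class prototype is used to shape the 24-h forecast.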

The QMMP+ model is compared against DSHW, the Radial Basis Function Neural Network (RBF-ANN), ARIMA and the decomposition-based approaches Calendar and NNME, where NNME is the implementation of the QMMP introduced in [6]. The DSHW model has only two manually adjusted parameters, which indicate the seasonal periods. Since we manage hourly data, they are set to 24 and 168 for the daily and weekly periods, respectively. We present the performance obtained using the implementation in R.

In the case of the RBF-ANN, the structure is implemented using 92 Gaussian neurons in the hidden layer, 24 inputs and 24 outputs, producing a prediction 24 steps ahead at each time step. We also include a Naïve prediction model as a reference, which uses the last 24 observations as the forecast for the next 24 steps. This model is described by

$$\hat{y}_{t+i} = y_{t+i-24}, \quad i = 1,\dots,24.$$
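A minimal Python sketch of the Naïve reference model is shown below; the function name and the error handling are assumptions made for illustration.

```python
def naive_forecast(history, horizon=24):
    """Repeat the last `horizon` observations as the next `horizon` forecasts."""
    if len(history) < horizon:
        raise ValueError("history must contain at least `horizon` observations")
    return list(history[-horizon:])
```

With hourly data and a horizon of 24, this amounts to forecasting each hour of the next day with the value observed at the same hour of the previous day.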

Table 2, Table 3 and Table 4 report the accuracy in terms of MAE, RMSE and MAPE of the proposed forecasting model QMMP+ compared with the Calendar (Cal), NNME, Naïve, ARIMA, RBF-ANN (ANN) and DSHW.

Table 5 reports the prediction uncertainty for each water distribution sector, and, at the bottom of the table, the mean of the variances produced with each model.

Regarding the accuracy results, we observe that the decomposition-based approaches (QMMP+, Calendar and NNME) perform better on average than RBF-ANN, ARIMA and DSHW in terms of MAE, RMSE and MAPE for all the water demand time series.

In particular, ARIMA presents the least accurate predictions for all the time series, with errors even above those of the Naïve model. DSHW shows better results than ARIMA and Naïve, and ANN presents the best prediction accuracy among these approaches.

Regarding the accuracy in terms of mean errors of the decomposition-based approaches (i.e., Calendar and NNME), we note two facts. On the one hand, Calendar is generally more accurate than NNME, but it requires a priori information, since it assumes that the qualitative modes are defined by an activity calendar. On the other hand, NNME is less accurate than Calendar but is able to produce good qualitative mode predictions without any such assumption. This is useful when the calendar does not explain the sequence of modes, as in the case of time series 7, where Calendar is not better than NNME. Therefore, these characteristics are complementary and, once combined (as QMMP+ does), both contribute to more accurate forecasts.

Regarding the mean of the individual variances in Table 5, QMMP+, Calendar and NNME are also more precise on average than Naïve, ANN, ARIMA and DSHW. ARIMA again presents the worst precision, and only for time series 7 are NNME and ANN better than QMMP+.

In summary, we conclude that our approach, QMMP+, outperforms the other forecasting models, which shows the effectiveness of probabilistically choosing the best qualitative model throughout the experiments.