1. Introduction

The prediction of energy use and indoor environmental quality, such as temperature and relative humidity in buildings, is important for achieving energy conservation and is also employed in heating, ventilating, and air-conditioning (HVAC) applications [1].

The energy consumption and indoor air temperature of buildings can be estimated using one of two types of model: (1) physical or white-box models; and (2) empirical or black-box models. Both types use weather parameters and actuators’ manipulated variables as inputs, with the predicted energy or temperature as their output [2,3]. In physical (white-box) modelling, the heating and cooling demand is estimated by means of an energy simulation program, which can calculate the system capacity and zone temperature. Accurate models of this type require knowledge of a building’s physical properties [4]. Physical models can be used to provide forecasts of indoor climate variables before an actual building is constructed. Black-box modeling, by contrast, is a data mining technique used to extract information from measured data. Black-box models, such as neural networks, need experimental data, which can be obtained only after the actual building is constructed and measurements are made available. Combined models that use energy simulation and genetic algorithms can also be utilized to select optimal design parameters and conserve energy [5].

Several articles have been published on the incorporation of black-box artificial neural networks to predict room temperature and relative humidity. This data can then be used to design and operate HVAC control systems [6,7,8]. Mustafaraj et al. used autoregressive linear and nonlinear neural network models to predict the room temperature and relative humidity of an open office. External and internal climate data from over three months were used to validate the models, and results showed that both models provided reasonable predictions; however, the nonlinear neural network model was superior in predicting the two variables. These predictions can be used in control strategies to save energy where air-conditioning systems operate [4]. Indoor temperature data, predicted using neural networks by the application of the Levenberg-Marquardt algorithm, was used for the control of an air-conditioned system [2]. Additionally, Kim et al. were able to make quite accurate predictions for each zone's temperature using accumulated building operational data [9]. Zhao et al. reviewed many of the techniques used to predict building energy consumption. Of the many techniques used, the ones relevant to this study are: the engineering method, which uses computer simulations; neural networks, which use artificial intelligence concepts; Support Vector Machine (SVM), which produces relatively accurate results despite small quantities of training data; and grey models, which are used when there is incomplete or uncertain data [1].

Neto et al. compared building energy consumption predicted by an artificial neural network (ANN) with that predicted by a physical model in a simulation program. Both approaches were found suitable for energy consumption prediction, with error ranges of 10% for the ANN and 13% for the simulation [10]. Kreider et al. concluded that, using a neural network, operation data alone can estimate electrical demand for assessing HVAC systems [11]. Miller et al. used ANNs to predict the optimum start time for a heating plant during a setback and compared the results of ANN prediction with the conventional recursive least-squares method [12,13]. Nakahar used three different load prediction models (Kalman filter, group method of data handling (GMDH), and neural network) to estimate load for the following day and hours, to install optimal thermal storage [14]. Using general regression neural networks, Ben-Nakhi successfully predicted the time of the end of a thermostat setback to restore the designated temperature inside a building, in time for the start of business hours [6]. According to Yang et al., when the ANN prediction model is used to research optimal start and stop operation methods for cooling systems, energy consumption can be successfully reduced by 3% and 18%, respectively. Based on past building operational data, learning concepts have been introduced with optimal start and stop points discovered through analysis [15]. Nabil developed self-tuning HVAC component models based on ANNs and validated them against data collected from an existing HVAC system. Errors of these models are within 2–8% in terms of the coefficient of variation (CV). It has been shown that the optimization process can provide total energy savings of 11% [16]. Kuisak et al. developed a data-driven approach for a daily steam load model. They used a neural network and researched 10 different data-mining algorithms to develop a predictive model [17]. Predictive neural network models have also been developed for a chiller and ice thermal storage tank of a central plant HVAC system [18]. The machine learning algorithm of an ANN is often adapted to complicated and nonlinear systems such as HVAC systems [19]. The popularity of ANNs for various applications related to online control of HVAC systems and energy management in buildings has been increasing in the past few years [2].

The purpose of the present study was to develop a model to predict supply air temperature (SAT) of an air-handling unit (AHU) by applying AHU-historical data to a neural network, one of the black box approaches.

This study details the process of evaluating various methods for improving prediction performance. The resulting model is an adaptive model that uses recent hourly operational data. When the SAT of an AHU can be estimated, the amount of energy to be removed from or supplied to a building can be calculated accurately. In addition, this model can be used to calculate the heating and cooling coil load. Results of this study can be applied to control strategies and operational management of AHUs in order to reduce energy consumption.

2. Development of Models

2.1. Neural Network

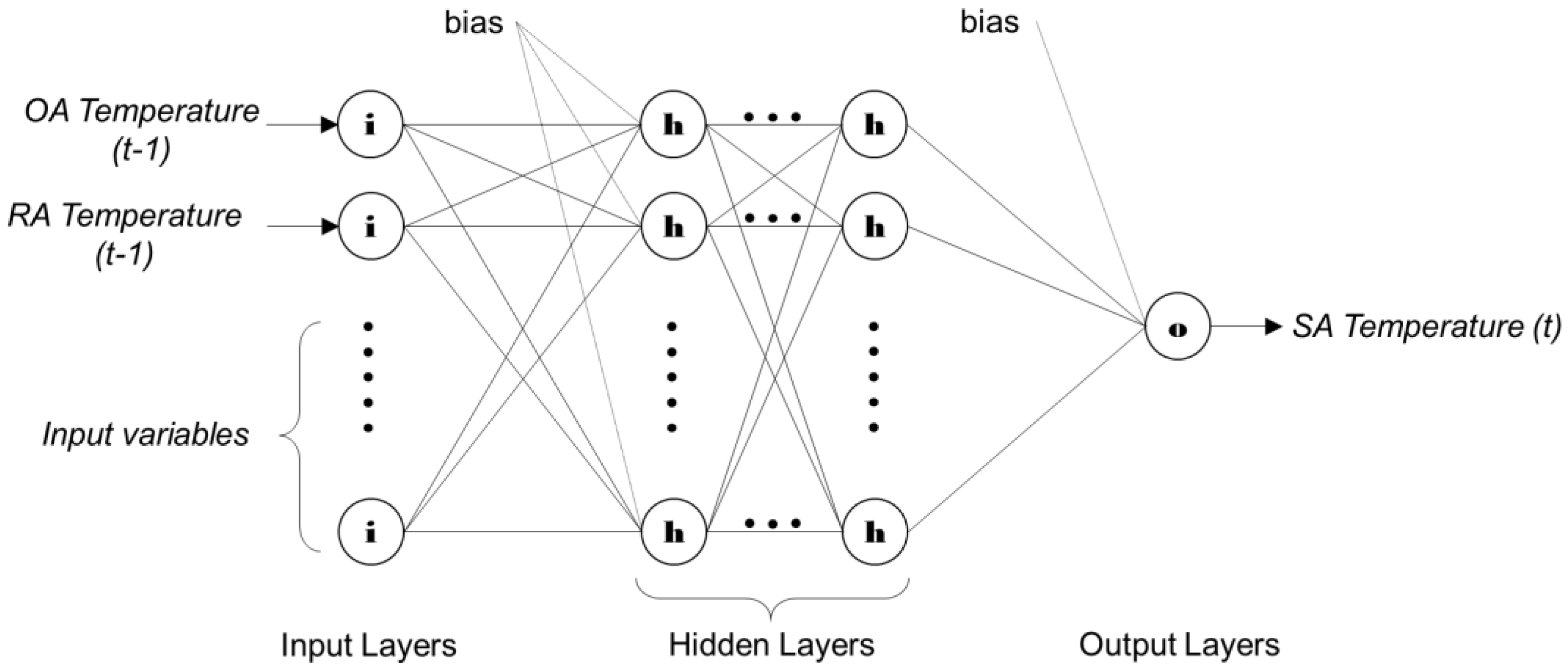

The present study used a typical neural network as shown in Figure 1. Since an ANN has the ability to learn and analyze mapping relations, including nonlinear ones, its application to resolve various difficult problems has been increasing rapidly [14].

There are three layers of neurons: an input, a hidden, and an output layer. In Figure 1, neurons are placed in multiple layers. The first layer (input layer) receives inputs from the outside. The third layer (output layer) supplies the result assessed by the network and organizes the responses obtained [10]. One or more layers, called hidden layers, are positioned between the first layer and the third layer. An ANN consists of interconnected neurons, and each neuron or node is an independent computational unit. The ANN produces its output by passing the inputs through the neurons’ network functions, and it matches the produced output value to the target value by modifying the weights of the interconnections. The current study used resilient backpropagation with weight backtracking for supervised learning in feedforward ANNs. Training a neural network is a process of setting the most suitable weights on the input of each unit. Backpropagation is the most used method for calculating the error gradient for a feedforward network [20]. Connection weights and bias values are initially selected as random numbers and are fixed as a result of the training process.
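The update rule behind resilient backpropagation with weight backtracking (often written Rprop+) can be illustrated on a toy problem. The following is a minimal Python sketch, not the study's implementation (the study used R). The toy objective, the function names, and the hyperparameter values (increase factor 1.2, decrease factor 0.5, initial step 0.1) are assumptions chosen for illustration. Each weight keeps its own step size, which grows while the gradient keeps its sign and shrinks when the sign flips, in which case the previous step is undone.

```python
def sign(x):
    """Return -1, 0, or 1 according to the sign of x."""
    return (x > 0) - (x < 0)


def rprop_plus(grad_fn, w, n_iter=200, eta_plus=1.2, eta_minus=0.5,
               delta0=0.1, delta_min=1e-6, delta_max=50.0):
    """Minimize a function with Rprop+ using only the sign of its gradient."""
    w = list(w)
    delta = [delta0] * len(w)     # per-weight step sizes
    prev_grad = [0.0] * len(w)    # gradient seen in the previous iteration
    prev_step = [0.0] * len(w)    # last weight change, kept for backtracking
    for _ in range(n_iter):
        grad = grad_fn(w)
        for i in range(len(w)):
            product = prev_grad[i] * grad[i]
            if product > 0:
                # Same sign as before: accelerate by enlarging the step size.
                delta[i] = min(delta[i] * eta_plus, delta_max)
                prev_step[i] = -sign(grad[i]) * delta[i]
                w[i] += prev_step[i]
                prev_grad[i] = grad[i]
            elif product < 0:
                # Sign flipped: a minimum was overshot. Shrink the step size,
                # undo the previous step (weight backtracking), and zero the
                # stored gradient so the next iteration takes a plain step.
                delta[i] = max(delta[i] * eta_minus, delta_min)
                w[i] -= prev_step[i]
                prev_grad[i] = 0.0
            else:
                # First iteration or just after a backtrack: plain step.
                prev_step[i] = -sign(grad[i]) * delta[i]
                w[i] += prev_step[i]
                prev_grad[i] = grad[i]
    return w


def toy_gradient(w):
    """Gradient of the toy objective (w0 - 3)^2 + 10 * (w1 + 1)^2."""
    return [2.0 * (w[0] - 3.0), 20.0 * (w[1] + 1.0)]


w_opt = rprop_plus(toy_gradient, [0.0, 0.0])
print(w_opt)  # close to the minimizer [3, -1]
```

In a feedforward ANN the same rule would be applied to every connection weight and bias, with the gradient supplied by backpropagation rather than by a closed-form expression.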

2.2. Data

The case study is a fifteen-story hospital with six basement floors. The building is about 8600 m² and approximately 80 m high.

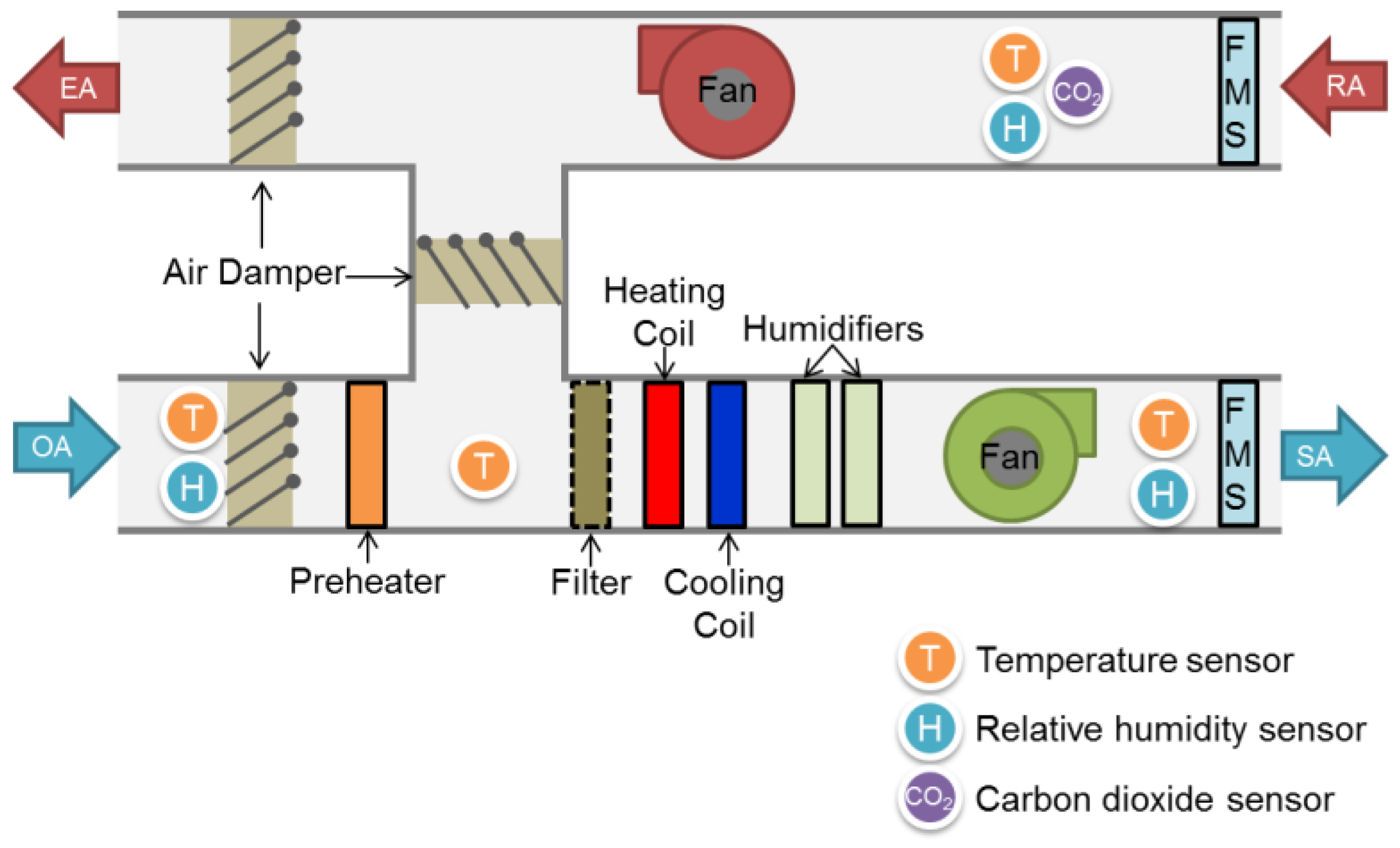

Figure 2 shows the typical organization of AHUs in patient rooms. An AHU consists of a chilled water cooling coil, steam heating coil, two fans, filters, dampers, and sensors. Hospital AHUs require more components than AHUs serving other types of buildings [21].

Table 1 shows the specification of the sensors for temperature and relative humidity. By using sensors mounted onto the AHU and ducts, the variables listed below were monitored (as shown in Figure 3) and the data was recorded at an interval specified by the user.

The variables that were monitored include:

Temperature (indoor air, outdoor air, supply air, return air, mixed air)

Relative humidity (indoor air, outdoor air, supply air, return air)

Air flow rate (supply air, return air)

Pressure difference

Coil valve opening ratio (heating, cooling)

Indoor carbon dioxide

The data collection period was one year (from December 2015 to November 2016), with a measurement interval of an hour. In order to develop a suitable predictive model, it is important to select appropriate input data. A variety of input variables such as outdoor air temperature (OAT), mixed air temperature (MAT), return air temperature (RAT), and others which were monitored in the AHU, were used to develop a predictive model for SAT output. The data set was normalized by scaling data between 0 and 1.
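The scaling to the range 0 to 1 mentioned above is commonly done with min-max normalization. The following is a minimal Python sketch under that assumption; the source does not state the exact formula, and the function name and the sample temperatures are hypothetical.

```python
def min_max_scale(values):
    """Scale a list of numbers linearly into the range [0, 1]."""
    lo, hi = min(values), max(values)
    if hi == lo:
        # A constant series has no spread; map it to 0.0 by convention.
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


# Hypothetical hourly supply air temperatures in degrees Celsius.
sat = [18.0, 19.5, 21.0, 24.0, 22.5]
scaled = min_max_scale(sat)
print(scaled)
```

Each monitored variable would be scaled separately, so that temperatures, humidities, and flow rates with very different magnitudes contribute on a comparable scale.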

Input variables, output variables, statistical indices, and data sets used in the existing literature were scrutinized and are summarized in Table 2. As shown in Table 2, data collected from measurements or from simulation programs were used to develop the predictive model. Mostly, input variables were outdoor air, supply air, indoor temperature, and humidity, all of which are measurable. Using this data, energy demand, room temperature, and relative humidity can be derived as outputs. This current study examined various methods to generate proper input variables.

As displayed in Table 2, statistical indicators were used to compare predicted values and actual values. The following statistical indicators were used: CV (coefficient of variation), mean square error (MSE), mean absolute error (MAE), root mean square error (RMSE), and coefficient of determination (r²). The present study used MSE, RMSE, and CVRMSE in order to assess and verify the results of the predictive model.

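MSE is the mean of the squared differences between the predicted and actual values and serves as a measure of fit for the predictions. The neural network algorithm seeks to minimize this MSE [6]. It follows Equation (1):

$$\mathrm{MSE} = \frac{1}{n}\sum_{i=1}^{n}\left(\hat{y}_i - y_i\right)^2 \qquad (1)$$

where $\hat{y}_i$ is the predicted value, $y_i$ is the actual value, and $n$ is the number of data points.

The prediction accuracy was also measured by the root mean square error (RMSE). RMSE is a frequently used measure of the differences between a model’s predicted values and the actual values. It follows Equation (2):

$$\mathrm{RMSE} = \sqrt{\frac{1}{n}\sum_{i=1}^{n}\left(\hat{y}_i - y_i\right)^2} \qquad (2)$$

The CV (coefficient of variation) of the RMSE (CVRMSE) is a non-dimensional measure calculated by dividing the RMSE by the mean value of the actual temperature, $\mathrm{CVRMSE} = (\mathrm{RMSE}/\bar{y}) \times 100\%$, where $\bar{y}$ is the mean of the actual values. Using CVRMSE makes it easier to determine the error range. The closer its value gets to 0%, the higher the accuracy.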
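The three indicators can be computed directly from their definitions. The following is a minimal Python sketch for illustration only (the study used R); the function names and the sample values are hypothetical.

```python
import math


def mse(predicted, actual):
    """Mean of the squared differences between predicted and actual values."""
    n = len(actual)
    return sum((p - a) ** 2 for p, a in zip(predicted, actual)) / n


def rmse(predicted, actual):
    """Square root of the MSE, in the same unit as the data."""
    return math.sqrt(mse(predicted, actual))


def cvrmse(predicted, actual):
    """RMSE divided by the mean of the actual values, in percent."""
    mean_actual = sum(actual) / len(actual)
    return 100.0 * rmse(predicted, actual) / mean_actual


# Hypothetical actual and predicted supply air temperatures (degrees Celsius).
actual = [20.0, 22.0, 24.0, 26.0]
predicted = [21.0, 21.0, 25.0, 25.0]

print(mse(predicted, actual), rmse(predicted, actual), cvrmse(predicted, actual))
```

Because CVRMSE is normalized by the mean of the measurements, it allows errors to be compared across data sets with different temperature levels, which MSE and RMSE alone do not.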

The R program was used to predict the SAT of AHUs in this study. R is a statistical tool with a built-in programming language, and it can be used for a wide range of statistical analyses [22,23,24]. R offers many linear and nonlinear statistical models, classical statistical tests, time series analysis, classification, clustering, and graphical techniques, and it is highly extensible. In addition, R is both a language and a development environment for statistics and visualization. It also provides statistical techniques, modeling, new data mining approaches, simulations, and numerical analysis methods [25].

2.3. Initial Model

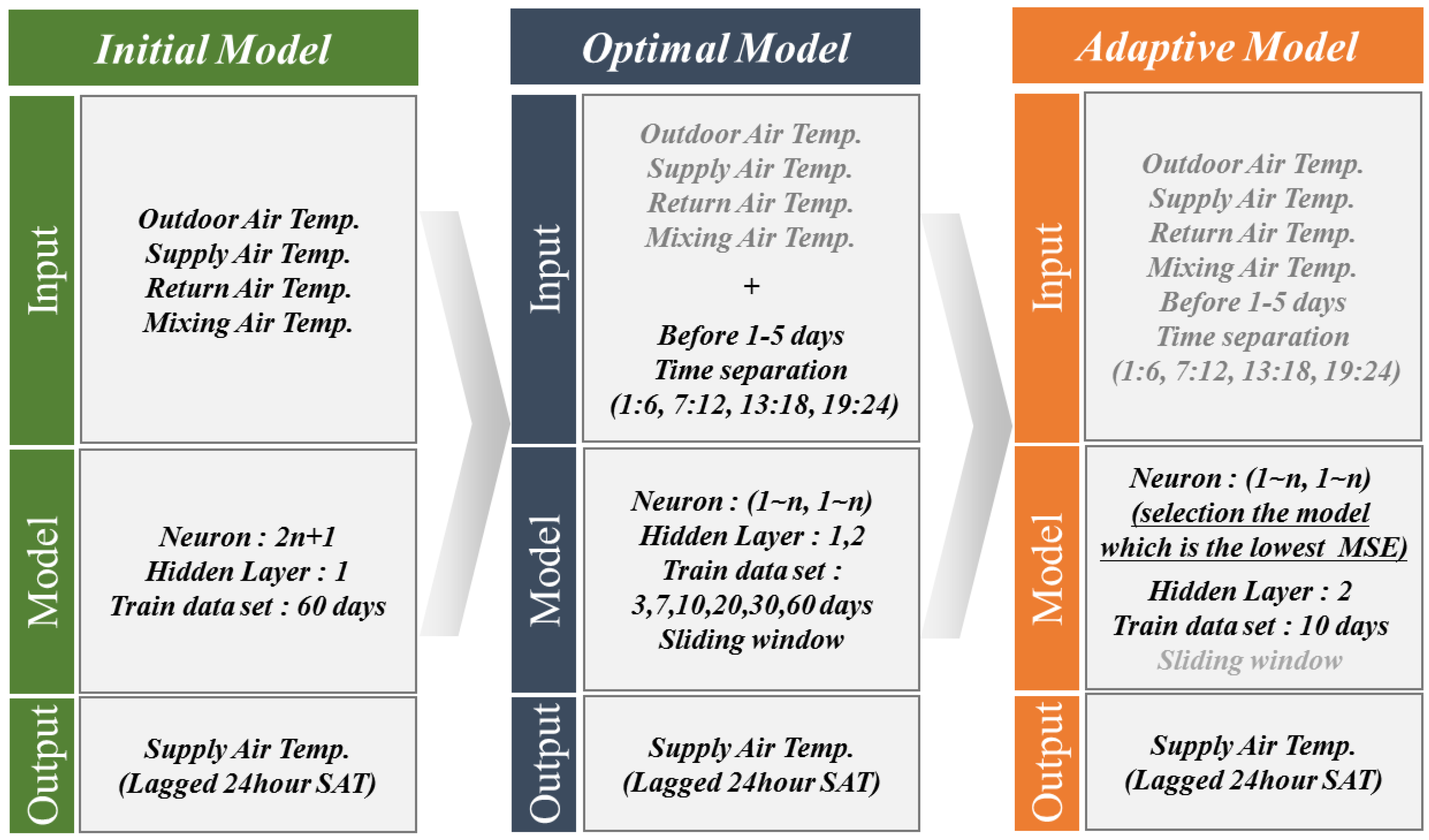

The process of finding an optimal predictive model is shown in Figure 4. The figure on the left shows the initial model. To predict the SAT of the AHU, operation data such as OAT, SAT, RAT, and MAT were selected as input variables for the initial model.

Data from the monitored AHU systems were collected only up to the present time. Predicting the SAT for the next 24 h would require input variables for the next 24 h, but no such future input data exist. Therefore, the SAT time-lagged by 24 h (lag 24 SAT) was used as the output in order to estimate the SAT for the next 24 h, and the network was trained to estimate it. The lag 24 SAT data were taken from the existing SAT data; that is, each lag 24 SAT value is an existing SAT measurement offset by 24 h.

The predicted results should be SAT(t + 1), SAT(t + 2), …, SAT(t + 24). However, at the present time (t), the known data are the input variables at (t), (t − 1), (t − 2), …, (t − n). By adding lag 24 SAT as the output variable for 24 h, it is possible to predict SAT. In other words, the input variables are: OAT (t = training period), SAT (t = training period), RAT (t = training period), and MAT (t = training period). All input values up to the present time (t) exist except for the lag 24 SAT for the recent 24 h. This makes the prediction possible. The current study used time-lagged output variables to develop a step-ahead prediction model [4,11,22].
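One way to read the lag 24 SAT construction is that each row of inputs at hour t is paired with the SAT measured 24 h later, which leaves the most recent 24 rows without a target; those rows are the ones used for prediction. The following is a minimal Python sketch under that reading. It is an interpretation, not the authors' code, and the function name and the toy data are hypothetical.

```python
def make_step_ahead_pairs(inputs, sat, horizon=24):
    """Pair the inputs at hour t with the SAT at hour t + horizon.

    inputs: list of input rows, one per hour (for example [OAT, SAT, RAT, MAT]).
    sat: list of hourly SAT values of the same length.
    Returns (train_x, train_y, predict_x), where predict_x holds the most
    recent `horizon` rows, whose targets lie in the future.
    """
    n = len(sat)
    if len(inputs) != n:
        raise ValueError("inputs and sat must have the same length")
    if n <= horizon:
        raise ValueError("need more samples than the prediction horizon")
    train_x = inputs[: n - horizon]   # hours whose 24-h-ahead SAT is known
    train_y = sat[horizon:]           # SAT shifted by the horizon (lag 24 SAT)
    predict_x = inputs[n - horizon:]  # recent 24 h, targets not yet measured
    return train_x, train_y, predict_x


# Hypothetical 48 hours of data; each row is [OAT, SAT, RAT, MAT].
hours = 48
sat = [15.0 + 0.1 * t for t in range(hours)]
inputs = [[5.0 + 0.2 * t, sat[t], 22.0, 18.0] for t in range(hours)]

train_x, train_y, predict_x = make_step_ahead_pairs(inputs, sat)
print(len(train_x), len(train_y), len(predict_x))
```

With this layout, feeding `predict_x` to the trained network yields estimates for SAT(t + 1) through SAT(t + 24) using only data that exist at the present time.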

The initial model consists of one hidden layer with nine neurons, where the number of neurons was set to 2n + 1 (n = the number of input variables); with the four input variables, this gives 2 × 4 + 1 = 9. The number of neurons was inferred from a survey of the existing literature [26,27,28]. To validate the initial model, data from December 2015 to January 2016 was used as training data.

2.4. Optimal Model

The model in the middle of Figure 4 is the optimal model. The diagram explains the ANN architecture. Different configurations of the input variables, number of hidden layers, number of neurons, and training periods were tested in order to derive the optimal model.

Since the initial model’s results were not perfect, additional input variables were considered. In order to take SAT changes occurring in previous days into consideration, five more input variables were added: the previous SAT values from day 1 to day 5. Moreover, four additional input variables (dawn, morning, afternoon, and evening), which divide the 24 h of a day, were included. These variables were adopted from time series analysis methods. Together with the four initial variables, this gives 13 input variables. The prediction performance was then analyzed with these input variables added.
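The 13 input variables can be assembled per hour as a single row. The following is a minimal Python sketch with two loudly labeled assumptions: the previous-day SAT variables are read as the SAT at the same hour one to five days earlier, and the four time-of-day variables are read as indicator flags over equal 6-h blocks. The source states neither detail, and all function names and data are hypothetical.

```python
def part_of_day(hour):
    """Indicator flags [dawn, morning, afternoon, evening] for an hour 0-23.

    Assumption: four equal 6-h blocks (0-5, 6-11, 12-17, 18-23).
    """
    if not 0 <= hour <= 23:
        raise ValueError("hour must be between 0 and 23")
    flags = [0, 0, 0, 0]
    flags[hour // 6] = 1
    return flags


def previous_day_sat(sat, t, days=5):
    """SAT at the same hour 1..days days before index t of an hourly series.

    Assumption: this is what the 'previous SAT from day 1 to day 5' refers to.
    """
    if t - 24 * days < 0:
        raise ValueError("not enough history for the requested lags")
    return [sat[t - 24 * d] for d in range(1, days + 1)]


def build_input_row(oat, sat, rat, mat, t, hour_of_day):
    """Concatenate 4 measured, 5 previous-day, and 4 time-of-day variables."""
    measured = [oat[t], sat[t], rat[t], mat[t]]
    return measured + previous_day_sat(sat, t) + part_of_day(hour_of_day)


# Hypothetical six days of hourly data starting at midnight.
n = 144
sat = [float(i) for i in range(n)]
oat = [5.0] * n
rat = [22.0] * n
mat = [18.0] * n

t = 130
row = build_input_row(oat, sat, rat, mat, t, hour_of_day=t % 24)
print(len(row), row)
```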

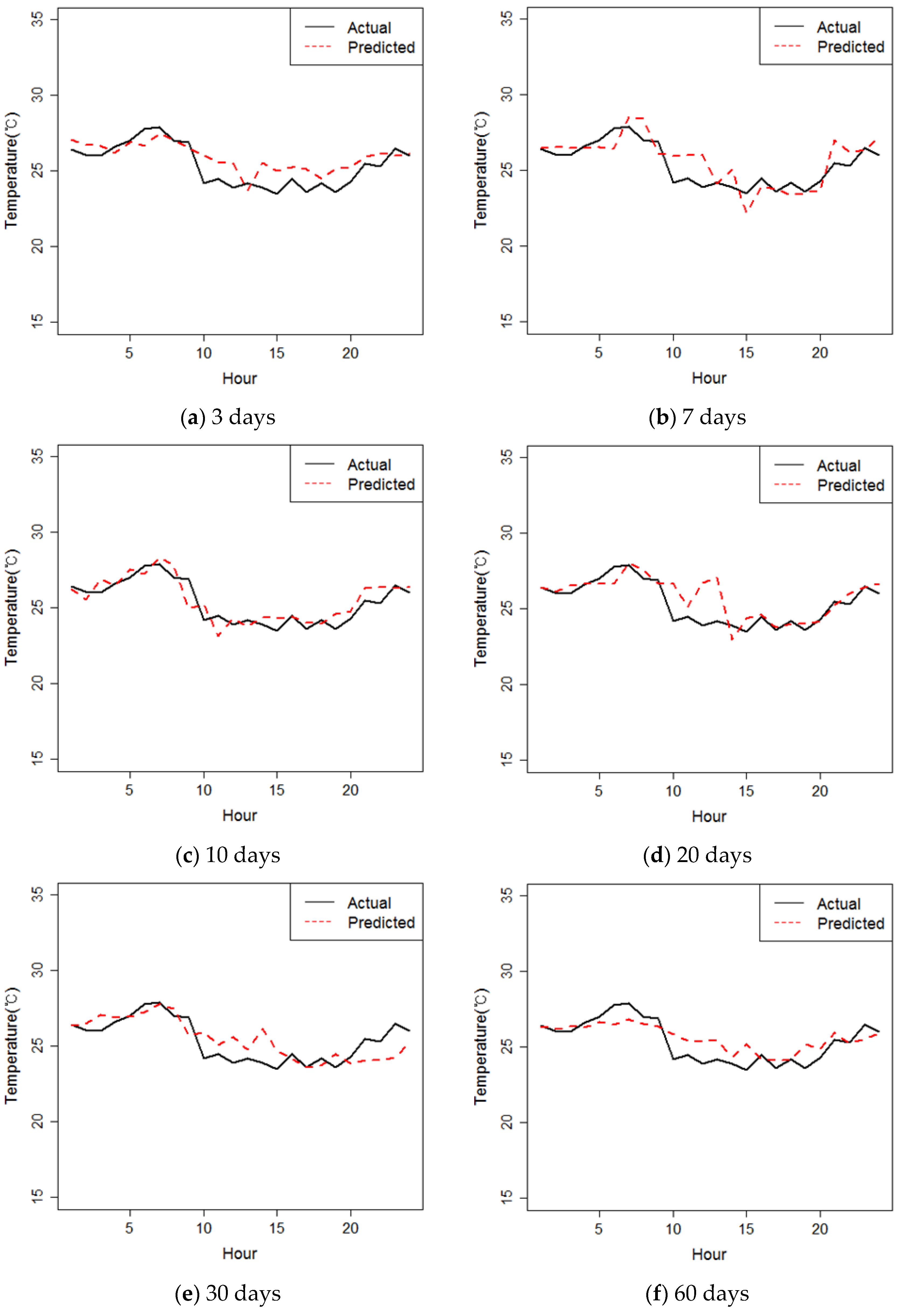

For the optimal model, different numbers of hidden layers and neurons were tested in order to check the accuracy of the prediction. The number of hidden layers was one or two. The number of neurons was increased from one up to the number of input variables. Because the amount of training data could affect the accuracy of the model, training periods of 3, 7, 10, 20, 30, and 60 days were tested [29].

An ANN can periodically be retrained using an augmented data set filled with new measurements. This method is also referred to as accumulative training. It can help an ANN to recognize daily and seasonal trends in predicted values. However, one drawback is that a continuously accumulating data set may become too large to manage, and larger amounts of data mean longer training times for the ANN. Alternatively, the data volume can be fixed so that the oldest data is removed as new measurements are added. This can be achieved by periodically sliding a time window across the time series of measurements when choosing training data. In comparison with accumulative training, the sliding window technique can provide better results for real measurements [22]. The current study used the sliding window training technique.
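The difference between the two training strategies comes down to which samples are selected at each retraining. The following is a minimal Python sketch for illustration; the function names are hypothetical, and the 10-day window is used only as an example length.

```python
def accumulative_window(n_samples):
    """Indices used by accumulative training: every sample collected so far."""
    return range(0, n_samples)


def sliding_window(n_samples, window):
    """Indices used by sliding window training: only the newest `window` samples."""
    start = max(0, n_samples - window)
    return range(start, n_samples)


# Hypothetical example: 1000 hourly samples so far, 10-day window (240 h).
window_hours = 10 * 24
acc = accumulative_window(1000)
sld = sliding_window(1000, window_hours)
print(len(acc), len(sld), sld[0])

# One hour later a new sample arrives: the accumulative set grows,
# while the sliding window keeps its size and drops the oldest sample.
acc_next = accumulative_window(1001)
sld_next = sliding_window(1001, window_hours)
print(len(acc_next), len(sld_next), sld_next[0])
```

The constant window size is what keeps retraining time bounded as the monitoring period grows.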

The present study identified the optimal model by varying the number of neurons in each hidden layer. In this case, there were 13 input variables and 2 hidden layers. The neuron configurations (number of neurons in the first hidden layer, number of neurons in the second hidden layer) were (1,1), (2,1), (2,2), (3,1), …, (13,13), that is, every pair in which the second layer has no more neurons than the first, resulting in a total of 13 × 14/2 = 91 models.
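The count of 91 follows from enumerating every pair in which the second hidden layer has at most as many neurons as the first. The following is a minimal Python sketch that reproduces the listed sequence; the function name is hypothetical.

```python
def hidden_layer_configs(n_inputs):
    """All (first, second) neuron counts with 1 <= second <= first <= n_inputs."""
    return [(first, second)
            for first in range(1, n_inputs + 1)
            for second in range(1, first + 1)]


configs = hidden_layer_configs(13)
print(configs[:4], configs[-1], len(configs))
```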

2.5. Adaptive Model

Basic ANN architecture was created through the process of finding the optimal model. More efficient models such as an adaptive model had to be considered. Configuration of the adaptive model is shown on the right of Figure 4.

In order to find the adaptive model, a test data set was set up to examine the accuracy of the prediction. However, when actually conducting predictions, there were no test data set. It was unclear how well the prediction matched the actual value. Learning period data changed continuously because hourly data was stored. The optimal model as described in

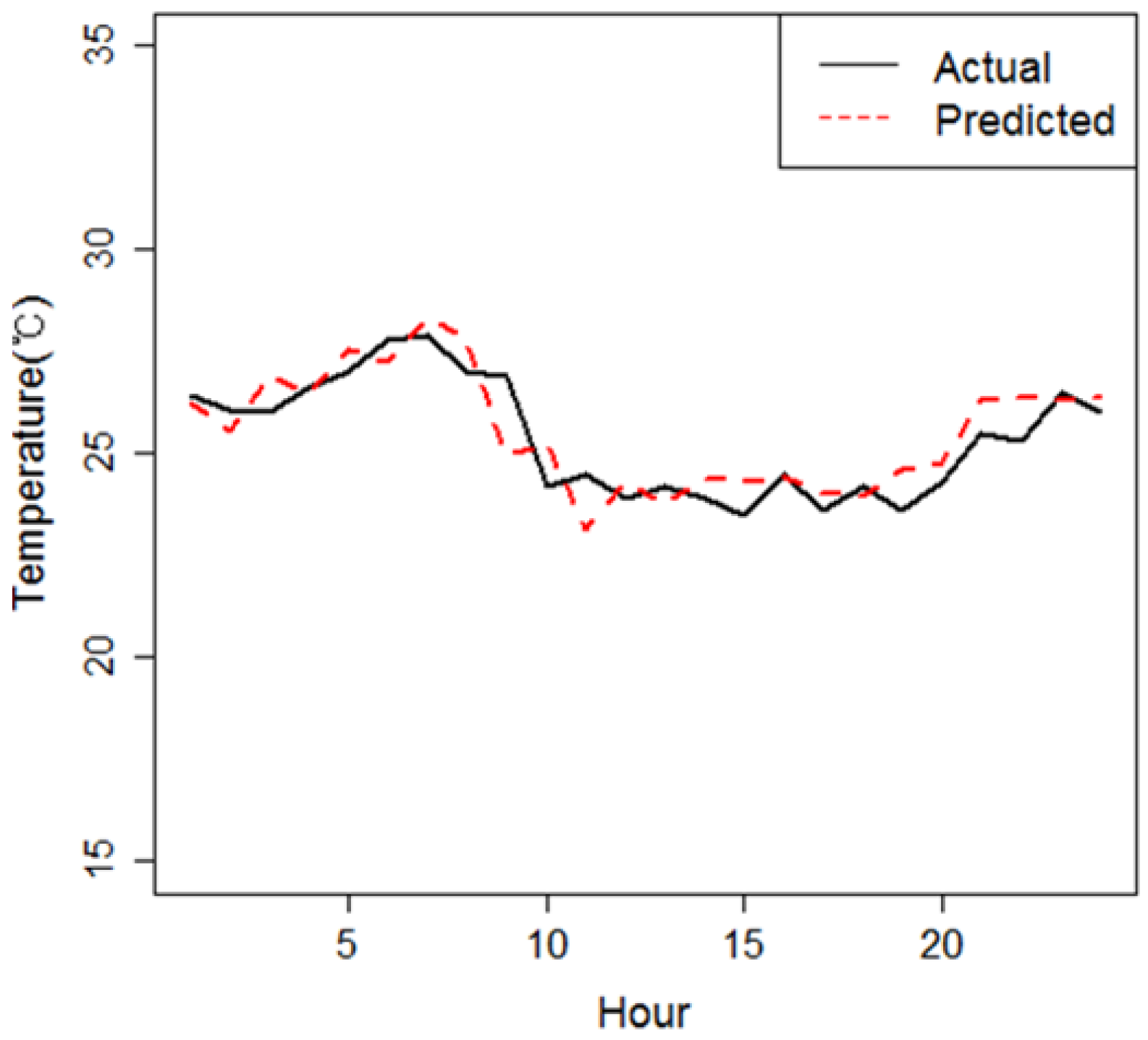

Section 2.4 might lower the prediction accuracy. To overcome this issue, data from the previous 12 h of predicted values for 24 h was used as test data and models with various number of neurons were tested again to achieve better prediction performance. A total of 91 models with neuron numbers of (1,1), (2,1), (2,2), (3,1),…, and (13,13) were analyzed to find the most appropriate model. Outputs of these models were compared with the 12-hour test data and the model with the lowest MSE value was selected as an adaptive model.

As training data changed, an adaptive model was developed to improve the prediction performance by selecting the best model among 91 models using various neuron numbers.
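The selection step of the adaptive model can be sketched as choosing, at each prediction, the candidate whose outputs have the lowest MSE against the 12-h test data. The following is a minimal Python sketch. The candidate predictions are made-up numbers standing in for the outputs of trained networks, only three of the 91 configurations are shown, and the function names are hypothetical.

```python
def mse(predicted, actual):
    """Mean of the squared differences between predicted and actual values."""
    return sum((p - a) ** 2 for p, a in zip(predicted, actual)) / len(actual)


def select_adaptive_model(candidate_predictions, test_actual):
    """Return the (config, mse) pair with the lowest MSE on the test data.

    candidate_predictions: dict mapping a neuron configuration such as (5, 2)
    to that model's predictions for the test hours.
    """
    best_config, best_mse = None, float("inf")
    for config, predicted in candidate_predictions.items():
        score = mse(predicted, test_actual)
        if score < best_mse:
            best_config, best_mse = config, score
    return best_config, best_mse


# Hypothetical 12-h test data (degrees Celsius).
test_actual = [20.0 + 0.5 * h for h in range(12)]

# Made-up predictions for three candidate configurations.
candidate_predictions = {
    (1, 1): [a + 0.5 for a in test_actual],
    (5, 2): [a + 0.1 for a in test_actual],
    (13, 13): [a - 0.3 for a in test_actual],
}

best_config, best_mse = select_adaptive_model(candidate_predictions, test_actual)
print(best_config, best_mse)
```

Repeating this selection every time the training window moves is what lets the chosen architecture follow the changing data instead of staying fixed.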

For validation of the final adaptive model, AHU data from another building was used. The data were measured at hourly intervals from 6 March 2017 to 23 April 2017.

4. Discussion and Conclusions

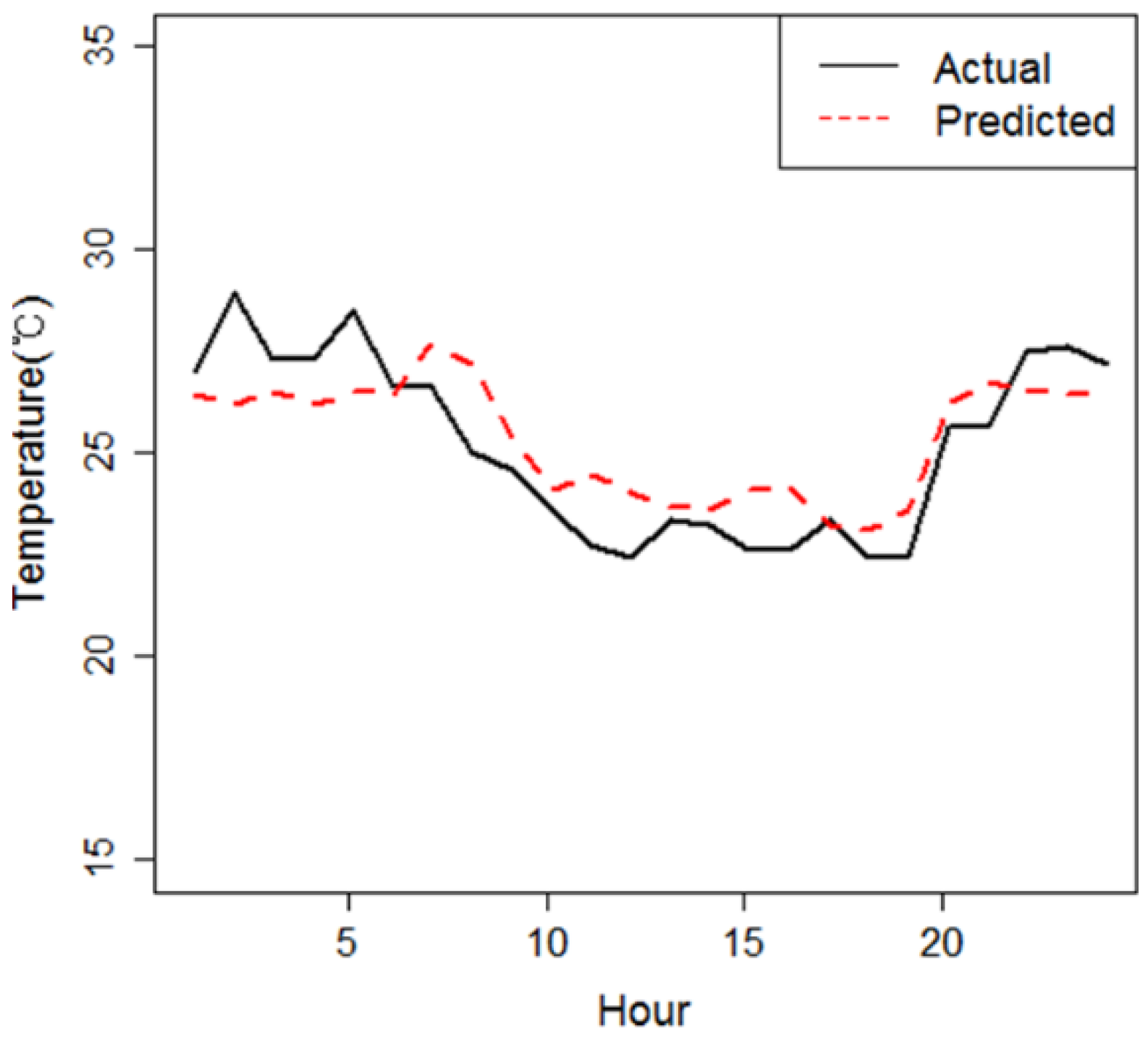

This study predicted the SAT of an AHU using an ANN. In addition, a model with good predictive performance was developed using an initial model, an optimal model, and an adaptive model.

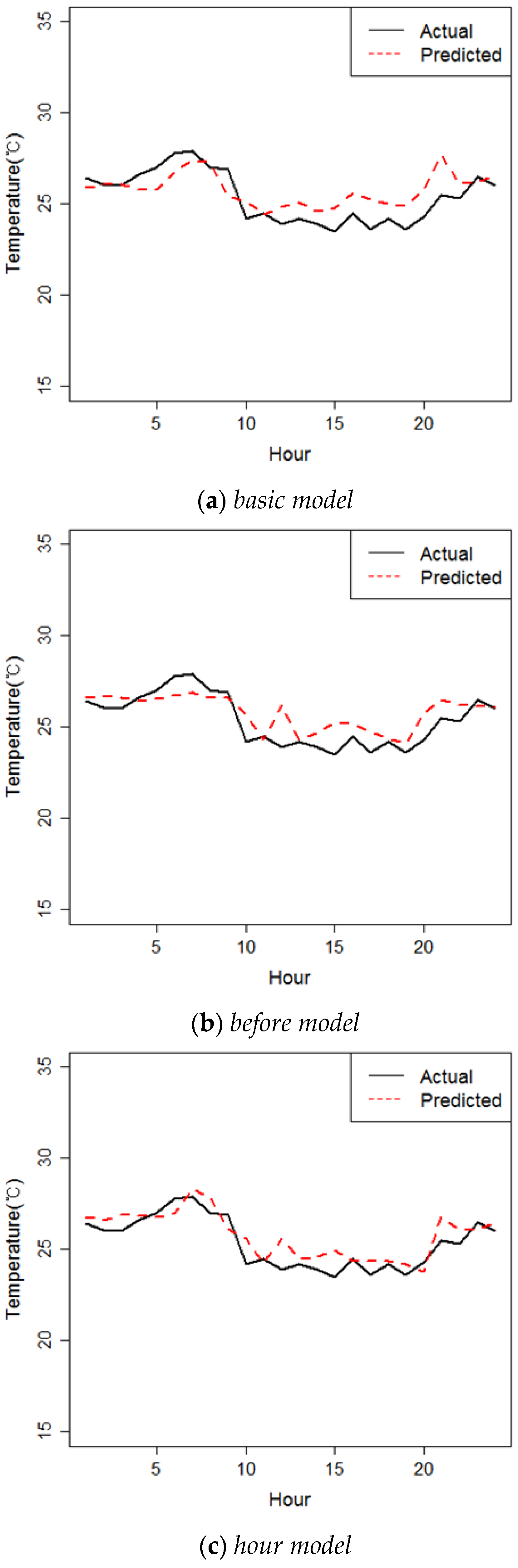

The potential of SAT prediction was demonstrated by the initial model. The parameters and the number of input variables are important in the process of finding an optimal model. The hour model with various input variables showed a 25% improvement in prediction performance over the basic model, which used temperature measured within the AHU.

A total of 91 models with two hidden layers were tested. The prediction performance improved by 20% (RMSE-based) when the number of hidden layers was two instead of one.

Two hidden layers and (5,2) neurons were selected for the optimal model. It is difficult to determine the number of hidden layers and the number of neurons [9]. The number of neurons should be made to correspond to the number of input and output variables and should also follow some simple rules [30]. Unfortunately, few studies have provided guidelines for selecting the best layer or neuron numbers. Therefore, factors such as the number of hidden layers and neurons should be determined based on the characteristics of the application and data [31].

Based on the results of performance using various periods for data learning, 10 days was determined to be the best for the optimal model developed in the present study. When the learning period was increased to 20 or 30 days, the MSE value increased. Therefore, it is important to review the length of the data learning period according to data characteristics. When the learning period was increased to 60 days, the prediction performance was better than that with a learning period of 20 or 30 days. However, such a long learning period was ultimately inefficient because its execution took a much longer time [32]. Even though a learning period of 3 days also showed good results, such a short time period might not completely capture the trends for the predicted values [22].

The optimal model developed in this study can use recently collected data. It was developed by using a data training approach with a sliding window method rather than an accumulative data method. Applying the sliding window technique makes it possible to maintain a training data set of a relatively small and constant size and to retrain the model quickly. However, annual and seasonal changes may not be accurately reflected in the prediction results.

Although the optimal model was developed, learning data changes over time. A fixed optimal model dependent on changing training data does not show uniformly good prediction performance results.

In conclusion, an adaptive model was developed by selecting a model with the lowest MSE. A total of 91 models were evaluated after setting up a 12-h test set at every prediction. The adaptive model can learn from training data that changes in real time. It seeks the model that has the best prediction performance. The prediction performance of the adaptive model is similar to that of the optimal model. However, it has the advantage of being able to actively cope with changing training data.