To What Extent is Drinking Water Tested in Sub-Saharan Africa? A Comparative Analysis of Regulated Water Quality Monitoring

Abstract

1. Introduction

2. Materials and Methods

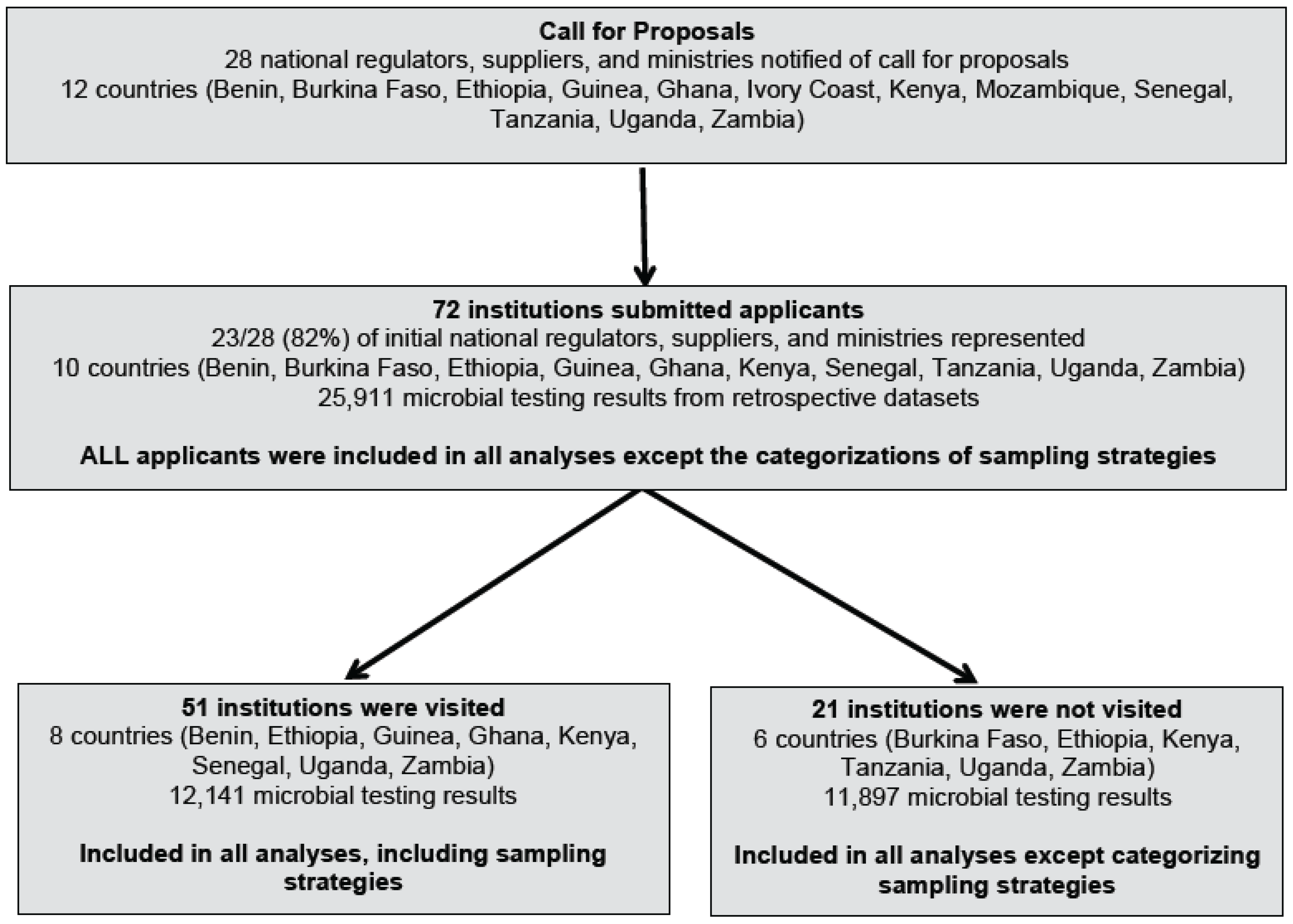

2.1. Country Selection and Study Institutions

2.2. Data Collection

2.3. Retrospective Datasets

2.4. Monitoring Performance and Data Analysis

2.5. Institutional Characteristics and Data Analysis

2.6. Ethical Approval

3. Results

3.1. Study Institutions

3.2. Sampling Strategies

3.3. Testing Methods

3.4. Monitoring Guidelines and Standards

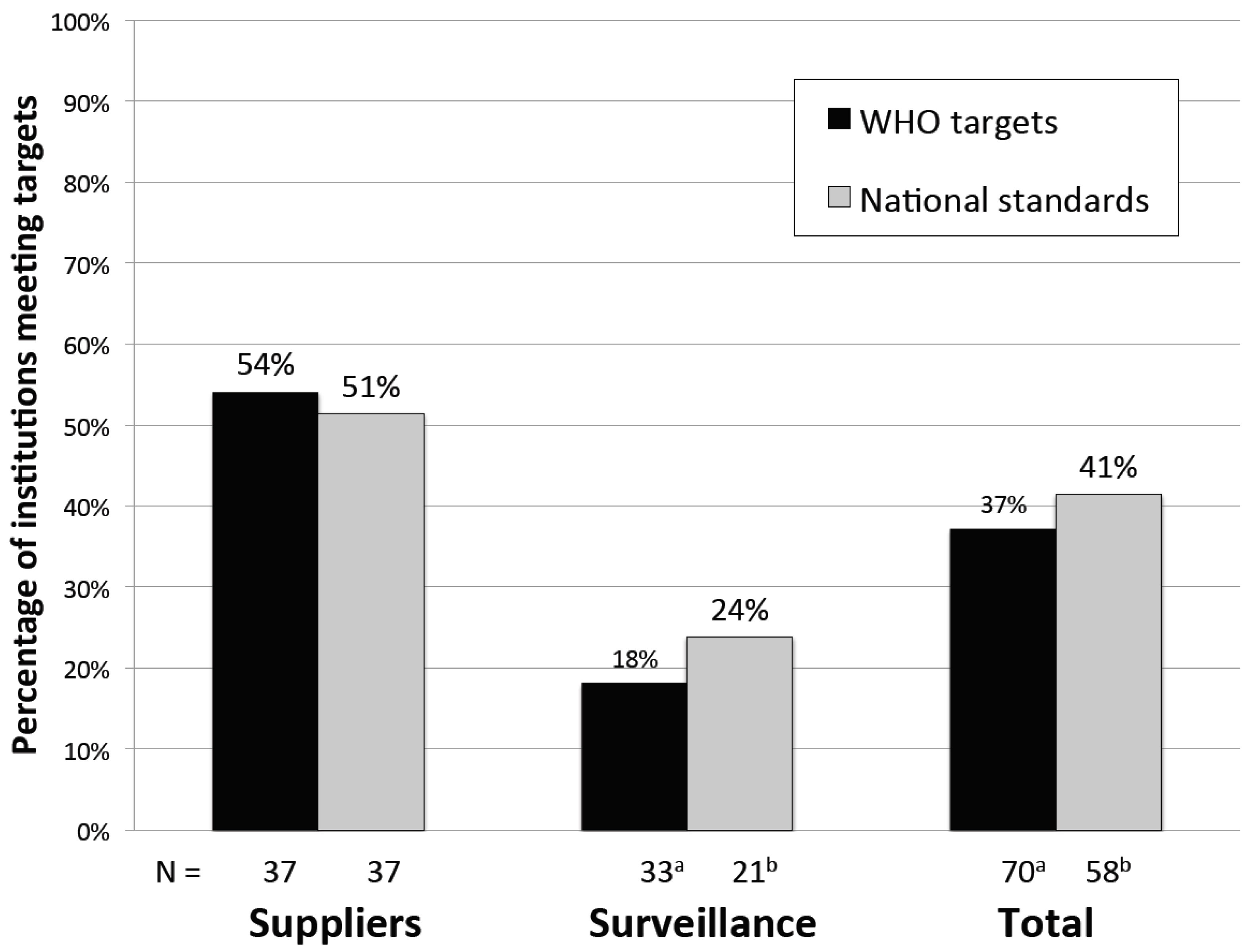

3.5. Monitoring Performance

3.6. Factors Associated with Monitoring Performance

4. Discussion

5. Conclusions

Supplementary Materials

Acknowledgments

Author Contributions

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AfWA | African Water Association |
| H2S | hydrogen-sulfide producing bacteria |
| IWA | International Water Association |
| MfSW | Monitoring for Safe Water |
| WHO | World Health Organization |


| Dataset Characteristic | Category | Supplier (N = 37) | Surveillance (N = 35) | Total (N = 72) |
|---|---|---|---|---|
| Microbial retrospective datasets | Datasets provided | 31 (84%) | 17 (49%) | 48 (67%) |
| | Dataset not provided | 6 (16%) | 18 (51%) | 24 (33%) |
| Method datasets received | Emailed | 25 (68%) | 10 (29%) | 35 (49%) |
| | Scanned data during visits | 6 (16%) | 7 (20%) | 13 (18%) |
| | No datasets provided | 6 (16%) | 18 (51%) | 24 (33%) |
| Format provided | Portable Document Format (PDF) | 17 (46%) | 8 (23%) | 25 (35%) |
| | Microsoft Word | 6 (16%) | 4 (11%) | 10 (14%) |
| | Microsoft Excel | 4 (11%) | 4 (11%) | 8 (11%) |
| | Multiple formats | 4 (11%) | 1 (3%) | 5 (7%) |
| | No datasets provided | 6 (16%) | 18 (51%) | 24 (33%) |
| Included handwritten entries | Yes | 9 (24%) | 7 (20%) | 16 (22%) |
| | No | 22 (59%) | 10 (29%) | 32 (44%) |
| | No datasets provided | 6 (16%) | 18 (51%) | 24 (33%) |
| Completeness | ≥12 months of testing results | 9 (24%) | 1 (3%) | 10 (14%) |
| | 6–11 months of testing results | 11 (30%) | 3 (9%) | 14 (19%) |
| | 0–5 months of testing results | 17 (46%) | 31 (89%) | 48 (67%) |

| Institution Characteristic | Category | Suppliers (N = 37) | Surveillance (N = 35) | Total (N = 72) |
|---|---|---|---|---|
| Country | Benin | 7 (19%) | 1 (3%) | 8 (11%) |
| | Burkina Faso | 1 (3%) | 1 (3%) | 2 (3%) |
| | Ethiopia | 4 (11%) | 5 (14%) | 9 (13%) |
| | Ghana | 1 (3%) | 0 (0%) | 1 (1%) |
| | Guinea | 1 (3%) | 0 (0%) | 1 (1%) |
| | Kenya | 8 (22%) | 5 (14%) | 13 (18%) |
| | Senegal | 0 (0%) | 3 (9%) | 3 (4%) |
| | Tanzania | 2 (5%) | 0 (0%) | 2 (3%) |
| | Uganda | 10 (27%) | 8 (23%) | 18 (25%) |
| | Zambia | 3 (8%) | 12 (34%) | 15 (21%) |
| Institution Type | Supplier | | | |
| | National Supplier 1 | 15 (41%) | - | 15 (21%) |
| | Provincial Supplier | 3 (8%) | - | 3 (4%) |
| | City/town Supplier | 14 (38%) | - | 14 (19%) |
| | Small-scale Private Water Supplier | 5 (14%) | - | 5 (7%) |
| | Surveillance | | | |
| | National Health Ministry 1 | - | 5 (14%) | 5 (7%) |
| | National Water Ministry | - | 1 (3%) | 1 (1%) |
| | Regional Laboratory 2 | - | 5 (14%) | 5 (7%) |
| | District Health or Water Office | - | 24 (69%) | 24 (33%) |
| Population Served 3 | <100,000 | 13 (35%) | 2 (6%) | 15 (22%) |
| | 100,000–<500,000 | 18 (49%) | 18 (56%) | 36 (52%) |
| | ≥500,000 | 6 (16%) | 12 (38%) | 18 (26%) |
| Urban/rural | Urban | 37 (100%) | 8 (23%) | 45 (63%) |
| | Rural | 0 (0%) | 27 (77%) | 27 (38%) |
| Type of water sources in jurisdiction 4 | Piped water only | 37 (100%) | 3 (9%) | 40 (57%) |
| | Point sources only | 0 (0%) | 1 (3%) | 1 (1%) |
| | Both point sources and piped water | 0 (0%) | 29 (88%) | 29 (41%) |
| Number of connections (suppliers only) 5 | <5000 | 9 (27%) | - | - |
| | 5000–<10,000 | 8 (24%) | - | - |
| | 10,000–<20,000 | 7 (21%) | - | - |
| | 20,000–<100,000 | 6 (18%) | - | - |
| | ≥100,000 | 3 (9%) | - | - |
| Years in operation (suppliers only) 6 | <10 | 13 (41%) | - | - |
| | 10–30 | 9 (28%) | - | - |
| | >30 | 10 (31%) | - | - |
| Number of water sources (surveillance only) 7 | <100 | - | 2 (6%) | - |
| | 100–<1000 | - | 10 (30%) | - |
| | 1000–<10,000 | - | 16 (48%) | - |
| | ≥10,000 | - | 5 (15%) | - |
| Number of staff involved in water testing 8 | <5 | 20 (67%) | 10 (29%) | 30 (46%) |
| | 5–<10 | 3 (10%) | 8 (23%) | 11 (17%) |
| | 10–<25 | 6 (20%) | 10 (29%) | 16 (25%) |
| | ≥25 | 1 (3%) | 7 (20%) | 8 (12%) |
| Annual water monitoring budget (USD) | Total, median 9 (interquartile range) | 9600 (2200–22,000) | 3400 (900–11,000) | 7500 (1200–18,000) |
| | Per person served, median 9 (interquartile range) | 0.056 (0.018–0.131) | 0.011 (0.004–0.032) | 0.024 (0.006–0.081) |
| Independently regulated 10 | Yes | 14 (38%) | 0 (0%) | 14 (19%) |
| | No | 23 (62%) | 35 (100%) | 58 (81%) |

| Water Sample Type | Supplier (n = 22,957) | Surveillance (n = 1081) | Total (n = 24,038) |
|---|---|---|---|
| Network (consumer taps) | 15,303 (67%) | 338 (31%) | 15,641 (65%) |
| Service Reservoir | 3593 (16%) | 12 (1%) | 3605 (15%) |
| Water Treatment Plant | 1371 (6%) | 8 (1%) | 1379 (6%) |
| Incoming water to distribution system 1 | 1364 (6%) | 7 (1%) | 1371 (6%) |
| Raw Water | 882 (4%) | 30 (3%) | 912 (4%) |
| Standpipe/Kiosk (public or commercial taps) | 362 (2%) | 6 (1%) | 368 (2%) |
| Storage Container/Tank | 1 (<1%) | 167 (15%) | 168 (1%) |
| Well | 2 (<1%) | 93 (9%) | 95 (<1%) |
| Borehole | 2 (<1%) | 77 (7%) | 79 (<1%) |
| Spring | 0 (<1%) | 25 (2%) | 25 (<1%) |
| Other 2 | 2 (<1%) | 7 (1%) | 9 (<1%) |
| Unknown | 75 (<1%) | 311 (29%) | 386 (2%) |

| Sampling Strategy | Description | Suppliers (n = 19) 1 | Surveillance (n = 13) 2 |
|---|---|---|---|
| Distribution system | Sampling system components, including raw water, treatment plant water, and piped network water | 10 | 0 |
| Geographic | Sampling an area of jurisdiction that may contain multiple source types | 6 | 0 |
| Population-based | Sampling the sources serving areas with the highest population densities | 6 | 2 |
| Risk-based | Sampling areas where disease rates are known to be high | 3 | 2 |
| Reactive | Sampling after a disease outbreak or after a distribution problem is resolved | 3 | 7 |
| New points | Sampling after a new water source is installed or a new line is laid | 1 | 3 |
| Ad-hoc | Sampling points determined by the sample collector | 2 | 3 |

| Water Testing Characteristic | Category | Supplier (N = 37) | Surveillance (N = 35) | Total (N = 72) |
|---|---|---|---|---|
| Reported (physical, chemical, and/or microbial) 1 | Both internal and external lab | 25 (68%) | 17 (49%) | 42 (58%) |
| | Internal only | 11 (30%) | 6 (17%) | 17 (24%) |
| | External lab only | 1 (3%) | 11 (31%) | 12 (17%) |
| | No current testing | 0 (0%) | 1 (3%) | 1 (1%) |
| Reported regular monitoring | Microbial | 27 (77%) | 27 (73%) | 54 (75%) |
| | Physical | 28 (80%) | 12 (32%) | 40 (56%) |
| | Chemical | 30 (81%) | 14 (38%) | 44 (61%) |
| Types of tests conducted internally | Microbial tests | 25 (68%) | 19 (54%) | 44 (61%) |
| | Turbidity | 29 (78%) | 12 (34%) | 41 (57%) |
| | Chlorine | 31 (84%) | 7 (20%) | 38 (53%) |
| Reported any microbial water testing in the past year 2 | Yes | 34 (92%) | 26 (76%) | 60 (85%) |
| | No | 2 (8%) | 8 (24%) | 11 (15%) |
| Microbial testing indicators 3 | E. coli | 12 (32%) | 5 (14%) | 17 (24%) |
| | Thermotolerant (fecal) coliforms | 15 (41%) | 11 (31%) | 26 (36%) |
| | Total coliforms | 12 (32%) | 9 (26%) | 21 (29%) |
| | Heterotrophic bacteria | 8 (23%) | 0 (0%) | 8 (11%) |
| | Fecal Streptococci | 4 (11%) | 0 (0%) | 4 (6%) |
| | Enterococci | 1 (3%) | 0 (0%) | 1 (1%) |
| | Clostridium perfringens | 4 (11%) | 0 (0%) | 4 (6%) |
| | H2S | 1 (3%) | 2 (6%) | 3 (4%) |
| | Unspecified | 3 (9%) | 12 (32%) | 15 (21%) |
| Microbial testing method used 3 | Membrane filtration | 18 (49%) | 15 (43%) | 33 (46%) |
| | Most probable number (MPN) | 8 (22%) | 3 (9%) | 11 (15%) |
| | Presence-absence | 10 (27%) | 8 (23%) | 18 (25%) |
| | Petrifilm™ | 2 (5%) | 1 (3%) | 3 (4%) |
| Average number of microbial tests per year 1 | Mean (SD) | 1731 (3369) | 196 (524) | 996 (2562) |
| | Median (interquartile range) | 250 (66–1430) | 49 (5–148) | 110 (18–492) |

| Covariates | Category | Suppliers (N = 37): N | Suppliers: % | Suppliers: p-Value 2 | Surveillance (N = 33) 1: N | Surveillance: % | Surveillance: p-Value 2 | Total (N = 70) 1: N | Total: % | Total: p-Value 2 |
|---|---|---|---|---|---|---|---|---|---|---|
| Institution Type | Supplier | 20/37 | 54% | - | - | | | 20/37 | 54% | <0.01 |
| | Surveillance | | | | 6/33 | 18% | | 6/33 | 18% | |
| Population Served 3 | <500,000 | 14/31 | 45% | 0.02 | 1/20 | 5% | 0.02 | 15/51 | 29% | 0.02 |
| | ≥500,000 | 6/6 | 100% | | 5/12 | 42% | | 11/18 | 61% | |
| Area of jurisdiction | City/town/district | 6/19 | 32% | 0.01 | 3/25 | 12% | 0.07 | 9/44 | 20% | <0.01 |
| | Regional | 3/3 | 100% | | 1/5 | 20% | | 4/8 | 50% | |
| | National | 11/15 | 73% | | 2/3 | 67% | | 13/18 | 72% | |
| Region | West Africa | 9/10 | 90% | 0.01 | 3/4 | 75% | 0.01 | 12/14 | 86% | <0.01 |
| | East/Southern Africa | 11/27 | 41% | | 3/29 | 10% | | 14/56 | 25% | |
| Number of connections (suppliers only) | <10,000 | 4/17 | 24% | <0.01 | - | - | - | 4/17 | 24% | <0.01 |
| | ≥10,000 | 12/16 | 75% | | - | - | - | 12/16 | 75% | |
| Number of water sources (surveillance only) | <1000 | - | - | - | 5/13 | 38% | 0.03 | 5/13 | 38% | 0.05 |
| | ≥1000 | - | - | - | 1/20 | 5% | | 1/20 | 5% | |
| Urban/rural | Urban | 20/37 | 54% | - | 2/7 | 26% | 0.38 | 22/44 | 50% | <0.01 |
| | Rural | 0/0 | 0% | | 4/26 | 15% | | 4/26 | 15% | |
| Water quality budget per person served (USD) | <$0.05 | 4/17 | 24% | <0.01 | 5/26 | 19% | 0.62 | 9/43 | 21% | <0.01 |
| | ≥$0.05 | 16/20 | 80% | | 1/7 | 14% | | 17/27 | 63% | |
| Water quality staff per 10,000 people served 4 | <1 | 9/23 | 39% | 0.07 | 6/25 | 25% | 0.24 | 15/48 | 32% | 0.49 |
| | ≥1 | 5/6 | 83% | | 0/6 | 0% | | 5/12 | 42% | |
| Years in operation (suppliers only) 5 | <10 | 4/13 | 31% | 0.18 | - | - | | 4/13 | 31% | 0.18 |
| | 10–30 | 3/9 | 44% | | - | - | | 3/9 | 44% | |
| | >30 | 7/10 | 70% | | - | - | | 7/10 | 70% | |
| Independently regulated 6 | Yes | 8/14 | 57% | 0.52 | 0/0 | - | N/A | 8/14 | 57% | 0.08 |
| | No | 12/23 | 52% | | 6/33 | 18% | | 18/56 | 32% | |
| National standards documented 7 | Yes | 18/35 | 31% | 0.29 | 3/17 | 18% | 0.64 | 21/52 | 40% | 0.34 |
| | No | 2/2 | 100% | | 3/16 | 19% | | 5/18 | 28% | |
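
As an illustration of how a p-value for one row of the covariate table above could be obtained from its counts, the following minimal sketch applies a two-sided Fisher's exact test to the "Institution Type" comparison (20 of 37 suppliers versus 6 of 33 surveillance agencies). Two points are assumptions: the table note defining the test actually used is not reproduced here, so Fisher's exact test is a stand-in choice, and each N entry is read as "institutions in the higher-performing group / institutions in the category". The function name `fisher_exact_two_sided` is hypothetical.

```python
from math import comb


def fisher_exact_two_sided(a, b, c, d):
    """Two-sided Fisher's exact p-value for the 2x2 table [[a, b], [c, d]].

    Illustrative helper (not from the paper): sums the hypergeometric
    probabilities of every table that is as likely or less likely than
    the observed one, with all margins held fixed.
    """
    row1, row2 = a + b, c + d
    col1 = a + c
    denom = comb(row1 + row2, col1)

    def prob(x):
        # Probability that the top-left cell equals x given fixed margins
        return comb(row1, x) * comb(row2, col1 - x) / denom

    lo = max(0, col1 - row2)   # smallest feasible top-left count
    hi = min(row1, col1)       # largest feasible top-left count
    p_obs = prob(a)
    tol = 1e-9                 # guards against floating-point ties
    total = sum(prob(x) for x in range(lo, hi + 1)
                if prob(x) <= p_obs * (1 + tol))
    return min(1.0, total)


# Counts taken from the "Institution Type" row (assumed interpretation):
# suppliers 20 of 37, surveillance agencies 6 of 33.
p_value = fisher_exact_two_sided(20, 37 - 20, 6, 33 - 6)
print(f"two-sided p = {p_value:.4f}")
```

Under these assumptions the result falls below 0.01, in line with the "<0.01" reported in the Total column for that row. A different test (for example a chi-square test) would give a somewhat different value.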

© 2016 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons by Attribution (CC-BY) license (http://creativecommons.org/licenses/by/4.0/).

Peletz, R.; Kumpel, E.; Bonham, M.; Rahman, Z.; Khush, R. To What Extent is Drinking Water Tested in Sub-Saharan Africa? A Comparative Analysis of Regulated Water Quality Monitoring. Int. J. Environ. Res. Public Health 2016, 13, 275. https://doi.org/10.3390/ijerph13030275
