Visual Sensor Technology for Advanced Surveillance Systems: Historical View, Technological Aspects and Research Activities in Italy

Abstract

1. Introduction

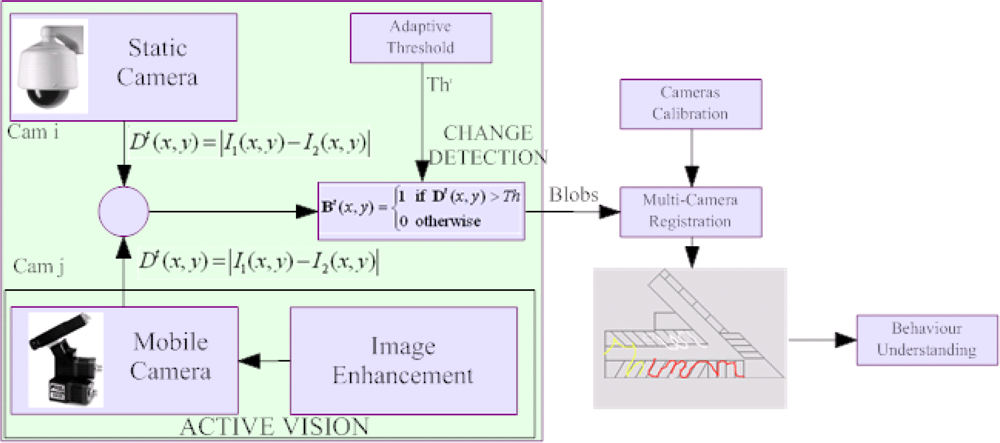

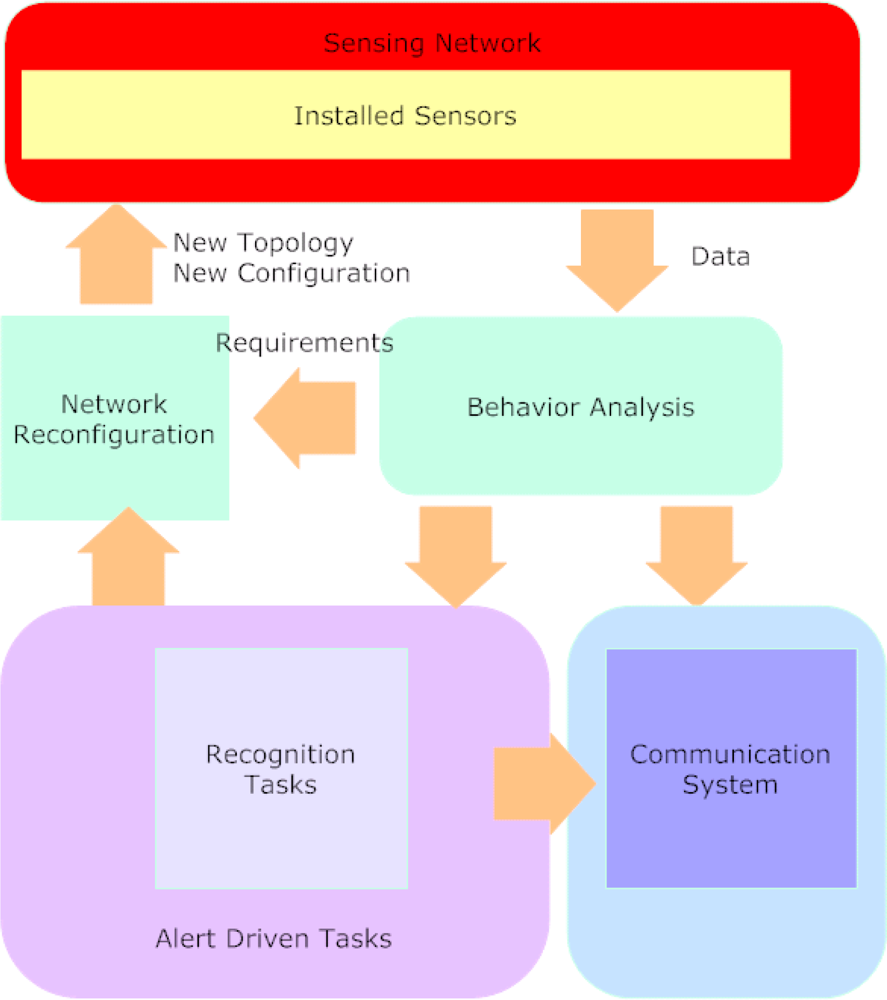

2. Advanced Visual-based Surveillance Systems

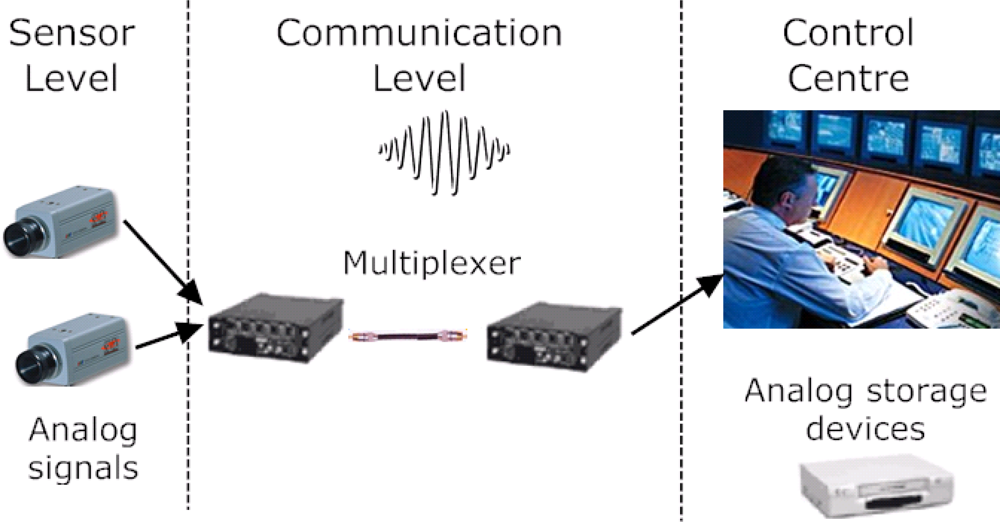

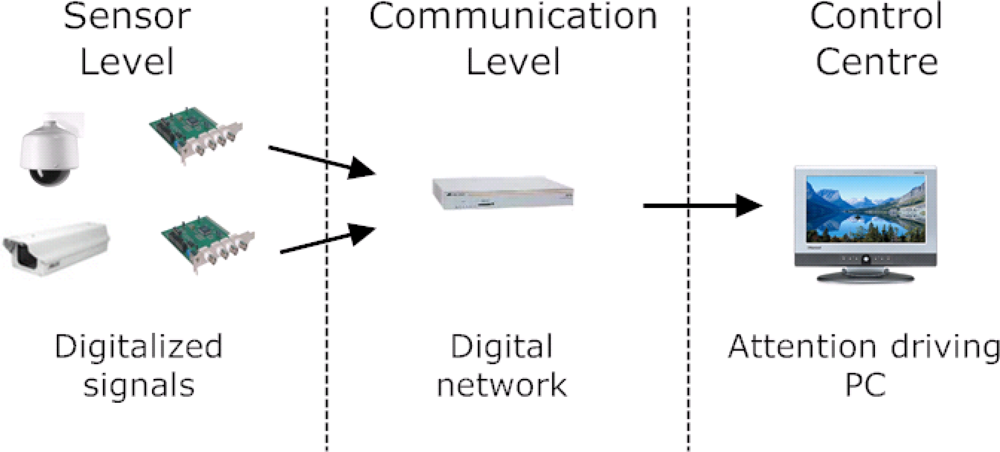

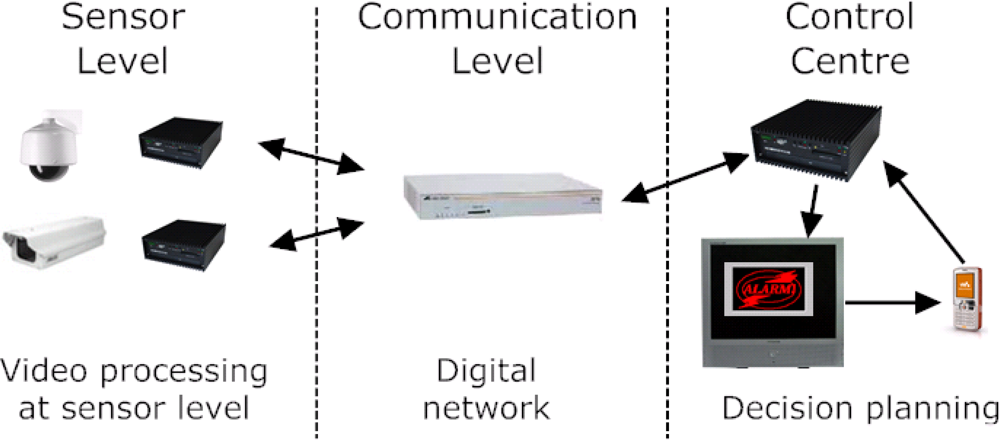

2.1. Evolution of Visual-Based Surveillance Systems

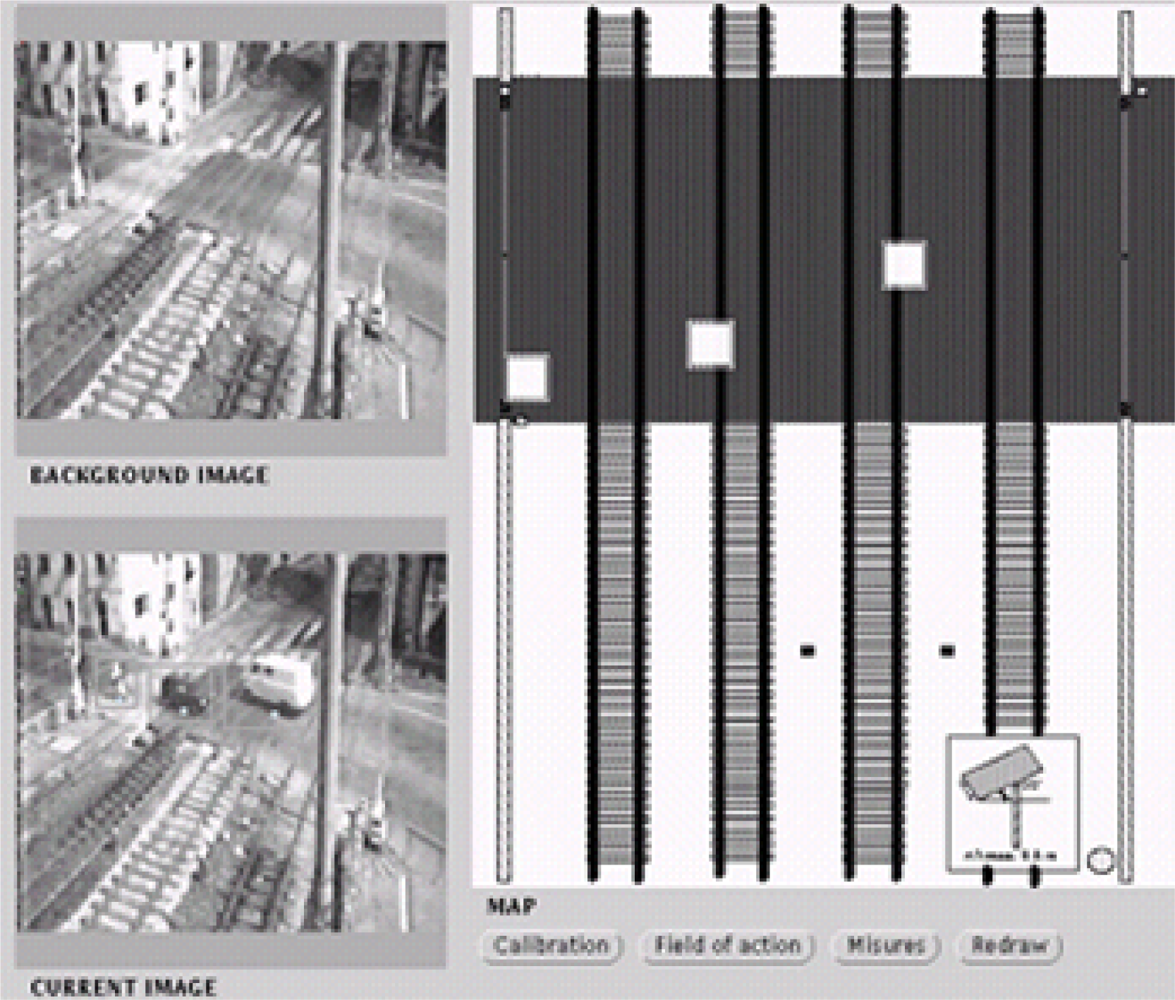

2.2. Visual-Based Surveillance Systems in Italy

3. Intelligent Visual Sensors

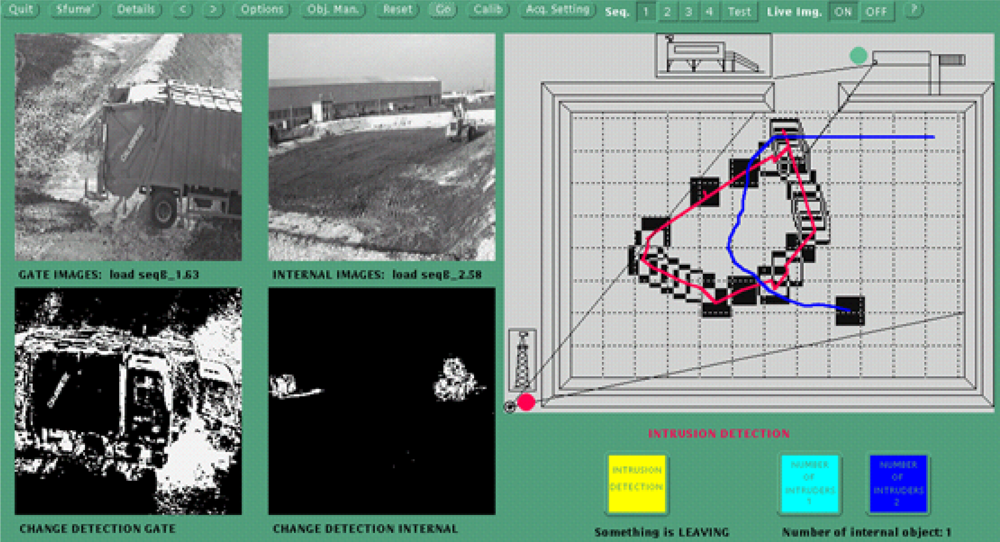

3.1. Visual Data Processing at Sensor Level

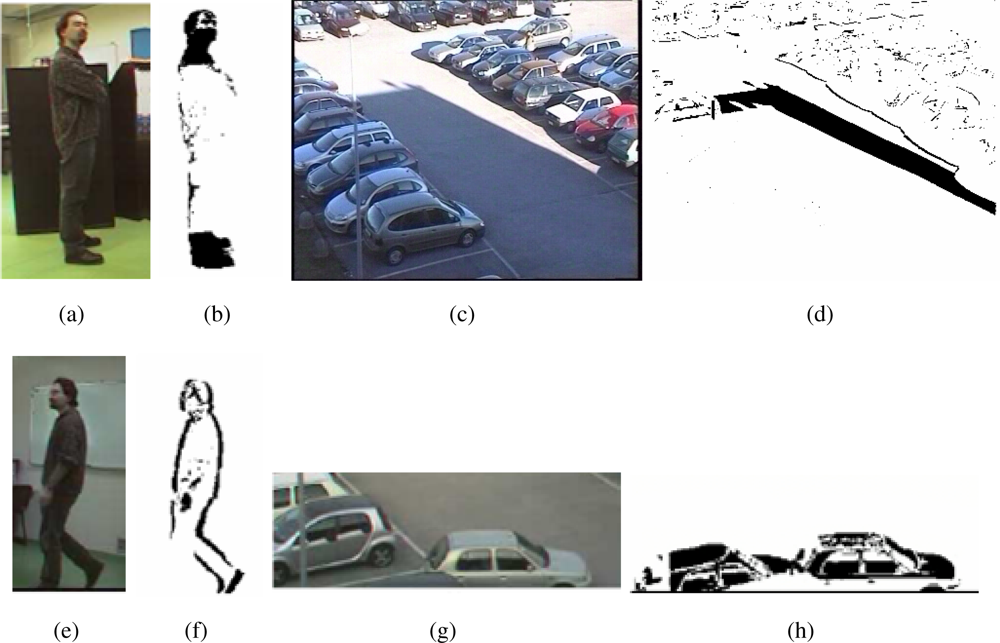

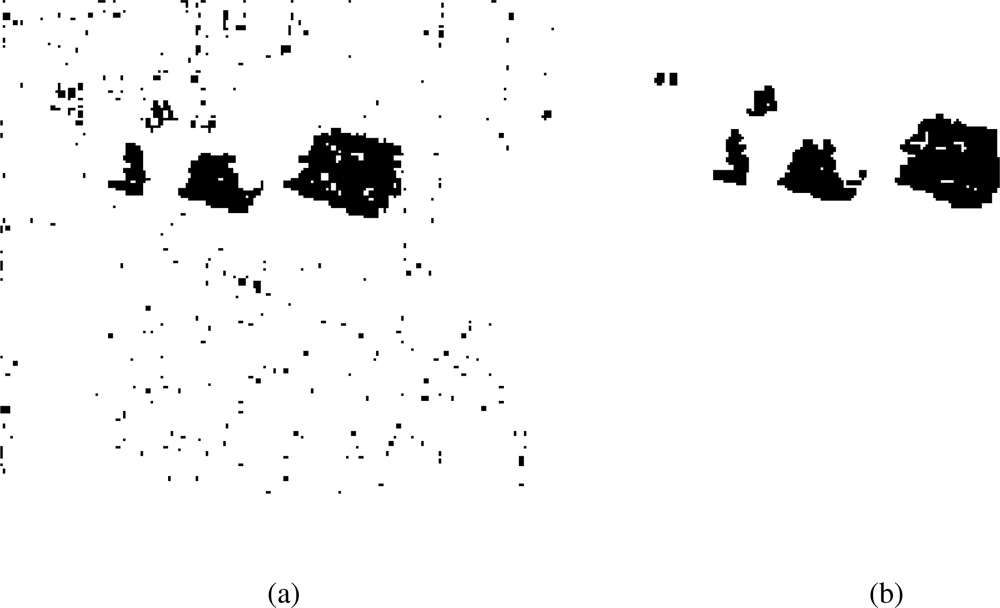

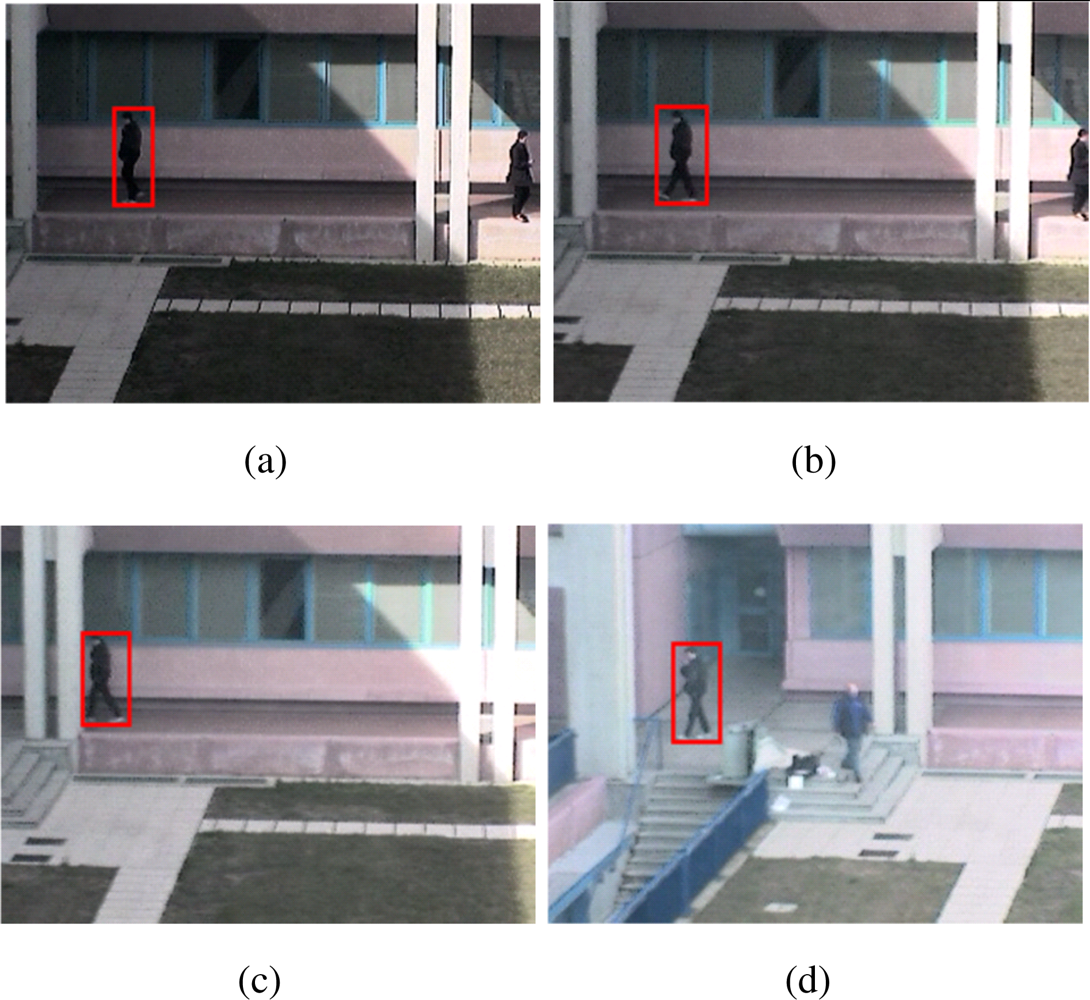

- camouflage effects are caused by moving objects similar in appearance to the background (changes in the scene do not imply changes in the image, e.g., Figure 8 (a) and (b))

- light changes can lead to changes in the images that are not associated with real foreground objects (changes in the image do not imply changes in the scene, e.g., Figure 8 (c) and (d))

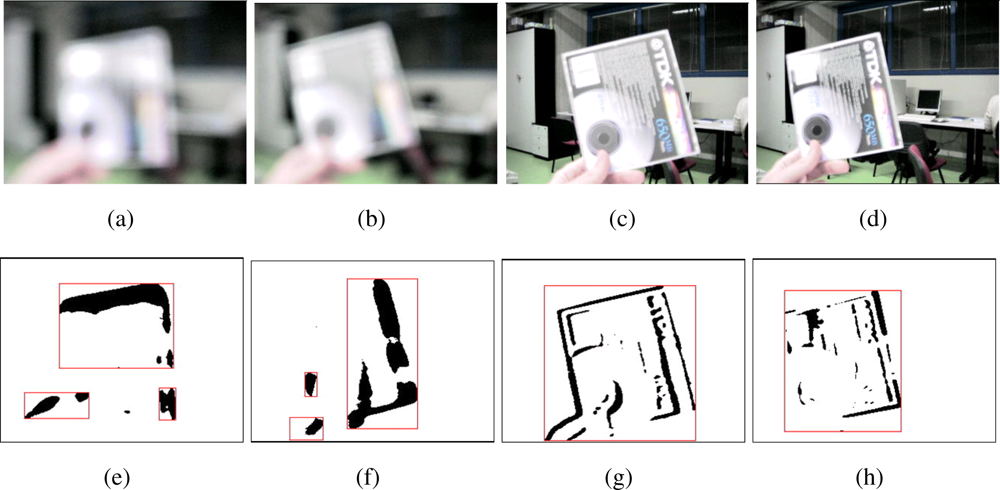

- foreground aperture is a problem affecting the detection of moving objects with uniform appearance, so that motion can be detected only on the borders of the object (e.g., Figure 8 (e) and (f))

- ghosting refers to the detection of false objects due to motion of elements initially considered as a part of the background (e.g., Figure 8 (g) and (h))

- frame-by-frame algorithms

- frame-background algorithms (with reference background image or with background models).
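As a rough illustration of the two algorithm classes listed above, the following minimal sketch compares frame-by-frame differencing with frame-background differencing on a toy one-dimensional scanline. It is not the authors' implementation: the helper names `make_frame` and `change_mask`, the grey levels, and the fixed threshold are all assumptions chosen for the example. Under those assumptions it reproduces two of the problems described earlier: foreground aperture (a uniform object is detected only at its borders by frame-by-frame differencing) and ghosting (an outdated reference background produces a false object).

```python
from typing import List

Frame = List[int]  # one scanline of grey levels, a stand-in for a full image


def make_frame(width: int, start: int, length: int,
               background: int = 10, obj: int = 200) -> Frame:
    """Return a scanline with a uniform object of `length` pixels at `start`."""
    frame = [background] * width
    for x in range(start, min(start + length, width)):
        frame[x] = obj
    return frame


def change_mask(a: Frame, b: Frame, threshold: int = 30) -> List[int]:
    """Mark pixels whose absolute grey-level difference exceeds the threshold."""
    return [1 if abs(p - q) > threshold else 0 for p, q in zip(a, b)]


WIDTH = 12
empty_scene = [10] * WIDTH                        # scene with no objects
previous = make_frame(WIDTH, start=2, length=6)   # object covers pixels 2..7
current = make_frame(WIDTH, start=3, length=6)    # object moved to pixels 3..8

# Frame-by-frame: compare consecutive frames. Only the trailing and leading
# borders of the uniform object change, so its interior is missed.
frame_by_frame = change_mask(current, previous)

# Frame-background: compare with a reference image of the empty scene.
# The whole object is detected.
frame_background = change_mask(current, empty_scene)

# Ghosting: the reference was captured while the object was still present
# (hypothetical scenario); once the object leaves, its old position is
# reported as a false object.
stale_background = previous
ghost = change_mask(empty_scene, stale_background)

print(frame_by_frame)    # [0, 0, 1, 0, 0, 0, 0, 0, 1, 0, 0, 0]
print(frame_background)  # [0, 0, 0, 1, 1, 1, 1, 1, 1, 0, 0, 0]
print(ghost)             # [0, 0, 1, 1, 1, 1, 1, 1, 0, 0, 0, 0]
```

A fixed reference image keeps the example short; a background model that is updated over time would be one way to reduce the ghosting effect, at the cost of extra state per pixel.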

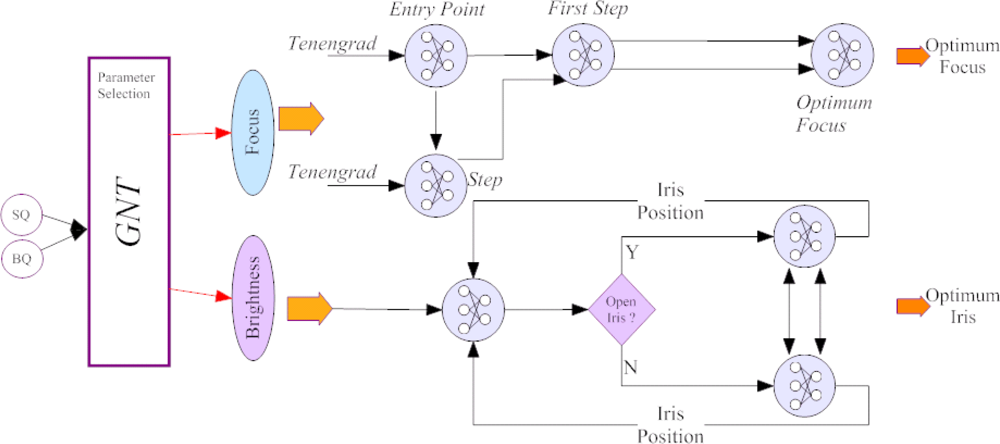

3.2. Automatic Camera Parameter Regulation

3.3. Sensor Selection

3.4. Performance Evaluation

4. Conclusions

Acknowledgments


© 2009 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution license (http://creativecommons.org/licenses/by/3.0/).

Foresti, G.L.; Micheloni, C.; Piciarelli, C.; Snidaro, L. Visual Sensor Technology for Advanced Surveillance Systems: Historical View, Technological Aspects and Research Activities in Italy. Sensors 2009, 9, 2252-2270. https://doi.org/10.3390/s90402252