Object-Based Point Cloud Analysis of Full-Waveform Airborne Laser Scanning Data for Urban Vegetation Classification

Abstract

1 Introduction

2 Related work

3 Test site and data sets

3.1 Test site

3.2 Full-waveform ALS data

3.3 Reference data

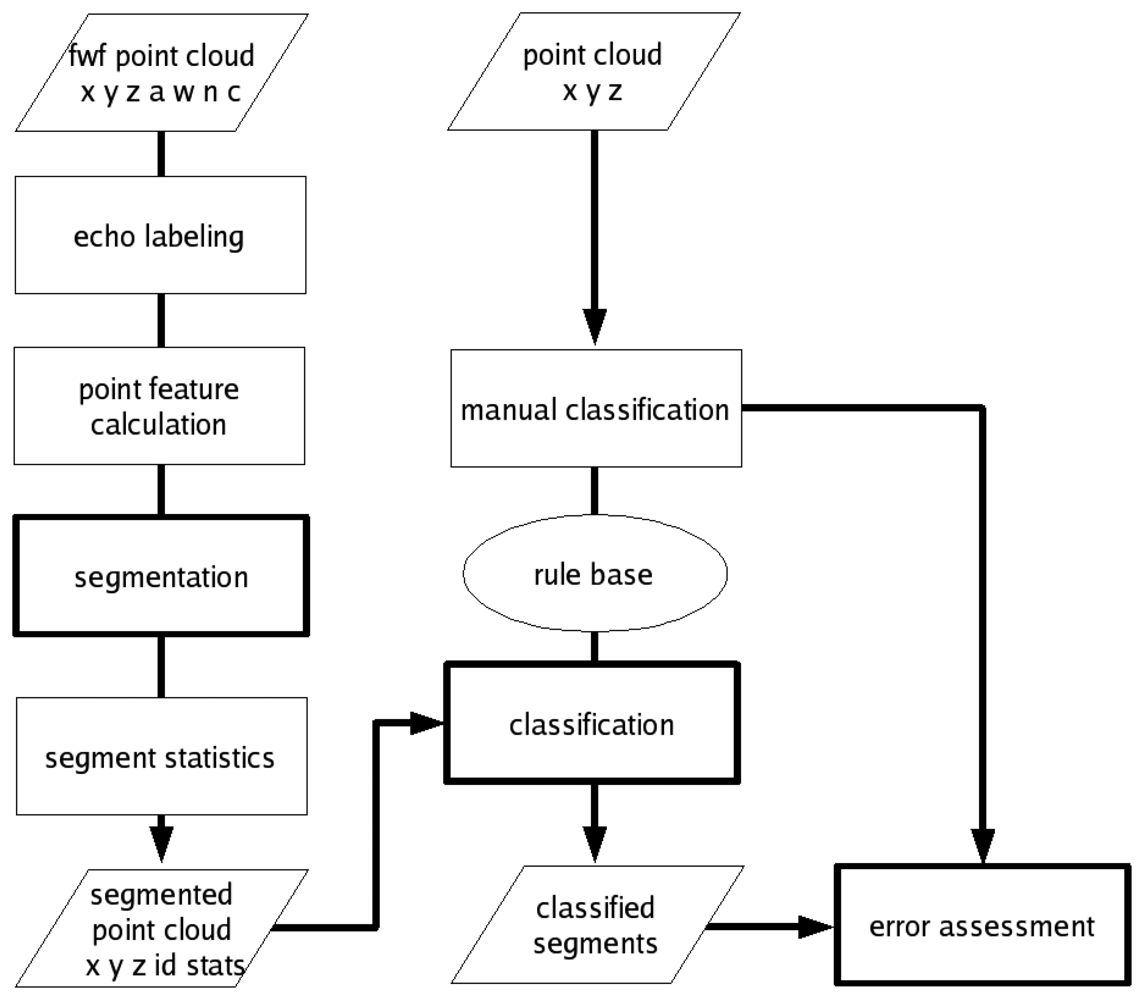

4 Methods of the object-based point cloud analysis workflow

4.1 Additional point features

4.2 Segmentation

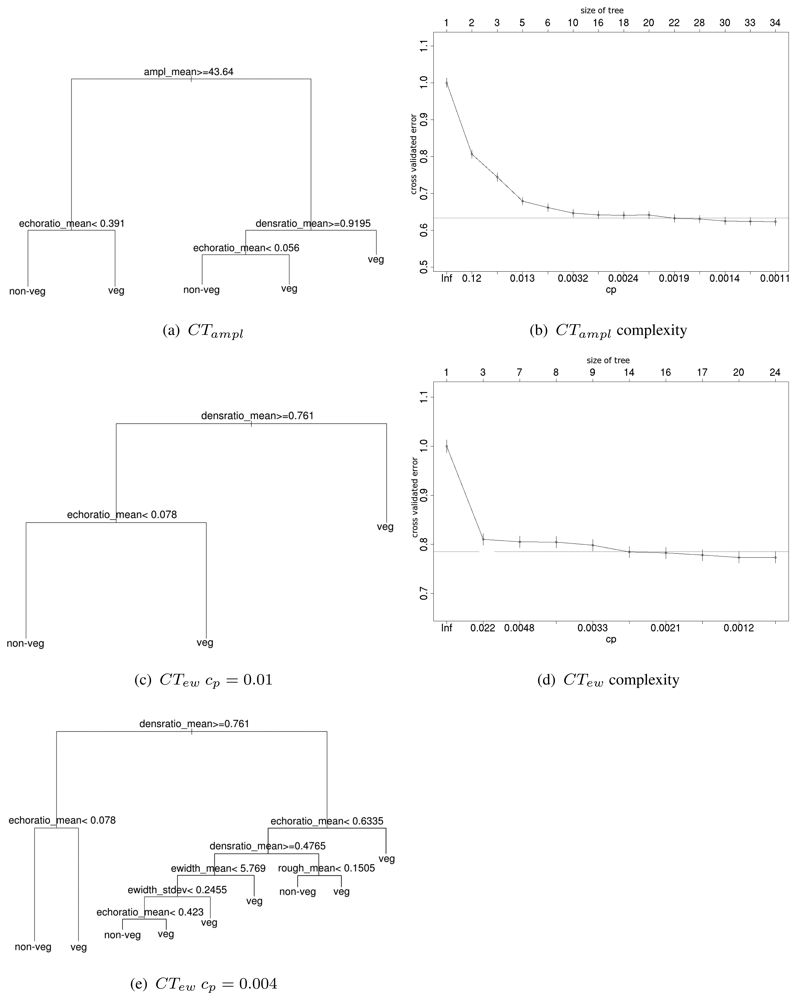

4.3 Classification tree

4.4 Error assessment

5 Object-based point cloud analysis settings

5.1 Segmentation settings

5.2 Classification tree settings

6 Results and discussion

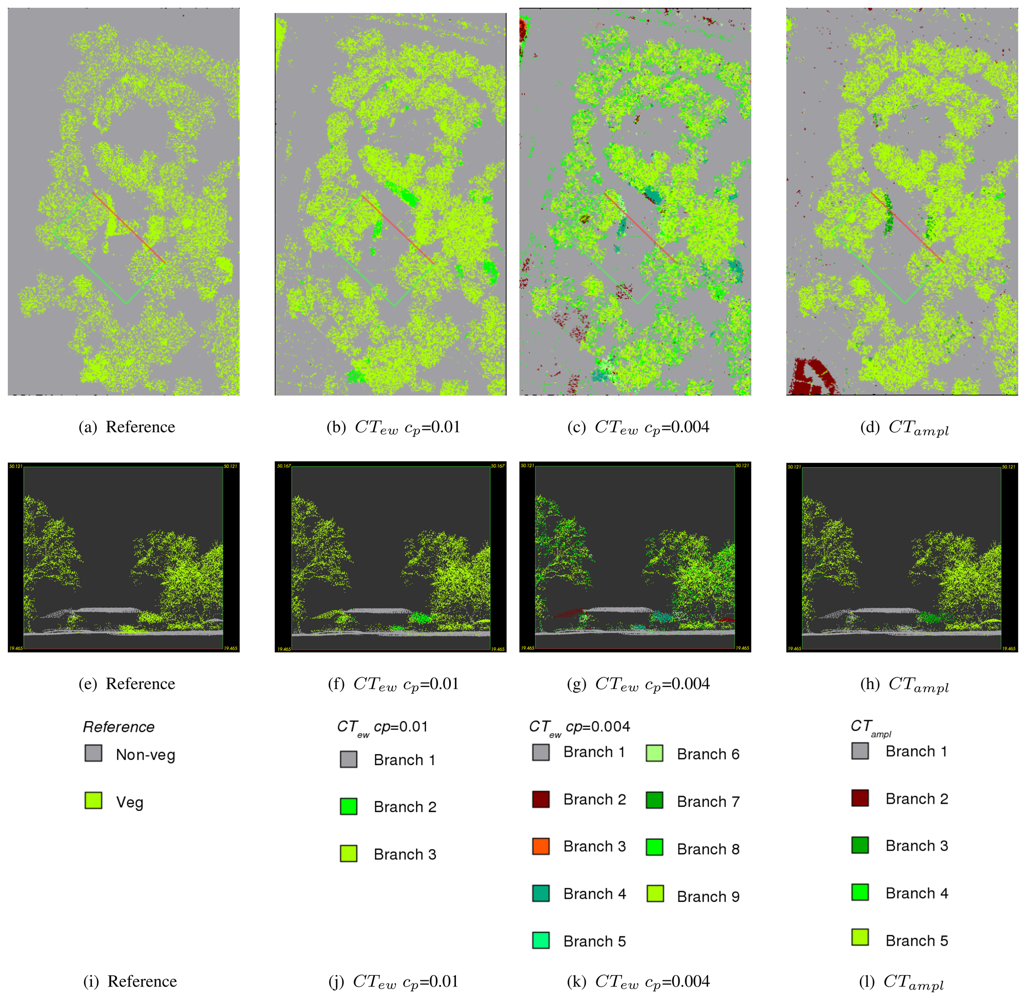

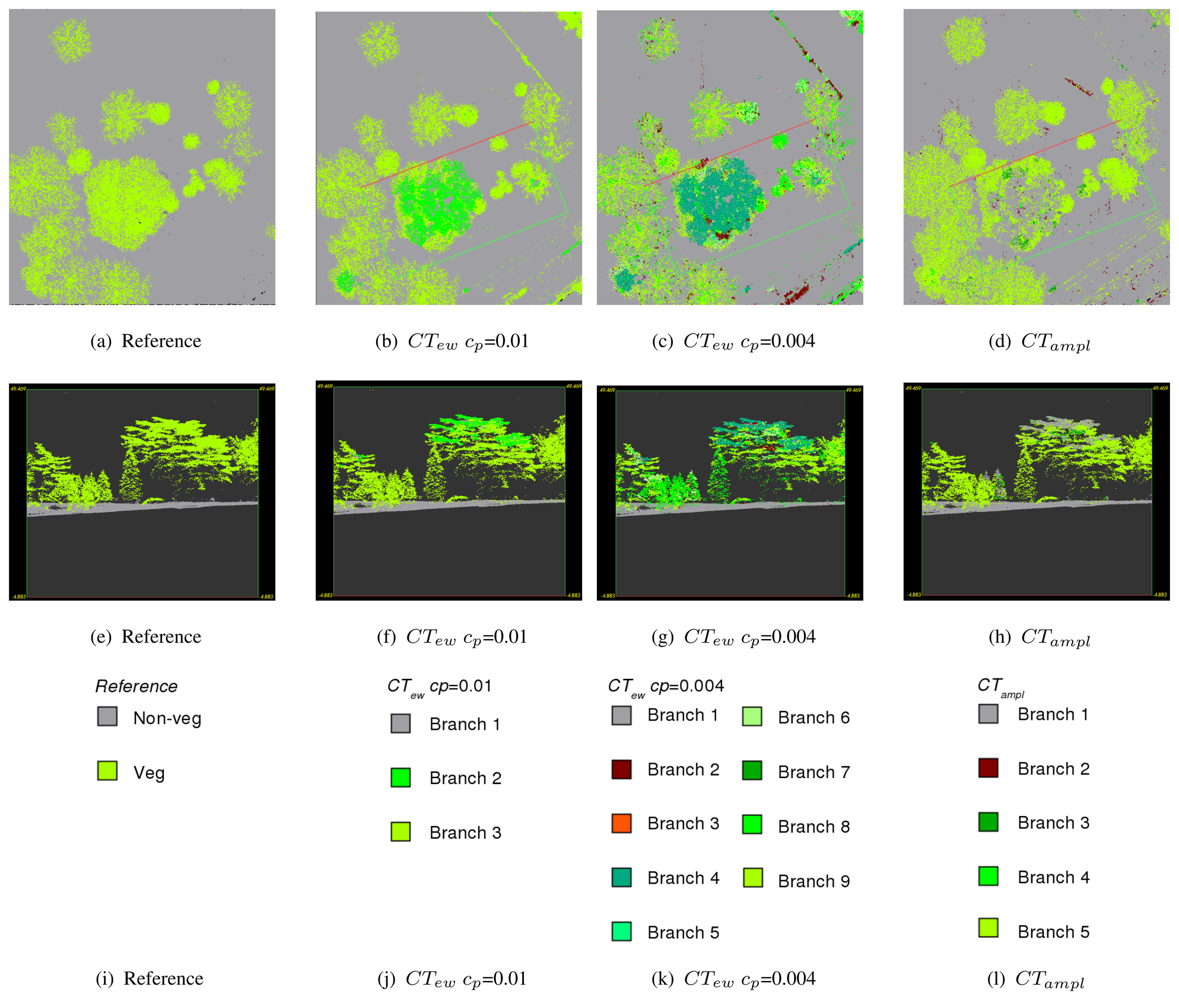

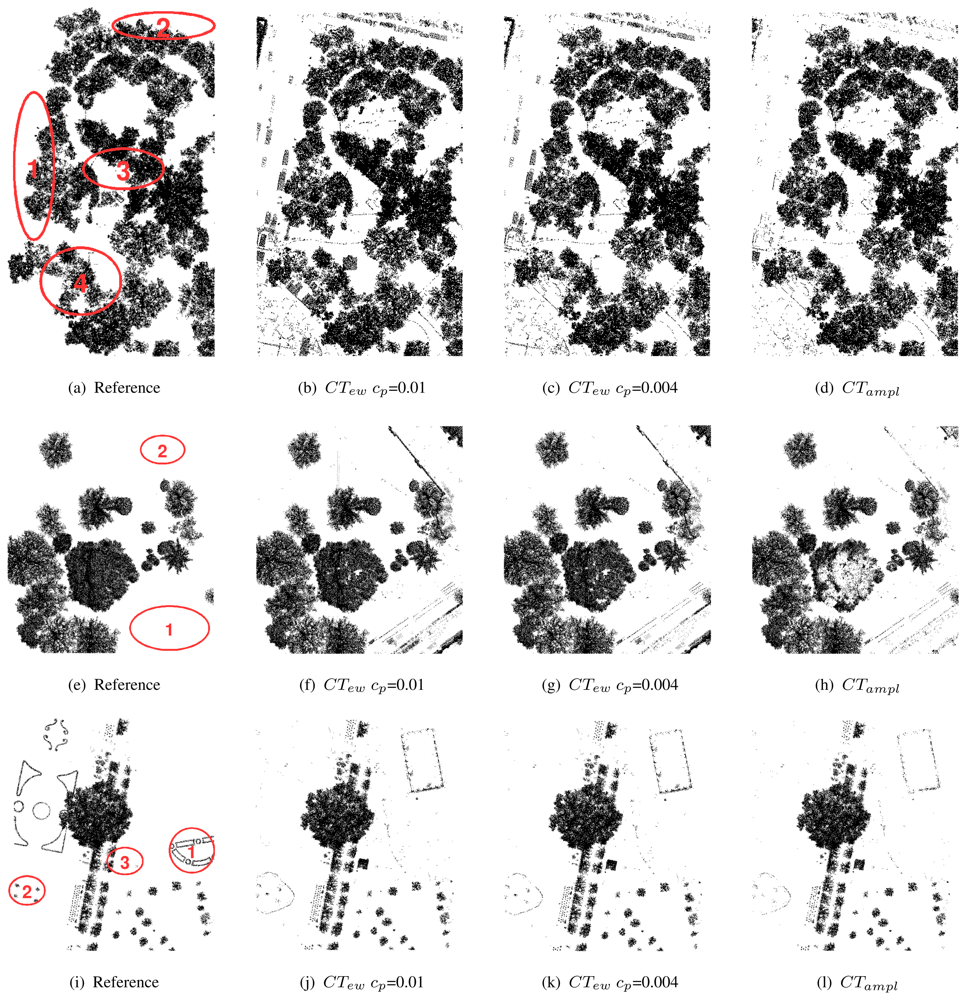

6.1 Segmentation

6.2 Interpretation of classification tree branches

6.3 Error assessment on the vegetation class

7 Conclusion

Acknowledgments

References

- Kraus, K.; Pfeifer, N. Determination of terrain models in wooded areas with airborne laser scanner data. ISPRS Journal of Photogrammetry and Remote Sensing 1998, 53, 193–203.

- Sithole, G.; Vosselman, G. Experimental comparison of filter algorithms for bare-earth extraction from airborne laser scanning point clouds. ISPRS Journal of Photogrammetry and Remote Sensing 2004, 59(1-2), 85–101.

- Koukal, T.; Schneider, W. (Eds.) Proceedings of the International Workshop on 3D Remote Sensing in Forestry, Vienna, Austria; 2006.

- Rönnholm, P.; Hyyppä, H.; Hyyppä, J. (Eds.) Proceedings of the ISPRS Workshop 'Laser Scanning 2007 and SilviLaser 2007', volume 36 of International Archives of Photogrammetry, Remote Sensing and Spatial Information Sciences, Espoo, Finland; 2007.

- Rottensteiner, F.; Trinder, J.; Clode, S.; Kubik, K. Building detection by fusion of airborne laser scanner data and multi-spectral images: Performance evaluation and sensitivity analysis. ISPRS Journal of Photogrammetry and Remote Sensing 2007, 62, 135–149.

- Sohn, G.; Dowman, I. Data fusion of high-resolution satellite imagery and lidar data for automatic building extraction. ISPRS Journal of Photogrammetry and Remote Sensing 2007, 62, 43–63.

- Wagner, W.; Hollaus, M.; Briese, C.; Ducic, V. 3D vegetation mapping using small-footprint full-waveform airborne laser scanners. International Journal of Remote Sensing 2008, 29(5), 1433–1452.

- Blaschke, T.; Lang, S.; Hay, G. (Eds.) In Object-based image analysis, spatial concepts for knowledge-driven remote sensing applications; Lecture Notes in Geoinformation and Cartography. Springer; in press.

- Rutzinger, M.; Höfle, B.; Pfeifer, N. In Object-based image analysis - spatial concepts for knowledge-driven remote sensing applications; chapter Object detection in airborne laser scanning data - an integrative approach on object-based image and point cloud analysis. Lecture Notes in Geoinformation and Cartography. Springer; in press.

- Höfle, B.; Geist, T.; Rutzinger, M.; Pfeifer, N. Glacier surface segmentation using airborne laser scanning point cloud and intensity data. In International Archives of Photogrammetry, Remote Sensing and Spatial Information Sciences; volume 36 of Part 3/W52; pp. 195–200. Espoo, Finland, 2007.

- Ullrich, A.; Hollaus, M.; Briese, C.; Wagner, W.; Doneus, M. Utilization of full-waveform data in airborne laser scanning applications. In Proceedings of SPIE: Laser Radar Technology and Applications XII; Turner, M. D., Kamerman, G. W., Eds.; volume 6550, 2007.

- Weinacker, H.; Koch, B.; Weinacker, R. Treesvis - a software system for simultaneous 3D-real-time visualisation of DTM, DSM, laser raw data, multispectral data, simple tree and building models. International Archives of the Photogrammetry, Remote Sensing and Spatial Information Sciences 2004, volume 36, 90–95.

- Vosselman, G.; Kessels, P.; Gorte, B. The utilisation of airborne laser scanning for three-dimensional mapping. International Journal of Applied Earth Observation and Geoinformation 2005, 6(3-4), 177–186.

- Haala, N.; Brenner, C. Extraction of buildings and trees in urban environments. ISPRS Journal of Photogrammetry and Remote Sensing 1999, 54(2-3), 130–137.

- Iovan, C.; Boldo, D.; Cord, M. Automatic extraction of urban vegetation structures from high resolution imagery and digital elevation model. Joint IEEE-GRSS/ISPRS Workshop on Remote Sensing and Data Fusion over Urban Areas, Urban 2007, page on CD. Paris, France; 2007.

- Matikainen, L.; Kaartinen, H.; Hyyppä, J. Classification tree based building detection from laser scanner and aerial image data. In International Archives of Photogrammetry, Remote Sensing and Spatial Information Sciences; volume 36-3, Espoo, Finland, 2007.

- Benz, U.C.; Hofmann, P.; Willhauck, G.; Lingenfelder, I.; Heynen, M. Multiresolution, object-oriented fuzzy analysis of remote sensing data for GIS-ready information. ISPRS Journal of Photogrammetry and Remote Sensing 2004, 58(3-4), 239–258.

- Filin, S.; Pfeifer, N. Segmentation of airborne laser scanning data using a slope adaptive neighborhood. ISPRS Journal of Photogrammetry and Remote Sensing 2006, 60(2), 71–80.

- Sithole, G.; Vosselman, G. Automatic structure detection in a point-cloud of an urban landscape. In 2nd GRSS/ISPRS Joint Workshop on “Data Fusion and Remote Sensing over Urban Areas”; page on CD; Berlin, Germany, 2003.

- Melzer, T. Non-parametric segmentation of ALS point clouds using mean shift. Journal of Applied Geodesy 2007, 1, 159–170.

- Straatsma, M.W.; Baptist, M. J. Floodplain roughness parametrization using airborne laser scanning and spectral remote sensing. Remote Sensing of Environment 2008, 112, 1062–1080.

- Gross, H.; Jutzi, B.; Thoennessen, U. Segmentation of tree regions using data of a full-waveform laser. International Archives of Photogrammetry, Remote Sensing and Spatial Information Sciences 2007, volume 36, 57–62.

- Reitberger, J.; Heurich, M.; Krzystek, P.; Stilla, U. Single tree detection in forest areas with high-density lidar data. In International Archives of Photogrammetry, Remote Sensing and Spatial Information Sciences; volume 36, pp. 139–143. Espoo, Finland, 2007.

- Wagner, W.; Ullrich, A.; Ducic, V.; Melzer, T.; Studnicka, N. Gaussian decomposition and calibration of a novel small-footprint full-waveform digitising airborne laser scanner. ISPRS Journal of Photogrammetry and Remote Sensing 2006, 60(2), 100–112.

- Jutzi, B.; Stilla, U. Waveform processing of laser pulses for reconstruction of surfaces in urban areas. In International Archives of Photogrammetry, Remote Sensing and Spatial Information Sciences; volume 36, page on CD; Tempe, Arizona, USA, 2005.

- Rutzinger, M.; Höfle, B.; Pfeifer, N. Detection of high urban vegetation with airborne laser scanning data. In Proceedings ForestSat 2007; page on CD; Montpellier, France, 2007.

- Höfle, B.; Pfeifer, N. Correction of laser scanning intensity data, data and model-driven approaches. ISPRS Journal of Photogrammetry and Remote Sensing 2007, 62(6), 415–433.

- Briese, C.; Höfle, B.; Lehner, H.; Wagner, W.; Pfennigbauer, M.; Ullrich, A. Calibration of full-waveform airborne laser scanning data for object classification. In Proceedings of SPIE conference Defense and Security 2008; volume 6950, Orlando, Florida, USA, 2008.

- Breiman, L.; Friedman, J.H.; Olshen, R. A.; Stone, C. J. Classification and regression trees; Chapman and Hall, 1984.

- Therneau, T. M.; Atkinson, E.J. An introduction to recursive partitioning using the rpart routines; Technical Report 61; Department of Health Science Research, Mayo Clinic: Rochester, MN, 1997.

- Ripley, B. D. Pattern Recognition and Neural Networks; Cambridge University Press, 1996.

- Maindonald, J.; Braun, J. Data analysis and graphics using R - An example-based approach, second edition; Cambridge Series in Statistical and Probabilistic Mathematics; 2007.

- R Development Core Team. R: A Language and Environment for Statistical Computing; R Foundation for Statistical Computing: Vienna, Austria, 2008; ISBN 3-900051-07-0.

- Shufelt, J.A. Performance evaluation and analysis of monocular building extraction from aerial imagery. IEEE Transactions on Pattern Analysis and Machine Intelligence 1999, 21(4), 311–326.

- Ducic, V.; Hollaus, M.; Ullrich, A.; Wagner, W.; Melzer, T. 3D vegetation mapping and classification using full-waveform laser scanning. In Workshop on 3D Remote Sensing in Forestry; pp. 211–217. Vienna, Austria, 2006.

- Höfle, B.; Pfeifer, N.; Ressl, C.; Rutzinger, M.; Vetter, M. Water surface mapping using airborne laser scanning elevation and signal amplitude data. In Geophysical Abstracts; number 10, Vienna, Austria, 2008.

- Footnote 5: A manual reference classification of laser scanner data in combination with orthophotos is used by Sithole and Vosselman [2] to evaluate the derivation of DTMs.

- Footnote 7: The absence of single echoes would result in division by 0, which is considered in the implementation.

| Parameter | Value |
|---|---|
| Measurement range | 30 m to 1800 m at target reflectivity of 60%; 30 m to 1200 m at target reflectivity of 20% |
| Ranging accuracy | 20 mm |
| Multi-target resolution | down to 0.5 m |
| Measurement rate | 240,000 measurements/s (burst rate); up to 160,000 measurements/s (average) |
| Scan range | 45° (up to 60°) |
| Scan speed | up to 160 lines/s |
| Time stamping | resolution 1 μs, unambiguous range > 1 week |
| Laser safety | laser class 1, wavelength near infrared |

| Parameter | Settings |
|---|---|
| Seed criterion (roughness) | All points, descending |
| Growing criterion (echo width) | 1 ns (controls dynamic tolerance depending on echo width of starting seed point) |
| Nearest neighbors (k) | 5 points |
| 3D maximum growing distance (dist) | 0.5 m |
| Minimum segment size (minArea) | 1 point |
| Maximum segment size (maxArea) | 100,000 points |
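The settings above can be read as the inputs of a seeded region-growing pass. The following is a minimal sketch under stated assumptions, not the authors' implementation: points are assumed to be `(x, y, z, echo_width, roughness)` tuples, neighbors are found by brute force, and the growing criterion is interpreted as a fixed tolerance around the echo width of the starting seed point. The function name `grow_segments` and the argument names `tol`, `min_area` and `max_area` are hypothetical.

```python
import math


def grow_segments(points, k=5, dist=0.5, tol=1.0, min_area=1, max_area=100_000):
    """Seeded region growing over (x, y, z, echo_width, roughness) tuples.

    Returns one segment id per point (-1 means unassigned).
    """
    n = len(points)
    labels = [-1] * n
    # Seed criterion: all points, ordered by roughness, descending.
    seeds = sorted(range(n), key=lambda i: points[i][4], reverse=True)

    def neighbors(i):
        # Brute-force search: the k nearest points within the 3D distance limit.
        candidates = []
        for j in range(n):
            if j != i:
                d = math.dist(points[i][:3], points[j][:3])
                if d <= dist:
                    candidates.append((d, j))
        candidates.sort()
        return [j for _, j in candidates[:k]]

    next_id = 0
    for seed in seeds:
        if labels[seed] != -1:
            continue  # already part of an earlier segment
        seed_width = points[seed][3]
        labels[seed] = next_id
        members = [seed]
        stack = [seed]
        while stack and len(members) < max_area:
            current = stack.pop()
            for j in neighbors(current):
                # Assumed growing criterion: echo width within tol of the seed's.
                if labels[j] == -1 and abs(points[j][3] - seed_width) <= tol:
                    labels[j] = next_id
                    members.append(j)
                    stack.append(j)
                    if len(members) >= max_area:
                        break
        if len(members) < min_area:
            for j in members:
                labels[j] = -1  # discard segments that are too small
        else:
            next_id += 1
    return labels
```

With the defaults taken from the table (k = 5, dist = 0.5 m, tolerance 1 ns), three neighboring points with similar echo widths end up in one segment, while a nearby point with a much wider echo and a distant point each form their own segment. The brute-force neighbor search is quadratic in the number of points; a spatial index would be needed for point clouds of realistic size.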

| CT | End node with class | SQL WHERE rule | Amount of echoes, RP [%] | Amount of echoes, BG [%] | Amount of echoes, VG [%] |
|---|---|---|---|---|---|
| CT_ew (cp = 0.01) | branch1: non-veg | density ratio_mean >= 0.761 AND echo ratio_mean < 0.078 | 65.29 | 86.17 | 67.48 |
| | branch2: veg | density ratio_mean >= 0.761 AND echo ratio_mean >= 0.078 | 1.03 | 0.18 | 5.27 |
| | branch3: veg | density ratio_mean < 0.761 | 33.68 | 13.65 | 27.25 |
| CT_ew (cp = 0.004) | branch1: non-veg | density ratio_mean >= 0.761 AND echo ratio_mean < 0.078 | 65.29 | 86.17 | 67.48 |
| | branch2: non-veg | density ratio_mean < 0.761 AND echo ratio_mean < 0.6335 AND density ratio_mean >= 0.4765 AND echo width_mean < 5.769 AND echo width_SD < 0.2455 AND echo ratio_mean < 0.423 | 0.98 | 0.13 | 0.96 |
| | branch3: non-veg | density ratio_mean < 0.761 AND echo ratio_mean < 0.6335 AND density ratio_mean < 0.4765 AND roughness_mean < 0.1505 | 0.07 | 0.02 | 0.06 |
| | branch4: veg | density ratio_mean >= 0.761 AND echo ratio_mean >= 0.078 | 1.03 | 0.18 | 5.27 |
| | branch5: veg | density ratio_mean < 0.761 AND echo ratio_mean < 0.6335 AND density ratio_mean >= 0.4765 AND echo width_mean < 5.769 AND echo width_SD < 0.2455 AND echo ratio_mean >= 0.423 | 0.01 | 0 | 0.12 |
| | branch6: veg | density ratio_mean < 0.761 AND echo ratio_mean < 0.6335 AND density ratio_mean >= 0.4765 AND echo width_mean < 5.769 AND echo width_SD >= 0.2455 | 0.98 | 0.06 | 1.82 |
| | branch7: veg | density ratio_mean < 0.761 AND echo ratio_mean < 0.6335 AND density ratio_mean >= 0.4765 AND echo width_mean >= 5.769 | 0.18 | 0.02 | 0.11 |
| | branch8: veg | density ratio_mean < 0.761 AND echo ratio_mean < 0.6335 AND density ratio_mean < 0.4765 AND roughness_mean >= 0.1505 | 10.13 | 2.05 | 6.65 |
| | branch9: veg | density ratio_mean < 0.761 AND echo ratio_mean >= 0.6335 | 21.32 | 11.37 | 17.52 |
| CT_amplitude | branch1: non-veg | amplitude_mean >= 43.64 AND echo ratio_mean < 0.391 | 67.07 | 86.31 | 73.72 |
| | branch2: non-veg | amplitude_mean < 43.64 AND density ratio_mean >= 0.9195 AND echo ratio_mean < 0.056 | 0.56 | 0.39 | 0.2 |
| | branch3: veg | amplitude_mean >= 43.64 AND echo ratio_mean >= 0.391 | 0.75 | 0.36 | 0.72 |
| | branch4: veg | amplitude_mean < 43.64 AND density ratio_mean >= 0.9195 AND echo ratio_mean >= 0.056 | 0.1 | 0.13 | 0.13 |
| | branch5: veg | amplitude_mean < 43.64 AND density ratio_mean < 0.9195 | 31.52 | 12.8 | 25.24 |
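Each end node in the table is a conjunction of threshold tests on segment-level features, so a branch can be evaluated directly in code. As a minimal sketch (not the authors' implementation), the function below encodes the three end nodes of the echo-width tree with cp = 0.01. The thresholds are copied from the table; the function name `classify_segment` and its argument names are hypothetical.

```python
def classify_segment(density_ratio_mean, echo_ratio_mean):
    """Return (branch, class) for one segment, following the echo-width
    classification tree with cp = 0.01 as listed in the table."""
    if density_ratio_mean >= 0.761:
        if echo_ratio_mean < 0.078:
            return ("branch1", "non-veg")
        return ("branch2", "veg")
    # A mean density ratio below 0.761 leads to vegetation regardless
    # of the echo ratio in this (pruned) tree.
    return ("branch3", "veg")


# Hypothetical feature values for three segments.
for features in [(0.95, 0.01), (0.80, 0.30), (0.40, 0.00)]:
    print(features, classify_segment(*features))
```

The larger trees in the table follow the same pattern with deeper nesting; the equivalent SQL form is a `WHERE` clause joining the listed conditions with `AND`.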

| | RP | BG | VG |
|---|---|---|---|
| Number of | | | |
| total points | 549,944 | 559,963 | 537,945 |
| points (outlier removed) | 549,330 | 559,784 | 537,882 |
| non-vegetation points in reference | 374,435 | 393,282 | 463,225 |
| vegetation points in reference | 175,509 | 166,681 | 74,720 |
| Overall accuracy [%] | | | |
| CT_ew (cp = 0.01) | 96.44 | 96.75 | 97.84 |
| CT_ew (cp = 0.004) | 97.23 | 96.52 | 97.73 |
| CT_ampl | 97.90 | 94.18 | 98.09 |
| Average accuracy [%] | | | |
| CT_ew (cp = 0.01) | 97.10 | 96.96 | 95.19 |
| CT_ew (cp = 0.004) | 97.57 | 97.18 | 95.19 |
| CT_ampl | 97.80 | 91.26 | 95.13 |
| Correctness (class vegetation) [%] | | | |
| CT_ew (cp = 0.01) | 91.04 | 92.15 | 92.90 |
| CT_ew (cp = 0.004) | 93.52 | 90.48 | 92.06 |
| CT_ampl | 97.53 | 95.97 | 95.12 |
| Completeness (class vegetation) [%] | | | |
| CT_ew (cp = 0.01) | 98.92 | 97.48 | 91.52 |
| CT_ew (cp = 0.004) | 98.51 | 98.81 | 91.66 |
| CT_ampl | 97.53 | 84.07 | 91.03 |
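For readers who want to compute comparable figures on their own data, the sketch below derives overall accuracy, completeness and correctness for the vegetation class from point-wise confusion counts. It assumes the conventional definitions (completeness = TP / (TP + FN), correctness = TP / (TP + FP)), which may differ in detail from those used for the table; average accuracy is left out because its definition is not given here. The function name and the example counts are hypothetical.

```python
def vegetation_scores(tp, fp, fn, tn):
    """Scores in percent from point-wise confusion counts.

    tp: vegetation points classified as vegetation
    fp: non-vegetation points classified as vegetation
    fn: vegetation points classified as non-vegetation
    tn: non-vegetation points classified as non-vegetation
    """
    def percent(numerator, denominator):
        # Guard against empty classes instead of dividing by zero.
        return 100.0 * numerator / denominator if denominator else float("nan")

    return {
        "overall_accuracy": percent(tp + tn, tp + fp + fn + tn),
        "completeness": percent(tp, tp + fn),
        "correctness": percent(tp, tp + fp),
    }


# Hypothetical counts, not taken from the paper.
print(vegetation_scores(tp=90, fp=10, fn=10, tn=890))
```

Completeness penalizes missed vegetation points and correctness penalizes false vegetation detections, which is why the two can diverge even when overall accuracy stays high on a test site dominated by non-vegetation points.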

© 2008 by the authors; licensee Molecular Diversity Preservation International, Basel, Switzerland. This article is an open-access article distributed under the terms and conditions of the Creative Commons Attribution license (http://creativecommons.org/licenses/by/3.0/).

Rutzinger, M.; Höfle, B.; Hollaus, M.; Pfeifer, N. Object-Based Point Cloud Analysis of Full-Waveform Airborne Laser Scanning Data for Urban Vegetation Classification. Sensors 2008, 8, 4505-4528. https://doi.org/10.3390/s8084505
