Target Recognition of SAR Images via Matching Attributed Scattering Centers with Binary Target Region

Abstract

1. Introduction

2. Extraction of Binary Target Region and ASCs

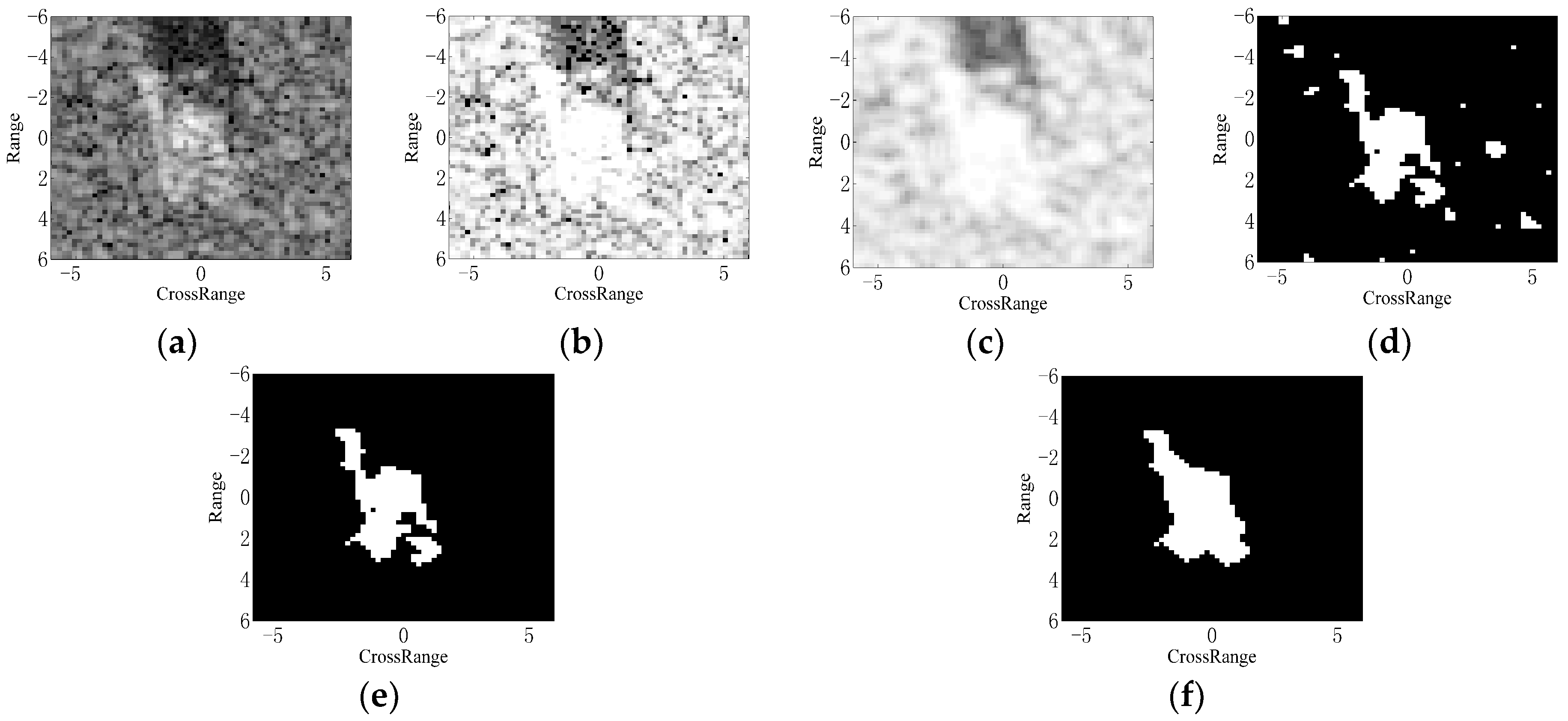

2.1. Target Segmentation

2.2. ASC Extraction

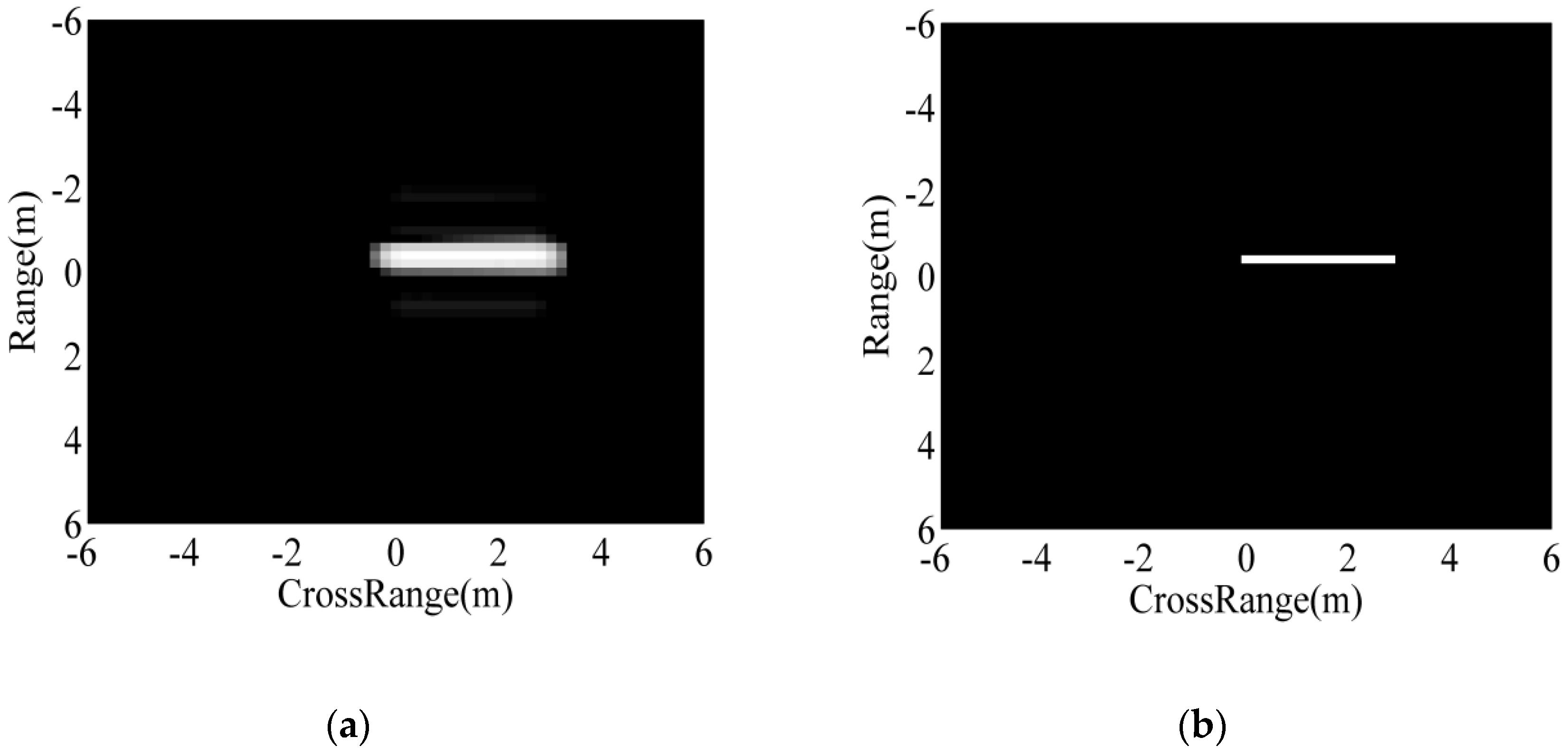

2.2.1. ASC Model

2.2.2. ASC Extraction Based on Sparse Representation

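Algorithm 1. ASC extraction based on sparse representation

Input: the vectorized SAR image $\mathbf{s}$, noise level $\varepsilon$, and overcomplete dictionary $\mathbf{D}$.

Initialization: initial parameters of the ASCs $\hat{\Theta} = \emptyset$, reconstruction error $\mathbf{r} = \mathbf{s}$, counter $t = 1$.

1. while $\|\mathbf{r}\|_2^2 > \varepsilon$ do

2. Calculate correlation: $\mathbf{C}(\Theta) = \mathbf{D}^{H}(\Theta)\,\mathbf{r}$, where $(\cdot)^{H}$ represents conjugate transpose.

3. Estimate parameters: $\hat{\theta}_t = \arg\max_{\theta} |\mathbf{C}(\theta)|$, $\hat{\Theta} = \hat{\Theta} \cup \hat{\theta}_t$.

4. Estimate amplitudes: $\hat{\boldsymbol{\sigma}} = \mathbf{D}^{\dagger}(\hat{\Theta})\,\mathbf{s}$, where $(\cdot)^{\dagger}$ represents the Moore-Penrose pseudo-inverse and $\mathbf{D}(\hat{\Theta})$ denotes the overcomplete dictionary built from the parameter set $\hat{\Theta}$.

5. Update residual: $\mathbf{r} = \mathbf{s} - \mathbf{D}(\hat{\Theta})\,\hat{\boldsymbol{\sigma}}$.

6. Update counter: $t = t + 1$.

Output: the estimated parameter set $\hat{\Theta}$.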
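The greedy loop of Algorithm 1 follows the pattern of orthogonal matching pursuit. The following is a minimal sketch of that pattern, assuming a generic complex dictionary with unit-norm columns in place of the ASC dictionary (building the dictionary from the ASC model is outside this sketch). The function name `omp_extract`, the `max_atoms` cap, and the toy data are illustrative assumptions, not the authors' implementation.

```python
import numpy as np

def omp_extract(s, D, eps, max_atoms=50):
    """Greedy sparse recovery in the style of Algorithm 1 (an OMP sketch).

    s: vectorized complex measurement, shape (n,)
    D: dictionary with one atom per column, shape (n, m)
    eps: stop once the squared residual norm is no larger than eps
    max_atoms: hypothetical safety cap on the number of selected atoms
    """
    support = []                        # indices of the selected atoms
    amps = np.zeros(0, dtype=complex)   # amplitudes of the selected atoms
    r = s.astype(complex)               # residual starts as the signal
    while np.vdot(r, r).real > eps and len(support) < max_atoms:
        corr = D.conj().T @ r           # correlation of every atom with the residual
        k = int(np.argmax(np.abs(corr)))
        if k in support:                # no new atom reduces the residual; stop
            break
        support.append(k)
        # Least-squares amplitudes on the selected atoms via the pseudo-inverse.
        amps = np.linalg.pinv(D[:, support]) @ s
        r = s - D[:, support] @ amps    # update the residual
    return support, amps

# Toy data (assumed): a random unit-norm dictionary and a 2-sparse signal.
rng = np.random.default_rng(0)
n, m = 64, 128
D = rng.standard_normal((n, m)) + 1j * rng.standard_normal((n, m))
D /= np.linalg.norm(D, axis=0)
true_support = [5, 77]
true_amps = np.array([2.0 + 1.0j, -1.5j])
s = D[:, true_support] @ true_amps

support, amps = omp_extract(s, D, eps=1e-10)
```

In this sketch each selected column index stands in for one ASC parameter set; with a real ASC dictionary, every column would correspond to one sampled combination of scattering-center parameters.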

3. Matching ASCs with Binary Target Region

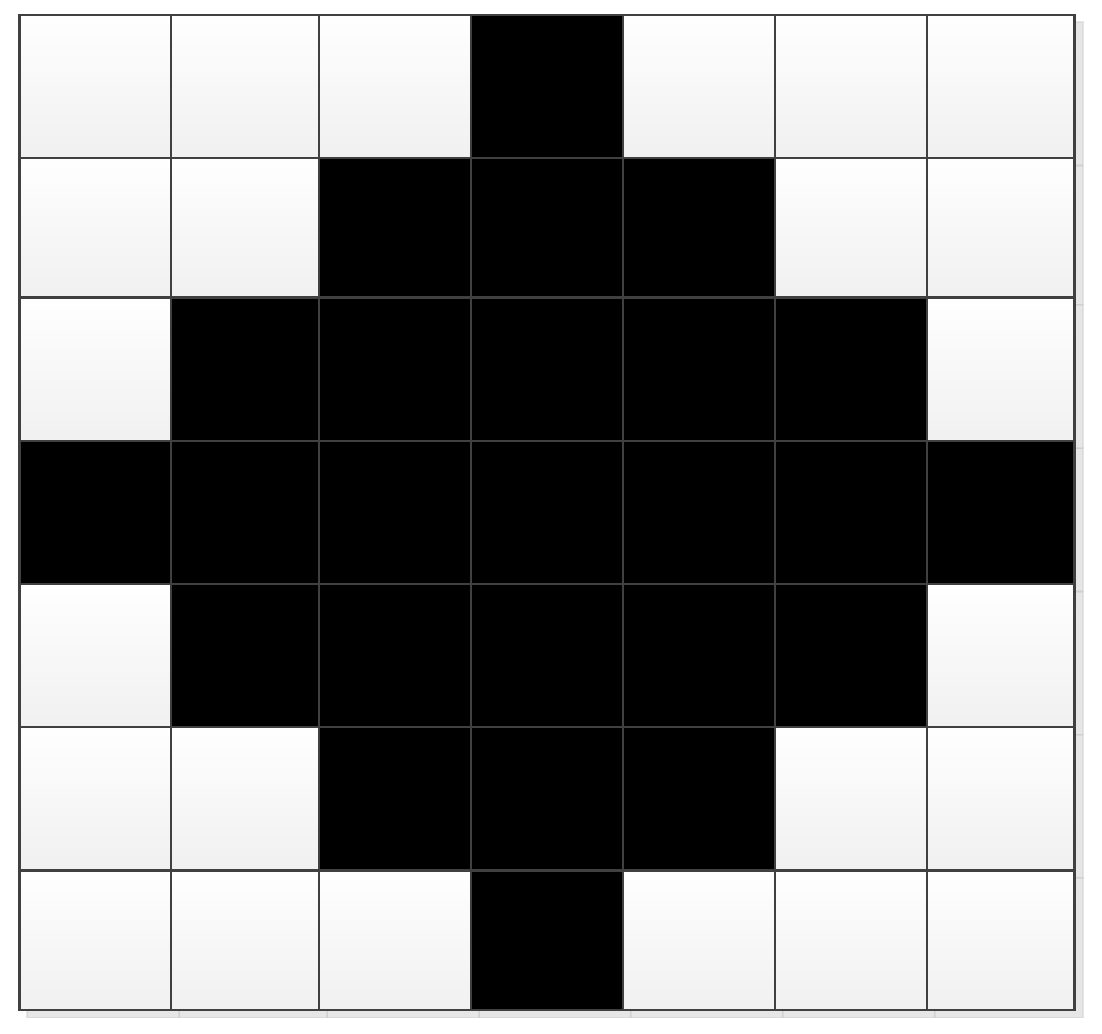

3.1. Region Prediction by ASC

3.2. Region Matching

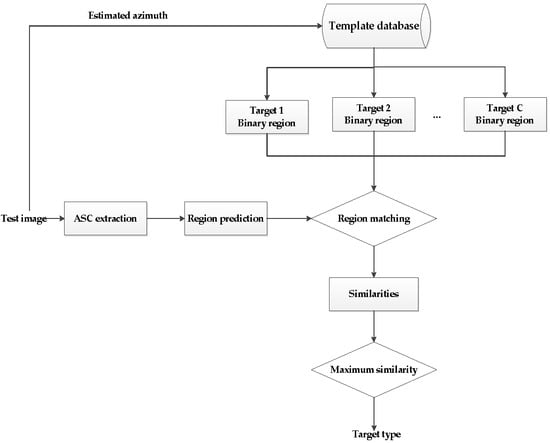

3.3. Target Recognition

- (1) Estimate the ASCs of the test image and predict them as binary regions.

- (2) Estimate the azimuth of the test image to select the corresponding template images.

- (3) Extract the binary target regions of all the selected template samples.

- (4) Match the predicted regions to each of the template regions and calculate the similarity.

- (5) Assign the target label of the template class that achieves the maximum similarity.
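Steps (2) to (5) can be sketched as a nearest-template search. The following is a minimal illustration, assuming the predicted region of the test image (step 1) and the template regions (step 3) are already available as boolean masks. The overlap ratio used as the similarity, the azimuth `window`, and all names are hypothetical stand-ins, not the similarity measure defined in Section 3.2.

```python
import numpy as np

def overlap_similarity(predicted, template):
    """Hypothetical similarity: share of predicted-region pixels inside the template region."""
    total = predicted.sum()
    if total == 0:
        return 0.0
    return float(np.logical_and(predicted, template).sum() / total)

def classify(predicted, azimuth, templates, window=5.0):
    """Select templates near the estimated azimuth, match them, and return the best label.

    templates: list of (label, azimuth_deg, binary_region) tuples
    window: hypothetical half-width of the azimuth search interval, in degrees
    """
    best_label, best_score = None, -1.0
    for label, az, region in templates:
        # Circular azimuth difference, folded into [0, 180] degrees.
        diff = abs((az - azimuth + 180.0) % 360.0 - 180.0)
        if diff > window:
            continue                    # template lies outside the azimuth interval
        score = overlap_similarity(predicted, region)
        if score > best_score:
            best_label, best_score = label, score
    return best_label, best_score

# Toy masks (assumed data): 8 x 8 images with square target regions.
predicted = np.zeros((8, 8), dtype=bool)
predicted[2:6, 2:6] = True              # 16 predicted pixels

region_a = np.zeros((8, 8), dtype=bool)
region_a[2:6, 2:5] = True               # covers 12 of the 16 predicted pixels
region_b = np.zeros((8, 8), dtype=bool)
region_b[0:4, 0:4] = True               # covers 4 of the 16 predicted pixels
region_c = predicted.copy()             # perfect overlap, but far away in azimuth

templates = [
    ("class_a", 30.0, region_a),
    ("class_b", 32.0, region_b),
    ("class_c", 120.0, region_c),
]
label, score = classify(predicted, azimuth=31.0, templates=templates)
```

Restricting the search to templates near the estimated azimuth is what keeps `class_c` out of the comparison here, even though its region overlaps the prediction completely.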

4. Experiment on MSTAR Dataset

4.1. Experimental Setup

4.1.1. MSTAR Dataset

4.1.2. Reference Methods

4.2. Experiment under SOC

4.3. Experiment under EOCs

4.3.1. EOC 1-Configuration Variants

4.3.2. EOC 2-Large Depression Angle Variation

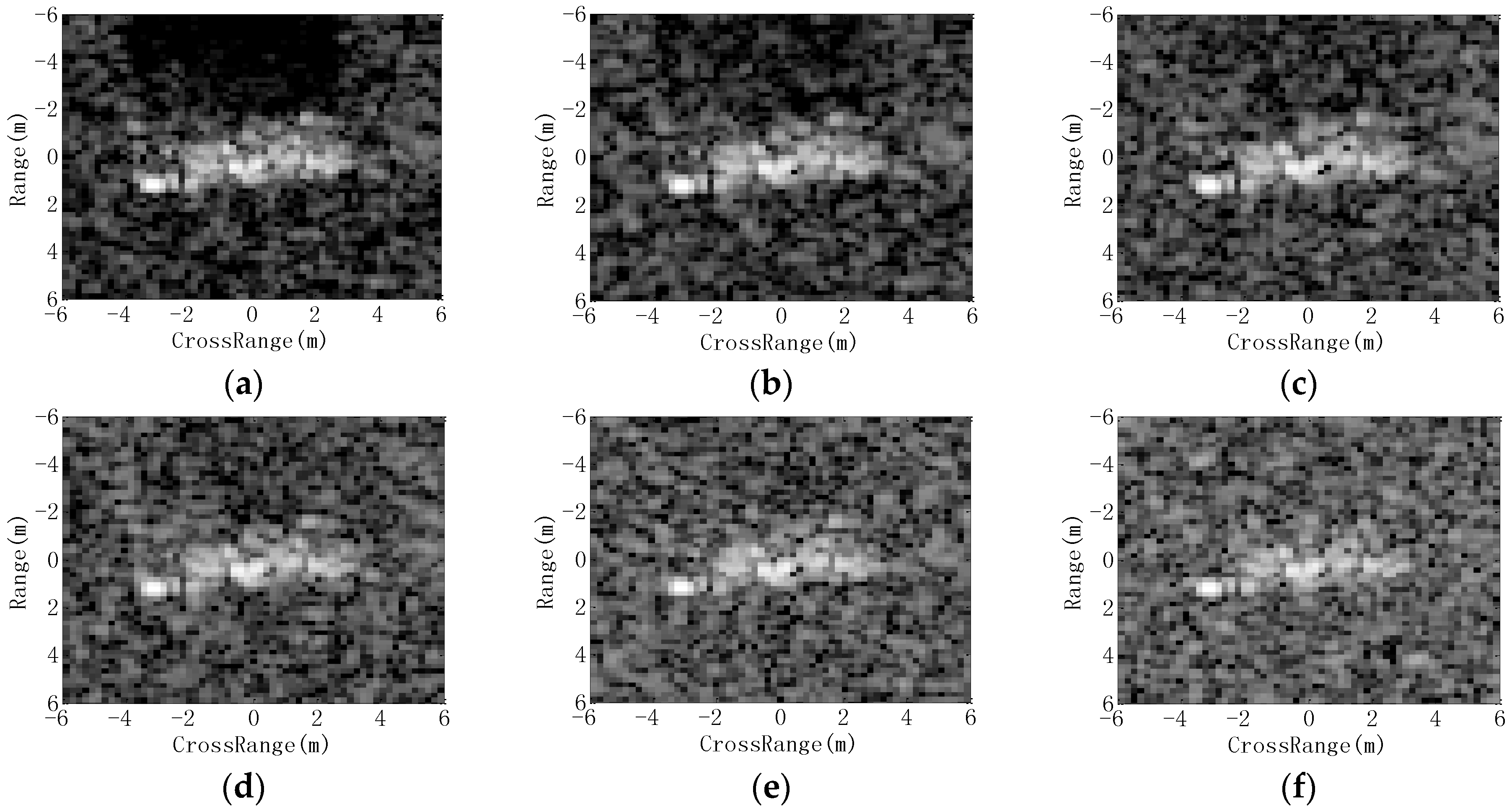

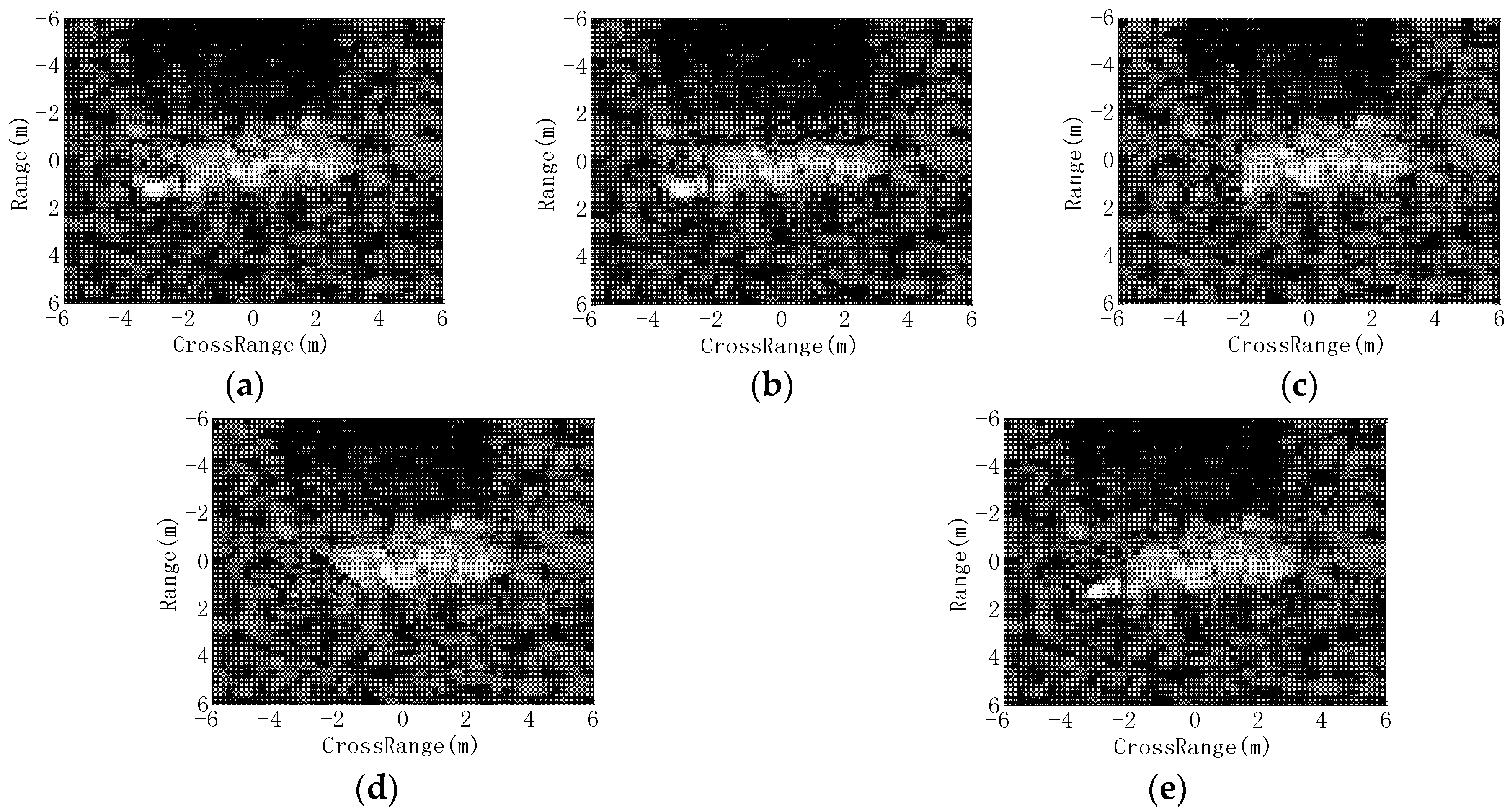

4.3.3. EOC 3-Noise Contamination

4.3.4. EOC 4-Partial Occlusion

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- El-Darymli, K.; Gill, E.W.; McGuire, P.; Power, D.; Moloney, C. Automatic target recognition in synthetic aperture radar imagery: A state-of-the-art review. IEEE Access 2016, 4, 6014–6058.

- Park, J.; Park, S.; Kim, K. New discrimination features for SAR automatic target recognition. IEEE Geosci. Remote Sens. Lett. 2013, 10, 476–480.

- Ding, B.Y.; Wen, G.J.; Ma, C.H.; Yang, X.L. Target recognition in synthetic aperture radar images using binary morphological operations. J. Appl. Remote Sens. 2016, 10, 046006.

- Amoon, M.; Rezai-rad, G. Automatic target recognition of synthetic aperture radar (SAR) images based on optimal selection of Zernike moment features. IET Comput. Vis. 2014, 8, 77–85.

- Anagnostopoulos, G.C. SVM-based target recognition from synthetic aperture radar images using target region outline descriptors. Nonlinear Anal. 2009, 71, e2934–e2939.

- Ding, B.Y.; Wen, G.J.; Ma, C.H.; Yang, X.L. Decision fusion based on physically relevant features for SAR ATR. IET Radar Sonar Navig. 2017, 11, 682–690.

- Papson, S.; Narayanan, R.M. Classification via the shadow region in SAR imagery. IEEE Trans. Aerosp. Electron. Syst. 2012, 48, 969–980.

- Yuan, X.; Tang, T.; Xiang, D.L.; Li, Y.; Su, Y. Target recognition in SAR imagery based on local gradient ratio pattern. Int. J. Remote Sens. 2014, 35, 857–870.

- Mishra, A.K. Validation of PCA and LDA for SAR ATR. In Proceedings of the 2008 IEEE Region 10 Conference, Hyderabad, India, 19–21 November 2008; pp. 1–6.

- Cui, Z.Y.; Cao, Z.J.; Yang, J.Y.; Feng, J.L.; Ren, H.L. Target recognition in synthetic aperture radar via non-negative matrix factorization. IET Radar Sonar Navig. 2015, 9, 1376–1385.

- Huang, Y.L.; Pei, J.F.; Yang, J.Y.; Liu, X. Neighborhood geometric center scaling embedding for SAR ATR. IEEE Trans. Aerosp. Electron. Syst. 2014, 50, 180–192.

- Yu, M.T.; Dong, G.G.; Fan, H.Y.; Kuang, G.Y. SAR target recognition via local sparse representation of multi-manifold regularized low-rank approximation. Remote Sens. 2018, 10, 211.

- Liu, X.; Huang, Y.L.; Pei, J.F.; Yang, J.Y. Sample discriminant analysis for SAR ATR. IEEE Geosci. Remote Sens. Lett. 2014, 11, 2120–2124.

- Gerry, M.J.; Potter, L.C.; Gupta, I.J.; Merwe, A. A parametric model for synthetic aperture radar measurement. IEEE Trans. Antennas Propag. 1999, 47, 1179–1188.

- Potter, L.C.; Moses, R.L. Attributed scattering centers for SAR ATR. IEEE Trans. Image Process. 1997, 6, 79–91.

- Chiang, H.; Moses, R.L.; Potter, L.C. Model-based classification of radar images. IEEE Trans. Inf. Theory 2000, 46, 1842–1854.

- Ding, B.Y.; Wen, G.J.; Zhong, J.R.; Ma, C.H.; Yang, X.L. Robust method for the matching of attributed scattering centers with application to synthetic aperture radar automatic target recognition. J. Appl. Remote Sens. 2016, 10, 016010.

- Ding, B.Y.; Wen, G.J.; Huang, X.H.; Ma, C.H.; Yang, X.L. Target recognition in synthetic aperture radar images via matching of attributed scattering centers. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2017, 10, 3334–3347.

- Ding, B.Y.; Wen, G.J.; Zhong, J.R.; Ma, C.H.; Yang, X.L. A robust similarity measure for attributed scattering center sets with application to SAR ATR. Neurocomputing 2017, 219, 130–143.

- Zhao, Q.; Principe, J.C. Support vector machines for synthetic radar automatic target recognition. IEEE Trans. Aerosp. Electron. Syst. 2001, 37, 643–654.

- Liu, H.C.; Li, S.T. Decision fusion of sparse representation and support vector machine for SAR image target recognition. Neurocomputing 2013, 113, 97–104.

- Sun, Y.J.; Liu, Z.P.; Todorovic, S.; Li, J. Adaptive boosting for SAR automatic target recognition. IEEE Trans. Aerosp. Electron. Syst. 2007, 43, 112–125.

- Thiagarajan, J.J.; Ramamurthy, K.; Knee, P.P.; Spanias, A.; Berisha, V. Sparse representation for automatic target classification in SAR images. In Proceedings of the 2010 4th Communications, Control and Signal Processing (ISCCSP), Limassol, Cyprus, 3–5 March 2010.

- Song, H.B.; Ji, K.F.; Zhang, Y.S.; Xing, X.W.; Zou, H.X. Sparse representation-based SAR image target classification on the 10-class MSTAR data set. Appl. Sci. 2016, 6, 26.

- Chen, S.Z.; Wang, H.P.; Xu, F.; Jin, Y.Q. Target classification using the deep convolutional networks for SAR images. IEEE Trans. Geosci. Remote Sens. 2016, 47, 1685–1697.

- Wagner, S.A. SAR ATR by a combination of convolutional neural network and support vector machines. IEEE Trans. Aerosp. Electron. Syst. 2016, 52, 2861–2872.

- Ding, J.; Chen, B.; Liu, H.W.; Huang, M.Y. Convolutional neural network with data augmentation for SAR target recognition. IEEE Geosci. Remote Sens. Lett. 2016, 13, 364–368.

- Du, K.N.; Deng, Y.K.; Wang, R.; Zhao, T.; Li, N. SAR ATR based on displacement- and rotation-insensitive CNN. Remote Sens. Lett. 2016, 7, 895–904.

- Huang, Z.L.; Pan, Z.X.; Lei, B. Transfer learning with deep convolutional neural networks for SAR target classification with limited labeled data. Remote Sens. 2017, 9, 907.

- Zhou, J.X.; Shi, Z.G.; Cheng, X.; Fu, Q. Automatic target recognition of SAR images based on global scattering center model. IEEE Trans. Geosci. Remote Sens. 2011, 49, 3713–3729.

- Ding, B.Y.; Wen, G.J.; Huang, X.H.; Ma, C.H.; Yang, X.L. Target recognition in SAR images by exploiting the azimuth sensitivity. Remote Sens. Lett. 2017, 8, 821–830.

- Gonzalez, R.C.; Woods, R.E. Digital Image Processing, 3rd ed.; Prentice Hall: Englewood, NJ, USA, 2008.

- Liu, H.W.; Jiu, B.; Li, F.; Wang, Y.H. Attributed scattering center extraction algorithm based on sparse representation with dictionary refinement. IEEE Trans. Antennas Propag. 2017, 65, 2604–2614.

- Cong, Y.L.; Chen, B.; Liu, H.W.; Jiu, B. Nonparametric Bayesian attributed scattering center extraction for synthetic aperture radar targets. IEEE Trans. Signal Process. 2016, 64, 4723–4736.

- Dong, G.G.; Kuang, G.Y. Classification on the monogenic scale space: Application to target recognition in SAR image. IEEE Trans. Image Process. 2015, 24, 2527–2539.

- Chang, C.; Lin, C. LIBSVM: A library for support vector machine. ACM Trans. Intell. Syst. Technol. 2011, 2, 389–396.

- Doo, S.; Smith, G.; Baker, C. Target classification performance as a function of measurement uncertainty. In Proceedings of the 5th Asia-Pacific Conference on Synthetic Aperture Radar, Singapore, 1–4 September 2015.

- Ding, B.Y.; Wen, G.J. Target recognition of SAR images based on multi-resolution representation. Remote Sens. Lett. 2017, 8, 1006–1014.

- Ding, B.Y.; Wen, G.J. Sparsity constraint nearest subspace classifier for target recognition of SAR images. J. Visual Commun. Image Represent. 2018, 52, 170–176.

- Bhanu, B.; Lin, Y. Stochastic models for recognition of occluded targets. Pattern Recogn. 2003, 36, 2855–2873.

- Ding, B.Y.; Wen, G.J. Exploiting multi-view SAR images for robust target recognition. Remote Sens. 2017, 9, 1150.

- Lopera, O.; Heremans, R.; Pizurica, A.; Dupont, Y. Filtering speckle noise in SAS images to improve detection and identification of seafloor targets. In Proceedings of the International Waterside Security Conference, Carrara, Italy, 3–5 November 2010.

- Idol, T.; Haack, B.; Mahabir, R. Radar speckle reduction and derived texture measures for land cover/use classification: A case study. Geocarto Int. 2017, 32, 18–29.

- Qiu, F.; Berglund, J.; Jensen, J.R.; Thakkar, P.; Ren, D. Speckle noise reduction in SAR imagery using a local adaptive median filter. Gisci. Remote Sens. 2004, 41, 244–266.

| Target | Serial | Template/Training Set: Depr. | Template/Training Set: Number | Test Set: Depr. | Test Set: Number |
|---|---|---|---|---|---|
| BMP2 | 9563 | 17° | 233 | 15° | 195 |
| | 9566 | 17° | 232 | 15° | 196 |
| | c21 | 17° | 233 | 15° | 196 |
| BTR70 | c71 | 17° | 233 | 15° | 196 |
| T72 | 132 | 17° | 232 | 15° | 196 |
| | 812 | 17° | 231 | 15° | 195 |
| | S7 | 17° | 228 | 15° | 191 |
| ZSU23/4 | D08 | 17° | 299 | 15° | 274 |
| ZIL131 | E12 | 17° | 299 | 15° | 274 |
| T62 | A51 | 17° | 299 | 15° | 273 |
| BTR60 | k10yt7532 | 17° | 256 | 15° | 195 |
| D7 | 92v13015 | 17° | 299 | 15° | 274 |
| BRDM2 | E71 | 17° | 298 | 15° | 274 |
| 2S1 | B01 | 17° | 299 | 15° | 274 |

| Method | Feature | Classifier | Reference |
|---|---|---|---|
| SVM | Feature vector from PCA | SVM | [20] |
| SRC | Feature vector from PCA | SRC | [24] |
| A-ConvNet | Image intensities | CNN | [28] |
| ASC Matching | ASCs | One-to-one matching | [18] |
| Region Matching | Binary target region | Region matching | [3] |

| Target | BMP2 | BTR70 | T72 | T62 | BRDM2 | BTR60 | ZSU23/4 | D7 | ZIL131 | 2S1 | PCC (%) |
|---|---|---|---|---|---|---|---|---|---|---|---|
| BMP2 | 553 | 6 | 7 | 0 | 0 | 3 | 2 | 0 | 1 | 2 | 96.44 |
| BTR70 | 0 | 196 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 100 |
| T72 | 6 | 4 | 562 | 0 | 0 | 0 | 2 | 5 | 3 | 0 | 96.73 |
| T62 | 0 | 0 | 0 | 274 | 0 | 0 | 0 | 0 | 0 | 0 | 100 |
| BRDM2 | 0 | 0 | 0 | 0 | 274 | 0 | 0 | 0 | 0 | 0 | 100 |
| BTR60 | 1 | 0 | 0 | 0 | 0 | 193 | 0 | 1 | 0 | 0 | 98.94 |
| ZSU23/4 | 1 | 0 | 0 | 1 | 1 | 0 | 269 | 0 | 1 | 1 | 98.16 |
| D7 | 1 | 0 | 0 | 0 | 0 | 1 | 0 | 271 | 0 | 1 | 98.88 |
| ZIL131 | 0 | 2 | 0 | 0 | 1 | 0 | 2 | 0 | 269 | 0 | 98.18 |
| 2S1 | 0 | 4 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 269 | 98.18 |
| Average | | | | | | | | | | | 98.34 |

| Method | Proposed | SVM | SRC | A-ConvNet | ASC Matching | Region Matching |
|---|---|---|---|---|---|---|
| PCC (%) | 98.34 | 95.66 | 94.68 | 97.52 | 95.30 | 94.68 |
| Time consumption (ms) | 75.8 | 55.3 | 60.5 | 63.2 | 125.3 | 88.6 |

| | Depr. | BMP2 | BRDM2 | BTR70 | T72 |
|---|---|---|---|---|---|
| Template set | 17° | 233 (9563) | 298 | 233 | 232 (132) |
| Test set | 15°, 17° | 428 (9566), 429 (c21) | 0 | 0 | 426 (812), 573 (A04), 573 (A05), 573 (A07), 567 (A10) |

| Target | Serial | BMP2 | BRDM2 | BTR70 | T72 | PCC (%) |
|---|---|---|---|---|---|---|
| BMP2 | 9566 | 412 | 11 | 2 | 3 | 96.26 |
| | c21 | 420 | 4 | 2 | 3 | 97.90 |
| T72 | 812 | 18 | 1 | 0 | 407 | 95.54 |
| | A04 | 5 | 8 | 0 | 560 | 97.73 |
| | A05 | 1 | 1 | 0 | 571 | 99.65 |
| | A07 | 3 | 2 | 3 | 565 | 98.60 |
| | A10 | 7 | 0 | 2 | 558 | 98.41 |
| Average | | | | | | 98.58 |

| Method | Proposed | SVM | SRC | A-ConvNet | ASC Matching | Region Matching |
|---|---|---|---|---|---|---|
| PCC (%) | 98.58 | 95.67 | 95.44 | 96.16 | 97.12 | 96.55 |

| | Depr. | 2S1 | BRDM2 | ZSU23/4 |
|---|---|---|---|---|
| Template set | 17° | 299 | 298 | 299 |
| Test set | 30° | 288 | 287 | 288 |
| | 45° | 303 | 303 | 303 |

| Depr. | Target | Classification Results: 2S1 | Classification Results: BRDM2 | Classification Results: ZSU23/4 | PCC (%) | Average (%) |
|---|---|---|---|---|---|---|
| 30° | 2S1 | 278 | 6 | 4 | 96.53 | 97.68 |
| | BRDM2 | 1 | 285 | 1 | 99.30 | |
| | ZSU23/4 | 4 | 4 | 280 | 97.22 | |
| 45° | 2S1 | 229 | 48 | 26 | 75.58 | 75.82 |
| | BRDM2 | 10 | 242 | 51 | 79.87 | |
| | ZSU23/4 | 54 | 31 | 218 | 71.95 | |
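The PCC columns in the confusion tables are row-wise ratios of correct decisions to test samples. The following is a small sketch that recomputes the 30° values from the counts listed above, assuming that rows are true classes, that columns are assigned classes, and that the average is pooled over all test samples (variable names are illustrative).

```python
import numpy as np

# Rows: true class; columns: assigned class (2S1, BRDM2, ZSU23/4) at 30° depression.
confusion = np.array([
    [278, 6, 4],
    [1, 285, 1],
    [4, 4, 280],
])

# Per-class PCC: correct decisions on the diagonal divided by the row totals.
per_class = 100.0 * np.diag(confusion) / confusion.sum(axis=1)

# Pooled PCC: all correct decisions divided by all test samples.
overall = 100.0 * np.trace(confusion) / confusion.sum()

print(np.round(per_class, 2))
print(round(float(overall), 2))
```

Rounded to two decimals, this reproduces the 96.53, 99.30, and 97.22 per-class values and the 97.68 average reported for the 30° case.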

| Method | PCC (%) at 30° | PCC (%) at 45° |
|---|---|---|
| Proposed | 97.68 | 75.82 |
| SVM | 96.87 | 65.05 |
| SRC | 96.24 | 64.32 |
| A-ConvNet | 97.16 | 66.27 |
| ASC Matching | 96.56 | 71.35 |
| Region Matching | 95.82 | 64.72 |

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Tan, J.; Fan, X.; Wang, S.; Ren, Y. Target Recognition of SAR Images via Matching Attributed Scattering Centers with Binary Target Region. Sensors 2018, 18, 3019. https://doi.org/10.3390/s18093019
