Robust Visual Tracking Based on Adaptive Convolutional Features and Offline Siamese Tracker

Abstract

1. Introduction

2. Related Work

2.1. Tracker with Correlation Filter

2.2. Tracker with Deep Features

2.3. Trackers with Feature Dimensionality Reduction

2.4. Trackers with Siamese Networks

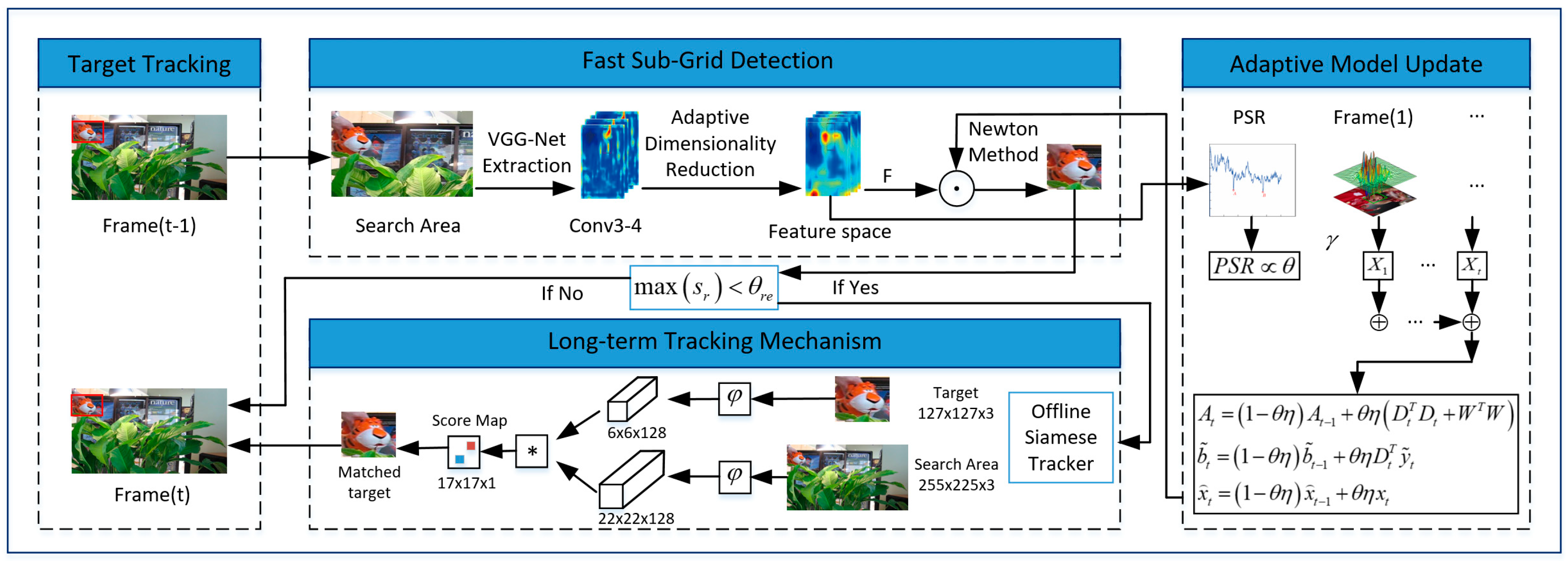

3. Proposed Method

3.1. Baseline

3.2. Adaptive Convolutional Features

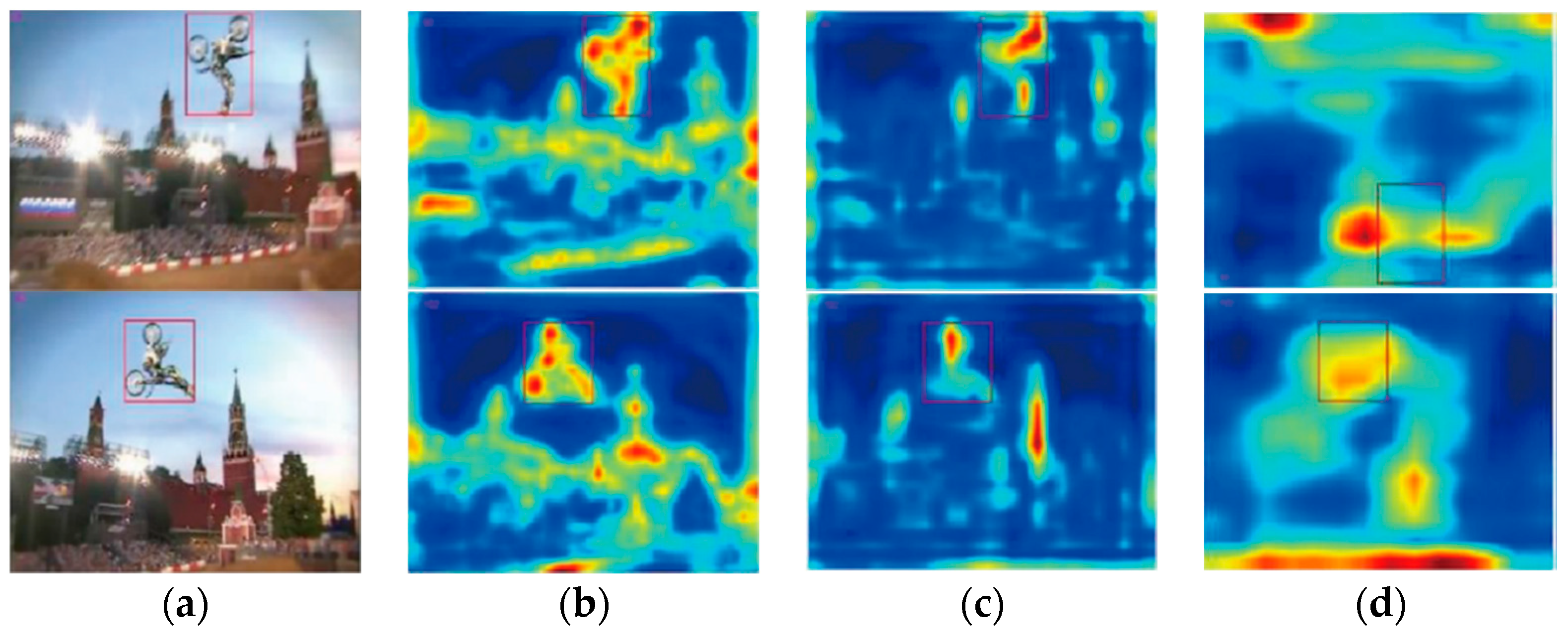

3.2.1. Convolutional Features

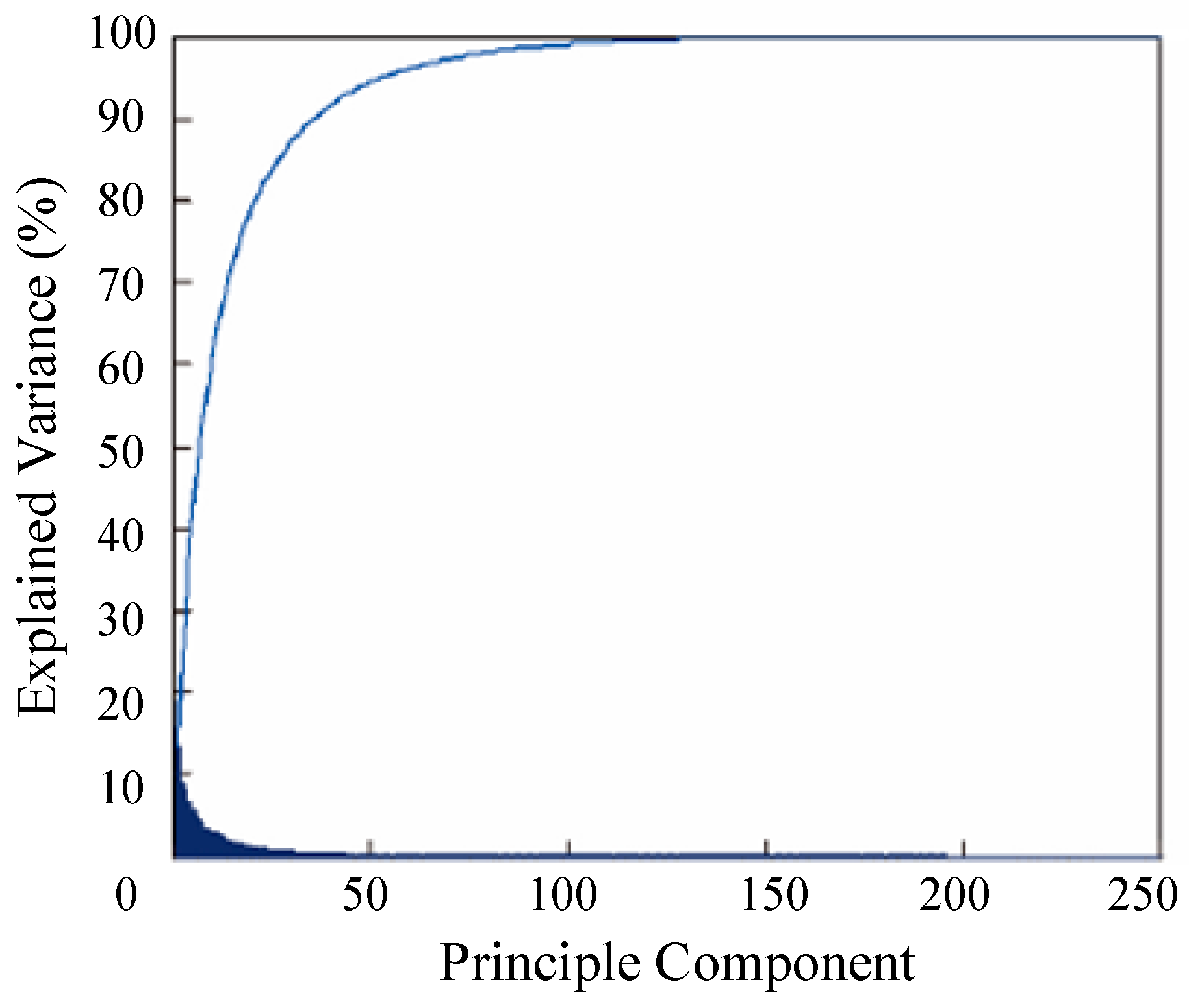

3.2.2. Adaptive Dimensionality Reduction

3.2.3. Fast Sub-Grid Detection

3.2.4. Adaptive Model Update

3.3. Long-Term Tracking Mechanism Based on Siamese Offline Tracker

| Algorithm 1: Proposed tracking algorithm. |
|---|
| Input: Image ; Initial target position and scale ; previous target position and scale |
| Output: Estimated object position and scale . |
| For each |
| Extract the deep feature space through the pre-trained VGG-Net; |
| Update matrix and by linear interpolation using Equations (13) and (14). The SVD is performed and a new is found; |
| Update the low-dimensional appearance feature space using Equation (15); |
| Compute the confidence of the target position using Equation (18); |
| Update the tracking model , and using Equations (19)–(22); |
| Compute the estimated object position and scale using fast sub-grid detection; |
| If , |
| Update the estimated object position and scale using the offline Siamese tracker; |
| Else |
| Output the estimated object position and scale directly; |
| End |
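The control flow of Algorithm 1 can be sketched roughly as follows. This is a minimal illustration under stated assumptions, not the authors' implementation. The names `update_projection`, `track_frame`, `online_detect`, `siamese_detect`, `lr`, `out_dim` and `conf_threshold` are hypothetical. The condition on the `If` line is not recoverable from the text, so a confidence-below-threshold test is assumed. A linearly interpolated feature covariance stands in for the matrices of Equations (13)–(15), which are not reproduced here, and the model update and sub-grid detection are folded into the injected `online_detect` callable.

```python
import numpy as np


def update_projection(cov_prev, feats, lr, out_dim):
    """Blend running feature statistics with the current frame, then run an SVD.

    feats is an (n_samples, n_channels) matrix of deep features.
    Returns the blended covariance and an (n_channels, out_dim) projection.
    """
    centered = feats - feats.mean(axis=0, keepdims=True)
    cov_now = centered.T @ centered / max(feats.shape[0] - 1, 1)
    # Linear interpolation between the previous and the current statistics.
    if cov_prev is None:
        cov = cov_now
    else:
        cov = (1.0 - lr) * cov_prev + lr * cov_now
    # The leading left singular vectors span the directions of largest variance.
    u, _, _ = np.linalg.svd(cov)
    return cov, u[:, :out_dim]


def track_frame(feats, cov_prev, online_detect, siamese_detect,
                lr=0.1, out_dim=8, conf_threshold=0.3):
    """One loop iteration: reduce features, detect online, fall back if unsure."""
    cov, proj = update_projection(cov_prev, feats, lr, out_dim)
    low_dim = feats @ proj  # low-dimensional appearance features
    box, confidence = online_detect(low_dim)
    # ASSUMPTION: the source elides this condition; a low-confidence test is assumed.
    used_fallback = confidence < conf_threshold
    if used_fallback:
        box = siamese_detect()  # offline Siamese tracker re-estimates the target
    return box, cov, used_fallback


# Usage with dummy detectors standing in for the real online and Siamese trackers.
rng = np.random.default_rng(0)
feats = rng.normal(size=(50, 16))  # stand-in for VGG-Net feature vectors
box, cov, used_fallback = track_frame(
    feats, None,
    online_detect=lambda z: ((10, 20, 40, 40), 0.1),  # low confidence
    siamese_detect=lambda: (12, 22, 40, 40),
)
```

Passing the detectors in as callables keeps the sketch independent of any particular network or correlation-filter library; only the projection step is concrete.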

4. Experimental Results and Analysis

4.1. Implementation Details

4.2. Reliability Ablation Study

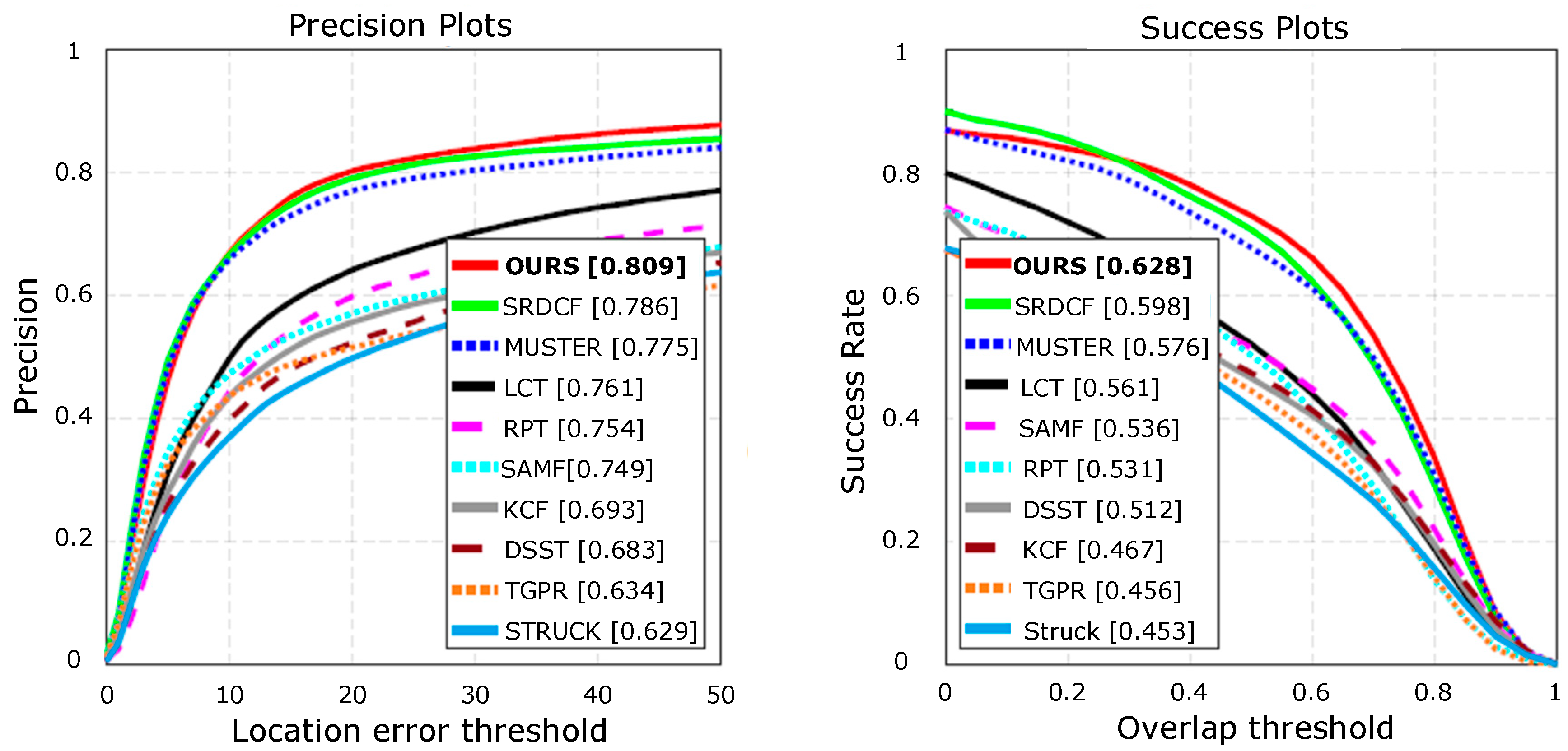

4.3. OTB-2015 Benchmark

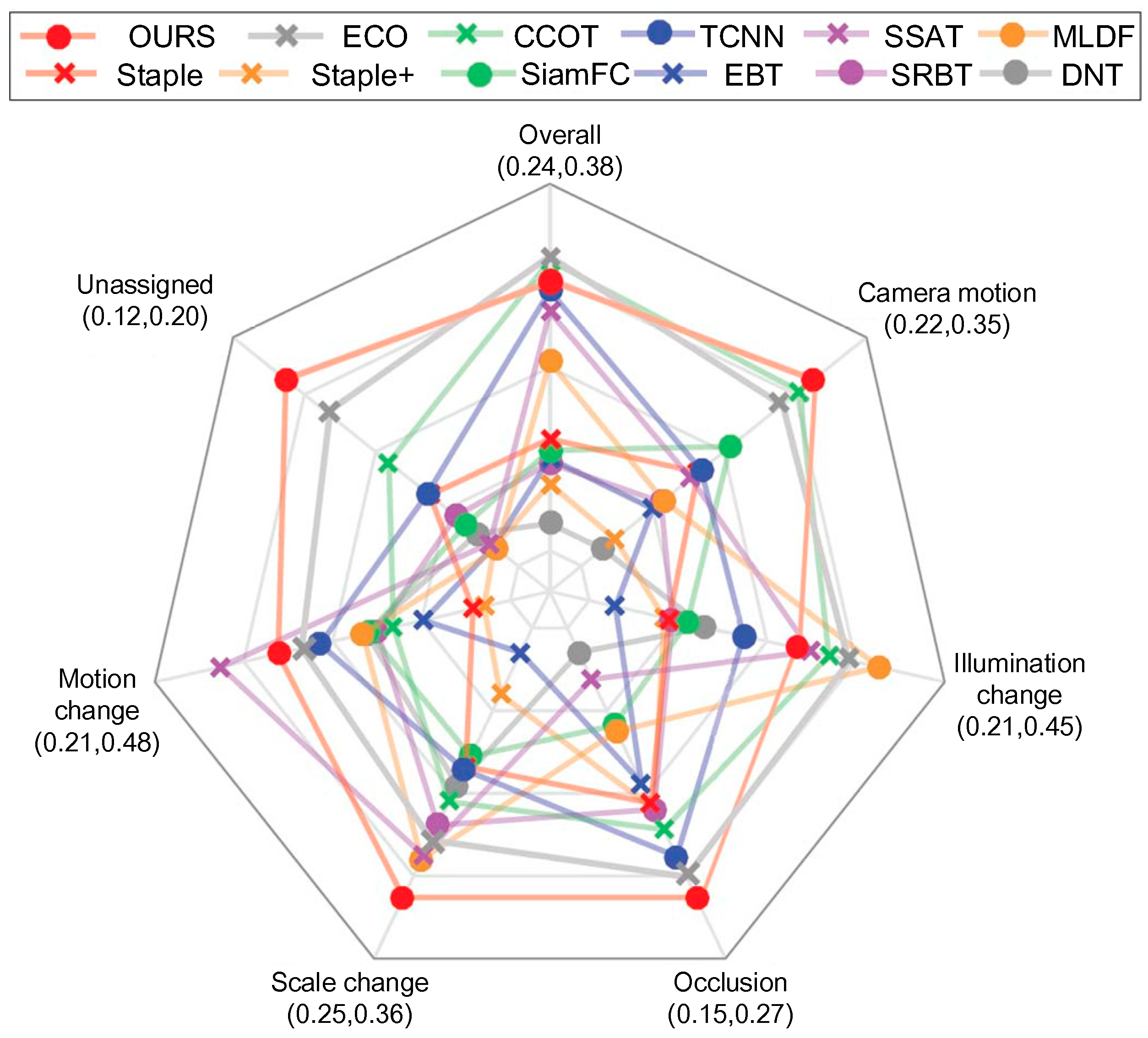

4.4. VOT2016 Benchmark

4.5. Per-Attribute Analysis

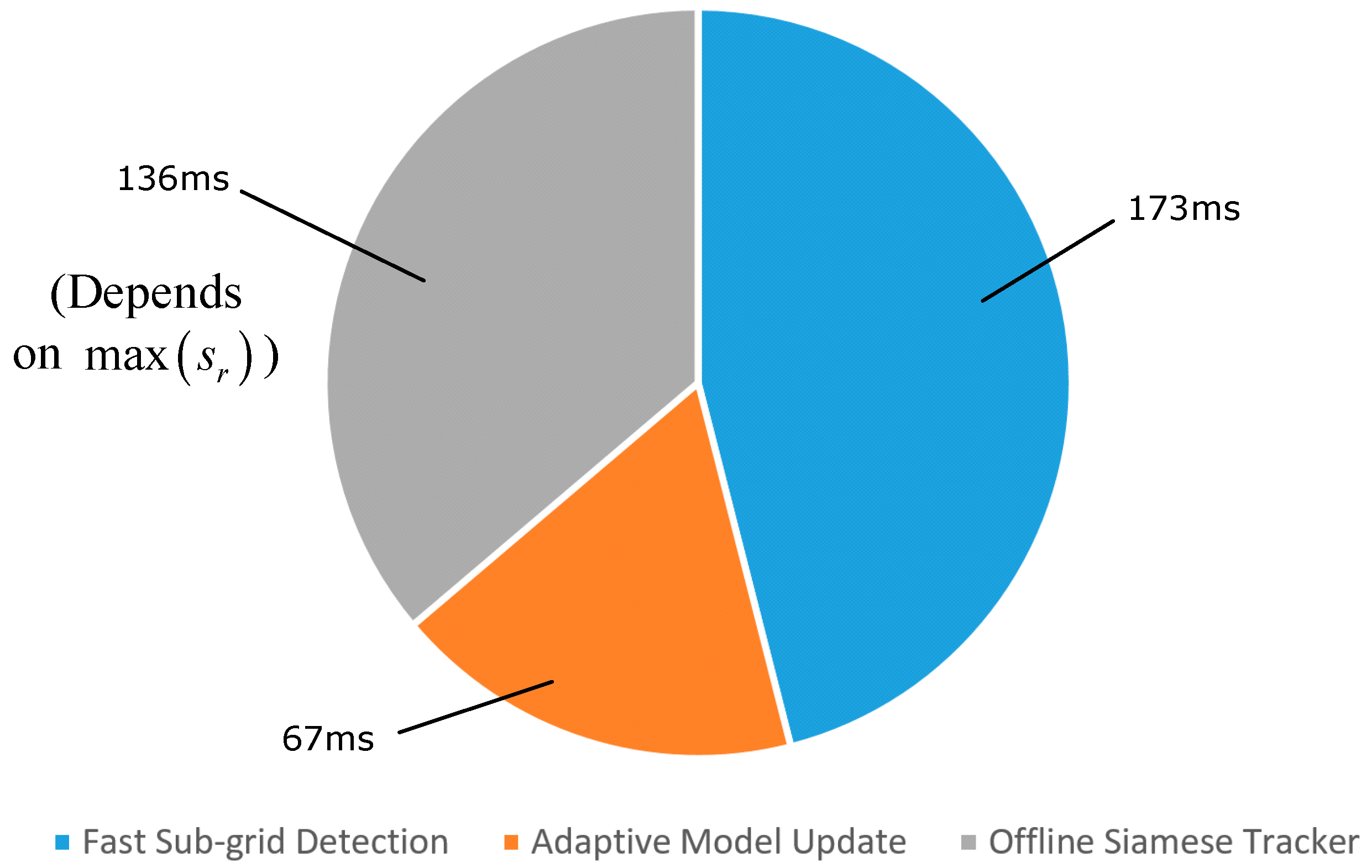

4.6. Tracking Speed Analysis

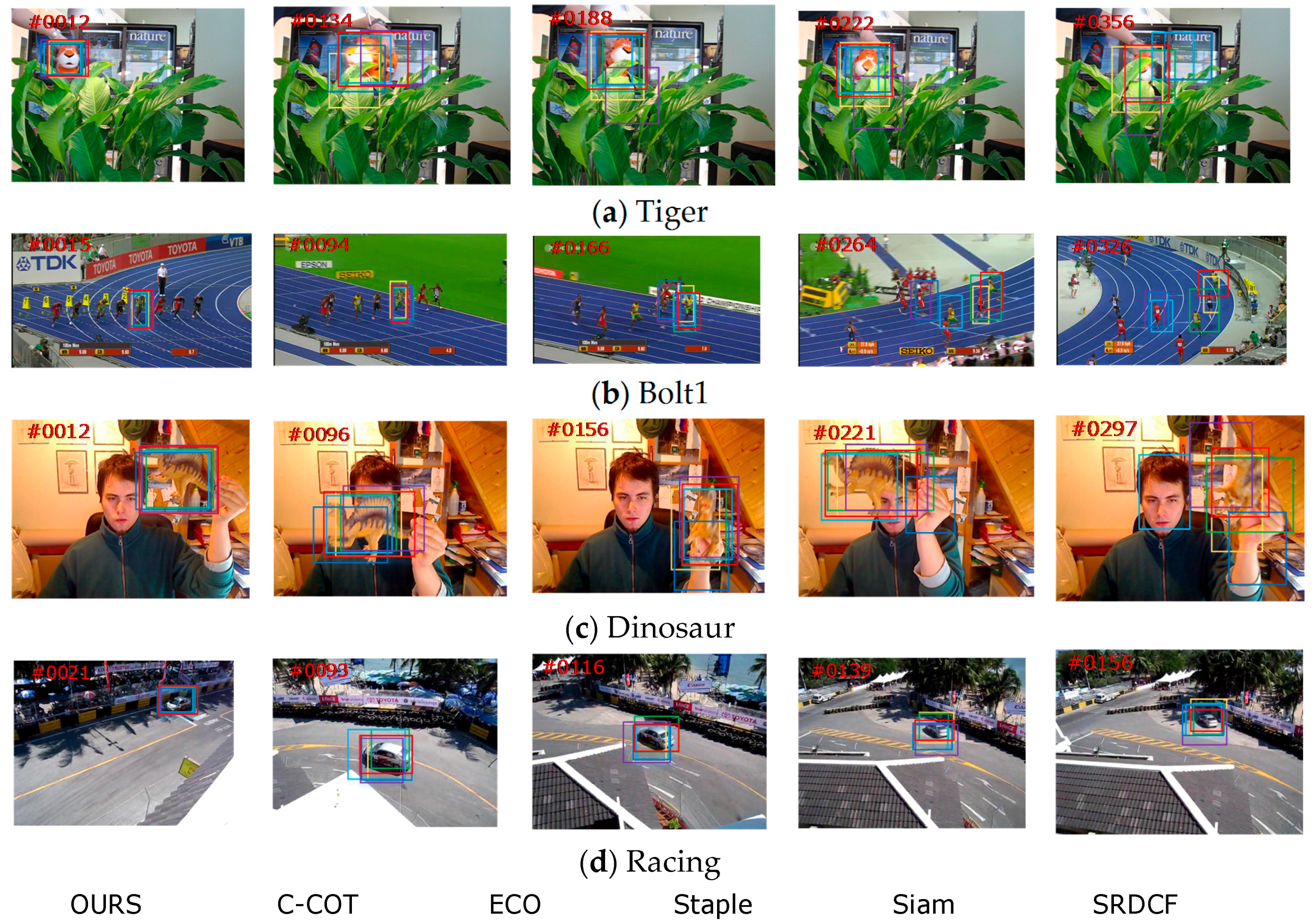

4.7. Qualitative Evaluation

4.7.1. Qualitative Evaluation on the OTB Benchmark

4.7.2. Qualitative Evaluation on the VOT Benchmark

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

| Tracker | Adr. | Amu. | St. | EAO | Accuracy | Robustness |
|---|---|---|---|---|---|---|
| | x | x | x | 0.329 | 0.59 | 0.83 |
| | x | x | - | 0.293 | 0.49 | 1.12 |
| | - | x | x | 0.282 | 0.47 | 0.87 |
| | x | - | x | 0.256 | 0.48 | 1.32 |
| | - | - | - | 0.228 | 0.45 | 1.58 |

| Tracker | EAO | Accuracy | Robustness |
|---|---|---|---|
| Ours | 0.329 | 0.59 | 0.83 |
| ECO | 0.374 | 0.54 | 0.76 |
| CCOT | 0.331 | 0.52 | 0.85 |
| TCNN | 0.325 | 0.54 | 0.96 |
| SSAT | 0.321 | 0.57 | 1.04 |
| MLDF | 0.311 | 0.48 | 0.83 |
| Staple | 0.295 | 0.54 | 1.35 |
| DDC | 0.293 | 0.53 | 1.23 |
| EBT | 0.291 | 0.44 | 0.90 |
| SiamFC | 0.284 | 0.52 | 0.87 |
| SRBT | 0.286 | 0.55 | 1.32 |

| Tracker | Ours | CCOT | ECO | SiamFC | SRDCF | DeepSRDCF | Staple | DSST | KCF |
|---|---|---|---|---|---|---|---|---|---|
| Average FPS | 4.6 | 1.2 | 6.6 | 8.1 | 7.3 | 2.8 | 62.3 | 17.4 | 112.4 |

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Zhang, X.; Wang, M. Robust Visual Tracking Based on Adaptive Convolutional Features and Offline Siamese Tracker. Sensors 2018, 18, 2359. https://doi.org/10.3390/s18072359