Novel Adaptive Laser Scanning Method for Point Clouds of Free-Form Objects

Abstract

1. Introduction

2. Instruments and Data Acquisition

2.1. Sensors

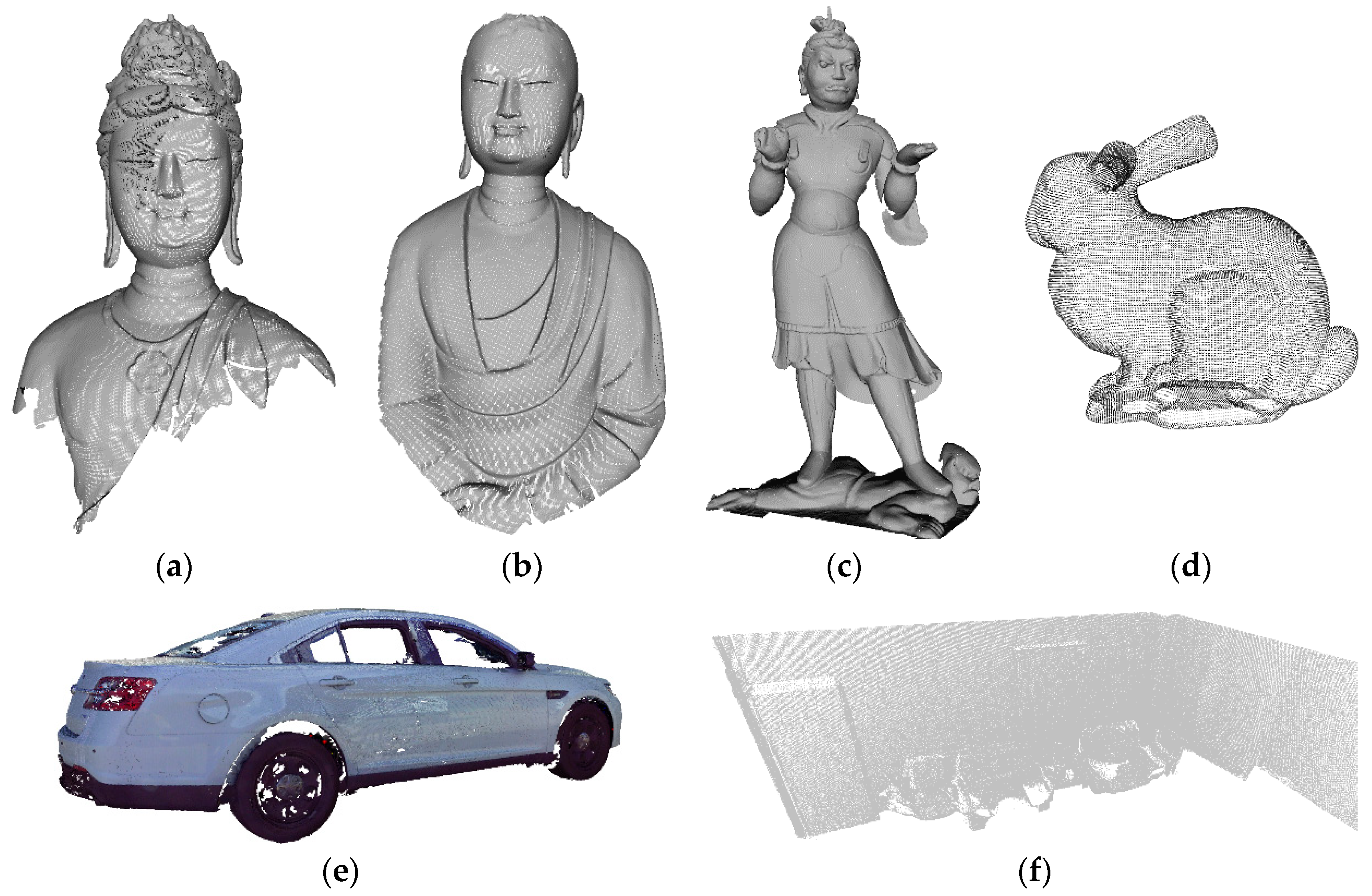

2.2. Measurements and Experimental Data

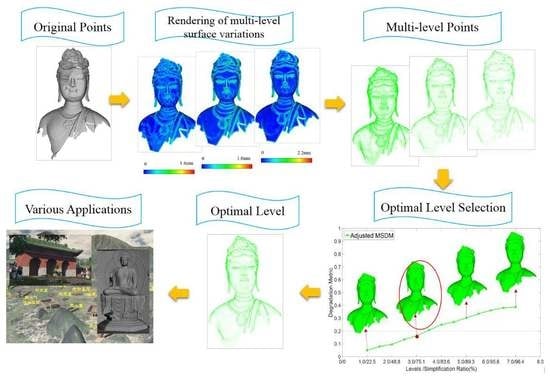

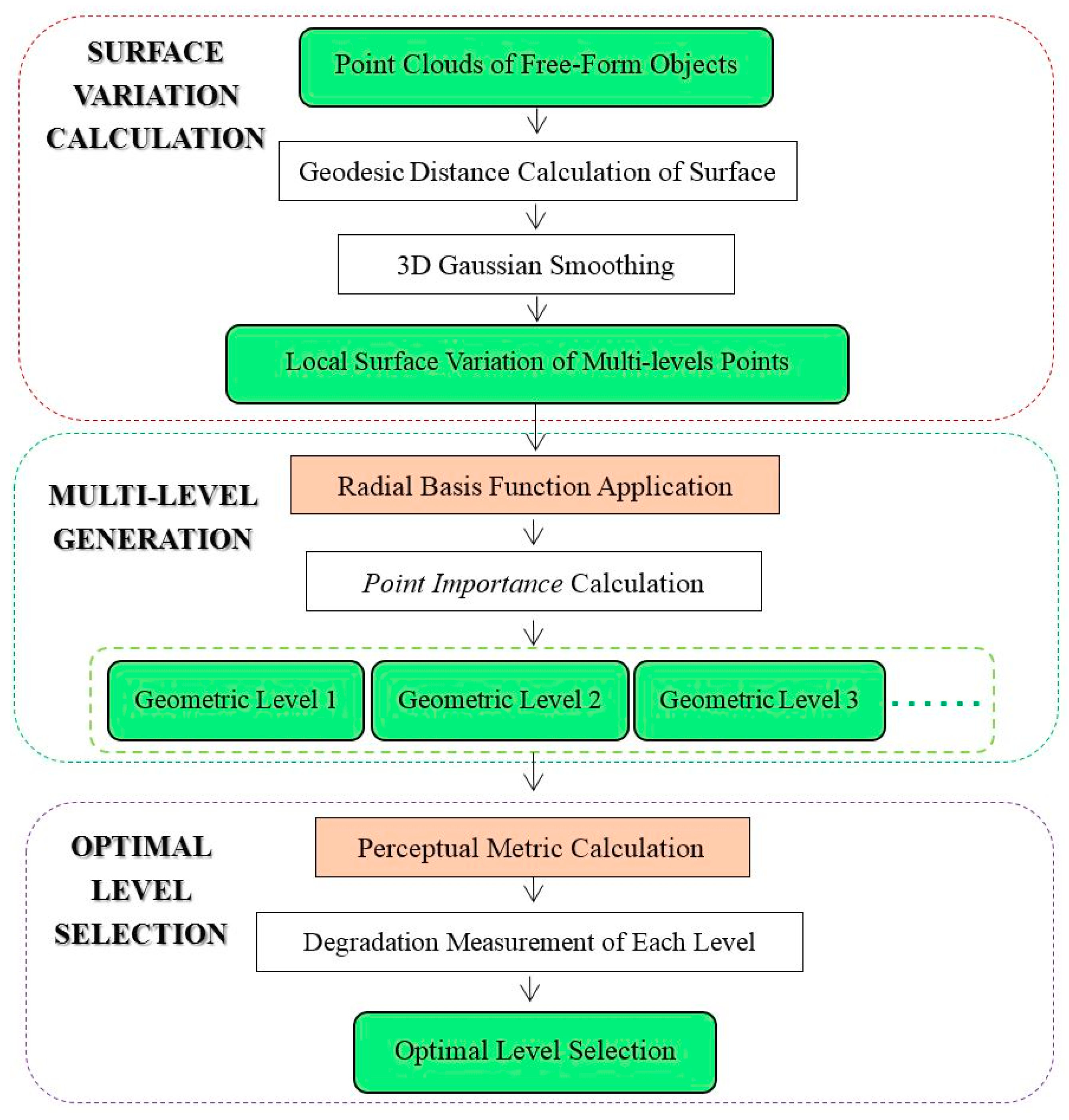

3. Methodology

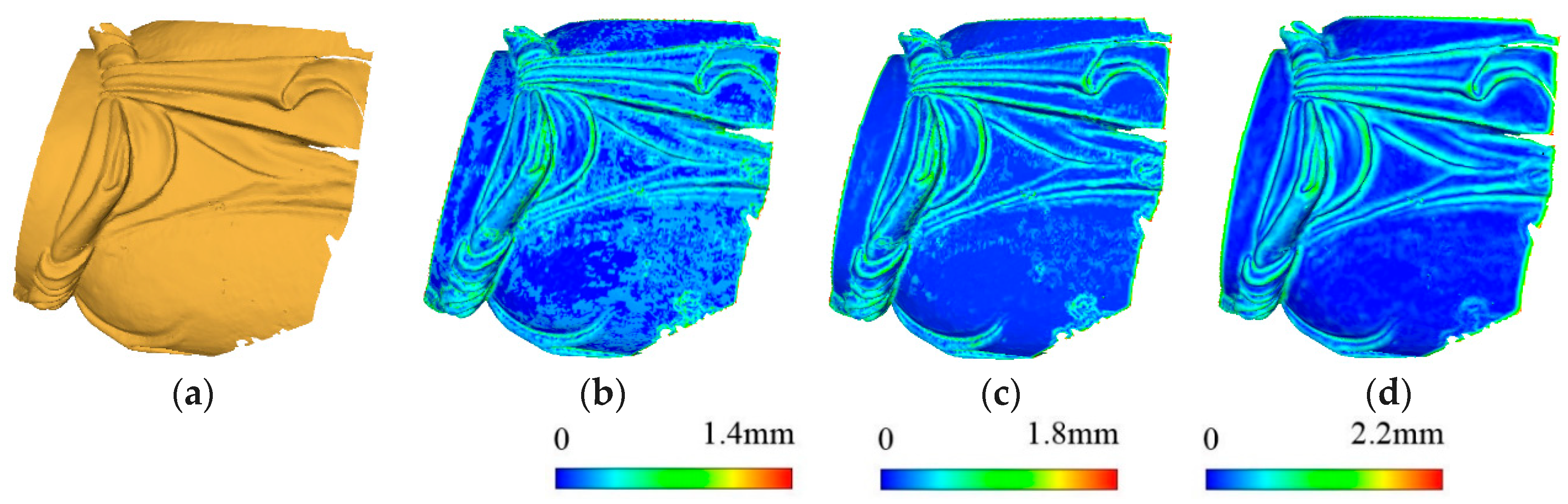

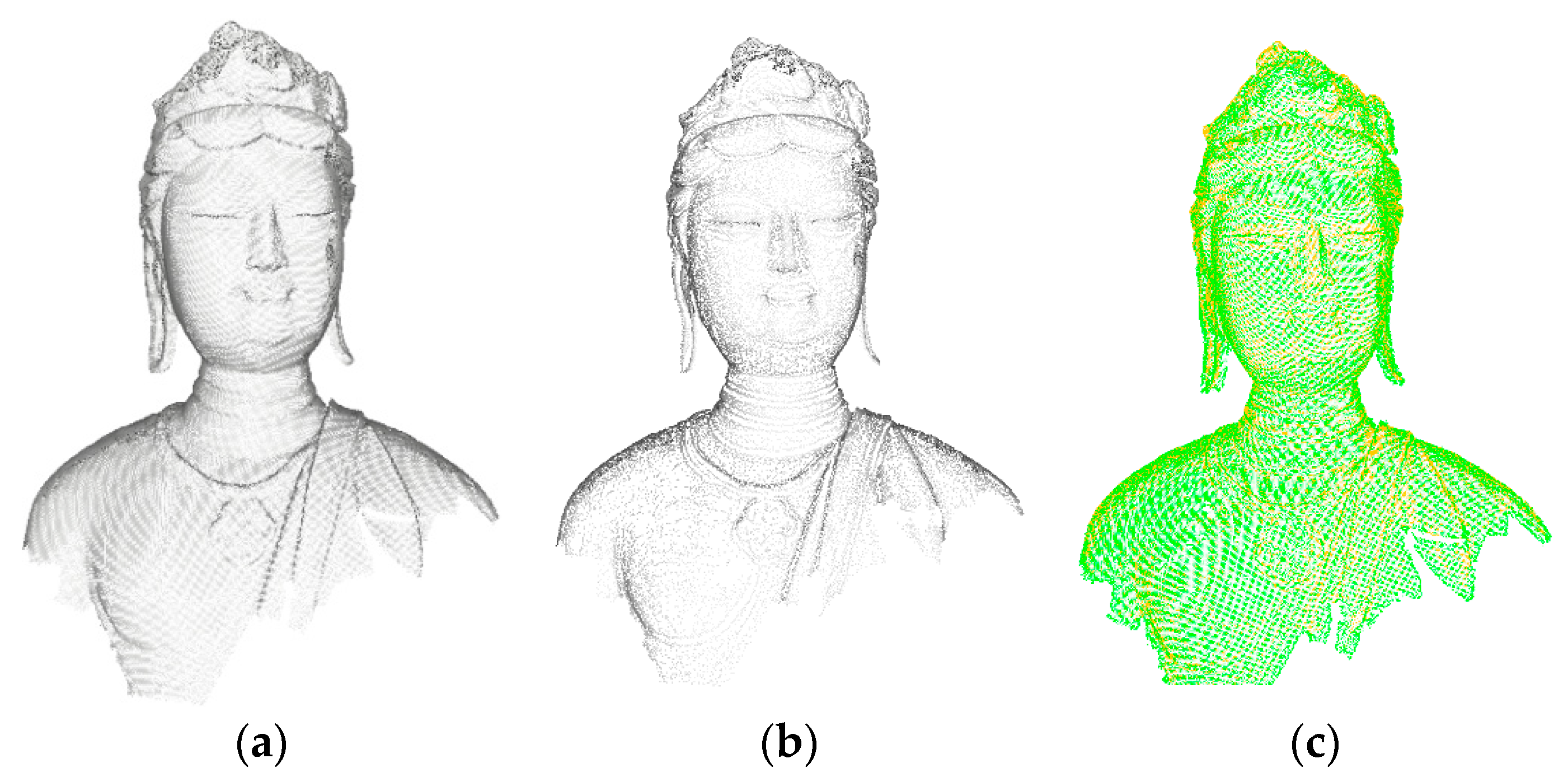

3.1. Surface Variation of 3D Surface Points

3.2. Multi-Level Points Generation Based on Degree of Importance

- Step 1. According to Equations (1) and (5), calculate the surface variation of each point based on the neighborhood range of the current level, and rank all points in decreasing order of surface variation.

- Step 2. Select the surface variation value of the point located at the 80% position of the ranked list as the DOI threshold.

- Step 3. Move the next point from the sorted point set into a destination point set. Calculate its DOI value with Equation (4) by combining its original surface variation value with its new neighbors in the supporting region of the destination point set. If the DOI is larger than the threshold, keep the point; otherwise, remove it from the destination point set.

- Step 4. Repeat Step 3 until all points have been processed.

- Step 5. Remove the points that already exist in the coarser levels.
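The five steps above can be sketched in a few lines of Python. This is only an illustrative sketch under stated assumptions, not the authors' implementation: Equations (1), (4), and (5) are not reproduced in this section, so the surface variation values are taken as precomputed input, and `placeholder_doi` is a hypothetical stand-in for Equation (4) that simply discounts a point's surface variation by the number of already-kept neighbors in its supporting region. The function names, the brute-force neighbor search, and the reading of "the 80% position" as index ⌊0.8·n⌋ of the ranked list are likewise assumptions.

```python
import math


def placeholder_doi(variation, n_kept_neighbors):
    # ASSUMPTION: hypothetical stand-in for Equation (4), which is not
    # reproduced here. It lowers the importance of a point when kept
    # neighbors already cover its supporting region.
    return variation / (1.0 + n_kept_neighbors)


def generate_level(points, variations, radius, coarser=frozenset(),
                   doi=placeholder_doi, position=0.8):
    """Return indices of the points selected for one level.

    points     -- list of (x, y, z) tuples
    variations -- precomputed surface variation per point (Step 1 input)
    radius     -- supporting-region radius of the current level
    coarser    -- indices already present in coarser levels
    """
    if not points:
        return []

    # Step 1 (ranking part): sort indices by decreasing surface variation.
    order = sorted(range(len(points)),
                   key=lambda i: variations[i], reverse=True)

    # Step 2: threshold is the value at the 80% position of the ranking.
    idx = min(int(position * len(order)), len(order) - 1)
    threshold = variations[order[idx]]

    # Steps 3 and 4: visit points in ranked order and keep those whose DOI,
    # evaluated against the current destination set, exceeds the threshold.
    kept = []
    for i in order:
        neighbors = sum(
            1 for j in kept if math.dist(points[i], points[j]) <= radius
        )
        if doi(variations[i], neighbors) > threshold:
            kept.append(i)

    # Step 5: drop points that already exist in the coarser levels.
    return [i for i in kept if i not in coarser]
```

A real implementation would replace the linear neighbor scan with a spatial index such as a k-d tree, and would call `generate_level` once per level with a shrinking `radius`, passing the union of previously selected indices as `coarser`.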

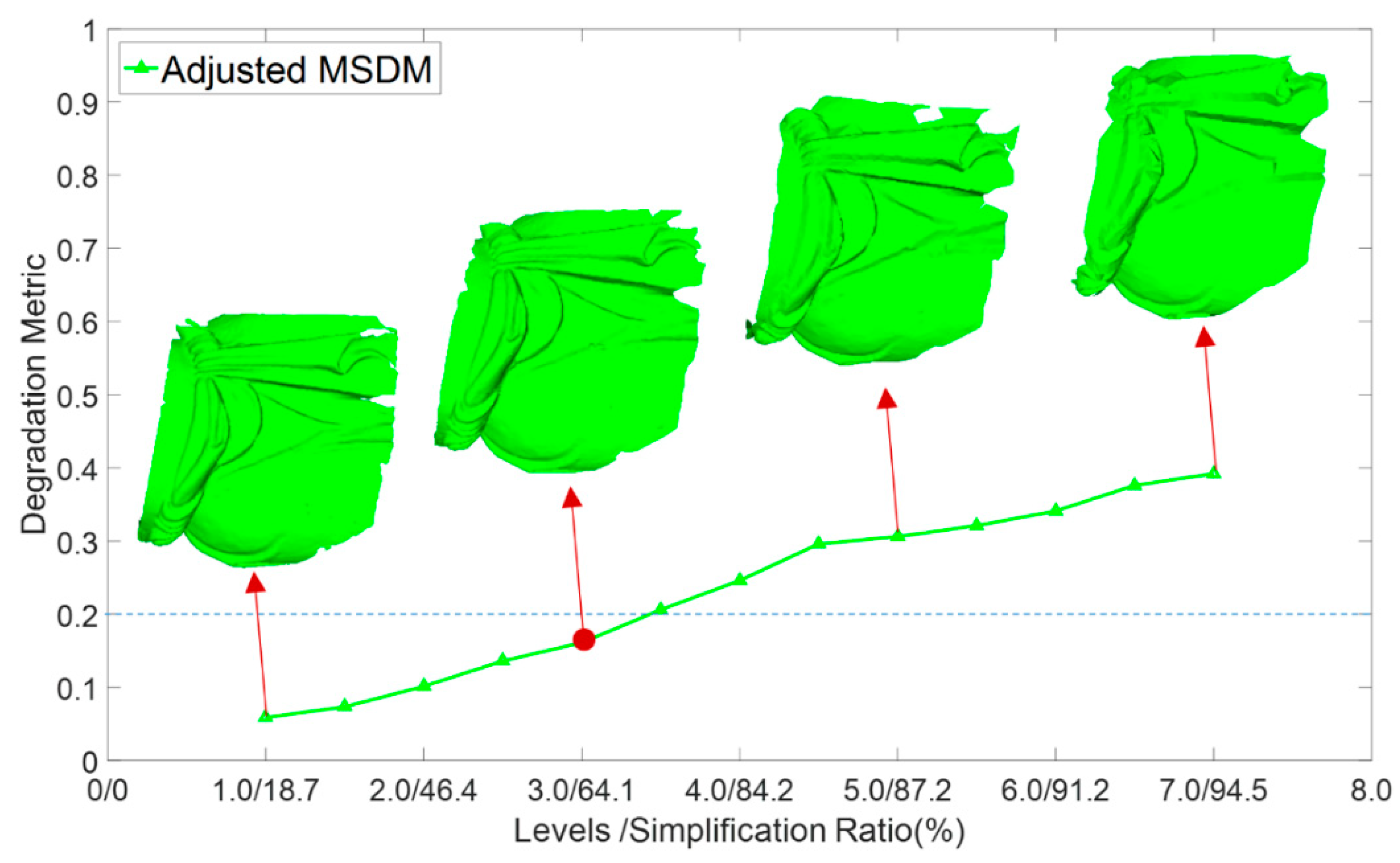

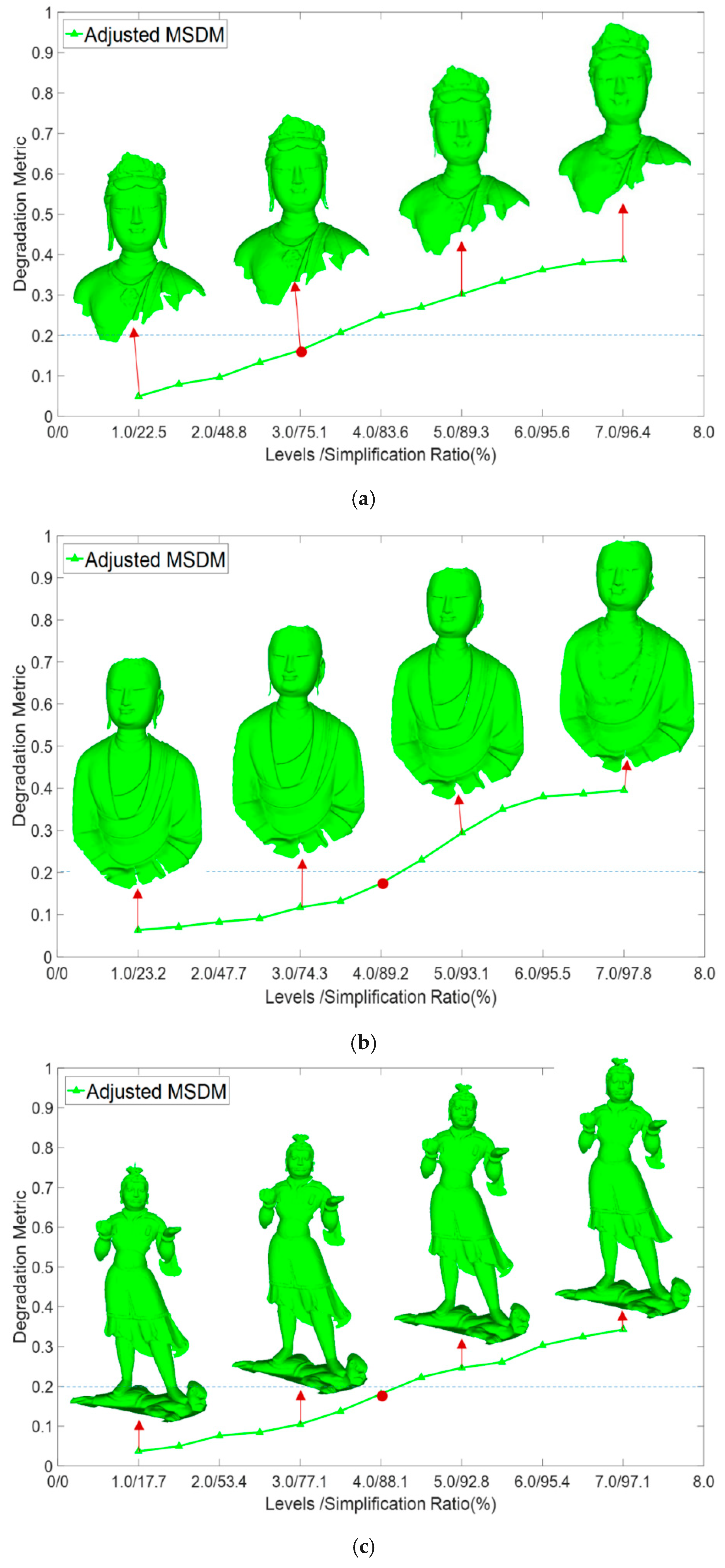

3.3. Perceptual Metric of Multi-Level and Optimal Level Selection

4. Results and Discussion

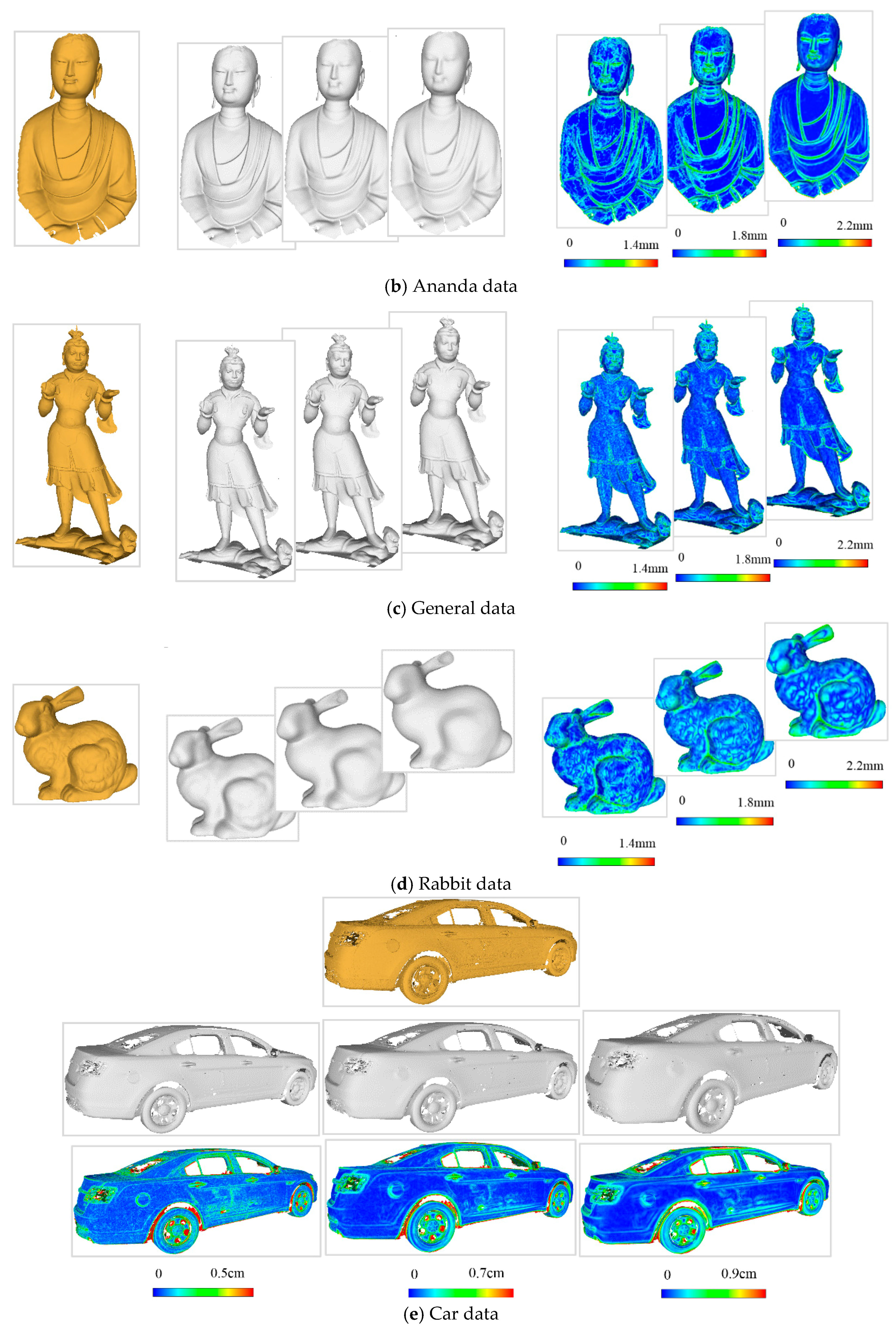

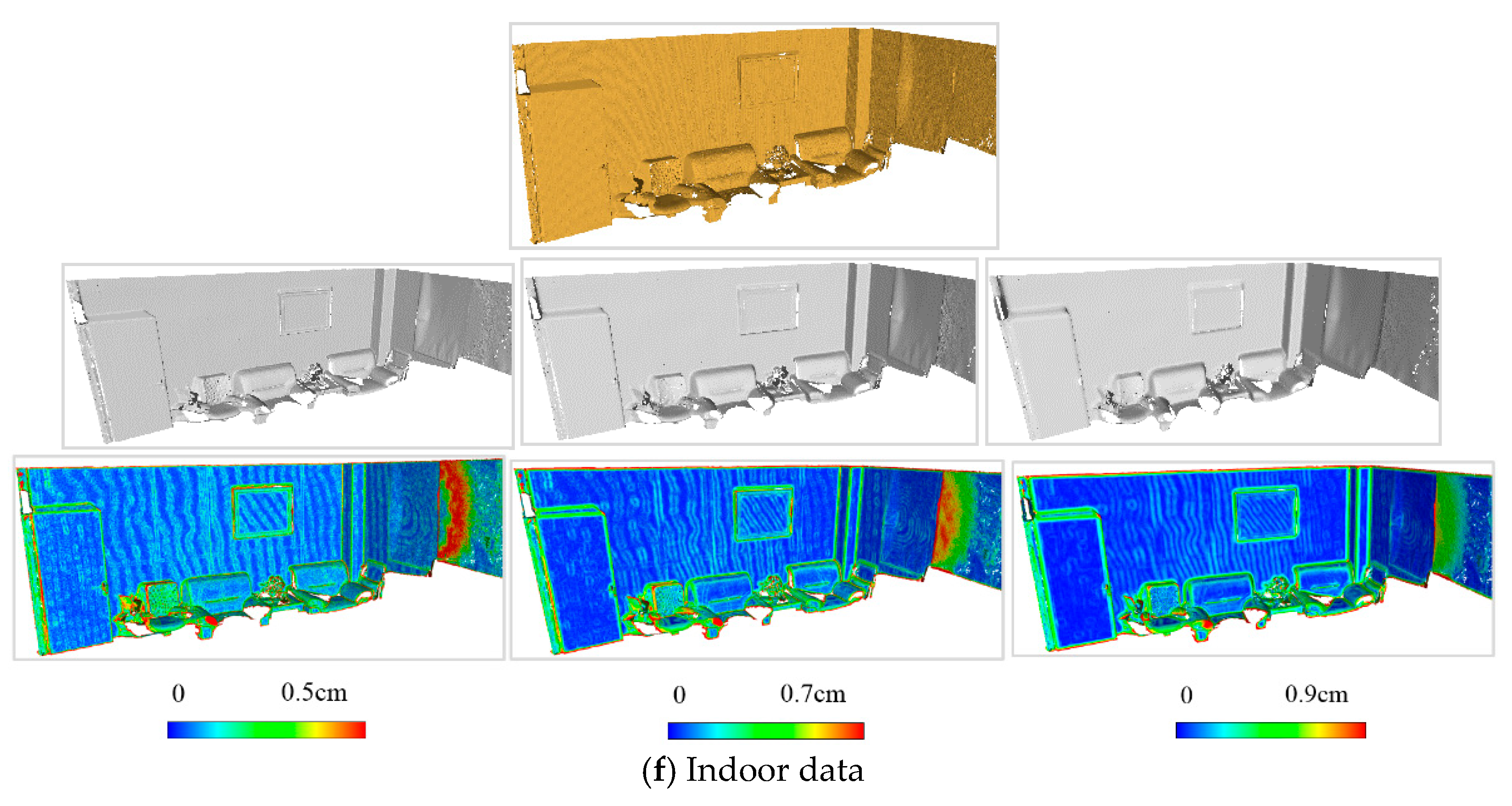

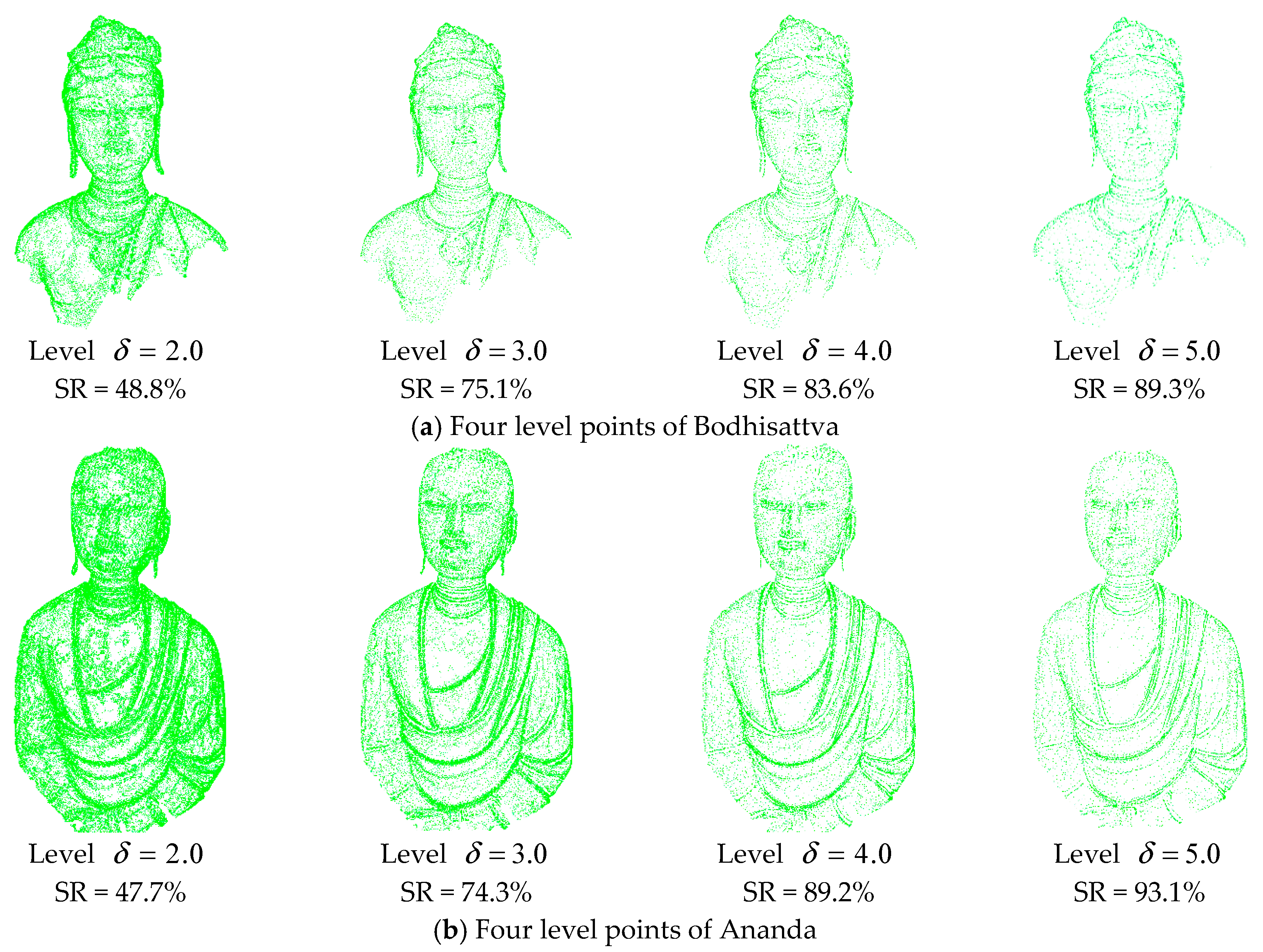

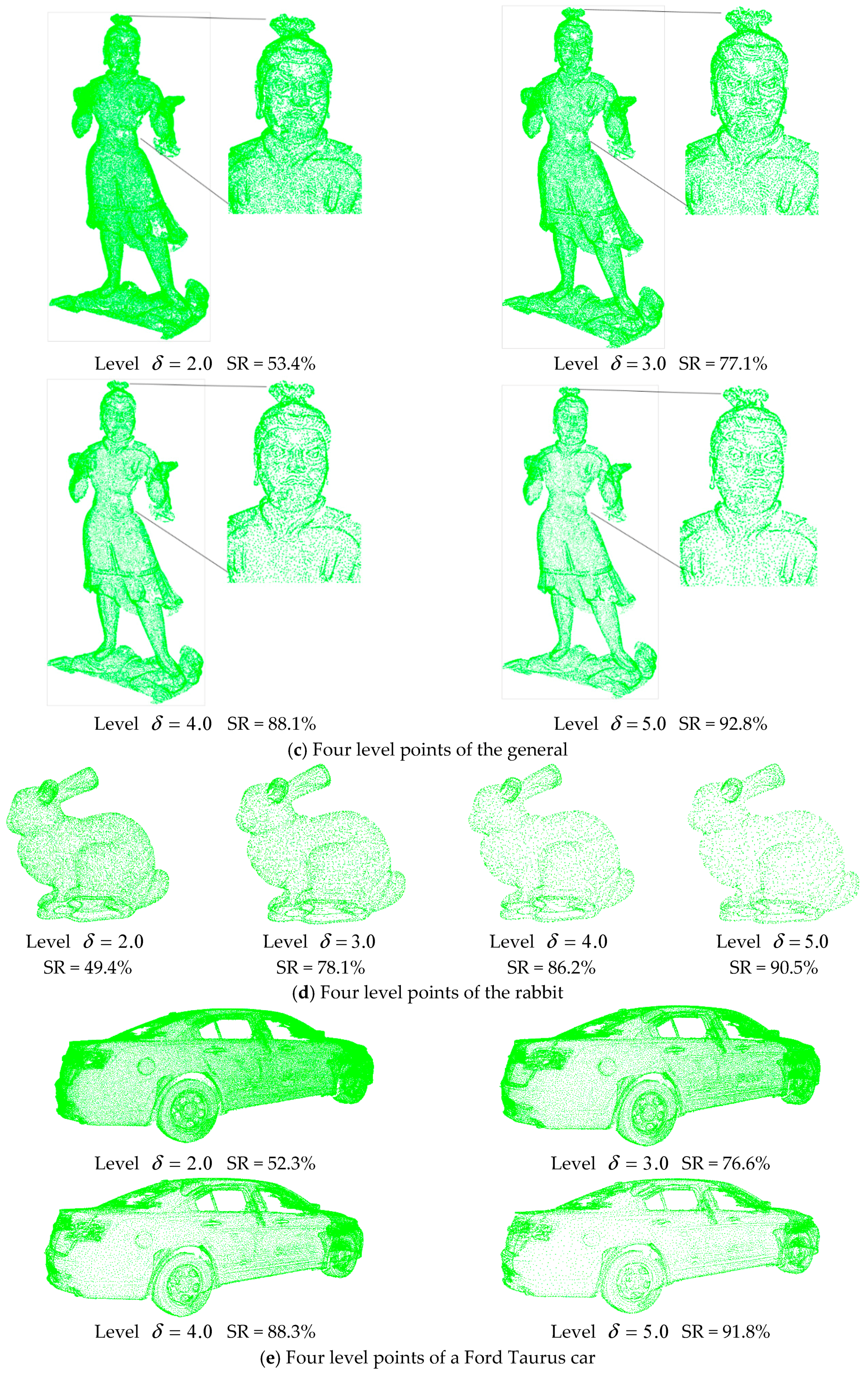

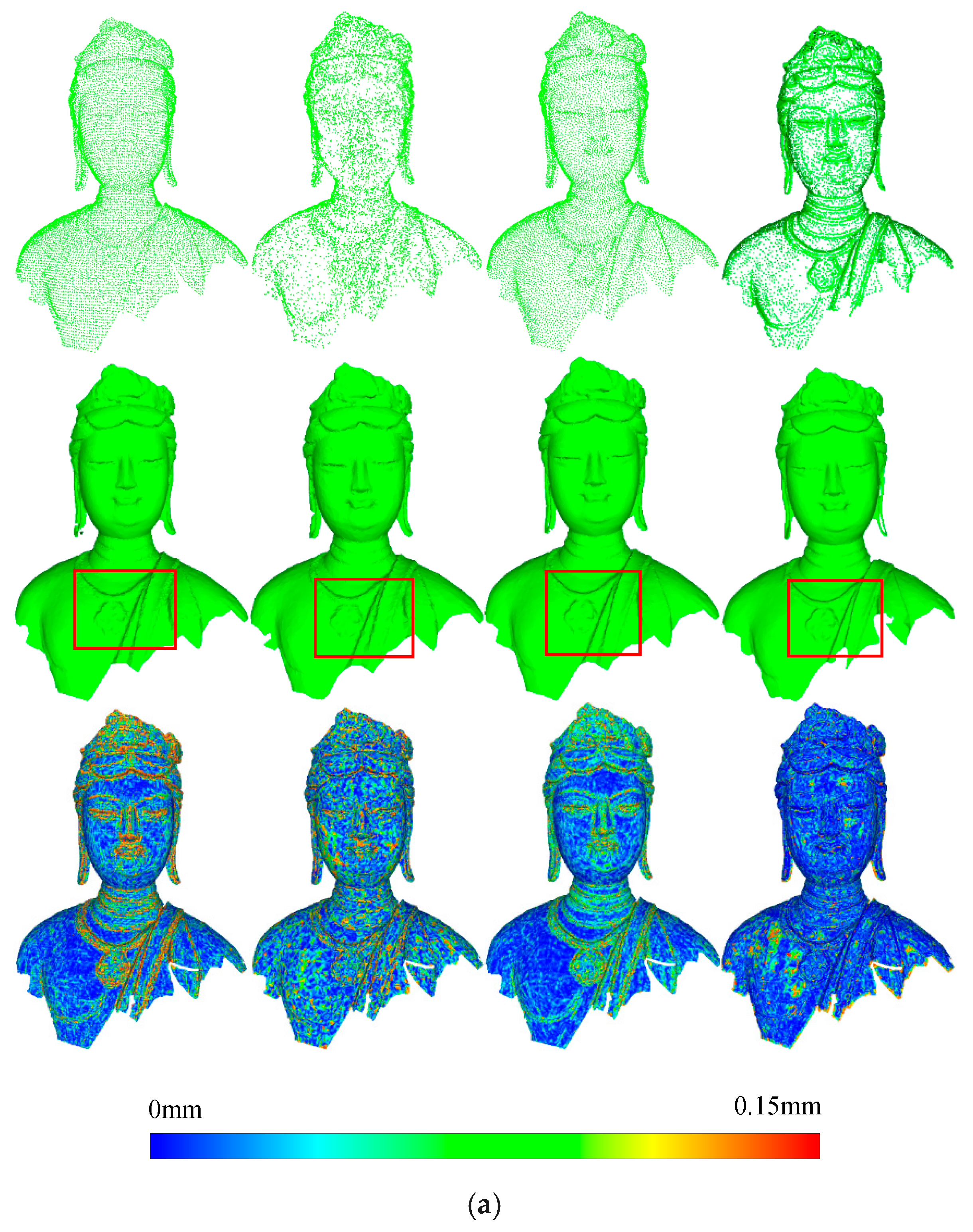

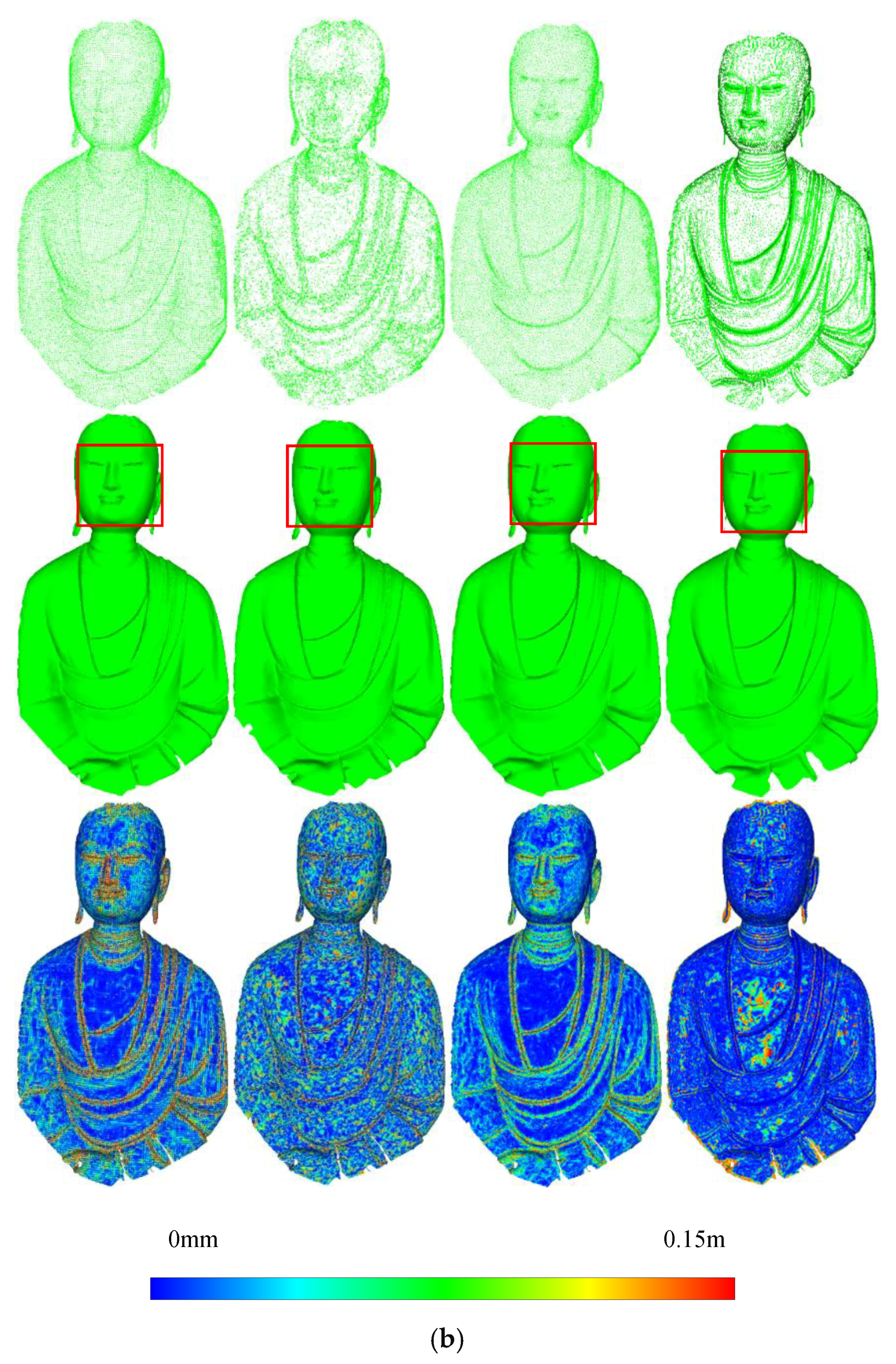

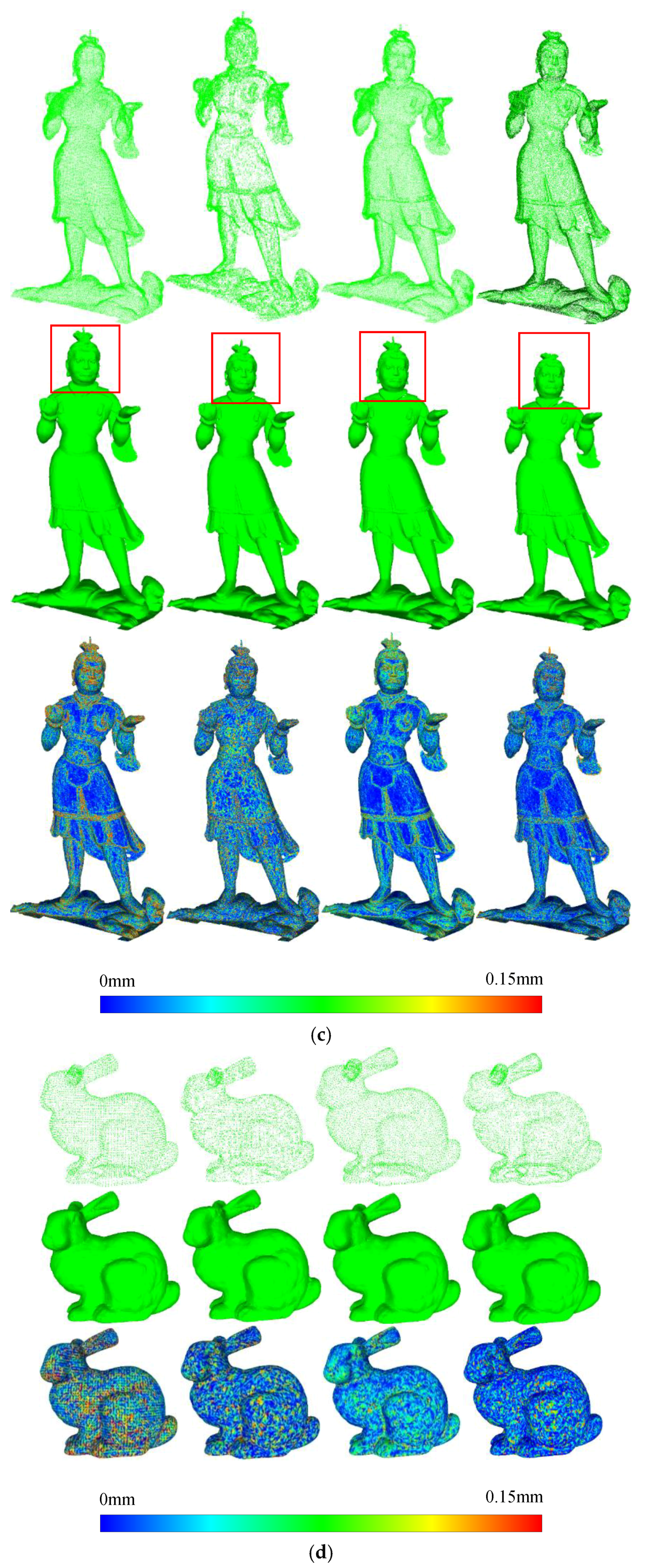

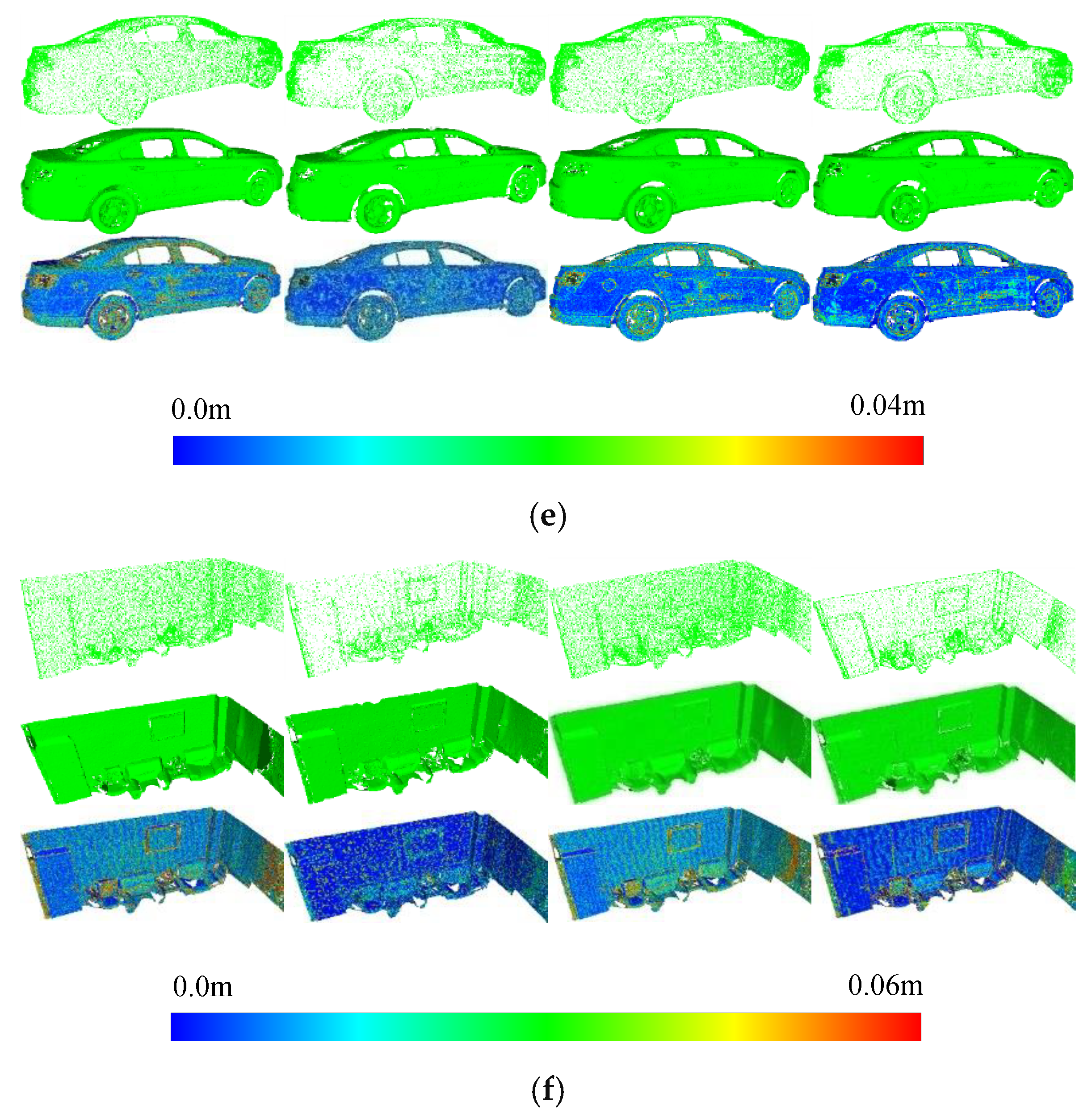

4.1. Results and Analysis of Multi-Level Generation

4.2. Influence of Parameters of Multi-Level Point Generation

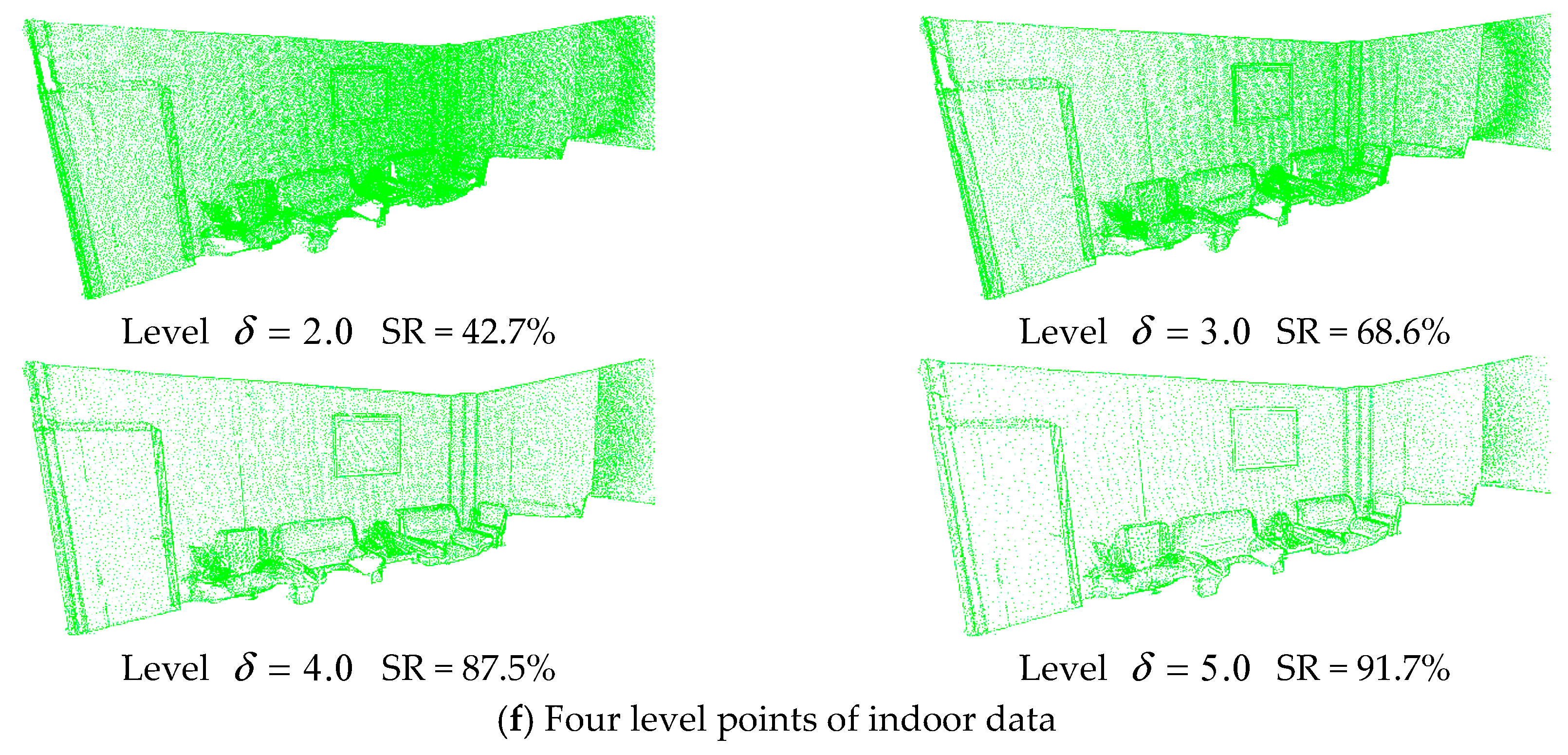

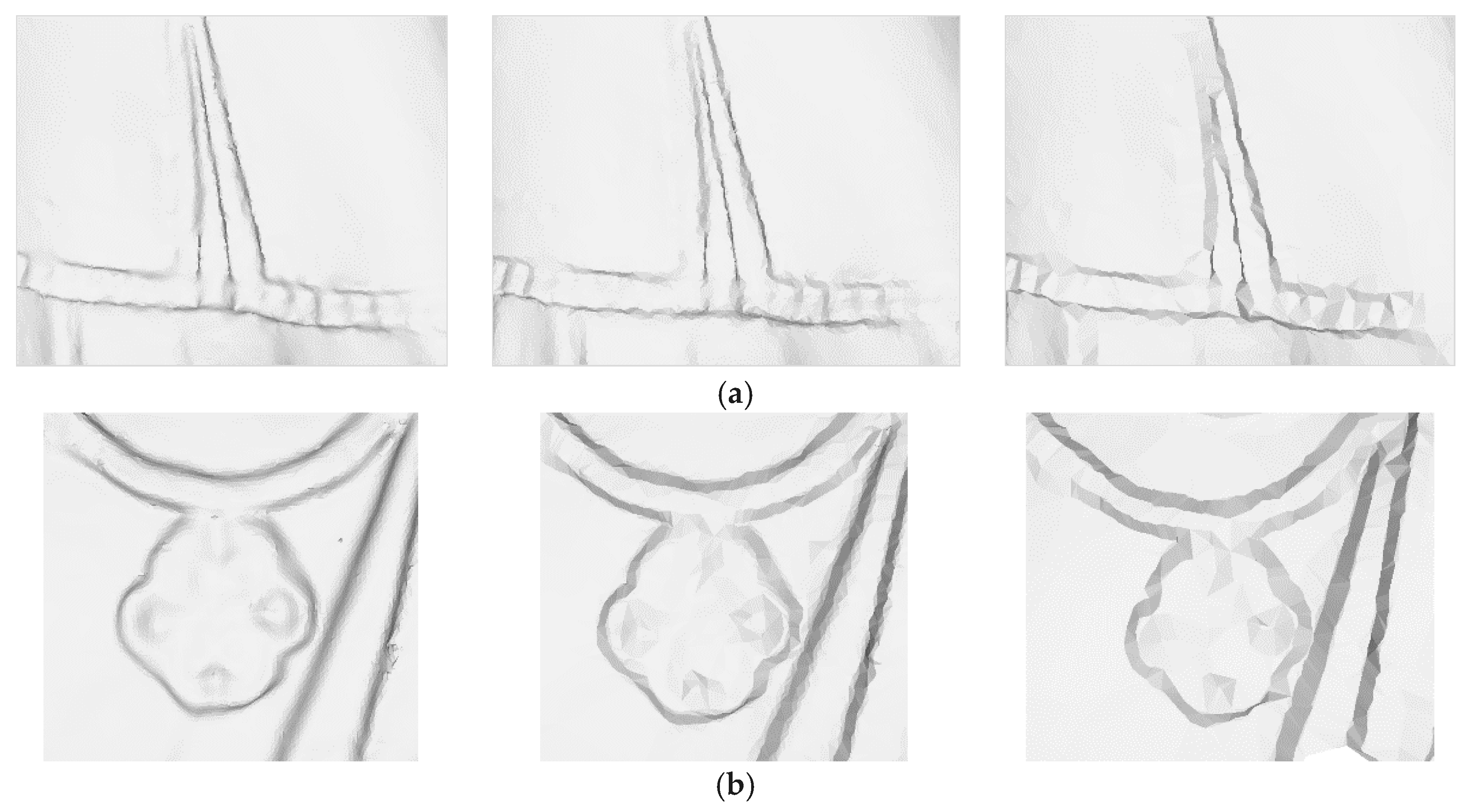

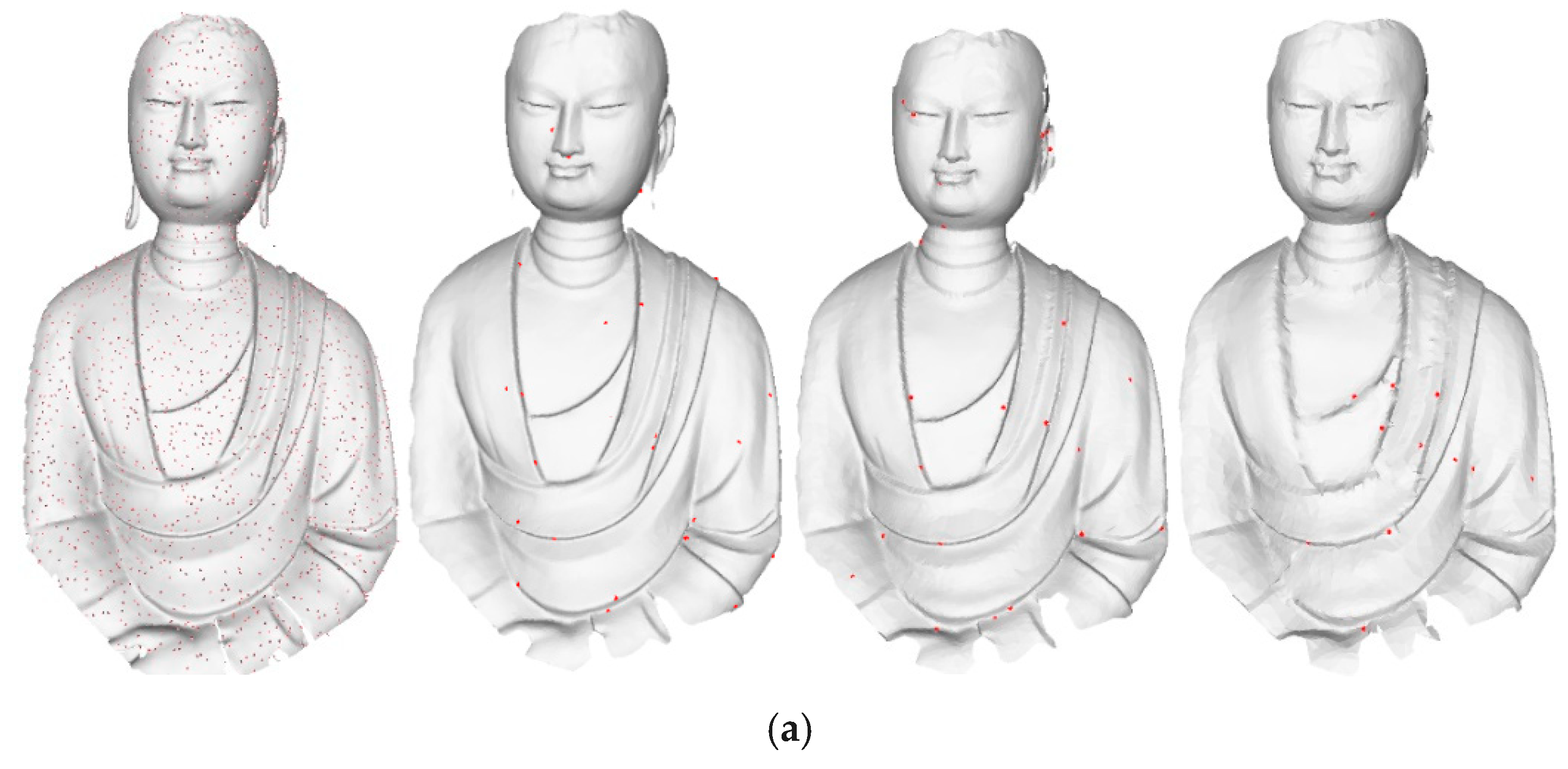

4.3. Robustness to Noise

4.4. Analysis of Optimal Level Selection and Comparison with Other Methods

5. Conclusions and Future Work

Author Contributions

Funding

Conflicts of Interest

| Specification | Handyscan 3D | Riegl VZ-400 | Faro Focus X330 |
|---|---|---|---|
| Range | 300 mm | 800 m | 0.6–330 m |
| Measurement speed (pts/s) | 25,000 | 500,000 | 244,000 |
| Accuracy | 0.05 mm | 4 mm | 2 mm |
| Field of view (vertical/horizontal) | — | 100°/360° | 300°/360° |
| Depth of field | 250 mm | — | — |
| Laser pulse repetition rate | — | 1.2 MHz | — |
| Laser class | Class II (eye-safe) | Class I | Class I |
| Software | VXelements | RiSCAN PRO | FARO SCENE |

| Dataset | Bodhisattva | Ananda | General | Rabbit | Car Data | Indoor Data |
|---|---|---|---|---|---|---|
| Sensor | Handyscan 3D | Handyscan 3D | Handyscan 3D | Synthetic | Faro X330 | Riegl VZ-400 |
| Size (m³) | 0.55 × 0.35 × 0.12 | 0.58 × 0.35 × 0.12 | 1.34 × 0.73 × 0.15 | 0.16 × 0.15 × 0.09 | 4.2 × 1.4 × 1.7 | 4.6 × 2.0 × 3.5 |
| Stations | 2 | 3 | 6 | 5 | 1 | 1 |
| Number of points | 142,555 | 169,822 | 408,495 | 35,946 | 468,682 | 338,683 |
| Point span | 0.8 mm | 0.8 mm | 0.8 mm | 1.0 mm | 1.0 cm | 1.0 cm |
| Redundancy | High | High | High | Medium | Medium | High |
| Data type | Points | Points | Points | Points/mesh | Points | Points |

| Noise Number | δ = 2.0: No. | δ = 2.0: M (mm) | δ = 2.0: V (mm) | δ = 4.0: No. | δ = 4.0: M (mm) | δ = 4.0: V (mm) | δ = 6.0: No. | δ = 6.0: M (mm) | δ = 6.0: V (mm) |
|---|---|---|---|---|---|---|---|---|---|
| 1699 | 26 | 0.038 | 0.19 | 23 | 0.53 | 0.43 | 13 | 1.27 | 0.68 |
| 3397 | 83 | 0.12 | 0.32 | 39 | 0.26 | 0.31 | 32 | 0.92 | 0.79 |
| 8492 | 212 | 0.14 | 0.35 | 144 | 0.35 | 0.36 | 77 | 0.87 | 0.68 |

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Zang, Y.; Yang, B.; Liang, F.; Xiao, X. Novel Adaptive Laser Scanning Method for Point Clouds of Free-Form Objects. Sensors 2018, 18, 2239. https://doi.org/10.3390/s18072239