Monitoring of Cardiorespiratory Signals Using Thermal Imaging: A Pilot Study on Healthy Human Subjects

Abstract

1. Introduction

2. Methodology

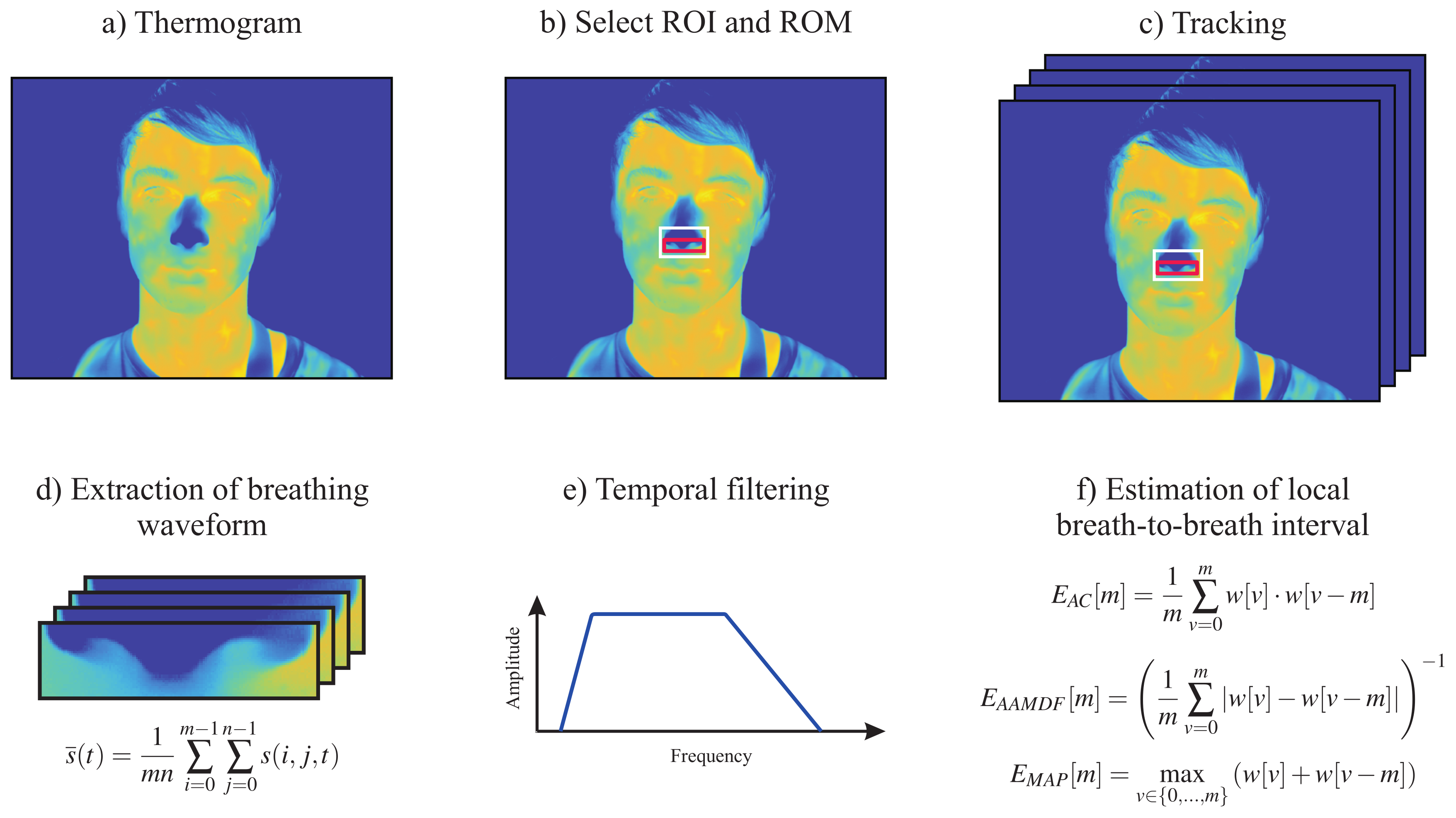

2.1. Respiratory Rate

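- Adaptive window autocorrelation: The adaptive window autocorrelation $R_{\mathrm{corr}}[m]$ was computed for all interval lengths (discrete lags) $m$ as given by
  $$R_{\mathrm{corr}}[m] = \frac{1}{m}\sum_{v=0}^{m} x[v]\,x[v-m],$$
  where $x[v]$ is the signal sample at offset $v$ from the analysis window center. In summary, $R_{\mathrm{corr}}[m]$ determines the correlation between $m$ samples to the right and to the left of the analysis window center $v = 0$.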

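- Adaptive window average magnitude difference function: The estimator $R_{\mathrm{AMDF}}[m]$, in turn, finds the absolute difference between samples according to
  $$R_{\mathrm{AMDF}}[m] = \left(\frac{1}{m}\sum_{v=0}^{m} \left|x[v] - x[v-m]\right|\right)^{-1}.$$
  Taking the inverse makes $R_{\mathrm{AMDF}}[m]$, like $R_{\mathrm{corr}}[m]$, largest at the most likely interval length.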

- Maximum amplitude pairs: The third and last estimator, $R_{\mathrm{MAP}}[m]$, can be interpreted as an indirect peak detector, as it only concerns the signal amplitude. It is computed as
  $$R_{\mathrm{MAP}}[m] = \max_{v \in \{0,\dots,m\}} \left(x[v] + x[v-m]\right).$$
  It achieves its maximum when two peaks, separated by $m$ samples, are included in the analysis window.
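The three estimators can be illustrated with a minimal NumPy sketch. It assumes `x` is a 1-D, already filtered respiratory signal, `center` is the sample index taken as $v = 0$, and the lag range `m_min` to `m_max` is chosen by the user; the function and parameter names are hypothetical, and the sketch does not show how the estimators are fused or how the window moves along the signal.

```python
import numpy as np


def adaptive_window_estimators(x, center, m_min, m_max):
    """Evaluate R_corr, R_AMDF and R_MAP for every lag m in [m_min, m_max].

    Hypothetical helper for illustration: `x` is a 1-D signal and `center`
    is the sample index treated as v = 0 in the formulas.
    """
    x = np.asarray(x, dtype=float)
    if m_min < 1 or center - m_max < 0 or center + m_max >= len(x):
        raise ValueError("analysis window exceeds the signal bounds")
    lags = np.arange(m_min, m_max + 1)
    r_corr = np.empty(len(lags))
    r_amdf = np.empty(len(lags))
    r_map = np.empty(len(lags))
    for i, m in enumerate(lags):
        v = np.arange(m + 1)               # v = 0, ..., m
        right = x[center + v]              # x[v]: samples right of the center
        left = x[center + v - m]           # x[v - m]: samples left of the center
        r_corr[i] = np.sum(right * left) / m
        amdf = np.sum(np.abs(right - left)) / m
        # Inverse of the mean absolute difference, so a peak marks a likely lag
        r_amdf[i] = 1.0 / amdf if amdf > 0 else np.inf
        r_map[i] = np.max(right + left)    # largest amplitude pair m samples apart
    return lags, r_corr, r_amdf, r_map


if __name__ == "__main__":
    fs = 10.0                              # assumed sampling rate (Hz)
    n = np.arange(300)
    x = np.sin(2 * np.pi * 0.25 * n / fs)  # synthetic 15 breaths/min, period 40 samples
    lags, *estimators = adaptive_window_estimators(x, center=150, m_min=25, m_max=60)
    for name, r in zip(("corr", "amdf", "map"), estimators):
        m_best = lags[np.argmax(r)]
        print(f"{name}: lag {m_best} samples -> {60.0 * fs / m_best:.2f} breaths/min")
```

In this synthetic example the lag range is kept below two periods so that the repetition at $m = 80$ does not compete with the true period. $R_{\mathrm{AMDF}}$ and $R_{\mathrm{MAP}}$ peak at $m = 40$, whereas $R_{\mathrm{corr}}$ can land a lag away, partly because its $(m+1)$-term sum is normalized by $1/m$; this spread between estimators is one motivation for evaluating several of them.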

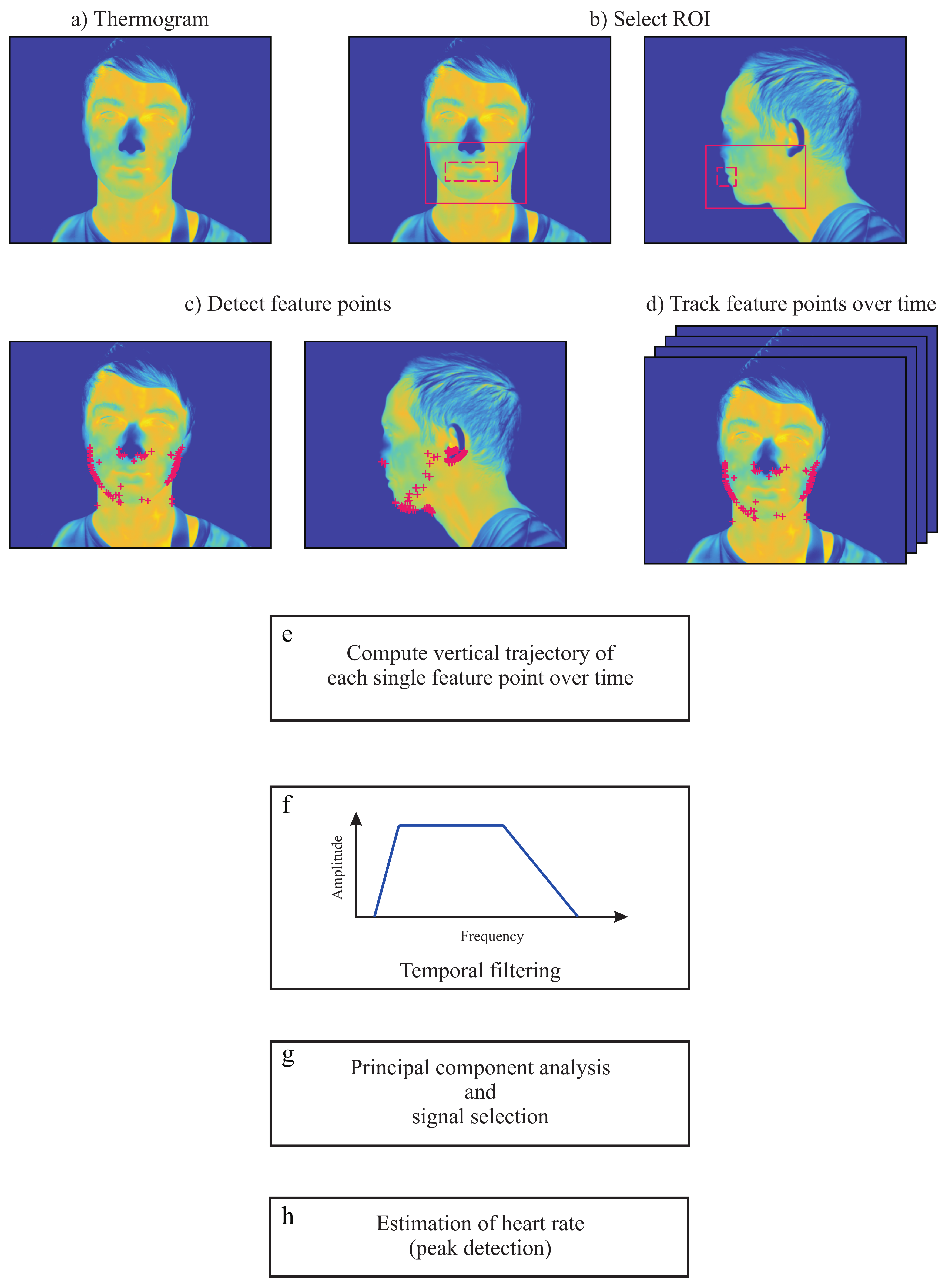

2.2. Heart Rate

2.2.1. Image Preprocessing

2.2.2. Region Selection

2.2.3. Selection and Tracking of Feature Points

2.2.4. Temporal Filtering

2.2.5. Principal Component Analysis Decomposition

2.2.6. Principal Component Selection

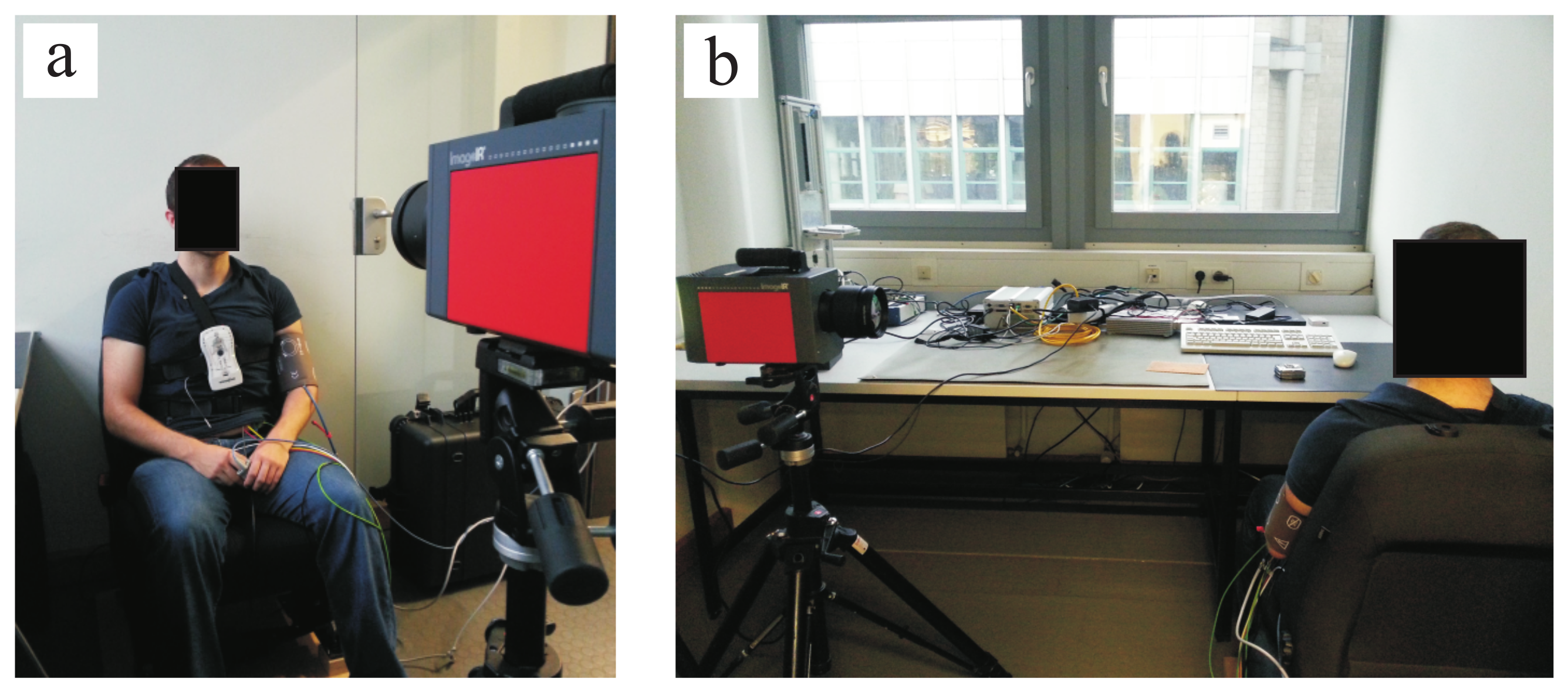

3. Experimental Protocol and Setup

4. Results

4.1. Respiratory Rate

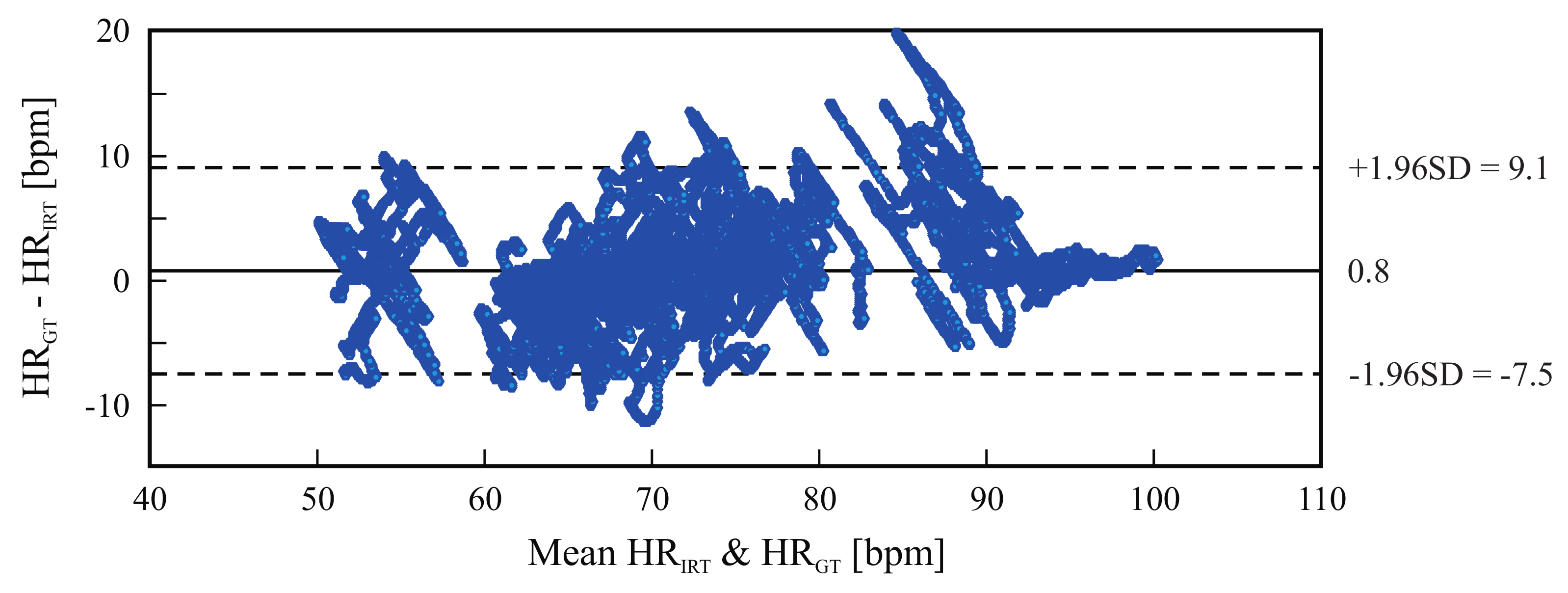

4.2. Heart Rate

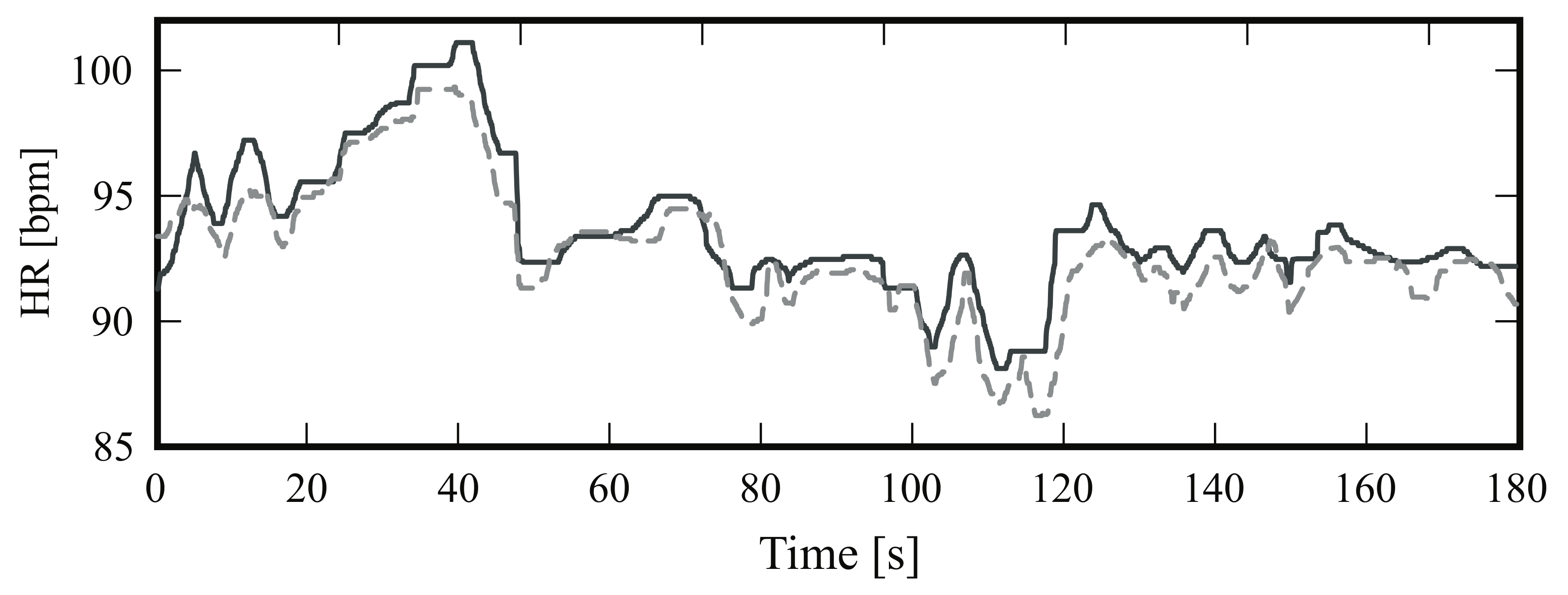

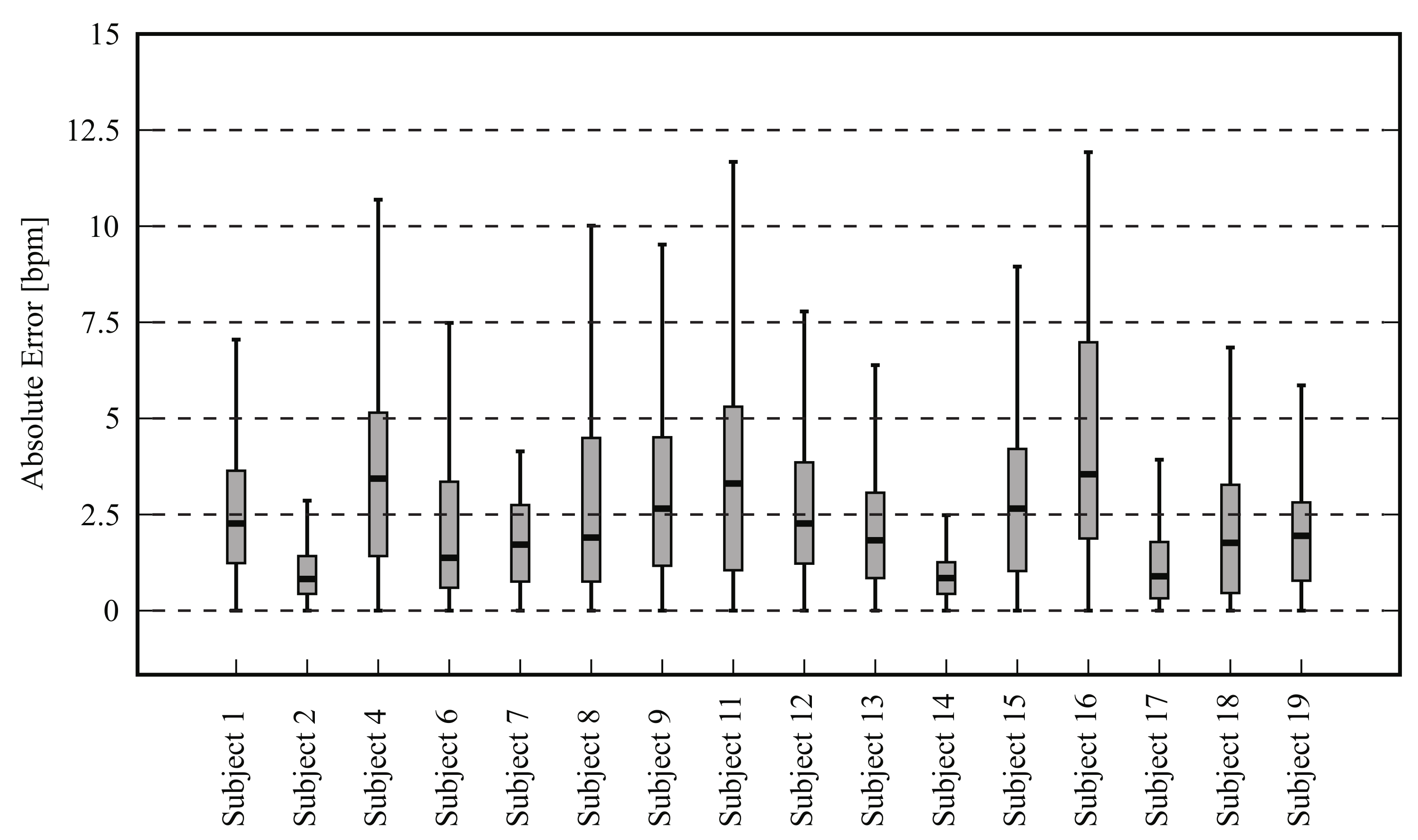

4.2.1. Frontal View

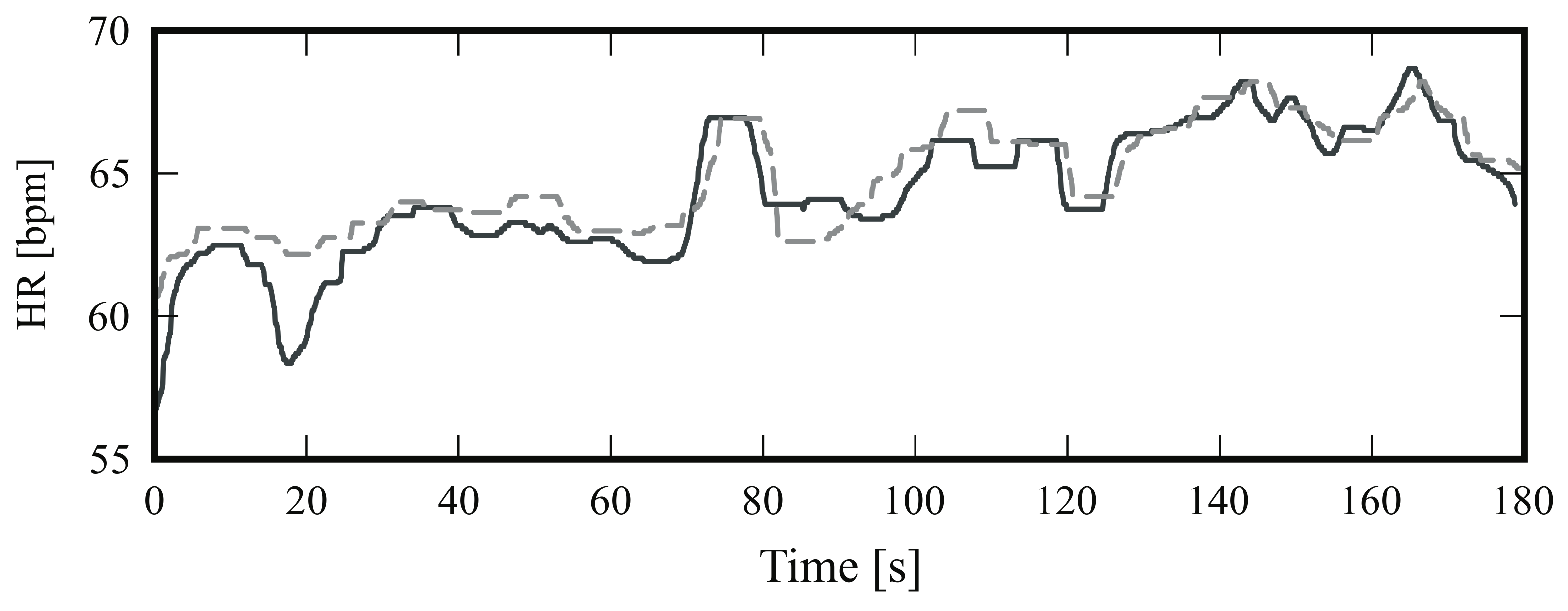

4.2.2. Side View

5. Discussion

6. Conclusions

Author Contributions

Acknowledgments

Conflicts of Interest


| Subject ID | Gender | Age (Years) |
|---|---|---|
| Subject 1 | M | 36 |
| Subject 2 | M | 30 |
| Subject 3 | M | 25 |
| Subject 4 | F | 33 |
| Subject 5 | F | 25 |
| Subject 6 | M | 23 |
| Subject 7 | M | 27 |
| Subject 8 | M | 29 |
| Subject 9 | M | 25 |
| Subject 10 | F | 36 |
| Subject 11 | M | 25 |
| Subject 12 | M | 27 |
| Subject 13 | F | 31 |
| Subject 14 | M | 50 |
| Subject 15 | M | 18 |
| Subject 16 | M | 19 |
| Subject 17 | F | 23 |
| Subject 18 | F | 23 |
| Subject 19 | F | 26 |
| Subject 20 | M | 26 |
| Mean ± SD | | 27.85 ± 6.90 |

| Subject | Mean RR, GT (breaths/min) | Mean RR, IRT (breaths/min) | RMSE (breaths/min) | | |
|---|---|---|---|---|---|
| Subject 1 | 13.94 | 13.51 | 1.28 | 0.07 | 0.13 |
| Subject 3 | 15.46 | 15.34 | 0.46 | 0.02 | 0.05 |
| Subject 4 | 15.52 | 15.15 | 0.96 | 0.05 | 0.09 |
| Subject 5 | 13.10 | 13.16 | 0.59 | 0.03 | 0.08 |
| Subject 6 | 20.62 | 20.73 | 0.57 | 0.02 | 0.06 |
| Subject 7 | 9.04 | 9.10 | 0.24 | 0.02 | 0.05 |
| Subject 8 | 12.78 | 12.43 | 0.62 | 0.03 | 0.05 |
| Subject 9 | 14.21 | 14.13 | 0.58 | 0.03 | 0.06 |
| Subject 10 | 18.15 | 17.77 | 1.13 | 0.05 | 0.11 |
| Subject 11 | 19.39 | 19.43 | 0.70 | 0.03 | 0.05 |
| Subject 12 | 9.70 | 9.62 | 0.77 | 0.05 | 0.14 |
| Subject 14 | 8.79 | 9.10 | 0.81 | 0.05 | 0.10 |
| Subject 16 | 17.33 | 17.26 | 1.52 | 0.07 | 0.15 |
| Subject 17 | 19.33 | 19.57 | 0.74 | 0.03 | 0.07 |
| Subject 18 | 21.20 | 21.09 | 0.48 | 0.02 | 0.04 |
| Subject 20 | 15.71 | 15.81 | 0.47 | 0.02 | 0.04 |
| Mean ± SD | 15.36 ± 3.95 | 15.31 ± 3.93 | 0.71 ± 0.30 | 0.03 ± 0.01 | 0.08 ± 0.03 |

| Subject | Mean HR, GT (bpm) | Mean HR, IRT (bpm) | RMSE (bpm) | | |
|---|---|---|---|---|---|
| Subject 1 | 72.29 | 71.21 | 4.15 | 0.05 | 0.11 |
| Subject 2 | 63.26 | 64.37 | 2.20 | 0.03 | 0.06 |
| Subject 3 | 74.48 | 73.44 | 1.91 | 0.02 | 0.04 |
| Subject 4 | 63.54 | 63.98 | 1.80 | 0.02 | 0.05 |
| Subject 5 | 63.68 | 67.25 | 4.40 | 0.06 | 0.09 |
| Subject 6 | 90.46 | 85.22 | 7.16 | 0.07 | 0.14 |
| Subject 7 | 73.62 | 73.83 | 3.84 | 0.04 | 0.08 |
| Subject 9 | 80.46 | 77.65 | 4.46 | 0.05 | 0.10 |
| Subject 11 | 68.84 | 69.76 | 2.17 | 0.02 | 0.05 |
| Subject 12 | 66.79 | 68.06 | 4.66 | 0.06 | 0.11 |
| Subject 13 | 55.50 | 53.67 | 5.32 | 0.09 | 0.02 |
| Subject 14 | 63.33 | 63.91 | 1.13 | 0.01 | 0.03 |
| Subject 15 | 93.80 | 92.88 | 1.40 | 0.01 | 0.02 |
| Subject 16 | 63.54 | 64.65 | 4.07 | 0.05 | 0.11 |
| Subject 17 | 66.29 | 66.98 | 3.34 | 0.04 | 0.09 |
| Subject 18 | 54.07 | 52.10 | 3.49 | 0.06 | 0.10 |
| Subject 19 | 59.28 | 59.36 | 3.04 | 0.04 | 0.08 |
| Subject 20 | 61.05 | 62.80 | 5.01 | 0.05 | 0.11 |
| Mean ± SD | 68.57 ± 10.52 | 68.40 ± 9.72 | 3.53 ± 1.53 | 0.04 ± 0.02 | 0.08 ± 0.03 |

| Subject | Mean HR, GT (bpm) | Mean HR, IRT (bpm) | RMSE (bpm) | | |
|---|---|---|---|---|---|
| Subject 1 | 66.69 | 66.81 | 2.87 | 0.04 | 0.06 |
| Subject 2 | 63.98 | 64.48 | 1.22 | 0.02 | 0.03 |
| Subject 4 | 62.11 | 65.84 | 5.77 | 0.06 | 0.13 |
| Subject 6 | 87.86 | 85.58 | 4.35 | 0.03 | 0.07 |
| Subject 7 | 70.03 | 68.72 | 2.24 | 0.03 | 0.05 |
| Subject 8 | 65.97 | 66.21 | 3.84 | 0.04 | 0.09 |
| Subject 9 | 78.77 | 76.81 | 3.95 | 0.04 | 0.08 |
| Subject 11 | 65.47 | 68.13 | 6.94 | 0.06 | 0.12 |
| Subject 12 | 69.82 | 69.51 | 3.71 | 0.04 | 0.08 |
| Subject 13 | 54.03 | 52.97 | 2.54 | 0.04 | 0.09 |
| Subject 14 | 64.36 | 64.86 | 1.08 | 0.01 | 0.02 |
| Subject 15 | 88.98 | 86.98 | 4.36 | 0.04 | 0.08 |
| Subject 16 | 60.02 | 63.99 | 5.18 | 0.07 | 0.14 |
| Subject 17 | 65.79 | 66.16 | 1.39 | 0.02 | 0.04 |
| Subject 18 | 51.08 | 49.43 | 2.68 | 0.04 | 0.10 |
| Subject 19 | 61.32 | 63.33 | 2.72 | 0.03 | 0.07 |
| Mean ± SD | 67.27 ± 10.06 | 67.49 ± 9.32 | 3.43 ± 1.61 | 0.04 ± 0.01 | 0.08 ± 0.03 |

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Barbosa Pereira, C.; Czaplik, M.; Blazek, V.; Leonhardt, S.; Teichmann, D. Monitoring of Cardiorespiratory Signals Using Thermal Imaging: A Pilot Study on Healthy Human Subjects. Sensors 2018, 18, 1541. https://doi.org/10.3390/s18051541
