A High-Speed Imaging Method Based on Compressive Sensing for Sound Extraction Using a Low-Speed Camera

Abstract

1. Introduction

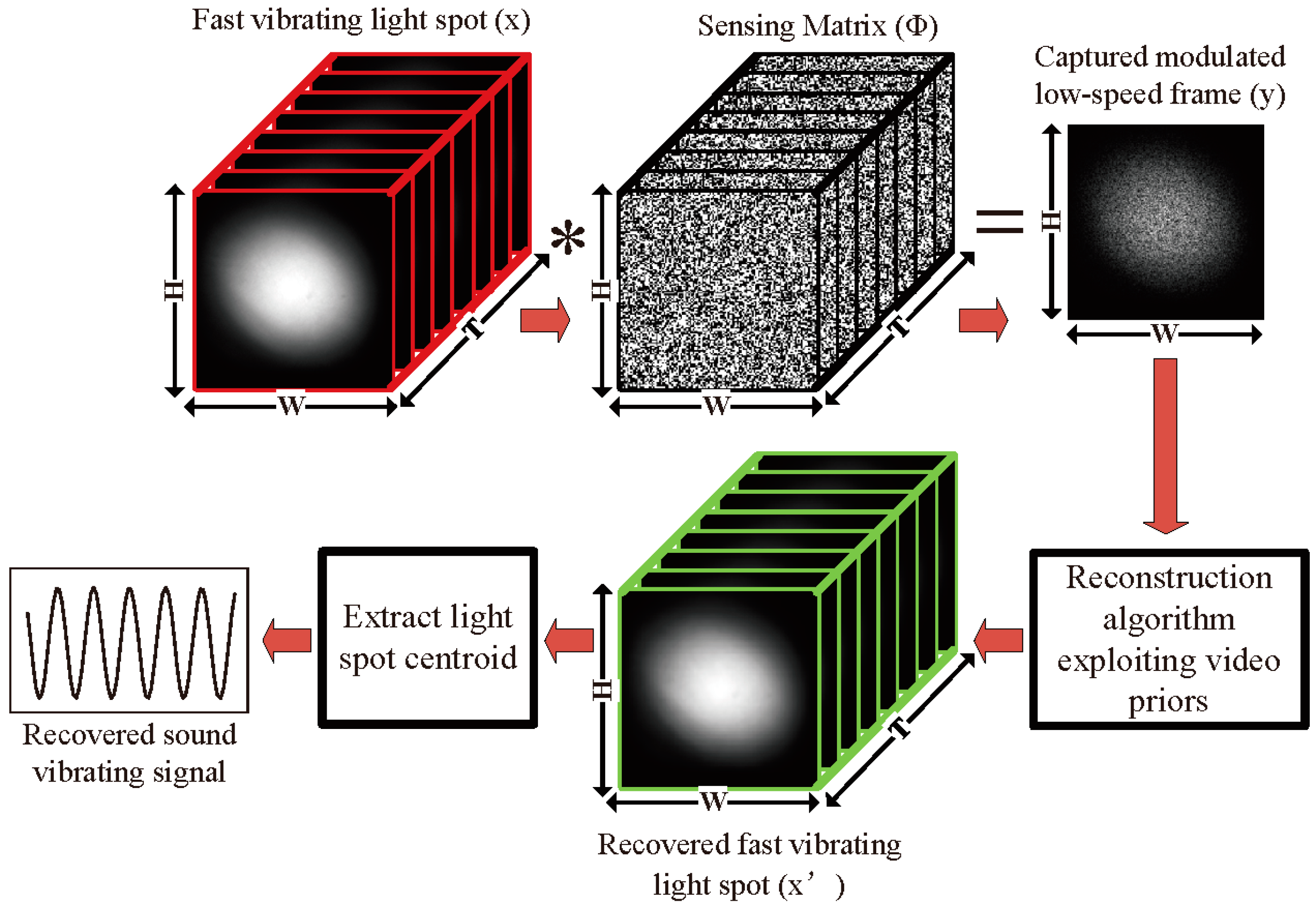

2. Methods

3. Simulations

3.1. Simulation Setup

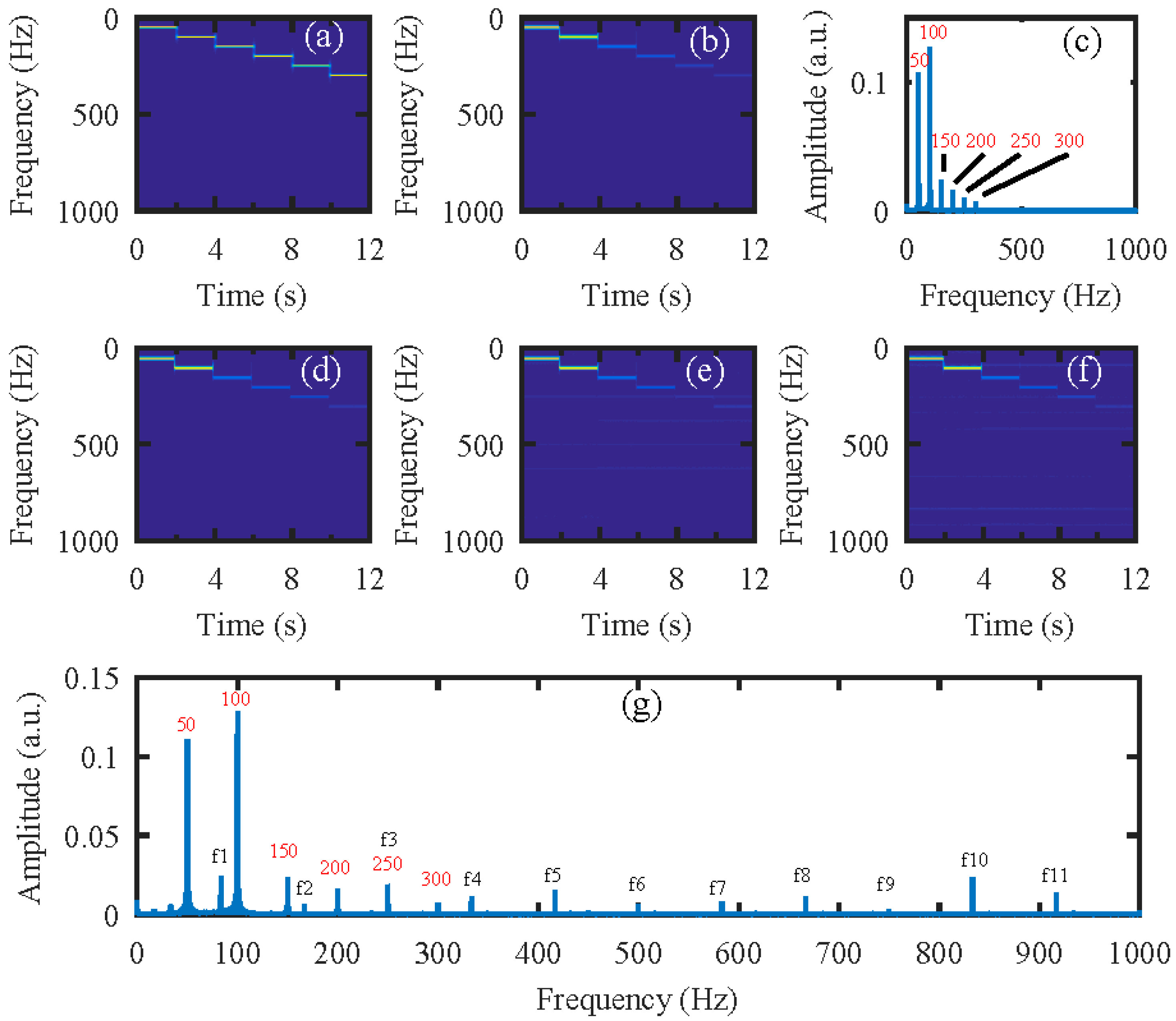

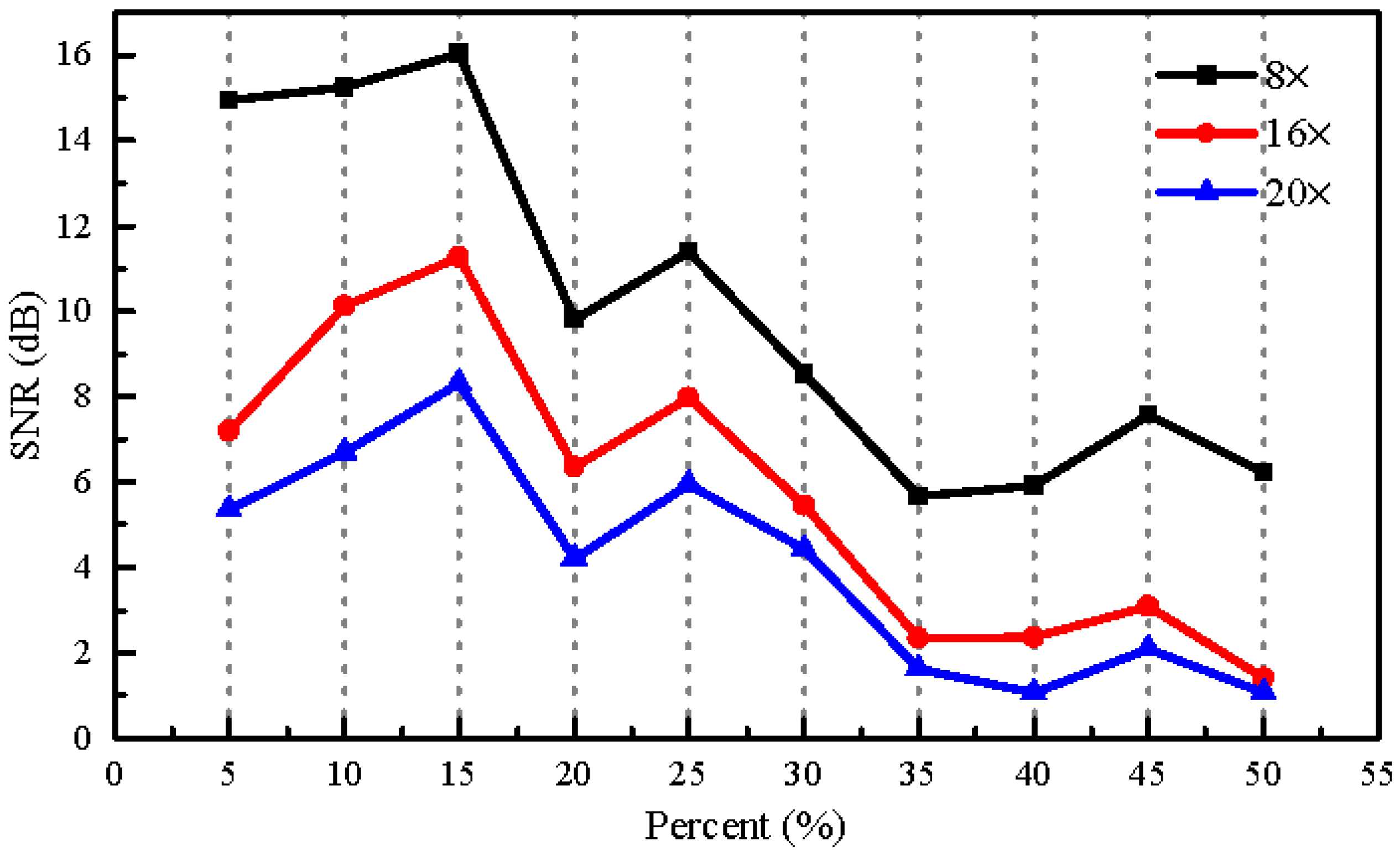

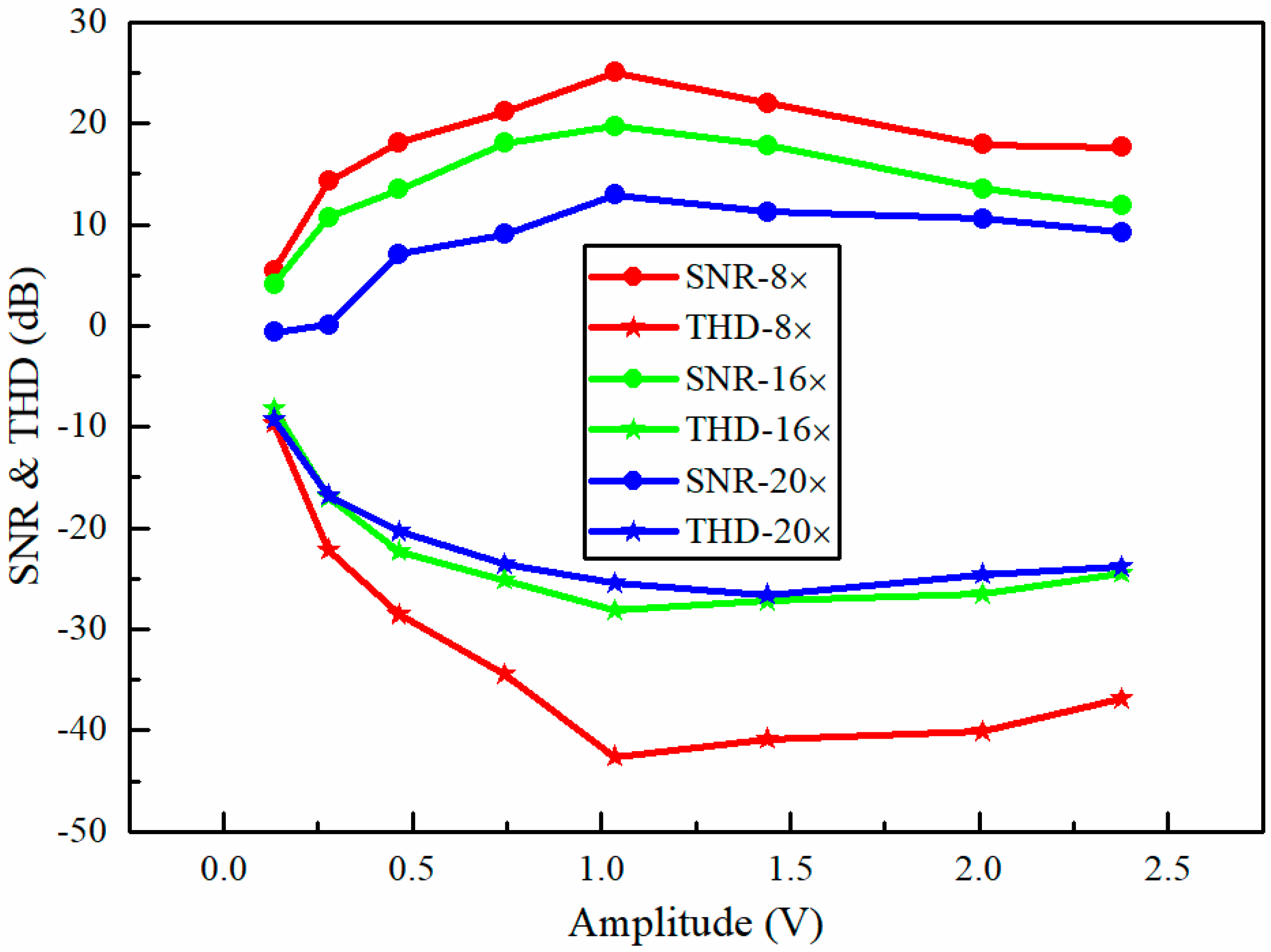

3.2. Simulation Results

4. Experiments

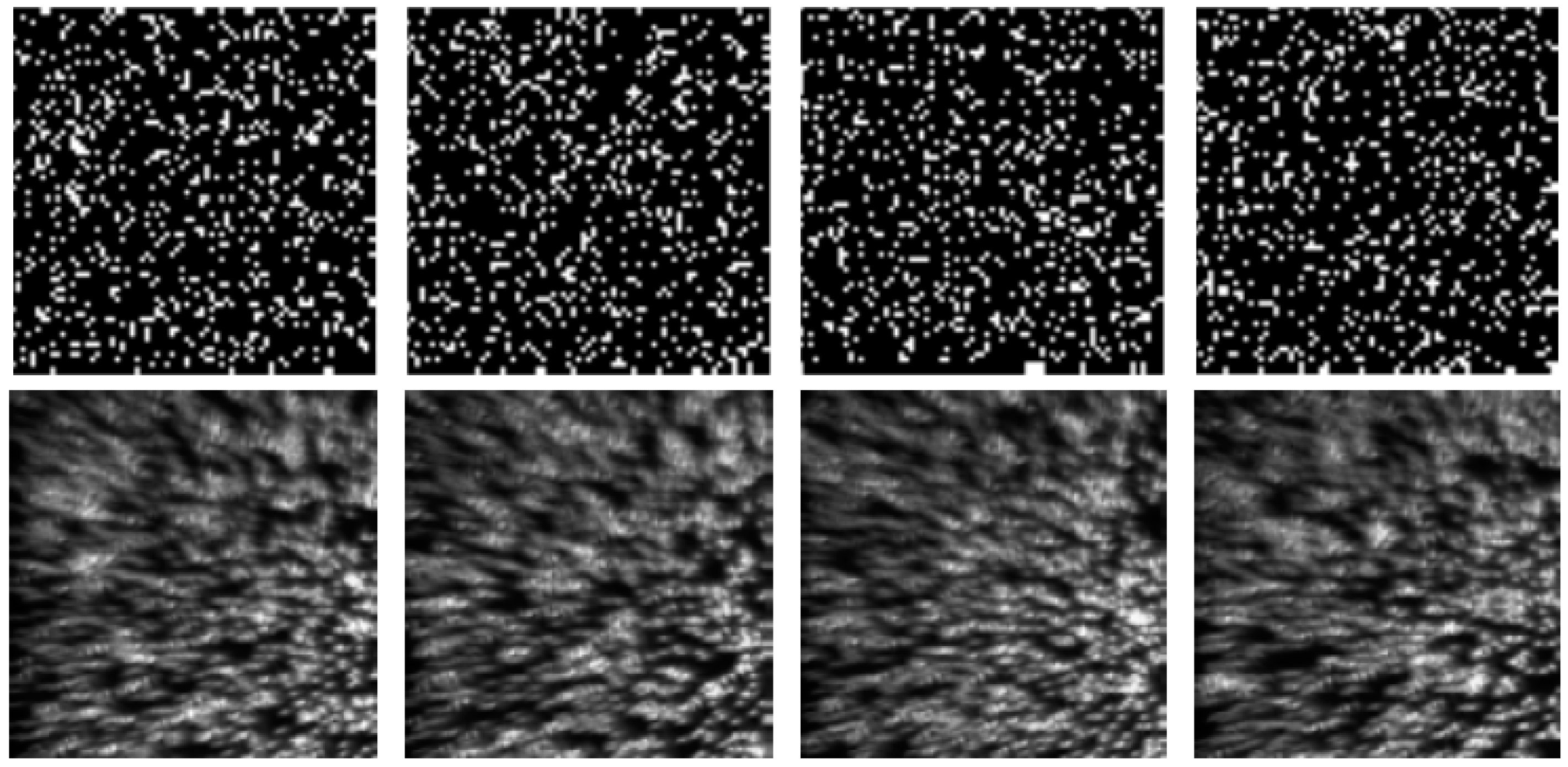

4.1. Experimental Setup

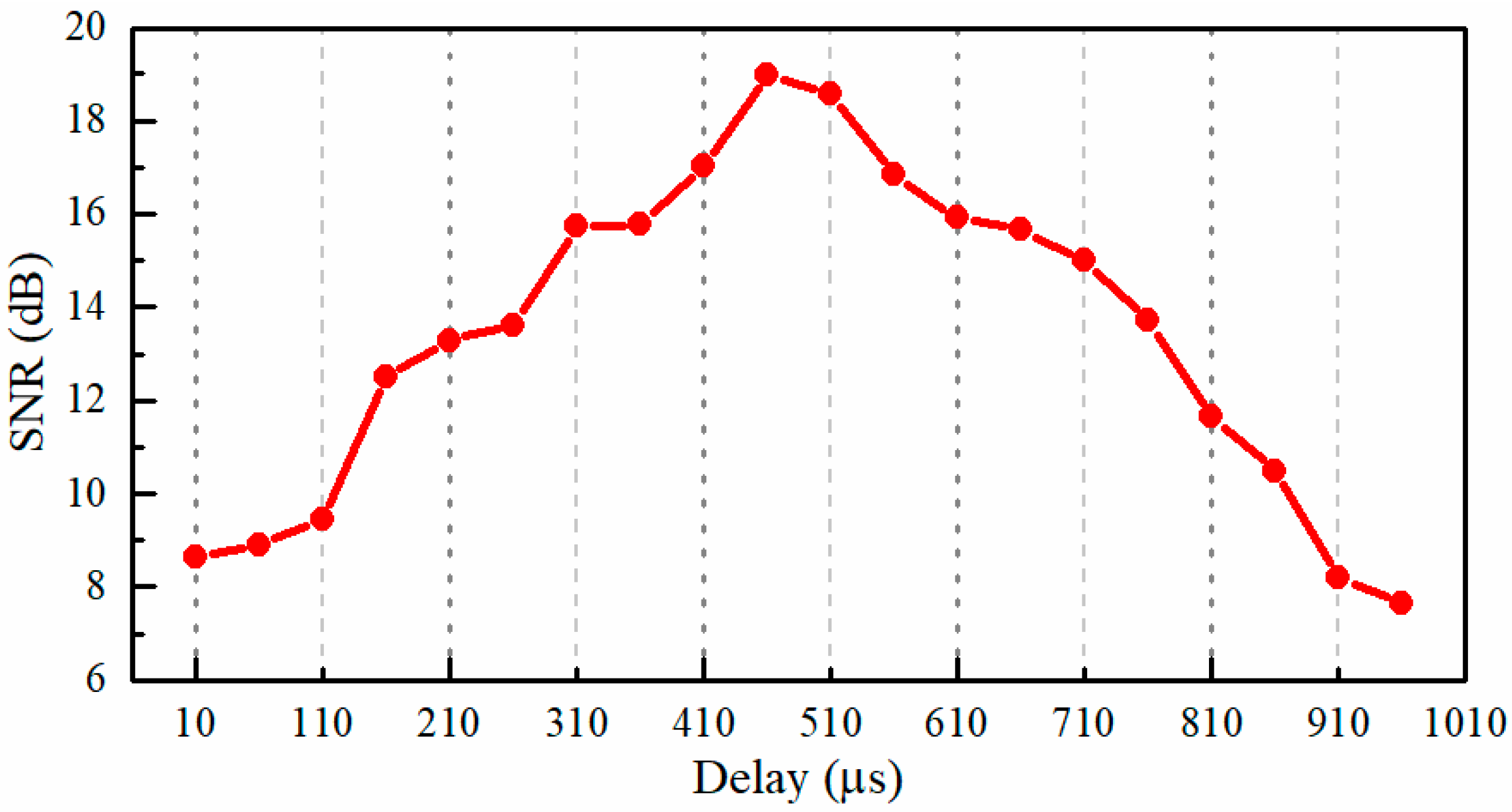

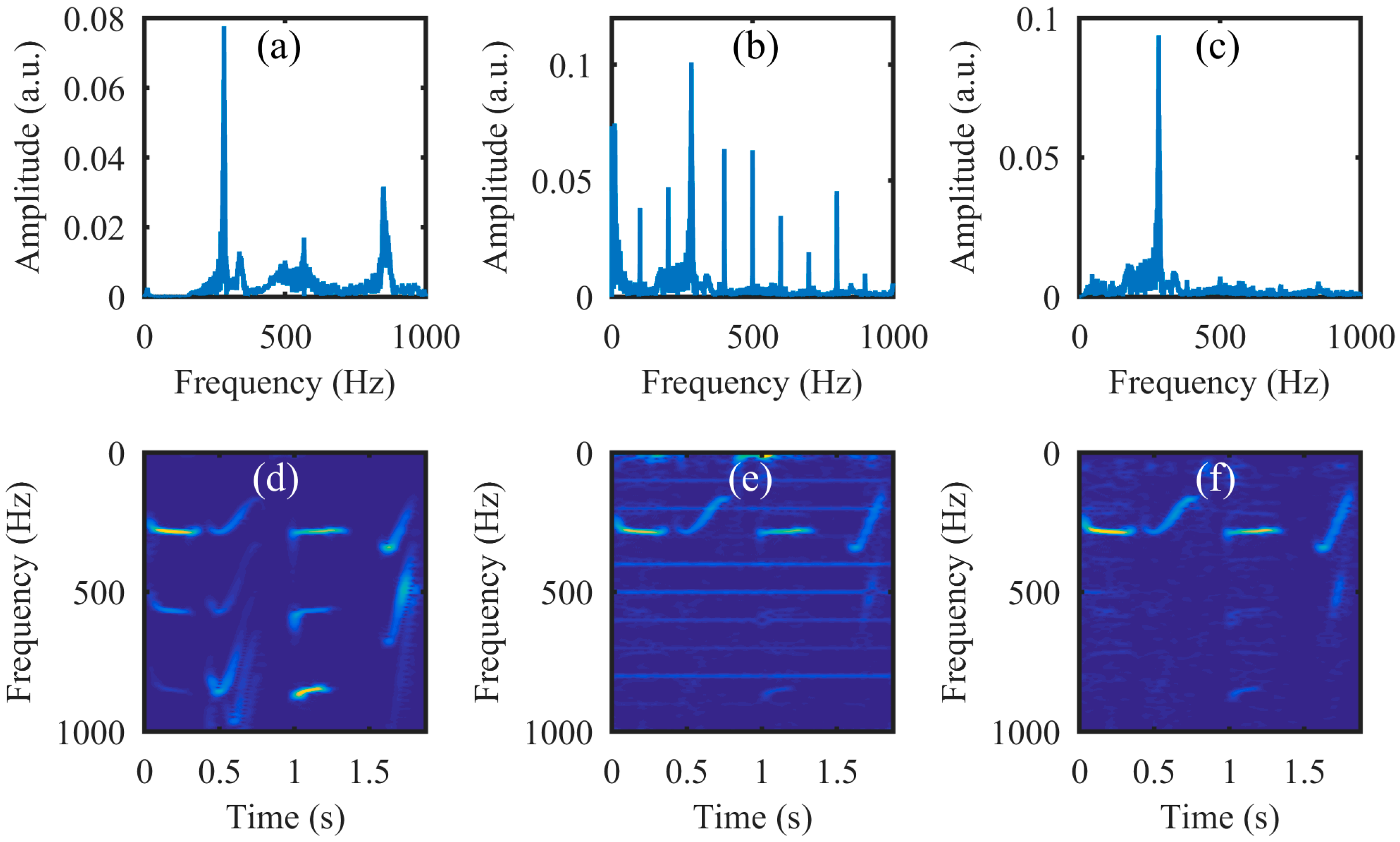

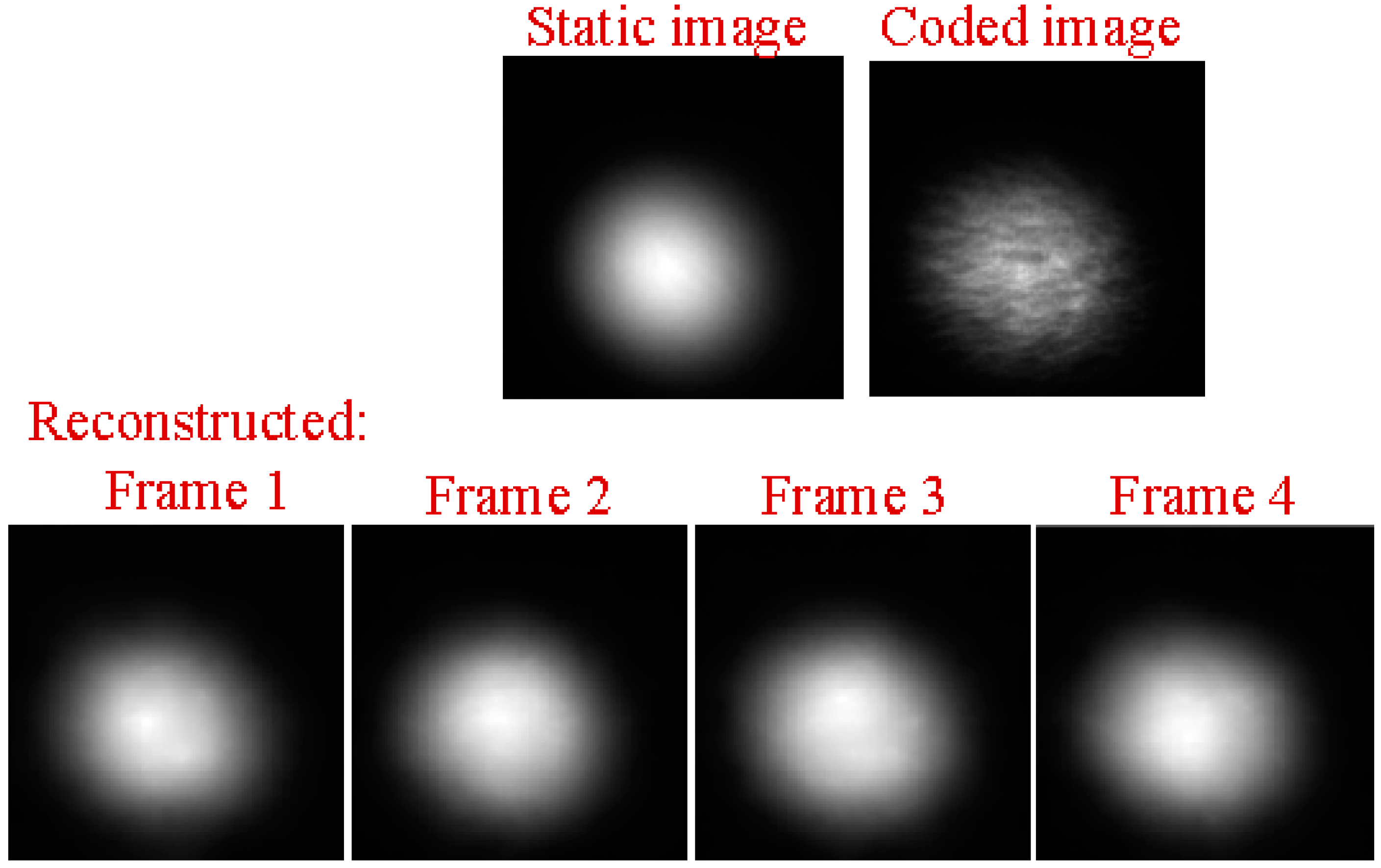

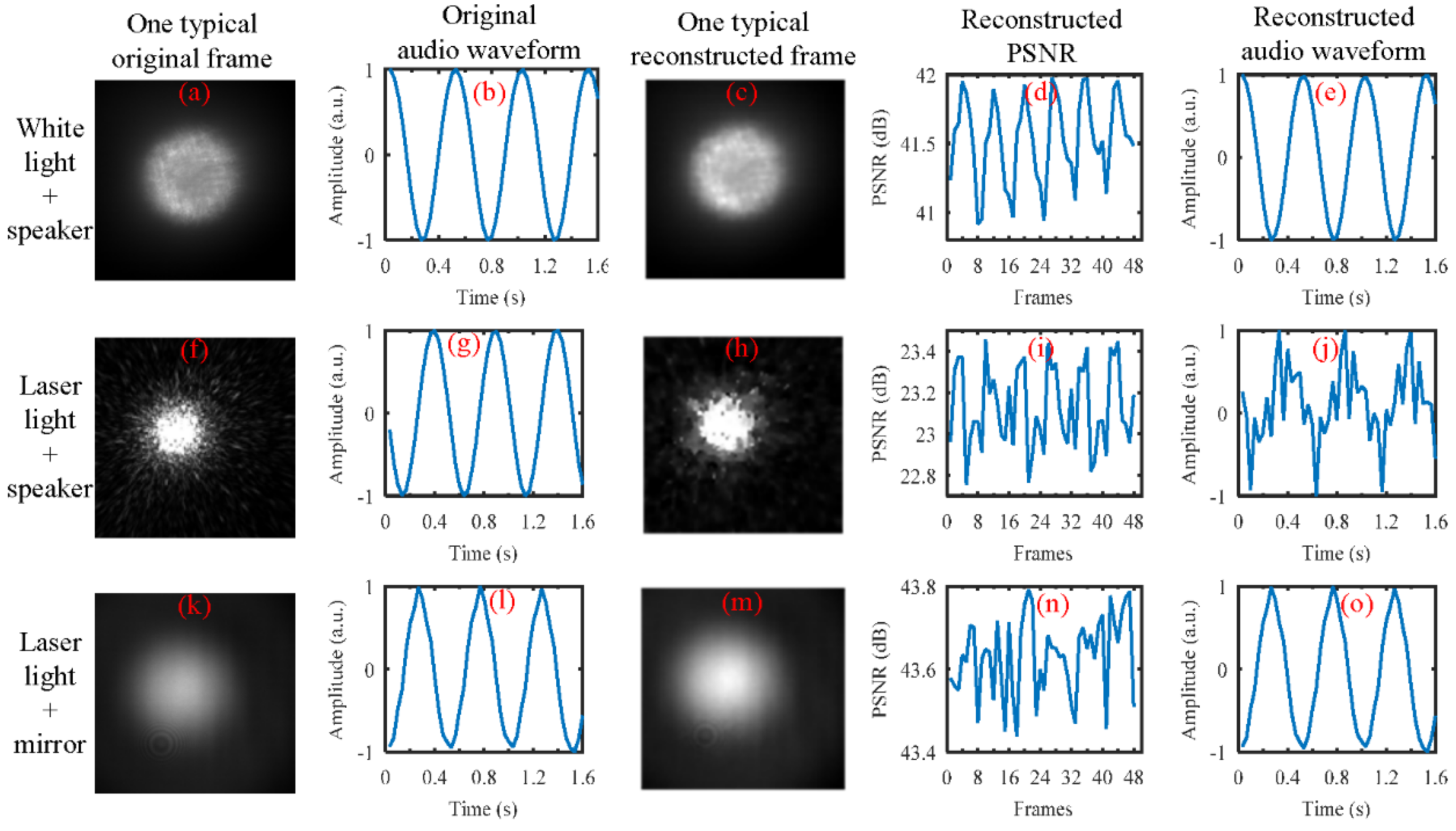

4.2. Experimental Results

5. Discussions

6. Conclusions

Supplementary Materials

Author Contributions

Funding

Conflicts of Interest

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Zhu, G.; Yao, X.-R.; Sun, Z.-B.; Qiu, P.; Wang, C.; Zhai, G.-J.; Zhao, Q. A High-Speed Imaging Method Based on Compressive Sensing for Sound Extraction Using a Low-Speed Camera. Sensors 2018, 18, 1524. https://doi.org/10.3390/s18051524