Presentation Attack Detection for Iris Recognition System Using NIR Camera Sensor

Abstract

1. Introduction

- First, this is the first approach to use a deep CNN model for iPAD, overcoming the limitation of previous studies, which adopted only shallow CNNs. Because the model is trained on a large number of augmented training images, it can extract discriminative features for classifying real and presentation attack images.

- Second, since presentation attack images have special characteristics such as noise or discrete texture patterns, we applied a multi-level local binary pattern (MLBP) method to extract these image features. These handcrafted features complement the deep features and enhance the classification result.

- Third, we combined the detection results based on MLBP and deep features to enhance the accuracy of the iPAD method. The combination was performed using both feature-level fusion and score-level fusion. This is the first approach to combine handcrafted and deep features for iPAD.

- Fourth, all previous research reported iPAD performance on individual datasets, such as printed samples or contact lenses. In contrast, we demonstrate the robustness of our method irrespective of the attack type through an evaluation on the fused datasets of printed samples and contact lenses.

- Finally, we made our trained models and algorithms for iPAD available to other researchers for comparison purposes [17].
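
The difference between the two fusion strategies named in the third contribution can be illustrated with a minimal Python sketch. This is not the authors' implementation: the function names, the L2 normalization step, the weighted-sum rule for score-level fusion, and the 0.5 decision threshold are all assumptions made for illustration only.

```python
import math


def l2_normalize(vec):
    """Scale a feature vector to unit Euclidean length (zero vectors are returned unchanged)."""
    norm = math.sqrt(sum(v * v for v in vec))
    return list(vec) if norm == 0 else [v / norm for v in vec]


def feature_level_fusion(mlbp_features, cnn_features):
    """Feature-level fusion: concatenate the normalized handcrafted and deep
    feature vectors so that a single classifier sees both (hypothetical helper)."""
    return l2_normalize(mlbp_features) + l2_normalize(cnn_features)


def score_level_fusion(mlbp_score, cnn_score, weight=0.5):
    """Score-level fusion: combine the attack scores of two separate classifiers.
    The weighted-sum rule used here is an assumption, not taken from the paper."""
    if not 0.0 <= weight <= 1.0:
        raise ValueError("weight must be in [0, 1]")
    return weight * mlbp_score + (1.0 - weight) * cnn_score


def classify(score, threshold=0.5):
    """Label a fused attack score; the threshold value is an assumption."""
    return "attack" if score >= threshold else "real"


# Toy vectors standing in for an MLBP histogram and a CNN feature vector.
fused_vector = feature_level_fusion([3.0, 4.0], [0.0, 2.0, 0.0])
fused_score = score_level_fusion(0.75, 0.25)
print(len(fused_vector), fused_score, classify(fused_score))
```

In feature-level fusion the combination happens before classification, so one classifier learns from the joint vector; in score-level fusion each feature type keeps its own classifier and only their outputs are merged.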

2. Related Works

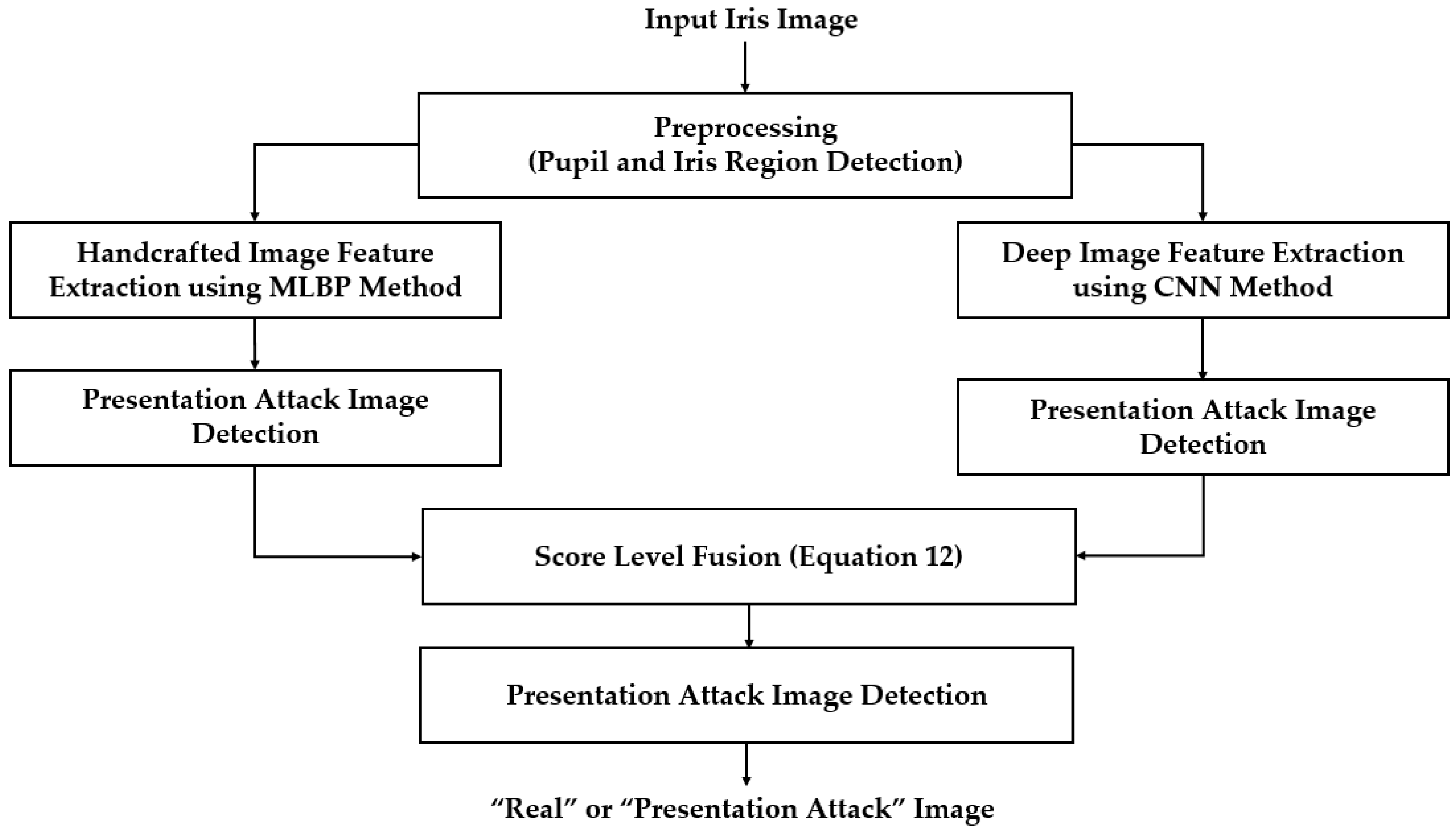

3. Proposed PAD Method for Iris Recognition System

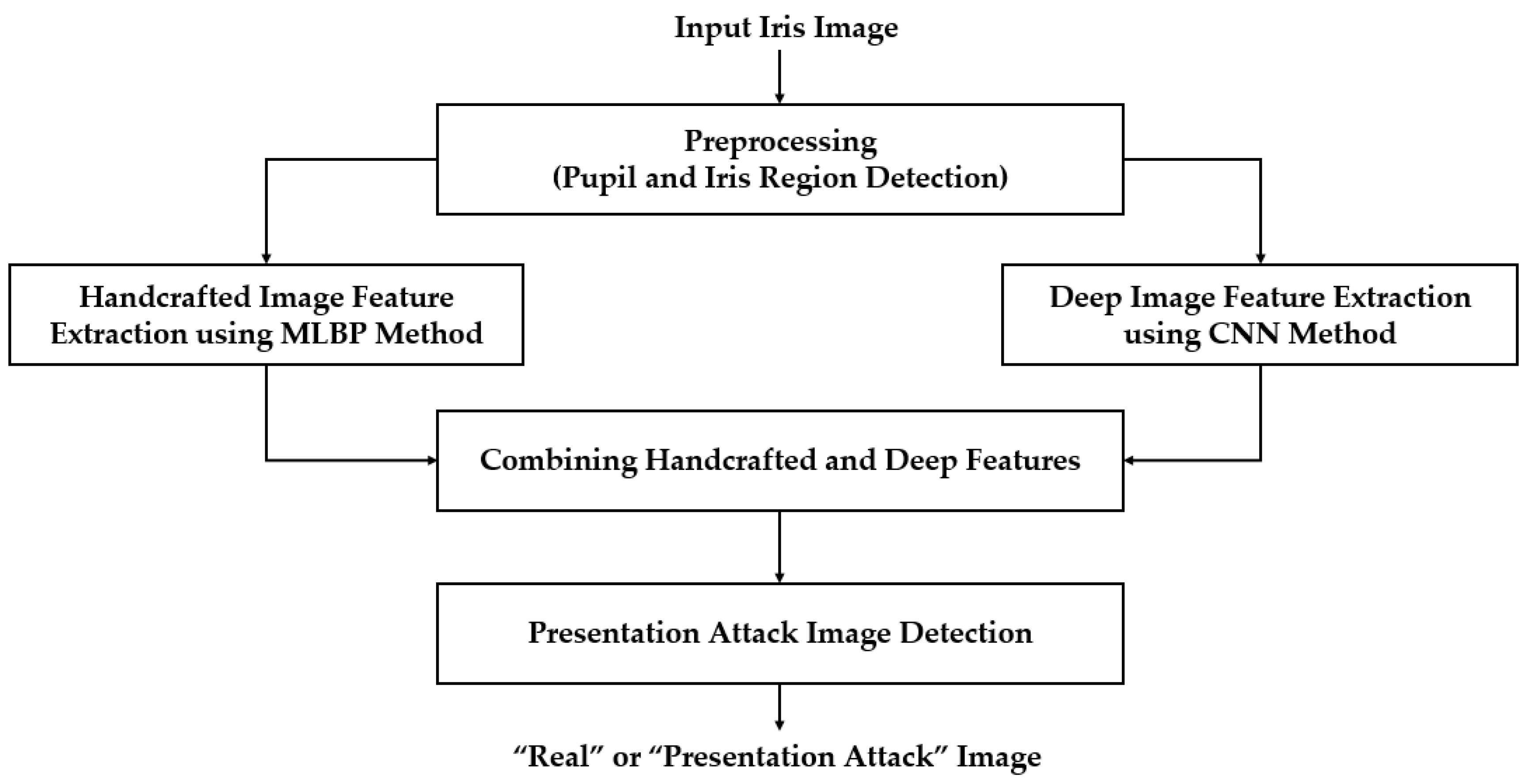

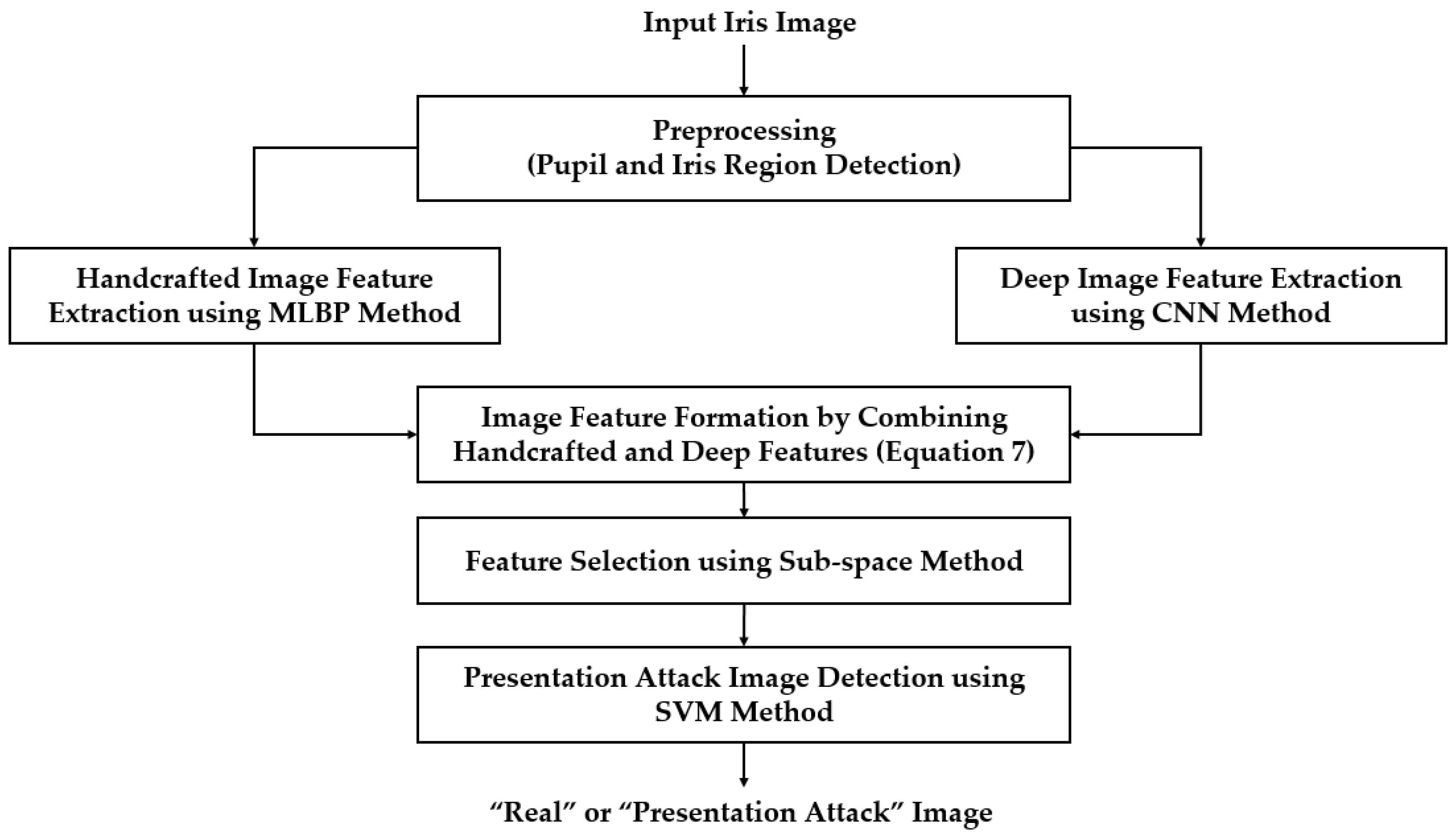

3.1. Overview of Proposed Method

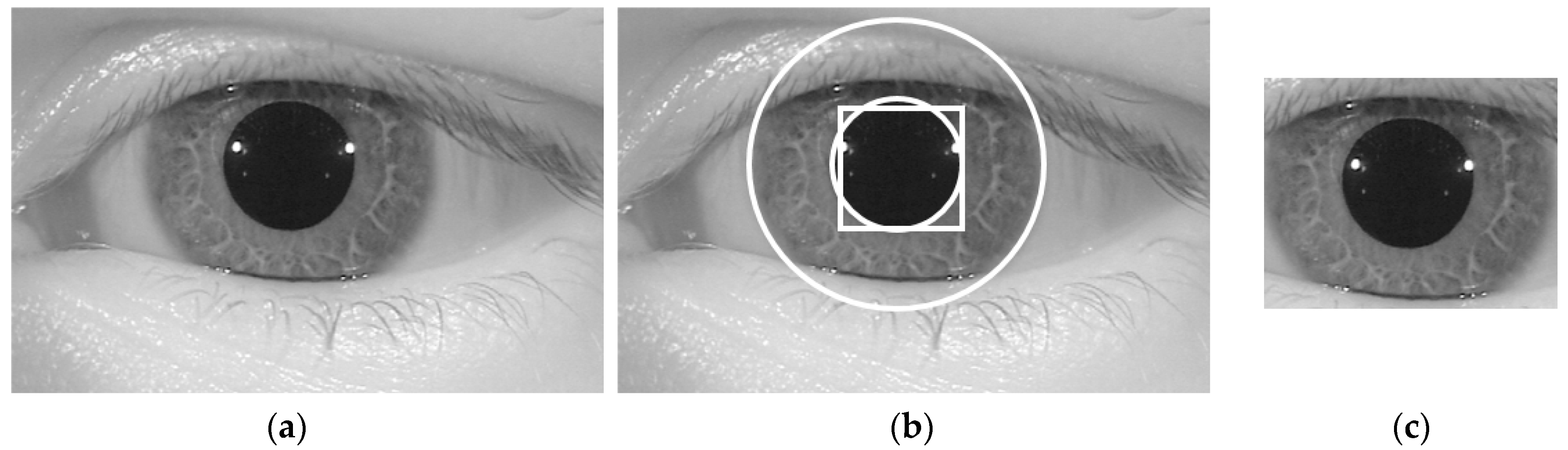

3.2. Iris Region Detection Using Circular Edge Detection Method

3.3. Image Feature Extraction Based on MLBP Method

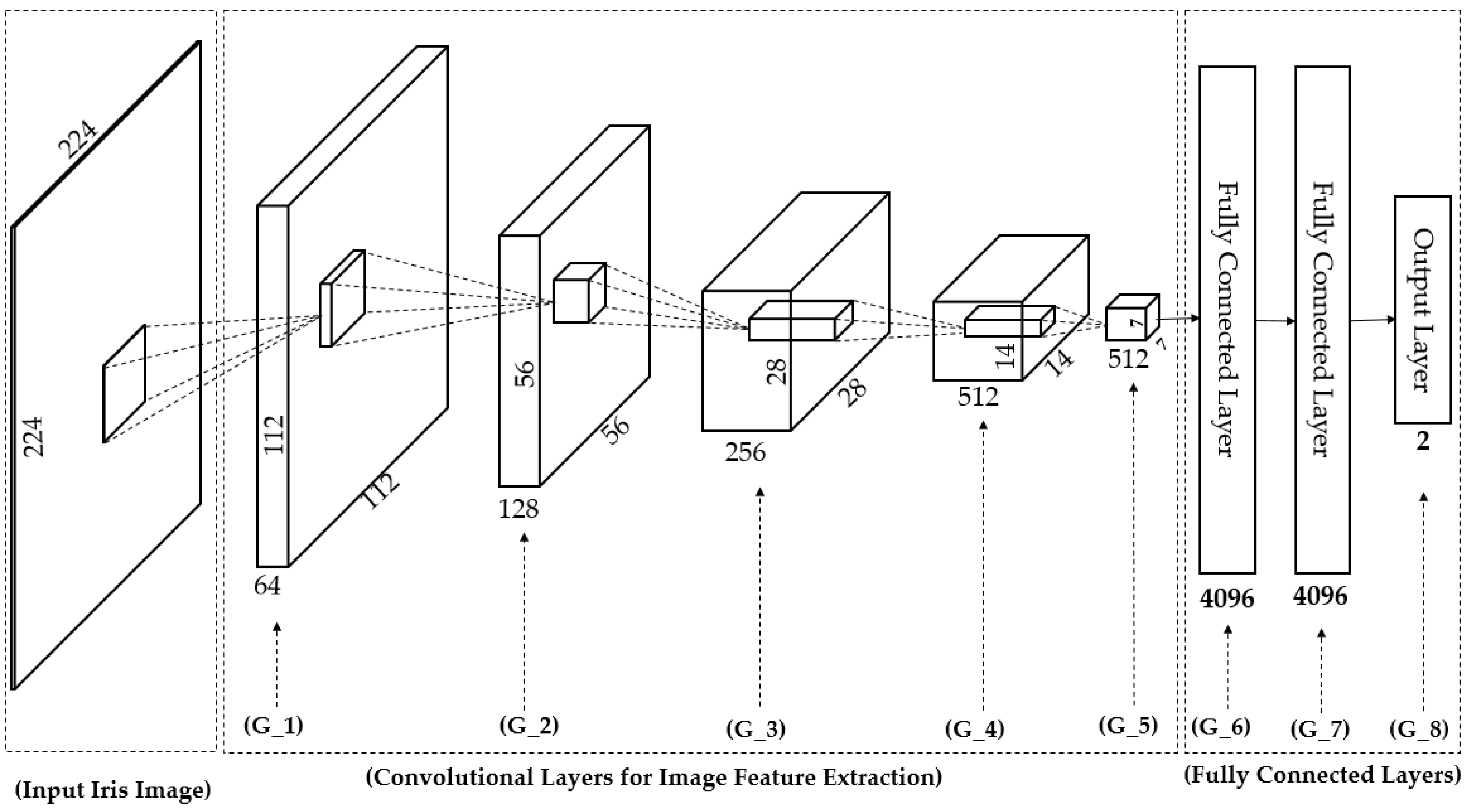

3.4. Image Feature Extraction Based on CNN Method

3.5. Image Feature Extraction and Detection Using SVM Method

4. Experimental Results

4.1. Datasets

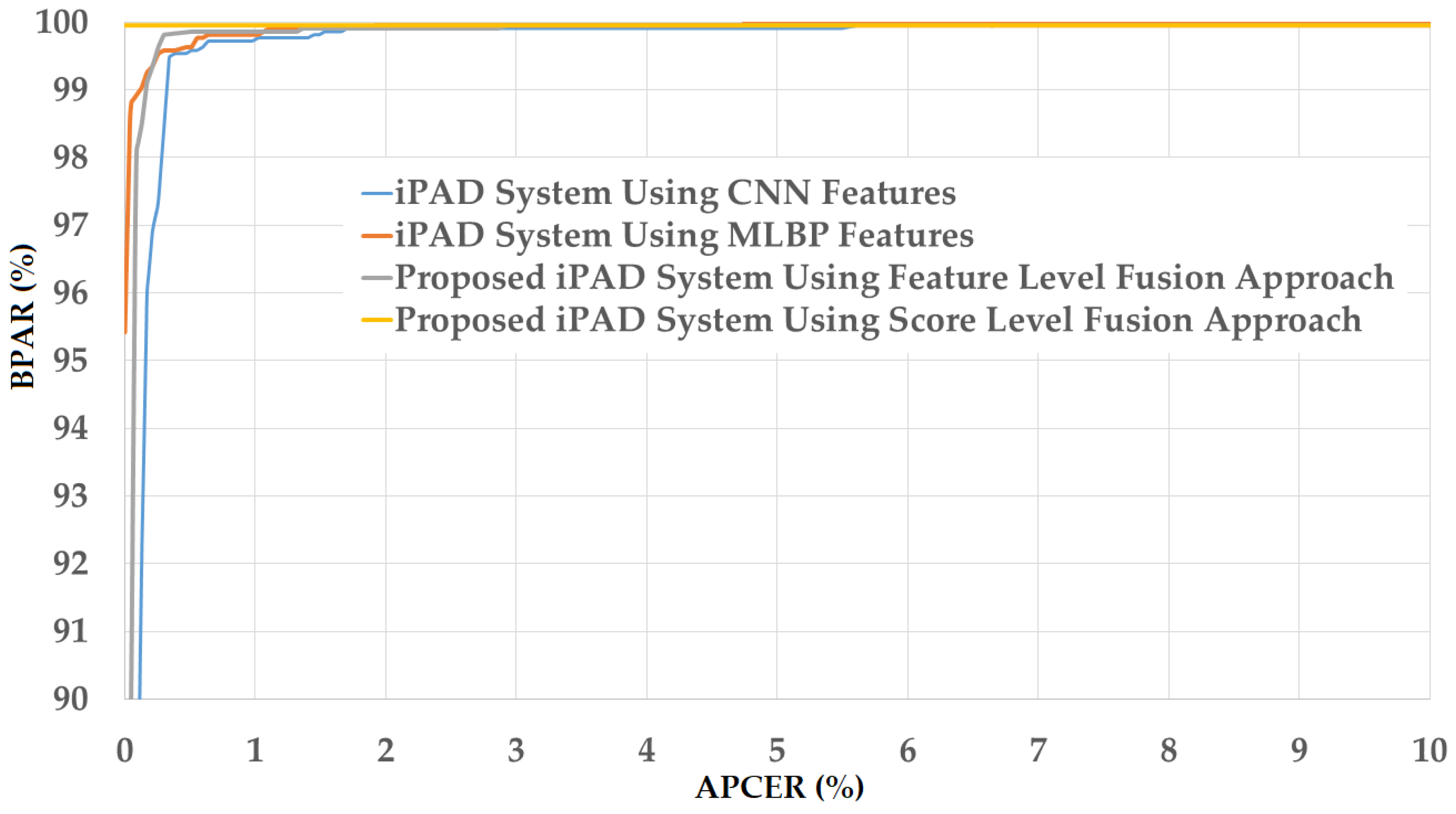

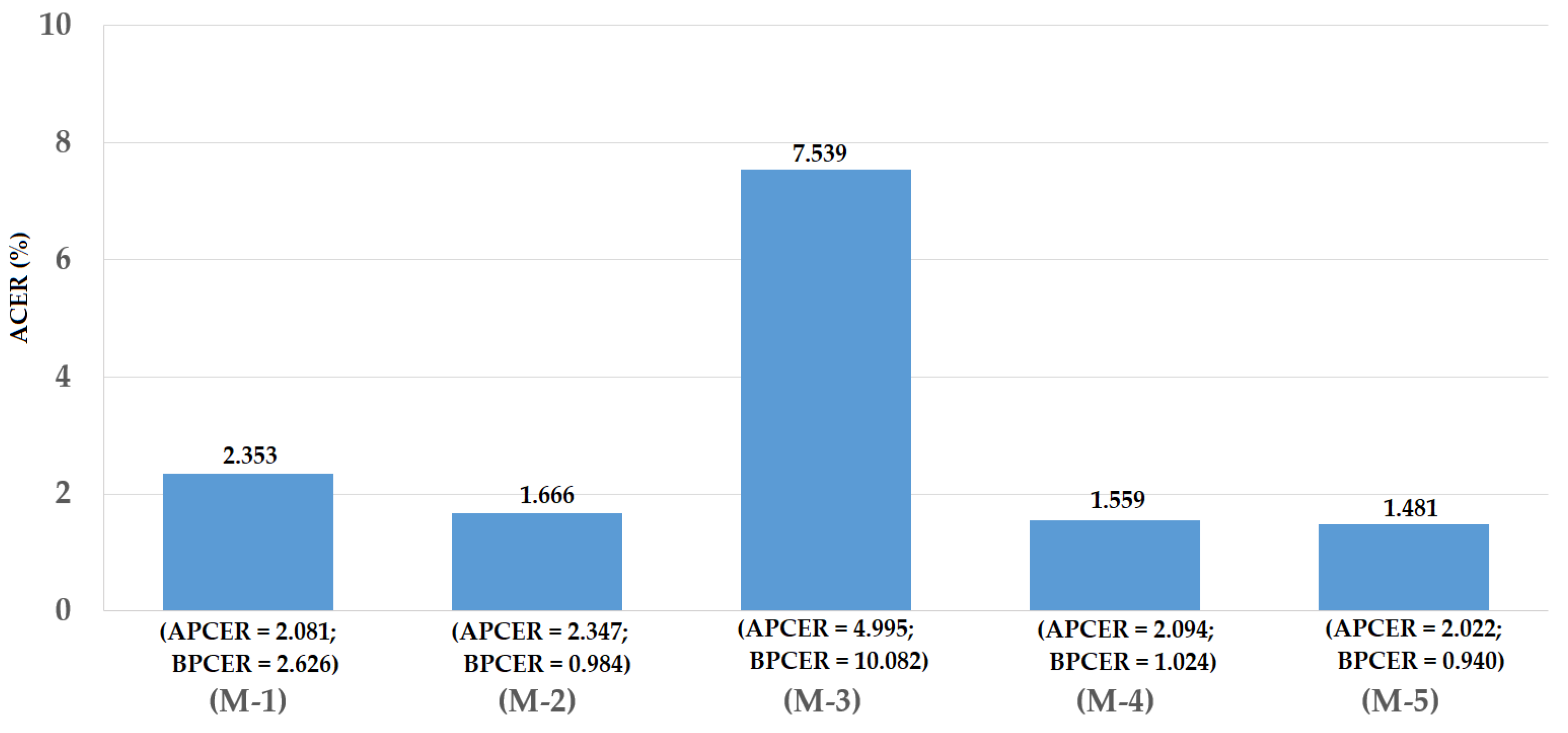

4.2. Detection Performance for Attack Method Based on Printed Samples

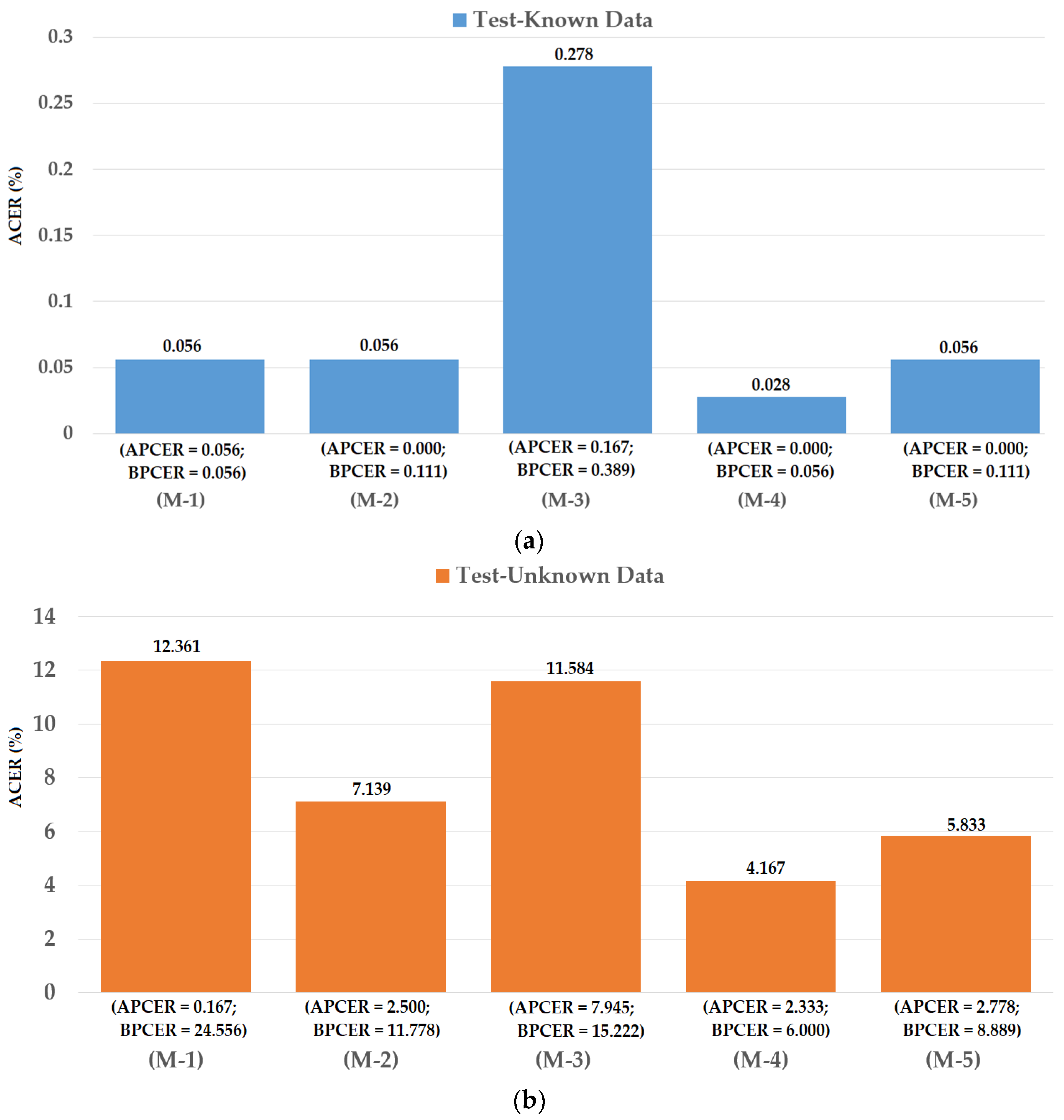

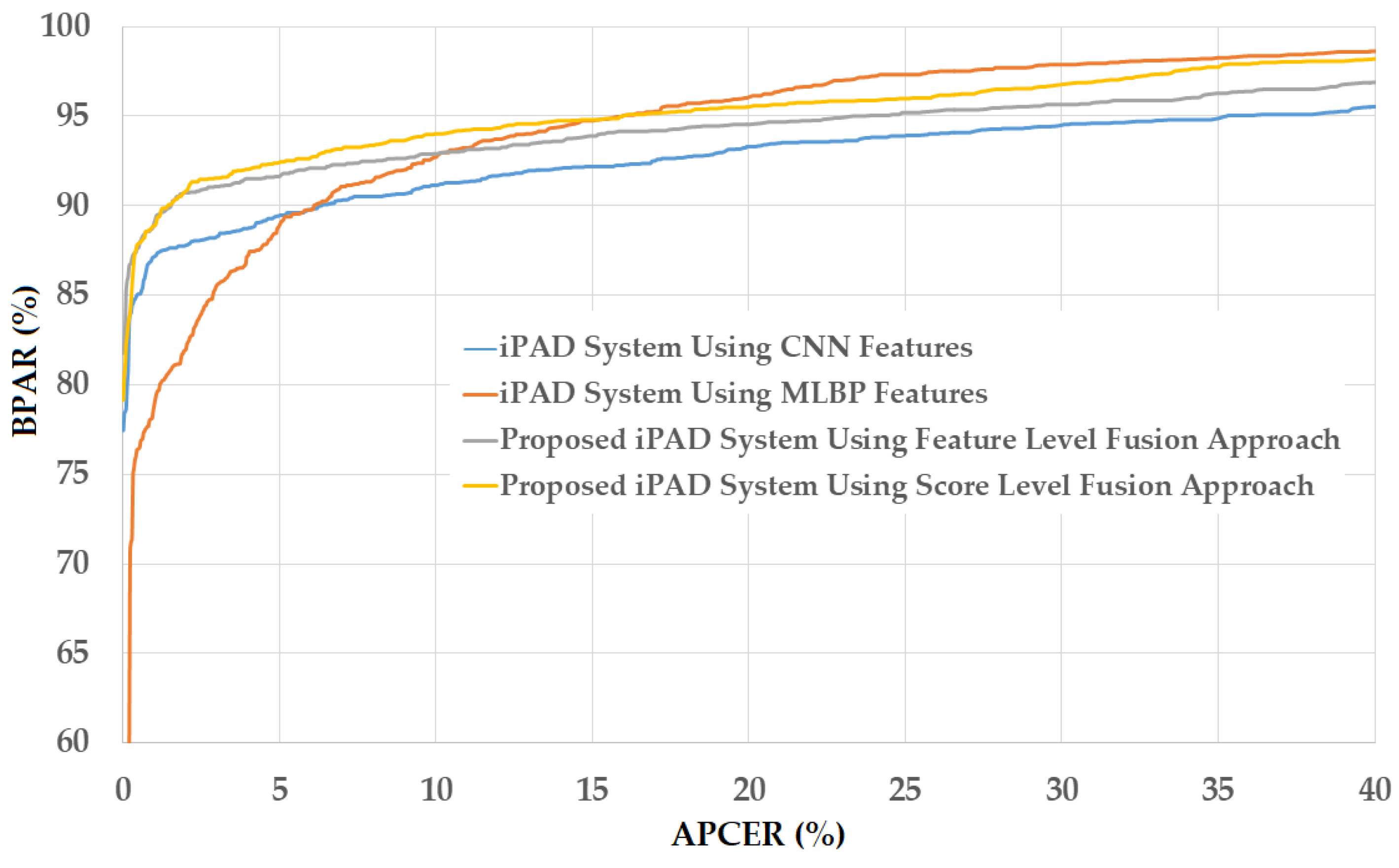

4.3. Detection Performance for Attack Method Based on Contact Lens

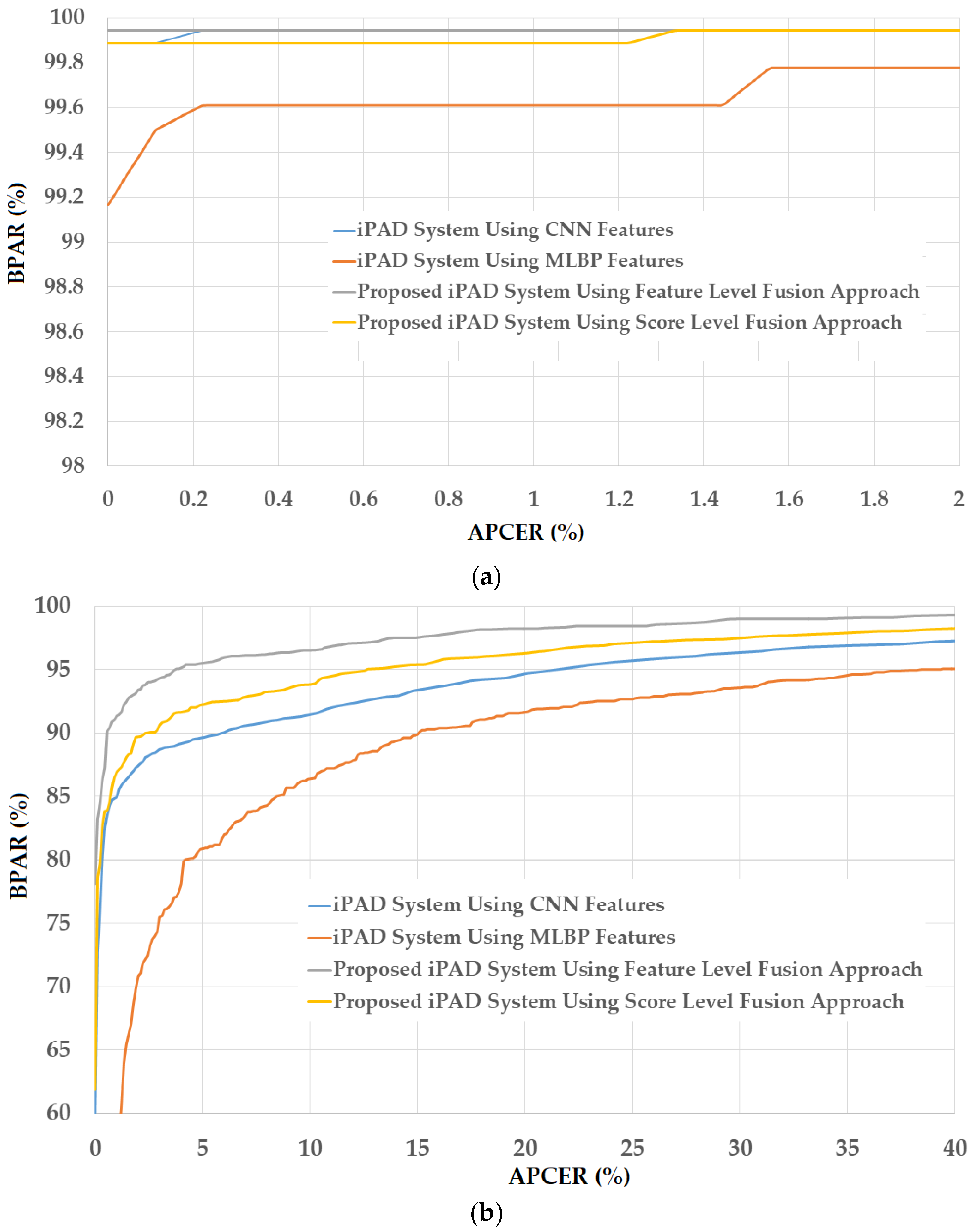

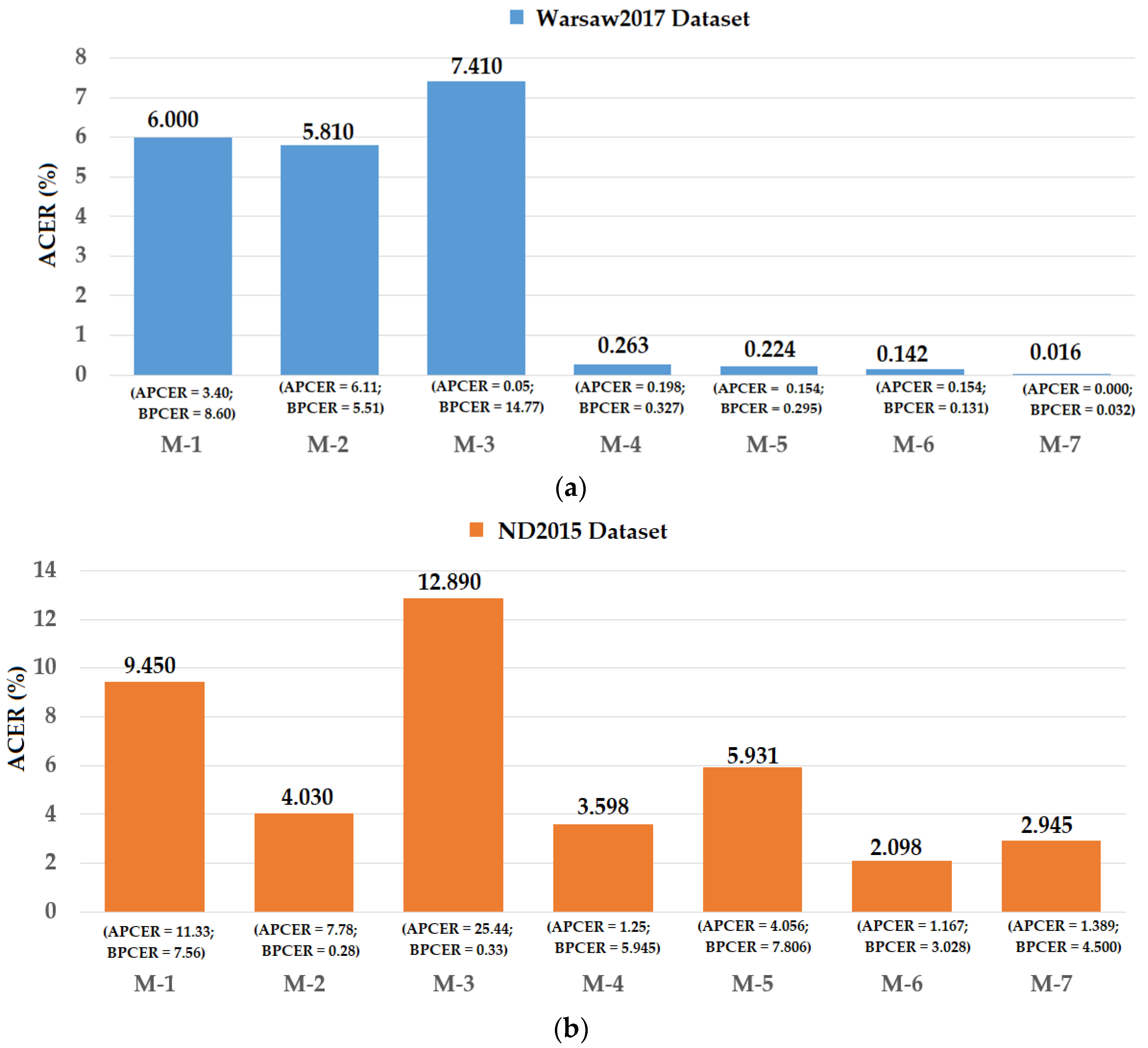

4.4. Detection Performance for Attack Method Based on Both Printed Samples and Contact Lens

4.5. Comparisons and Discussion

5. Conclusions

Author Contributions

Acknowledgments

Conflicts of Interest

References

- Jain, A.K.; Ross, A.; Prabhakar, S. An introduction to biometric recognition. IEEE Trans. Circuits Syst. Video Technol. 2004, 14, 4–20.

- Nguyen, D.T.; Yoon, H.S.; Pham, T.D.; Park, K.R. Spoof detection for finger-vein recognition system using NIR camera. Sensors 2017, 17, 2261.

- Nguyen, K.; Fookes, C.; Jillela, R.; Sridharan, S.; Ross, A. Long range iris recognition: A survey. Pattern Recognit. 2017, 72, 123–143.

- Peralta, D.; Galar, M.; Triguero, I.; Paternain, D.; Garcia, S.; Barrenechea, E.; Benitez, J.M.; Bustince, H.; Herrera, F. A survey on fingerprint minutiae-based local matching for verification and identification: Taxonomy and experimental evaluation. Inf. Sci. 2015, 315, 67–87.

- Pham, T.D.; Park, Y.H.; Nguyen, D.T.; Kwon, S.Y.; Park, K.R. Nonintrusive finger-vein recognition system using NIR image sensor and accuracy analyses according to various factors. Sensors 2015, 15, 16886–16894.

- Lin, C.-L.; Wang, S.-H.; Cheng, H.-Y.; Fan, K.-C.; Hsu, W.-L.; Lai, C.-R. Bimodal biometric verification using the fusion of palmprint and infrared palm-dorsum vein images. Sensors 2015, 15, 31339–31361.

- Mirmohamadsadeghi, L.; Drygajlo, A. Palm-vein recognition with local texture patterns. IET Biom. 2014, 3, 198–206.

- Zhou, H.; Milan, A.; Wei, L.; Creighton, D.; Hossny, M.; Nahavandi, S. Recent advances on single modal and multimodal face recognition: A survey. IEEE Trans. Hum. Mach. Syst. 2014, 44, 701–716.

- Shin, K.Y.; Kim, Y.G.; Park, K.R. Enhanced iris recognition method based on multi-unit iris images. Opt. Eng. 2013, 52, 1–11.

- Nguyen, D.T.; Pham, T.D.; Baek, N.R.; Park, K.R. Combining deep and handcrafted image features for presentation attack detection in face recognition using visible light camera sensors. Sensors 2018, 18, 699.

- Sousedik, C.; Busch, C. Presentation attack detection methods for fingerprint recognition system: A survey. IET Biom. 2014, 3, 219–233.

- Galbally, J.; Marcel, S.; Fierrez, J. Biometric antispoofing methods: A survey in face recognition. IEEE Access 2014, 2, 1530–1552.

- Nguyen, D.T.; Park, Y.H.; Shin, K.Y.; Kwon, S.Y.; Lee, H.C.; Park, K.R. Fake finger-vein image detection based on Fourier and wavelet transforms. Digit. Signal Process. 2013, 23, 1401–1413.

- Galbally, J.; Marcel, S.; Fierrez, J. Image quality assessment for fake biometric detection: Application to iris, fingerprint and face recognition. IEEE Trans. Image Process. 2014, 23, 710–724.

- De Souza, G.B.; Da Silva Santos, D.F.; Pires, R.G.; Marana, A.N.; Papa, J.P. Deep texture features for robust face spoofing detection. IEEE Trans. Circuits Syst. II Express Briefs 2017, 64, 1397–1401.

- Akhtar, Z.; Micheloni, C.; Foresti, G.L. Biometric liveness detection: Challenges and research opportunities. IEEE Secur. Priv. 2015, 13, 63–72.

- Dongguk Iris Spoof Detection CNN Model (DFSD-CNN) with Algorithm. Available online: http://dm.dgu.edu/link.html (accessed on 26 March 2018).

- Gragnaniello, D.; Poggi, G.; Sansone, C.; Verdoliva, L. An investigation of local descriptors for biometric spoofing detection. IEEE Trans. Inf. Forensic Secur. 2015, 10, 849–863.

- Doyle, J.S.; Bowyer, K.W. Robust detection of textured contact lens in iris recognition using BSIF. IEEE Access 2015, 3, 1672–1683.

- Hu, Y.; Sirlantzis, K.; Howells, G. Iris liveness detection using regional features. Pattern Recognit. Lett. 2016, 82, 242–250.

- Komogortsev, O.V.; Karpov, A.; Holland, C.D. Attack of mechanical replicas: Liveness detection with eye movement. IEEE Trans. Inf. Forensic Secur. 2015, 10, 716–725.

- Raja, K.B.; Raghavendra, R.; Busch, C. Color adaptive quantized pattern for presentation attack detection in ocular biometric systems. In Proceedings of the ACM International Conference on Security of Information and Networks, Newark, NJ, USA, 20–22 July 2016; pp. 9–15.

- Silva, P.; Luz, E.; Baeta, R.; Pedrini, H.; Falcao, A.X.; Menotti, D. An approach to iris contact lens detection based on deep image representation. In Proceedings of the IEEE Conference on Graphics, Patterns and Images, Salvador, Brazil, 26–29 August 2015; pp. 157–164.

- Menotti, D.; Chiachia, G.; Pinto, A.; Schwartz, W.R.; Pedrini, H.; Falcao, A.X.; Rocha, A. Deep representation for iris, face and fingerprint spoofing detection. IEEE Trans. Inf. Forensic Secur. 2015, 10, 864–879.

- Daugman, J. How iris recognition works. IEEE Trans. Circuits Syst. Video Technol. 2004, 14, 21–30.

- Cho, S.R.; Nam, G.P.; Shin, K.Y.; Nguyen, D.T.; Pham, T.D.; Lee, E.C.; Park, K.R. Periocular-based biometrics robust to eye rotation based on polar coordinates. Multimed. Tools Appl. 2017, 76, 11177–11197.

- Kim, Y.G.; Shin, K.Y.; Park, K.R. Improved iris localization by using wide and narrow field of view cameras for iris recognition. Opt. Eng. 2013, 52, 103102-1–103102-12.

- Choi, S.E.; Lee, Y.J.; Lee, S.J.; Park, K.R.; Kim, J. Age estimation using a hierarchical classifier based on global and local facial features. Pattern Recognit. 2011, 44, 1262–1281.

- Ojala, T.; Pietikainen, M.; Maenpaa, T. Multiresolution gray-scale and rotation invariant texture classification with local binary patterns. IEEE Trans. Pattern Anal. Mach. Intell. 2002, 24, 971–987.

- Nguyen, D.T.; Cho, S.R.; Pham, T.D.; Park, K.R. Human age estimation method robust to camera sensor and/or face movement. Sensors 2015, 15, 21898–21930.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet classification with deep convolutional neural networks. In Proceedings of the Advances in Neural Information Processing Systems, Lake Tahoe, NV, USA, 3–8 December 2012.

- Simonyan, K.; Zisserman, A. Very deep convolutional neural networks for large-scale image recognition. In Proceedings of the International Conference on Learning Representations, Kunming, China, 25–27 September 2013.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016.

- Huang, G.; Liu, Z.; Weinberger, K.Q.; van der Maaten, L. Densely connected convolutional networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 22–25 July 2017.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. ArXiv, 2016; arXiv:1506.01497.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. ArXiv, 2016; arXiv:1506.02640.

- Taigman, Y.; Yang, M.; Ranzato, M.; Wolf, L. Deepface: Closing the gap to human-level performance in face verification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014.

- Levi, G.; Hassner, T. Age and gender classification using convolutional neural networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Boston, MA, USA, 7–12 June 2015.

- Gangwar, A.; Joshi, A. DeepIrisNet: Deep iris representation with applications in iris recognition and cross-sensor iris recognition. In Proceedings of the IEEE International Conference on Image Processing, Phoenix, AZ, USA, 25–28 September 2016.

- Nguyen, K.; Fookes, C.; Ross, A.; Sridharan, S. Iris recognition with off-the-shelf CNN features: A deep learning perspective. IEEE Access 2018, 6, 18848–18855.

- Pan, S.J.; Yang, Q. A survey on transfer learning. IEEE Trans. Knowl. Data Eng. 2010, 22, 1345–1359.

- Chang, C.-C.; Lin, C.-J. LIBSVM: A library for support vector machines. ACM Trans. Intell. Syst. Technol. 2011, 2, 27.

- LIBSVM Tools for SVM Classification. Available online: https://www.csie.ntu.edu.tw/~cjlin/libsvm/ (accessed on 26 March 2018).

- Nguyen, D.T.; Kim, K.W.; Hong, H.G.; Koo, J.H.; Kim, M.C.; Park, K.R. Gender recognition from human-body images using visible-light and thermal camera videos based on a convolutional neural network for feature extraction. Sensors 2017, 17, 637.

- Nanni, L.; Ghidoni, S.; Brahnam, S. Handcrafted vs. non-handcrafted features for computer vision classification. Pattern Recognit. 2017, 71, 158–172.

- ISO/IEC JTC1 SC37 Biometrics. ISO/IEC WD 30107-3: 2014 Information Technology—Presentation Attack Detection—Part 3: Testing and Reporting and Classification of Attacks; International Organization for Standardization: Geneva, Switzerland, 2014.

- Raghavendra, R.; Busch, C. Presentation attack detection algorithms for finger vein biometrics: A comprehensive study. In Proceedings of the 11th International Conference on Signal-Image Technology and Internet-Based Systems, Bangkok, Thailand, 23–27 November 2015; pp. 628–632.

- Yambay, D.; Becker, B.; Kohli, N.; Yadav, D.; Czajka, A.; Bowyer, K.W.; Schuckers, S.; Singh, R.; Vatsa, M.; Noore, A.; et al. LivDet iris 2017—Iris liveness detection competition 2017. In Proceedings of the International Conference on Biometrics, Denver, CO, USA, 1–4 October 2017.

- Deep Learning Matlab Toolbox. Available online: https://www.mathworks.com/help/nnet/deep-learning-basics.html?s_tid=gn_loc_drop (accessed on 26 March 2018).

- Principal Component Analysis Matlab Toolbox. Available online: https://www.mathworks.com/help/stats/pca.html (accessed on 26 March 2018).

- Support Vector Machines (SVM) for Classification. Available online: https://www.mathworks.com/help/stats/support-vector-machine-classification.html (accessed on 26 March 2018).

- Yambay, D.; Walczak, B.; Schuckers, S.; Czajka, A. LivDet-iris 2015—Iris liveness detection. In Proceedings of the IEEE International Conference on Identity, Security and Behavior Analysis, New Delhi, India, 22–24 February 2017.

- Presentation Attack Video Iris Dataset (PAVID). Available online: http://nislab.no/biometrics_lab/pavid_db (accessed on 26 March 2018).

- Yambay, D.; Doyle, J.S.; Bowyer, K.W.; Czajka, A.; Schuckers, S. LivDet-iris 2013—Iris liveness detection competition 2013. In Proceedings of the IEEE International Joint Conference on Biometrics, Clearwater, FL, USA, 29 September–2 October 2014.

| Category | Method | Strength | Weakness |
|---|---|---|---|
| Expert-knowledge-based feature extraction methods | | | |
| Learning-based feature extraction methods | | | |

| Operation Group | Operation | Layer Name | Number of Filters | Filter Size | Stride Size | Padding Size | Output Size |
|---|---|---|---|---|---|---|---|
| Group_0 (G_0) | Input image | Input layer | n/a | n/a | n/a | n/a | 224 × 224 × 3 |
| Group_1 (G_1) | Convolution (2 times) | Convolution layer | 64 | 3 × 3 × 3 | 1 × 1 | 1 × 1 | 224 × 224 × 64 |
| | | ReLU layer | n/a | n/a | n/a | n/a | 224 × 224 × 64 |
| | Pooling | Max pooling layer | 1 | 2 × 2 | 2 × 2 | 0 | 112 × 112 × 64 |
| Group_2 (G_2) | Convolution (2 times) | Convolution layer | 128 | 3 × 3 × 64 | 1 × 1 | 1 × 1 | 112 × 112 × 128 |
| | | ReLU layer | n/a | n/a | n/a | n/a | 112 × 112 × 128 |
| | Pooling | Max pooling layer | 1 | 2 × 2 | 2 × 2 | 0 | 56 × 56 × 128 |
| Group_3 (G_3) | Convolution (4 times) | Convolution layer | 256 | 3 × 3 × 128 | 1 × 1 | 1 × 1 | 56 × 56 × 256 |
| | | ReLU layer | n/a | n/a | n/a | n/a | 56 × 56 × 256 |
| | Pooling | Max pooling layer | 1 | 2 × 2 | 2 × 2 | 0 | 28 × 28 × 256 |
| Group_4 (G_4) | Convolution (4 times) | Convolution layer | 512 | 3 × 3 × 256 | 1 × 1 | 1 × 1 | 28 × 28 × 512 |
| | | ReLU layer | n/a | n/a | n/a | n/a | 28 × 28 × 512 |
| | Pooling | Max pooling layer | 1 | 2 × 2 | 2 × 2 | 0 | 14 × 14 × 512 |
| Group_5 (G_5) | Convolution (4 times) | Convolution layer | 512 | 3 × 3 × 512 | 1 × 1 | 1 × 1 | 14 × 14 × 512 |
| | | ReLU layer | n/a | n/a | n/a | n/a | 14 × 14 × 512 |
| | Pooling | Max pooling layer | 1 | 2 × 2 | 2 × 2 | 0 | 7 × 7 × 512 |
| Group_6 (G_6) | Inner Product | Fully connected layer | n/a | n/a | n/a | n/a | 4096 |
| | | ReLU layer | n/a | n/a | n/a | n/a | 4096 |
| | Dropout | Dropout layer (dropout = 0.5) | n/a | n/a | n/a | n/a | 4096 |
| Group_7 (G_7) | Inner Product | Fully connected layer | n/a | n/a | n/a | n/a | 4096 |
| | | ReLU layer | n/a | n/a | n/a | n/a | 4096 |
| | Dropout | Dropout layer (dropout = 0.5) | n/a | n/a | n/a | n/a | 4096 |
| Group_8 (G_8) | Inner Product | Output layer | n/a | n/a | n/a | n/a | 2 |
| | Softmax | Softmax layer | n/a | n/a | n/a | n/a | 2 |
| | Classification | Classification layer | n/a | n/a | n/a | n/a | 2 |
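
The output sizes in the architecture table above follow from the standard convolution and pooling size formula, output = (input + 2 × padding − filter) / stride + 1. The following Python sketch traces the spatial size through the five convolutional groups using the filter, stride, and padding values from the table; the function and variable names are hypothetical and chosen only for illustration.

```python
def conv_output_size(size, kernel, stride, padding):
    """Spatial output size of a convolution or pooling layer (integer division)."""
    return (size + 2 * padding - kernel) // stride + 1


# (number of 3 x 3 convolutions, number of filters) for Group_1 to Group_5,
# as listed in the architecture table.
GROUPS = [(2, 64), (2, 128), (4, 256), (4, 512), (4, 512)]


def trace_feature_maps(input_size=224):
    """Return the (height, width, channels) shape after each group's max pooling."""
    size = input_size
    shapes = []
    for n_convs, n_filters in GROUPS:
        for _ in range(n_convs):
            # 3 x 3 convolution, stride 1, padding 1: spatial size is preserved.
            size = conv_output_size(size, kernel=3, stride=1, padding=1)
        # 2 x 2 max pooling, stride 2, no padding: spatial size is halved.
        size = conv_output_size(size, kernel=2, stride=2, padding=0)
        shapes.append((size, size, n_filters))
    return shapes


shapes = trace_feature_maps()
# Number of values fed into the first fully connected layer.
flattened = shapes[-1][0] * shapes[-1][1] * shapes[-1][2]
# Convolution layers plus the three inner-product layers.
weight_layers = sum(n for n, _ in GROUPS) + 3
print(shapes, flattened, weight_layers)
```

The trace ends at 7 × 7 × 512, matching the last pooling row of the table, and the count of 16 convolution layers plus 3 inner-product layers gives 19 weight layers in total.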

| Momentum | Mini-Batch Size | Initial Learning Rate | Learning Rate Drop Factor | Learning Rate Drop Period (Epochs) | Number of Epochs |
|---|---|---|---|---|---|
| 0.90 | 32 | 0.001 | 0.1 | 3 | 9 |
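
The learning-rate parameters in the table above describe a step (piecewise) schedule. As a rough sketch, and assuming that epochs are counted from 1 and that the rate is multiplied by the drop factor after every full drop period (the exact convention of the training toolbox is not stated in this section), the per-epoch learning rate could be computed as follows. The function name is hypothetical.

```python
def learning_rate(epoch, initial=0.001, drop_factor=0.1, drop_period=3):
    """Learning rate used during a given 1-based epoch under a step schedule.

    Assumption: the rate drops by `drop_factor` after each block of
    `drop_period` completed epochs.
    """
    if epoch < 1:
        raise ValueError("epoch must be >= 1")
    n_drops = (epoch - 1) // drop_period
    return initial * drop_factor ** n_drops


# Schedule over the 9 training epochs listed in the table.
schedule = [learning_rate(epoch) for epoch in range(1, 10)]
for epoch, rate in enumerate(schedule, start=1):
    print(f"epoch {epoch}: learning rate {rate:.0e}")
```

Under these assumptions the nine epochs split into three blocks of three, with the rate falling by a factor of ten between blocks.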

| Dataset | Number of Real Images | Number of Attack Images | Total | Collection Method |
|---|---|---|---|---|
| Warsaw2017 | 5168 | 6845 | 12,013 | Recaptured printed iris patterns on paper |
| ND2015 | 4875 | 2425 | 7300 | Recaptured printed iris patterns on contact lens |

| Dataset | Training: Real Image | Training: Attack Image | Training: Total | Test-Known: Real Image | Test-Known: Attack Image | Test-Known: Total | Test-Unknown: Real Image | Test-Unknown: Attack Image | Test-Unknown: Total |
|---|---|---|---|---|---|---|---|---|---|
| Original dataset | 1844 | 2669 | 4513 | 974 | 2016 | 2990 | 2350 | 2160 | 4510 |
| Augmented dataset | 27,660 (1844 × 15) | 24,021 (2669 × 9) | 51,681 | 974 | 2016 | 2990 | 2350 | 2160 | 4510 |
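
The augmented training counts in the table above are the original counts multiplied by a per-class factor (15 for real images, 9 for attack images). The short Python sketch below reproduces that arithmetic and reports the real-to-attack ratio before and after augmentation. The helper name is hypothetical, and reading the different factors as a way of keeping the two classes comparable in size is an interpretation, not something stated in this section.

```python
def augmented_counts(n_real, n_attack, real_factor, attack_factor):
    """Multiply each class by its own augmentation factor and summarize the result."""
    real = n_real * real_factor
    attack = n_attack * attack_factor
    return {
        "real": real,
        "attack": attack,
        "total": real + attack,
        "real_to_attack_ratio": real / attack,
    }


# Training counts and factors taken from the table.
original = augmented_counts(1844, 2669, real_factor=1, attack_factor=1)
augmented = augmented_counts(1844, 2669, real_factor=15, attack_factor=9)

print("original :", original)
print("augmented:", augmented)
```

Only the training split is augmented; the test-known and test-unknown counts are identical in both rows of the table.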

| Dataset | Training: Real Image | Training: Attack Image | Training: Total | Test-Known: Real Image | Test-Known: Attack Image | Test-Known: Total | Test-Unknown: Real Image | Test-Unknown: Attack Image | Test-Unknown: Total |
|---|---|---|---|---|---|---|---|---|---|
| Original ND2015 dataset | 600 | 600 | 1200 | 900 | 900 | 1800 | 900 | 900 | 1800 |
| Augmented dataset | 29,400 (600 × 49) | 29,400 (600 × 49) | 58,800 | 900 | 900 | 1800 | 900 | 900 | 1800 |

| Dataset | Training: Real Image | Training: Attack Image | Training: Total | Testing: Real Image | Testing: Attack Image | Testing: Total |
|---|---|---|---|---|---|---|
| Original entire ND2015 (1st Fold) | 2340 | 1068 | 3408 | 2535 | 1357 | 3892 |
| Augmented dataset (1st Fold) | 28,080 (2340 × 12) | 26,700 (1068 × 25) | 54,780 | 2535 | 1357 | 3892 |
| Original entire ND2015 (2nd Fold) | 2535 | 1357 | 3892 | 2340 | 1068 | 3408 |
| Augmented dataset (2nd Fold) | 30,420 (2535 × 12) | 33,925 (1357 × 25) | 64,345 | 2340 | 1068 | 3408 |

| Training: Images from Warsaw2017 Dataset | Training: Images from ND2015 Dataset | Training: Total | Test-Known: Images from Warsaw2017 Dataset | Test-Known: Images from ND2015 Dataset | Test-Known: Total | Test-Unknown: Images from Warsaw2017 Dataset | Test-Unknown: Images from ND2015 Dataset | Test-Unknown: Total |
|---|---|---|---|---|---|---|---|---|
| 51,681 | 58,800 | 110,481 | 2990 | 1800 | 4790 | 4510 | 1800 | 6310 |

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Nguyen, D.T.; Baek, N.R.; Pham, T.D.; Park, K.R. Presentation Attack Detection for Iris Recognition System Using NIR Camera Sensor. Sensors 2018, 18, 1315. https://doi.org/10.3390/s18051315