LiteNet: Lightweight Neural Network for Detecting Arrhythmias at Resource-Constrained Mobile Devices

Abstract

1. Introduction

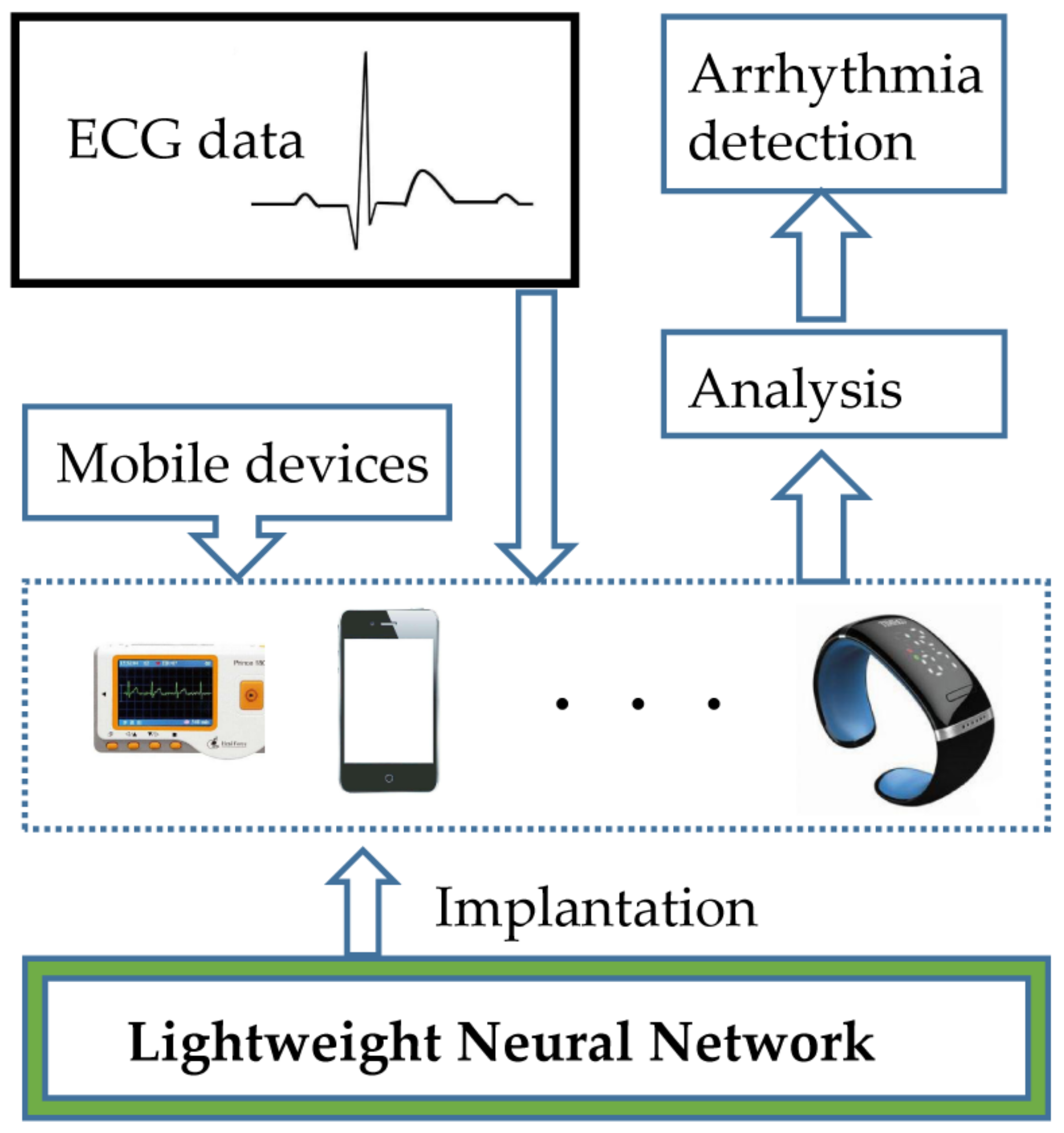

- We propose a lightweight neural network model, named LiteNet, which not only trains faster than traditional deep learning algorithms on remote servers but is also substantially more resource-efficient on mobile devices. LiteNet can therefore be trained on low-capacity servers, and the trained model can be deployed on resource-constrained mobile devices to detect arrhythmias with low resource consumption.

- Each convolutional layer uses filters of heterogeneous sizes to capture a variety of feature combinations, which improves accuracy. Both the size of each filter and the total number of filters can be adjusted within each convolutional layer, which helps the layer produce diverse feature maps.

- LiteNet shows that the Adam optimizer [18], a method for optimizing stochastic objective functions, improves the accuracy of the model compared with the traditional stochastic gradient descent optimizer while requiring minimal hyperparameter tuning during training.

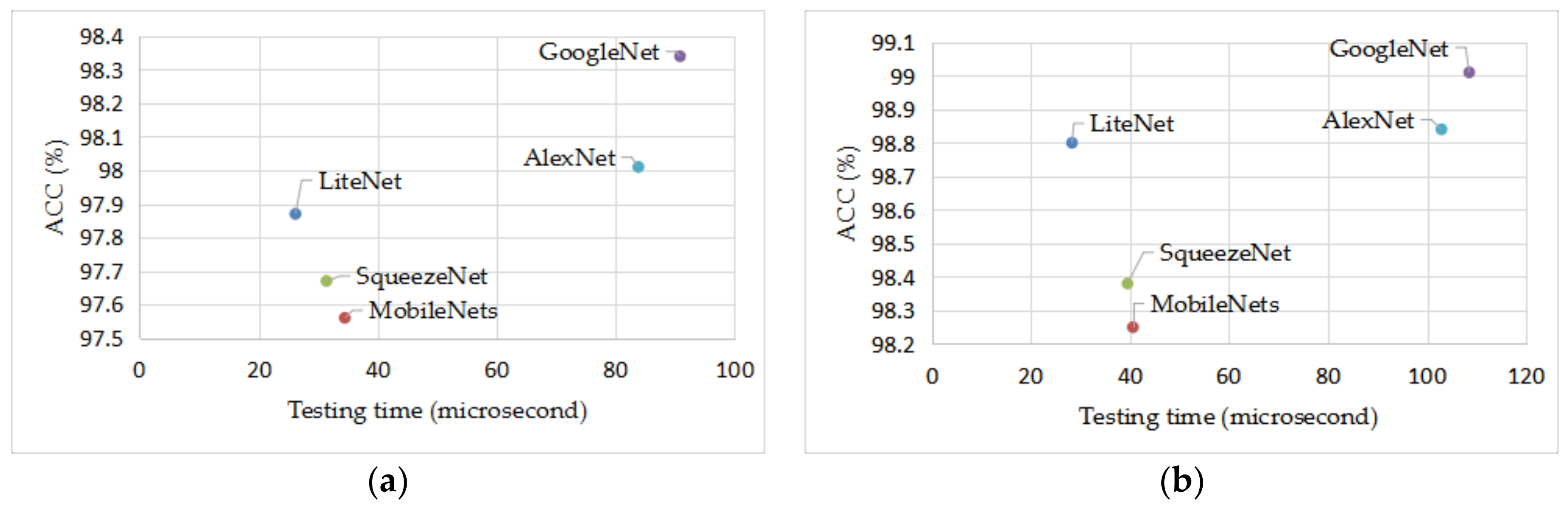

- We conducted extensive experiments to evaluate LiteNet in terms of both accuracy and efficiency. The results show that LiteNet achieves accuracy comparable to, or even higher than, recent state-of-the-art networks while being considerably more resource-efficient. LiteNet is thus well suited to resource-constrained mobile devices.
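The Adam update mentioned in the third contribution can be illustrated with the minimal pure-Python sketch below. It implements the standard update rule of Kingma and Ba [18] for a single scalar parameter on a toy quadratic objective; the hyperparameter values (learning rate 0.1, β1 = 0.9, β2 = 0.999, ε = 1e-8) and the names `adam_step`, `minimize` and `grad_quadratic` are illustrative assumptions, not LiteNet's actual training configuration.

```python
import math
from typing import Callable, Tuple


def adam_step(
    x: float, grad: float, m: float, v: float, t: int,
    lr: float = 0.1, beta1: float = 0.9, beta2: float = 0.999, eps: float = 1e-8,
) -> Tuple[float, float, float]:
    """One Adam update for a scalar parameter; t is the 1-based step index."""
    m = beta1 * m + (1 - beta1) * grad          # biased first-moment (mean) estimate
    v = beta2 * v + (1 - beta2) * grad * grad   # biased second-moment estimate
    m_hat = m / (1 - beta1 ** t)                # bias-corrected first moment
    v_hat = v / (1 - beta2 ** t)                # bias-corrected second moment
    x = x - lr * m_hat / (math.sqrt(v_hat) + eps)
    return x, m, v


def minimize(grad_fn: Callable[[float], float], x0: float, steps: int) -> float:
    """Hypothetical toy driver: run Adam from x0 for a fixed number of steps."""
    x, m, v = x0, 0.0, 0.0
    for t in range(1, steps + 1):
        x, m, v = adam_step(x, grad_fn(x), m, v, t)
    return x


# Toy objective f(x) = (x - 3)^2, whose gradient is 2(x - 3).
grad_quadratic = lambda x: 2.0 * (x - 3.0)
print(minimize(grad_quadratic, x0=0.0, steps=2000))
```

Because the bias-corrected gradient is divided by its own running magnitude, early updates move the parameter by roughly `lr` regardless of the gradient's scale; this per-parameter normalization is what reduces the need for manual learning-rate tuning.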

2. Related Work

2.1. Edge Processing

2.2. Arrhythmia Detection

3. Method of LiteNet

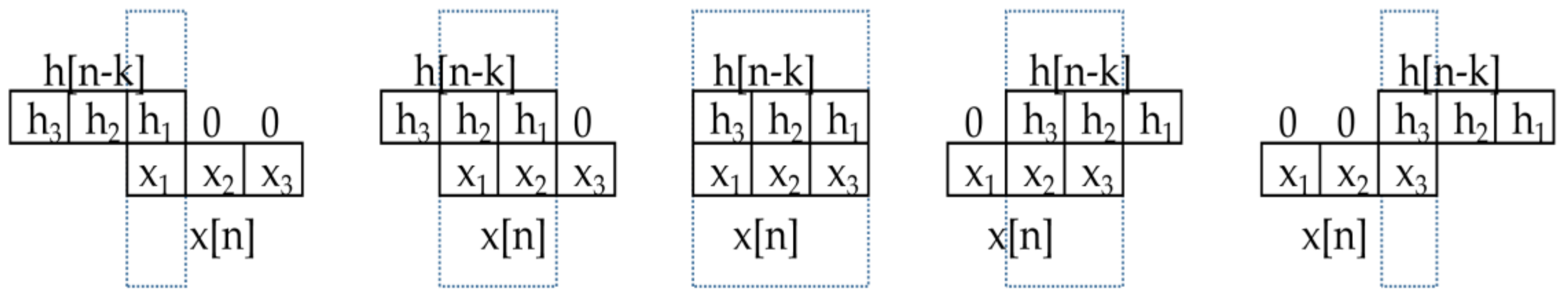

3.1. One-Dimensional Convolution Kernel

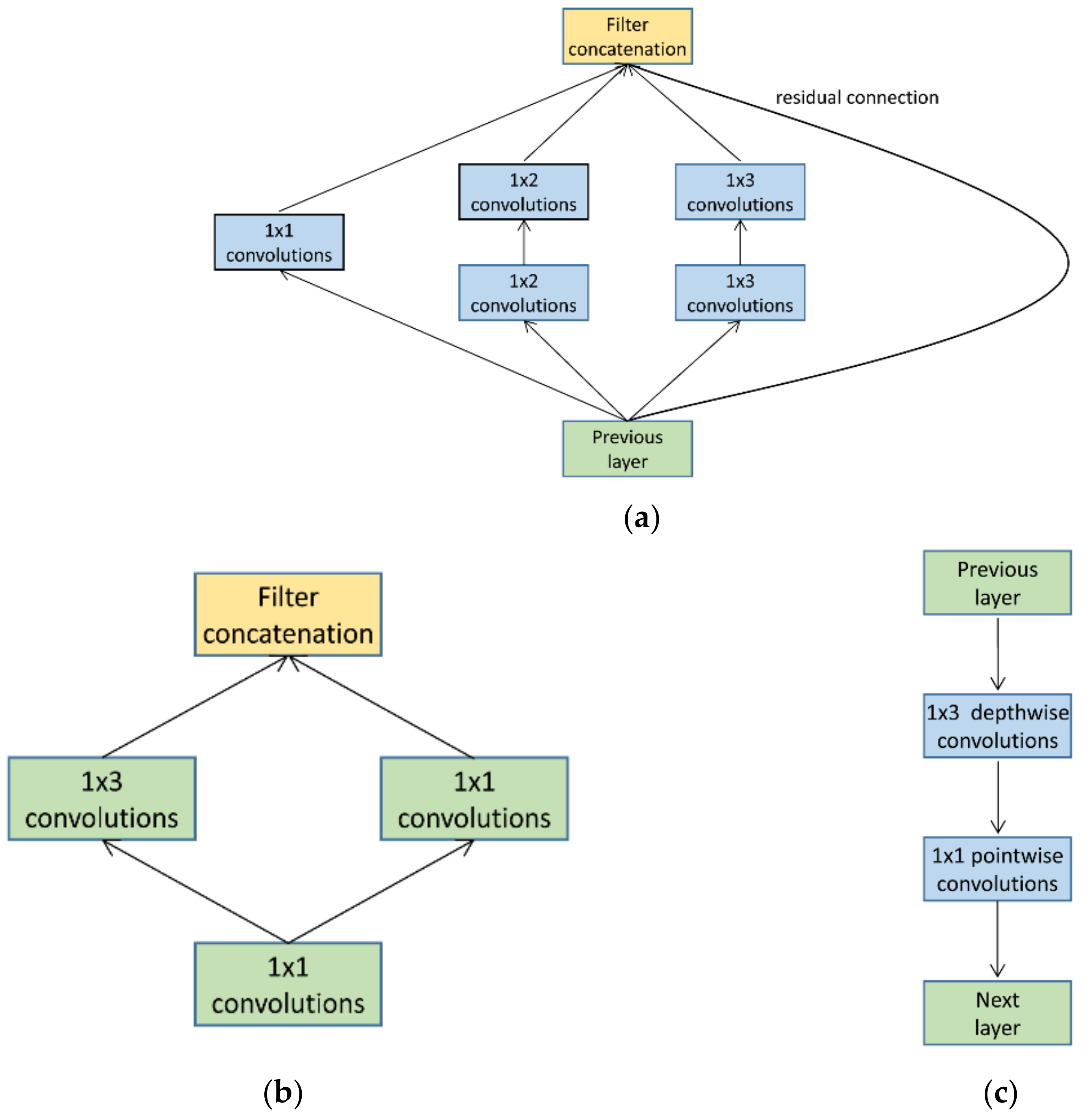

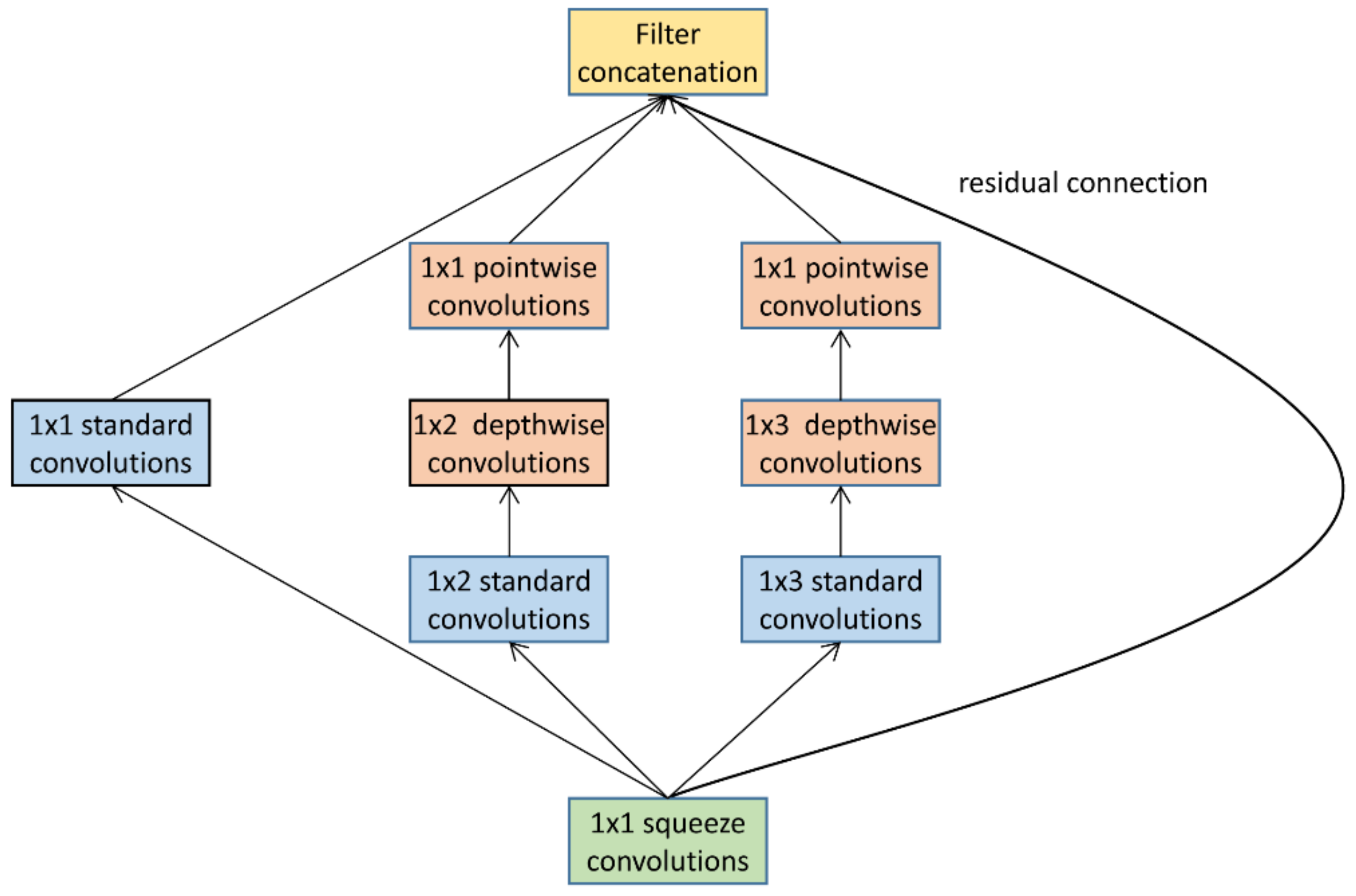

3.2. Lite Module

- It reduces the number of parameters efficiently, relying mainly on a squeeze convolutional layer and a depthwise separable convolutional layer to cut down the parameter volume.

- The single 1 × 1 standard convolutional layer is able to enhance abstract representations of local features and cluster correlated feature maps [35].

- Because down-sampling is postponed, Lite modules generate large activation maps, which contributes to high accuracy.

- The Lite module contains filters of heterogeneous size, which facilitates the exploration of different feature maps for key feature extraction.

- The optional residual connection mitigates the vanishing gradient problem in deep networks.
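To make the parameter savings of the squeeze and depthwise separable layers concrete, the sketch below counts the weights of a 1 × 3 convolution with and without these two techniques. The channel counts (5 input maps, squeezed to 3, expanded to 6) are borrowed loosely from Table 1, but the exact wiring of the Lite module is an assumption here; biases are included, and the function names are hypothetical.

```python
def standard_conv_params(k: int, c_in: int, c_out: int, bias: bool = True) -> int:
    """Weights (plus optional biases) of a 1 x k standard convolution."""
    return k * c_in * c_out + (c_out if bias else 0)


def depthwise_separable_params(k: int, c_in: int, c_out: int, bias: bool = True) -> int:
    """1 x k depthwise conv (one filter per input map) followed by a 1 x 1 pointwise conv."""
    depthwise = k * c_in + (c_in if bias else 0)
    pointwise = c_in * c_out + (c_out if bias else 0)
    return depthwise + pointwise


# Hypothetical channel counts loosely following Table 1.
plain = standard_conv_params(3, 5, 6)            # 1 x 3 conv applied directly to 5 maps
squeeze = standard_conv_params(1, 5, 3)          # 1 x 1 squeeze layer: 5 maps -> 3 maps
separable = depthwise_separable_params(3, 3, 6)  # 1 x 3 depthwise + 1 x 1 pointwise on 3 maps
print(plain, squeeze + separable)  # 96 54
```

The saving grows with the number of input maps: a standard convolution costs k · c_in · c_out weights, whereas the factorized version costs only k · c_in + c_in · c_out.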

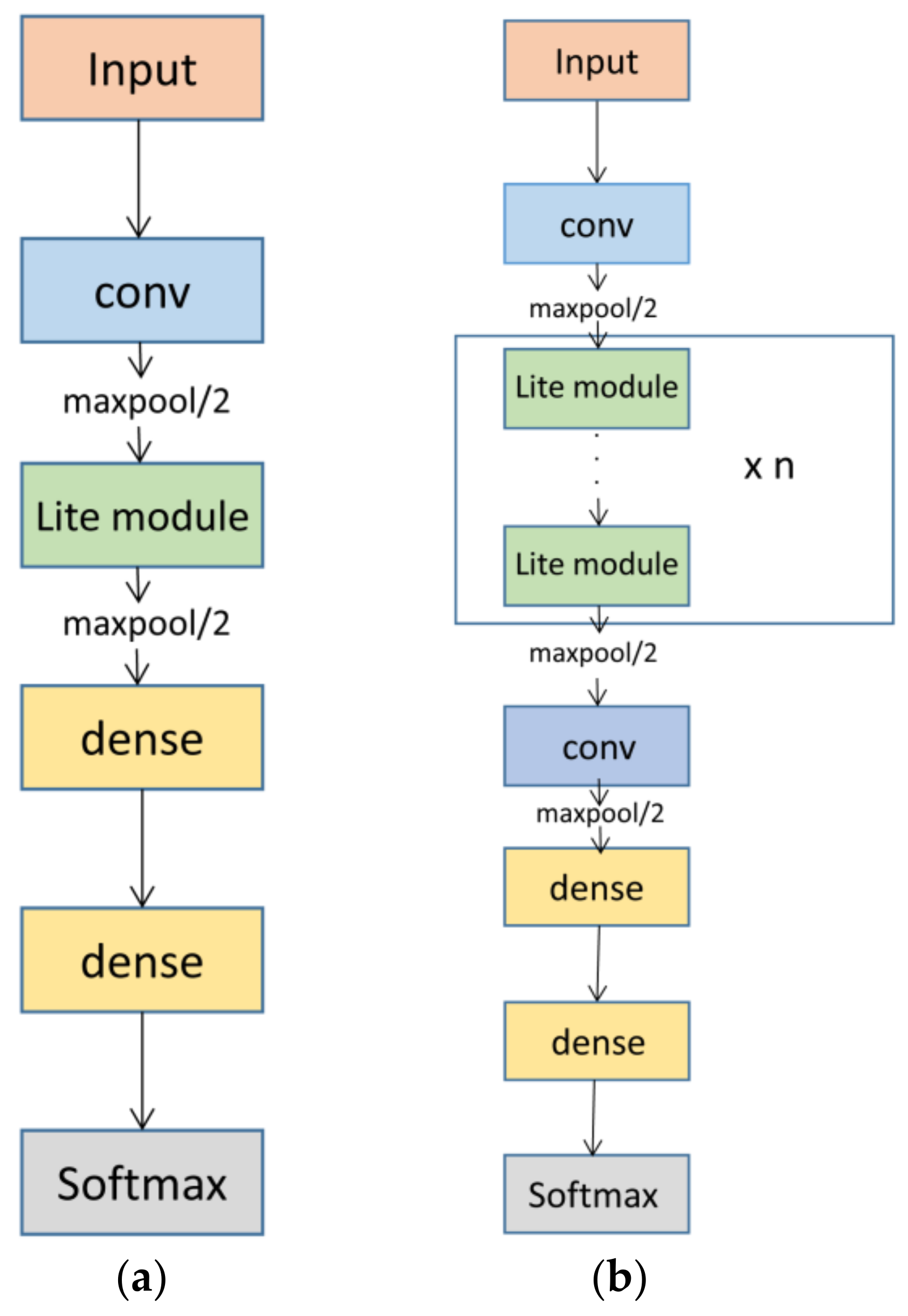

3.3. LiteNet Architecture

- Standard convolutional layer: Most CNN-based architectures begin with few feature maps and a large filter size, and we adopt the same design strategy in this paper. The first standard convolutional layer has five filters of size 1 × 5.

- Max-pooling layer: LiteNet performs two max-pooling operations with a stride of two, one after the standard convolutional layer and one after the Lite module layer. Max-pooling lowers the computational cost of the subsequent layers.

- Lite module layer: Small filter sizes reduce the computational cost and enhance the abstract representation of local features in a heterogeneous convolutional layer. For this purpose, the Lite module uses filter sizes of 1 × 1, 1 × 2 and 1 × 3, as shown in Figure 4; the feature map settings of the Lite module layer are listed in Table 1.

- Fully connected layers: Like most deep learning architectures, the basic LiteNet uses two fully connected layers. The first and second layers have 30 and 20 units, respectively, which yields the expected classification performance.

- Dropout [38] layer: To mitigate overfitting, we add a dropout layer after the two dense layers and set the dropout rate to 0.3.

- Softmax layer: The softmax layer has five units. The softmax function is used as a classifier to predict five classes.
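The first stage of the architecture above (a 1 × 5 standard convolution with five filters, followed by max-pooling with a 1 × 2 window and a stride of two) and the final five-unit softmax can be traced with the pure-Python sketch below. It assumes a single-channel input segment, "valid" padding and no activation function, none of which is specified here; the input values, kernel weights and function names are hypothetical, and a practical implementation would use a deep learning framework.

```python
import math
from typing import List


def conv1d_valid(signal: List[float], kernels: List[List[float]], biases: List[float]) -> List[List[float]]:
    """Single-channel 1-D convolution ('valid' padding, stride 1), one output map per kernel.

    As in most deep learning frameworks, this is cross-correlation (the kernel is not flipped).
    """
    maps = []
    for kernel, b in zip(kernels, biases):
        k = len(kernel)
        maps.append([
            sum(w * signal[i + j] for j, w in enumerate(kernel)) + b
            for i in range(len(signal) - k + 1)
        ])
    return maps


def max_pool1d(x: List[float], size: int = 2, stride: int = 2) -> List[float]:
    """Max-pooling over windows of `size`; a trailing partial window is dropped."""
    return [max(x[i:i + size]) for i in range(0, len(x) - size + 1, stride)]


def softmax(z: List[float]) -> List[float]:
    """Numerically stable softmax: subtract the maximum before exponentiating."""
    m = max(z)
    exps = [math.exp(v - m) for v in z]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical heartbeat segment and five hand-picked 1 x 5 kernels.
beat = [0.0, 0.1, 0.5, 1.2, 0.4, -0.3, 0.0, 0.1, 0.0, 0.0, 0.2, 0.1]
kernels = [[0.2] * 5, [1, 0, 0, 0, -1], [0, 0, 1, 0, 0], [-1, 2, -1, 0, 0], [0, 1, 1, 1, 0]]
feature_maps = conv1d_valid(beat, kernels, biases=[0.0] * 5)
pooled = [max_pool1d(fm) for fm in feature_maps]
print(len(feature_maps), len(feature_maps[0]), len(pooled[0]))  # 5 8 4

# Five hypothetical logits, one per class, as in the five-unit softmax layer.
probs = softmax([1.0, 2.0, 0.5, 0.1, -1.0])
```

With "valid" padding, an input of length L shrinks to L − 4 after the 1 × 5 convolution and to roughly half of that after pooling, which illustrates how max-pooling reduces the work of the layers that follow.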

3.4. Adam Optimizer

4. Experiments and Results

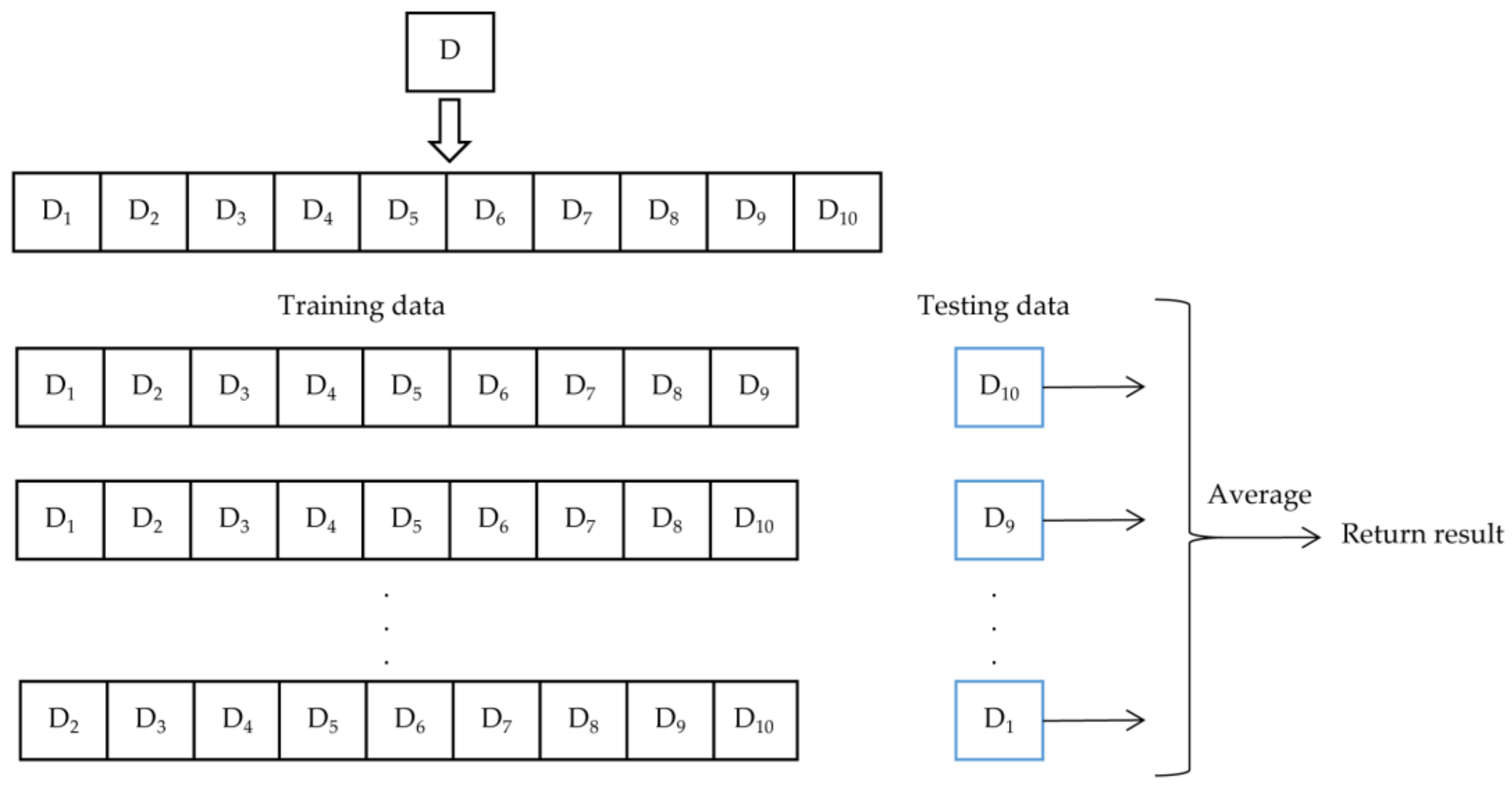

4.1. Dataset and Data Processing

4.2. Experimental Setup

4.3. Baselines and Metrics

- Parameter Count (PC): This metric can be loosely defined as the total number of parameters, excluding those of the fully connected layers. In deep learning, PC reflects both the model size and the number of connections between units in successive layers, and hence the computational cost. PC is therefore an important factor in the computational complexity of deep-learning-based algorithms: the lower the PC, the lower the computational cost and the less memory the model needs.

- Accuracy (ACC): ACC is an overall metric that measures the proportion of all examples that are correctly classified into their arrhythmia classes [45].

- F-measure (F1): F1 combines precision and recall as their harmonic mean. A higher F1 indicates more effective classification.

- Area under the Curve (AUC): The AUC value equals the probability that a randomly chosen positive example is ranked higher than a randomly chosen negative example. The AUC ranges corresponding to each grade of classification performance are shown in Table 3.
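The metrics above can be computed as in the pure-Python sketch below. Following the confusion-matrix convention used in this paper, the "normal" class is treated as positive; the one-vs-rest treatment, the label strings "N" and "A", and the function names are assumptions for illustration. The AUC is computed directly from its probabilistic definition by comparing every positive-negative pair, which is fine for small sets; for large datasets a sort-based implementation is preferable.

```python
from typing import Dict, List, Sequence


def confusion_counts(y_true: Sequence[str], y_pred: Sequence[str], positive: str) -> Dict[str, int]:
    """TP/FP/FN/TN with one class treated as positive (one-vs-rest)."""
    counts = {"TP": 0, "FP": 0, "FN": 0, "TN": 0}
    for t, p in zip(y_true, y_pred):
        if p == positive:
            counts["TP" if t == positive else "FP"] += 1
        else:
            counts["FN" if t == positive else "TN"] += 1
    return counts


def accuracy(y_true: Sequence[str], y_pred: Sequence[str]) -> float:
    """Fraction of all examples whose predicted class matches the true class."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def f1_score(c: Dict[str, int]) -> float:
    """Harmonic mean of precision and recall (0.0 when undefined)."""
    precision = c["TP"] / (c["TP"] + c["FP"]) if c["TP"] + c["FP"] else 0.0
    recall = c["TP"] / (c["TP"] + c["FN"]) if c["TP"] + c["FN"] else 0.0
    return 2 * precision * recall / (precision + recall) if precision + recall else 0.0


def auc(labels: List[int], scores: List[float]) -> float:
    """P(random positive scored above random negative); ties count as 0.5."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    wins = sum(1.0 if sp > sn else 0.5 if sp == sn else 0.0 for sp in pos for sn in neg)
    return wins / (len(pos) * len(neg))


# Hypothetical labels: "N" = normal (positive), "A" = arrhythmia.
y_true = ["N", "N", "A", "A", "N"]
y_pred = ["N", "A", "A", "N", "N"]
c = confusion_counts(y_true, y_pred, positive="N")
print(c, accuracy(y_true, y_pred), f1_score(c))
print(auc([1, 1, 0, 0], [0.9, 0.4, 0.6, 0.1]))  # 0.75
```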

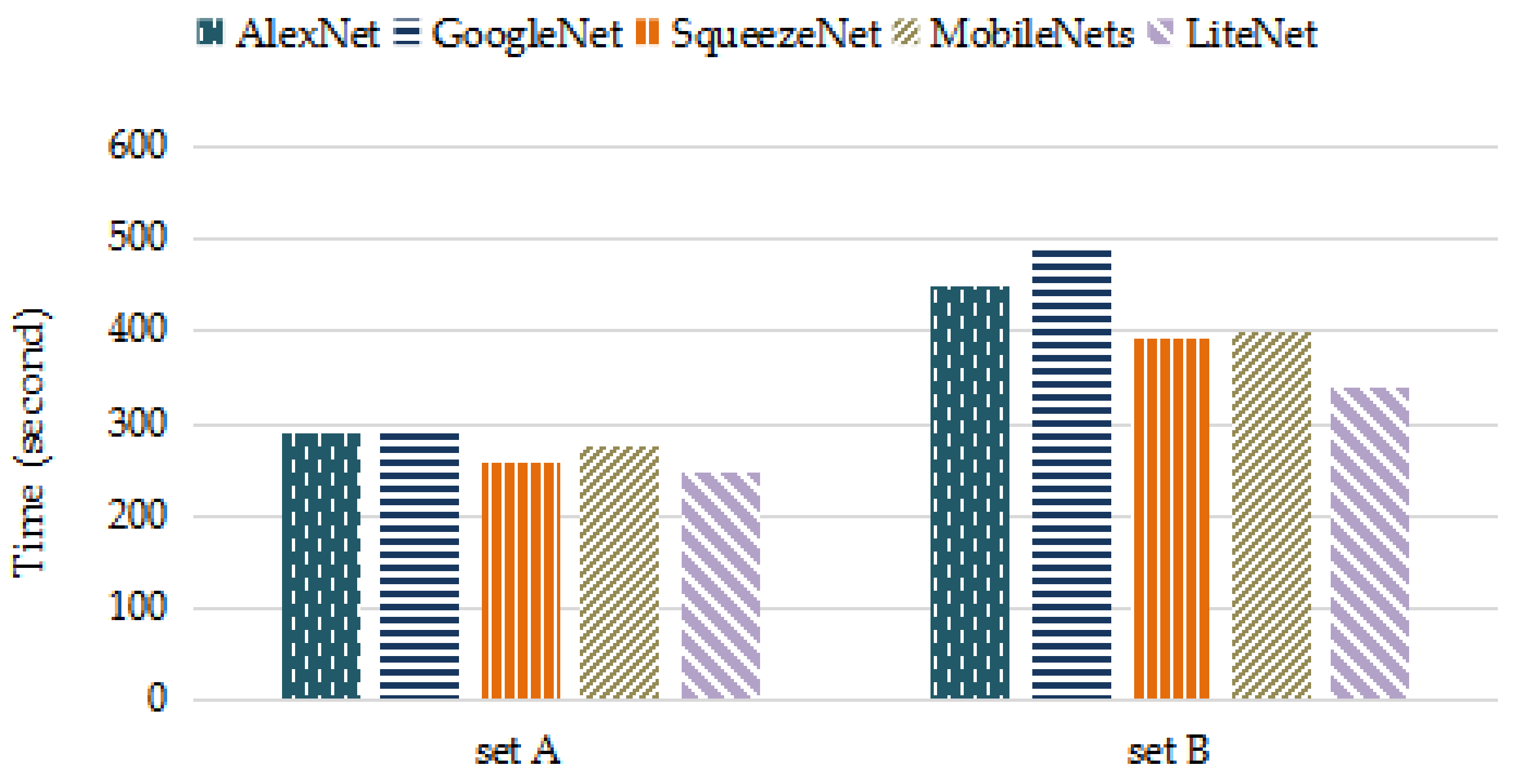

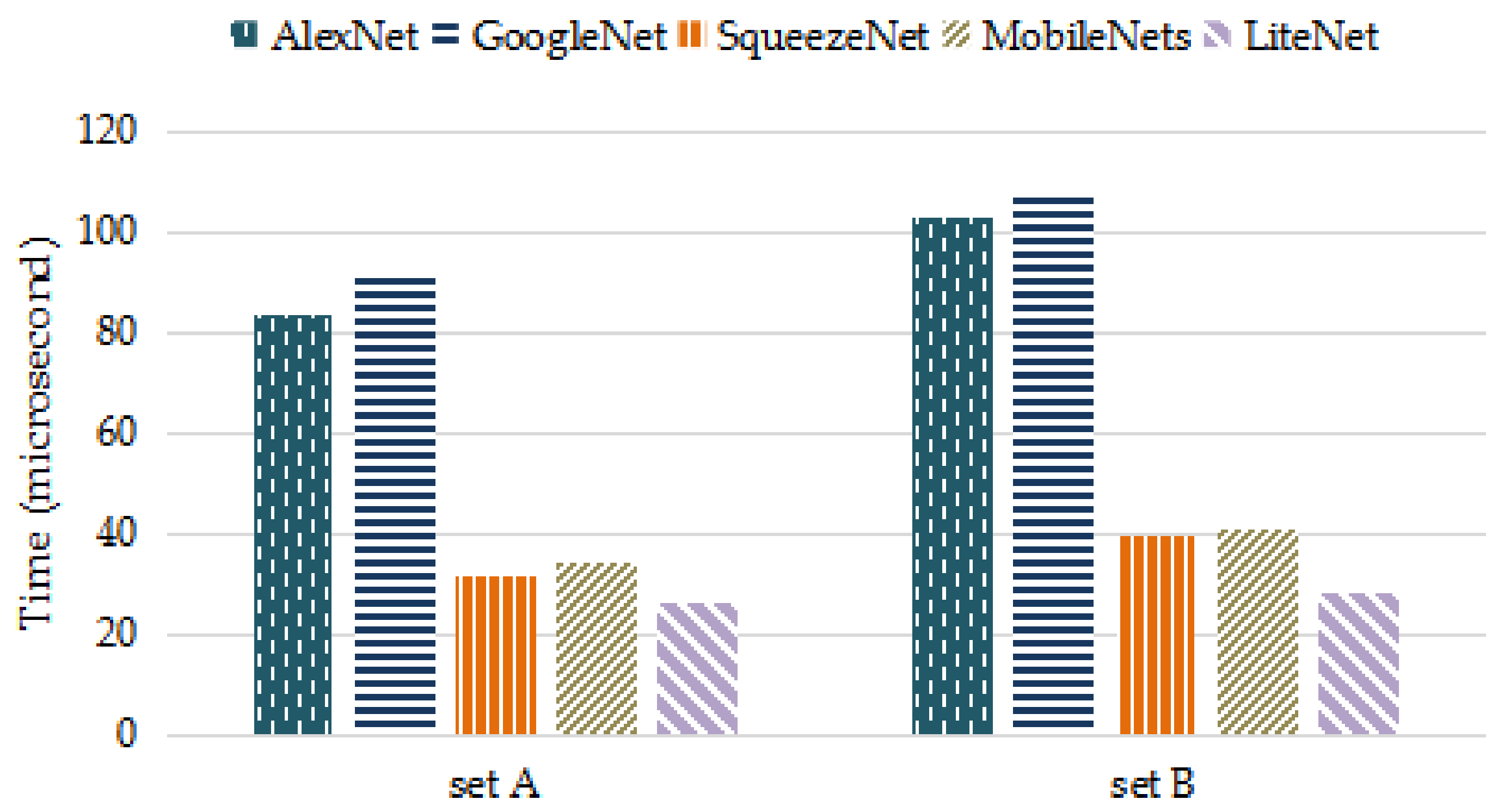

4.4. Experimental Results

- LiteNet is fully automatic: no separate feature extraction, feature selection, or classification stage is required.

- LiteNet has a small model size, so it requires little memory and has a low computational cost. A small model incurs little transmission overhead when new models are exported to mobile terminals, while the small memory footprint and low computational cost make LiteNet more feasible for mobile devices such as wearable ECG monitors. A wearable ECG monitor may provide only the sensing function (acquiring the ECG signal) and send the data to nearby computing devices at the network edge, which compute the detection results. Because such an edge device can be a personal computer or even a server, its computing capability and memory are considerably larger than those of an ECG monitor. As the experimental results show, detection can be completed in real time with very high accuracy on an ordinary personal computer (Intel(R) i3-2370M CPU at 2.40 GHz, 4 GB memory) without any engineering optimization.

- Despite its small model size, LiteNet achieves a satisfactory recognition rate.
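The memory and transmission argument above can be quantified roughly with the sketch below, which converts the PC values reported in the comparison tables of this paper into storage size. It assumes that PC is a raw parameter count and that each parameter is stored as a 32-bit float; both are assumptions, and because PC excludes the fully connected layers the result is a lower bound on the size of an exported model. The names `PARAMETER_COUNTS` and `footprint_bytes` are hypothetical.

```python
# PC values copied from the comparison tables in this paper (fully connected layers excluded).
PARAMETER_COUNTS = {
    "AlexNet": 1100,
    "GoogleNet": 1276,
    "SqueezeNet": 528,
    "MobileNets": 550,
    "LiteNet": 454,
}


def footprint_bytes(param_count: int, bytes_per_param: int = 4) -> int:
    """Storage for the counted parameters; 4 bytes per parameter assumes float32."""
    return param_count * bytes_per_param


for name, pc in PARAMETER_COUNTS.items():
    ratio = pc / PARAMETER_COUNTS["LiteNet"]
    print(f"{name:<10} {footprint_bytes(pc):>6} B  ({ratio:.2f}x LiteNet)")
```

Passing `bytes_per_param=2` shows the effect of storing the same weights in 16-bit precision, another common way to shrink models for mobile deployment.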

5. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

References

- World Health Organization. Cardiovascular Diseases (CVDs). Available online: http://www.who.int/mediacentre/factsheets/fs317/en/ (accessed on 5 July 2017).

- Acharya, U.R.; Suri, J.S.; Spaan, J.A.E.; Krishnan, S.M. Advances in Cardiac Signal Processing; Springer: Berlin, Germany, 2007; pp. 407–422.

- Martis, R.J.; Acharya, U.R.; Adeli, H. Current methods in electrocardiogram characterization. Comput. Biol. Med. 2014, 48, 133–149.

- Shi, W.; Cao, J.; Zhang, Q.; Li, Y.; Xu, L. Edge Computing: Vision and Challenges. IEEE Internet Things J. 2016, 3, 637–646.

- Cui, Y.; He, W.; Ni, C.; Guo, C.; Liu, Z. Energy-Efficient Resource Allocation for Cache-Assisted Mobile Edge Computing. In Proceedings of the 42nd IEEE Conference on Local Computer Networks (LCN), Singapore, 9–12 October 2017.

- Guo, J.; Song, Z.; Cui, Y.; Liu, Z.; Ji, Y. Energy-Efficient Resource Allocation for Multi-User Mobile Edge Computing. In Proceedings of the IEEE Global Communications Conference (GLOBECOM), Singapore, 4–8 December 2017.

- Feng, J.; Liu, Z.; Wu, C.; Ji, Y. AVE: Autonomous Vehicular Edge Computing Framework with ACO-Based Scheduling. IEEE Trans. Veh. Technol. 2017.

- Tang, J.; Liu, A.; Zhang, J.; Xiong, N.N.; Zeng, Z.; Wang, T. A Trust-Based Secure Routing Scheme Using the Traceback Approach for Energy-Harvesting Wireless Sensor Networks. Sensors 2018, 18, 751.

- Liu, Y.; Ota, K.; Zhang, K.; Ma, M.; Xiong, N.; Liu, A.; Long, J. QTSAC: An Energy-Efficient MAC Protocol for Delay Minimization in Wireless Sensor Networks. IEEE Access 2018, 6, 8273–8291.

- Liu, A.; Huang, M.; Zhao, M.; Wang, T. A Smart High-Speed Backbone Path Construction Approach for Energy and Delay Optimization in WSNs. IEEE Access 2018, 6, 13836–13854.

- Li, H.; Ota, K.; Dong, M. Learning IoT in Edge: Deep Learning for the Internet-of-Things with Edge Computing. IEEE Netw. Mag. 2018, 32, 96–101.

- LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444.

- Rajpurkar, P.; Hannun, A.Y.; Haghpanahi, M.; Bourn, C.; Ng, A.Y. Cardiologist-Level Arrhythmia Detection with Convolutional Neural Networks. arXiv, 2017; arXiv:1707.01836.

- Acharya, U.R.; Fujita, H.; Lih, O.S.; Hagiwara, Y.; Tan, J.H.; Adam, M. Application of Deep Convolutional Neural Network for Automated Detection of Myocardial Infarction Using ECG Signals. Inf. Sci. 2017, 415–416, 190–198.

- Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going deeper with convolutions. In Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 1–9.

- Iandola, F.N.; Moskewicz, M.W.; Ashraf, K.; Han, S.; Dally, W.J.; Keutzer, K. SqueezeNet: AlexNet-level accuracy with 50× fewer parameters and <0.5 MB model size. arXiv, 2016; arXiv:1602.07360.

- Howard, A.G.; Zhu, M.; Chen, B.; Kalenichenko, D.; Wang, W.; Weyand, T.; Andreetto, M.; Adam, H. MobileNets: Efficient Convolutional Neural Networks for Mobile Vision Applications. arXiv, 2017; arXiv:1704.04861.

- Kingma, D.P.; Ba, J. Adam: A Method for Stochastic Optimization. arXiv, 2014; arXiv:1412.6980.

- Tao, X.; Ota, K.; Dong, M.; Qi, H.; Li, K. Performance Guaranteed Computation Offloading for Mobile-Edge Cloud Computing. IEEE Wirel. Commun. Lett. 2017, 6, 774–777.

- Feng, J.; Liu, Z.; Wu, C.; Ji, Y. HVC: A Hybrid Cloud Computing Framework in Vehicular Environments. In Proceedings of the IEEE International Conference on Mobile Cloud Computing, Services, and Engineering, San Francisco, CA, USA, 7–9 April 2017; pp. 9–16.

- Li, L.; Ota, K.; Dong, M. DeepNFV: A Light-weight Framework for Intelligent Edge Network Functions Virtualization. IEEE Netw. Mag. 2018, in press.

- Li, L.; Ota, K.; Dong, M. Everything is Image: CNN-based Short-term Electrical Load Forecasting for Smart Grid. In Proceedings of the 11th International Conference on Frontier of Computer Science and Technology (FCST-2017), Exeter, UK, 21–23 June 2017.

- Dong, M.; Zheng, L.; Ota, K.; Guo, S.; Guo, M.; Li, L. Improved Resource Allocation Algorithms for Practical Image Encoding in a Ubiquitous Computing Environment. J. Comput. 2009, 4, 873–880.

- Sifre, L.; Mallat, S. Rigid-Motion Scattering for Texture Classification. Comput. Sci. 2014, 3559, 501–515.

- Khorrami, H.; Moavenian, M. A comparative study of DWT, CWT and DCT transformations in ECG arrhythmias classification. Expert Syst. Appl. 2010, 37, 5751–5757.

- Al-Naima, F.; Al-Timemy, A. Neural Network Based Classification of Myocardial Infarction: A Comparative Study of Wavelet and Fourier Transforms. Available online: http://cdn.intechweb.org/pdfs/9163.pdf (accessed on 10 April 2018).

- Martis, R.J.; Acharya, U.R.; Prasad, H.; Chua, C.K.; Lim, C.M. Automated detection of atrial fibrillation using Bayesian paradigm. Knowl.-Based Syst. 2013, 54, 269–275.

- Acharya, U.R.; Fujita, H.; Adam, M.; Lih, O.S.; Sudarshan, V.K.; Tan, J.H.; Koh, J.E.; Hagiwara, Y.; Chua, C.K.; Poo, C.K. Automated Characterization and Classification of Coronary Artery Disease and Myocardial Infarction by Decomposition of ECG Signals: A Comparative Study. Inf. Sci. 2016, 377, 17–29.

- Dallali, A.; Kachouri, A.; Samet, M. Classification of Cardiac Arrhythmia Using WT, HRV, and Fuzzy C-Means Clustering. Signal Process. Int. J. 2011, 5, 101–108.

- Cheng, P.; Dong, X. Life-Threatening Ventricular Arrhythmia Detection with Personalized Features. IEEE Access 2017, 5, 14195–14203.

- Mocchegiani, E.; Muzzioli, M.; Giacconi, R.; Cipriano, C.; Gasparini, N.; Franceschi, C.; Gaetti, R.; Cavalieri, E.; Suzuki, H. Detection of Life-Threatening Arrhythmias Using Feature Selection and Support Vector Machines. IEEE Trans. Biomed. Eng. 2014, 61, 832–840.

- Zheng, Y.; Liu, Q.; Chen, E.; Ge, Y.; Zhao, J.L. Time Series Classification Using Multi-Channels Deep Convolutional Neural Networks; Springer International Publishing: New York, NY, USA, 2014; pp. 298–310.

- Acharya, U.R.; Oh, S.L.; Hagiwara, Y.; Tan, J.H.; Adam, M.; Gertych, A.; Tan, R.S. A deep convolutional neural network model to classify heartbeats. Comput. Biol. Med. 2017, 89, 389.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. arXiv, 2015; arXiv:1512.03385.

- Lin, M.; Chen, Q.; Yan, S. Network In Network. arXiv, 2013; arXiv:1312.4400.

- Tang, Y. Deep Learning using Linear Support Vector Machines. arXiv, 2013; arXiv:1306.0239.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Delving Deep into Rectifiers: Surpassing Human-Level Performance on ImageNet Classification. In Proceedings of the 2015 IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015; pp. 1026–1034.

- Srivastava, N.; Hinton, G.; Krizhevsky, A.; Sutskever, I.; Salakhutdinov, R. Dropout: A Simple Way to Prevent Neural Networks from Overfitting. J. Mach. Learn. Res. 2014, 15, 1929–1958.

- Bouvrie, J. Notes on Convolutional Neural Networks. Available online: https://www.mdpi.com/2072-4292/8/4/271/pdf (accessed on 10 April 2018).

- Association for the Advancement of Medical Instrumentation. Testing and Reporting Performance Results of Cardiac Rhythm and ST Segment Measurement Algorithms: Fact Sheet; AAMI: Brisbane, Australia, 2012.

- Moody, G.B.; Mark, R.G. The impact of the MIT-BIH Arrhythmia Database. IEEE Eng. Med. Biol. Mag. 2001, 20, 45–50.

- Pedregosa, F.; Gramfort, A.; Michel, V.; Thirion, B.; Grisel, O.; Blondel, M.; Prettenhofer, P.; Weiss, R.; Dubourg, V.; Vanderplas, J. Scikit-learn: Machine Learning in Python. J. Mach. Learn. Res. 2012, 12, 2825–2830.

- Abadi, M.; Barham, P.; Chen, J.; Chen, Z.; Davis, A.; Dean, J.; Devin, M.; Ghemawat, S.; Irving, G.; Isard, M. TensorFlow: A system for large-scale machine learning. In Proceedings of the 12th USENIX Symposium on Operating Systems Design and Implementation (OSDI ’16), Savannah, GA, USA, 2–4 November 2016.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet Classification with Deep Convolutional Neural Networks. In Proceedings of the 25th International Conference on Neural Information Processing Systems (NIPS’12), Lake Tahoe, NV, USA, 3–6 December 2012; Curran Associates Inc.: Red Hook, NY, USA, 2012; Volume 1, pp. 1097–1105.

- Bani-Hasan, A.M.; El-Hefnawi, M.F.; Kadah, M.Y. Model-based parameter estimation applied on electrocardiogram signal. J. Comput. Biol. Bioinform. Res. 2011, 3, 25–28.

| Layer | Sub-layer | Kernel Size | Stride | No. of Filters / Units |
|---|---|---|---|---|
| Standard Conv. | | 1 × 5 | 1 | 5 |
| Max-Pooling | | 1 × 2 | 2 | 5 |
| Lite Module | Squeeze Conv. | 1 × 1 | 1 | 3 |
| | Standard Conv. | 1 × 1 | 1 | 6 |
| | | 1 × 2 | 1 | 6 |
| | | 1 × 3 | 1 | 6 |
| | Depthwise Conv. | 1 × 2 | 1 | 6 |
| | | 1 × 3 | 1 | 6 |
| | Pointwise Conv. | 1 × 1 | 1 | 6 |
| | | 1 × 1 | 1 | 6 |
| Max-Pooling | | 1 × 2 | 2 | 18 |
| Dense | | | | 30 |
| Dense | | | | 20 |

| Predicted Label | True Label: Normal | True Label: Arrhythmia |
|---|---|---|
| Normal | True Positive (TP) | False Positive (FP) |
| Arrhythmia | False Negative (FN) | True Negative (TN) |

| Evaluation | Excellent | Good | Fair | Poor | Failure |
|---|---|---|---|---|---|
| AUC range | 0.9–1.0 | 0.8–0.9 | 0.7–0.8 | 0.6–0.7 | 0.5–0.6 |

| Optimizer | ACC (%) | AUC (%) | F1-Measure (%) |
|---|---|---|---|
| SGD | 95.66 | 96.67 | 98.65 |
| Adam | 97.87 | 97.78 | 99.33 |

| Optimizer | ACC (%) | AUC (%) | F1-Measure (%) |
|---|---|---|---|
| SGD | 96.67 | 97.55 | 98.34 |
| Adam | 98.80 | 99.30 | 99.66 |

| Network | PC | ACC (%) | AUC (%) | F1-Measure (%) |
|---|---|---|---|---|
| AlexNet | 1100 | 97.89 | 98.48 | 99.35 |
| GoogleNet | 1276 | 98.34 | 98.79 | 99.58 |
| SqueezeNet | 528 | 97.53 | 97.28 | 99.09 |
| MobileNets | 550 | 97.45 | 97.34 | 99.12 |
| LiteNet | 454 | 97.87 | 97.78 | 99.33 |

| Network | PC | ACC (%) | AUC (%) | F1-Measure (%) |
|---|---|---|---|---|
| AlexNet | 1100 | 98.83 | 99.05 | 99.68 |
| GoogleNet | 1276 | 99.01 | 99.24 | 99.53 |
| SqueezeNet | 528 | 98.29 | 98.92 | 99.41 |
| MobileNets | 550 | 98.20 | 98.82 | 99.28 |
| LiteNet | 454 | 98.80 | 99.30 | 99.66 |

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


He, Z.; Zhang, X.; Cao, Y.; Liu, Z.; Zhang, B.; Wang, X. LiteNet: Lightweight Neural Network for Detecting Arrhythmias at Resource-Constrained Mobile Devices. Sensors 2018, 18, 1229. https://doi.org/10.3390/s18041229
