Infrared and Visible Image Fusion Based on Different Constraints in the Non-Subsampled Shearlet Transform Domain

Abstract

1. Introduction

2. Methods

2.1. Preliminaries

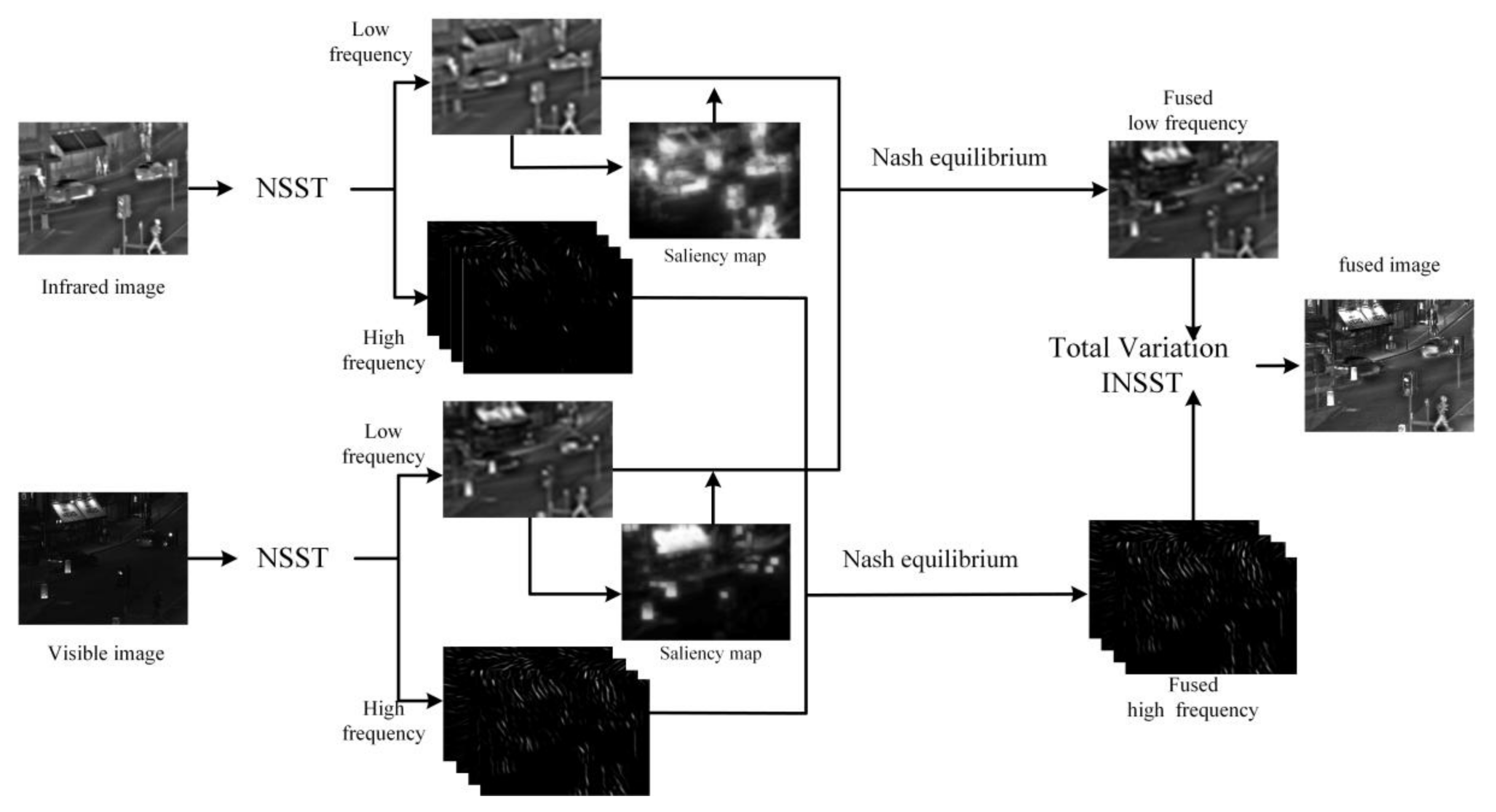

2.2. Proposed Method

2.2.1. Model of Image Fusion

2.2.2. Strategy for High Frequency Coefficients

2.3. Strategy for Low-Frequency Coefficients

2.4. Nash Equilibrium to Update the Coefficients

| Algorithm 1. The proposed method | |
|---|---|
| Input: infrared image $I$, visible image $V$ | |
| Output: fused image $f$ | |
| Step 1. | Initialize parameters, , , |
| Step 2. | Decompose $I$ and $V$ by NSST to obtain $I_d$, $\{I_g\}$ and $V_d$, $\{V_g\}$. |
| Step 3. | Compute $E_g$ and $E_d$ by Equations (12) and (18). If $E_g < \sigma_g$ and $E_d < \sigma_d$, compute $f_d$ and $f_g$; otherwise, update $\alpha$ to minimize $E_g$ and $E_d$ and return to Step 3. |
| Step 4. | Apply the inverse NSST to $f_d$ and $f_g$ to obtain the fused image $f$. |
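Algorithm 1 is an iterate-until-threshold loop. The Python sketch below illustrates only that control flow, under explicit assumptions. NSST is replaced by a hypothetical undecimated two-band split (box-filter low-pass plus residual), which is *not* a shearlet transform. Equations (12) and (18) are not reproduced in this excerpt, so $E_d$ and $E_g$ are replaced by hypothetical quadratic energies. The bands are blended with a single weight $\alpha$, and $\alpha$ is updated by a damped Newton step. Reading the subscript $d$ as the low-frequency band and $g$ as the high-frequency bands is also an assumption. All names (`decompose`, `energy`, `fuse`, `lam_i`, `lam_v`, `lr`, `max_iter`) are illustrative.

```python
import numpy as np


def box_blur(img, k=5):
    """k x k mean filter with edge padding (stand-in low-pass filter)."""
    pad = k // 2
    padded = np.pad(img, pad, mode="edge")
    h, w = img.shape
    out = np.zeros((h, w), dtype=float)
    for dy in range(k):
        for dx in range(k):
            out += padded[dy:dy + h, dx:dx + w]
    return out / (k * k)


def decompose(img):
    """Hypothetical stand-in for NSST (NOT a shearlet transform): an undecimated
    split into one low-frequency band and a list holding one detail band."""
    img = np.asarray(img, dtype=float)
    low = box_blur(img)
    return low, [img - low]


def reconstruct(low, highs):
    """Inverse of decompose(): the bands simply add back up."""
    return low + sum(highs)


def energy(f, a, b, lam_a, lam_b):
    """Hypothetical quadratic energy standing in for Eq. (12) / Eq. (18)."""
    return lam_a * np.mean((f - a) ** 2) + lam_b * np.mean((f - b) ** 2)


def fuse(I, V, alpha=0.9, sigma_g=1e-3, sigma_d=1e-3,
         lam_i=1.0, lam_v=1.0, lr=0.5, max_iter=100):
    """Sketch of Algorithm 1's control flow; returns (fused, alpha, converged)."""
    # Step 2: decompose both inputs with the stand-in transform.
    I_d, I_g = decompose(I)
    V_d, V_g = decompose(V)
    bands = [(I_d, V_d)] + list(zip(I_g, V_g))  # index 0: low band (d)

    def blend(w):
        # Candidate fused bands f_d, f_g for a given weight w.
        return [w * a + (1 - w) * b for a, b in bands]

    # E_d + E_g is quadratic in alpha; S fixes its curvature.
    S = sum(np.mean((a - b) ** 2) for a, b in bands)
    converged = False
    for _ in range(max_iter):
        f = blend(alpha)
        E = [energy(fb, a, b, lam_i, lam_v) for fb, (a, b) in zip(f, bands)]
        E_d, E_g = E[0], sum(E[1:])
        # Step 3: accept once both energies are below their thresholds.
        if E_g < sigma_g and E_d < sigma_d:
            converged = True
            break
        curv = 2 * S * (lam_i + lam_v)
        if curv == 0:  # identical inputs: nothing left to minimise
            break
        # Otherwise update alpha with a damped Newton step on E_d + E_g.
        grad = 2 * S * (lam_i * (alpha - 1) + lam_v * alpha)
        alpha -= lr * grad / curv
    f = blend(alpha)
    # Step 4: inverse of the stand-in transform.
    return reconstruct(f[0], f[1:]), alpha, converged
```

For example, `fused, alpha, ok = fuse(I, V)` with two same-sized grey-level arrays returns the fused image, the final weight and whether both thresholds were met. With these toy energies, the minimiser is $\alpha = \lambda_I/(\lambda_I + \lambda_V)$. The loop can therefore stop early only when $\sigma_g$ and $\sigma_d$ exceed the minimum energies. `max_iter` guards against thresholds that can never be met.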

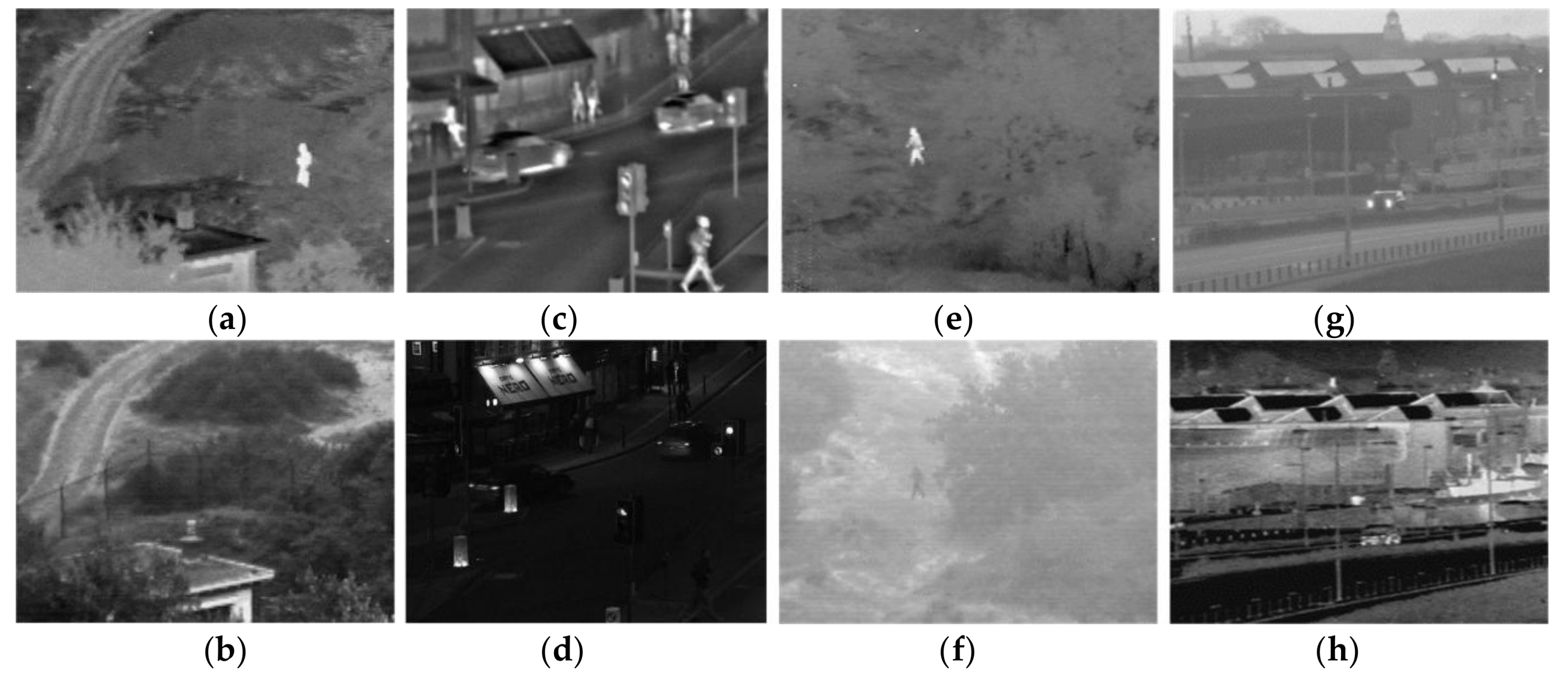

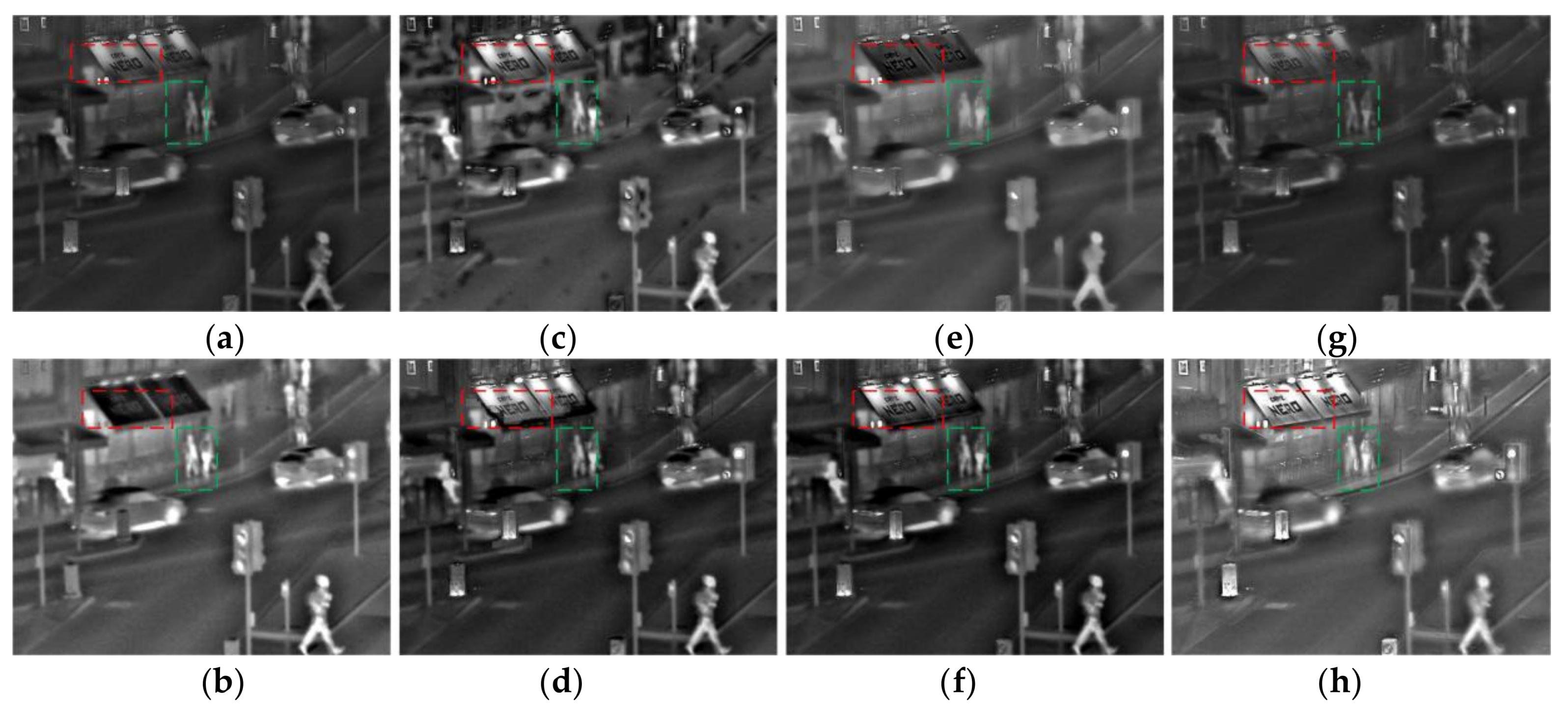

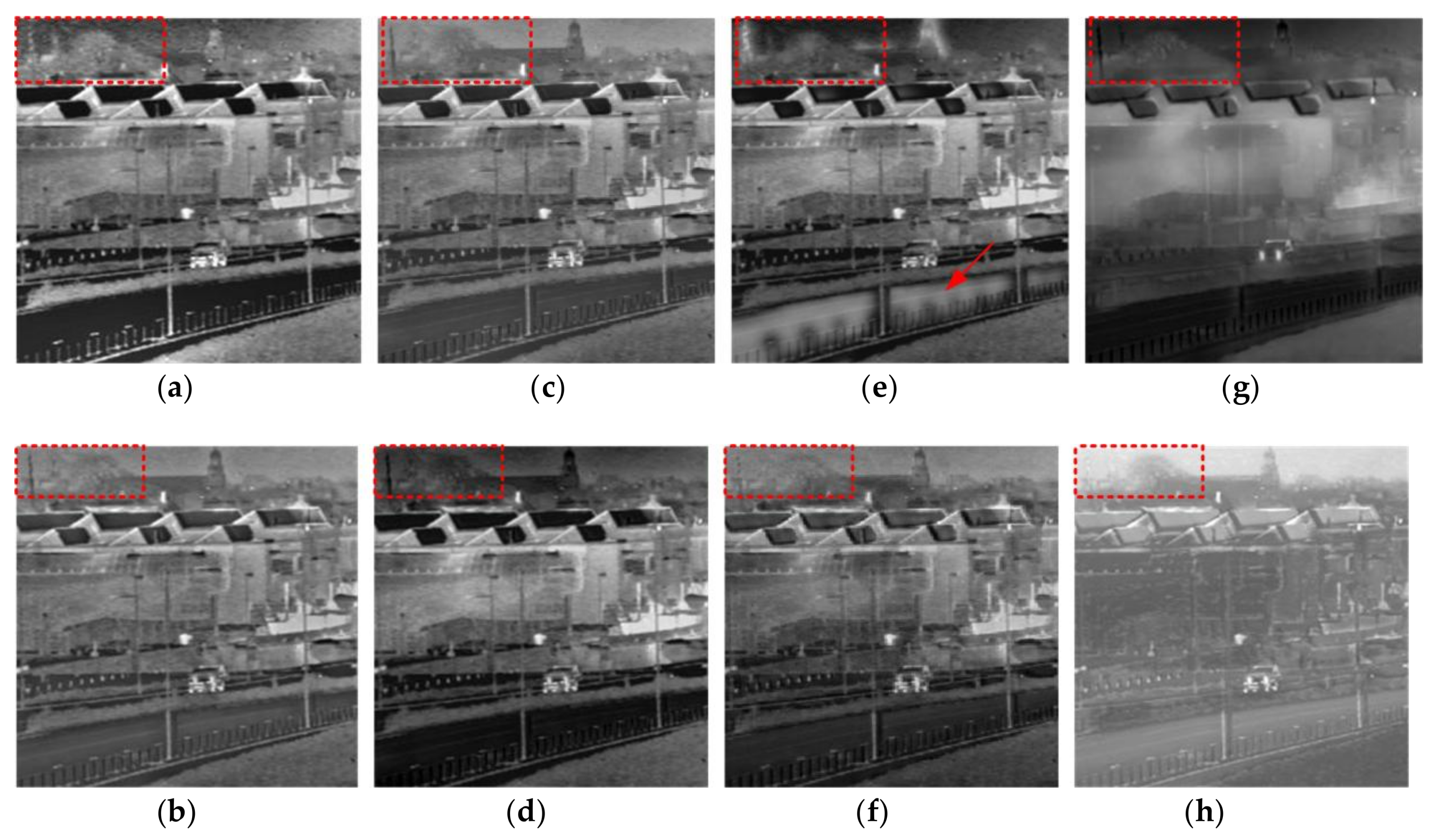

3. Results and Analysis

3.1. Performance Evaluation

3.2. Further Analysis

4. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

References

- Libao, Z.; Jue, Z. A new adaptive fusion method based on saliency analysis for remote sensing images. Chin. J. Lasers 2015, 42, 0114001.

- Wang, L.; Li, B.; Tian, L.-F. Multi-modal medical image fusion using the inter-scale and intra-scale dependencies between image shift-invariant shearlet coefficients. Inf. Fusion 2014, 19, 20–28.

- Wenshan, D.; Duyan, B.; Linyuan, H.; Dongpeng, W. Fusion of Infrared and Visible Image Based on Shearlet Transform and Neighborhood Structure Features. Acta Opt. Sinica 2017, 37, 1010002.

- Musheng, C.; Zhishan, C. Study on fusion of visual and infrared images based on NSCT. Laser Optoelectron. Prog. 2015, 52, 061002.

- Jin, H.Y.; Wang, Y. A fusion method for visible and infrared images based on contrast pyramid with teaching learning based optimization. Infrared Phys. Technol. 2014, 64, 134–142.

- Shahdoosti, H.D.; Ghassemian, H. Combining the spectral PCA and spatial PCA fusion methods by an optimal filter. Inf. Fusion 2016, 27, 150–160.

- Bavirisetti, D.P.; Dhuli, R. Two-scale image fusion of visible and infrared images using saliency detection. Infrared Phys. Technol. 2016, 76, 52–64.

- Rui, C.; Ke, Z.; Li, J. An image fusion algorithm using wavelet transform. Acta Electron. Sin. 2004, 32, 750–752.

- Do, M.; Vetterli, M. The contourlet transform: An efficient directional multiresolution image representation. IEEE Trans. Image Process. 2005, 14, 2091–2106.

- Wang, X.H.; Wei, T.T.; Zhou, Z.G. Remote sensing image fusion method based on contourlet coefficients’ correlativity of directional region. J. Remote Sens. 2010, 14, 905–916.

- Wang, N.Y. Multimodal medical image fusion framework based on simplified PCNN in Nonsubsampled contourlet transform domain. J. Multimed. 2013, 8, 270–276.

- Guo, K.H.; Labate, D.; Lim, W.; Weiss, G.; Wilson, E. Wavelets with composite dilations and their MRA properties. Appl. Comput. Harmon. Anal. 2006, 20, 202–236.

- Easley, G.; Labate, D.; Lim, W. Optimally sparse image representations using Shearlets. In Proceedings of the Fortieth Asilomar Conference on Signals, Systems and Computers (ACSSC’06), Pacific Grove, CA, USA, 29 October–1 November 2006.

- Easley, G.; Labate, D.; Lim, W. Sparse directional image representations using the discrete shearlet transform. Appl. Comput. Harmon. Anal. 2008, 25, 25–46.

- Luo, X.Q.; Zhang, C.Z.; Wu, X. A novel algorithm of remote sensing image fusion based on shift-invariant shearlet transform and regional selection. Int. J. Electron. Commun. 2016, 70, 186–197.

- Wan, W.; Yang, Y.; Lee, H.J. Practical remote sensing image fusion method based on guided filter and improved SML in the NSST domain. In Signal Image and Video Processing; Springer: London, UK, 2018; pp. 1–8.

- Ma, J.Y.; Chen, C.; Li, C.; Huang, J. Infrared and visible image fusion via gradient transfer and total variation minimization. Inf. Fusion 2016, 31, 100–109.

- Lei, W. Study for the Key Algorithms in Multi-Modal Medical Image Registration and Fusion; South China University of Technology: Guangzhou, China, 2013.

- Ma, Y.; Chen, J.; Chen, C.; Fan, F.; Ma, J.Y. Infrared and visible image fusion using total variation model. Neurocomputing 2016, 202, 12–19.

- Chan, T.F.; Osher, S.; Shen, J.H. The digital TV filter and nonlinear denoising. IEEE Trans. Image Process. 2001, 10, 231–241.

- Itti, L.; Koch, C.; Niebur, E. A Model of Saliency-Based Visual Attention for Rapid Scene Analysis. IEEE Trans. Pattern Anal. Mach. Intell. 1998, 20, 1254–1259.

- Liu, T.; Sun, J.; Zheng, N.N. Learning to detect a salient object. In Proceedings of the IEEE Conference on Computer Vision & Pattern Recognition, Minneapolis, MN, USA, 17–22 June 2007; Volume 33, pp. 1–8.

- Xie, Y.L.; Lu, H.C. Visual saliency detection based on Bayesian model. In Proceedings of the IEEE International Conference on Image Processing, Brussels, Belgium, 11–14 September 2011; Volume 263, pp. 645–648.

- Goferman, S.; Zelnik-Manor, L.; Tal, A. Context-Aware Saliency Detection. IEEE Trans. Pattern Anal. Mach. Intell. 2012, 34, 1915–1926.

- Yang, Z.; Li, D.H.; Yu, J.G. Saliency detection based on diffusion maps. Optik 2014, 125, 5202–5206.

- Feng, S.; Kai-yang, Q.; Wei, S.; Hong, G. Image saliency detection based on region merging. J. Comput. Aided Des. Comput. Graph. 2016, 28, 1679–1687.

- Li, S.T.; Kang, X.D.; Hu, J.W. Image fusion with guided filtering. IEEE Trans. Image Process. 2013, 22, 2864–2875.

- Wang, Z.; Bovik, A. A universal image quality index. IEEE Signal Process. Lett. 2002, 9, 81–84.

- Piella, G.; Heijmans, H. A new quality metric for image fusion. In Proceedings of the Tenth International Conference on Image Processing, Barcelona, Spain, 14–17 September 2003.

| Algorithm | Q | Qe | H | AVG | OCE |
|---|---|---|---|---|---|
| CP | 6.0401 | 55.7800 | 6.7564 | 5.3759 | 6.7892 |
| PCA | 5.7493 | 52.4211 | 7.4664 | 4.9450 | 6.2556 |
| GFF | 5.1017 | 45.3709 | 6.7829 | 4.2921 | 6.1763 |
| SAL | 6.0435 | 56.3253 | 7.1872 | 5.4797 | 6.4765 |
| GTF | 4.1171 | 35.6740 | 6.6778 | 3.4104 | 6.8306 |
| NSCT | 6.0186 | 50.6460 | 6.9740 | 4.8288 | 6.6516 |
| NSST | 3.4279 | 29.1192 | 6.2750 | 2.7837 | 5.9401 |
| Proposed | 6.1977 | 59.9885 | 6.6024 | 5.9197 | 6.9581 |

| Algorithm | Q | Qe | H | AVG | OCE |
|---|---|---|---|---|---|
| CP | 4.6699 | 43.5812 | 6.1763 | 4.4344 | 6.8412 |
| PCA | 4.1114 | 35.8304 | 6.9892 | 3.3226 | 7.1751 |
| GFF | 4.7462 | 41.7774 | 6.8365 | 3.9003 | 7.1223 |
| SAL | 4.9055 | 41.0244 | 6.8306 | 3.8867 | 7.3618 |
| GTF | 2.8752 | 25.9216 | 6.6204 | 2.4483 | 7.4233 |
| NSCT | 4.5353 | 38.1520 | 6.6516 | 3.5918 | 7.4169 |
| NSST | 2.5643 | 21.4194 | 5.9401 | 2.0280 | 7.3417 |
| Proposed | 5.0675 | 45.5668 | 6.8449 | 4.5225 | 7.4521 |

| Algorithm | Q | Qe | H | AVG | OCE |
|---|---|---|---|---|---|
| CP | 6.5900 | 36.4337 | 6.2311 | 4.2565 | 6.6155 |
| PCA | 2.8677 | 17.8803 | 5.9258 | 1.9602 | 6.4579 |
| GFF | 4.9492 | 28.1646 | 6.2764 | 3.2447 | 6.5692 |
| SAL | 5.3270 | 33.3692 | 6.2769 | 3.6519 | 6.7221 |
| GTF | 2.9241 | 21.2003 | 6.0205 | 2.1727 | 6.0142 |
| NSCT | 4.9417 | 29.5920 | 6.1613 | 3.2930 | 6.8353 |
| NSST | 3.1185 | 18.4770 | 5.9227 | 2.0706 | 6.9215 |
| Proposed | 5.7246 | 40.3332 | 6.6474 | 4.3008 | 7.2371 |

| Algorithm | Q | Qe | H | AVG | OCE |
|---|---|---|---|---|---|
| CP | 2.4312 | 62.4540 | 6.3764 | 4.5540 | 3.6240 |
| PCA | 3.6473 | 57.6553 | 5.9483 | 3.9369 | 2.7300 |
| GFF | 4.5772 | 53.543 | 5.4922 | 3.5770 | 2.0700 |
| SAL | 4.2346 | 42.4731 | 4.5290 | 1.3330 | 1.0230 |
| GTF | 1.8541 | 55.5872 | 6.5158 | 3.5109 | 2.3092 |
| NSCT | 3.9298 | 59.8357 | 6.1275 | 3.5552 | 3.0523 |
| NSST | 3.1185 | 52.2627 | 5.4491 | 3.8873 | 1.8936 |
| Proposed | 5.9032 | 65.4193 | 6.4327 | 4.5692 | 3.9829 |
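The tables report H and AVG among their metrics, but this excerpt does not define them. The sketch below assumes, as is common in the infrared and visible fusion literature, that H is the Shannon entropy of the 8-bit grey-level histogram and AVG is the average gradient. These definitions are assumptions rather than the paper's own equations. Q, Qe and OCE are omitted because their exact definitions cannot be recovered here. The function names `entropy` and `average_gradient` are illustrative.

```python
import numpy as np


def entropy(img):
    """Shannon entropy in bits of the 256-bin grey-level histogram."""
    levels = np.clip(np.round(np.asarray(img, dtype=float)), 0, 255).astype(np.uint8)
    hist = np.bincount(levels.ravel(), minlength=256)
    p = hist / hist.sum()
    p = p[p > 0]  # empty bins contribute 0 * log 0 = 0
    return float(-np.sum(p * np.log2(p)))


def average_gradient(img):
    """Mean of sqrt((dx^2 + dy^2) / 2) using forward differences."""
    f = np.asarray(img, dtype=float)
    dx = f[:-1, 1:] - f[:-1, :-1]  # horizontal difference
    dy = f[1:, :-1] - f[:-1, :-1]  # vertical difference
    return float(np.mean(np.sqrt((dx ** 2 + dy ** 2) / 2)))
```

Under these definitions, larger values are usually read as more information content (H) and sharper detail (AVG) in the fused image. The normalisation of AVG varies between papers, so values computed this way need not match the tables exactly.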

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Huang, Y.; Bi, D.; Wu, D. Infrared and Visible Image Fusion Based on Different Constraints in the Non-Subsampled Shearlet Transform Domain. Sensors 2018, 18, 1169. https://doi.org/10.3390/s18041169
