Moving Object Localization Based on UHF RFID Phase and Laser Clustering

Abstract

1. Introduction

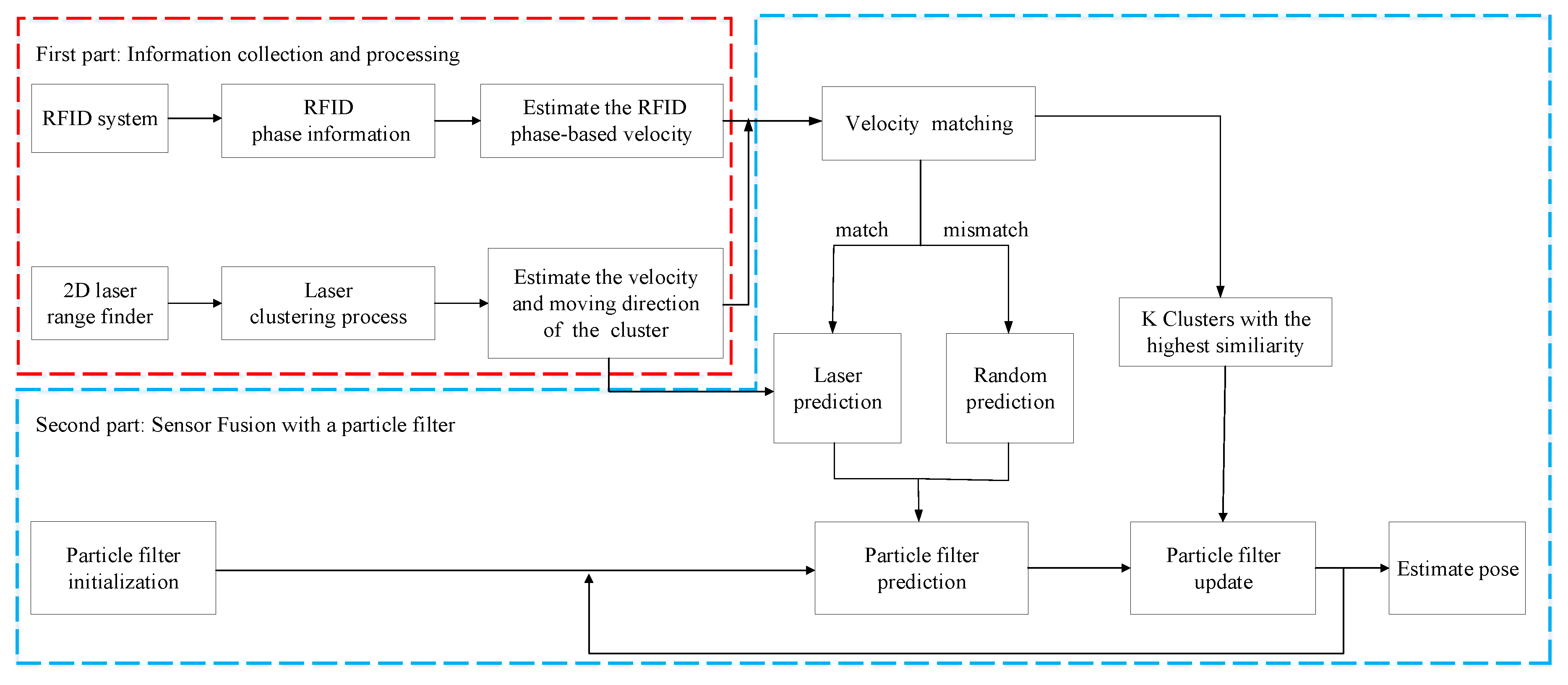

2. System Overview

3. Moving Object Localization Based on Particle Filtering

3.1. Computing RFID Phase-Based Velocity

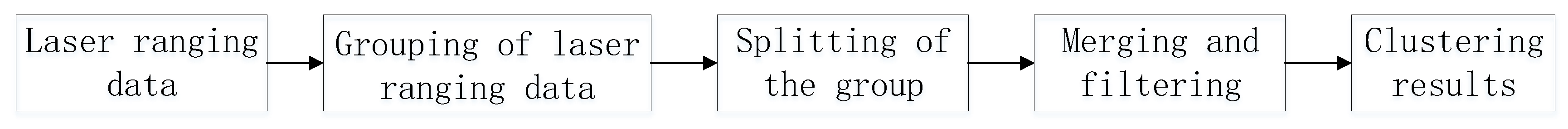

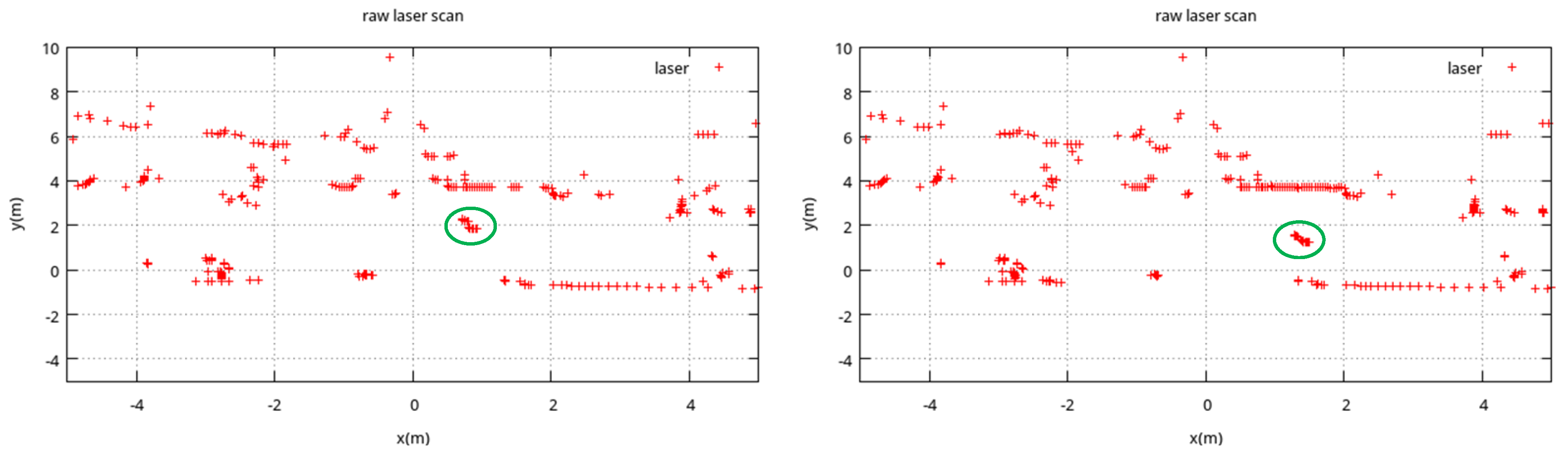

3.2. Clustering Laser Ranging Data

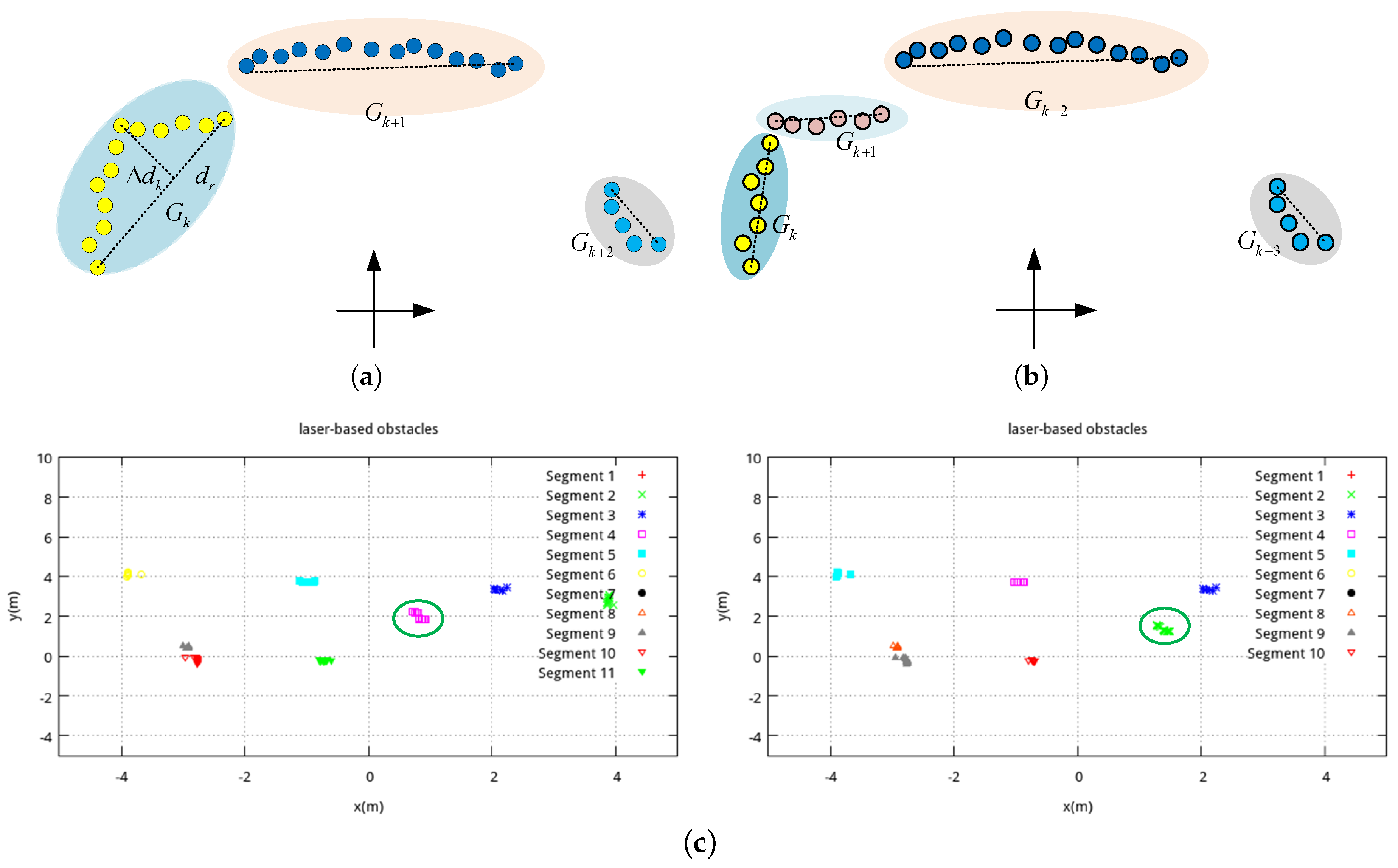

3.2.1. Grouping of Laser Ranging Data

3.2.2. Splitting of the Group

3.2.3. Merging and Filtering

3.3. Estimating the Distance-Based Velocity and Moving Direction of a Cluster

3.4. Similarity Computation Using Phase-Based and Distance-Based Velocity

3.5. Moving Object Localization with a Particle Filter Based on the K Best Clusters

3.5.1. Prediction

3.5.2. Update

3.5.3. Resampling

4. Experimental Results

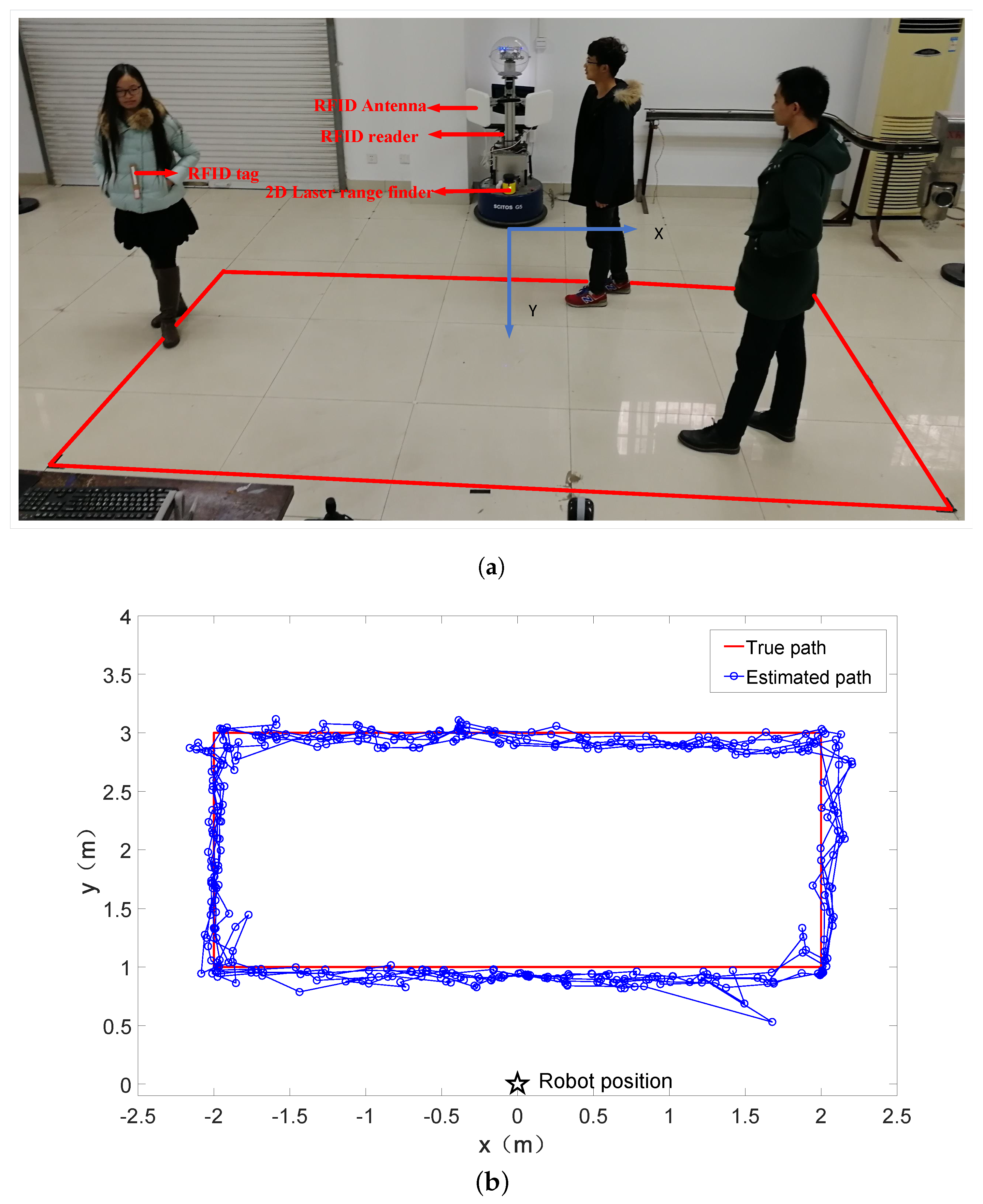

4.1. Experimental Setups

4.2. Impact of Different Parameters on the Positioning Accuracy

4.2.1. Impact of Different Antenna Configurations

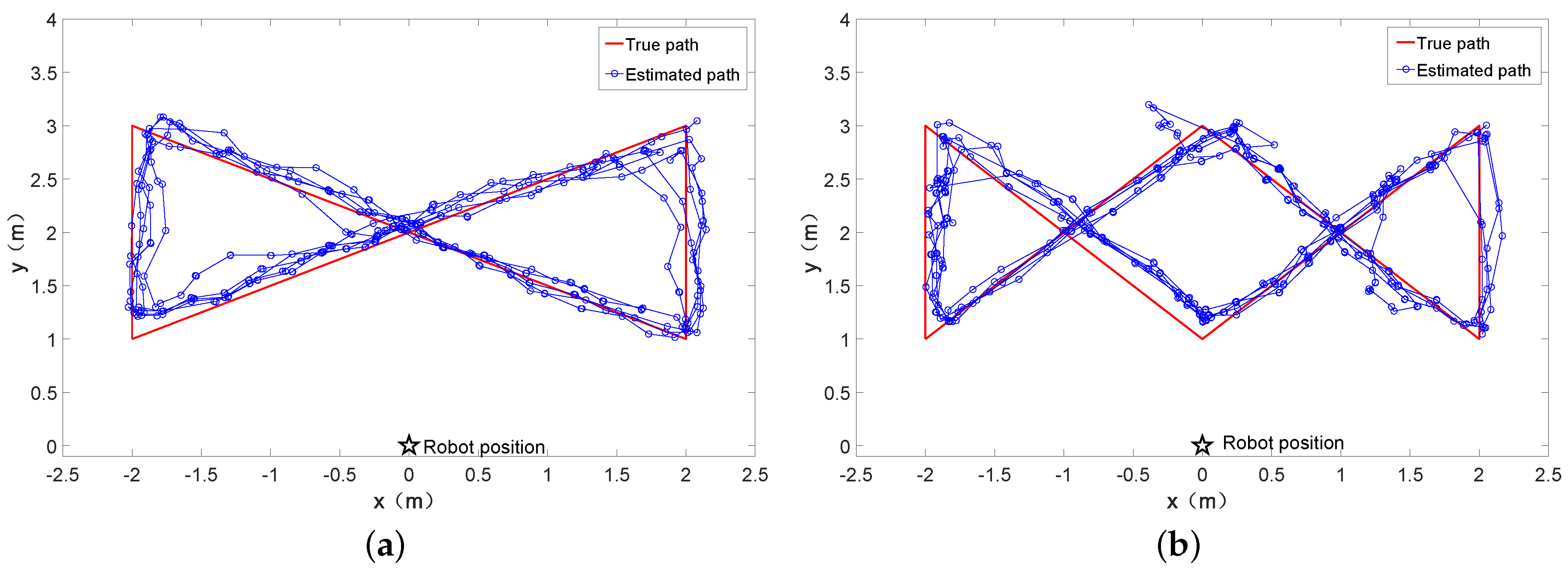

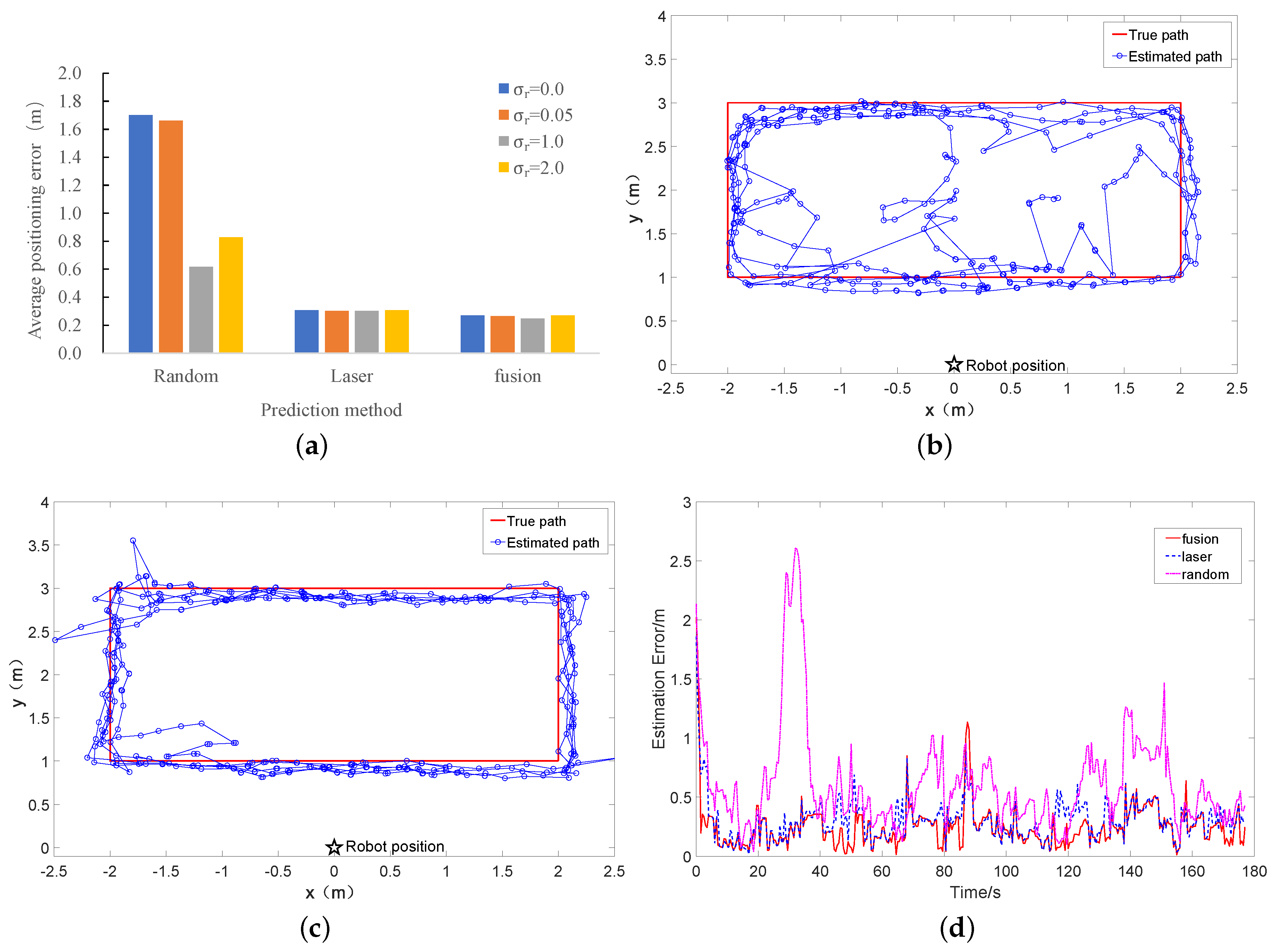

4.2.2. Impact of Different Prediction Forms

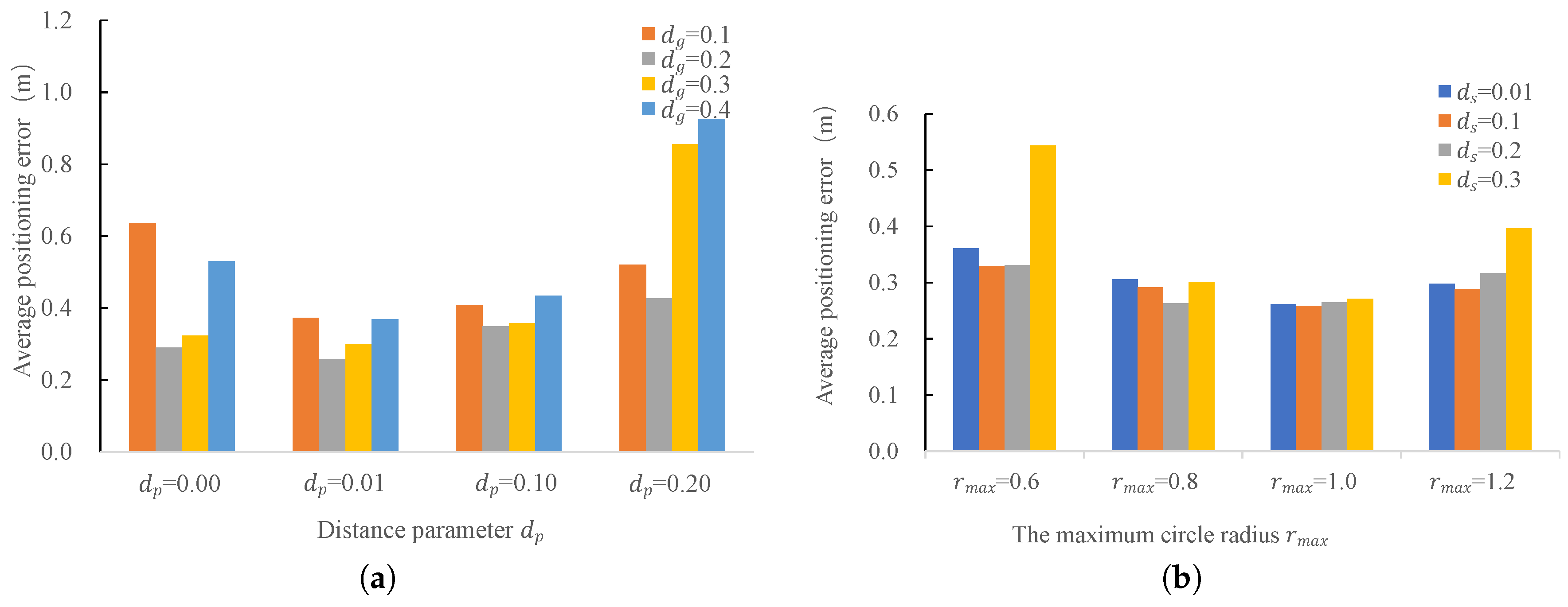

4.2.3. Impact of Different Parameters of Laser Clustering

4.2.4. Impact of the Number of Particles N

4.2.5. Comparison of Different Velocity Noise and Moving Direction Noise

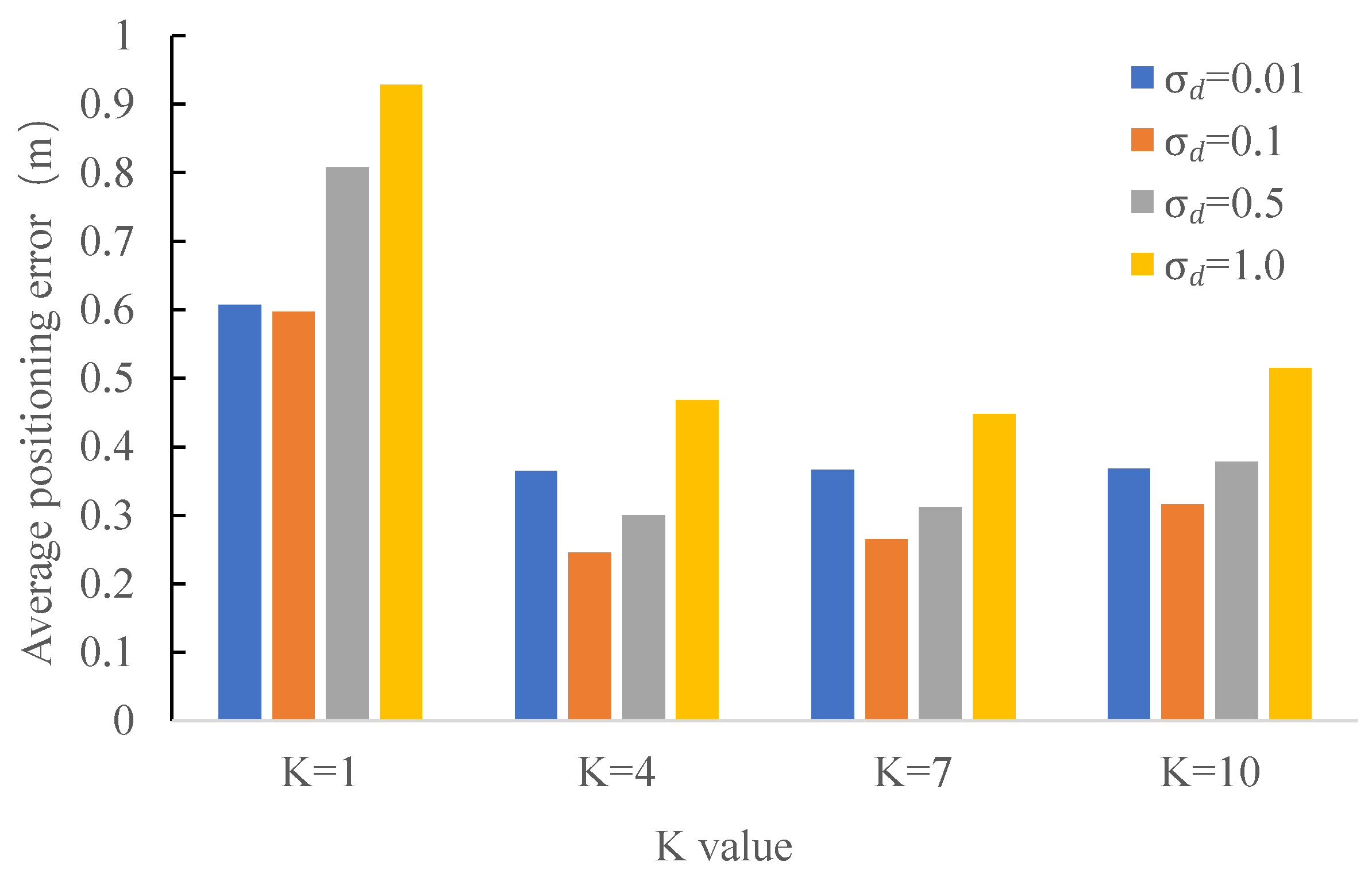

4.2.6. Impact of Different K and the Bandwidth Parameter

5. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| UHF | Ultra High Frequency |
| LBS | Location-Based Services |
| RFID | Radio Frequency Identification |
| Wi-Fi | Wireless Fidelity |
| RSS | Received Signal Strength |
| TOA | Time of Arrival |
| AOA | Angle of Arrival |
| WSN | Wireless Sensor Network |
| EKF | Extended Kalman Filter |
| VPH | Vector Polar Histogram |
| RMSE | Root Mean Square Error |


| Mathematical Symbol | Meaning |
|---|---|
| | Phase of RFID signal at time t |
| | Phase-based velocity of RFID tag at time t |
| | Grouping threshold in laser-based clustering |
| | Distance parameter in laser-based clustering |
| | Splitting threshold in laser-based clustering |
| | The maximum cluster radius |
| | The velocity of cluster i at time t |
| | The moving direction of cluster i at time t |
| K | The number of the best matching clusters |
| N | Number of particles |
| | The object position at time t |
| | Location of particle n at time t |
| | The weight of particle n at time t |
| | Gaussian noise in random prediction |
| | Gaussian noise added to the moving direction in laser prediction |
| | Gaussian noise added to the velocity in laser prediction |
| | The bandwidth parameter used to control the weight update of the particle filter |
| | Moving direction after adding Gaussian noise to the cluster l at time t |
| | Velocity after adding Gaussian noise to cluster l at time t |

| Antenna Combination | Only Right Antenna | Only Left Antenna | Right and Left Antennas |
|---|---|---|---|
| Positioning accuracy (m) | 1.24 | 1.41 | 0.258 |

| Number of Particles N | Accuracy (m) | Running Time (ms) |
|---|---|---|
| 5 | 0.457 | 4.187 |
| 10 | 0.295 | 4.535 |
| 50 | 0.279 | 4.655 |
| 100 | 0.256 | 4.858 |
| 500 | 0.254 | 6.654 |
| 1000 | 0.258 | 8.594 |

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Fu, Y.; Wang, C.; Liu, R.; Liang, G.; Zhang, H.; Ur Rehman, S. Moving Object Localization Based on UHF RFID Phase and Laser Clustering. Sensors 2018, 18, 825. https://doi.org/10.3390/s18030825
