Low Cost Efficient Deliverying Video Surveillance Service to Moving Guard for Smart Home

Abstract

1. Introduction

- Coverage deficiencies in LTE (especially in emerging countries).

- Limited coverage range and radio channel interference in WiFi [16], and prolonged channel access times (long contention periods) for WiFi terminals.

- Poor planning of dense WiFi networks.

- Inefficient behavior of Internet transport protocols over WiFi and LTE.

- Inefficient behavior of video servers (they usually do not detect efficiently whether the wireless terminal will actually receive the video frames).

- Inefficient behavior of video clients that cannot meet the deadlines associated with receiving and displaying video frames.

2. Background and Related Work

- Intelligent image processing. In [20,21], video surveillance systems focus mainly on detecting activities such as fights, assaults or excesses that may occur within an environment. The system contains external sensors that capture information from the environment using cameras, microphones and motion detectors. When a security alert occurs, a centralized broker will, for example, call the police or block doors. It does not support smart phones, so it does not mitigate the adverse effects of service interruptions. In [22], the authors proposed a prototype whose intrusion alerts allow detecting the movement and location of objects in a given area by processing the video coming from different cameras. Our system detects the movement of the thief, but we are not interested in his exact location. In [23], a system was presented that processes images to obtain the relative positions of objects, tolerates environmental adversities, and is combined with IoT to improve the acquisition of information. Kavi and Kulathumani [24] are able to detect the orientation of objects. We are not interested in the segmentation of objects or in the effect of environmental conditions, but in a real-time reception of images that gives the guard observing the intrusion in the Smart Home a first view of it. The problem of intelligent recognition of objects at nighttime using visible light cameras is treated in [25]. The authors proposed an interesting image recognition system for near infrared cameras that can operate in daytime and nighttime. Whichever type of camera is used, the problem of video service disruptions still exists. That is the reason why we only considered visible light cameras and full delivery of video. In [26], the authors proposed a video surveillance system that uses semantic reasoning and ontologies. This system is able to work with small and cheap cameras, reduce the required bandwidth and optimize the processing power. Our system is also able to use ontologies over an embedded processor.

- Smart codification and compression of objects. The main idea is to reduce the communication bandwidth needed between the server (which processes a large number of videos) and the client by sending only relevant information to the client (user) [27]. Because of the low economic cost we imposed on our system, we consider a small number of video cameras, so we did not need to implement a system of this type. In [28], a system was presented that extracts metadata of important objects to avoid the intractable problem of monitoring a large number of cameras. We did not deal with this problem because of the small number of cameras we considered.

- Synchronization of a high number of video streams. Pereira et al. [29] proposed a window strategy together with a correntropy function to synchronize video streams of online applications that require low computational power. We did not focus on the synchronization of video streams; instead, we focused on the adequate reception of the frames of a video stream. However, applying that technique to synchronize several video streams in our system would require a deep study of how to mitigate the adverse effects on several video streams at the same time.

- Usage of low-cost embedded computers for hosting the video streaming server, which connects to a video camera through Universal Serial Bus (USB) and uses a mobile network to transmit the video stream [30]. In [31], a built-in system is used that is based on the ARM9 processor (freely available), a 3G mobile network card and a USB camera that captures video using the H.264 standard and sends it to a video server. The user accesses it with an Android smart phone. Building an embedded system as a server and video processor is cheap because low-cost, freely distributed hardware and software are available for it. However, unlike us, the authors of [31] do not focus on real-time video streaming. We used a general-purpose embedded computer (Raspberry Pi) for the good performance it offers and its low economic cost.

- Based on the regulation of the transmission rate. These schemes estimate the highest possible video transmission rate [33].

- Based on an adaptive video buffer. These schemes seek the relationship between the buffer occupancy, the selected video bit rate and the available bandwidth [34]. Latency would increase for real-time video. Our system must minimize latency, so this scheme would be of limited use. In [35], the start-up latency, the playback fluidity, the average playback quality, the playback smoothness and the cost of real-time video are improved. In [36], the bandwidth tolerance to QoS degradation was managed.

- Based on the prediction of QoS parameters, which optimizes the allocation of resources and related variables in a control model such as Markov chains [37].

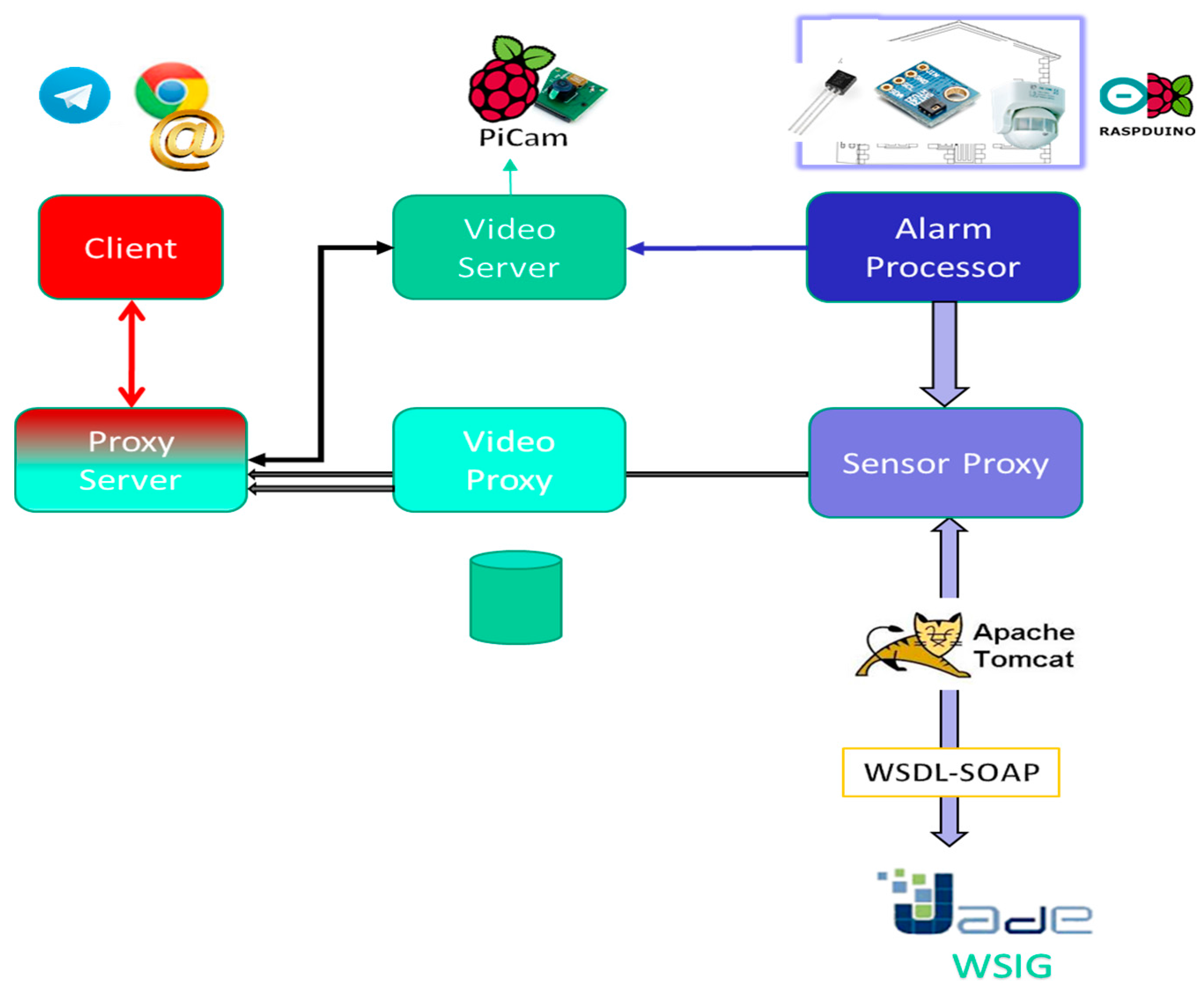

3. System Architecture and Operation

- The sensors continuously send sensed data (with a certain sampling period) to the Alarm processor.

- The video camera starts operating once the Alarm processor fuses the sensor data and determines that an intrusion has occurred. At that moment, the video is stored in the Video Server memory (a file that works as a buffer) and, simultaneously, an instant message (Telegram application) and/or an e-mail is sent to the Client (guard). The file containing the recorded video can be used as evidence before a judge.

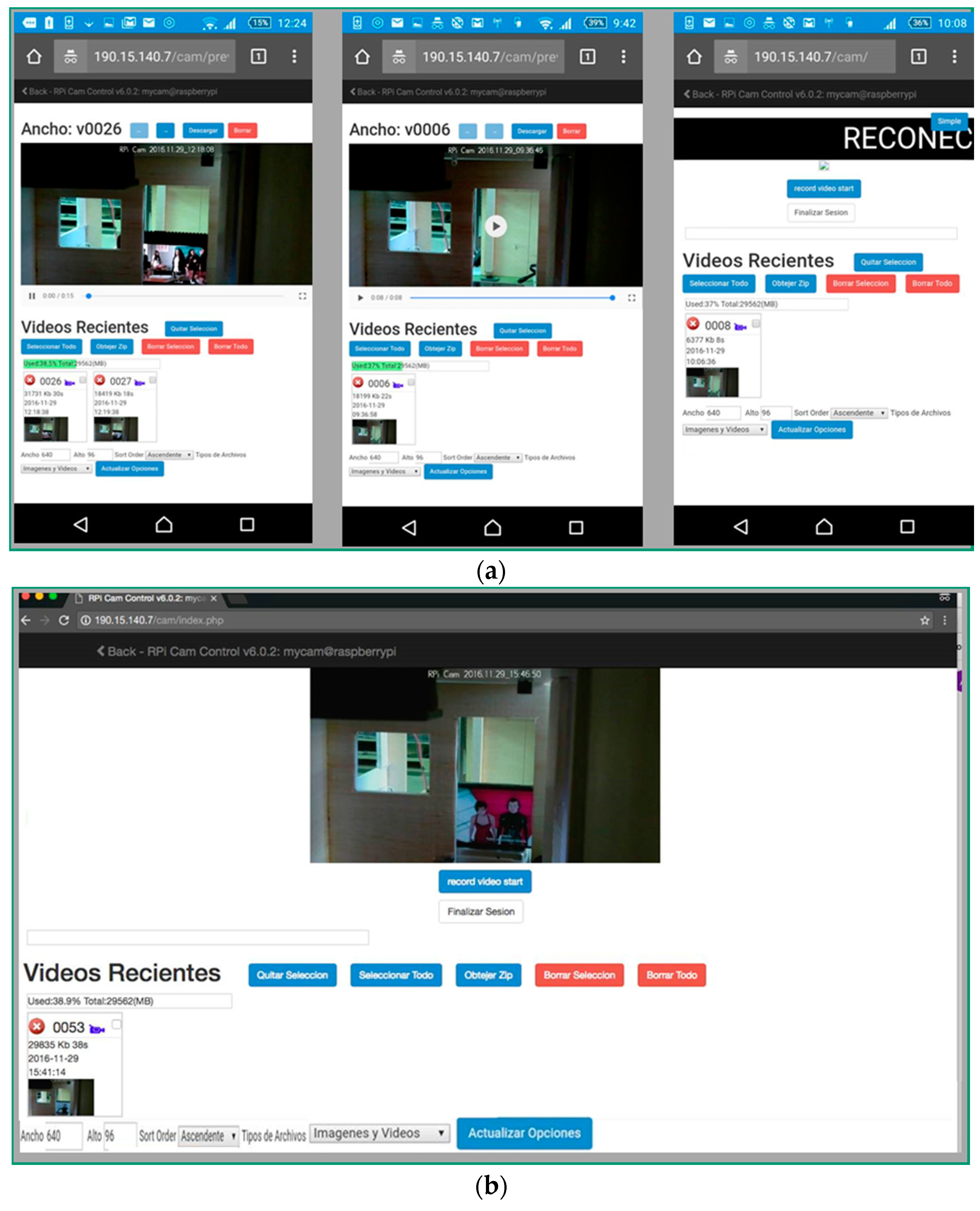

- The Client will receive a Telegram instant message and/or an e-mail. Whenever he wants, he can start a video streaming session on the Video Server by clicking a link (containing the Internet Protocol (IP) address of that server) in the instant message or in the e-mail text. If the Client receives the video while the server is producing it, the video is delivered in real time. However, the Client can also start an on-demand video streaming session at any later time, once the Video Server has finished recording that video.

- Video service disruption: The Video Server continuously registers the last set of frames sent to the Client that has not yet been consumed by the Client. When the Client experiences a service disruption, the Video Server resumes sending from that last set of video frames. There is no need to restart the video session because the Video Server also keeps the original session open for at most 10 min (the usual duration of an intrusion). In that simple way, nested disruptions can be easily managed.
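The disruption handling described in the last item can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the class `ResumableSession`, its methods and the acknowledgement mechanism are hypothetical. Only the 10 min session lifetime and the idea of resuming from the frames not yet consumed by the Client come from the text.

```python
import time

SESSION_TTL_S = 10 * 60  # from the text: the session stays open for at most 10 min


class ResumableSession:
    """Hypothetical server-side session that resumes from unconsumed frames."""

    def __init__(self, frames, clock=time.monotonic):
        self._frames = list(frames)   # recorded video, one item per frame
        self._cursor = 0              # index of the first frame not yet consumed
        self._clock = clock           # injectable clock (assumption, eases testing)
        self._last_ack = clock()      # last time the Client confirmed reception

    def expired(self):
        """True once the Client has been silent for longer than the session TTL."""
        return self._clock() - self._last_ack > SESSION_TTL_S

    def next_batch(self, size):
        """Frames to send now; after a disruption this repeats unconsumed frames."""
        if self.expired():
            raise TimeoutError("session closed: Client silent for more than 10 min")
        return self._frames[self._cursor:self._cursor + size]

    def ack(self, count):
        """The Client reports that it consumed `count` more frames."""
        self._cursor = min(self._cursor + count, len(self._frames))
        self._last_ack = self._clock()


session = ResumableSession(frames=range(10))
session.next_batch(4)          # frames 0..3 are sent
session.ack(2)                 # only frames 0 and 1 were consumed before the disruption
print(session.next_batch(4))   # → [2, 3, 4, 5]
```

Because the cursor only advances on an acknowledgement, a second disruption during recovery (a nested disruption) needs no special case in this sketch: the next batch simply starts again at the first unconsumed frame.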

3.1. Software Design Pattern Specification

- Model (Server): It is located in the Video Server (the video buffer that temporarily stores the pending video not yet sent to the Client, and other stored videos). The Model consists of an Observer software pattern in charge of controlling the videos. An external Observer software design pattern abstracts the data model corresponding to the sensed data. That Observer constantly tests the sensor data communicated to the Controller.

- View (Client or user): It consists of several views: the video display, the e-mail and Telegram message interfaces and the links that allow starting the video streaming. Another simple view is the disruption warning.

- Controller: It is the most important software pattern. It is in charge of all the control of video service disruptions, the control of the wireless channel to warn of possible disruptions and the cooperation with the sensor alarms. It consists of the cooperation among different standard software patterns that we present next. We distinguish software patterns internal to the Controller (inside the MVC) from software patterns external to the Controller (outside the main MVC pattern).
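The cooperation between the sensed-data Observer and the Controller can be illustrated with a short sketch. The class names `SensorModel` and `Controller`, the reading key `infrared_barrier_broken` and the alert rule are hypothetical choices made for this example. The text only states that an Observer pattern watches the sensor data communicated to the Controller.

```python
from typing import Callable, Dict, List

Reading = Dict[str, float]


class SensorModel:
    """Subject of the Observer pattern: forwards each reading to its observers."""

    def __init__(self) -> None:
        self._observers: List[Callable[[Reading], None]] = []

    def attach(self, observer: Callable[[Reading], None]) -> None:
        self._observers.append(observer)

    def publish(self, reading: Reading) -> None:
        for observer in self._observers:
            observer(reading)


class Controller:
    """Observer: collects the readings that satisfy an illustrative alert rule."""

    def __init__(self) -> None:
        self.alerts: List[Reading] = []

    def on_reading(self, reading: Reading) -> None:
        # Hypothetical rule: a broken infrared barrier counts as an intrusion.
        if reading.get("infrared_barrier_broken", 0.0) >= 1.0:
            self.alerts.append(reading)


model = SensorModel()
controller = Controller()
model.attach(controller.on_reading)
model.publish({"infrared_barrier_broken": 0.0})   # ignored
model.publish({"infrared_barrier_broken": 1.0})   # recorded as an alert
print(len(controller.alerts))                     # → 1
```

Keeping the subject unaware of what its observers do is what lets the same sensed-data model feed both the alarm logic and any additional view without changes to the model.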

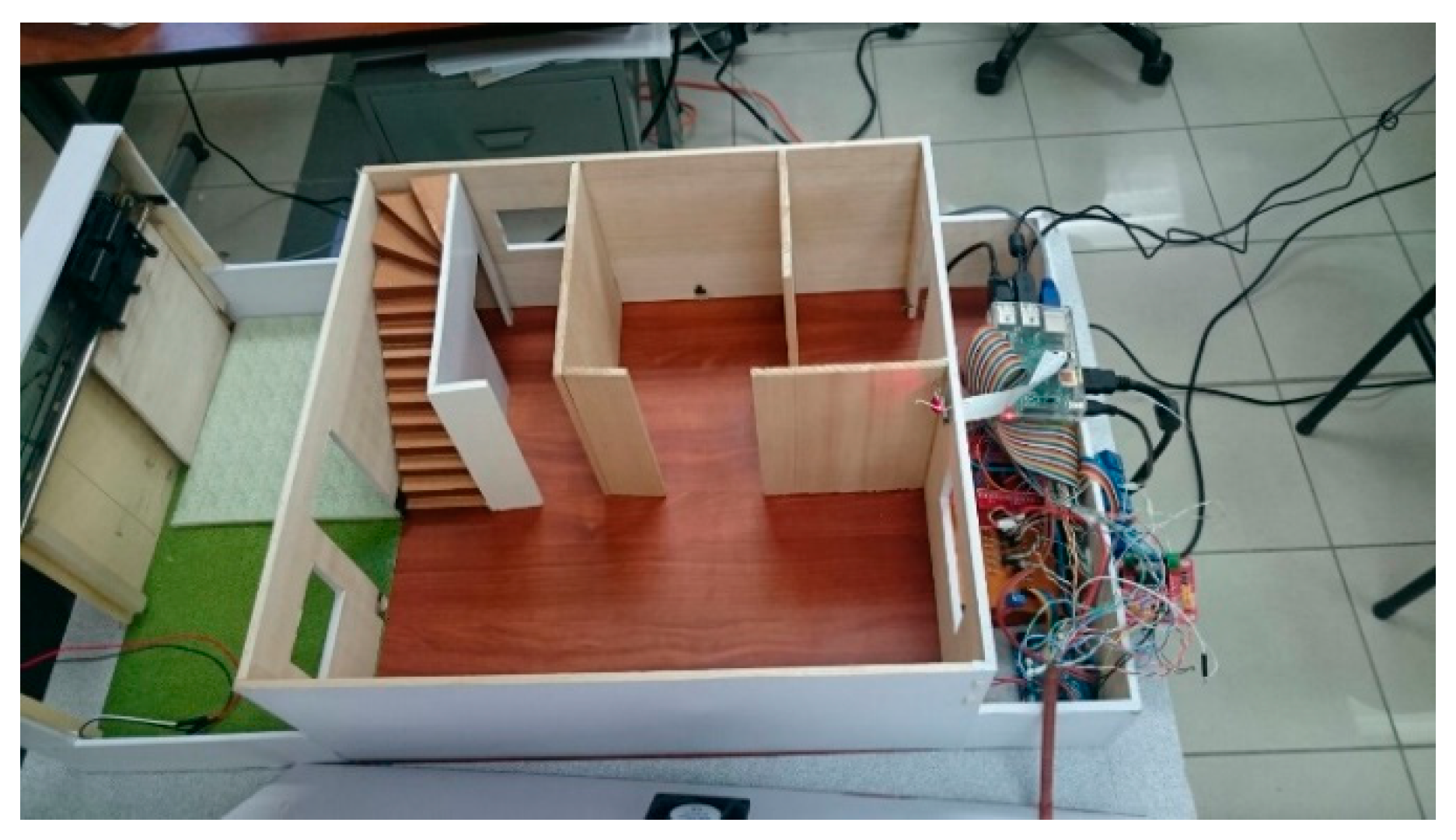

3.2. Low Cost Hardware Implementation

- Light: dimensions 65 × 11 × 13 mm, series Photoresistor-BH1750, measurement range 1–65535 lx, sampling period 2 s.

- Digital temperature (DS18B20): Each device has a unique 64-bit serial code stored in its Read Only Memory (ROM) and a multipoint capability that simplifies the design of temperature detection applications, and it can be powered from the data line. The power supply range is 3.0 V to 5.5 V. It measures temperatures from −55 °C to +125 °C (−67 °F to +257 °F), with an accuracy of ±0.5 °C from −10 °C to +85 °C. The resolution of the thermometer is user-selectable from 9 to 12 b, and it converts the temperature into 12 b codes in 750 ms (maximum).

- Infrared Barrier: 10.2 × 5.8 × 7 mm phototransistor, peak operating distance: 2.5 mm, operating range for collector current variation greater than 20%: 0.2 mm to 15 mm, typical output current under test: 1 mA, ambient light blocking filter, emitter wavelength: 950 nm.
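As a worked example of how the readings of these sensors could be checked, the sketch below converts a raw digital temperature code to degrees Celsius and tests values against the measurement ranges quoted above. The encoding is an assumption: a 16-bit two's-complement code with a step of 1/16 °C at 12 b, as is common for this family of 1-Wire thermometers. The text itself only gives the 9 to 12 b resolution. The function and dictionary names are hypothetical.

```python
# Measurement ranges quoted in the sensor list above.
RANGES = {
    "light_lx": (1, 65535),
    "temperature_c": (-55.0, 125.0),
}


def raw_to_celsius(raw, resolution_bits=12):
    """Convert a 16-bit two's-complement reading to degrees Celsius.

    Assumes 1/16 °C per least significant bit at 12 b, with the unused
    low-order bits ignored at coarser resolutions (assumption, see lead-in).
    """
    if not 9 <= resolution_bits <= 12:
        raise ValueError("resolution must be between 9 and 12 bits")
    if raw & 0x8000:                              # sign bit set: negative value
        raw -= 1 << 16
    raw &= ~((1 << (12 - resolution_bits)) - 1)   # drop undefined low bits
    return raw / 16.0


def in_range(sensor, value):
    """True if the value lies inside the range listed for that sensor."""
    low, high = RANGES[sensor]
    return low <= value <= high


print(raw_to_celsius(0x0191))                              # → 25.0625
print(raw_to_celsius(0x0191, resolution_bits=9))           # → 25.0
print(in_range("temperature_c", raw_to_celsius(0xFC90)))   # → True (-55.0 °C)
```

At 9 b the step is 0.5 °C, which is why the same raw code yields 25.0 °C instead of 25.0625 °C.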

3.3. Software Implementation Based on Agents, Web Services and Free Platforms

4. High Level Abstraction Model of Performance

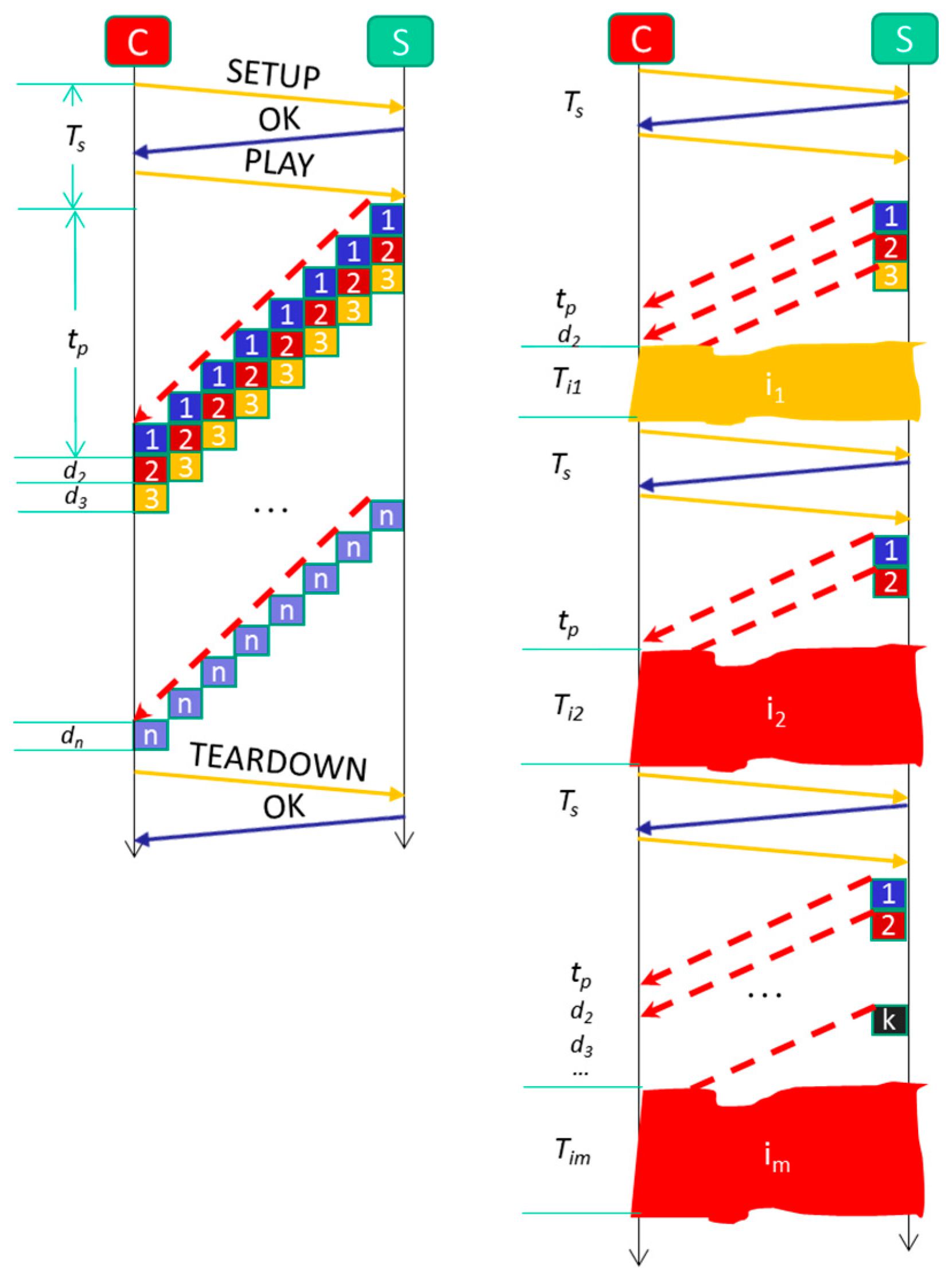

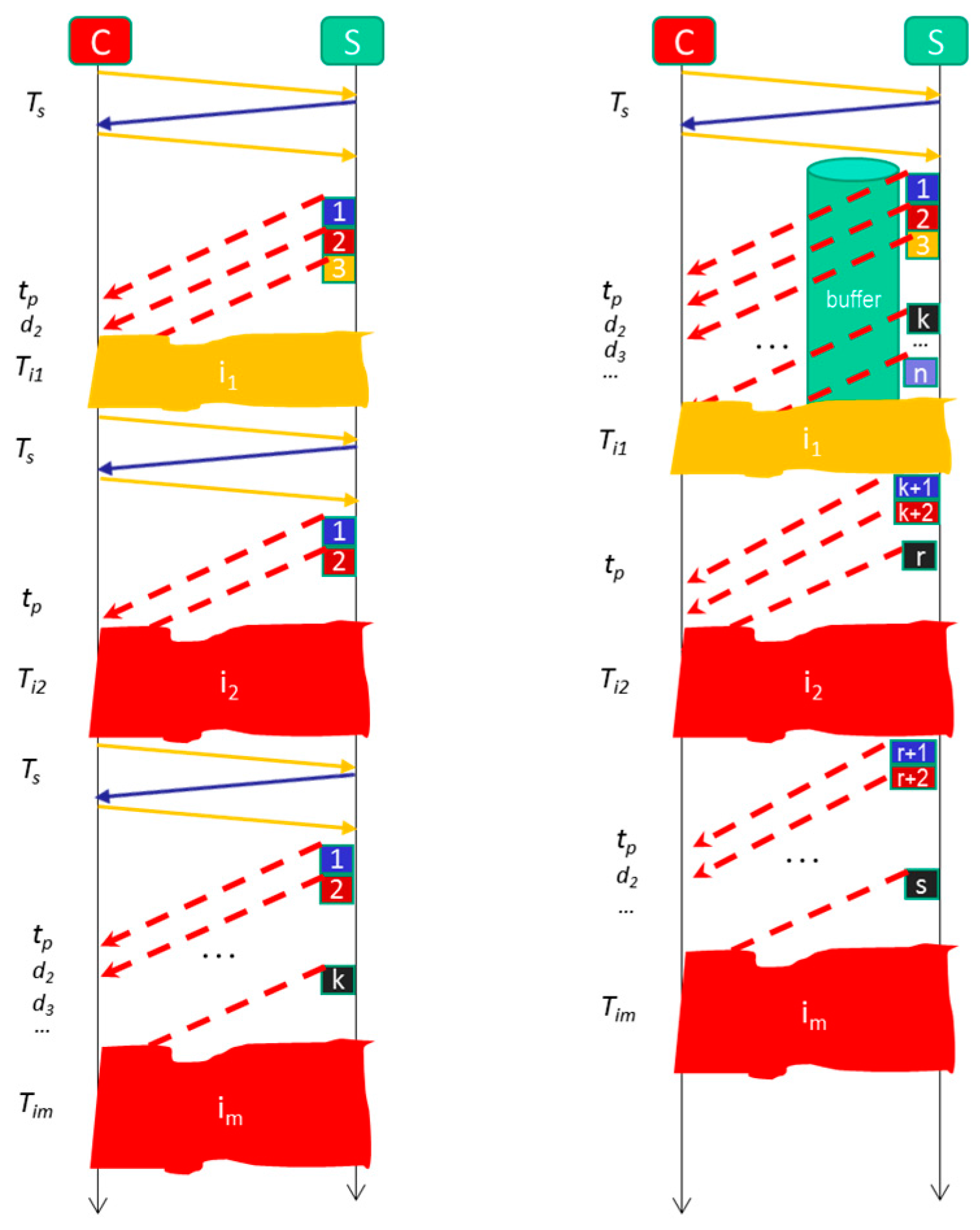

4.1. Formal Model for Video Streaming without Service Disruptions Mitigation

4.2. Formal Model for Video Streaming Mitigating Service Disruptions

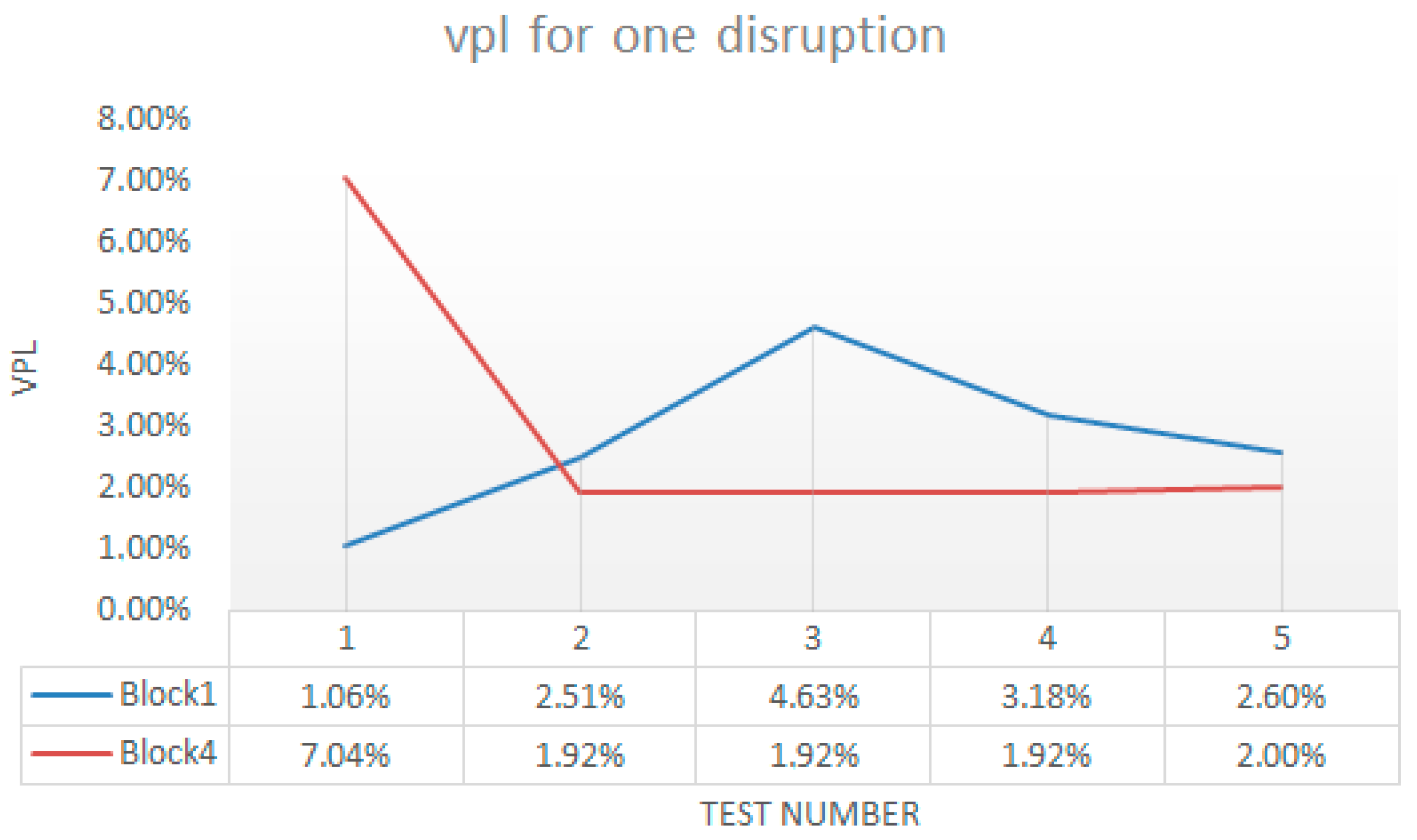

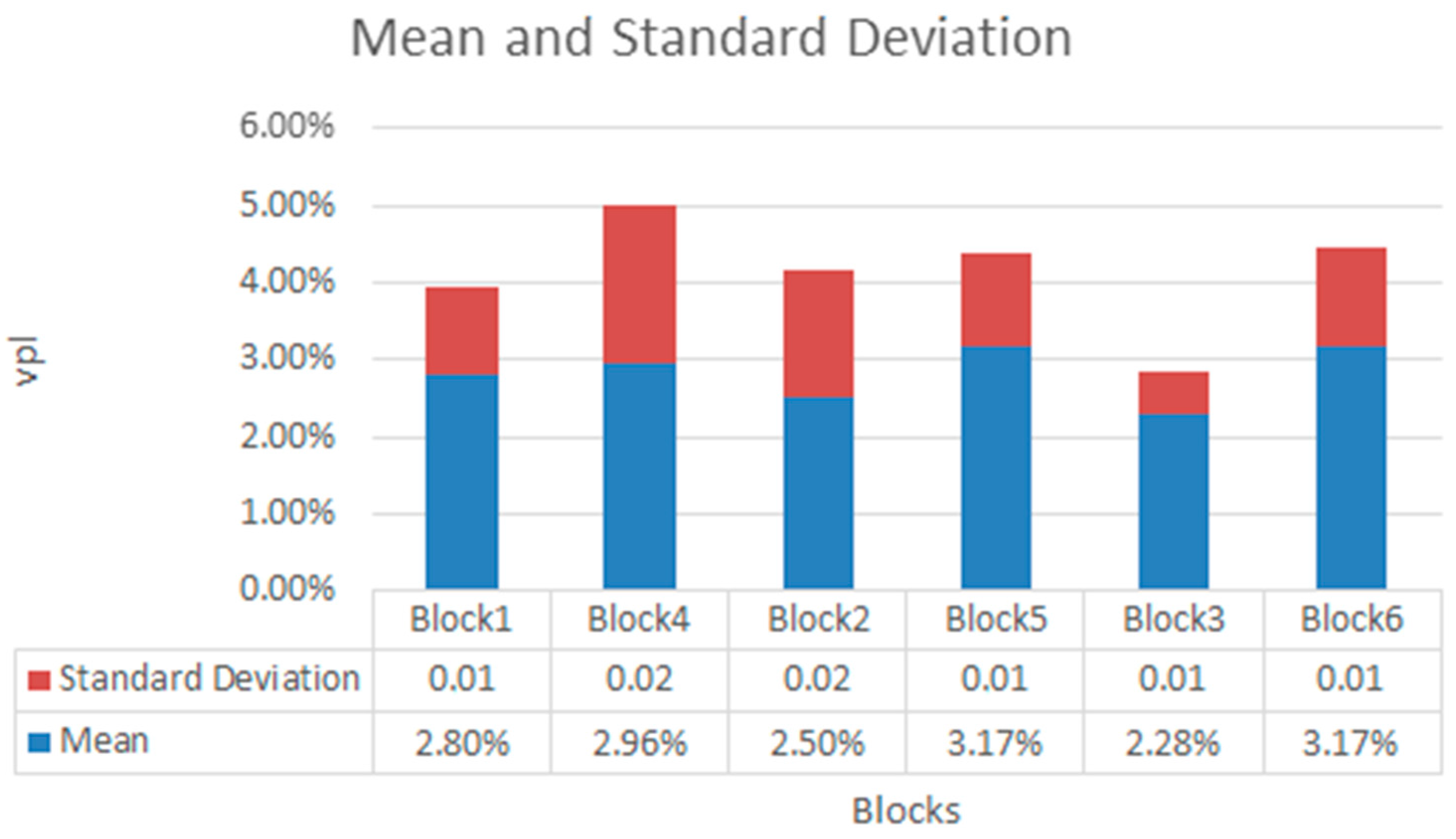

5. Experimental Results: Discussion

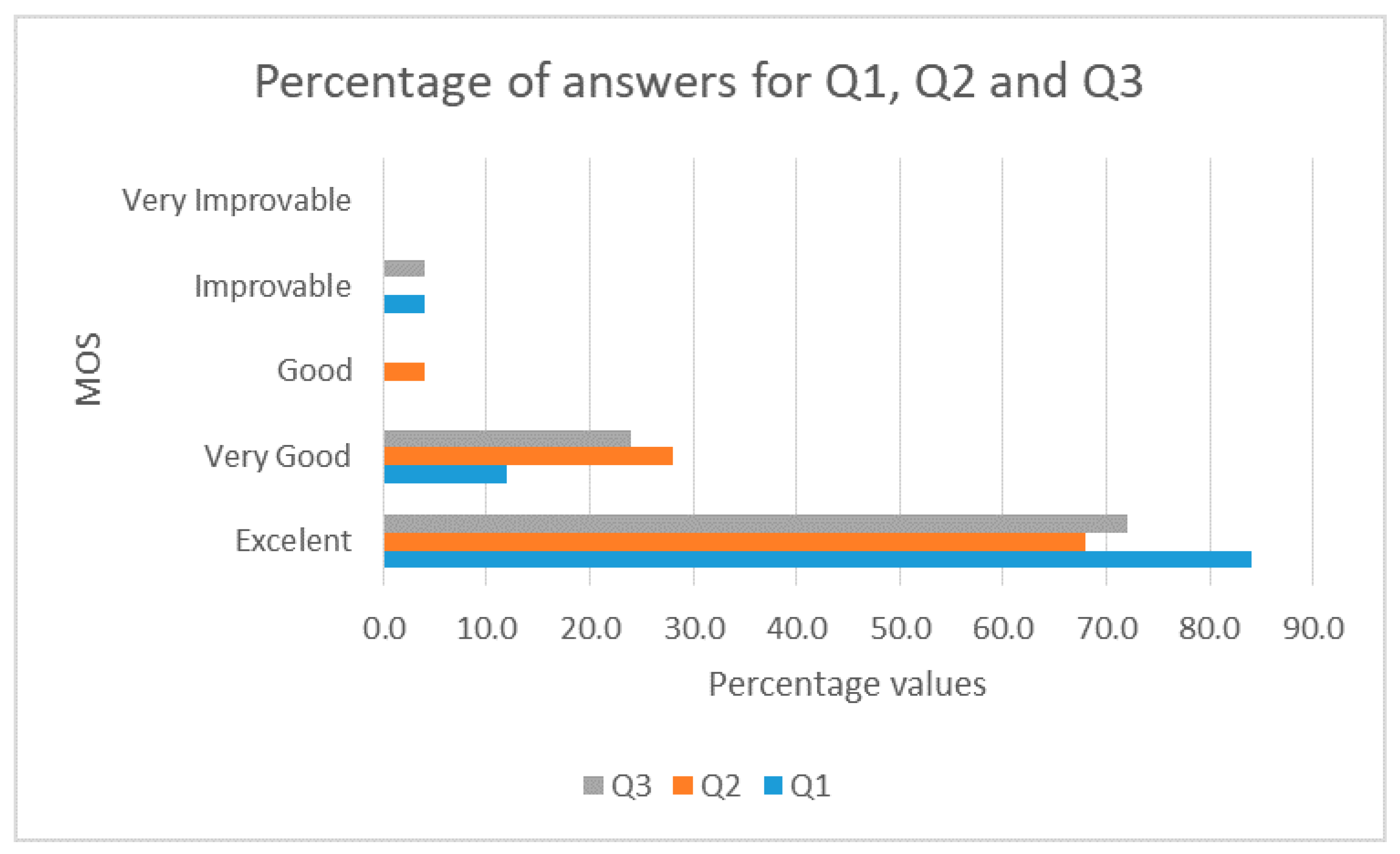

5.1. Discussion about QoE Performance

- Q1. How would you rate the design of the user interface of the application?

- Q2. How would you rate the usability of the application?

- Q3. How would you rate the interactivity of the application?

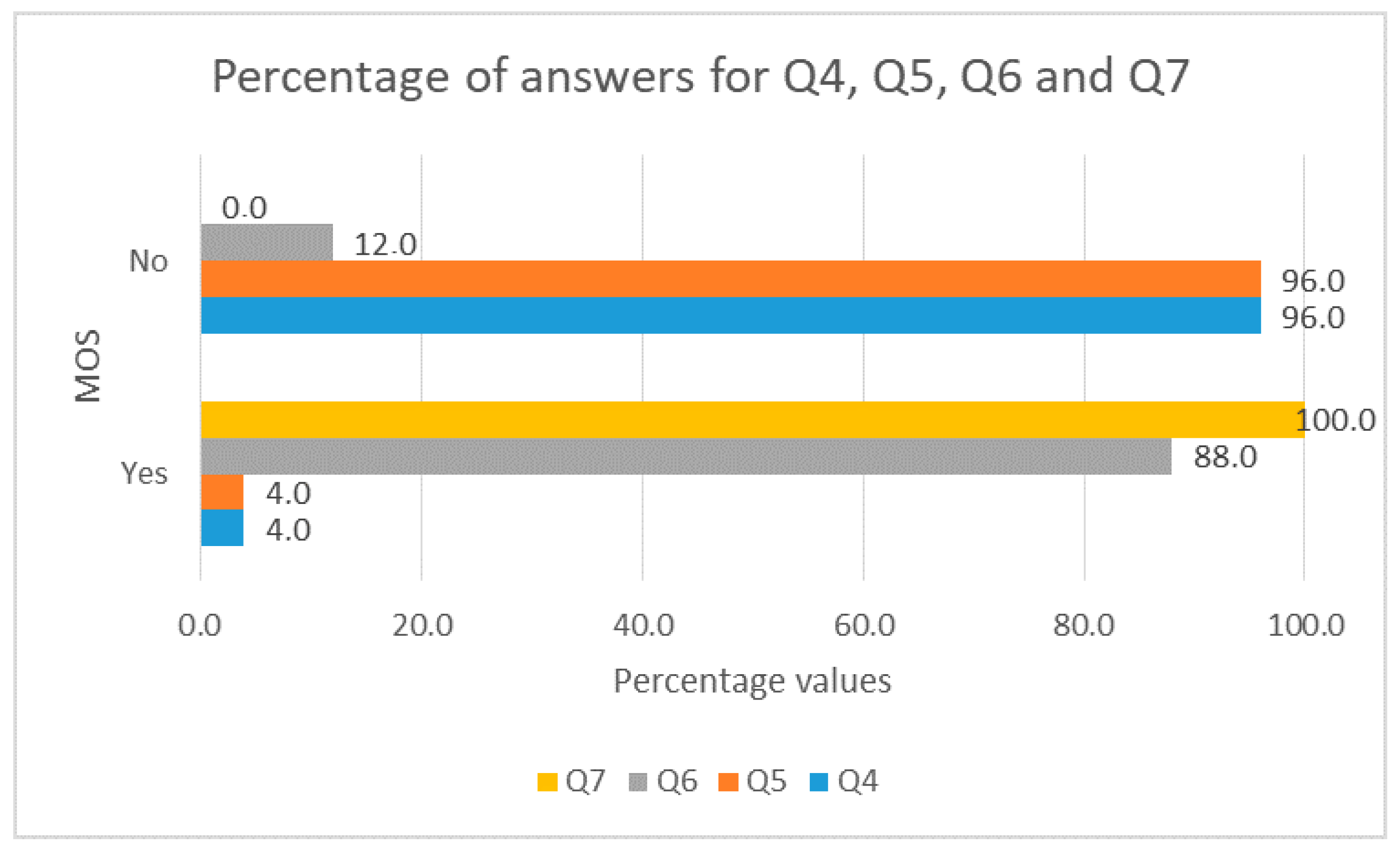

- Q4. Did you have problems accessing the application?

- Q5. Did the application have an execution error?

- Q6. Did the application reconnect the video without reloading the page?

- Q7. Did the application send e-mail and Telegram notification messages?

5.2. Discussion about QoS Performance

- WTerm 1: Sony Xperia Z3 Compact smart phone; 127 × 64.9 × 8.6 mm; 1280 × 720 pixels (4.6′′); Android 5.0; 4-core processor (2.5 GHz), Adreno Graphics Processing Unit; battery capacity 2600 mAh; 2 GB RAM; LTE and WiFi (IEEE 802.11 g/n/ac). This terminal was used in the blki (i = 1..3).

- WTerm 2: MacBook Pro 15′′ laptop; 1920 × 1200 pixels (15.4′′); macOS High Sierra; 2.2 GHz quad-core Intel Core i7, Turbo Boost up to 3.4 GHz, with 6 MB shared L3 cache; 4 GB of DDR3 SDRAM (two 2 GB SO-DIMM modules) at 1333 MHz; WiFi (IEEE 802.11 g/n/ac). This terminal was used in the blki (i = 4..6).

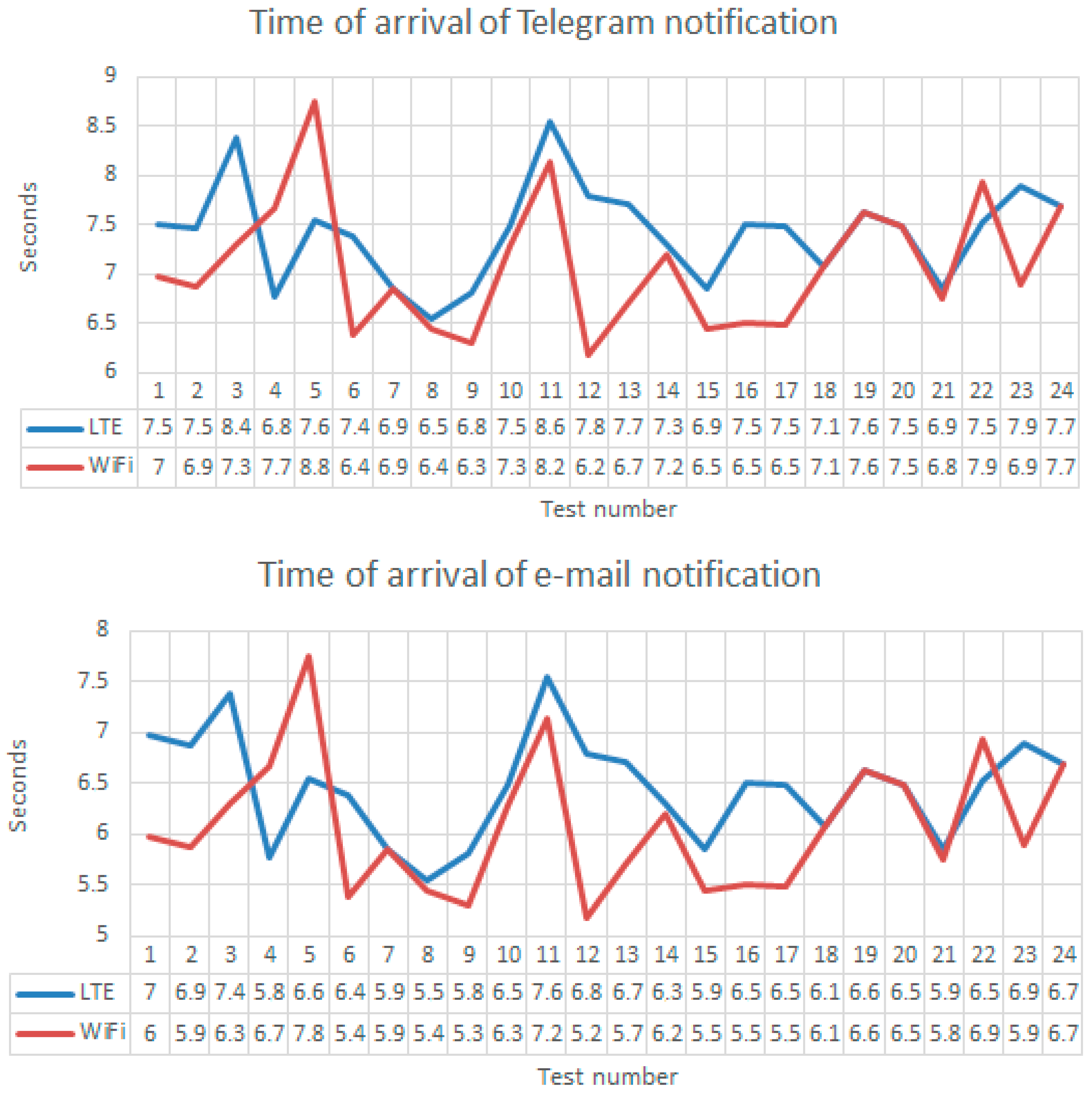

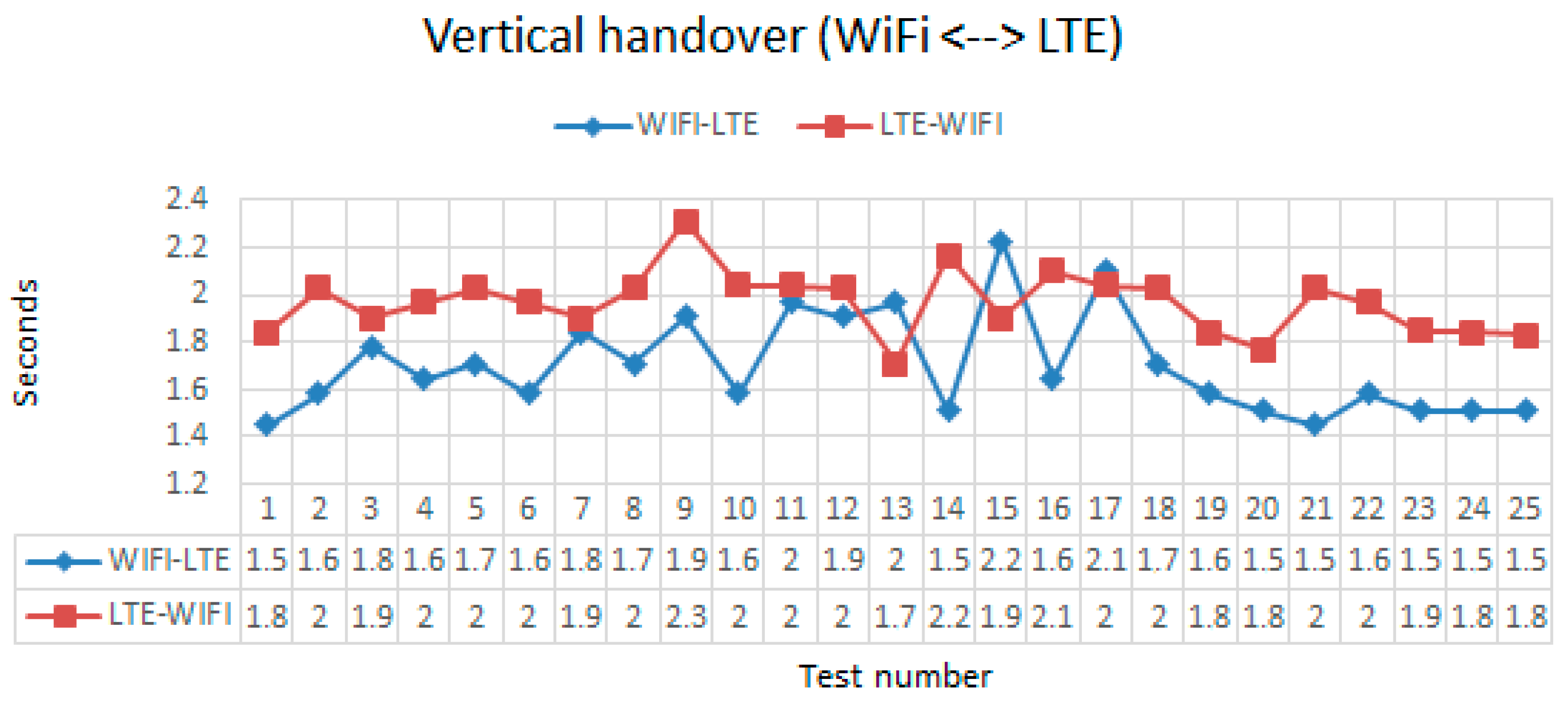

5.3. Notification Messages, Handover and Battery Consumption

6. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

References

- Mandula, K.; Parupalli, R.; Murty, C.A.S.; Magesh, E.; Lunagariya, R. Mobile based home automation using Internet of Things (IoT). In Proceedings of the International Conference on Control, Instrumentation, Communication and Computational Technologies (ICCICCT), Kumaracoil, India, 18–19 December 2015; IEEE: Piscataway, NJ, USA, 2015; pp. 340–343.

- Lee, J.-H.; Morioka, K.; Ando, N.; Hashimoto, H. Cooperation of Distributed Intelligent Sensors in Intelligent Environment. IEEE/ASME Trans. Mechatron. 2004, 9, 535–543.

- Karapistoli, E.; Economides, A.A. Wireless Sensor Network Security Visualization. In Proceedings of the Ultra Modern 4th International Congress on Telecommunications and Control Systems and Workshops (ICUMT), St. Petersburg, Russia, 3–5 October 2012; IEEE: Piscataway, NJ, USA, 2012; pp. 850–856.

- Macias, E.; Suarez, A.; Chiti, F.; Sacco, A.; Fantacci, R. A Hierarchical Communication Architecture for Oceanic Surveillance Applications. Sensors 2011, 11, 11343–11356.

- Ferryman, J.M.; Maybank, S.J.; Worrall, A.D. Visual Surveillance for Moving Vehicles. In International Journal of Computer Vision; Springer: Berlin/Heidelberg, Germany, 2000; Volume 37, pp. 187–197.

- Milosavljević, A.; Rančić, D.; Dimitrijević, A.; Predić, B.; Mihajlović, V. A Method for Estimating Surveillance Video Georeferences. ISPRS Int. J. Geo-Inf. 2017, 6, 211.

- He, B.; Yu, S. Parallax-Robust Surveillance Video Stitching. Sensors 2016, 16, 7.

- Villarrubia, G.; Bajo, J.; De Paz, J.F.; Corchado, J.M. Monitoring and Detection Platform to Prevent Anomalous Situations in Home Care. Sensors 2014, 14, 9900–9921.

- Fan, X.; Huang, H.; Qi, S.; Luo, X.; Zeng, J.; Xie, Q.; Xie, C. Sensing Home: A Cost-Effective Design for Smart Home via Heterogeneous Wireless Networks. Sensors 2015, 15, 30270–30292.

- Braeken, A.; Porambage, P.; Gurtov, A.; Ylianttila, M. Secure and Efficient Reactive Video Surveillance for Patient Monitoring. Sensors 2016, 16, 32.

- Sagar, R.N.; Sharmila, S.P.; Suma, B.V. Smart Home Intruder Detection System. International Journal of Advanced Research in Computer Engineering & Technology (IJARCET). 2017. Available online: http://ijarcet.org/wp-content/uploads/IJARCET-VOL-6-ISSUE-4-439-443.pdf (accessed on 26 February 2018).

- Getting Started with PiCam. Available online: https://projects.raspberrypi.org/en/projects/getting-started-with-picamera (accessed on 15 December 2017).

- Telegram.com. Available online: http://www.telegram.com/ (accessed on 15 December 2017).

- Rendon, W. Evaluación de Métodos para Realizar Mediciones de Calidad de Servicio VoIP en Redes Móviles de Cuarta Generación (LTE) en Ambientes Urbanos de la Ciudad de Guayaquil. Master's Thesis, Polytechnic University of Litoral (ESPOL), Guayas, Ecuador, March 2017. Available online: http://www.dspace.espol.edu.ec/xmlui/handle/123456789/38433?show=full (accessed on 15 December 2017).

- Gualotuña, T.; Macias, E.; Suárez, A.; Rivadeneira, A. Mitigando Efectos Adversos de Interrupciones del Servicio de Video-vigilancia del Hogar en Clientes WiFi Inalámbricos. In Proceedings of the XIII Jornadas de Ingeniería Telemática (JITEL 2017), Valencia, Spain, 27–29 September 2017; pp. 15–22.

- Santana, J.; Marrero, D.; Macías, E.; Mena, V.; Suárez, Á. Interference Effects Redress over Power-Efficient Wireless-Friendly Mesh Networks for Ubiquitous Sensor Communications across Smart Cities. Sensors 2017, 17, 1678.

- Macías, E.; Suárez, A.; Espino, F. Multi-platform video streaming implementation on mobile terminals. In Multimedia Services and Streaming for Mobile Devices: Challenges and Innovations; IGI Global: Hershey, PA, USA, 2012; Volume 14, pp. 288–314.

- Gamma, E.; Helm, R.; Johnson, R.; Vlissides, J. Design Patterns: Elements of Reusable Object-Oriented Software; Addison-Wesley Longman Publishing: Boston, MA, USA, 1995.

- Schmidt, D.C.; Fayad, M.; Johnson, R.E. Software patterns. Commun. ACM 1996, 39, 37–39.

- Nam, Y.; Rho, S.; Park, J.H. Intelligent video surveillance system: 3-tier context-aware surveillance system with metadata. In Multimedia Tools and Applications; Springer: Berlin/Heidelberg, Germany, 2012; Volume 57, pp. 315–334.

- Pang, J.M.; Yap, V.V.; Soh, C.S. Human Behavioral Analytics System for Video. In Proceedings of the IEEE International Conference on Control System, Computing and Engineering (ICCSCE), Batu Ferringhi, Malaysia, 28–30 November 2014; IEEE: Piscataway, NJ, USA, 2015.

- Liu, K.; Liu, T.; Jiang, J.; Chen, Q.; Ma, C.; Li, Y.; Li, D. Intelligent Video Surveillance System based on Distributed Fiber Vibration Sensing Technique. In Proceedings of the 11th Conference on Lasers and Electro-Optics Pacific Rim (CLEO-PR), Busan, Korea, 24–28 August 2015; OSA Publishing: Washington, DC, USA, 2015.

- Stepanov, D.; Tishchenko, I. The Concept of Video Surveillance System Based on the Principles of Stereo Vision. 2016. Available online: https://fruct.org/publications/fruct18/files/Ste2.pdf (accessed on 26 February 2018).

- Kavi, R.; Kulathumani, V. Real-Time Recognition of Action Sequences Using a Distributed Video Sensor Network. J. Sens. Actuator Netw. 2013, 2, 486–508.

- Batchuluun, G.; Kim, Y.G.; Kim, J.H.; Hong, H.G.; Park, K.R. Robust Behavior Recognition in Intelligent Surveillance Environments. Sensors 2016, 16, 1010.

- Calavia, L.; Baladrón, C.; Aguiar, J.M.; Carro, B.; Sánchez-Esguevillas, A. A Semantic Autonomous Video Surveillance System for Dense Camera Networks in Smart Cities. Sensors 2012, 12, 10407–10429.

- Hamida, A.B.; Koubaa, M.; Nicolas, H.; Amar, C.B. Video surveillance system based on a scalable application-oriented architecture. In Multimedia Tools and Applications; Springer: Berlin/Heidelberg, Germany, 2016; Volume 75, pp. 17187–17213.

- Jung, J.; Yoon, I.; Lee, S.; Paik, J. Normalized Metadata Generation for Human Retrieval Using Multiple Video Surveillance Cameras. Sensors 2016, 16, 963.

- Pereira, I.; Silveira, L.F.; Gonçalves, L. Video Synchronization with Bit-Rate Signals and Correntropy Function. Sensors 2017, 17, 2021.

- Prasad, S.; Mahalakshmi, P.; Sunder, A.J.C.; Swathi, R. Smart Surveillance Monitoring System Using Raspberry PI and PIR Sensor. 2014. Available online: http://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.658.6805&rep=rep1&type=pdf (accessed on 26 February 2018).

- Wang, Z.-Y.; Chen, L. Design of Mobile Phone Video Surveillance System for Home Security Based on Embedded System. In Proceedings of the 27th Chinese Control and Decision Conference (CCDC), Qingdao, China, 23–25 May 2015; IEEE: Piscataway, NJ, USA, 2015.

- Bukhari, J.; Akkari, N. QoS based approach for LTE-WiFi handover. In Proceedings of the 7th International Conference on Computer Science and Information Technology (CSIT), Amman, Jordan, 13–14 July 2016; IEEE: Piscataway, NJ, USA, 2016.

- Li, Z.; Zhu, X.; Gahm, J.; Pan, R.; Hu, H.; Begen, A.C.; Oran, D. Probe and Adapt: Rate Adaptation for HTTP Video Streaming At Scale. IEEE J. Sel. Areas Commun. 2014, 32, 719–733.

- Huang, T.Y.; Johari, R.; McKeown, N.; Trunnell, M.; Watson, M. A buffer-based approach to rate adaptation: Evidence from a large video streaming service. In Proceedings of the ACM Conference on Special Interest Group on Data Communication (SIGCOMM), Chicago, IL, USA, 17–22 August 2014; ACM Digital Library: New York, NY, USA, 2014.

- Xing, M.; Xiang, S.; Cai, L. A Real-Time Adaptive Algorithm for Video Streaming over Multiple Wireless Access Networks. IEEE J. Sel. Areas Commun. 2014, 32, 795–805.

- Yan, K.L.; Yuen, J.C.H.; Edward, C.; Kan-Yiu, L. Adaptive Encoding Scheme for Real-Time Video Streaming Over Mobile Networks. In Proceedings of the 3rd Asian Himalayas International Conference on Internet (AH-ICI), Kathmandu, Nepal, 23–25 November 2012; IEEE: Piscataway, NJ, USA, 2012.

- Zhou, C.; Lin, C.W. A Markov decision based rate adaption approach for dynamic HTTP streaming. IEEE Trans. Multimed. 2016, 18, 738–751.

- Majumder, M.; Biswas, D. Real-time mobile learning using mobile live video streaming. In Proceedings of the World Congress on Information and Communication Technologies (WICT), Trivandrum, India, 30 October–2 November 2012; IEEE: Piscataway, NJ, USA, 2012.

- Nam, D.-H.; Park, S.-K. Adaptive multimedia stream presentation in mobile computing environment. In Proceedings of the IEEE Region 10 Conference TENCON 99, Cheju Island, Korea, 15–17 September 1999; IEEE: Piscataway, NJ, USA, 2002.

- Bellavista, P.; Corradi, A.; Giannelli, C. Mobile proxies for proactive buffering in wireless Internet multimedia streaming. In Proceedings of the 25th IEEE International Conference on Distributed Computing Systems Workshops, Columbus, OH, USA, 6–10 June 2005; IEEE: Piscataway, NJ, USA, 2005.

- Cha, S.; Du, W.; Kurz, B.J. Middleware Framework for Disconnection Tolerant Mobile Application Services. In Proceedings of the 2010 Eighth Annual Communication Networks and Services Research Conference (CNSR), Montreal, QC, Canada, 11–14 May 2010; IEEE: Piscataway, NJ, USA, 2010.

- Suarez, A.; Macias, E.; Martin, J.; Gutierrez, Y.; Gil, M. Light Protocol and Buffer Management for Automatically Recovering Streaming Sessions in Wifi Mobile Telephones. In Proceedings of the Second International Conference on Mobile Ubiquitous Computing, Systems, Services and Technologies (UBICOMM’08), Valencia, Spain, 29 September–4 October 2008; IEEE: Piscataway, NJ, USA, 2008.

- Sarmiento, S.A.; Espino, F.; Macias, E.M. Automatic recovering of RTSP sessions in mobile telephones using JADE-LEAP. Latin Am. Trans. IEEE 2009, 7, 410–417.

- Gualotuña, T.; Marcillo, D.; López, E.M.; Suárez-Sarmiento, A. Mobile Video Service Disruptions Control in Android Using JADE. In Advances in Computing and Communications. ACC 2011. Communications in Computer and Information Science; Springer: Berlin/Heidelberg, Germany, 2011; Volume 193.

- Marcillo, D. Control de Interrupciones de Video Streaming Móvil en Arquitecturas Android Usando Técnicas de Realidad Aumentada y WebRTC. Ph.D. Thesis, Universidad de Las Palmas de Gran Canaria (ULPGC), Spain, February 2016.

- Manson, R. Getting Started with WebRTC. Explore WebRTC for Real-Time Peer-to-Peer Communication. 2013. Available online: https://www.packtpub.com/web-development/getting-started-webrtc (accessed on 26 February 2018).

- Marcillo, D.; Ortega, I.; Yacchirema, S.; Llasag, R.; Luje, D. Mechanism to control disconnections in multiple communication sessions using WebRTC. In Proceedings of the 12th Iberian Conference on Information Systems and Technologies (CISTI), Lisbon, Portugal, 21–24 June 2017; IEEE: Piscataway, NJ, USA, 2017.

- Gualotuña, T. Diseño de una Platalforma de Agentes para Control de Servicios de Video Streaming Móvil. Ph.D. Thesis, Supervisors: Alvaro Suárez and Elsa Macías. Universidad de Las Palmas de Gran Canaria (ULPGC), Spain, February 2016.

- Rivandeneira, A. Video Vigilancia Autonoma Mediante Sistemas Empotrados-Hardware Libre. Master's Thesis, Universidad de Las Fuerzas Armadas (ESPE), Guayas, Ecuador, June 2016.

- Sánchez, J.; Benet, G.; Simó, J.E. Video Sensor Architecture for Surveillance Applications. Sensors 2012, 12, 1509–1528.

- Vega-Barbas, M.; Pau, I.; Martín-Ruiz, M.L.; Seoane, F. Adaptive Software Architecture Based on Confident HCI for the Deployment of Sensitive Services in Smart Homes. Sensors 2015, 15, 7294–7322.

- Hegedus, P.; Bán, D.; Ferenc, R.; Gyimóthy, T. Myth or Reality? Analyzing the Effect of Design Patterns on Software Maintainability. In Computer Applications for Software Engineering, Disaster Recovery, and Business Continuity; Springer: Berlin/Heidelberg, Germany, 2012; pp. 138–145.

- Huston, B. The effects of design pattern application on metric scores. J. Syst. Softw. 2001, 58, 261–269.

- Bayley, I.; Zhu, H. Formal specification of the variants and behavioural features of design patterns. J. Syst. Softw. 2010, 83, 209–221.

- Zamani, B.; Butler, G. Pattern language verification in model driven design. Inf. Sci. 2013, 237, 343–355.

- Buschmann, F.; Henney, K.; Schmidt, D.C. Pattern-Oriented Software Architecture: On Patterns and Pattern Languages; Wiley (John Wiley & Sons): Hoboken, NJ, USA, 2007.

- 1061-1998 - IEEE Standard for a Software Quality Metrics Methodology. 1998. Available online: http://ieeexplore.ieee.org/document/749159/ (accessed on 28 February 2018).

- Zhang, C.; Budgen, D. A survey of experienced user perceptions about software design patterns. Inf. Softw. Technol. 2013, 55, 822–835.

- Sahin, C.; Cayci, F.; Gutierrez, I.L.M. Initial explorations on design pattern energy usage. In Proceedings of the 1st International Workshop on Green and Sustainable Software (GREENS), Zurich, Switzerland, 3 June 2012; IEEE: Piscataway, NJ, USA, 2012.

- Litke, A.; Zotos, K.; Chatzigeorgiou, A.; Stephanides, G. Energy Consumption Analysis of Design Patterns. 2005. Available online: http://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.111.8624&rep=rep1&type=pdf (accessed on 26 February 2018).

- Henderson-Sellers, B.; Gonzalez-Perez, C.; McBride, T.; Low, G. An ontology for ISO software engineering standards: 1) Creating the infrastructure. Comput. Stand. Interfaces 2014, 36, 563–576.

- Gonzalez-Perez, C.; Henderson-Sellers, B.; McBride, T.; Low, G.C.; Larrucea, X. An Ontology for ISO software engineering standards: 2) Proof of concept and application. Comput. Stand. Interfaces 2016, 48, 112–123.

- Avila, K.; Sanmartin, P.; Jabba, D.; Jimeno, M. Applications Based on Service-Oriented Architecture (SOA) in the Field of Home Healthcare. Sensors 2017, 17, 1703.

- JADE and WSI. Available online: http://jade.tilab.com/doc/tutorials/WSIG_Guide.pdf (accessed on 15 December 2017).

- Zheng, S.; Zhang, Q.; Zheng, R.; Huang, B.-Q.; Song, Y.-L.; Chen, X.-C. Combining a Multi-Agent System and Communication Middleware for Smart Home Control: A Universal Control Platform Architecture. Sensors 2017, 17, 2135.

- Liao, C.-F.; Chen, P.-Y. ROSA: Resource-Oriented Service Management Schemes for Web of Things in a Smart Home. Sensors 2017, 17, 2159.

- Aloman, A.; Ispas, A.I.; Ciotirnae, P.; Sanchez-Iborra, R.; Cano, M.D. Performance evaluation of video streaming using MPEG DASH, RTSP, and RTMP in mobile networks. In Proceedings of the 8th IFIP Wireless and Mobile Networking Conference (WMNC), Munich, Germany, 5–7 October 2015; IEEE: Piscataway, NJ, USA, 2015.

- Laksono, I. Achieve End-To-End Qos for Wireless Video Streaming. 2004. Available online: http://www.eetimes.com/document.asp?doc_id=1272006 (accessed on 26 February 2018).

- Sharma, N.; Krishnappa, D.K.; Irwin, D.; Zink, M.; Shenoy, P. GreenCache: Augmenting Off-the-Grid Cellular Towers with Multimedia Caches. In Proceedings of the 4th ACM Multimedia Systems Conference, Oslo, Norway, 28 February–1 March 2013; ACM: New York, NY, USA, 2013.

- Abu-Lebdeh, M.; Belqasmi, F.; Glitho, R. An Architecture for QoS-Enabled Mobile Video Surveillance Applications in a 4G EPC and M2M Environment. IEEE Access 2016, 4, 4082–4093.

- Santos-González, I.; Rivero-García, A.; Molina-Gil, J.; Caballero-Gil, P. Implementation and Analysis of Real-Time Streaming Protocols. Sensors 2017, 17, 846.

- Parametric Non-Intrusive Assessment of Audiovisual Media Streaming Quality. 2012. Available online: https://www.itu.int/rec/T-REC-P.1201/en (accessed on 26 February 2018).

- Parametric Non-Intrusive Bitstream Assessment of Video Media Streaming Quality - Higher Resolution Application Area. 2012. Available online: https://www.itu.int/rec/T-REC-P.1202.2 (accessed on 26 February 2018).

- Kimura, T.; Yokota, M.; Matsumoto, A.; Takeshita, K.; Kawano, T.; Sato, K.; Yamamoto, H.; Hayashi, T.; Shiomoto, K.; Miyazaki, K. QUVE: QoE Maximizing Framework for Video-Streaming. IEEE J. Sel. Top. Signal Process. 2017, 11, 1.

- Melendi, D.; Pañeda, X.G.; García, V.G.; García, R.; Neira, A. Métricas para el Análisis de Calidad en Servicios de Vídeo-Bajo-Demanda Reales. In Proceedings of the Congreso Iberoamericano de Telemática (CITA), Montevideo, Uruguay, 2003. Available online: https://www.academia.edu/23629317/M%C3%A9tricas_para_el_An%C3%A1lisis_de_Calidad_en_Servicios_de_V%C3%ADdeo-Bajo-Demanda_Reales (accessed on 28 February 2018).

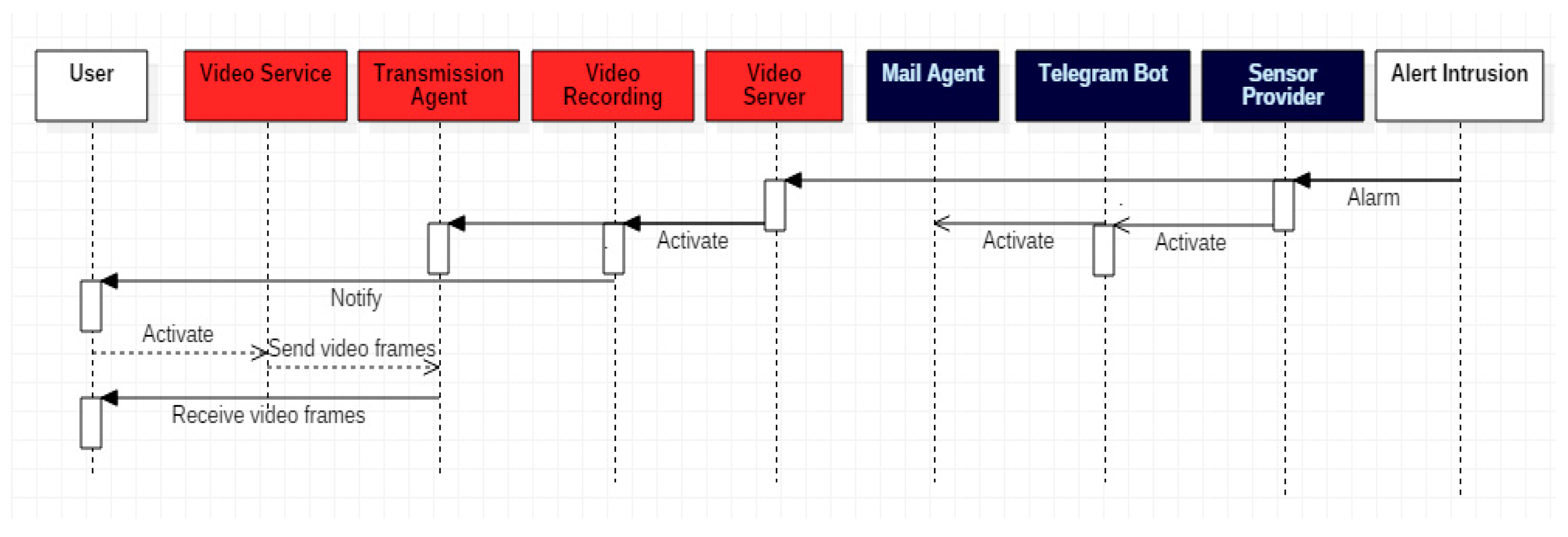

| Type | Name | Description |
|---|---|---|
| Actors | Alert Intrusion | Person who invades the home and provokes an intrusion. |
| Use Cases | User | Guard in charge of processing alert messages and viewing the real-time intrusion video. |
| | Start Sensor Provider | Initiates the processing of the alarm after the sensors, asynchronously, have captured the information inside the Smart Home. Tests the sensors. Evaluates the range values. Triggers the alarm. |
| | Start Message Processor | Initiates the alert message processing, which constructs the messages to be sent to the User. |
| | Activate Telegram Bot Agent | Sends the Telegram instant message to the User. |
| | Activate Mail Agent | Sends an e-mail to the User. |
| | Start Video Server | Initiates the Video Server to start the communication when requested by the User. |
| | Activate Recording Agent | Activates the agent that records the offline video. |
| | Activate Transmission Agent | Activates the agent that communicates the video to the User. Requests the activation of the camera. |
| | Start video service | Starts the video session from the User. Displays the video. |
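The message-processing use cases in the table above can be sketched as follows. This is an illustration only: the `IntrusionAlert` class, the `/stream` path, port 8080 and the e-mail addresses are hypothetical, and the example address 192.0.2.10 is a documentation address. The sketch only builds the alert text and an e-mail object with the Python standard library. Actually sending it (by e-mail or by a Telegram bot) is left out.

```python
from dataclasses import dataclass
from datetime import datetime
from email.message import EmailMessage


@dataclass
class IntrusionAlert:
    server_ip: str           # IP address of the Video Server, embedded in the link
    detected_at: datetime    # time at which the alarm was triggered
    port: int = 8080         # hypothetical streaming port

    def link(self) -> str:
        """Link the guard clicks to start the video streaming session."""
        return f"http://{self.server_ip}:{self.port}/stream"

    def text(self) -> str:
        """Body shared by the instant message and the e-mail."""
        stamp = self.detected_at.strftime("%Y-%m-%d %H:%M:%S")
        return f"Intrusion detected at {stamp}.\nWatch the video: {self.link()}"


def build_email(alert: IntrusionAlert, sender: str, recipient: str) -> EmailMessage:
    """Construct (but do not send) the alert e-mail for the User."""
    message = EmailMessage()
    message["Subject"] = "Smart Home intrusion alert"
    message["From"] = sender
    message["To"] = recipient
    message.set_content(alert.text())
    return message


alert = IntrusionAlert("192.0.2.10", datetime(2018, 1, 15, 22, 30, 0))
mail = build_email(alert, "home@example.org", "guard@example.org")
print(alert.link())   # → http://192.0.2.10:8080/stream
```

Building the text once and reusing it for both channels keeps the Telegram message and the e-mail consistent, which matters because the guard may act on whichever arrives first.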

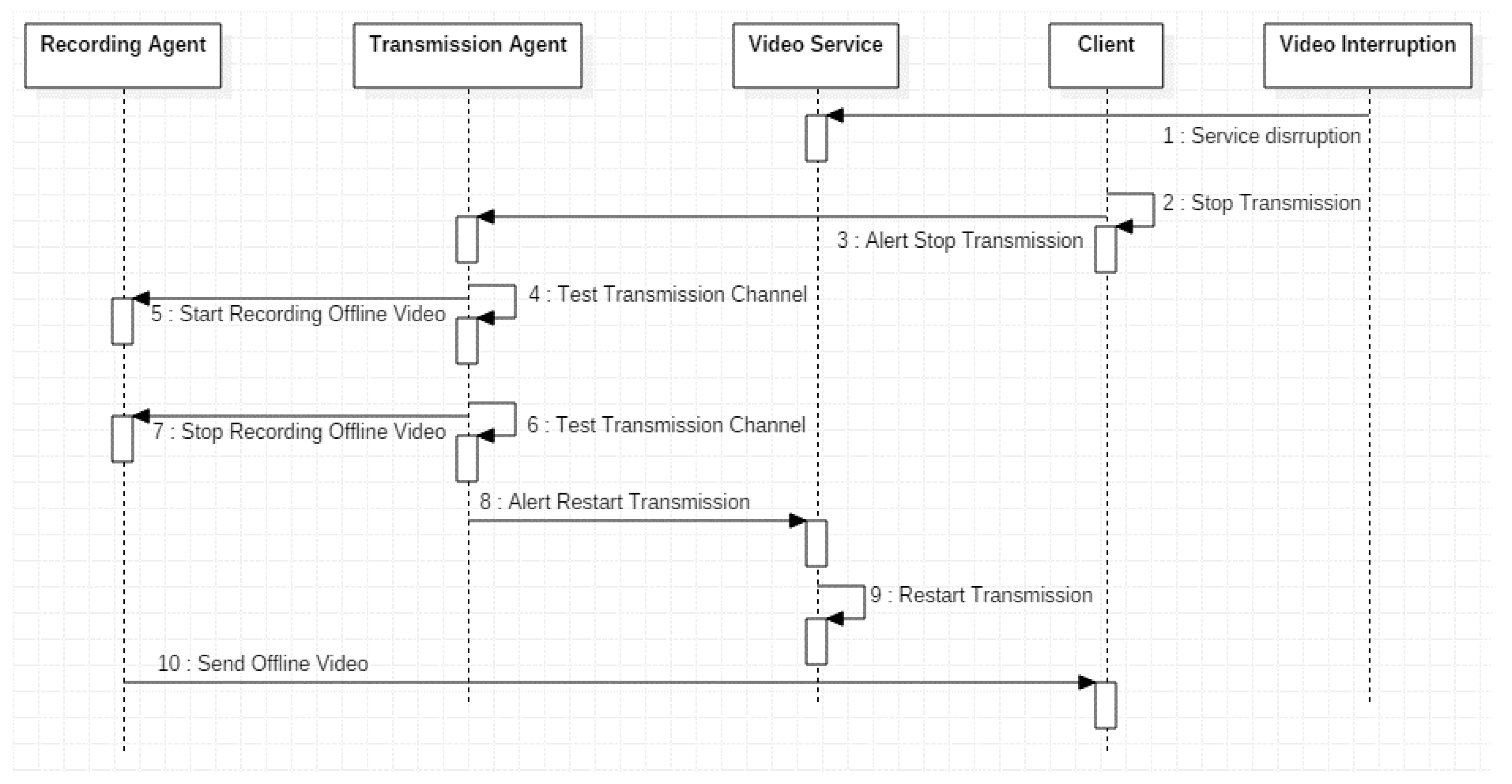

| Type | Name | Description |
|---|---|---|
| Actors | Video Interruption | Represents the disruption causes. |
| Use Cases | Recording Agent | Manages video recording during disruptions. |
| | Client | User or entity that requests to view the video. |
| | Transmission Agent | Manages video transmission and monitors the channel. |
| | Video Service | Video deployment to the User or Client. |
| | Test Transmission Channel | Evaluates the wireless channel state. When a video service disruption occurs, an alarm is triggered and the last video frames not received are stored. |
| | Interrupt Video Service | Tests whether a video service interruption occurred. |
| | Stop Transmission | Stops the video streaming service to the Client. |
| | Start Recording Offline Video | Once a video service interruption is detected, the video storing starts. |
| | Restart Transmission | Once the service interruption ends, the previously stored video starts to be sent to the Client. |
| | Stop Recording Offline Video | When the video service interruption ends, the offline video stops being stored. |
| | Send Offline Video | Sends the video stored offline. |
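The use cases in the table above suggest a small state machine, sketched below under stated assumptions. The two states, the `TransmissionAgent` class shown here and the per-frame `channel_ok` flag are hypothetical simplifications. In the described system the channel test and the recording are handled by separate agents.

```python
from enum import Enum, auto


class State(Enum):
    STREAMING = auto()           # frames go directly to the Client
    RECORDING_OFFLINE = auto()   # disruption: frames are stored for later


class TransmissionAgent:
    """Illustrative agent that stores frames while the channel is down."""

    def __init__(self):
        self.state = State.STREAMING
        self.offline = []     # video stored during the disruption
        self.delivered = []   # frames that reached the Client, in order

    def on_frame(self, frame, channel_ok):
        if self.state is State.STREAMING and not channel_ok:
            # Interrupt Video Service, Stop Transmission, Start Recording Offline Video
            self.state = State.RECORDING_OFFLINE
        elif self.state is State.RECORDING_OFFLINE and channel_ok:
            # Restart Transmission, Stop Recording Offline Video, Send Offline Video
            self.delivered.extend(self.offline)
            self.offline.clear()
            self.state = State.STREAMING
        if self.state is State.STREAMING:
            self.delivered.append(frame)
        else:
            self.offline.append(frame)


agent = TransmissionAgent()
channel = [True, True, False, False, True, True]   # frames 2 and 3 hit a disruption
for frame, ok in enumerate(channel):
    agent.on_frame(frame, ok)
print(agent.delivered)   # → [0, 1, 2, 3, 4, 5]
```

The stored frames are flushed before the live frame is appended, so the Client sees the video in its original order even though two frames arrived late.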

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Gualotuña, T.; Macías, E.; Suárez, Á.; Fonseca C., E.R.; Rivadeneira, A. Low Cost Efficient Deliverying Video Surveillance Service to Moving Guard for Smart Home. Sensors 2018, 18, 745. https://doi.org/10.3390/s18030745
