A Scheme for Enhancing Precision in 3-Dimensional Positioning for Non-Contact Measurement Systems Based on Laser Triangulation

Abstract

1. Introduction

2. Measurement Principle

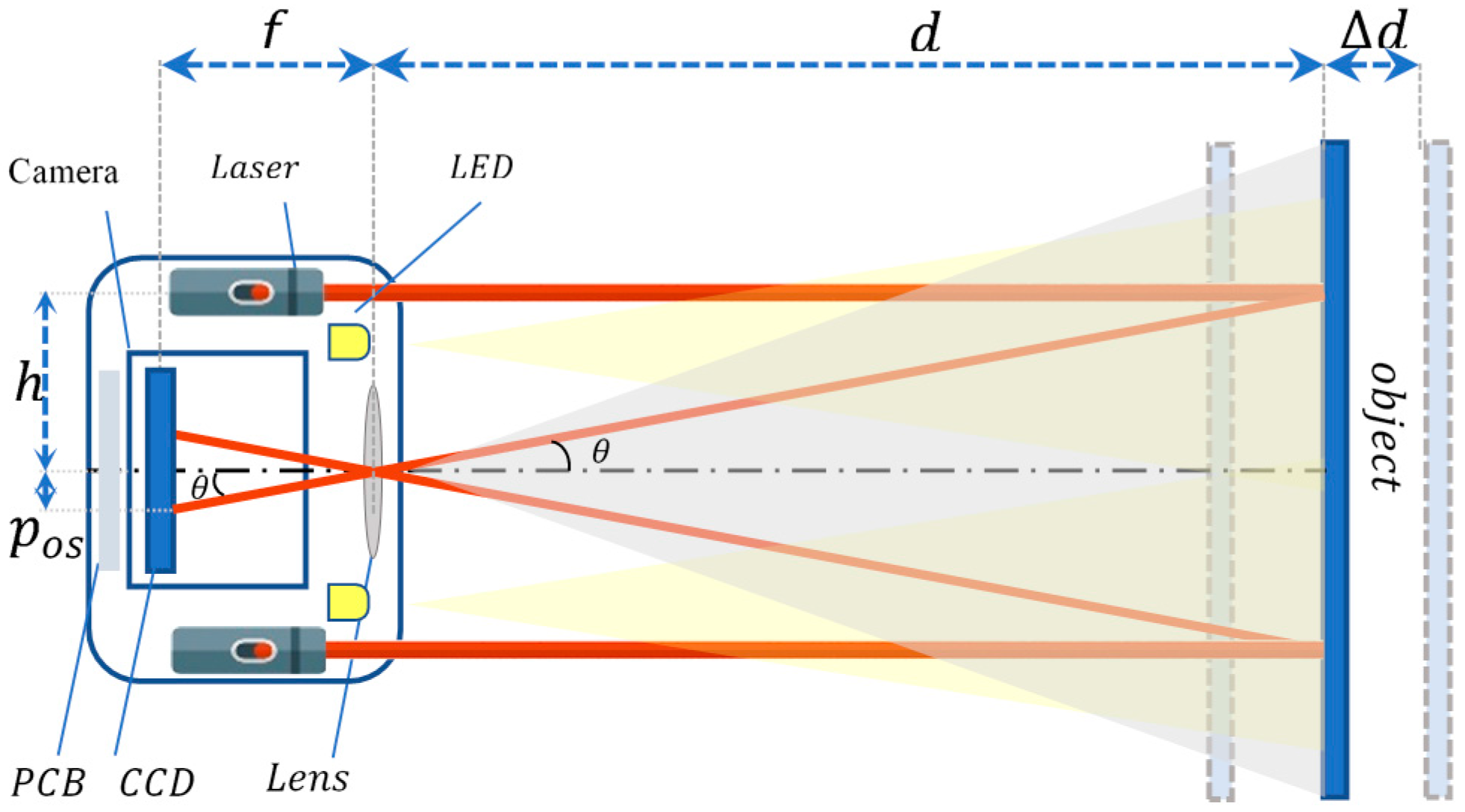

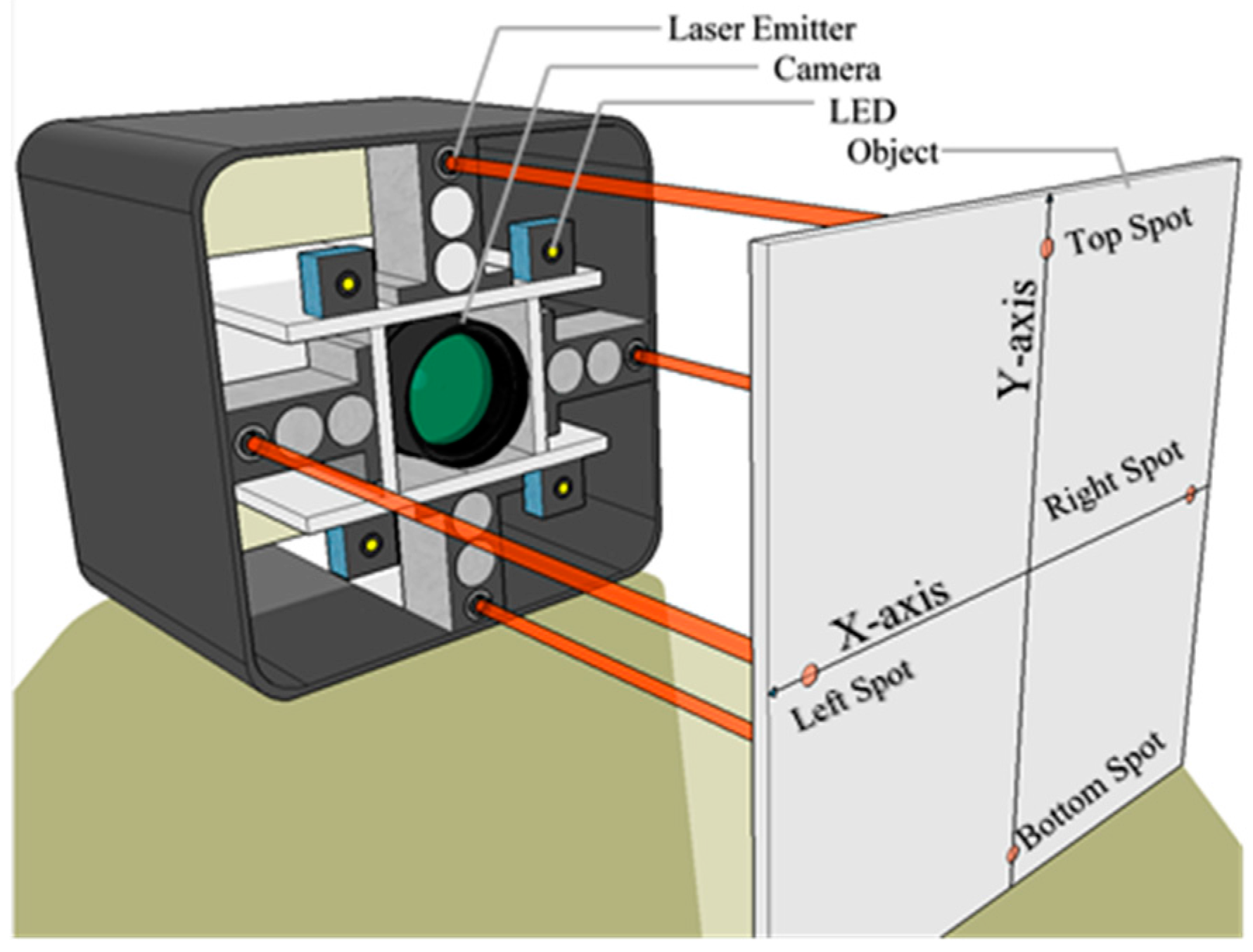

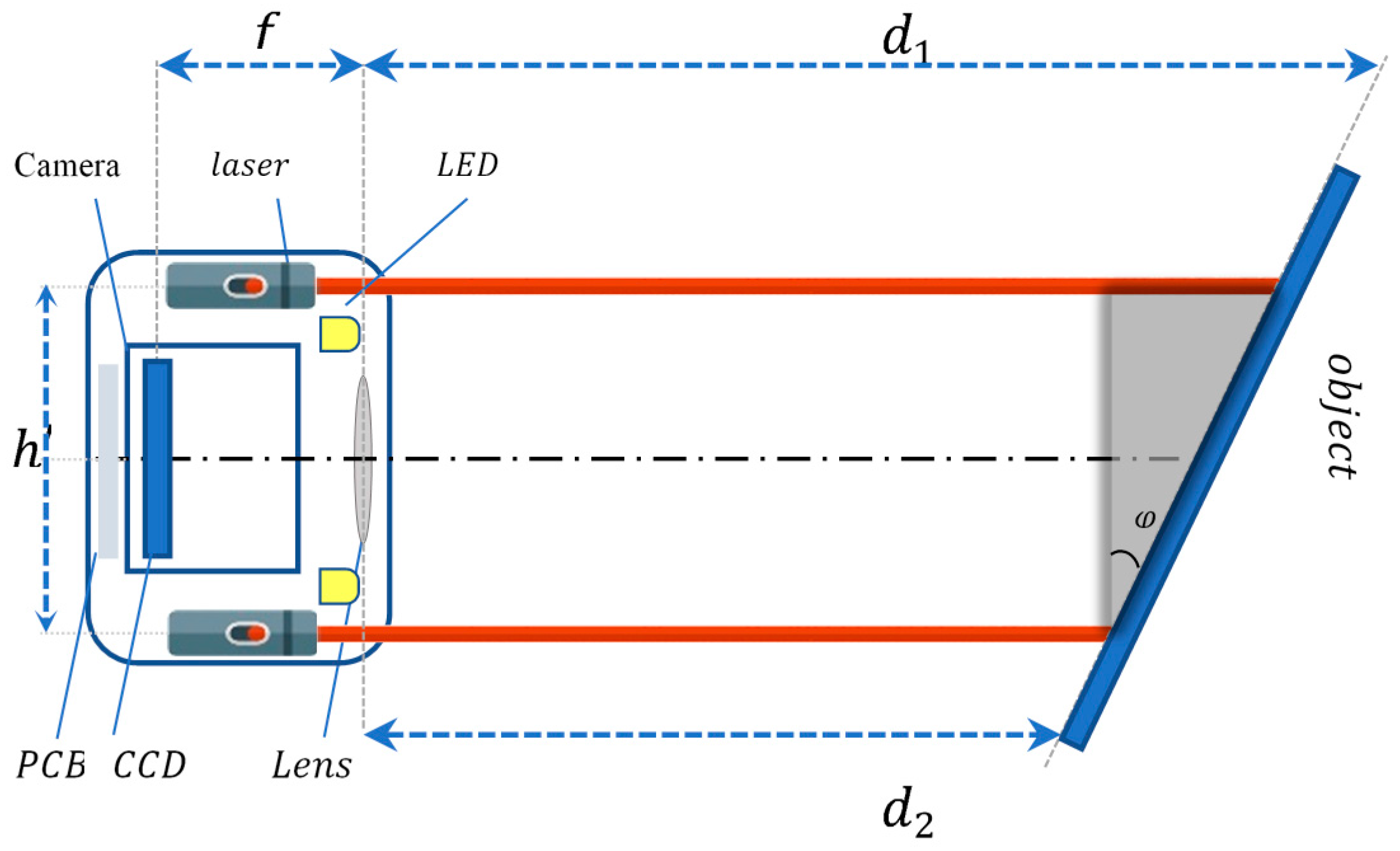

2.1. Distance Measurement Based on Displacement

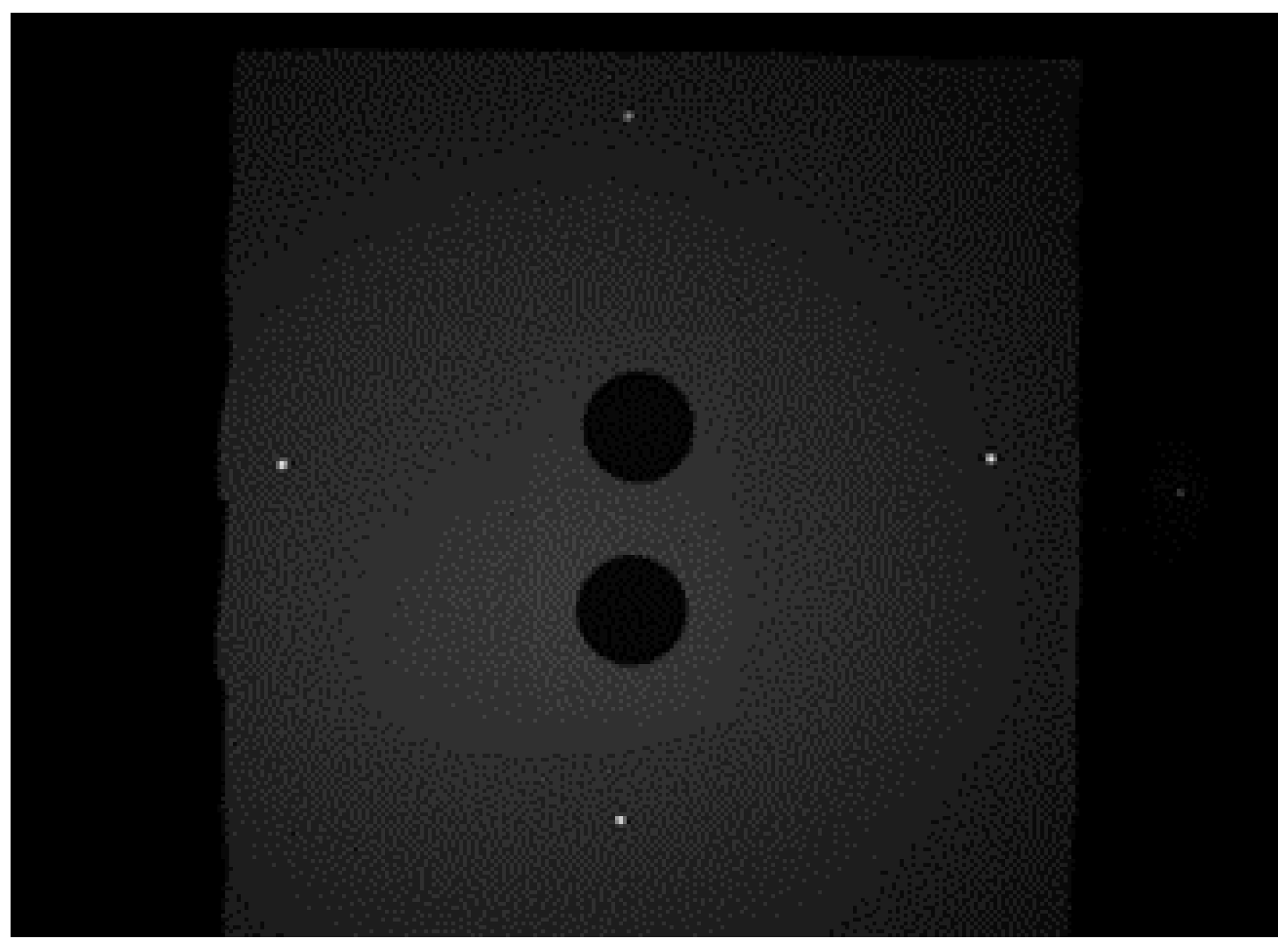
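The body of this subsection is not reproduced here, so the authors' exact geometry is not available. As a hedged illustration of how distance follows from spot displacement, the sketch below assumes the simplest parallel-axis configuration: the laser beam runs parallel to the camera's optical axis at a lateral baseline `b`, and the lens is a pinhole with focal length `f`. Similar triangles then give `z = f * b / x`, where `x` is the spot's metric offset from the principal point on the sensor. All function names, parameter names, and numeric values are hypothetical.

```python
# Sketch of laser triangulation in an ASSUMED parallel-axis geometry
# (laser beam parallel to the optical axis, pinhole lens). Not the authors' model.


def spot_offset_mm(spot_px: float, principal_px: float, pixel_pitch_mm: float) -> float:
    """Convert the spot's pixel position into a metric offset on the sensor."""
    return (spot_px - principal_px) * pixel_pitch_mm


def triangulation_distance(spot_px: float, principal_px: float, pixel_pitch_mm: float,
                           focal_length_mm: float, baseline_mm: float) -> float:
    """Distance (mm) from the lens to the laser spot: z = f * b / x."""
    x = spot_offset_mm(spot_px, principal_px, pixel_pitch_mm)
    if x <= 0:
        raise ValueError("spot must lie on the positive side of the principal point")
    return focal_length_mm * baseline_mm / x


def displacement(spot_a_px: float, spot_b_px: float, **geometry: float) -> float:
    """Target displacement (mm) between two spot observations, b minus a."""
    return (triangulation_distance(spot_b_px, **geometry)
            - triangulation_distance(spot_a_px, **geometry))


if __name__ == "__main__":
    # Hypothetical geometry: 5 um pixels, 8 mm lens, 50 mm baseline.
    geom = dict(principal_px=640.0, pixel_pitch_mm=0.005,
                focal_length_mm=8.0, baseline_mm=50.0)
    print(triangulation_distance(740.0, **geom))  # 800 mm
    print(displacement(740.0, 800.0, **geom))     # -300 mm (target moved closer)
```

Because `z` varies as `1/x`, the sensitivity `|dz/dx| = z**2 / (f * b)` grows with distance, so in this model a fixed sub-pixel error in the spot center costs more depth precision for far targets than for near ones.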

2.2. Laser Spot Center Determination

- Curve fitting

- Curve fitting of centroid
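The two items above name curve fitting and the centroid as ways to locate the laser spot center with sub-pixel precision; the authors' exact formulation is not included in this text. The sketch below shows two common 1-D variants under stated assumptions: a thresholded intensity-weighted centroid, and a Gaussian fit obtained by fitting a parabola to the log-intensity around the peak (the log of a Gaussian is exactly quadratic). Seeding the fit window from the centroid is one possible reading of "curve fitting of centroid", offered here only as a labeled assumption. Function names and the `threshold`, `seed`, and `half_window` parameters are hypothetical.

```python
import numpy as np


def centroid_center(profile, threshold=0.0):
    """Thresholded intensity-weighted centroid, returned as a sub-pixel index."""
    p = np.asarray(profile, dtype=float)
    w = np.clip(p - threshold, 0.0, None)  # subtract threshold; negative values -> 0
    if w.sum() == 0:
        raise ValueError("no samples above threshold")
    return float(np.dot(w, np.arange(p.size)) / w.sum())


def gaussian_fit_center(profile, seed=None, half_window=2):
    """Peak position from a parabola fitted to log-intensity around `seed`.

    If `seed` is None the brightest pixel is used. Passing the centroid as the
    seed is a HYPOTHETICAL combination, not necessarily the authors' method.
    """
    p = np.asarray(profile, dtype=float)
    k = int(np.argmax(p)) if seed is None else int(round(seed))
    lo, hi = max(k - half_window, 0), min(k + half_window + 1, p.size)
    x = np.arange(lo, hi)
    y = np.log(p[lo:hi])            # requires strictly positive intensities
    a, b, _ = np.polyfit(x, y, 2)   # y = a*x**2 + b*x + c
    return float(-b / (2.0 * a))    # vertex of the fitted parabola


if __name__ == "__main__":
    # Synthetic Gaussian spot profile (illustrative only), true center 10.3 px.
    xs = np.arange(21)
    profile = 1000.0 * np.exp(-((xs - 10.3) ** 2) / (2 * 2.0 ** 2))
    c = centroid_center(profile)
    print(c, gaussian_fit_center(profile, seed=c))
```

On real images the log-parabola fit needs background subtraction so that every sample in the window stays positive, and the centroid is sensitive to the choice of threshold.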

2.3. Calibration
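The calibration procedure itself is not reproduced in this text. As one hedged example, if the parallel-axis model `z = f * b / ((u - u0) * pitch)` is assumed, then `1/z` is linear in the spot pixel position `u`, so the unknown constants can be calibrated by a straight-line least-squares fit of `1/z` against `u` using targets placed at known distances. The model, function names, and data below are assumptions for illustration, not the authors' calibration.

```python
import numpy as np


def fit_inverse_linear(spot_px, distance_mm):
    """Fit 1/z = alpha * u + beta from calibration pairs (u, z).

    ASSUMPTION: parallel-axis triangulation, for which 1/z is linear in u.
    """
    u = np.asarray(spot_px, dtype=float)
    inv_z = 1.0 / np.asarray(distance_mm, dtype=float)
    alpha, beta = np.polyfit(u, inv_z, 1)
    return float(alpha), float(beta)


def predict_distance(alpha, beta, spot_px):
    """Map spot pixel position(s) to distance (mm) with the fitted model."""
    return 1.0 / (alpha * np.asarray(spot_px, dtype=float) + beta)


if __name__ == "__main__":
    # Synthetic calibration targets generated from z = 80000 / (u - 640).
    u_cal = np.array([700.0, 740.0, 800.0, 900.0])
    z_cal = 80000.0 / (u_cal - 640.0)
    alpha, beta = fit_inverse_linear(u_cal, z_cal)
    print(alpha, beta, predict_distance(alpha, beta, 760.0))
```

Fitting in the `1/z` domain keeps the problem linear; a generic polynomial in `u` is a common alternative when the optics deviate from the ideal model.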

3. Experimental Results

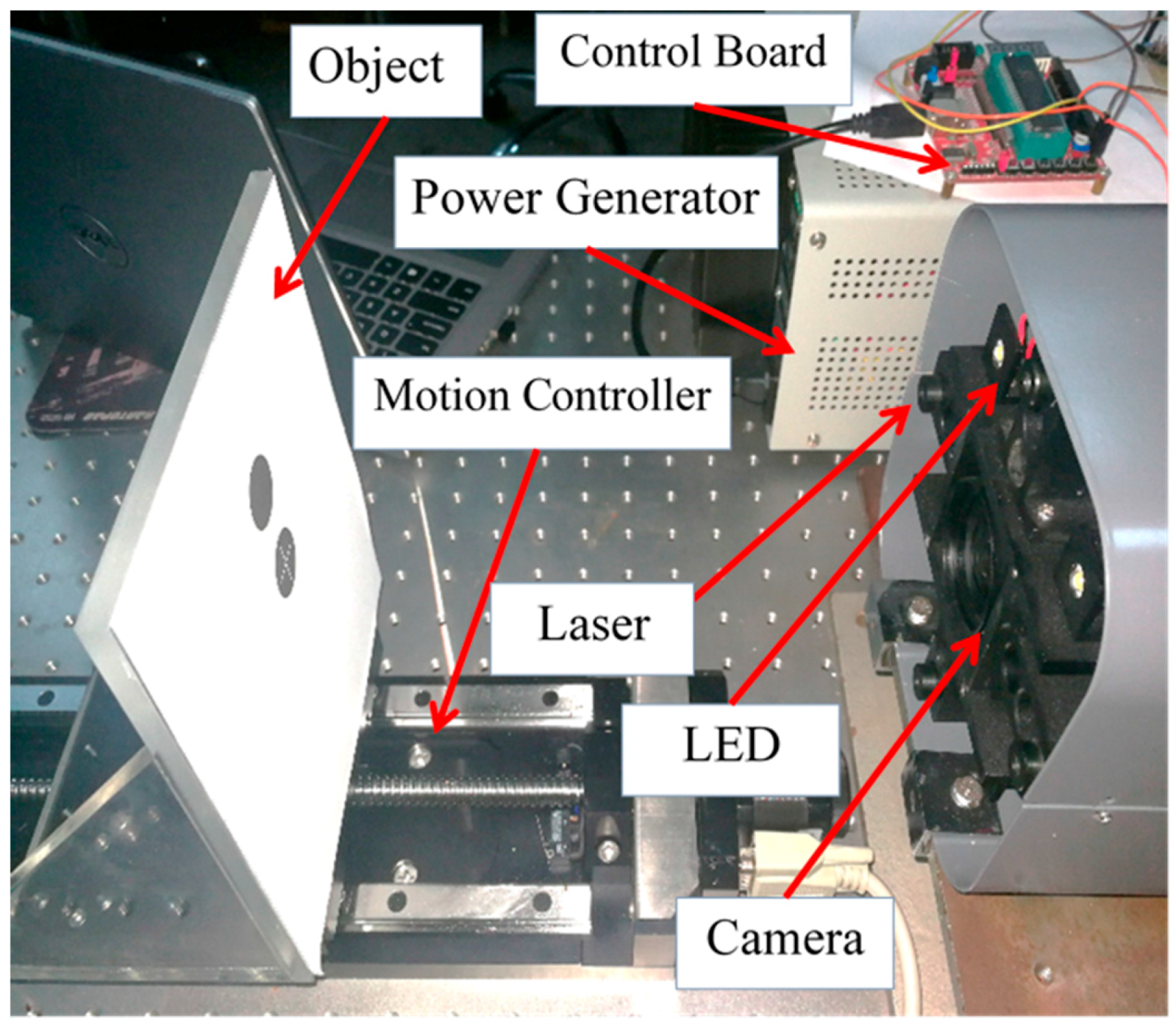

3.1. Equipment Presetting

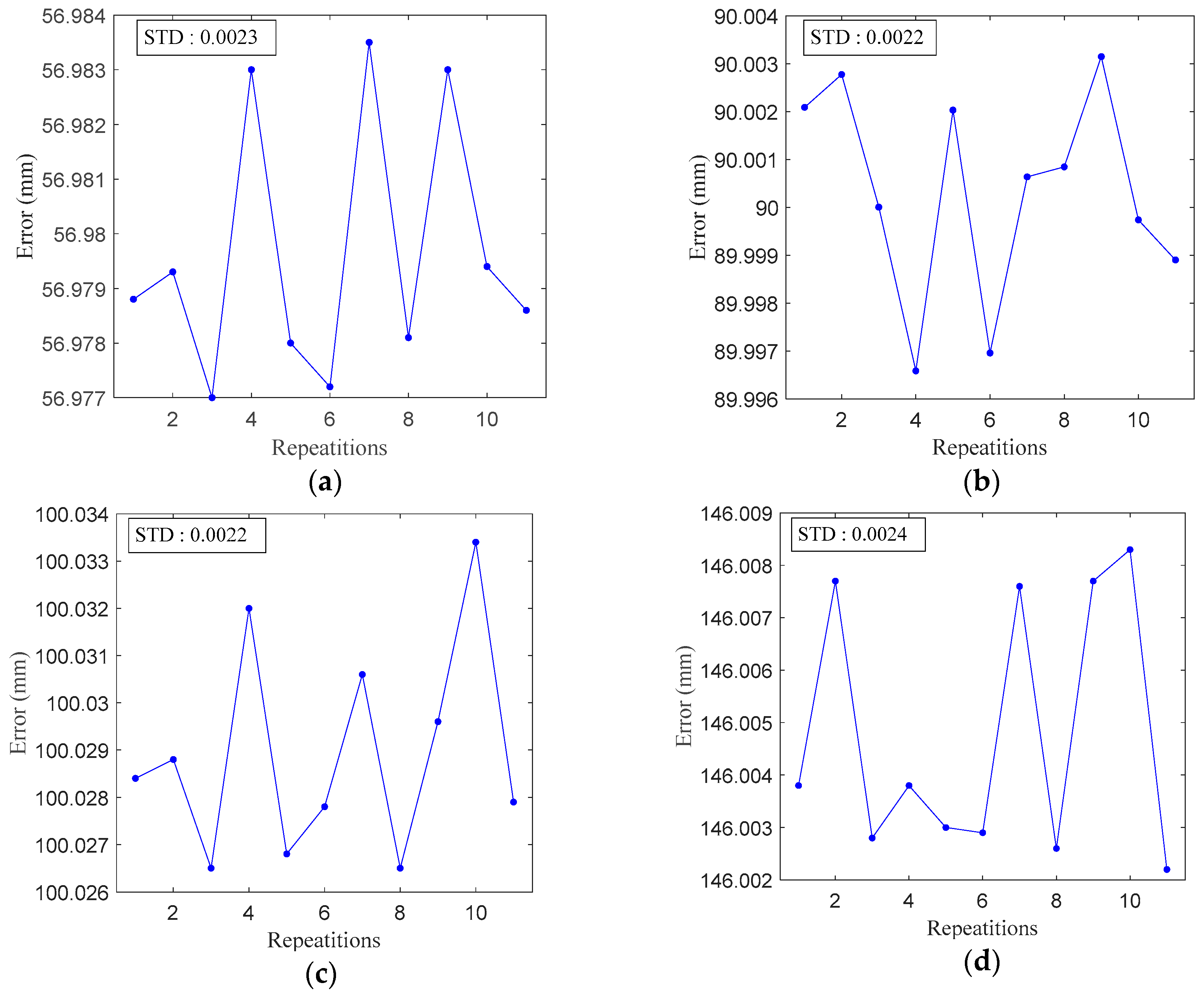

3.2. Repeatability Test

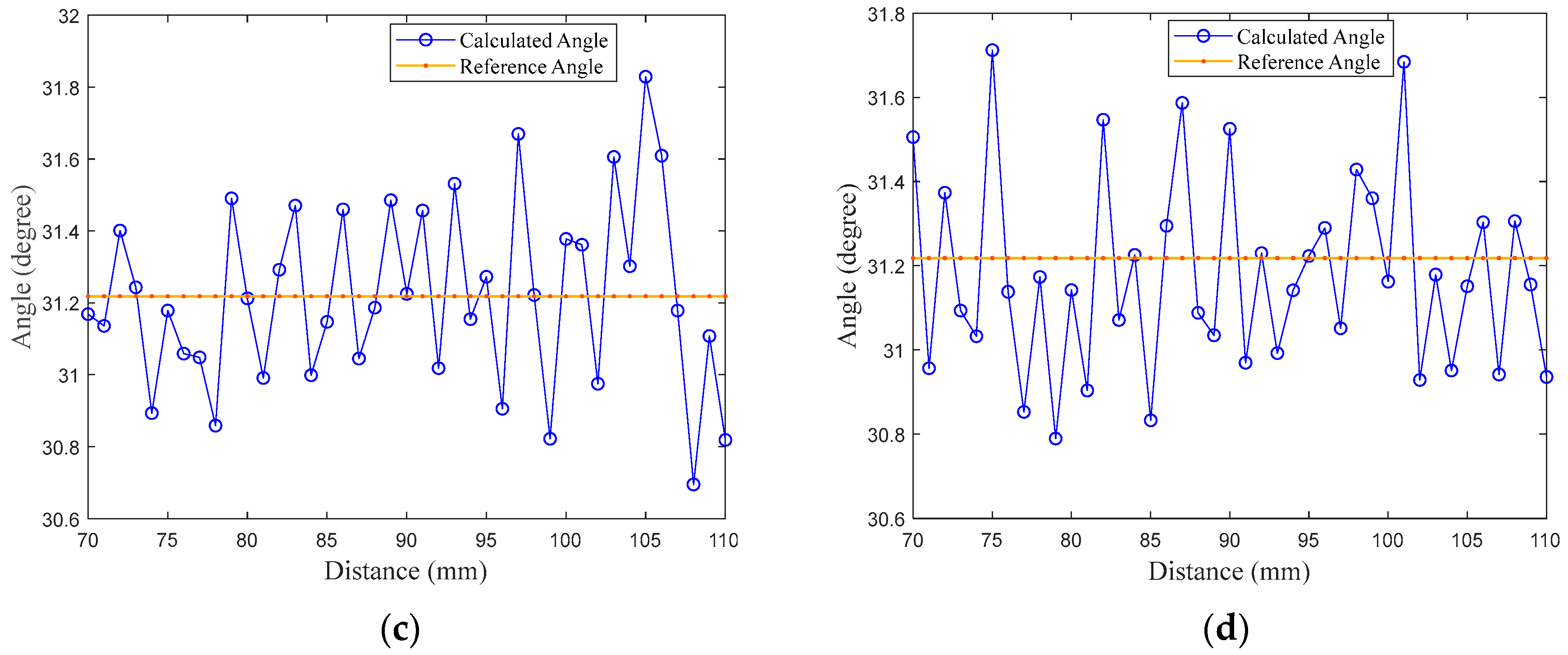
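The repeatability metric used in the experiment is not stated in this text. A common convention, assumed here, is to take repeated readings of a fixed target and report their sample standard deviation, optionally alongside the peak-to-peak range. The function name and the readings in the example are hypothetical and are not the paper's data.

```python
import statistics


def repeatability_stats(readings_mm):
    """Summary statistics for repeated readings of one fixed target position."""
    if len(readings_mm) < 2:
        raise ValueError("need at least two readings")
    return {
        "mean": statistics.fmean(readings_mm),
        "std": statistics.stdev(readings_mm),            # sample std, n - 1 divisor
        "range": max(readings_mm) - min(readings_mm),    # peak-to-peak spread
    }


if __name__ == "__main__":
    # Made-up readings for illustration only.
    print(repeatability_stats([10.0, 10.2, 9.8, 10.0]))
```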

3.3. Rotational Plane Test

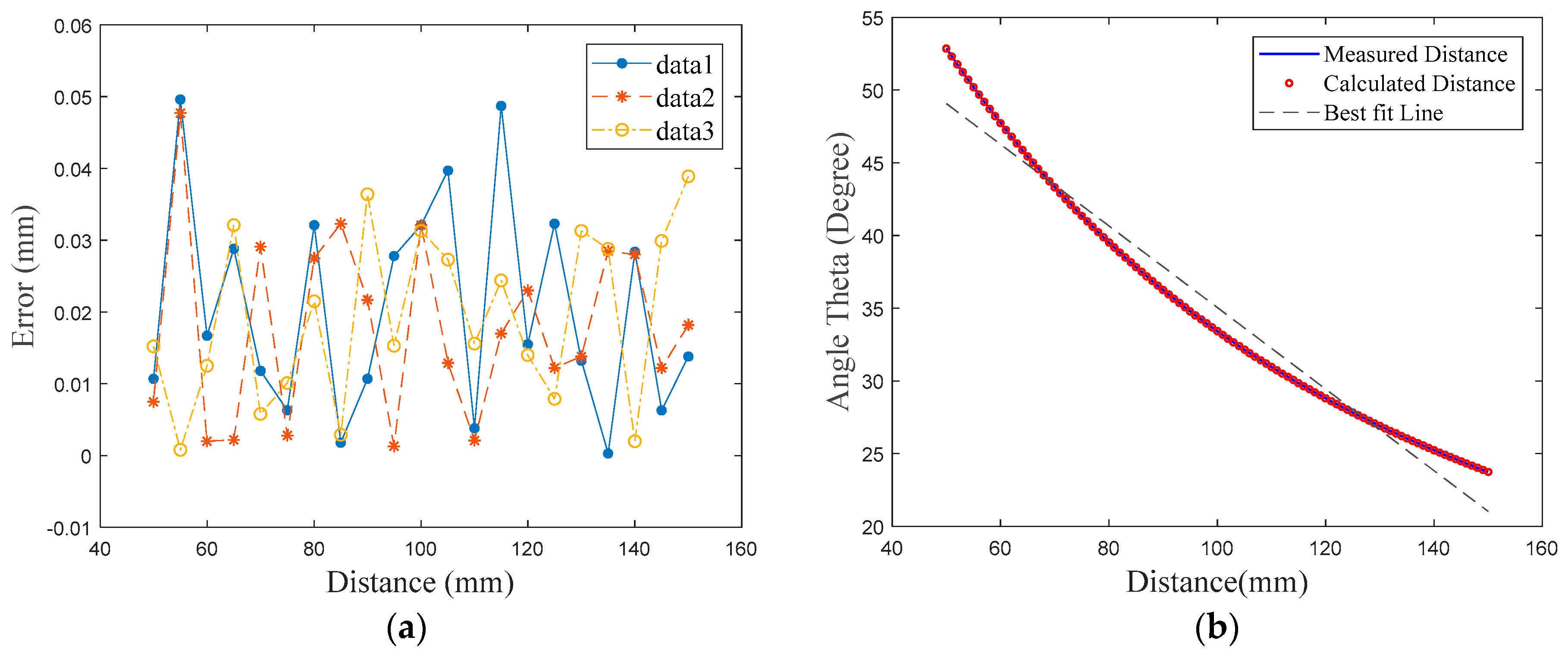

3.4. Nonlinearity Test
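The nonlinearity figure reported by the authors is not reproduced here. A widely used definition, assumed in this sketch, is independent linearity: fit a least-squares line of measured against reference displacement, then express the largest absolute residual as a percentage of full scale. Taking full scale as the span of the reference values is itself an assumption; some datasheets use the output span instead. Names and data are hypothetical.

```python
import numpy as np


def nonlinearity_percent_fs(reference_mm, measured_mm):
    """Max |residual| from a least-squares line, as % of the reference span."""
    x = np.asarray(reference_mm, dtype=float)
    y = np.asarray(measured_mm, dtype=float)
    slope, intercept = np.polyfit(x, y, 1)
    residuals = y - (slope * x + intercept)
    full_scale = x.max() - x.min()   # ASSUMPTION: full scale = reference span
    return float(np.abs(residuals).max() / full_scale * 100.0)


if __name__ == "__main__":
    # Synthetic data: a perfect line plus a small made-up error pattern.
    ref = np.array([0.0, 10.0, 20.0, 30.0, 40.0])
    meas = ref + np.array([0.0, 0.1, 0.0, -0.1, 0.0])
    print(nonlinearity_percent_fs(ref, meas))  # 0.2 (% FS)
```

For the synthetic data the best-fit line absorbs part of the error pattern, leaving a largest residual of 0.08 mm over a 40 mm span, hence 0.2 % FS.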

4. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Selami, Y.; Tao, W.; Gao, Q.; Yang, H.; Zhao, H. A Scheme for Enhancing Precision in 3-Dimensional Positioning for Non-Contact Measurement Systems Based on Laser Triangulation. Sensors 2018, 18, 504. https://doi.org/10.3390/s18020504