Off-Line Evaluation of Mobile-Centric Indoor Positioning Systems: The Experiences from the 2017 IPIN Competition

Abstract

1. Introduction

2. Related Work

2.1. Indoor Competitions

2.2. On-Line Benchmarking Platforms

2.3. Databases

3. The IPIN 2017 Competition

- Track 1: Smartphone-based (on-site)

- Track 2: Pedestrian Dead Reckoning Positioning (on-site)

- Track 3: Smartphone-based (off-site)

- Track 4: PDR for warehouse picking (off-site)

3.1. The Off-Site Track Rules

- An off-site competition approach where the organizers provided the required data for calibration, validation and evaluation. It was forbidden for competitors to survey the evaluation scenarios by themselves. This constraint guaranteed that all competitors had the same data for participating in the competition. All competitors were notified about the existence of public-access databases that were collected for the previous 2016 IPIN Competition [74].

- A natural movement is assumed in most captured data. Some special movements were also considered: turns, moving backward/laterally at certain points and changing floors through stairs. The user speed was approximately constant while recording the data with stops at some positions. It is important to note that the data were collected by a human, and not a controlled constant speed device, which makes the data closer to a natural movement.

- None of the collected datasets included artificial phone holding. The smartphone was either held steady in front of the actor’s face or chest, which is a typical position for reading or typing on the phone, or held in the hand with the arm hanging down. In only a very few cases, the phone was held near the actor’s ear to simulate a phone call. This unpredicted variability is closer to reality.

- Each logfile covered a trajectory in one of the evaluation buildings and it was recorded by an actor using a smartphone. The provided data are diverse because they consist of multiple trajectories taken at three different buildings. A total of eight people participated in the database creation and 10 devices were used.

- The scenarios remained unaltered during the collection process. Only one of them changed, because BLE beacons had been previously deployed in a small area for testing purposes. The information about the location of those beacons was provided to competitors.

- Bluetooth Low Energy data were available.

- Position of BLE beacons and a few Wi-Fi APs was provided.

- In a few cases, the actor simulated a phone call while data were being captured, to add a natural motion.

- More raw-data per building were provided.

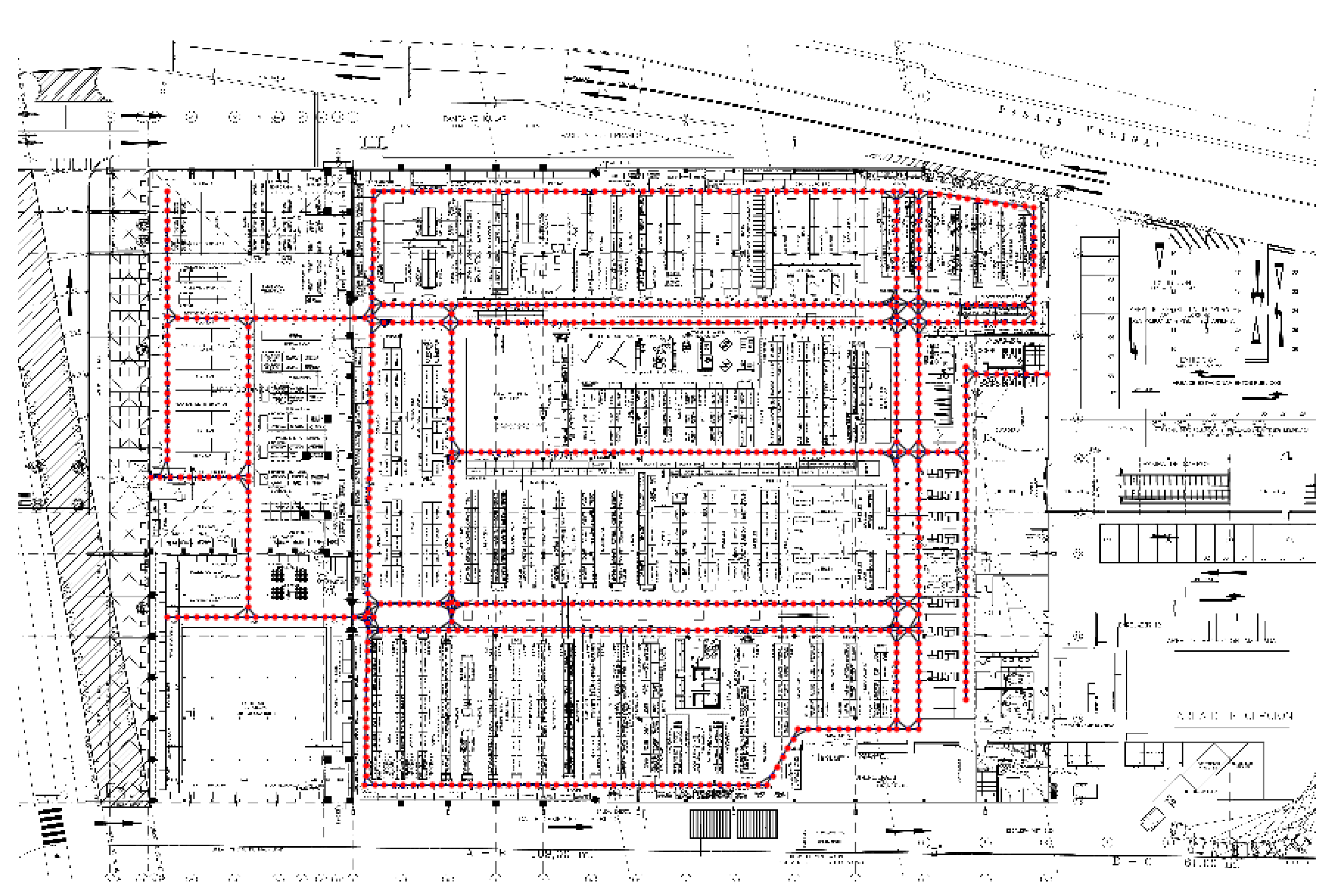

Description of Datasets

- Regarding the ground truth data, which were provided by POSI lines in the training and validation logfiles, some competitors suggested providing more reference data and shortening the period between two consecutive POSI entries in the logfiles. However, the data collection, which included marking each reference point, was not an easy task.

- The organizers included a tricky transition between floors in one of the logfiles. This transition (stairs area) was not included in the training and validation logfiles and was considered a good way to test the robustness of competing IPS when facing unexpected events.

- The floor penalty was applied whenever a competing system misidentified the floor. However, in floor transitions (stairs areas), a system may provide an estimate very close to the evaluation point and yet be prone to miss the floor. Some competitors indicated that future competitions should take these special cases into account. The competition organizers are considering integrating a more realistic error distance metric [76] to calculate the positioning error in future editions.

3.2. Submission of Results and Evaluation

3.3. The Competing Teams

- The UMinho team: Adriano Moreira, Maria João Nicolau, António Costa, Filipe Meneses. University of Minho and Centro de Computação Gráfica, Guimarães, Portugal.

- The AraraDS team: Tomás Lungenstrass, Joaquín Farina, Juan Pablo Morales. AraraDS, Santiago, Chile.

- The Yai team: Wei-Chung Lu, Wen-Chen Lu, Ho-Ti Cheng, Shi-Shen Yang, Shih-Hau Fang, Ying-Ren Chien and Yu Tsao. Yuan Ze University, National Ilan University, Academia Sinica Research Center for Information Technology Innovation, Taiwan.

- The HFTS team: Stefan Knauth. Stuttgart University of Applied Sciences, Stuttgart, Germany [77].

3.3.1. UMinho Team

3.3.2. AraraDS Team

3.3.3. YAI Team

- Determination of the correct building;

- determination of the correct floor; and

- estimation of the latitude and longitude coordinates.

- if the distance between the two consecutive points is less than 5 m, the accelerometer and magnetometer data are used to predict the trajectory of the walking person; and

- if the distance between the two consecutive points is more than 5 m, a Wi-Fi fingerprinting approach is used to determine the coordinates.
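The switching rule in the two items above can be sketched as follows. This is only a minimal illustration, not the YAI team's code: the function names, the use of local metric (x, y) coordinates instead of latitude and longitude, and the handling of a distance of exactly 5 m are all assumptions made here.

```python
import math

# Threshold stated in the text: 5 m between two consecutive points.
SWITCH_DISTANCE_M = 5.0


def planar_distance(p, q):
    """Euclidean distance between two (x, y) points given in metres."""
    return math.hypot(p[0] - q[0], p[1] - q[1])


def choose_method(prev_point, next_point, threshold_m=SWITCH_DISTANCE_M):
    """Return "pdr" for short hops and "wifi" otherwise.

    Assumption: a distance of exactly threshold_m is routed to Wi-Fi,
    because the text only covers "less than" and "more than" 5 m.
    """
    if planar_distance(prev_point, next_point) < threshold_m:
        return "pdr"
    return "wifi"


print(choose_method((0.0, 0.0), (1.0, 1.0)))    # short hop
print(choose_method((0.0, 0.0), (30.0, 40.0)))  # long hop
```

A real system would first project geographic coordinates to a local metric frame before applying the threshold.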

3.3.4. HFTS Team

- PDR offset cancellation is not only map-based but also Wi-Fi based: The particle movement process of the particle filter includes a Wi-Fi based heading offset and step length correction. Depending on the parameter setting, this may lead to ignoring Wi-Fi, mixing Wi-Fi and inertial sensor information, or completely adjusting to Wi-Fi positions.

- The particle filter uses Wi-Fi results as a bound to remove outliers: By keeping an estimated Wi-Fi position, particles exceeding a configurable distance from the Wi-Fi position are removed from the particle filter, thus keeping the particle cloud confined to a certain radius.

- The PDR algorithm considers floor areas on which a level change may occur, e.g., stairs and elevators. These areas are marked manually on the floor map. This allows step length adjustment for stairs and, in combination with Wi-Fi floor change detection, particle filter “flushing”, i.e., removal of particles which are not on a floor change area.

- In cases where the particle filter loses all particles, the map requirement is withdrawn, i.e., particles may cross walls. They are still confined to some extent by the Wi-Fi position.
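The Wi-Fi bound and the fallback for a depleted particle set, as described in the items above, might look roughly like the sketch below. It is not the HFTS implementation: `filter_step`, the `is_move_allowed` callback standing in for the map check, and the 2D tuple representation of particles are hypothetical choices made for illustration, and weighting and resampling are left out.

```python
import math


def filter_step(particles, moved, is_move_allowed, wifi_xy, max_dist_m):
    """Apply map and Wi-Fi constraints to proposed particle moves.

    particles: previous (x, y) positions; moved: proposed new positions.
    is_move_allowed(old, new): hypothetical map check (e.g. no wall crossed).
    Returns (surviving_particles, map_constraint_was_used).
    """
    def near_wifi(p):
        # Particles farther than max_dist_m from the Wi-Fi estimate are dropped.
        return math.hypot(p[0] - wifi_xy[0], p[1] - wifi_xy[1]) <= max_dist_m

    survivors = [new for old, new in zip(particles, moved)
                 if is_move_allowed(old, new) and near_wifi(new)]
    if survivors:
        return survivors, True

    # All particles lost: withdraw the map requirement so particles may
    # cross walls, but keep the Wi-Fi confinement.
    return [new for new in moved if near_wifi(new)], False


# Toy map (assumption): a wall along x = 1 that cannot be crossed from the left.
def no_wall_crossing(old, new):
    return not (old[0] < 1.0 <= new[0])


start = [(0.0, 0.0), (0.0, 0.0)]
print(filter_step(start, [(0.5, 0.0), (2.0, 0.0)], no_wall_crossing, (0.0, 0.0), 5.0))
print(filter_step(start, [(2.0, 0.0), (30.0, 0.0)], no_wall_crossing, (0.0, 0.0), 5.0))
```

In the second call every proposed move crosses the wall, so the map check is dropped and only the particle that remains within the Wi-Fi radius is kept.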

4. Results

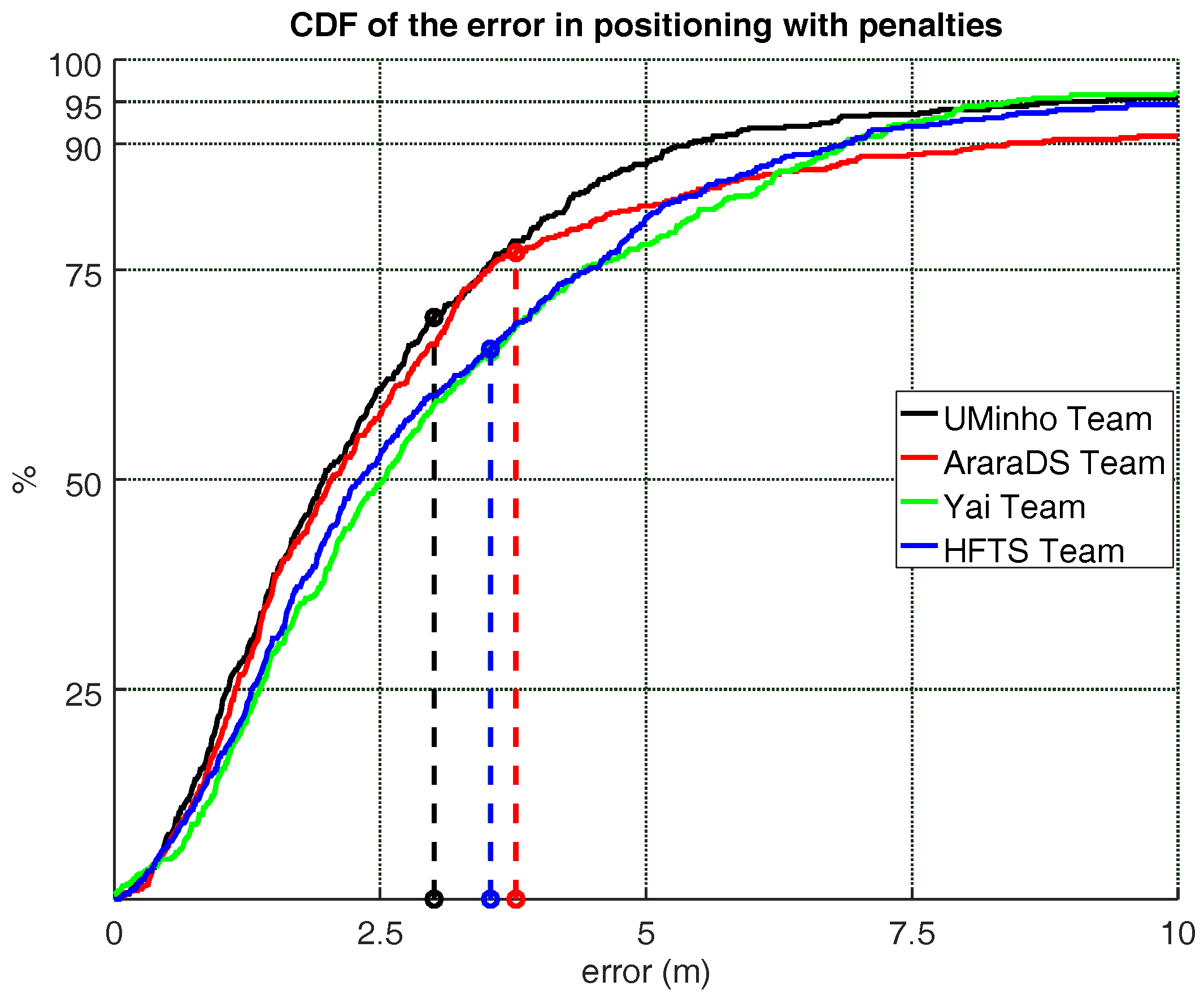

4.1. Competition Results

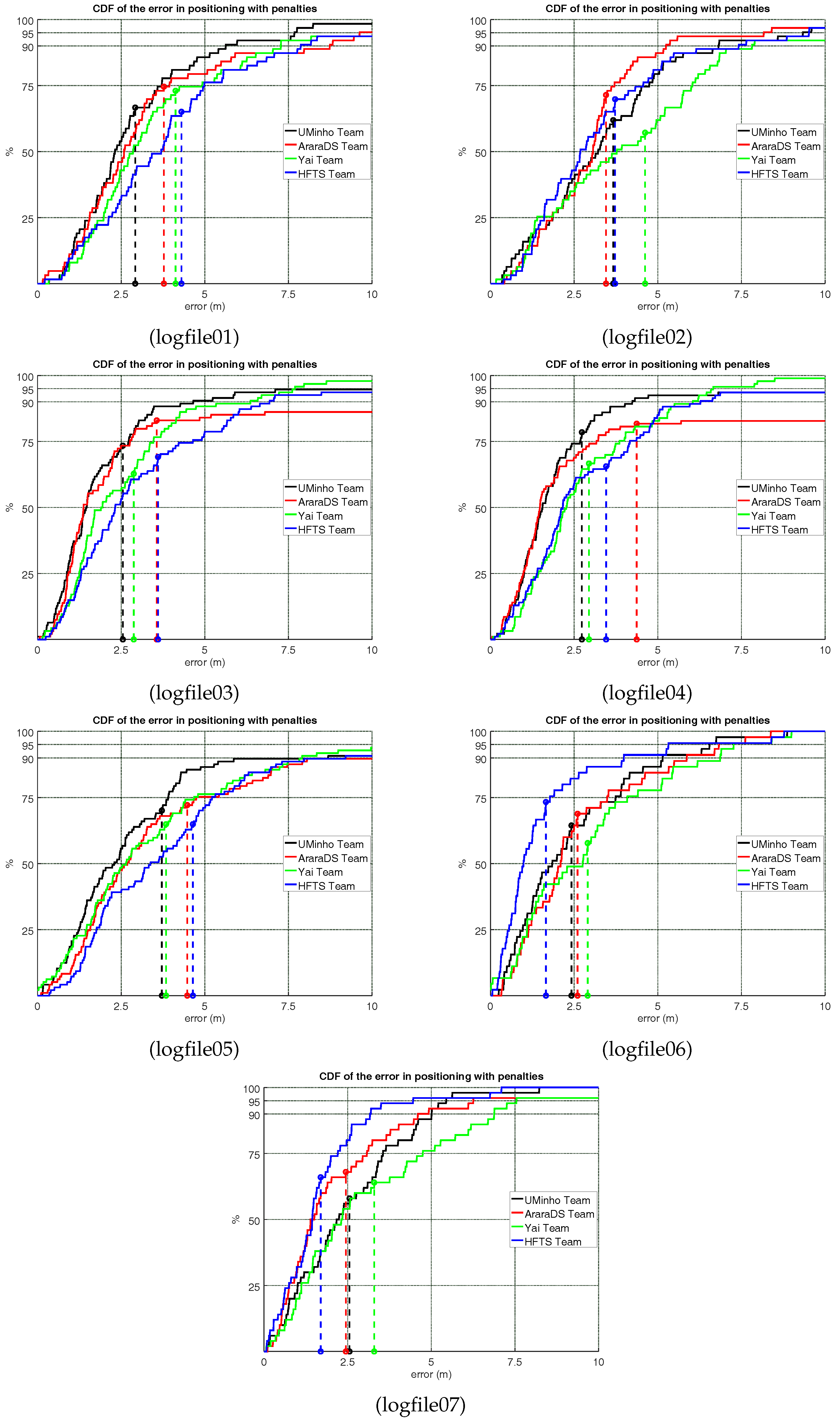

4.2. Detailed Results

4.3. The IPIN Competition Track 3 and Other Competitions

5. Conclusions

5.1. Competitors’ Feedback and Lessons Learned

- Provide more detailed data without gaps (e.g., without skipping multiple floors or rooms).

- Try to minimize unexpected events like missing stairs areas in training and validation log files.

- Code the floor transitions with a special floor code (e.g., 3.5 for transitions between the third and fourth floors) to minimise the unfair large positioning errors near areas close to floor transitions.

- Registration of datasets. The registration of data in the logfiles for the competition is done in a continuous way with minor stops. Some competitors, especially those that mainly rely on fingerprinting, felt uncomfortable with this procedure because they prefer a static registration, or at least a very slow continuous motion (0.2 m/s), in order to have more stable Wi-Fi fingerprints and more samples. They also would like a dense survey with many points, and no testing experiments outside of the surveyed area. We understand this point of view, but we also consider the current relevance of the generation of fingerprinting solutions from incomplete or low sampled scenarios, surveyed by actors in motion (the way that crowd-sourced fingerprints are registered by persons at malls, for example). Next editions could include some sites with a high density of points taken at static positions, just to observe how some systems can benefit from it, but we consider that we should keep the core of the current registration approach.

- Metric fairness. Regarding the metric used in the IPIN competition, which is based on the third quartile plus some penalties generated by floor or building identification mistakes, some competitors suggested that the weights for those penalties were too large (for example, the 15 m penalty for a wrong floor estimation). The usage of those penalties emphasizes that a positioning service must give high priority to guaranteeing a correct floor indication. For some applications, a wrong floor indication can be very adverse for the location service provided. For example, a mistake in a floor number can make the user lose valuable time when navigating in a mall from one section to another and, most importantly, could lower the user's interest in that service since it is perceived as misleading. The 15 m penalty is arguably small considering the time needed to correct a route after a floor mistake. For future editions, the competition organizers are considering determining the positioning error using a more realistic distance, which computes the walkable distance between the estimated and real positions.
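To make the metric discussed above concrete, the sketch below computes a third-quartile score with a 15 m floor penalty. Several details are assumptions rather than the official evaluation code: the penalty is scaled by the number of floors missed, the building penalty value is not given in this text and is therefore left as a parameter, positions are taken as local metric coordinates, and the quartile uses linear interpolation via Python's `statistics.quantiles`.

```python
import math
import statistics

FLOOR_PENALTY_M = 15.0  # floor penalty mentioned in the text


def sample_error(est, truth, floor_penalty_m=FLOOR_PENALTY_M,
                 building_penalty_m=0.0):
    """Penalized error for one evaluation point.

    est and truth are (x_m, y_m, floor, building) tuples.
    Assumption: the floor penalty grows with the number of floors missed.
    building_penalty_m is a placeholder; its value is not stated here.
    """
    horizontal = math.hypot(est[0] - truth[0], est[1] - truth[1])
    floor_term = floor_penalty_m * abs(est[2] - truth[2])
    building_term = building_penalty_m if est[3] != truth[3] else 0.0
    return horizontal + floor_term + building_term


def third_quartile(errors):
    """75th percentile with linear interpolation (needs at least 2 values)."""
    return statistics.quantiles(errors, n=4, method="inclusive")[2]


def competition_score(estimates, truths, **penalties):
    """Third quartile of the penalized errors over all evaluation points."""
    return third_quartile(
        [sample_error(e, t, **penalties) for e, t in zip(estimates, truths)]
    )


truths = [(0.0, 0.0, 1, "A")] * 3
estimates = [(3.0, 4.0, 1, "A"), (0.0, 0.0, 2, "A"), (1.0, 0.0, 1, "A")]
print(competition_score(estimates, truths))
```

Because a single wrong floor adds as much error as a 15 m horizontal miss, a few floor mistakes in the upper quarter of the error distribution can dominate the score, which is the effect competitors commented on.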

5.2. General Conclusions

- Databases are important for comprehensive comparisons. The creation of different databases, in different buildings, with different actors, different smartphones, and with a high degree of diversity is, in general, the only way we can analyse the performance of systems with enough detail. We have seen from our analysis that some teams created algorithms whose behavior was better in a certain environment, or even for test occurrences, than others. Our feeling is that the typical evaluation procedure for IPS found in the literature, where a team selects a particular building and compares its method with a ground truth or with another approach, is prone to excessive fine-tuning of algorithms, and insufficient to conclude that the proposed positioning approach is robust and accurate enough for real-life uncontrolled scenarios. Competitions are a good way to collect experimental data from diverse sites for the creation of banks of databases against which to compare.

- Competition motivates competitors from Academia. Research involved in non-commercial applications does not commonly have the pressure of providing the most accurate working systems in every possible scenario. Once the details of their base system have been published (using their own experimental set-up in most cases), the resources are dedicated to exploring novel aspects and features of indoor localization. However, an annual competition that involves challenging participants from academia or industry forces every competitor to be highly competitive and to develop accurate working systems, and not just prototypes. This fact boosts their motivation to improve and refine their systems to levels that are not usually considered in traditional R&D work.

- Competition integrates Academia and Industry. Competitions can be seen as showrooms where both worlds, Academia and Industry, show their developments, advances and interesting findings. Although the main aim of a competition is to compete and win, synergies between the competitors can be consolidated after the competition. In fact, informal contacts between competitors were observed after the competition results were presented. Creating a forum to discuss indoor positioning and establish new contacts was one of the competition pillars.

- Competitions must be diverse. Although this paper presents the results of experiences of the 2017 off-site smartphone-based competition, there are other interesting competitions and evaluation initiatives available which consider different base positioning technologies (not smartphone-based such as PDR, UWB, and Ultrasound), real-time evaluation, joint off-line and on-line evaluation, among many other evaluation features. We consider that diversity in competitions is important, because it allows a huge indoor positioning community to be represented.

- Competitions need to cover multiple scenarios and contexts. The results of Track 3 from the IPIN 2017 Competition show an interesting finding: none of the competing systems provided the best accuracy (positioning error, X-Y error and floor hit detection rate) in all the evaluation logfiles. This finding demonstrates that the accuracy of a positioning system depends on the scenario and context. Therefore, to have a comprehensive evaluation, as many scenarios and contexts as possible have to be considered in the evaluation set-up, thus avoiding winners that provide high accuracy for specific settings but perform poorly under other scenarios and contexts. However, considering multiple scenarios and contexts might not be feasible in on-site competitions, because the time constraints are very tight. Even for off-site competitions, the effort of considering multiple scenarios and contexts is huge, because the time required for planning the data collection, gathering the raw data and performing post-processing is considerable.

- Competitions need continuity. Although all competitions are important, the ones with continuity are particularly relevant. Continuity is an essential feature in order to track the competition evolution and to keep the motivation of competitors. Annual competitions, such as the IPIN and MS competitions, enable competitors to participate each year. The teams from the University of Minho and the Technical University of Stuttgart have participated in all three editions of this competition and they have received valuable feedback to refine their systems.

Supplementary Materials

Acknowledgments

Author Contributions

Conflicts of Interest

References

- Markets and Markets: Indoor Location Market Worth 40.99 Billion USD by 2022. Available online: http://www.marketsandmarkets.com/PressReleases/indoor-location.asp (accessed on 1 November 2017).

- Global Indoor Location Market Analysis (2017–2023). Available online: https://www.reportlinker.com/p05207399/Global-Indoor-Location-Market-Analysis.html (accessed on 23 November 2017).

- Pipelidis, G.; Rad, O.R.M.; Prehofer, C.; Iwaszczuk, D.; Hugentobler, U. A Novel Approach for Dynamic Vertical Indoor Mapping through Crowd-sourced Smartphone Sensor Data. In Proceedings of the 2017 International Conference on Indoor Positioning and Indoor Navigation (IPIN), Sapporo, Japan, 18–21 September 2017.

- Takayuki Akiyama, M.S.; Hashizume, H. Time-of-arrival-based Smartphone Localization Using Visible Light Communication. In Proceedings of the 2017 International Conference on Indoor Positioning and Indoor Navigation (IPIN), Sapporo, Japan, 18–21 September 2017.

- Moreira, A.; Silva, I.; Meneses, F.; Nicolau, M.J.; Pendão, C.; Torres-Sospedra, J. Multiple simultaneous Wi-Fi measurements in fingerprinting indoor positioning. In Proceedings of the 2017 International Conference on Indoor Positioning and Indoor Navigation (IPIN), Sapporo, Japan, 18–21 September 2017.

- Bousdar, D.; Blanco, L.E.D.; Diaz, E.M. Performance comparison of wearable-based pedestrian navigation systems in large areas. In Proceedings of the 2017 International Conference on Indoor Positioning and Indoor Navigation (IPIN), Sapporo, Japan, 18–21 September 2017.

- Berkvens, R.; Bellekens, B.; Weyn, M. Signal Strength Indoor Localization using a Single DASH7 Message. In Proceedings of the 2017 International Conference on Indoor Positioning and Indoor Navigation (IPIN), Sapporo, Japan, 18–21 September 2017.

- Tiemann, J.; Wietfeld, C. Scalable and Precise Multi-UAV Indoor Navigation using DOA-based UWB Localization. In Proceedings of the 2017 International Conference on Indoor Positioning and Indoor Navigation (IPIN), Sapporo, Japan, 18–21 September 2017.

- Anagnostopoulos, G.G.; Deriaz, M.; Gaspoz, J.M.; Konstantas, D.; Guessous, I. Navigational Needs and Requirements of Hospital Staff: Geneva University Hospitals Case Study. In Proceedings of the 2017 International Conference on Indoor Positioning and Indoor Navigation (IPIN), Sapporo, Japan, 18–21 September 2017.

- Wei, D.; Ji, X.; Li, W.; Yuan, H.; Xu, Y. Vehicle Localization Based on Odometry Assisted Magnetic Matching. In Proceedings of the 2017 International Conference on Indoor Positioning and Indoor Navigation (IPIN), Sapporo, Japan, 18–21 September 2017.

- Antigny, N.; Servières, M.; Renaudin, V. Pedestrian Track Estimation with Handheld Monocular Camera and Inertial-Magnetic Sensor for Urban Augmented Reality. In Proceedings of the 2017 International Conference on Indoor Positioning and Indoor Navigation (IPIN), Sapporo, Japan, 18–21 September 2017.

- Walsh, E.; Daems, W.; Steckel, J. Stable Six-DoF Head-Pose Tracking in Assistive Technology Application. In Proceedings of the 2017 International Conference on Indoor Positioning and Indoor Navigation (IPIN), Sapporo, Japan, 18–21 September 2017.

- Gualda, D.; Díaz, E.; Garcia, J.J.; del Carmen Perez, M.; Ureña, J.U.; Cervigón, R. Patient Activity Monitoring for Smartphones based on a PDR Algorithm. In Proceedings of the 2017 International Conference on Indoor Positioning and Indoor Navigation (IPIN), Sapporo, Japan, 18–21 September 2017.

- Schumann, J.; Boltes, M. Tracking of wheelchair users in dense crowds. In Proceedings of the 2017 International Conference on Indoor Positioning and Indoor Navigation (IPIN), Sapporo, Japan, 18–21 September 2017.

- Gualda, D.; Ureña, J.U.; Garcia, J.C.; del Carmen Perez, M.; Díaz, E. Study of Cooperative Position Estimations of Mobile Robots. In Proceedings of the 2017 International Conference on Indoor Positioning and Indoor Navigation (IPIN), Sapporo, Japan, 18–21 September 2017.

- Nguyen, K.A.; Watkins, C.; Luo, Z. Co-location Epidemic Tracking on London Public Transports Using Low Power Mobile Magnetometer. In Proceedings of the 2017 International Conference on Indoor Positioning and Indoor Navigation (IPIN), Sapporo, Japan, 18–21 September 2017.

- Perera, A.; Arukgoda, J.; Ranasinghe, R.; Dissanayake, G. Localization System for Carers to Track Elderly People in Visits to a Crowded Shopping Mall. In Proceedings of the 2017 International Conference on Indoor Positioning and Indoor Navigation (IPIN), Sapporo, Japan, 18–21 September 2017.

- Jiménez, A.R.; Seco, F. Finding objects using UWB or BLE localization technology: A museum-like use case. In Proceedings of the 2017 International Conference on Indoor Positioning and Indoor Navigation (IPIN), Sapporo, Japan, 18–21 September 2017.

- Bahl, P.; Padmanabhan, V.N. RADAR: An In-Building RF-Based User Location and Tracking System. In Proceedings of the IEEE Nineteenth Annual Joint Conference of the IEEE Computer and Communications Societies (INFOCOM 2000), Tel Aviv, Israel, 26–30 March 2000; pp. 775–784.

- Youssef, M.; Agrawala, A.K. The Horus WLAN location determination system. In Proceedings of the 3rd International Conference on Mobile Systems, Applications, and Services (MobiSys 2005), Seattle, WA, USA, 6–8 June 2005; pp. 205–218.

- Jovan Powar, C.G.; Harle, R. Assessing the Impact of Multi-Channel BLE Beacons on Fingerprint-based Positioning. In Proceedings of the 2017 International Conference on Indoor Positioning and Indoor Navigation (IPIN), Sapporo, Japan, 18–21 September 2017.

- Gharat, V.; Colin, E.; Baudoin, G.; Richard, D. Indoor Performance Analysis of LF-RFID based Positioning System: Comparison with UHF-RFID and UWB. In Proceedings of the 2017 International Conference on Indoor Positioning and Indoor Navigation (IPIN), Sapporo, Japan, 18–21 September 2017.

- Jiménez, A.R.; Seco, F. Combining RSS-based trilateration methods with radio-tomographic imaging: Exploring the capabilities of long-range RFID systems. In Proceedings of the 2015 International Conference on Indoor Positioning and Indoor Navigation (IPIN), Banff, AB, Canada, 13–16 October 2015; pp. 13–16.

- Popleteev, A. AmbiLoc: A year-long dataset of FM, TV and GSM fingerprints for ambient indoor localization. In Proceedings of the 2017 International Conference on Indoor Positioning and Indoor Navigation (IPIN), Sapporo, Japan, 18–21 September 2017.

- Knobloch, D. Practical Challenges of Particle Filter Based UWB Localization in Vehicular Environments. In Proceedings of the 2017 International Conference on Indoor Positioning and Indoor Navigation (IPIN), Sapporo, Japan, 18–21 September 2017.

- Jiménez, A.; Seco, F. Comparing Ubisense, Bespoon and Decawave UWB location systems: indoor performance analysis. IEEE Trans. Instrum. Meas. 2017, 6, 2106–2117.

- Rapp, P.; Esslinger, D.; Sawodny, O.; Tarin, C. Experimental Validation for Opto-Acoustic Distance Measurement based on Code Division Multiple Access Amplitude Modulation and Differential Carrier Phase Estimation. In Proceedings of the 2017 International Conference on Indoor Positioning and Indoor Navigation (IPIN), Sapporo, Japan, 18–21 September 2017.

- Seco, F.; Jiménez, A.R.; Zampella, F. Fine-Grained Acoustic Positioning with Compensation of CDMA Interference. In Proceedings of the 2015 IEEE International Conference on Industrial Technology (ICIT), Seville, Spain, 17–19 March 2015; pp. 3418–3423.

- Dina Bousdar, E.M.D.; Minguez, J.A.C. Exploiting wearable devices for the calibration of inertial navigation systems. In Proceedings of the 2017 International Conference on Indoor Positioning and Indoor Navigation (IPIN), Sapporo, Japan, 18–21 September 2017.

- Jiménez, A.R.; Seco, F.; Prieto, J.; Guevara, J. Pedestrian Indoor Navigation by aiding a Foot-mounted IMU with RFID Signal Strength Measurements. In Proceedings of the 2010 International Conference on Indoor Positioning and Indoor Navigation (IPIN), Zurich, Switzerland, 15–17 September 2010; pp. 1–7.

- Jiménez, A.R.; Seco, F.; Zampella, F. PDR with a Foot-Mounted IMU and Ramp Detection. Sensors 2011, 11, 9393–9410.

- Jiménez, A.R.; Zampella, F.; Seco, F. Light-Matching: A new Signal of Opportunity for Pedestrian Indoor Navigation. In Proceedings of the International Conference on Indoor Positioning and Indoor Navigation (IPIN), Montbeliard-Belfort, France, 28–31 October 2013; Volume 1, pp. 777–786.

- Hanley, D.; Faustino, A.; Zelman, S.; Degenhardt, D.; Bretl, T. MagPIE: A Dataset for Indoor Positioning with Magnetic Anomalies. In Proceedings of the 2017 International Conference on Indoor Positioning and Indoor Navigation (IPIN), Sapporo, Japan, 18–21 September 2017.

- Le Scornec, J.; Ortiz, M.; Renaudin, V. Foot-mounted pedestrian navigation reference with tightly coupled GNSS carrier phases, inertial and magnetic data. In Proceedings of the 2017 International Conference on Indoor Positioning and Indoor Navigation (IPIN), Sapporo, Japan, 18–21 September 2017.

- Zampella, F.; Jiménez, A.; Seco, F. Indoor Positioning Using Efficient Map Matching, RSS Measurements, and an Improved Motion Model. IEEE Trans. Veh. Technol. 2015, 64, 1304–1317.

- Zampella, F.; Bahillo, A.; Prieto, J.; Jimenez, A.R.; Seco, F. Indoor positioning using Pedestrian Dead Reckoning and RSS/TOF measurements. Sens. Actuators A Phys. 2013, 203, 249–260.

- Eighth International Conference on Indoor Positioning and Indoor Navigation, Sapporo, Japan, 18–21 September 2017. Available online: http://www.ipin2017.org/ (accessed on 1 November 2017).

- Sakr, M.; El-Sheimy, N. Efficient Wi-Fi Signal Strength Maps Using Sparse Gaussian Process Models. In Proceedings of the 2017 International Conference on Indoor Positioning and Indoor Navigation (IPIN), Sapporo, Japan, 18–21 September 2017.

- Fet, N.; Handte, M.; Marron, P.J. Autonomous Signal Source Displacement Detection and Recalibration of Fingerprinting-based Indoor Localization Systems. In Proceedings of the 2017 International Conference on Indoor Positioning and Indoor Navigation (IPIN), Sapporo, Japan, 18–21 September 2017.

- Potortì, F.; Park, S.; Ruiz, A.R.J.; Barsocchi, P.; Girolami, M.; Crivello, A.; Lee, S.Y.; Lim, J.H.; Torres-Sospedra, J.; Seco, F.; et al. Comparing the performance of indoor localization systems through the EvAAL framework. Sensors 2017, 17, 2327.

- Kasebzadeh, P.; Hendeby, G.; Fritsche, C.; Gunnarsson, F.; Gustafsson, F. IMU Dataset For Motion and Device Mode Classification. In Proceedings of the 2017 International Conference on Indoor Positioning and Indoor Navigation (IPIN), Sapporo, Japan, 18–21 September 2017.

- IPIN International Standards Committee (ISC). Available online: http://ipin-standards.org/ (accessed on 1 November 2017).

- ISO/IEC 18305:2016. Information Technology—Real Time Locating Systems—Test and Evaluation of Localization and Tracking Systems. Available online: https://www.iso.org/standard/62090.html (accessed on 15 December 2017).

- Lymberopoulos, D.; Liu, J.; Yang, X.; Choudhury, R.R.; Sen, S.; Handziski, V. Microsoft Indoor Localization Competition: Experiences and Lessons Learned. GetMob. Mob. Comput. Commun. 2015, 18, 24–31.

- Lymberopoulos, D.; Liu, J.; Yang, X.; Choudhury, R.R.; Handziski, V.; Sen, S. A Realistic Evaluation and Comparison of Indoor Location Technologies: Experiences and Lessons Learned. In Proceedings of the 14th International Conference on Information Processing in Sensor Networks (IPSN ’15), Seattle, WA, USA, 13–16 April 2015; ACM: New York, NY, USA, 2015; pp. 178–189.

- Lymberopoulos, D.; Liu, J. The Microsoft Indoor Localization Competition: Experiences and Lessons Learned. IEEE Signal Process. Mag. 2017, 34, 125–140.

- Barsocchi, P.; Chessa, S.; Furfari, F.; Potortì, F. Evaluating AAL solutions through competitive benchmarking: The localization competition. IEEE Pervasive Comput. Mag. 2013, 12, 72–79.

- Chessa, S.; Furfari, F.; Potortì, F.; Lázaro, J.P.; Salvi, D. EvAAL: Evaluating AAL Systems through Competitive Benchmarking; ERCIM News; Springer: Berlin/Heidelberg, Germany, 2011; p. 46.

- Salvi, D.; Barsocchi, P.; Arredondo, M.T.; Ramos, J.P.L. EvAAL, Evaluating AAL Systems through Competitive Benchmarking, the Experience of the 1st Competition; International Competitions and Final Workshop; Springer: Berlin/Heidelberg, Germany, 2011; pp. 14–25.

- EvAAL-ETRI On-Site and Off-Site Indoor Localization Competition. Available online: http://www.ucalgary.ca/ipin2015/call-papers/call-competition (accessed on 15 December 2017).

- IPIN 2016 Indoor Localization Competition. Available online: http://www3.uah.es/ipin2016/cfc.php (accessed on 15 December 2017).

- PerfLoc: Performance Evaluation of Smartphone Indoor Localization Apps. Available online: https://perfloc.nist.gov/perfloc.php (accessed on 15 December 2017).

- Geo IoT World Indoor Location Testbed. Available online: https://www.geoiotworld.com/testbed (accessed on 15 December 2017).

- Evaluation of RF-Based Indoor Localization Solutions for the Future Internet. Available online: http://www.evarilos.eu/index.php (accessed on 15 December 2017).

- Haute, T.V.; Poorter, E.D.; Lemic, F.; Handziski, V.; Wirström, N.; Voigt, T.; Wolisz, A.; Moerman, I. Platform for benchmarking of RF-based indoor localization solutions. IEEE Commun. Mag. 2015, 53, 126–133.

- Lemic, F.; Handziski, V.; Wolisz, A.; Constambeys, T.; Laoudias, C.; Adler, S.; Schmitt, S.; Yang, Y. Experimental evaluation of RF-based indoor localization algorithms under RF interference. In Proceedings of the 2015 International Conference on Location and GNSS (ICL-GNSS), Gothenburg, Sweden, 22–24 June 2015; pp. 1–8.

- Van Haute, T.; De Poorter, E.; Crombez, P.; Lemic, F.; Handziski, V.; Wirström, N.; Wolisz, A.; Voigt, T.; Moerman, I. Performance analysis of multiple Indoor Positioning Systems in a healthcare environment. Int. J. Health Geogr. 2016, 15, 7.

- Montoliu, R.; Sansano, E.; Torres-Sospedra, J.; Belmonte, O. IndoorLoc Platform: A Public Repository for Comparing and Evaluating Indoor Positioning Systems. In Proceedings of the 2017 International Conference on Indoor Positioning and Indoor Navigation (IPIN), Sapporo, Japan, 18–21 September 2017.

- Shrestha, S.; Talvitie, J.; Lohan, E.S. Deconvolution-based indoor localization with WLAN signals and unknown access point locations. In Proceedings of the 2013 International Conference on Localization and GNSS (ICL-GNSS), Turin, Italy, 25–27 June 2013; pp. 1–6.

- Talvitie, J.; Lohan, E.S.; Renfors, M. The effect of coverage gaps and measurement inaccuracies in fingerprinting based indoor localization. In Proceedings of the International Conference on Localization and GNSS 2014 (ICL-GNSS 2014), Helsinki, Finland, 24–26 June 2014; pp. 1–6.

- King, T.; Kopf, S.; Haenselmann, T.; Lubberger, C.; Effelsberg, W. CRAWDAD Dataset Mannheim/Compass (v. 2008-04-11). Traceset: Fingerprintc. 2008. Available online: http://crawdad.org/mannheim/compass/20080411/fingerprint (accessed on 15 December 2017).

- Rhee, I.; Shin, M.; Hong, S.; Lee, K.; Kim, S.; Chong, S. CRAWDAD Dataset Ncsu/Mobilitymodels (v. 2009-07-23). 2009. Available online: http://crawdad.org/ncsu/mobilitymodels/20090723 (accessed on 15 December 2017).

- Nahrstedt, K.; Vu, L. CRAWDAD Dataset Uiuc/Uim (v. 2012-01-24). 2012. Available online: http://crawdad.org/uiuc/uim/20120124 (accessed on 15 December 2017).

- Purohit, A.; Pan, S.; Chen, K.; Sun, Z.; Zhang, P. CRAWDAD Dataset Cmu/Supermarket (v. 2014-05-27). 2014. Available online: http://crawdad.org/cmu/supermarket/20140527 (accessed on 15 December 2017).

- Parasuraman, R.; Caccamo, S.; Baberg, F.; Ogren, P. CRAWDAD Dataset Kth/Rss (v. 2016-01-05). 2016. Available online: http://crawdad.org/kth/rss/20160105 (accessed on 15 December 2017).

- Torres-Sospedra, J.; Montoliu, R.; Usó, A.M.; Avariento, J.P.; Arnau, T.J.; Benedito-Bordonau, M.; Huerta, J. UJIIndoorLoc: A new multi-building and multi-floor database for WLAN fingerprint-based indoor localization problems. In Proceedings of the 2014 International Conference on Indoor Positioning and Indoor Navigation (IPIN 2014), Busan, Korea, 27–30 October 2014; pp. 261–270.

- Torres-Sospedra, J.; Rambla, D.; Montoliu, R.; Belmonte, O.; Huerta, J. UJIIndoorLoc-Mag: A new database for magnetic field-based localization problems. In Proceedings of the 2015 International Conference on Indoor Positioning and Indoor Navigation (IPIN), Banff, AB, Canada, 13–16 October 2015.

- Torres-Sospedra, J.; Montoliu, R.; Mendoza-Silva, G.M.; Belmonte, O.; Rambla, D.; Huerta, J. Providing Databases for Different Indoor Positioning Technologies: Pros and Cons of Magnetic Field and Wi-Fi Based Positioning. Mob. Inf. Syst. 2016, 2016, 6092618.

- Bacciu, D.; Barsocchi, P.; Chessa, S.; Gallicchio, C.; Micheli, A. An experimental characterization of reservoir computing in ambient assisted living applications. Neural Comput. Appl. 2014, 24, 1451–1464.

- Barsocchi, P.; Crivello, A.; Rosa, D.L.; Palumbo, F. A multisource and multivariate dataset for indoor localization methods based on WLAN and geo-magnetic field fingerprinting. In Proceedings of the 2016 International Conference on Indoor Positioning and Indoor Navigation (IPIN), Alcala de Henares, Spain, 4–7 October 2016; pp. 1–8.

- Tiemann, J. Tudoetknjt/ed810593: Raw Experimental Results. Zenodo Repository, 2017. Available online: http://doi.org/10.5281/zenodo.322616 (accessed on 15 December 2017).

- Lohan, E.S.; Torres-Sospedra, J.; Richter, P.; Leppäkoski, H.; Huerta, J.; Cramariuc, A. Crowdsourced WiFi Database and Benchmark Software for Indoor Positioning. Zenodo Repository, 2017. Available online: https://doi.org/10.5281/zenodo.889797 (accessed on 15 December 2017).

- Lohan, E.S.; Torres-Sospedra, J.; Leppäkoski, H.; Richter, P.; Peng, Z.; Huerta, J. Wi-Fi Crowdsourced Fingerprinting Dataset for Indoor Positioning. Data 2017, 2, 32.

- Torres-Sospedra, J.; Jiménez, A.R.; Knauth, S.; Moreira, A.; Beer, Y.; Fetzer, T.; Ta, V.C.; Montoliu, R.; Seco, F.; Mendoza-Silva, G.M.; et al. The smartphone-based offline indoor location competition at IPIN 2016: Analysis and future work. Sensors 2017, 17, 557.

- The GetSensorData Android App for Registering All Sensor Stream in a Smartphone. Available online: https://lopsi.weebly.com/downloads.html (accessed on 15 December 2017).

- Mendoza-Silva, G.M.; Torres-Sospedra, J.; Huerta, J. More Realistic Error Distance Calculation for WiFi Indoor Positioning Systems Accuracy Evaluation. In Proceedings of the 2017 International Conference on Indoor Positioning and Indoor Navigation (IPIN), Sapporo, Japan, 18–21 September 2017.

- Knauth, S. Smartphone PDR positioning in large environments employing WiFi, particle filter, and backward optimization. In Proceedings of the 2017 International Conference on Indoor Positioning and Indoor Navigation (IPIN), Sapporo, Japan, 18–21 September 2017.

- Moreira, A.; Nicolau, M.J.; Costa, A.; Meneses, F. Indoor tracking from multidimensional sensor data. In Proceedings of the 2016 International Conference on Indoor Positioning and Indoor Navigation (IPIN), Alcalá de Henares, Spain, 4–7 October 2016.

- Kang, W.; Han, Y. SmartPDR: Smartphone-Based Pedestrian Dead Reckoning for Indoor Localization. IEEE Sens. J. 2015, 15, 2906–2916.

- Willemsen, T.; Keller, F.; Sternberg, H. A topological approach with MEMS in smartphones based on routing-graph. In Proceedings of the 2015 International Conference on Indoor Positioning and Indoor Navigation (IPIN), Banff, AB, Canada, 13–16 October 2015.

- Knauth, S.; Storz, M.; Dastageeri, H.; Koukofikis, A.; Mähser-Hipp, N.A. Fingerprint calibrated centroid and scalar product correlation RSSI positioning in large environments. In Proceedings of the 2015 International Conference on Indoor Positioning and Indoor Navigation (IPIN), Banff, AB, Canada, 13–16 October 2015.

| Scenario | Subset | Logfiles | Total Length (m) | Num. Ref. Points | Total Duration (s) |
|---|---|---|---|---|---|
| CAR | Train | 9 | ≈3720 | 450 | 9551 |
| CAR | Validation | 3 | ≈1865 | 225 | 4512 |
| CAR | Test | 2 | ≈1180 | 126 | 2620 |
| UJITI | Train | 4 | ≈3795 | 424 | 4105 |
| UJITI | Validation | 2 | ≈410 | 59 | 874 |
| UJITI | Test | 2 | ≈590 | 95 | 1134 |
| UJIUB | Train | 12 | ≈3425 | 702 | 4755 |
| UJIUB | Validation | 4 | ≈1450 | 332 | 2544 |
| UJIUB | Test | 3 | ≈1450 | 284 | 2764 |
| Total | | 38 | ≈17,890 | 2697 | 32,859 |

| Scenario | Subset | Logfiles | Total Length (m) | Num. Ref. Points | Total Duration (s) |
|---|---|---|---|---|---|
| CAR | Train | 4 | ≈2180 | 254 | 4295 |
| CAR | Test | 2 | ≈1085 | 152 | 2447 |
| UJITI | Train | 2 | ≈1640 | 561 | 1724 |
| UJITI | Test | 2 | ≈740 | 121 | 673 |
| UJIUB | Train | 5 | ≈1615 | 294 | 2286 |
| UJIUB | Test | 1 | ≈375 | 91 | 730 |
| UAH | Train | 6 | ≈3035 | 320 | 5603 |
| UAH | Test | 4 | ≈2200 | 214 | 4755 |
| Total | | 26 | ≈12,860 | 2007 | 22,513 |
| Total * | | 16 | ≈7630 | 1473 | 12,155 |

| | UMinho Team | AraraDS Team | Yai Team | HFTS Team |
|---|---|---|---|---|
| Score (m) | 3.48 | 3.53 | 4.41 | 4.45 |

| | UMinho Team | | | | AraraDS Team | | | |
|---|---|---|---|---|---|---|---|---|
| | 3rd Q (m) | MPE (m) | X-Y (m) | Flr (%) | 3rd Q (m) | MPE (m) | X-Y (m) | Flr (%) |
| All Logfiles | | | | | | | | |
| Logfile 01 | | | | 100 | | | | 100 |
| Logfile 02 | | | | 100 | | | | 100 |
| Logfile 03 | | | | | | | | |
| Logfile 04 | | | | | | | | |
| Logfile 05 | | | | | | | | |
| Logfile 06 | | | | 100 | | | | 100 |
| Logfile 07 | | | | 100 | | | | 98 |
| Average | | | | | | | | |

| | Yai Team | | | | HFTS Team | | | |
|---|---|---|---|---|---|---|---|---|
| | 3rd Q (m) | MPE (m) | X-Y (m) | Flr (%) | 3rd Q (m) | MPE (m) | X-Y (m) | Flr (%) |
| All Logfiles | | | | | | | | |
| Logfile 01 | | | | 100 | | | | 100 |
| Logfile 02 | | | | 100 | | | | 100 |
| Logfile 03 | | | | | | | | |
| Logfile 04 | | | | | | | | |
| Logfile 05 | | | | | | | | |
| Logfile 06 | | | | 100 | | | | 100 |
| Logfile 07 | | | | 100 | | | | 100 |
| Average | | | | | | | | |

| | 2016 Winner (Score: 5.85) | | 2017 Winner (Score: 3.85) | |
|---|---|---|---|---|
| | MPE (m) | Flr (%) | MPE (m) | Flr (%) |
| Average logfiles CAR | 1.98 ± 0.35 | 100 ± 0 | 3.4 ± 0.47 | 100 ± 0 |
| Average logfiles UJIUB | 5.16 ± 0 | 89.01 ± 0 | 2.98 ± 0.63 | 93.34 ± 2.52 |
| Average logfiles UJITI | 2.27 ± 0.33 | 100 ± 0 | 2.51 ± 0.06 | 100 ± 0 |
| Average logfiles UAH | 10.37 ± 6.55 | 93.18 ± 8 | - | - |
| Average (all logfiles except UAH) | 2.73 ± 1.39 | 97.8 ± 4.91 | 2.96 ± 0.55 | 97.14 ± 3.85 |

| Competition | Track | Winner’s Accuracy (m) | Metric |
|---|---|---|---|
| MS-ISPN 2014 | infrastructure-based | 0.72 | MPE |
| MS-ISPN 2014 | infrastructure-free | 1.76 | MPE |
| MS-ISPN 2015 | infrastructure-based | 0.31 | MPE |
| MS-ISPN 2015 | infrastructure-free | 0.2 | MPE |
| IPIN 2015 | Smartphone (on-site) | 6.6 | 3rd Quartile |
| IPIN 2015 | PDR | 2.4 | 3rd Quartile |
| IPIN 2015 | Smartphone (off-site) | 8.3 | 3rd Quartile |
| MS-ISPN 2016 | 2D Positioning | 1.2 | MPE |
| MS-ISPN 2016 | 3D Positioning | 0.16 | MPE |
| IPIN 2016 | Smartphone (on-site) | 5.4 | 3rd Quartile |
| IPIN 2016 | PDR | 1.5 | 3rd Quartile |
| IPIN 2016 | Smartphone (off-site) | 5.8 | 3rd Quartile |
| IPIN 2016 | Robotics | 0.1 | 3rd Quartile |
| MS-ISPN 2017 | 2D Positioning | 2.2 | MPE |
| MS-ISPN 2017 | 3D Positioning | 0.03 | MPE |
| IPIN 2017 | Smartphone (on-site) | 8.8 | 3rd Quartile |
| IPIN 2017 | PDR | 2.04 | 3rd Quartile |
| IPIN 2017 | Smartphone (off-site) | 3.48 | 3rd Quartile |
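
The two metrics listed in the table, the mean positioning error (MPE) and the third quartile of the error, summarize the same list of per-point errors in different ways, so winners' accuracies reported under different metrics are not directly comparable. The following is a minimal sketch of both, assuming the per-point positioning errors are already available as distances in metres. The function names and the sample values are hypothetical, and the quartile uses linear interpolation between sorted values, which may differ from the exact convention applied by the competition organizers.

```python
import statistics


def mean_positioning_error(errors_m):
    """Arithmetic mean of the per-point positioning errors (metres)."""
    return statistics.fmean(errors_m)


def third_quartile(errors_m):
    """75th percentile of the per-point positioning errors (metres).

    statistics.quantiles(n=4) returns [Q1, Q2, Q3]; the "inclusive"
    method interpolates linearly between the sorted sample values.
    """
    return statistics.quantiles(errors_m, n=4, method="inclusive")[2]


# Hypothetical errors for five evaluation points, one of them an outlier.
errors = [1.0, 2.0, 3.0, 4.0, 20.0]

mpe = mean_positioning_error(errors)  # 6.0
q3 = third_quartile(errors)           # 4.0

print(f"MPE: {mpe:.2f} m, 3rd quartile: {q3:.2f} m")
```

In this made-up sample the single 20 m outlier raises the mean to 6.0 m while the third quartile stays at 4.0 m, which illustrates why the two columns should be read with the metric in mind.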

| Competition | Trajectories | Length (m) | Ref. Points | Duration (s) |
|---|---|---|---|---|
| MS-ISPN 2014 | 1 | N/A | 20 | <1200 |
| MS-ISPN 2015 | 1 | 162 | 20 | <900 |
| IPIN 2015 Tracks 1 & 2 | 1 | 645 | 62 | 852–981 |
| MS-ISPN 2016 | 1 | 81 | 15 | <900 |
| IPIN 2016 Tracks 1 & 2 | 1 | 674 | 56 | 718–1129 |
| IPIN 2016 Track 3 | 9 | 4398 | 578 | 8605 |
| IPIN 2016 Track 3 * | 5 | 2198 | 364 | 3850 |
| MS-ISPN 2017 | 1 | 91 | 20 | N/A |
| IPIN 2017 Tracks 1 & 2 | 1 | 530 | 58 | 667–809 |
| IPIN 2017 Track 3 | 7 | 3220 | 505 | 6518 |

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Torres-Sospedra, J.; Jiménez, A.R.; Moreira, A.; Lungenstrass, T.; Lu, W.-C.; Knauth, S.; Mendoza-Silva, G.M.; Seco, F.; Pérez-Navarro, A.; Nicolau, M.J.; et al. Off-Line Evaluation of Mobile-Centric Indoor Positioning Systems: The Experiences from the 2017 IPIN Competition. Sensors 2018, 18, 487. https://doi.org/10.3390/s18020487
