An Omnidirectional Vision Sensor Based on a Spherical Mirror Catadioptric System

Abstract

1. Introduction

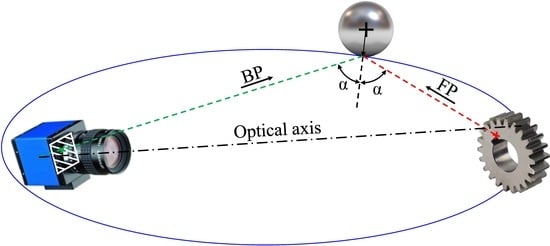

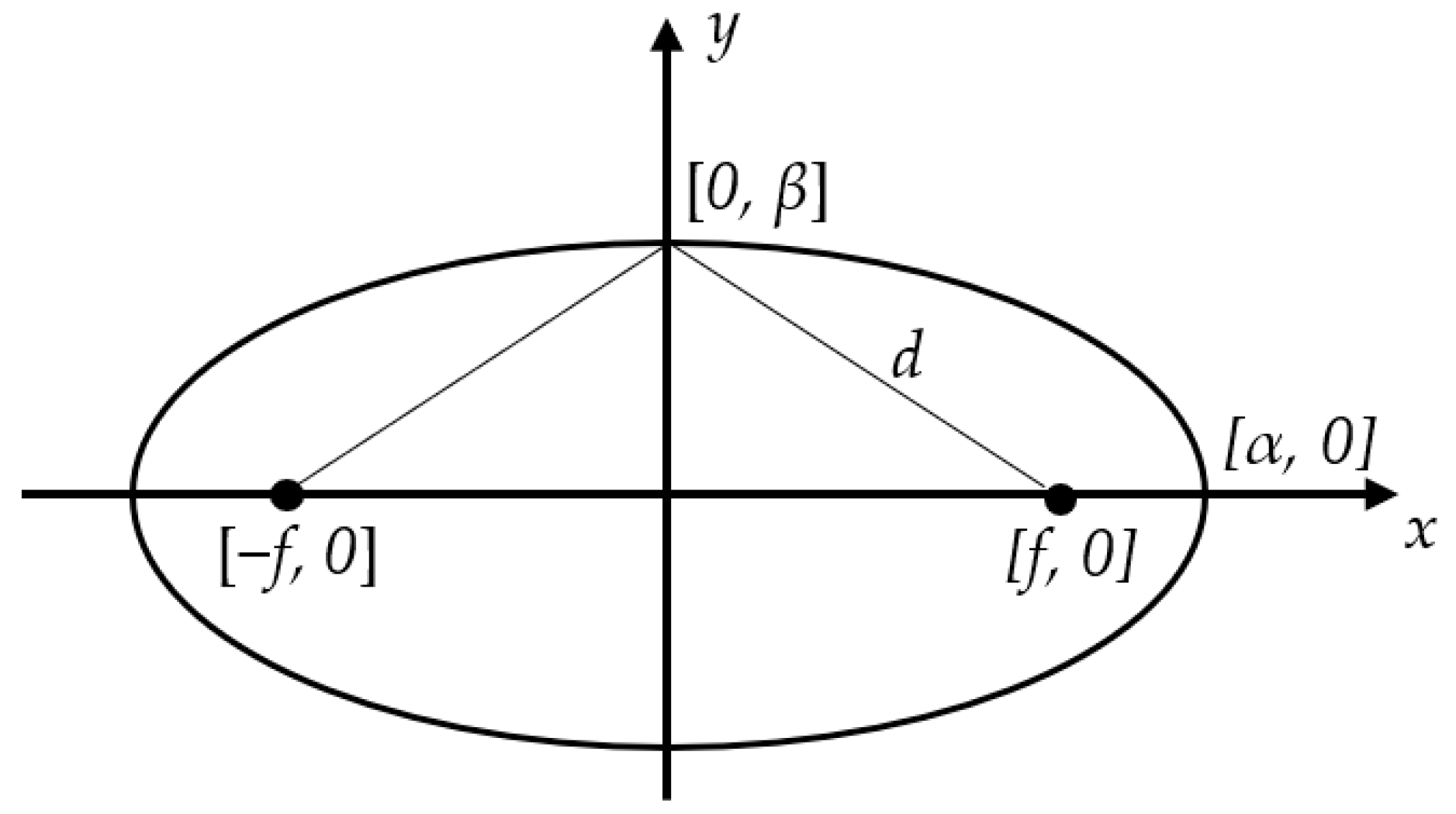

2. Background

3. Proposed Projection Model

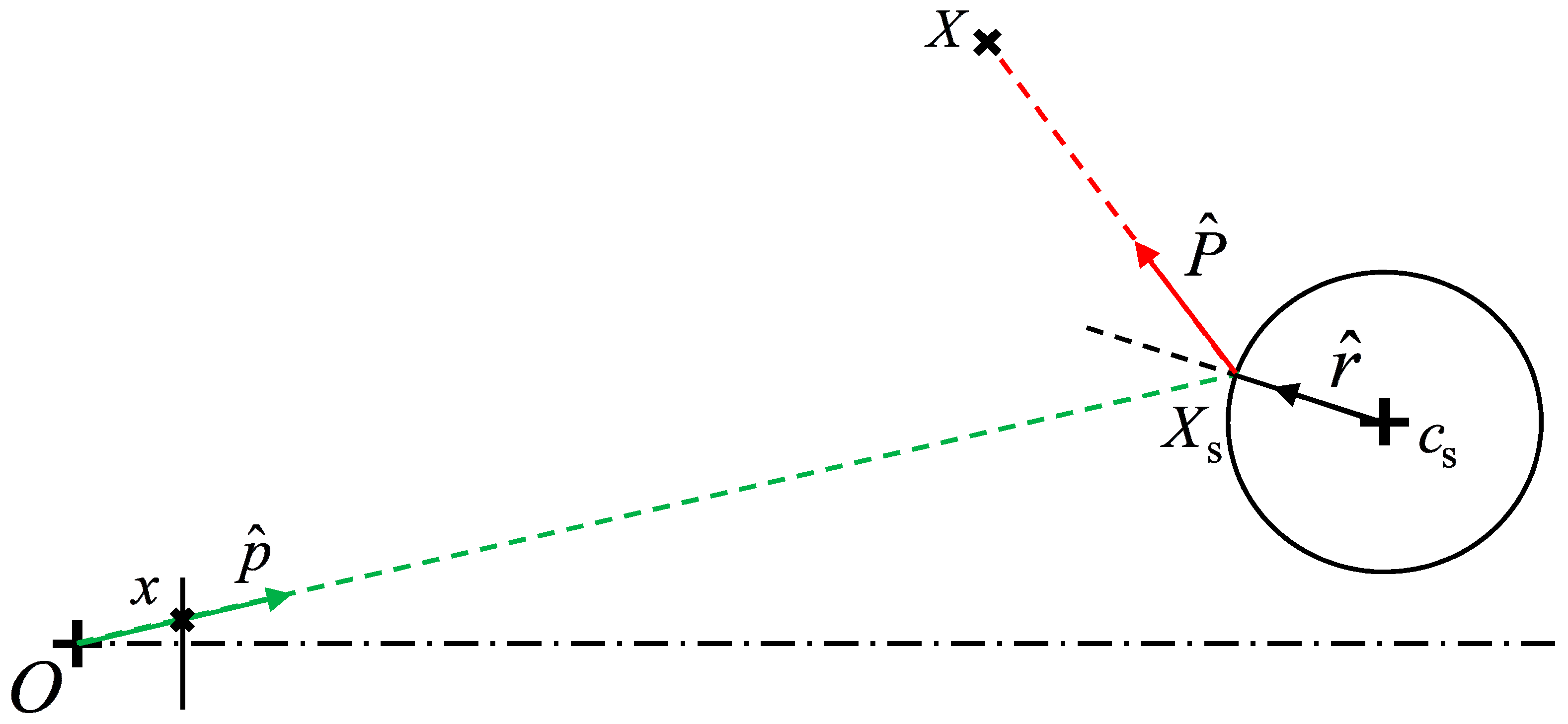

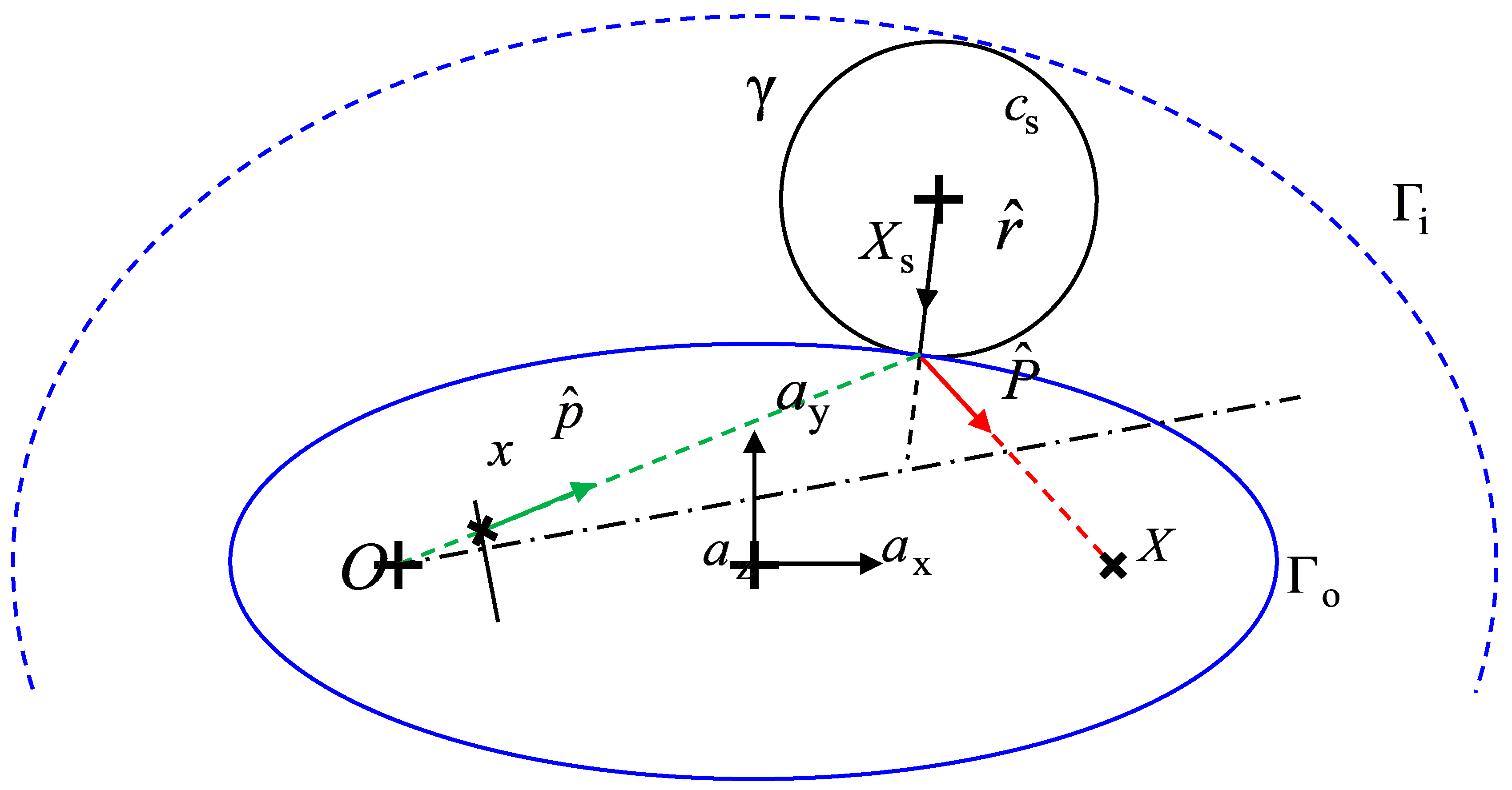

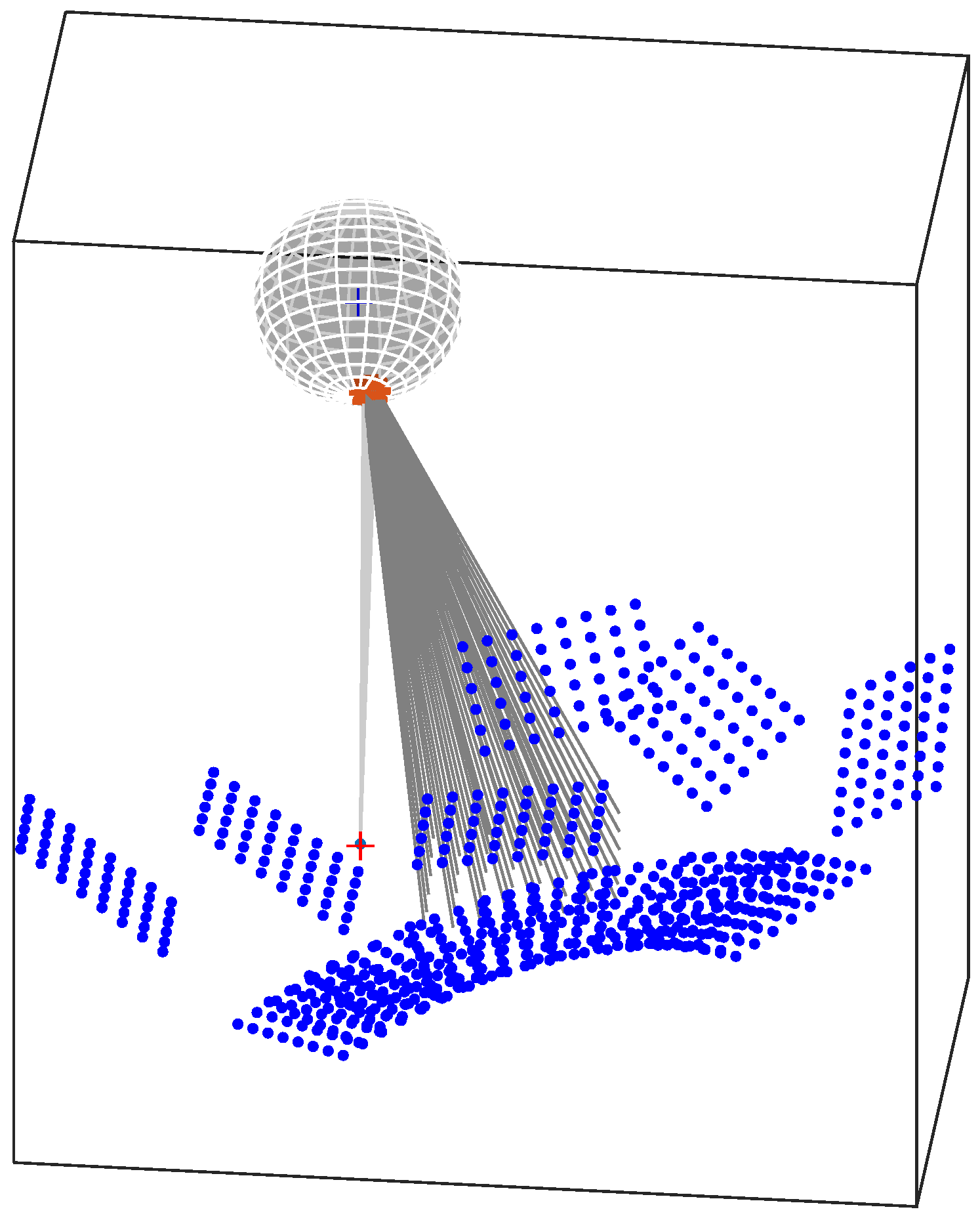

3.1. Backward Projection Model

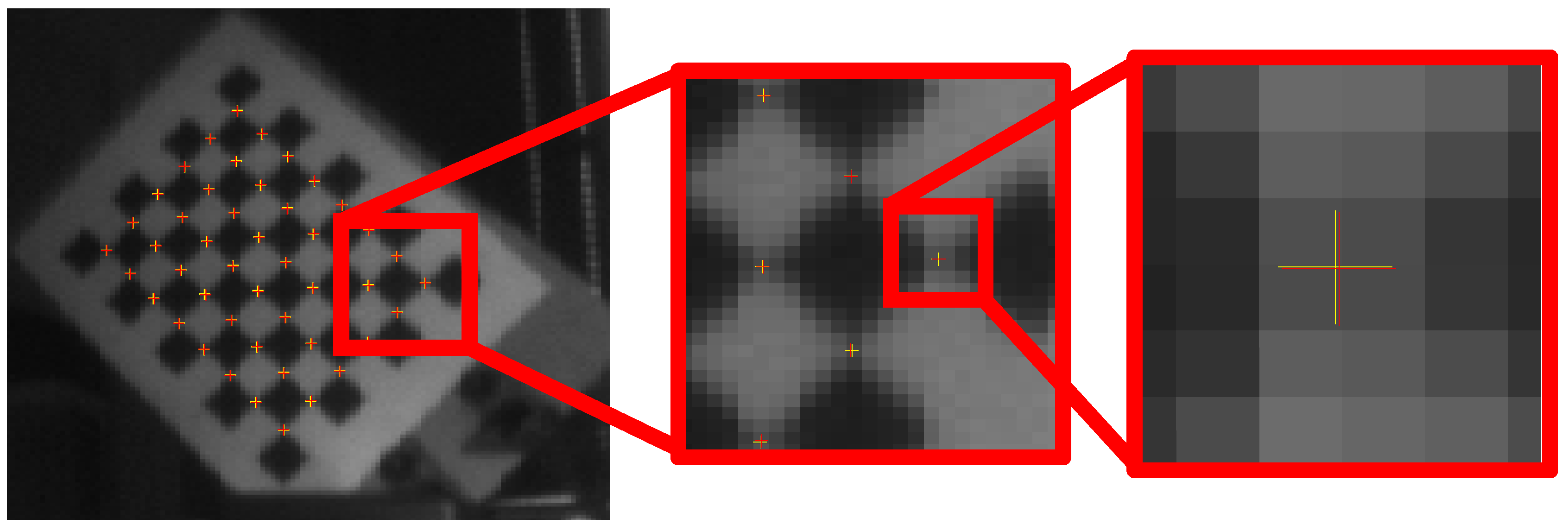

3.2. Forward Projection Model

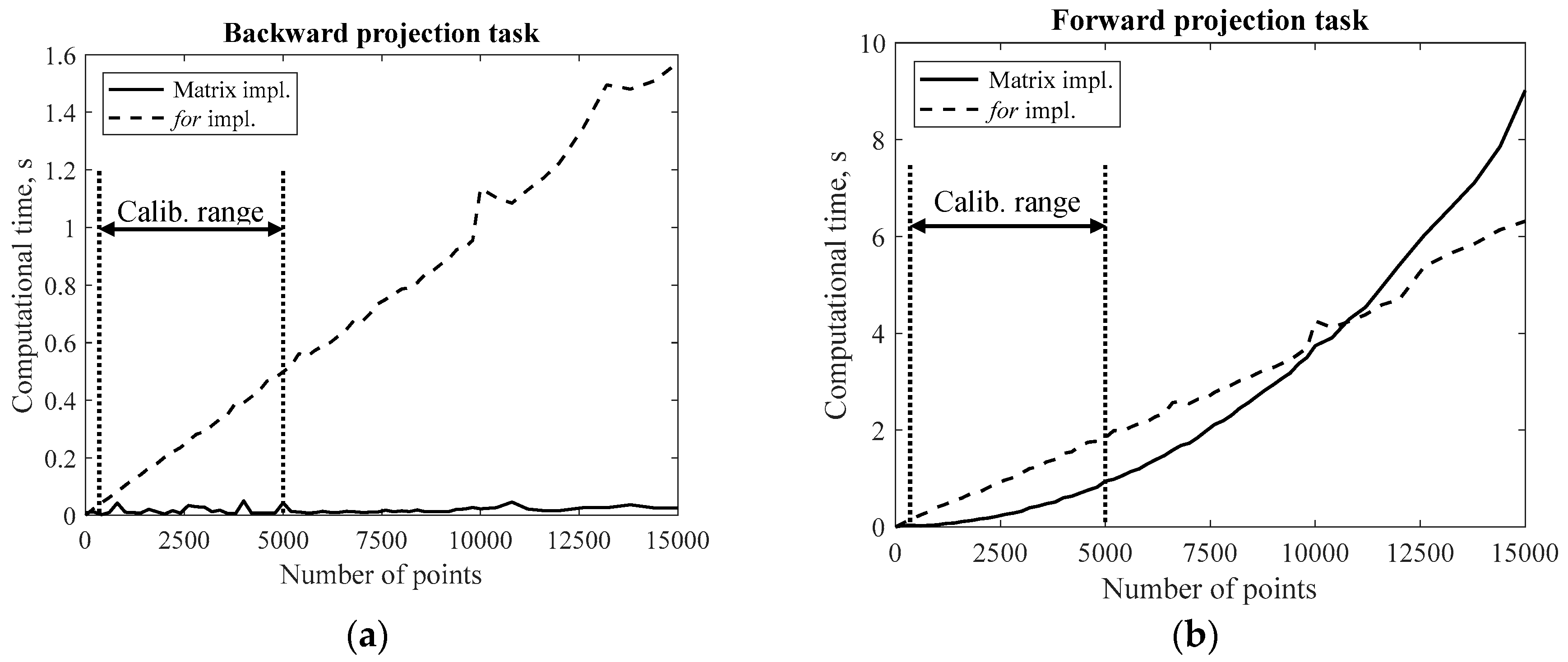

4. Software Implementation

4.1. Matrix Format

4.2. Jacobian Computation

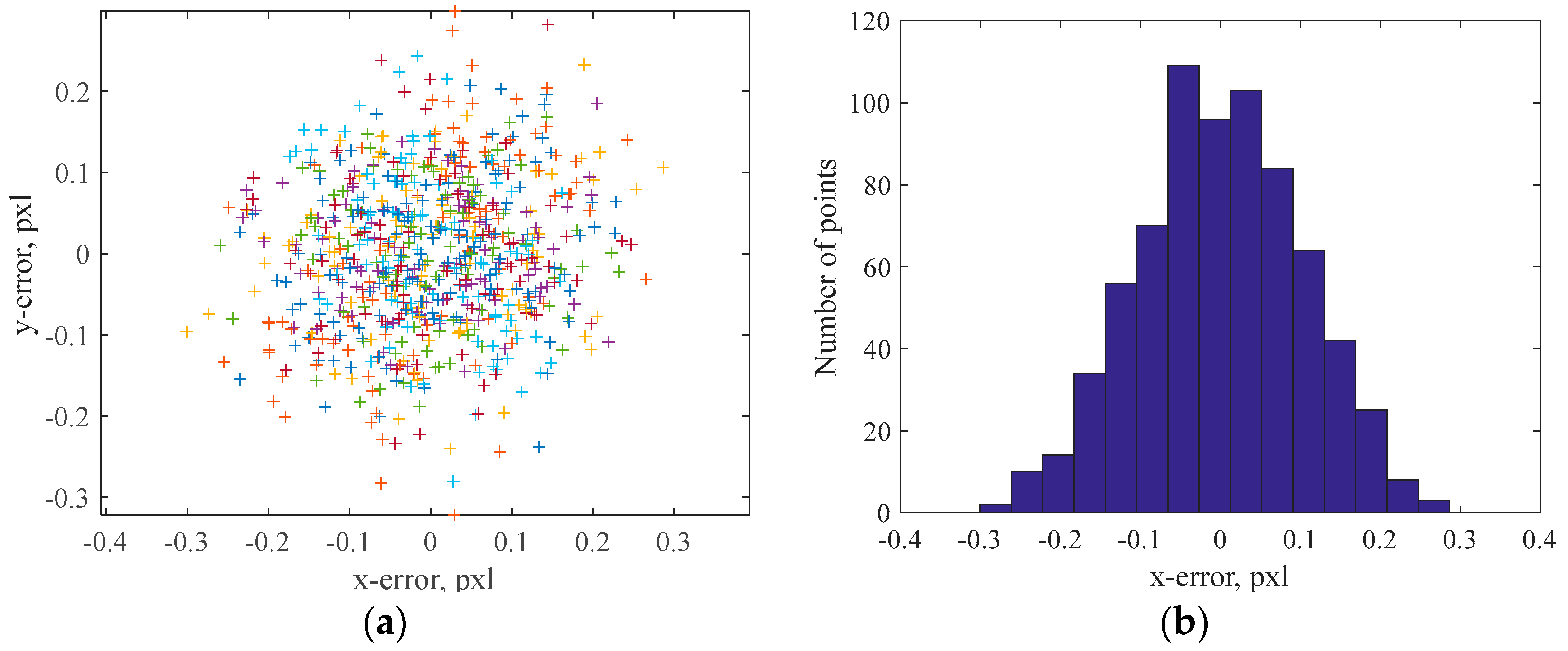

5. Validation

6. Application to Catadioptric Camera Calibration

7. Conclusions

Author Contributions

Conflicts of Interest

Appendix A

| | With Jacobian | Without Jacobian |
|---|---|---|
| Time | 27 s | 2301 s |
| Final target function | 14.3 pxl² | |
| Max rep. dist. | 0.3235 pxl | |
| Min rep. dist. | 0.0099 pxl | |
| cs | 0.1265 pxl | |
| r | [−1.9, −8.6, 284.3] mm | |

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Barone, S.; Carulli, M.; Neri, P.; Paoli, A.; Razionale, A.V. An Omnidirectional Vision Sensor Based on a Spherical Mirror Catadioptric System. Sensors 2018, 18, 408. https://doi.org/10.3390/s18020408
