Cutting Pattern Identification for Coal Mining Shearer through a Swarm Intelligence–Based Variable Translation Wavelet Neural Network

Abstract

1. Introduction

2. Basic Theory

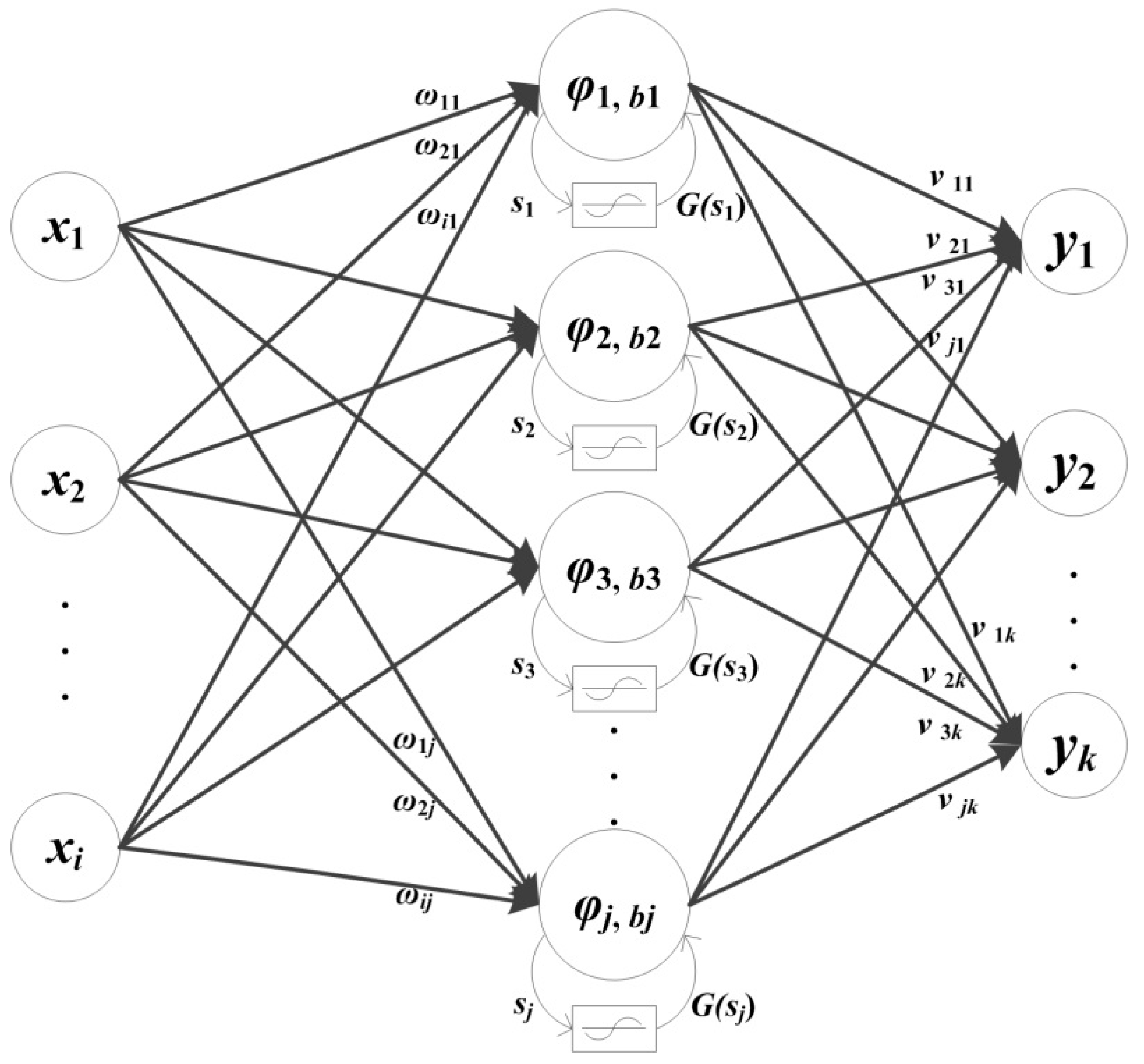

2.1. Variable Translation Wavelet Neural Network

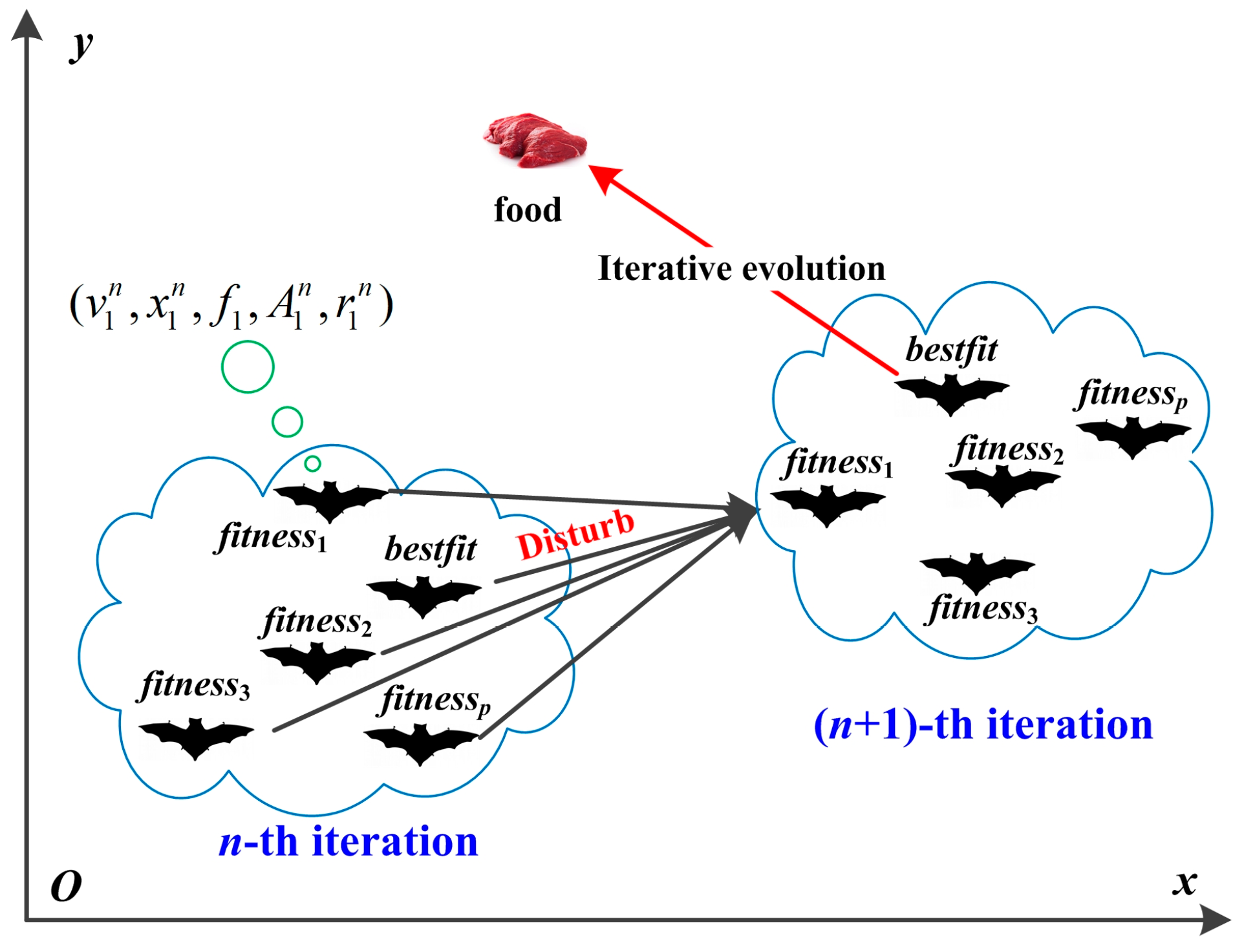

2.2. Bat Algorithm

3. Algorithm Design

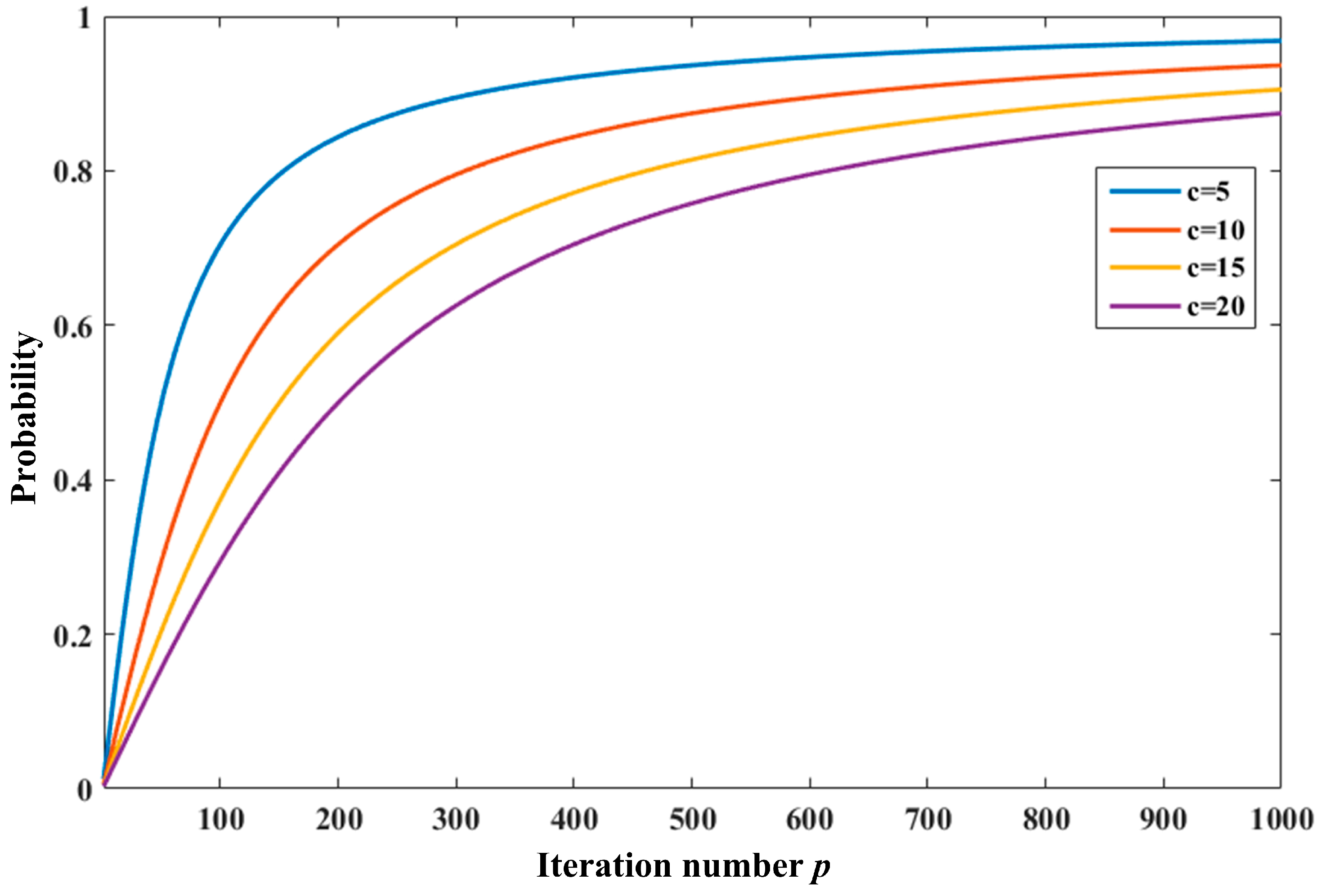

3.1. Modification of the Bat Algorithm

Algorithm 1. The pseudocode of the modified bat algorithm

- Initialize P, A, r, f, α, γ, N, c and the objective function f(·).
- Initialize the position and velocity of each bat according to Equation (7).
- n = 0.
- Evaluate the fitness of each individual and find the best position xbestindex.
- while (n < N)
  - if (f(xbestindex) remains unchanged for more than c iterations)
    - Rank the bats according to their fitness and divide them into two populations.
    - Set xbestindex as the best native bat and generate new native bats according to Equation (9).
    - if (rand(0, 1) > r)
      - Generate a new native bat according to Equation (10).
      - if (the new bat satisfies Equation (11))
        - Accept the new native bat and update the loudness and emission frequency.
      - end if
    - end if
    - Generate explorer bats randomly according to Equation (7), calculate their fitness and find the best one, xebestindex.
    - Set xebestindex as the best explorer bat and generate new explorer bats according to Equation (9).
    - if (rand(0, 1) > r)
      - Generate a new explorer bat according to Equation (10).
      - if (the new bat satisfies Equation (11))
        - Accept the new explorer bat and update the loudness and emission frequency.
      - end if
    - end if
    - Evaluate the fitness of all bats and search for the best one, x*.
    - if (f(x*) is better than f(xebestindex))
      - Accept x* as the optimum.
    - end if
  - else
    - Set xbestindex as the best bat and generate new bats according to Equation (9).
    - if (rand(0, 1) > r)
      - Generate a new bat according to Equation (10).
      - if (the new bat satisfies Equation (11))
        - Accept the new bat and update the loudness and emission frequency.
      - end if
    - end if
    - Evaluate the fitness of all bats and search for the best one, x*.
    - if (f(x*) is better than f(xebestindex))
      - Accept x* as the optimum.
    - end if
  - end if
  - Search for the current best bat.
  - n = n + 1.
- end while
- Postprocess and visualize the results.
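The control flow of Algorithm 1 can be illustrated with a minimal Python sketch. Equations (7) and (9)–(11) are not reproduced in this section, so the sketch substitutes the update rules of the standard bat algorithm (uniform random initialization, frequency-driven velocity update, random walk around the leader, loudness-gated acceptance); these forms are assumptions, not the authors' exact equations. The function name `modified_bat`, the equal split into native and explorer bats, the default parameter values and the `sphere` toy objective are likewise hypothetical choices made for illustration. In the paper the objective would be the VTWNN training error rather than a toy function.

```python
import math
import random


def modified_bat(objective, dim, bounds, n_bats=20, n_iter=200, c=10,
                 f_min=0.0, f_max=2.0, alpha=0.9, gamma=0.9, r0=0.5, seed=0):
    """Minimise `objective` over a box; returns (best_position, best_fitness)."""
    rng = random.Random(seed)
    lo, hi = bounds

    def clip(v):
        return min(max(v, lo), hi)

    def random_position():
        # Assumed form of Equation (7): uniform sampling inside the bounds.
        return [rng.uniform(lo, hi) for _ in range(dim)]

    pos = [random_position() for _ in range(n_bats)]
    vel = [[0.0] * dim for _ in range(n_bats)]
    fit = [objective(x) for x in pos]
    loud = [1.0] * n_bats   # loudness A of each bat
    rate = [r0] * n_bats    # pulse emission rate r of each bat

    def move(indices, leader, t):
        mean_loud = sum(loud) / n_bats
        for i in indices:
            # Assumed form of Equation (9): frequency, velocity, position update.
            freq = f_min + (f_max - f_min) * rng.random()
            vel[i] = [v + (x - b) * freq
                      for v, x, b in zip(vel[i], pos[i], leader)]
            pos[i] = [clip(x + v) for x, v in zip(pos[i], vel[i])]
            fit[i] = objective(pos[i])
            if rng.random() > rate[i]:
                # Assumed form of Equation (10): random walk around the leader.
                cand = [clip(b + rng.uniform(-1.0, 1.0) * mean_loud)
                        for b in leader]
                cand_fit = objective(cand)
                # Assumed form of Equation (11): accept an improving candidate
                # with probability equal to the bat's loudness.
                if cand_fit < fit[i] and rng.random() < loud[i]:
                    pos[i], fit[i] = cand, cand_fit
                    loud[i] *= alpha
                    rate[i] = r0 * (1.0 - math.exp(-gamma * t))

    best_i = min(range(n_bats), key=fit.__getitem__)
    best_x, best_f = pos[best_i][:], fit[best_i]
    stall = 0  # iterations since the best fitness last improved

    for t in range(1, n_iter + 1):
        if stall > c:
            # Stagnation: rank the bats and split them into two populations.
            order = sorted(range(n_bats), key=fit.__getitem__)
            half = n_bats // 2  # equal split is an assumption
            natives, explorers = order[:half], order[half:]
            move(natives, best_x, t)
            for i in explorers:  # explorers restart from random positions
                pos[i] = random_position()
                vel[i] = [0.0] * dim
                fit[i] = objective(pos[i])
            e_best = min(explorers, key=fit.__getitem__)
            move(explorers, pos[e_best][:], t)
        else:
            move(range(n_bats), best_x, t)

        i_star = min(range(n_bats), key=fit.__getitem__)
        if fit[i_star] < best_f:
            best_x, best_f = pos[i_star][:], fit[i_star]
            stall = 0
        else:
            stall += 1
    return best_x, best_f


def sphere(x):
    # Toy objective used only to exercise the sketch.
    return sum(v * v for v in x)


best_x, best_f = modified_bat(sphere, dim=2, bounds=(-5.0, 5.0))
print(best_x, best_f)
```

In this sketch a very large `c` means the stagnation branch never fires, so the search reduces to the unmodified bat algorithm; smaller values trigger the native/explorer split sooner.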

3.2. Flowchart of Cutting Pattern Method

4. Simulation and Analysis

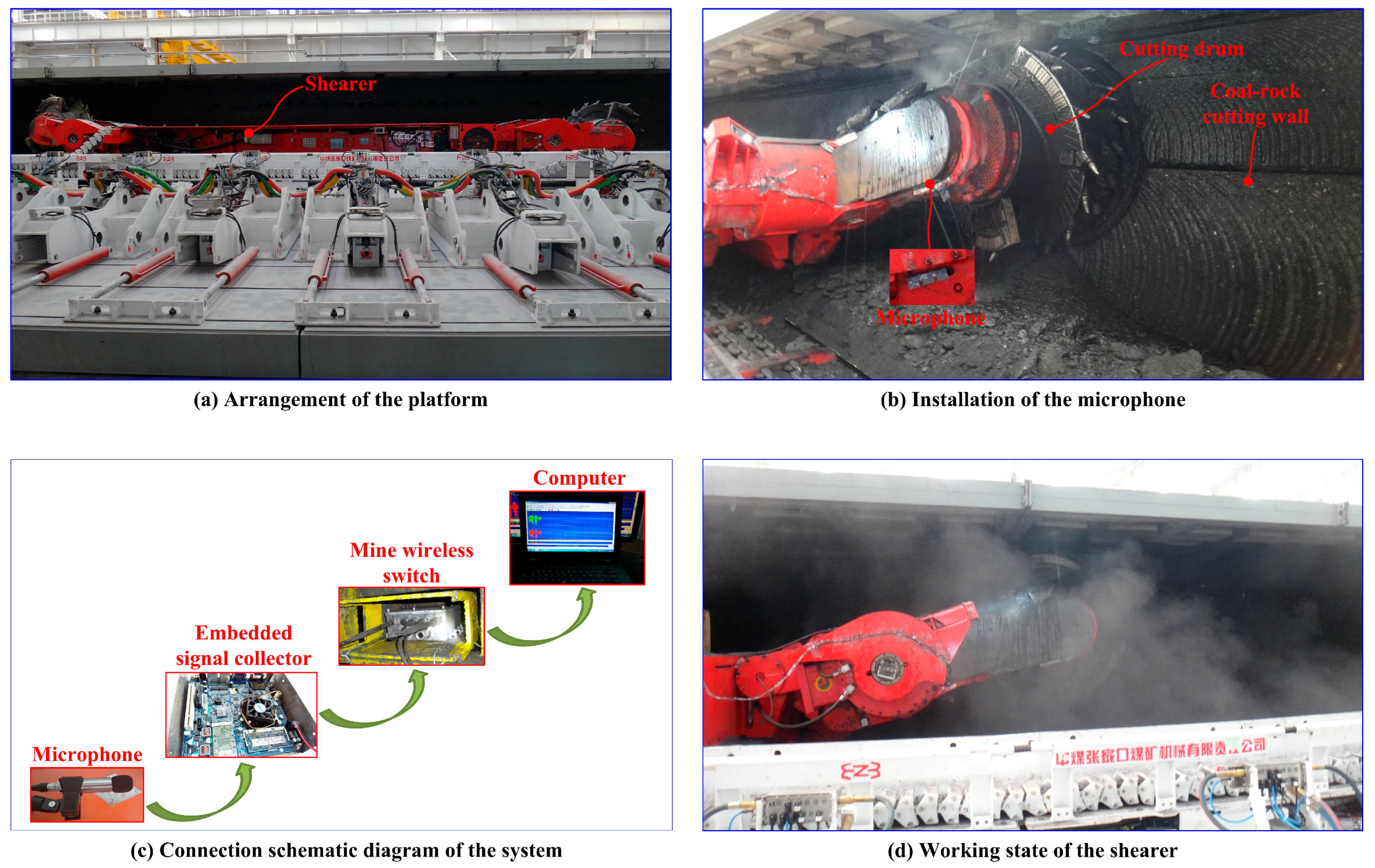

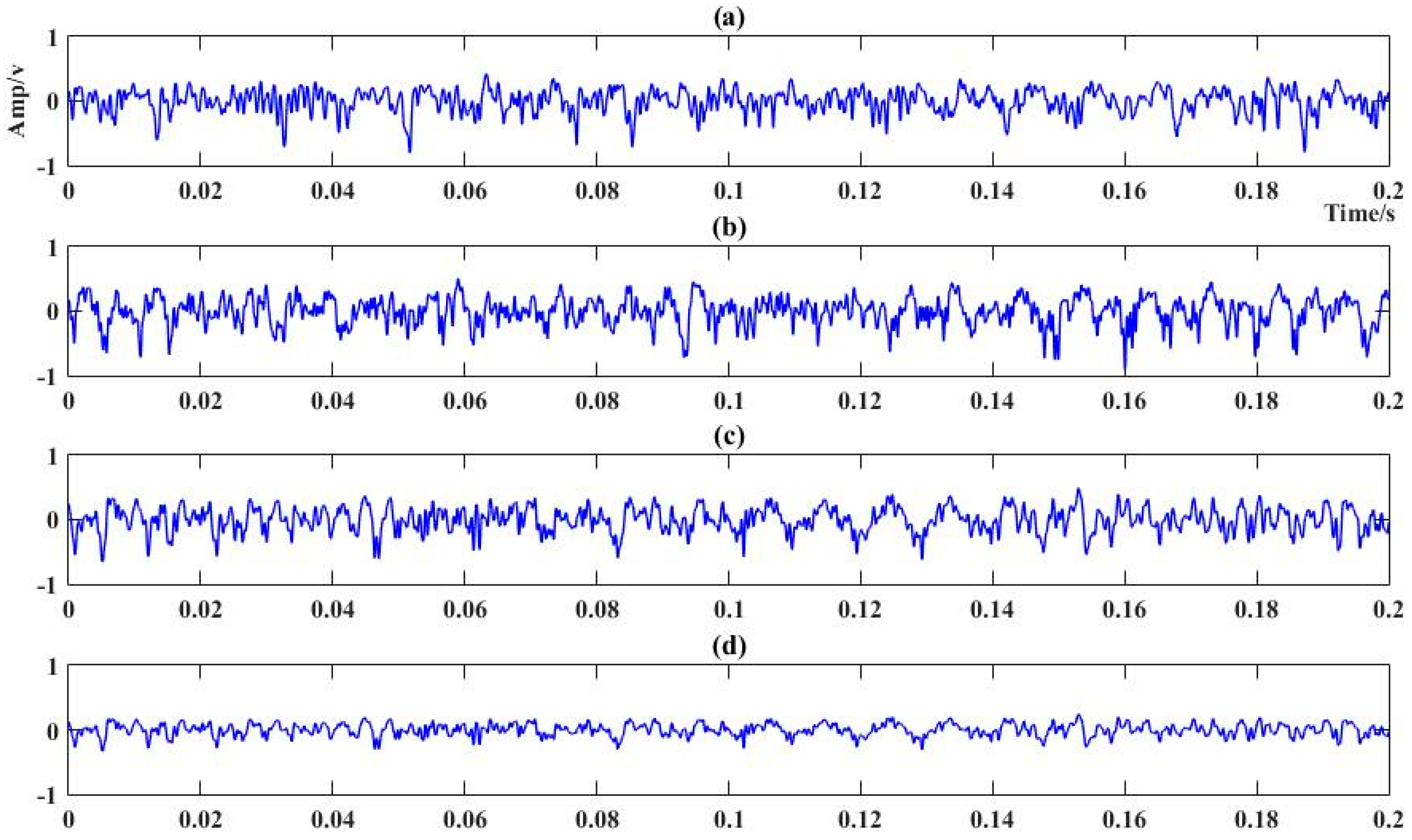

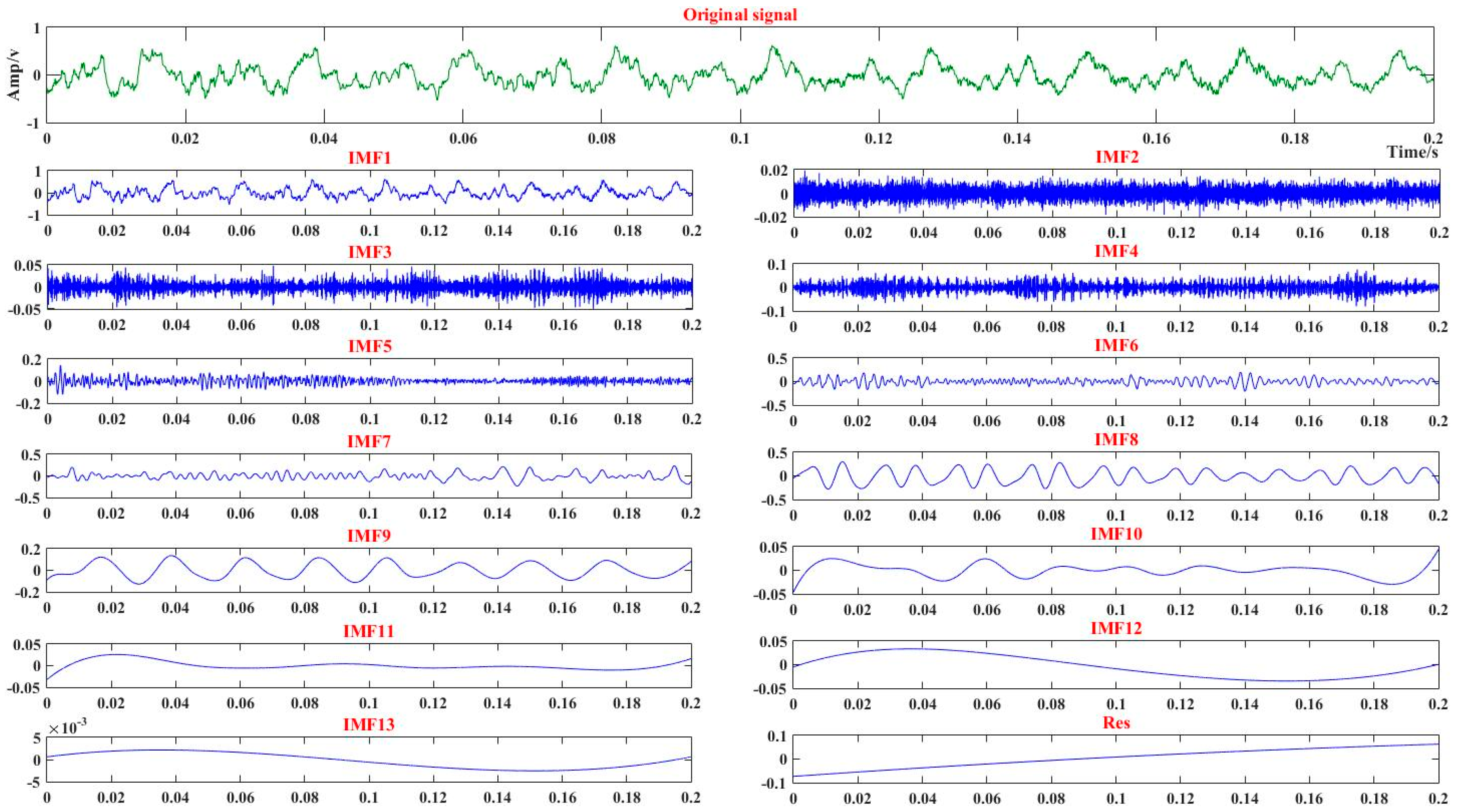

4.1. Cutting Sound Acquisition and Pretreatment

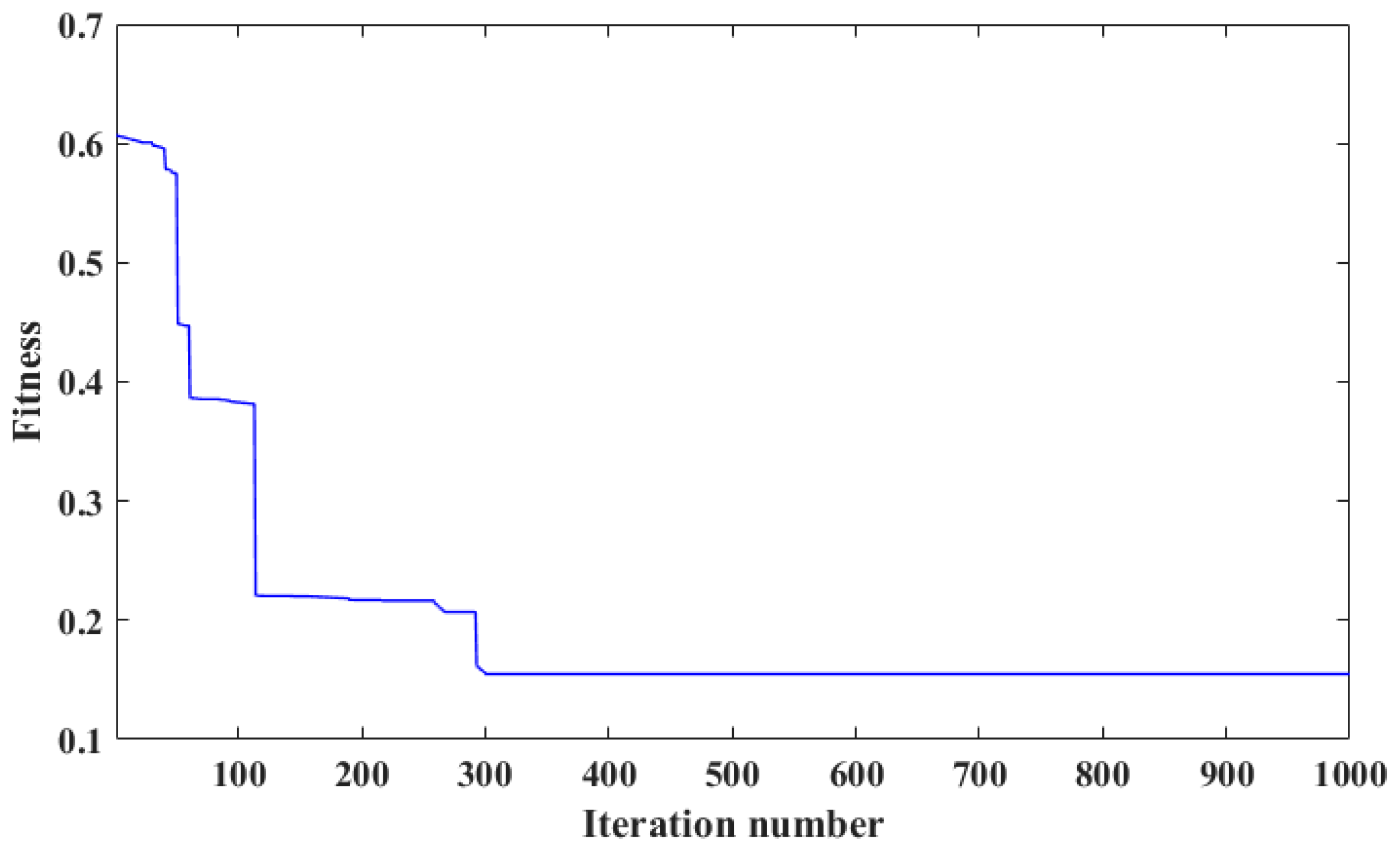

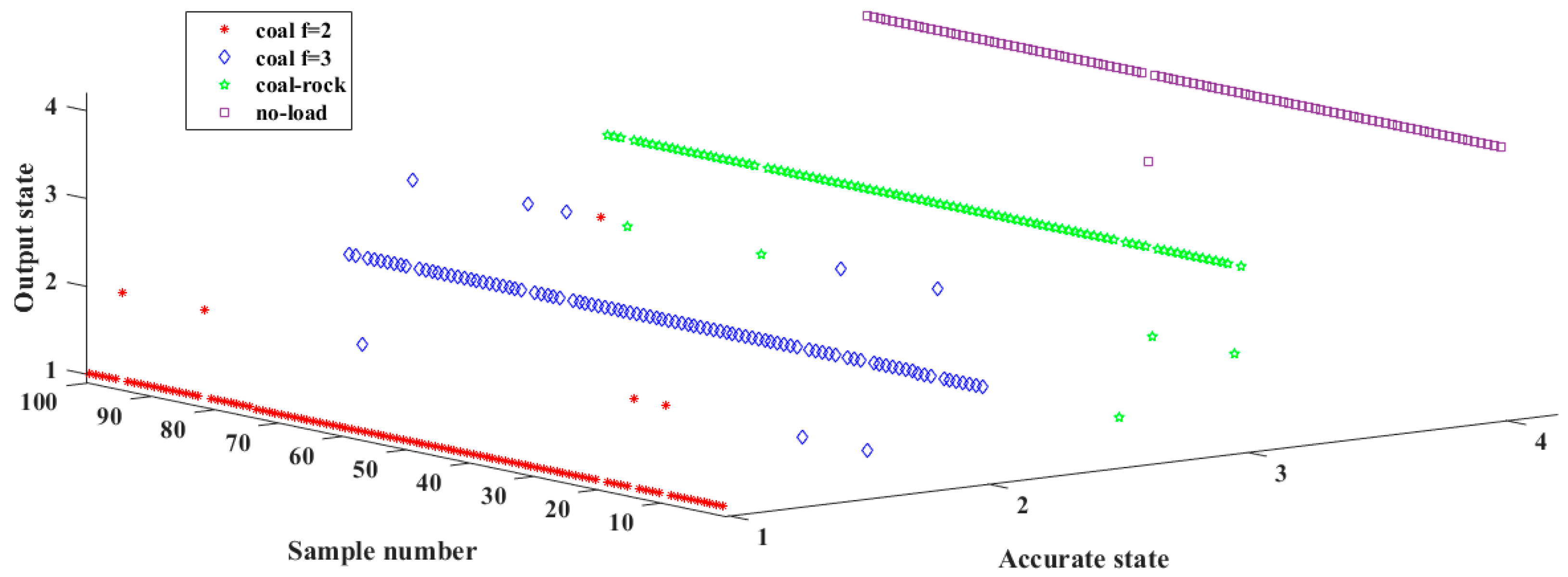

4.2. Training and Testing of the VTWNN-MBA

4.3. Comparison and Discussion

5. Conclusions and Future Work

Acknowledgments

Author Contributions

Conflicts of Interest


| Sample Number | Feature Vector |
|---|---|
| 1 | [0.493820, 0.018635, 0.002433, 0.003701, 0.001007, 0.000861, 0.000946, 0.000362, 0.000330, 0.000204, 0.000200, 0.000091, 0.000046] |
| 2 | [0.744507, 0.190640, 0.001730, 0.003545, 0.000902, 0.000844, 0.000783, 0.000187, 0.000305, 0.000197, 0.000167, 0.000080, 0.000140] |
| 3 | [0.700600, 0.081532, 0.001633, 0.004464, 0.000536, 0.000669, 0.000517, 0.000216, 0.000437, 0.000244, 0.000163, 0.000132, 0.000025] |
| 4 | [0.363571, 0.066428, 0.003079, 0.004894, 0.000692, 0.000852, 0.000895, 0.000415, 0.000399, 0.000256, 0.000155, 0.000107, 0.000003] |
| 5 | [0.480629, 0.035871, 0.009238, 0.014017, 0.001057, 0.001220, 0.003743, 0.000455, 0.000014, 0.000180, 0.000214, 0.000052, 0.000125] |
| 6 | [0.767436, 0.023610, 0.002480, 0.002233, 0.000964, 0.000818, 0.000401, 0.000157, 0.003202, 0.000255, 0.000136, 0.000823, 0.000227] |
| … | … |
| 799 | [0.772048, 0.016429, 0.021885, 0.009308, 0.002668, 0.000636, 0.000302, 0.004158, 0.000097, 0.000159, 0.001217, 0.000137, 0.000038] |
| 800 | [0.268025, 0.015486, 0.001868, 0.007008, 0.000349, 0.001086, 0.001178, 0.000568, 0.000233, 0.000230, 0.000118, 0.000140, 0.000049] |

| Disturbance Coefficient | Iteration Time (s) | Fitness Value | Recognition Accuracy |
|---|---|---|---|
| 5 | 65.962150 | 0.150311 | 95.25% |
| 10 | 64.201883 | 0.154831 | 95.25% |
| 15 | 62.193844 | 0.163709 | 94.50% |
| 25 | 62.001930 | 0.180094 | 94.25% |
| 30 | 61.003760 | 0.183762 | 92.50% |
| 1000 | 60.227091 | 0.201358 | 91.50% |

| Compared Methods | Iteration Time (s) | Fitness Value | Recognition Accuracy |
|---|---|---|---|
| BPNN | 82.675028 | 0.330370 | 78.75% |
| PNN | 89.002130 | 0.310938 | 82.50% |
| SVM | 83.309544 | 0.311052 | 82.50% |
| VTWNN | 92.395211 | 0.310279 | 84.75% |
| VTWNN-PSO | 56.009550 | 0.229624 | 87% |
| VTWNN-GA | 79.362199 | 0.160962 | 95.25% |
| VTWNN-BA | 60.227091 | 0.201358 | 91.50% |
| VTWNN-MBA | 64.201883 | 0.154831 | 95.25% |
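As a quick reading aid for the comparison table, the short Python sketch below ranks the methods using the values copied from the table. It assumes that a lower fitness value is better, which the table's trend suggests but this section does not state; the variable names are illustrative only.

```python
# (iteration time in s, fitness value, recognition accuracy in %), from the table
results = {
    "BPNN": (82.675028, 0.330370, 78.75),
    "PNN": (89.002130, 0.310938, 82.50),
    "SVM": (83.309544, 0.311052, 82.50),
    "VTWNN": (92.395211, 0.310279, 84.75),
    "VTWNN-PSO": (56.009550, 0.229624, 87.00),
    "VTWNN-GA": (79.362199, 0.160962, 95.25),
    "VTWNN-BA": (60.227091, 0.201358, 91.50),
    "VTWNN-MBA": (64.201883, 0.154831, 95.25),
}

# Methods that reach the highest recognition accuracy.
top_accuracy = max(acc for _, _, acc in results.values())
leaders = sorted(name for name, (_, _, acc) in results.items()
                 if acc == top_accuracy)

# Among those, the one with the shortest iteration time.
fastest_leader = min(leaders, key=lambda name: results[name][0])

# Method with the lowest fitness value (assumed to be an error measure).
lowest_fitness = min(results, key=lambda name: results[name][1])

# Relative time saving of VTWNN-MBA over VTWNN-GA at equal accuracy.
ga_time = results["VTWNN-GA"][0]
mba_time = results["VTWNN-MBA"][0]
time_saving = (ga_time - mba_time) / ga_time

print(leaders, fastest_leader, lowest_fitness, round(time_saving, 3))
```

On these figures VTWNN-GA and VTWNN-MBA share the top accuracy of 95.25%, and VTWNN-MBA reaches it in roughly 19% less iteration time.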

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Xu, J.; Wang, Z.; Tan, C.; Si, L.; Liu, X. Cutting Pattern Identification for Coal Mining Shearer through a Swarm Intelligence–Based Variable Translation Wavelet Neural Network. Sensors 2018, 18, 382. https://doi.org/10.3390/s18020382
