Towards the Use of Unmanned Aerial Systems for Providing Sustainable Services in Smart Cities

Abstract

1. Introduction

2. Related Works

3. Background

3.1. Sustainability in Software Development

- Individual sustainability refers to private goods and individual human capital.

- Social sustainability relates to societal communities (mainly based on solidarity).

- Economic sustainability refers to assets, capital and, in general, the added value achieved by improving sustainability in a particular context.

- Environmental sustainability includes those activities performed to improve human welfare by protecting natural resources.

- Technical sustainability relates to the long-term use of software systems and their adequate evolution over time.

3.2. Unmanned Aerial Systems

4. Necessities for a Sustainable UAS Architecture

- Step 1. Systematic Mapping Study (SMS): A systematic mapping study is carried out to identify a representative collection of case studies and areas where UAS are being used.

  - Output: Fields’ categorization: As a result of this step, the case studies are classified according to a particular categorization.

- Step 2. Feature analysis: A systematic analysis of the features required in each case study is performed.

  - Outputs:

    - Features taxonomy: A new taxonomy in which each feature is defined in detail. It represents the whole set of features present across the case studies.

    - Features vs. case studies matching table: A table summarizing the features required in each case study (grouped into the different categories).

- Step 3. Features vs. UAS matching: Based on an analysis of the UAS used in each case study and of those most frequently commercialized, the features identified in the case studies are compared with those provided by the UAS to check whether the UAS can provide them.

  - Output: A table showing the features provided by each UAS analyzed (both those used in the case studies and other commercial ones).
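The comparison in Step 3 can be read as a set operation: a UAS covers a case study when every required feature is among the features it provides. The following is a minimal Python sketch under that reading. The class names, function names and sample data are hypothetical and are not taken from the study; only the feature names come from the taxonomy.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class CaseStudy:
    """A case study described by the features it requires (hypothetical model)."""
    name: str
    required: frozenset[str]


@dataclass(frozen=True)
class UAS:
    """A UAS described by the features it provides (hypothetical model)."""
    name: str
    provided: frozenset[str]


def missing_features(case: CaseStudy, uas: UAS) -> frozenset[str]:
    """Return the required features that the UAS does not provide."""
    return case.required - uas.provided


def matching_table(cases: list[CaseStudy], fleet: list[UAS]) -> dict[tuple[str, str], bool]:
    """Map each (case study, UAS) pair to True when no required feature is missing."""
    return {
        (case.name, uas.name): not missing_features(case, uas)
        for case in cases
        for uas in fleet
    }


# Illustrative data only; these are not rows from the paper's tables.
survey = CaseStudy(
    "hypothetical crop survey",
    frozenset({"Storage capacity", "Route planning software"}),
)
basic = UAS("hypothetical UAS A", frozenset({"Storage capacity"}))
planner = UAS(
    "hypothetical UAS B",
    frozenset({"Storage capacity", "Route planning software", "Adaptability"}),
)
table = matching_table([survey], [basic, planner])
```

Keeping the missing features as a set, rather than only a yes/no flag, makes it possible to report which feature prevents a given UAS from being used in a given case study.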

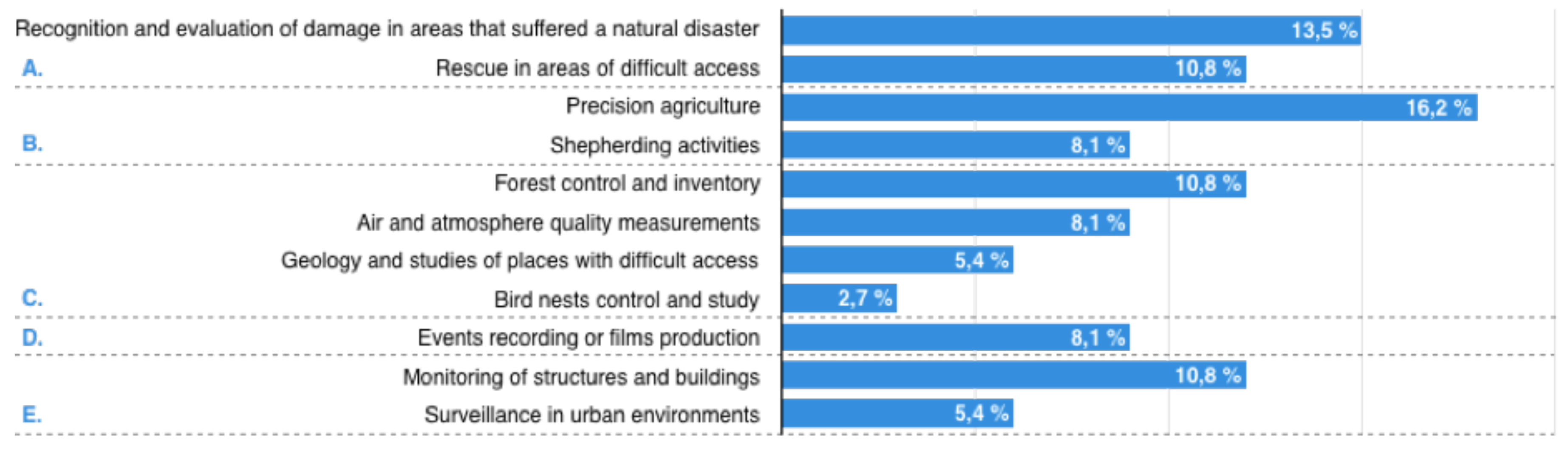

4.1. Features Required in Case Studies

- (UAS OR drone OR UAV OR RPA OR “unmanned aerial vehicle” OR “unmanned aerial system” OR “remotely piloted aircraft”) (RQ1)

- (“Case study” OR empirical OR experiment) (RQ2)

- (Feature OR property OR characteristic) (RQ3)

- (“Software engineering” OR algorithm OR method OR framework OR technology OR tool OR architecture OR system) (RQ1, RQ2 and RQ3)
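The term groups above can be assembled into a single search string programmatically. The sketch below assumes the groups are joined with AND, which is the usual convention in systematic mapping studies but is not stated explicitly here. The function names are hypothetical, and straight quotes replace the typographic ones.

```python
def or_group(terms: list[str]) -> str:
    """Join terms with OR, quoting multi-word phrases, and wrap them in parentheses."""
    quoted = [f'"{t}"' if " " in t else t for t in terms]
    return "(" + " OR ".join(quoted) + ")"


def build_query(groups: list[list[str]]) -> str:
    """Combine OR-groups with AND (assumed combination rule)."""
    return " AND ".join(or_group(g) for g in groups)


UAS_TERMS = [
    "UAS", "drone", "UAV", "RPA",
    "unmanned aerial vehicle", "unmanned aerial system", "remotely piloted aircraft",
]
STUDY_TERMS = ["Case study", "empirical", "experiment"]
FEATURE_TERMS = ["Feature", "property", "characteristic"]
SE_TERMS = [
    "Software engineering", "algorithm", "method", "framework",
    "technology", "tool", "architecture", "system",
]

query = build_query([UAS_TERMS, STUDY_TERMS, FEATURE_TERMS, SE_TERMS])
```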

- A. Disasters and emergency: This refers to the occurrence of a fateful event that alters the usual behavior of the environment. The main activities related to this category are:

- B. Agriculture and cattle raising: activities that are performed to grow crops or raise animals with the aim of obtaining either products to be consumed by humans and other animals or raw materials for industry. The activities included in this category are:

- C. Environmental control: tasks related to the inspection, surveillance and techniques applied to decrease or avoid any type of damage to the environment, in general, or to a specific ecosystem. Some examples are:

- D. Audiovisual and entertainment: These refer to activities related to the integration of audio and visual techniques to produce audiovisual products (montages, recordings, films, etc.):

- E. Surveillance and security: activities related to the integration of audio and visual techniques to produce audiovisual products (montages, recordings, films, etc.):

4.2. Features Provided by UAS

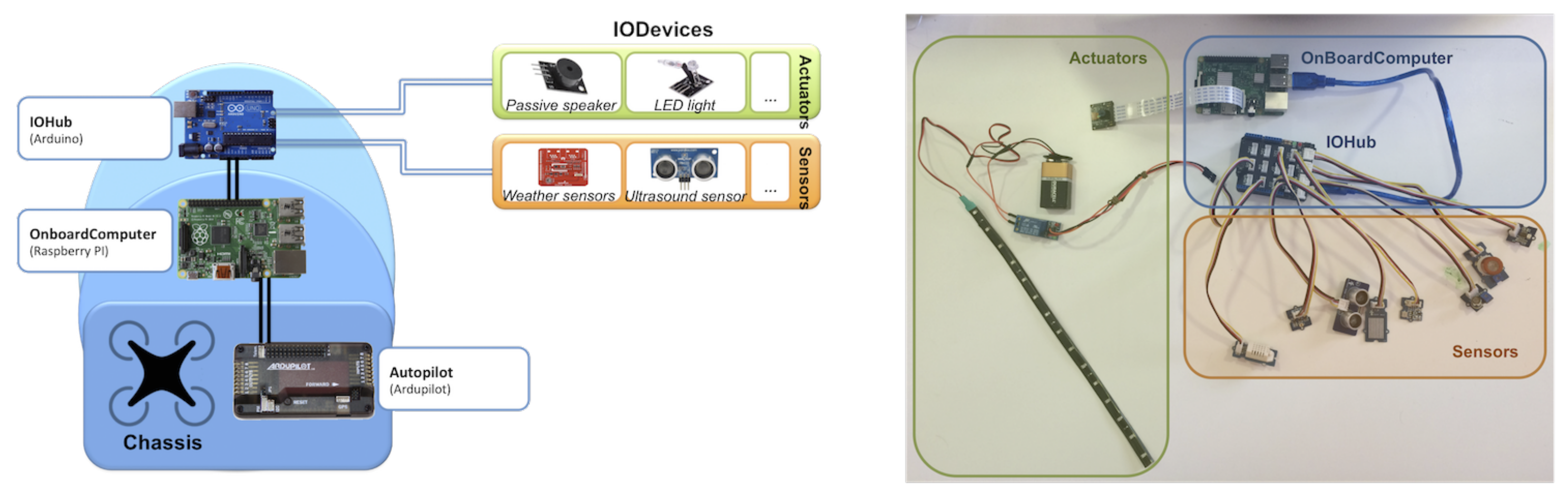

5. Our Approach: A General Multipurpose UAS Architecture

5.1. AutoPilot

5.2. OnBoardComputer

5.3. IOHub

5.4. DSL

- Specify the devices that will compose the hardware architecture (image/ranging sensors, actuators, and so on). An initial catalog of devices is included within the DSL (e.g., GoPro Hero 3, Asus Xtion Pro Live or HC-SR04, to name a few).

- Based on this specification, the DSL also allows one to include restrictions, such as maximum weight, distance, etc.

- It is also possible to check that the types of connections among devices are correct.

- Once a hardware implementation has been defined, code generators automatically generate the skeleton of the code that is embedded on those devices.

- The DSL also allows one to program the flight plan and the actions to be carried out by the UAS, also generating the necessary code for each of the devices.

- Finally, the DSL generates the documentation needed to comply with the operation registration process required by the law of the country where the work will be carried out (a few countries have been initially considered just to validate the proposal).
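The restriction and connection checks described above can be illustrated with a small model. This is only a sketch in Python, not the DSL itself, whose syntax is not shown here. The class names, the `interface` labels, the weights and the weight limit are all hypothetical placeholder values rather than datasheet figures.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Device:
    """A catalog entry (hypothetical model)."""
    name: str
    weight_g: float   # placeholder weight, not a datasheet figure
    interface: str    # illustrative label such as "usb" or "gpio"


@dataclass(frozen=True)
class Connection:
    """A device plugged into a hub port offering a given interface."""
    device: Device
    port_interface: str


def check_payload(devices: list[Device], max_weight_g: float) -> list[str]:
    """Return an error message if the total device weight exceeds the limit."""
    total = sum(d.weight_g for d in devices)
    if total > max_weight_g:
        return [f"payload {total:.0f} g exceeds limit {max_weight_g:.0f} g"]
    return []


def check_connections(connections: list[Connection]) -> list[str]:
    """Return one error message per device attached to an incompatible port."""
    return [
        f"{c.device.name}: {c.device.interface} device on {c.port_interface} port"
        for c in connections
        if c.device.interface != c.port_interface
    ]


# Illustrative values only.
sonar = Device("HC-SR04", 10.0, "gpio")
camera = Device("GoPro Hero 3", 80.0, "usb")

payload_errors = check_payload([sonar, camera], max_weight_g=50.0)
wiring_errors = check_connections([Connection(sonar, "usb"), Connection(camera, "usb")])
```

Returning lists of messages instead of raising on the first problem lets a specification be validated in one pass, so that all violated restrictions are reported before any code generation step runs.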

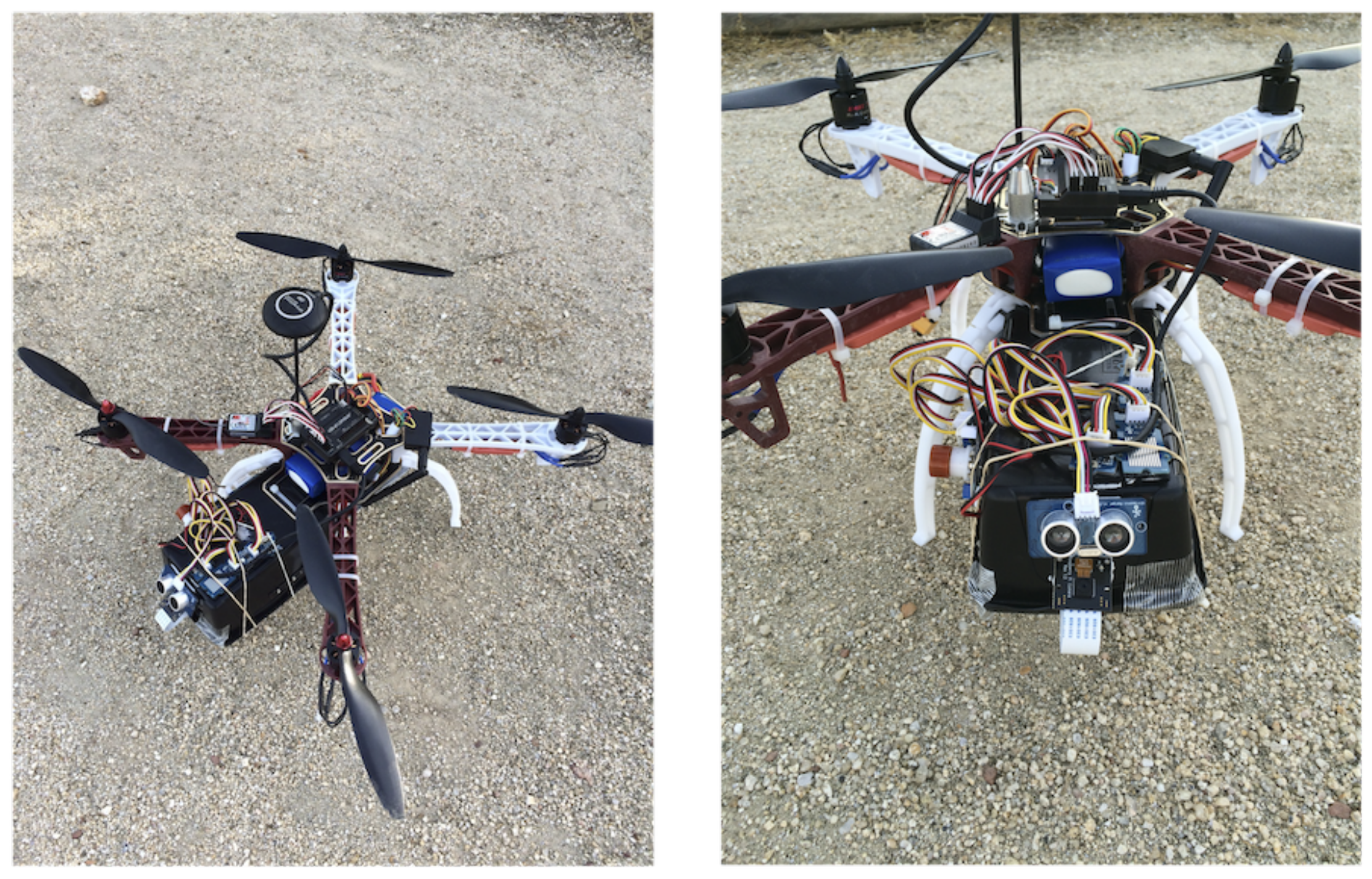

6. Implementation: An Instance of the Architecture

6.1. Chassis

6.2. AutoPilot

6.3. OnBoardComputer

6.4. IOHub

6.5. Final Assembly

6.6. Validation

7. Conclusions

Supplementary Materials

Supplementary File 1

Acknowledgments

Author Contributions

Conflicts of Interest

References

- Kudva, S.; Ye, X. Smart Cities, Big Data, and Sustainability Union. Big Data Cogn. Comput. 2017, 1, 4.

- Brenna, M.; Falvo, M.; Foiadelli, F.; Martirano, L.; Massaro, F.; Poli, D.; Vaccaro, A. Challenges in energy systems for the smart-cities of the future. In Proceedings of the 2012 IEEE International Energy Conference and Exhibition (ENERGYCON), Florence, Italy, 9–12 September 2012; pp. 755–762.

- Pellicer, S.; Santa, G.; Bleda, A.L.; Maestre, R.; Jara, A.J.; Skarmeta, A.G. A Global Perspective of Smart Cities: A Survey. In Proceedings of the 2013 Seventh International Conference on Innovative Mobile and Internet Services in Ubiquitous Computing, Taichung, Taiwan, 3–5 July 2013; pp. 439–444.

- Coopmans, C. Cyber-Physical Systems Enabled By Unmanned Aerial System-Based Personal Remote Sensing: Data Mission Quality-Centric Design Architectures; Utah State University: Logan, UT, USA, 2014.

- Coopmans, C.; Stark, B.; Jensen, A.; Chen, Y.Q.; McKee, M. Cyber-Physical Systems Enabled by Small Unmanned Aerial Vehicles. In Handbook of Unmanned Aerial Vehicles; Springer: Dordrecht, The Netherlands, 2015; pp. 2835–2860.

- Kotsev, A.; Schade, S.; Craglia, M.; Gerboles, M.; Spinelle, L.; Signorini, M. Next Generation Air Quality Platform: Openness and Interoperability for the Internet of Things. Sensors 2016, 16, 403.

- Hancke, G.; Silva, B.; Hancke, G., Jr. The Role of Advanced Sensing in Smart Cities. Sensors 2012, 13, 393–425.

- Perera, C.; Zaslavsky, A.; Christen, P.; Georgakopoulos, D. Sensing as a service model for smart cities supported by Internet of Things. Trans. Emerg. Telecommun. Technol. 2014, 25, 81–93.

- Giyenko, A.; Cho, Y.I. Intelligent Unmanned Aerial Vehicle Platform for Smart Cities. In Proceedings of the 2016 Joint 8th International Conference on Soft Computing and Intelligent Systems (SCIS) and 17th International Symposium on Advanced Intelligent Systems (ISIS), Sapporo, Japan, 25–28 August 2016; pp. 729–733.

- Chao, H.; Jensen, A.M.; Han, Y.; Chen, Y.; McKee, M. AggieAir: Towards Low-cost Cooperative Multispectral Remote Sensing Using Small Unmanned Aircraft Systems. In Advances in Geoscience and Remote Sensing; Jedlovec, G., Ed.; InTech: Vienna, Austria, 2009.

- Chao, H.; Chen, Y. Remote Sensing and Actuation Using Networked Unmanned Vehicles; John Wiley & Sons: New York, NY, USA, 2012; p. 198.

- Stark, B.; Smith, B.; Chen, Y. Survey of thermal infrared remote sensing for Unmanned Aerial Systems. In Proceedings of the 2014 International Conference on Unmanned Aircraft Systems (ICUAS), Orlando, FL, USA, 27–30 May 2014; pp. 1294–1299.

- Anca, P.; Calugaru, A.; Alixandroae, I.; Nazarie, R. A Workflow for UAV’s Integration Into a Geodesign Platform. Int. Arch. Photogramm. Remote Sens. Spatial Inf. Sci. 2016, XLI-B1, 1099–1103.

- Chessa, S.; Girolami, M.; Mavilia, F.; Dini, G.; Perazzo, P.; Rasori, M. Sensing the cities with social-aware unmanned aerial vehicles. In Proceedings of the 2017 IEEE Symposium on Computers and Communications (ISCC), Heraklion, Greece, 3–6 July 2017; pp. 278–283.

- Schmidt, G.T. Navigation sensors and systems in GNSS degraded and denied environments. Chin. J. Aeronaut. 2015, 28, 1–10.

- Chowdhary, G.; Johnson, E.N.; Magree, D.; Wu, A.; Shein, A. GPS-denied Indoor and Outdoor Monocular Vision Aided Navigation and Control of Unmanned Aircraft. J. Field Robot. 2013, 30, 415–438.

- Chaves, S.M.; Wolcott, R.W.; Eustice, R.M. NEEC Research: Toward GPS-denied Landing of Unmanned Aerial Vehicles on Ships at Sea. Nav. Eng. J. 2015, 127, 23–35.

- Kong, W.; Hu, T.; Zhang, D.; Shen, L.; Zhang, J. Localization Framework for Real-Time UAV Autonomous Landing: An On-Ground Deployed Visual Approach. Sensors 2017, 17, 1437.

- Kapoor, R.; Ramasamy, S.; Gardi, A.; Sabatini, R. A bio-inspired acoustic sensor system for UAS navigation and tracking. In Proceedings of the 2017 IEEE/AIAA 36th Digital Avionics Systems Conference (DASC), St. Petersburg, FL, USA, 17–21 September 2017; pp. 1–7.

- Grenzdorffer, G.; Engel, A.; Teichert, B. The Photogrammetric Potential of Low-Cost UAVs in Forestry and Agriculture. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2008, 31, 1207–1214.

- Kontitsis, M.; Valavanis, K.; Tsourveloudis, N. A UAV vision system for airborne surveillance. In Proceedings of the 2004 IEEE International Conference on Robotics and Automation, New Orleans, LA, USA, 26 April–1 May 2004; Volume 1, pp. 77–83.

- Küng, O.; Strecha, C.; Beyeler, A.; Zufferey, J.C.; Floreano, D.; Fua, P.; Gervaix, F. The Accuracy of Automatic Photogrammetric Techniques on Ultra-light UAV Imagery. Presented at UAV-g 2011—Unmanned Aerial Vehicle in Geomatics, Zürich, Switzerland, 14–16 September 2011.

- Rossi, M.; Brunelli, D.; Adami, A.; Lorenzelli, L.; Menna, F.; Remondino, F. Gas-Drone: Portable gas sensing system on UAVs for gas leakage localization. In Proceedings of the 2014 IEEE SENSORS, Valencia, Spain, 2–5 November 2014; pp. 1431–1434.

- VOC and Gas Detection Drones—Unmanned Aircraft Systems, 2017. Available online: https://www.aerialtronics.com/voc-gas-detection-drones/ (accessed on 22 December 2017).

- Gomes, P.; Santana, P.; Barata, J. A Vision-Based Approach to Fire Detection. Int. J. Adv. Robot. Syst. 2014, 11, 149.

- Huang, J.L.; Cai, W.Y. UAV Low Altitude Marine Monitoring System. In Proceedings of the 2014 International Conference on Wireless Communication and Sensor Network, Wuhan, China, 13–14 December 2014; pp. 61–64.

- Lin, A.Y.M.; Novo, A.; Har-Noy, S.; Ricklin, N.D.; Stamatiou, K. Combining GeoEye-1 Satellite Remote Sensing, UAV Aerial Imaging, and Geophysical Surveys in Anomaly Detection Applied to Archaeology. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2011, 4, 870–876.

- Quater, P.B.; Grimaccia, F.; Leva, S.; Mussetta, M.; Aghaei, M. Light Unmanned Aerial Vehicles (UAVs) for Cooperative Inspection of PV Plants. IEEE J. Photovolt. 2014, 4, 1107–1113.

- Teixeira, J.M.; Ferreira, R.; Santos, M.; Teichrieb, V. Teleoperation Using Google Glass and AR.Drone for Structural Inspection. In Proceedings of the 2014 XVI Symposium on Virtual and Augmented Reality, Piata Salvador, Brazil, 12–15 May 2014; pp. 28–36.

- Katrasnik, J.; Pernus, F.; Likar, B. A Survey of Mobile Robots for Distribution Power Line Inspection. IEEE Trans. Power Deliv. 2010, 25, 485–493.

- Samad, A.M.; Kamarulzaman, N.; Hamdani, M.A.; Mastor, T.A.; Hashim, K.A. The potential of Unmanned Aerial Vehicle (UAV) for civilian and mapping application. In Proceedings of the 2013 IEEE 3rd International Conference on System Engineering and Technology, Shah Alam, Malaysia, 19–20 August 2013; pp. 313–318.

- Blair-Smith, H. Aviation mandates in an automated fossil-free century. In Proceedings of the 2015 IEEE/AIAA 34th Digital Avionics Systems Conference (DASC), Prague, Czech Republic, 13–17 September 2015; pp. 9A3-1–9A3-9.

- Penzenstadler, B.; Femmer, H. A generic model for sustainability with process- and product-specific instances. In Workshop on Green In/by Software Engineering—GIBSE; ACM Press: New York, NY, USA, 2013; p. 3.

- Rojas, M.; Alexander, J.; Malaver, A.; Gonzalez, F.; Motta, N.; Depari, A.; Corke, P. Towards the Development of a Gas Sensor System for Monitoring Pollutant Gases in the Low Troposphere Using Small Unmanned Aerial Vehicles. In Proceedings of the Workshop on Robotics for Environmental Monitoring, Camperdown, Sydney, Australia, 11 July 2012.

- Han, J.; Xu, Y.; Di, L.; Chen, Y. Low-cost Multi-UAV Technologies for Contour Mapping of Nuclear Radiation Field. J. Intell. Robot. Syst. 2013, 70, 401–410.

- Scherer, J.; Rinner, B.; Yahyanejad, S.; Hayat, S.; Yanmaz, E.; Andre, T.; Khan, A.; Vukadinovic, V.; Bettstetter, C.; Hellwagner, H. An Autonomous Multi-UAV System for Search and Rescue. In Proceedings of the First Workshop on Micro Aerial Vehicle Networks, Systems, and Applications for Civilian Use, Florence, Italy, 18–22 May 2015; ACM Press: New York, NY, USA, 2015; pp. 33–38.

- Agcayazi, M.T.; Cawi, E.; Jurgenson, A.; Ghassemi, P.; Cook, G. ResQuad: Toward a semi-autonomous wilderness search and rescue unmanned aerial system. In Proceedings of the 2016 International Conference on Unmanned Aircraft Systems (ICUAS), Arlington, VA, USA, 7–10 June 2016; pp. 898–904.

- Mohammed, F.; Idries, A.; Mohamed, N.; AlJaroodi, J.; Jawhar, I. UAVs for smart cities: Opportunities and challenges. In Proceedings of the 2014 International Conference on Unmanned Aircraft Systems (ICUAS), Orlando, FL, USA, 27–30 May 2014; pp. 267–273.

- Wang, X.; Zhang, Y. Insulator identification from aerial images using Support Vector Machine with background suppression. In Proceedings of the 2016 International Conference on Unmanned Aircraft Systems (ICUAS), Arlington, VA, USA, 7–10 June 2016; pp. 892–897.

- Corral, L.; Fronza, I.; Ioini, N.E.; Ibershimi, A. Towards Optimization of Energy Consumption of Drones with Software-Based Flight Analysis; Knowledge Systems Institute Graduate School: Skokie, IL, USA, 2016.

- Liu, Y.D.; Ziarek, L. Toward Energy-Aware Programming for Unmanned Aerial Vehicles. In Proceedings of the 3rd International Workshop on Software Engineering for Smart Cyber-Physical Systems (SEsCPS), Buenos Aires, Argentina, 21 May 2017; pp. 30–33.

- Palossi, D.; Gomez, A.; Draskovic, S.; Keller, K.; Benini, L.; Thiele, L. Self-Sustainability in Nano Unmanned Aerial Vehicles: A Blimp Case Study. In Proceedings of the Computing Frontiers Conference, Siena, Italy, 15–17 May 2017.

- Bozhinoski, D.; Malavolta, I.; Bucchiarone, A.; Marconi, A. Sustainable Safety in Mobile Multi-robot Systems via Collective Adaptation. In Proceedings of the 2015 IEEE 9th International Conference on Self-Adaptive and Self-Organizing Systems, Cambridge, MA, USA, 21–25 September 2015; pp. 172–173.

- Coelho, B.N.; Coelho, V.N.; Coelho, I.M.; Ochi, L.S.; Haghnazar, R.; Zuidema, K.; Lima, M.S.F.; Costa, A.R. A multi-objective green UAV routing problem. Comput. Oper. Res. 2017, 88, 306–315.

- Koulali, S.; Sabir, E.; Taleb, T.; Azizi, M. A green strategic activity scheduling for UAV networks: A sub-modular game perspective. IEEE Commun. Mag. 2016, 54, 58–64.

- Neumann, P.G. The foresight saga. Commun. ACM 2012, 55, 26–29.

- Becker, C.; Chitchyan, R.; Duboc, L.; Easterbrook, S.; Penzenstadler, B.; Seyff, N.; Venters, C.C. Sustainability Design and Software: The Karlskrona Manifesto. In Proceedings of the 2015 IEEE/ACM 37th IEEE International Conference on Software Engineering (ICSE), Florence, Italy, 16–24 May 2015; Volume 2, pp. 467–476.

- Lago, P. Challenges and opportunities for sustainable software. In Proceedings of the Fifth International Workshop on Product LinE Approaches in Software Engineering, Florence, Italy, 16–24 May 2015; IEEE Press: New York, NY, USA, 2015; pp. 1–2.

- Penzenstadler, B.; Raturi, A.; Richardson, D.; Calero, C.; Femmer, H.; Franch, X. Systematic mapping study on software engineering for sustainability (SE4S). In Proceedings of the 18th International Conference on Evaluation and Assessment in Software Engineering—EASE ’14, London, UK, 13–14 May 2014; ACM Press: New York, NY, USA, 2014; pp. 1–14.

- Venters, C.; Jay, C.; Lau, L.; Griffiths, M.; Holmes, V.; Ward, R.; Austin, J.; Dibsdale, C.; Xu, J. Software Sustainability: The Modern Tower of Babel. In Proceedings of the Third International Workshop on Requirements Engineering for Sustainable System, Karlskrona, Sweden, 26 August 2014.

- Naumann, S.; Dick, M.; Kern, E.; Johann, T. The GREENSOFT Model: A reference model for green and sustainable software and its engineering. Sustain. Comput. Inform. Syst. 2011, 1, 294–304.

- UN World Commission on Environment and Development. Our Common Future: Report of the World Commission on Environment and Development; Technical Report; UN World Commission on Environment and Development: New York, NY, USA, 1987.

- Goodland, R. Sustainability: Human, Social, Economic and Environmental. In Encyclopedia of Global Environmental Change; John Wiley & Sons: New York, NY, USA, 2002; Volume 6, pp. 220–225.

- ICAO. International Civil Aviation—Unmanned Aircraft Systems (UAS) International Civil Aviation Organization; Technical Report; International Civil Aviation Organization (ICAO): Montreal, QC, Canada, 2011.

- Clarke, R. What drones inherit from their ancestors. Comput. Law Secur. Rev. 2014, 30, 247–262.

- Imam, A.; Bicker, R. State of the Art in Rotorcraft UAVs Research. IJESIT 2014, 3, 221–233.

- Daponte, P.; De Vito, L.; Mazzilli, G.; Picariello, F.; Rapuano, S.; Riccio, M. Metrology for drone and drone for metrology: Measurement systems on small civilian drones. In Proceedings of the 2015 IEEE Metrology for Aerospace (MetroAeroSpace), Benevento, Italy, 4–5 June 2015; pp. 306–311.

- Nedjati, A.; Vizvari, B.; Izbirak, G. Post-earthquake response by small UAV helicopters. Nat. Hazards 2016, 80, 1669–1688.

- Aljehani, M.; Inoue, M. Multi-UAV tracking and scanning systems in M2M communication for disaster response. In Proceedings of the 2016 IEEE 5th Global Conference on Consumer Electronics, Kyoto, Japan, 11–14 October 2016; pp. 1–2.

- Murphy, R.; Dufek, J.; Sarmiento, T.; Wilde, G.; Xiao, X.; Braun, J.; Mullen, L.; Smith, R.; Allred, S.; Adams, J.; et al. Two case studies and gaps analysis of flood assessment for emergency management with small unmanned aerial systems. In Proceedings of the 2016 IEEE International Symposium on Safety, Security, and Rescue Robotics (SSRR), Lausanne, Switzerland, 23–27 October 2016; pp. 54–61.

- Erdelj, M.; Natalizio, E.; Chowdhury, K.R.; Akyildiz, I.F. Help from the Sky: Leveraging UAVs for Disaster Management. IEEE Pervasive Comput. 2017, 16, 24–32.

- Yuan, C.; Liu, Z.; Zhang, Y. Vision-based forest fire detection in aerial images for firefighting using UAVs. In Proceedings of the 2016 International Conference on Unmanned Aircraft Systems (ICUAS), Arlington, VA, USA, 7–10 June 2016; pp. 1200–1205.

- Meng, X.; Wang, W.; Leong, B. SkyStitch: A Cooperative Multi-UAV-based Real-time Video Surveillance System with Stitching. In Proceedings of the 23rd ACM international conference on Multimedia, Brisbane, Australia, 26–30 October 2015.

- Verykokou, S.; Doulamis, A.; Athanasiou, G.; Ioannidis, C.; Amditis, A. UAV-based 3D modelling of disaster scenes for Urban Search and Rescue. In Proceedings of the 2016 IEEE International Conference on Imaging Systems and Techniques (IST), Chania, Greece, 4–6 October 2016; pp. 106–111.

- Gotovac, S.; Papic, V.; Marusic, Z. Analysis of saliency object detection algorithms for search and rescue operations. In Proceedings of the 2016 24th International Conference on Software, Telecommunications and Computer Networks (SoftCOM), Split, Croatia, 22–24 September 2016; pp. 1–6.

- Cacace, J.; Finzi, A.; Lippiello, V.; Furci, M.; Mimmo, N.; Marconi, L. A control architecture for multiple drones operated via multimodal interaction in search & rescue mission. In Proceedings of the 2016 IEEE International Symposium on Safety, Security, and Rescue Robotics (SSRR), Lausanne, Switzerland, 23–27 October 2016; pp. 233–239.

- Mitchell, J.J.; Glenn, N.F.; Anderson, M.O.; Hruska, R.C.; Halford, A.; Baun, C.; Nydegger, N. Unmanned aerial vehicle (UAV) hyperspectral remote sensing for dryland vegetation monitoring. In Proceedings of the 2012 4th Workshop on Hyperspectral Image and Signal Processing (WHISPERS), Shanghai, China, 4–7 June 2012; pp. 1–10.

- Katsigiannis, P.; Misopolinos, L.; Liakopoulos, V.; Alexandridis, T.K.; Zalidis, G. An autonomous multi-sensor UAV system for reduced-input precision agriculture applications. In Proceedings of the 2016 24th Mediterranean Conference on Control and Automation (MED), Athens, Greece, 21–24 June 2016; pp. 60–64.

- Martínez, J.; Egea, G.; Agüera, J.; Pérez-Ruiz, M. A cost-effective canopy temperature measurement system for precision agriculture: A case study on sugar beet. Precis. Agric. 2017, 18, 95–110.

- Ren, D.D.W.; Tripathi, S.; Li, L.K.B. Low-cost multispectral imaging for remote sensing of lettuce health. J. Appl. Remote Sens. 2017, 11, 016006.

- Vasudevan, A.; Kumar, D.A.; Bhuvaneswari, N.S. Precision farming using unmanned aerial and ground vehicles. In Proceedings of the 2016 IEEE Technological Innovations in ICT for Agriculture and Rural Development (TIAR), Chennai, India, 15–16 July 2016; pp. 146–150.

- Burkart, A.; Hecht, V.L.; Kraska, T.; Rascher, U. Phenological analysis of unmanned aerial vehicle based time series of barley imagery with high temporal resolution. Precis. Agric. 2017, 1–13.

- Brennan, P. One Man and his Drone: Meet Shep, the Flying ‘Sheepdog’. The Telegraph, 2015. Available online: http://www.telegraph.co.uk/men/the-filter/virals/11503611/One-man-and-his-drone-meet-Shep-the-flying-sheepdog.html (accessed on 27 December 2017).

- Nyamuryekung’e, S.; Cibils, A.F.; Estell, R.E.; Gonzalez, A.L. Use of an Unmanned Aerial Vehicle—Mounted Video Camera to Assess Feeding Behavior of Raramuri Criollo Cows. Rangel. Ecol. Manag. 2016, 69, 386–389.

- Chamoso, P.; Raveane, W.; Parra, V.; Gonzalez, A. UAVs Applied to the Counting and Monitoring of Animals; Springer: Cham, Switzerland, 2014; pp. 71–80.

- Saari, H.; Pellikka, I.; Pesonen, L.; Tuominen, S.; Heikkilä, J.; Holmlund, C.; Mäkynen, J.; Ojala, K.; Antila, T. Unmanned Aerial Vehicle (UAV) operated spectral camera system for forest and agriculture applications. In Proceedings of the Remote Sensing for Agriculture, Ecosystems, and Hydrology XIII, Prague, Czech Republic, 19–21 September 2011.

- Guldogan, O.; Rotola-Pukkila, J.; Balasundaram, U.; Le, T.H.; Mannar, K.; Chrisna, T.M.; Gabbouj, M. Automated tree detection and density calculation using unmanned aerial vehicles. In Proceedings of the 2016 Visual Communications and Image Processing (VCIP), Chengdu, China, 27–30 November 2016; pp. 1–4.

- Messinger, M.; Silman, M. Unmanned aerial vehicles for the assessment and monitoring of environmental contamination: An example from coal ash spills. Environ. Pollut. 2016, 218, 889–894.

- Shintani, C.; Fonstad, M.A. Comparing remote-sensing techniques collecting bathymetric data from a gravel-bed river. Int. J. Remote Sens. 2017, 38, 1–20.

- Astuti, G.; Caltabiano, D.; Giudice, G.; Longo, D.; Melita, D.; Muscato, G.; Orlando, A. “Hardware in the Loop”: Tuning for a Volcanic Gas Sampling UAV. In Advances in Unmanned Aerial Vehicles; Springer: Dordrecht, The Netherlands, 2007; pp. 473–493.

- Gatsonis, N.A.; Demetriou, M.A.; Egorova, T. Real-time prediction of gas contaminant concentration from a ground intruder using a UAV. In Proceedings of the 2015 IEEE International Symposium on Technologies for Homeland Security (HST), Waltham, MA, USA, 14–16 April 2015; pp. 1–6.

- Niethammer, U.; James, M.; Rothmund, S.; Travelletti, J.; Joswig, M. UAV-based remote sensing of the Super-Sauze landslide: Evaluation and results. Eng. Geol. 2012, 128, 2–11.

- Bemis, S.P.; Micklethwaite, S.; Turner, D.; James, M.R.; Akciz, S.; Thiele, S.T.; Ali Bangash, H. Ground-based and UAV-Based photogrammetry: A multi-scale, high-resolution mapping tool for structural geology and paleoseismology. J. Struct. Geol. 2014, 69, 163–178.

- Potapov, E.; Utekhina, I.; McGrady, M.; Rimlinger, D. Usage of UAV for Surveying Steller’s Sea Eagle Nests—Raptors Conservation. Artic. Raptors Conserv. J. 2013, 27, 253–260.

- Santano, D. Unmanned Aerial Vehicle in audio visual data acquisition; a researcher demo intro. In Proceedings of the 2014 International Conference on Virtual Systems & Multimedia (VSMM), Hong Kong, China, 9–12 December 2014; pp. 318–322.

- Neri, M.; Campi, A.; Suffritti, R.; Grimaccia, F.; Sinogas, P.; Guye, O.; Papin, C.; Michalareas, T.; Gazdag, L.; Rakkolainen, I. SkyMedia—UAV-based capturing of HD/3D content with WSN augmentation for immersive media experiences. In Proceedings of the 2011 IEEE International Conference on Multimedia and Expo (ICME), Barcelona, Spain, 11–15 July 2011; pp. 1–6.

- Gini, R.; Passoni, D.; Pinto, L.; Sona, G. Aerial Images From a UAV System: 3D Modeling and Tree Species Classification in a Park Area. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2012, 39, 361–366.

- Levin, A. James Bond Drones Idle in U.S. as Pilots Oppose Waivers. Bloomberg, 2014. Available online: https://www.bloomberg.com/news/articles/2014-07-16/james-bond-drones-idle-in-u-s-as-pilots-oppose-waivers (accessed on 27 December 2017).

- Amazon. Quadcopters and Accessories. Available online: https://www.amazon.com/Quadcopters-Accessories/b?ie=UTF8node=11910405011 (accessed on December 2017).

- Young, J. 11 Best Drones For Sale 2017, 2017. Available online: http://www.droneguru.net/5-best-drones-for-sale-drone-reviews/ (accessed on December 2017).

- Quercus. UAS Flight Simulation. Available online: https://youtu.be/OhttLZwpwqA (accessed on December 2017).

| Research Question | Main Motivation |
|---|---|
| RQ1: In which contexts and areas are UAS being currently used? | To collect a set of case studies and areas where UAS are being used and the purpose of using them. |
| RQ2: What techniques and technologies are applied to use UAS in the different areas? | To know the technologies that either are applied to UAS or that UAS provide and their maturity level. |
| RQ3: Which features must the UAS provide in order to be used in each area? | To know the features that a UAS must have in order to be used in the different areas identified. |

| Category | Feature | Description |
|---|---|---|
| Storage | Storage capacity | Capacity for recording and persistently storing data on an electronic device. |
| Processing | Processing capacity | Capacity for executing calculations, operations and algorithms. |
| Processing | Reasoning | Capacity for processing the data acquired by the UAS and making automatic decisions accordingly. This feature is strongly coupled with “Processing capacity”, since the latter is required for achieving Reasoning. |
| Processing | Context sensitive | Capacity for acquiring data from the environment and reacting to these data in order to preserve the security of the device. This feature is also strongly related to “Reasoning” and “Processing capacity”. |
| Communication | Communication PC-UAS | Capacity for the UAS to communicate with a server or ground station over a wireless connection, such as WiFi (for short distances) or radio (for long distances). |
| Communication | Communication Remote-UAS | Capacity for the UAS to communicate with a remote radio control. |
| Communication | Communication to external entity | Property that enables communication between the UAS and an external entity in order to send information (measurements, control parameters, images, etc.) or receive data (e.g., accessing a Web service, communicating with another aircraft, etc.). |
| Configuration | Extensibility | Capacity for adding new components (sensors and/or actuators, cameras, ...) or interchanging those that are already installed. |
| Configuration | Programming | Property that allows the automation of directives or rules to be used in concrete situations. This programming may be performed at a low abstraction level (adding machine code directly to the autopilot) or at a higher abstraction level (using particular programs that translate the code into machine code). |
| Configuration | Route planning software | Capacity for programming the UAS from a PC or mobile device using specific software for route planning. This software may be closed to modifications (usually proprietary) or open to being extended with new directives or to adapting the existing ones. |
| Configuration | Adaptability | Property that allows the programmed tasks to be modified during the flight (modifications on the fly). |

Feature groups: Storage (Storage capacity); Processing (Processing capacity, Reasoning, Context sensitive); Communication (Communication PC-UAS, Communication Remote-UAS, Communication to external entity); Configuration, divided into Hardware and Software (Extensibility, Programming, Route planning software, Adaptability).

| ID | Category | Storage capacity | Processing capacity | Reasoning | Context sensitive | Communication PC-UAS | Communication Remote-UAS | Communication to external entity | Extensibility | Programming | Route planning software | Adaptability |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| A | Disasters and emergency | X | ✓ | ✓ | ✓ | ✓ | - | ✓ | X | X | X | X |
| B | Agriculture and cattle raising | ✓ | ✓ | ✓ | ✓ | - | ✓ | X | X | - | ✓ | ✓ |
| C | Environmental control | ✓ | - | X | X | X | ✓ | ✓ | ✓ | ✓ | X | X |
| D | Audiovisual and entertainment | ✓ | X | X | X | - | ✓ | X | ✓ | X | X | ✓ |
| E | Surveillance and security | X | ✓ | - | - | - | ✓ | ✓ | X | X | X | ✓ |

Feature groups: Storage (Storage capacity); Processing (Processing capacity, Reasoning, Context sensitive); Communication (Communication PC-UAS, Communication Remote-UAS, Communication to external entity); Configuration, divided into Hardware and Software (Extensibility, Programming, Route planning software, Adaptability).

| Category | Case study | Storage capacity | Processing capacity | Reasoning | Context sensitive | Communication PC-UAS | Communication Remote-UAS | Communication to external entity | Extensibility | Programming | Route planning software | Adaptability |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| A | [58] | X | X | X | X | - | X | ✓ | X | - | ✓ | X |
| A | [59] | X | X | X | X | X | ✓ | ✓ | X | X | X | X |
| A | [60] | ✓ | X | X | X | ✓ | X | X | X | X | X | ✓ |
| A | [61] | X | X | X | X | ✓ | X | ✓ | X | X | X | X |
| A | [62] | X | X | X | X | ✓ | X | ✓ | - | X | X | X |
| A | [63] | X | X | X | X | - | X | ✓ | - | X | X | X |
| A | [64] | ✓ | X | X | X | X | ✓ | X | X | X | X | X |
| A | [65] | ✓ | X | X | X | X | ✓ | X | X | X | X | X |
| A | [66] | X | X | X | X | ✓ | X | ✓ | X | X | X | |
| B | [73] | - | X | X | X | X | ✓ | X | X | X | X | X |
| B | [74] | ✓ | X | X | X | - | X | X | X | X | ✓ | X |
| B | [75] | ✓ | X | X | X | ✓ | - | ✓ | X | X | X | X |
| B | [67] | ✓ | X | X | X | ✓ | X | X | X | X | ✓ | X |
| B | [68] | ✓ | X | X | X | X | X | X | X | X | ✓ | X |
| B | [69] | ✓ | X | X | X | X | X | ✓ | X | X | ✓ | X |
| B | [70] | X | X | X | X | ✓ | X | ✓ | X | X | X | - |
| B | [71] | ✓ | X | X | X | X | ✓ | X | X | X | X | X |
| B | [72] | ✓ | X | X | X | X | ✓ | X | X | X | X | X |
| C | [76] | ✓ | X | X | X | X | ✓ | X | X | X | X | X |
| C | [77] | ✓ | X | X | X | X | ✓ | X | X | X | ✓ | X |
| C | [78] | ✓ | X | X | X | ✓ | X | X | X | X | ✓ | X |
| C | [79] | ✓ | X | X | X | X | ✓ | X | - | X | X | X |
| C | [84] | X | X | X | X | X | ✓ | ✓ | - | X | X | X |
| C | [82] | ✓ | ✓ | - | X | ✓ | X | X | X | ✓ | X | X |
| C | [83] | ✓ | X | X | X | ✓ | X | X | X | X | ✓ | X |
| C | [80] | ✓ | X | X | X | ✓ | X | ✓ | X | X | ✓ | X |
| C | [81] | X | X | X | X | ✓ | X | ✓ | X | X | ✓ | ✓ |
| C | [23] | X | ✓ | - | X | ✓ | X | ✓ | X | X | ✓ | |
| D | [80] | ✓ | X | X | X | X | ✓ | X | - | X | X | X |
| D | [85] | ✓ | X | X | X | X | ✓ | ✓ | X | X | X | X |
| D | [86] | X | X | X | X | ✓ | ✓ | ✓ | - | X | X | ✓ |
| E | [21] | X | - | - | X | X | X | X | X | X | | |
| E | [87] | ✓ | X | X | X | X | ✓ | X | X | X | X | X |
| E | [39] | ✓ | X | X | X | X | ✓ | X | X | X | X | X |
| E | [30] | ✓ | X | X | X | X | ✓ | X | X | X | X | X |
| E | [28] | ✓ | X | X | X | X | ✓ | X | - | X | X | X |

| | Storage | Processing | | | Communication | | | Configuration | | | |

| | | | | | | | | Hardware | Software | | |

| | Storage capacity | Processing capacity | Reasoning | Context sensitive | Communication PC-UAS | Communication Remote-UAS | Communication to External entity | Extensibility | Programming | Route planning software | Adaptability |

| DJI S800 EVO | X | X | X | X | X | ✓ | X | ✓ | X | ✓ | X |

| DJI Phantom 3 | ✓ | X | X | X | X | ✓ | X | X | X | ✓ | X |

| DJI Phantom 4 | ✓ | - | - | - | X | ✓ | X | X | X | ✓ | X |

| TBS Discovery | - | X | X | X | ✓ | ✓ | X | - | X | ✓ | X |

| Parrot Bebop | X | X | X | X | X | - | X | X | X | ✓ | X |

| GHOST Drone Aerial 2.0 | X | X | X | X | X | ✓ | X | X | X | X | X |

| AirDog Drone | ✓ | ✓ | ✓ | X | X | ✓ | X | X | X | - | X |

| Hemav Drone | ✓ | ✓ | X | X | ✓ | X | X | ✓ | ✓ | X | - |

| 3DR Solo Drone Quadcopter | ✓ | X | X | X | X | ✓ | ✓ | X | X | X | X |

| Walkera Tali H500 | X | X | X | X | X | ✓ | X | X | X | X | X |

| Yuneec Q500 | X | X | X | X | X | ✓ | X | X | X | X | X |

| Intelligenia Dynamics Drone | ✓ | - | ✓ | X | ✓ | X | X | ✓ | - | X | X |
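The comparison of required and provided features (Step 3) can be sketched as a set difference. The sketch below copies row A (Disasters and emergency) of the case-study table and three rows of the UAS table above. As an assumption of this sketch, a "-" mark is treated conservatively as neither required nor provided; the helper name `marked` is hypothetical.

```python
# Feature columns, in the order used by the tables above.
FEATURES = (
    "Storage capacity", "Processing capacity", "Reasoning", "Context sensitive",
    "Communication PC-UAS", "Communication Remote-UAS",
    "Communication to External entity", "Extensibility", "Programming",
    "Route planning software", "Adaptability",
)


def marked(row: str) -> set:
    """Return the features marked with a check in a space-separated table row.

    Assumption: only a check counts; "X" and "-" are both treated as absent.
    """
    marks = row.split()
    if len(marks) != len(FEATURES):
        raise ValueError(f"expected {len(FEATURES)} marks, got {len(marks)}")
    return {name for name, mark in zip(FEATURES, marks) if mark == "✓"}


# Row A (Disasters and emergency) of the features vs. case studies table.
required = marked("X ✓ ✓ ✓ ✓ - ✓ X X X X")

# Three rows copied from the features vs. UAS table.
uas_rows = {
    "DJI Phantom 3": "✓ X X X X ✓ X X X ✓ X",
    "AirDog Drone": "✓ ✓ ✓ X X ✓ X X X - X",
    "Hemav Drone": "✓ ✓ X X ✓ X X ✓ ✓ X -",
}

# Features required by the category but not provided by each UAS.
missing = {name: required - marked(row) for name, row in uas_rows.items()}

for name, gaps in missing.items():
    print(f"{name}: missing {sorted(gaps)}")
```

Under these assumptions, none of the three UAS covers every feature required by category A, which is consistent with the many X marks in the table above.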

| AutoPilots | OnBoardComputers | IOHubs | |||

| Storage | Storage capacity | ✓ | |||

| Processing | Processing capacity Reasoning Context sensitive | ✓ ✓ | ✓ | ||

| Communication | Communication PC-UAS Communication Remote-UAS Communication to External entity | ✓ ✓ | ✓ | ||

| Configuration | Hardware Software | Extensibility Programming Route planning software Adaptability | ✓* ✓ ✓ | ✓** | ✓ |

| | Autopilot APM 2.6 | Pixhawk PX4 | Paparazzi Lisa/M 2 | |

|---|---|---|---|---|

| Physical specifications | ||||

| Size (mm) | 70x45x15 | 82x50x16 | 60x34x10 | |

| Weight (g) | 28 | 38 | 10.8 | |

| DC in (V) | 3.3 - 5 | 4.5 - 5 | 3.3 - 5 | |

| Power consumption (mAh) | 600 | 800 | 200 | |

| Computing specifications | ||||

| CPU | Atmega 2560 (16 MHz) | Cortex M4F (168 MHz) | STM32 (84 MHz) | |

| Memory | 4 MB | 256 KB | 256 KB | |

| Storage | 16 MB | 2 MB | 64 KB | |

| Storage expansion (MB) | No | Yes (micro-SD) | No | |

| Communication range (km) [depends on RC module] [minimum] | 7 | 5 | 1.61 (Xbee XSC only) | |

| System specifications | ||||

| Operating System/Firmware | ArduCopter-APM-2.0 | PX4 Pro Autopilot | GINA Autopilot | |

| Based on | Arduino | Unix/Linux | ARM7 | |

| Open source and code | ✓ | ✓ | ✓ | |

| Programming IDE | ✓(Arduino IDE) | ✓ | X | |

| Programming libraries | ✓ | ✓ | X | |

| Programming languages | C / Python / Matlab | C / Python | C / Python / OCAML | |

| Route planning software | ✓ (e.g., Mission Planner) | ✓ (e.g., Mission Planner) | ✓ (GINA Ground Control Station) | |

| Wireless configuration | Radio telemetry | Radio telemetry | X | |

| Open source communication protocol | MAVLink | MAVLink | X | |

| Interface connection | USB | micro-USB | micro-USB | |

| Serial ports | ✓ | ✓ | ✓ | |

| GPIO / I2C ports | ✓ | ✓ | ✓ | |

| Other ports | ✓ | ✓ | ✓ | |

| Autopilot functions | ||||

| Waypoints navigation | ✓ | ✓ | ✓ | |

| Auto-Take Off & landing | ✓ | ✓ | ✓ | |

| Altitude hold | ✓ | ✓ | ✓ | |

| Air speed hold | ✓ | ✓ | X | |

| Multi-UAV support | X | X | X | |

| In-flight route editing | ✓ | ✓ | X | |

| Others | ||||

| Price ($) without GPS | 109 | 199 | 199 | |

| Company/Project | DIY Drones Team | 3DR | Paparazzi UAV | |

| Website | link | link | link | |

| License | Open-Source | Open-Source | Open-Source | |

| | Raspberry Pi 3 | Raspberry Pi 2 | ODROID-XU4 |

|---|---|---|---|

| Physical specifications | |||

| Size (mm) | 86x56x18 | 86x57x18 | 82x58x22 |

| Weight (g) | 59 | 45 | 60 |

| DC in (V) | 5 | 5 | 5 |

| Power consumption (mAh) | 800 | 800 | 1000 |

| Power source | Micro-USB / GPIO header | Micro-USB / GPIO header | DC jack |

| Computing specifications | |||

| SoC (System on a Chip) | Broadcom BCM2837 | Broadcom BCM2836 | Samsung Exynos 5 Octa (5422) |

| Architecture | ARM Cortex-A53 | ARM Cortex-A7 | ARM Cortex-A7 |

| Cores | 4 | 4 | 8 |

| Frequency | 1.2 GHz | 900 MHz | 1.4 GHz |

| GPU | Broadcom VideoCore IV | Broadcom VideoCore IV | ARM Mali-T628 (695 MHz) |

| Memory | 1 GB | 1 GB | 2 GB |

| Type | LPDDR2 | LPDDR2 | DDR3L |

| I/O interfaces and ports | |||

| Storage on-board | X | X | X |

| Flash slots (storage expansion) | micro-SD | micro-SD | micro-SD |

| SATA | X | X | X |

| PCIe (Peripheral Component Interconnect Express) | X | X | X |

| USB 2.0 | 4 | 4 | 1 |

| USB 3.0 | X | X | 2 |

| USB Type (device) | undefined | undefined | OTG 3.0 |

| Ethernet | ✓(10/100) | ✓(10/100) | ✓(10/100/1000) |

| WiFi | ✓(b/g/n) | X | X |

| GSM | X | X | X |

| Bluetooth | ✓(4.1) | X | X |

| I2C (Inter-Integrated Circuit) | ✓ | ✓ | ✓ |

| SPI (Serial Peripheral Interface) | ✓ | ✓ | ✓ |

| GPIO | 17 | 17 | ✓ |

| Analog | X | X | ADC |

| Camera port/bus | ✓ | ✓ | X |

| Others | UART | UART | UART & RTC battery |

| | Raspberry Pi 3 | Raspberry Pi 2 | ODROID-XU4 |

|---|---|---|---|

| Audiovisual interfaces | |||

| Mic. In | X | X | X |

| Audio out | X | X | X |

| HDMI | ✓(1.4) | ✓(1.4) | ✓(1.4) |

| LVDS (Low-Voltage Differential Signaling) | X | X | X |

| Others | Composite video | X | X |

| Operating system | |||

| Operating system / Firmware | Windows 10 / GNU Linux (e.g., Raspbian) | Windows 10 / GNU Linux (e.g., Raspbian) | GNU Linux / Android |

| Open source and code | ✓ | ✓ | ✓ |

| Programming IDE / SDK | ✓ | ✓ | ✓ |

| Programming libraries | ✓ | ✓ | ✓ |

| Programming languages | C / C++ / Python / Perl / Ruby / etc. | C / C++ / Python / Perl / Ruby / etc. | C / C++ / Java / etc. |

| Others | |||

| Price ($) | 45 | 35 | 74 |

| Company/Project | Raspberry Pi Foundation | Raspberry Pi Foundation | Hardkernel |

| Website | link | link | link |

| License | GPL Open-Source | GPL Open-Source | GPL Open-Source |

| | Arduino UNO | Arduino MEGA 2560 | Arduino MKR1000 |

|---|---|---|---|

| Physical specifications | |||

| Size (mm) | 69x54x14 | 102x54x11 | 56x26x6 |

| Weight (g) | 25 | 37 | 10 |

| DC In (V) | 7 - 12 | 7 - 12 | 5 |

| Power consumption (mAh) | 42 | 17 | 49 |

| Power source | DC jack | DC jack | Micro-USB |

| Computing specifications | |||

| CPU | ATmega328P (16 MHz) | ATmega2560 (16 MHz) | SAMD21 Cortex-M0+ (48 MHz) |

| EEPROM | 1 KB | 4 KB | X |

| SRAM | 2 KB | 8 KB | 32 KB |

| Flash | 32 KB | 256 KB | 256 KB |

| Storage expansion (MB) | X | X | X |

| Ethernet | X | X | X |

| WiFi | X | X | ✓ |

| USB | ✓(Regular) | ✓(Regular) | ✓(Micro) |

| Analog IN | 6 | 16 | 7 |

| Analog OUT | 0 | 0 | 1 |

| Digital IN | 14 | 54 | 8 |

| Digital OUT | 6 | 15 | 4 |

| UART port | 1 | 4 | 1 |

| External interrupts | 2 | 6 | 8 |

| Other connections | X | X | ✓ |

| Display | X | X | X |

| System specifications | |||

| Operating System/Firmware | None | None | None |

| Open source and code | ✓ | ✓ | ✓ |

| Programming IDE | ✓(Arduino IDE) | ✓(Arduino IDE) | ✓(Arduino IDE) |

| Programming libraries | ✓ | ✓ | ✓ |

| Programming languages | C / Processing / C# / Python / ArduBlock / etc. | C / Processing / C# / Python / ArduBlock / etc. | C / Processing / C# / Python / ArduBlock / etc. |

| Others | |||

| Price ($) without GPS | 20 | 35 | 31 |

| Company/Project | Arduino | Arduino | Arduino |

| Website | link | link | link |

| License | CC Attribution Share-Alike | CC Attribution Share-Alike | CC Attribution Share-Alike |

| AutoPilot (APM 2.6) | OnBoard Computer (Rasp. Pi 2) | IOHub (Arduino UNO) | |||

| Storage | Storage capacity | Up to 32 GB | |||

| Processing | Processing capacity | 512 MB | |||

| Reasoning | Programming capacity | ||||

| Context sensitive | Different sensors | ||||

| Communication | Communication PC-UAS | Telemetry | |||

| Communication Remote-UAS | Radio | ||||

| Communication to External entity | GSM communications | ||||

| Configuration | Hardware | Extensibility | Different Sensors | ||

| Software | Programming | C or Python | Different languages | ||

| Route planning software | APM Planner | ||||

| Adaptability | Different connections | ||||
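As a rough check on the payload and cost of this combination, the weights and prices listed in the three comparison tables above (APM 2.6, Raspberry Pi 2, Arduino UNO) can be summed. This is only a sketch: the `Component` class is a hypothetical helper, and the totals exclude the airframe, wiring, sensors, batteries and GPS, none of which are quantified in the tables.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Component:
    """Hypothetical record for one element of the architecture."""
    role: str
    name: str
    weight_g: int
    price_usd: int


# Values copied from the comparison tables above.
components = [
    Component("AutoPilot", "APM 2.6", weight_g=28, price_usd=109),  # price without GPS
    Component("OnBoard Computer", "Raspberry Pi 2", weight_g=45, price_usd=35),
    Component("IOHub", "Arduino UNO", weight_g=25, price_usd=20),
]

total_weight_g = sum(c.weight_g for c in components)
total_price_usd = sum(c.price_usd for c in components)

print(f"Total weight: {total_weight_g} g")   # 28 + 45 + 25 = 98 g
print(f"Total price: {total_price_usd} $")   # 109 + 35 + 20 = 164 $
```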

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Moguel, E.; Conejero, J.M.; Sánchez-Figueroa, F.; Hernández, J.; Preciado, J.C.; Rodríguez-Echeverría, R. Towards the Use of Unmanned Aerial Systems for Providing Sustainable Services in Smart Cities. Sensors 2018, 18, 64. https://doi.org/10.3390/s18010064
