An Adaptive Deghosting Method in Neural Network-Based Infrared Detectors Nonuniformity Correction

Abstract

1. Introduction

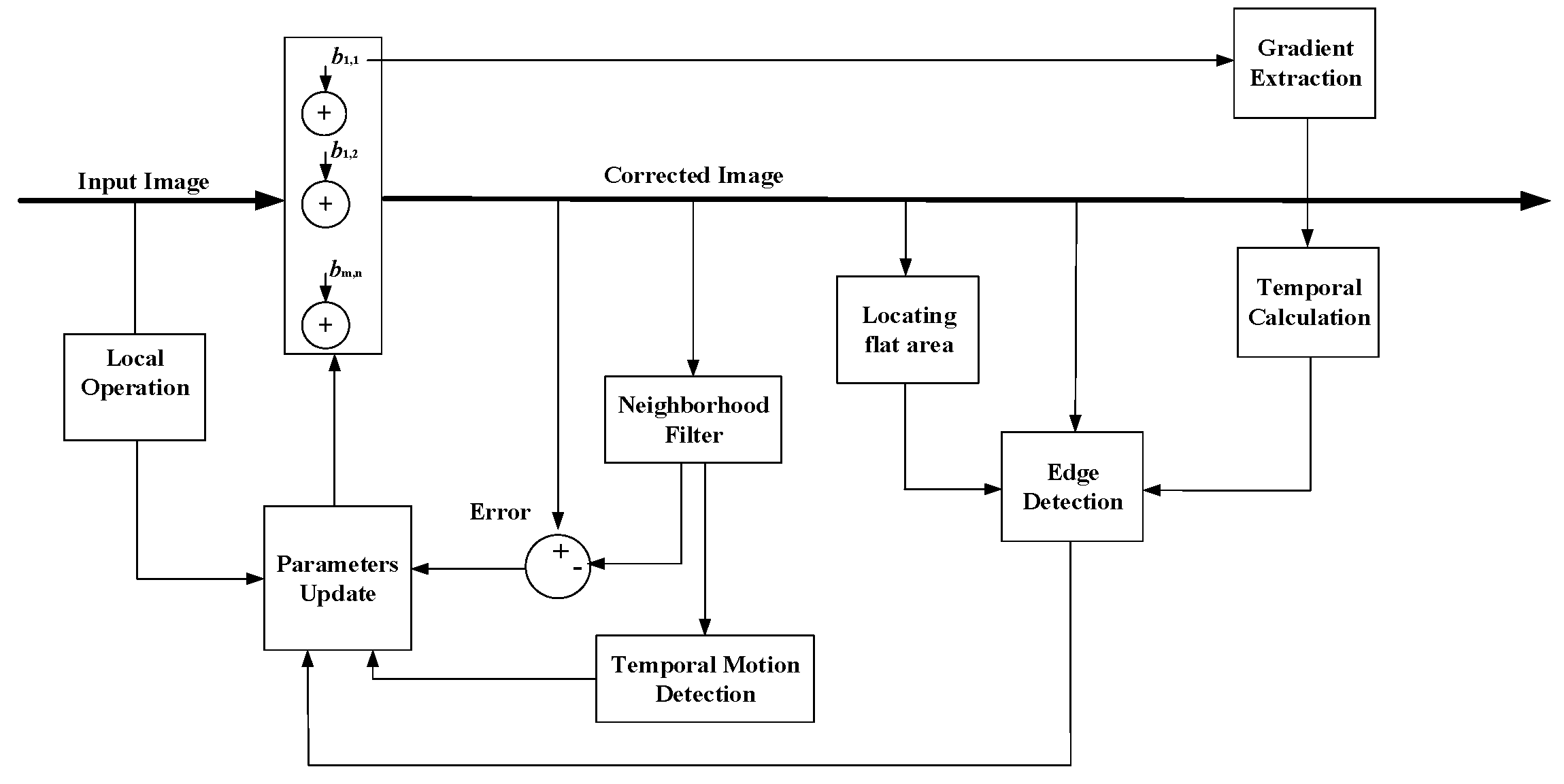

2. Neural Network-Based Nonuniformity Correction

2.1. Nonuniformity Observation Model

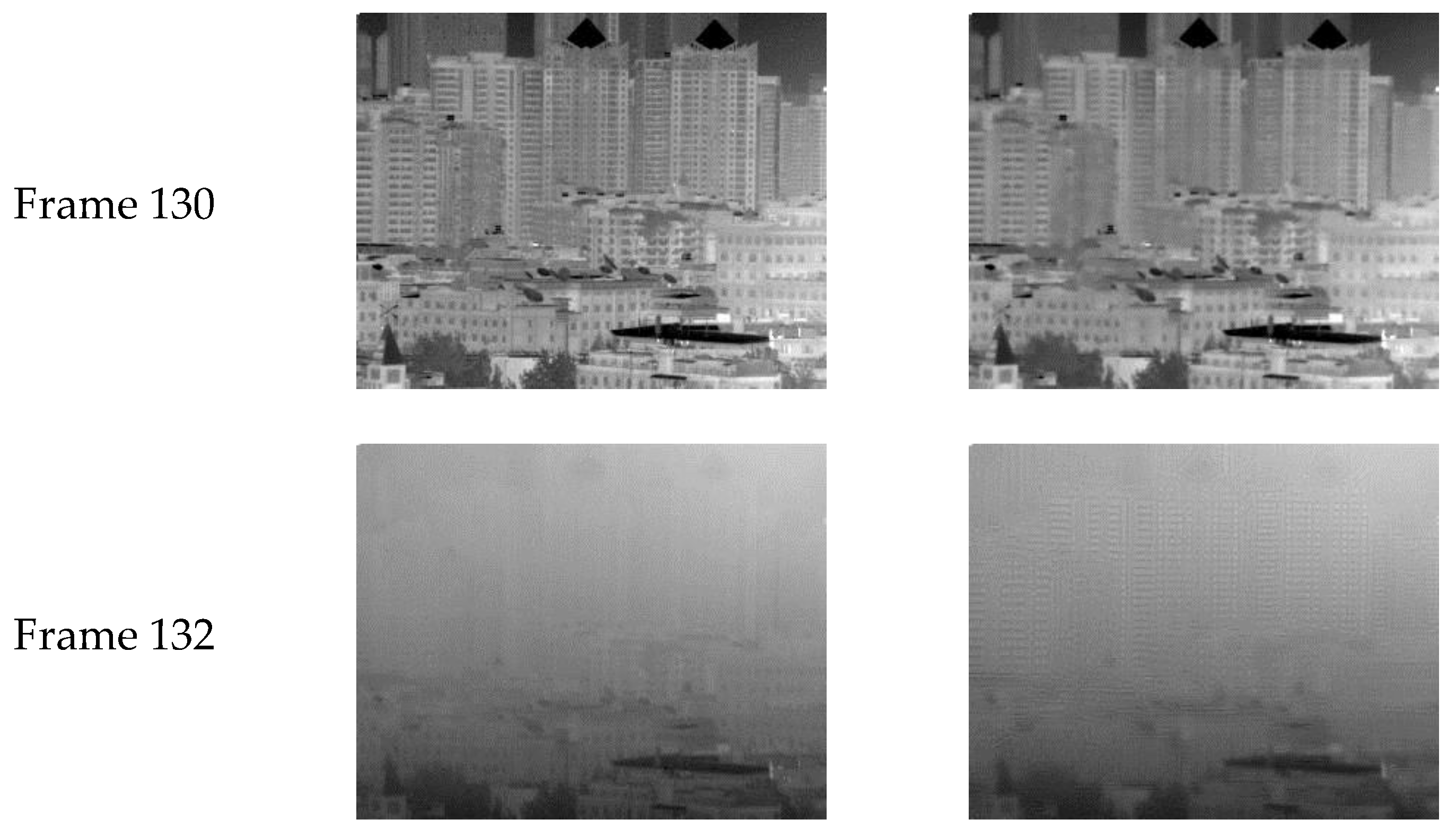

2.2. Deghosting Methods

3. Proposed Deghosting Method

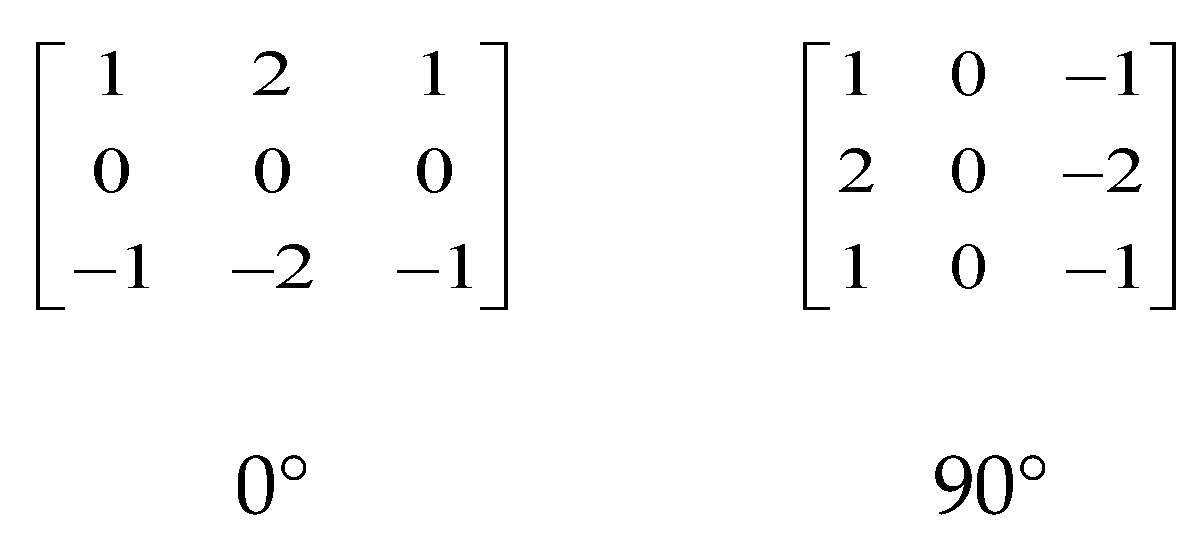

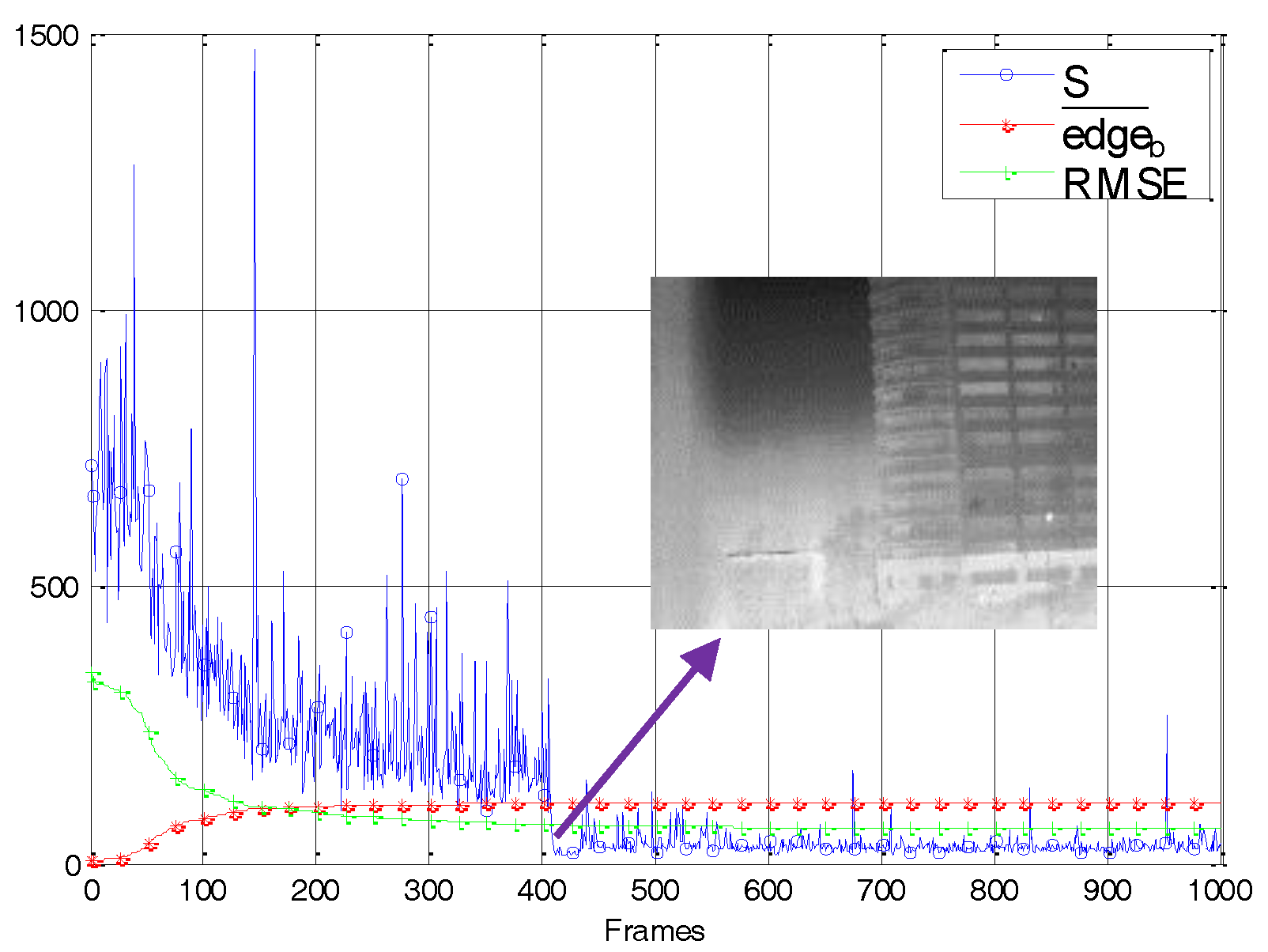

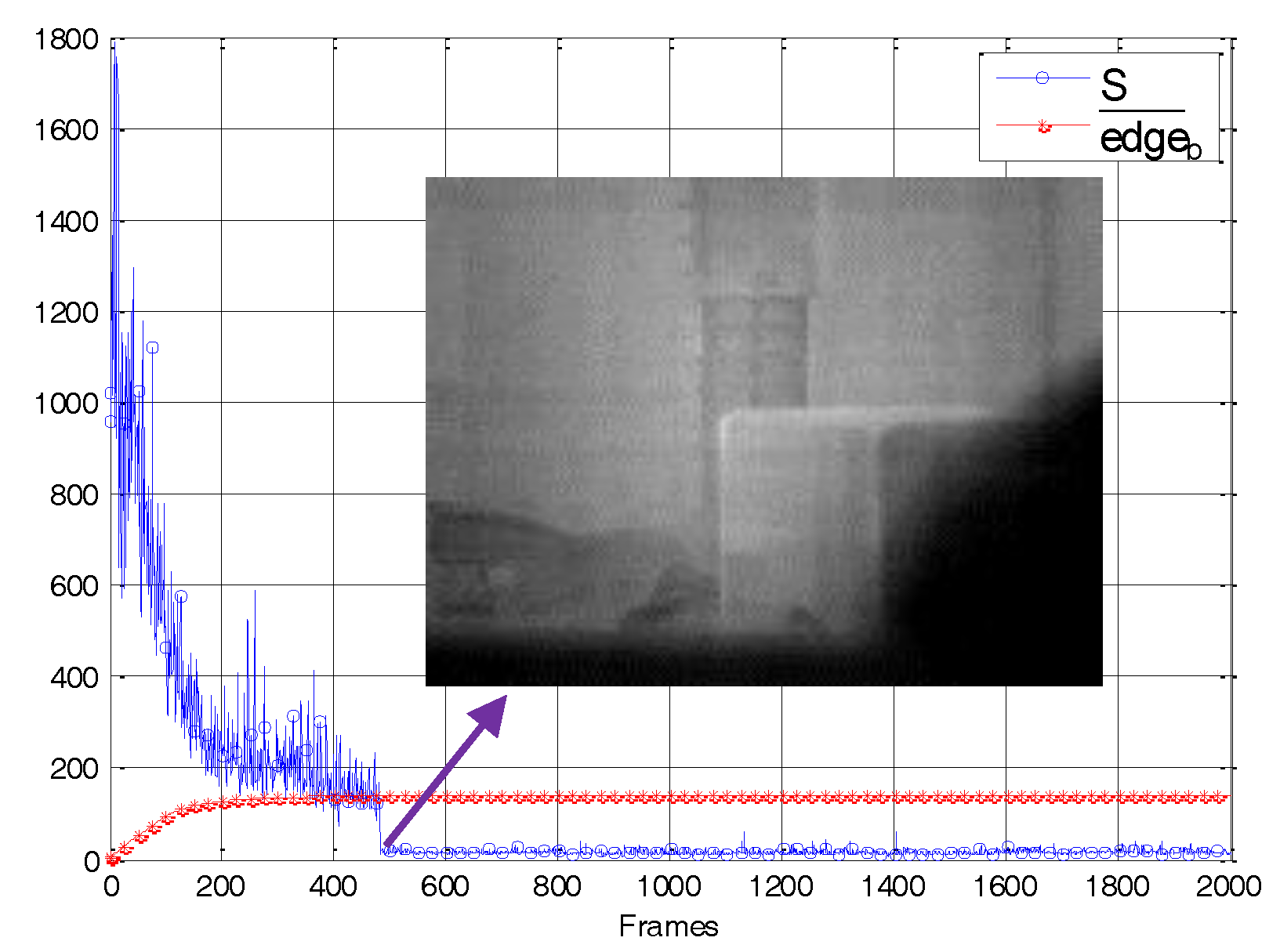

3.1. Combined Temporal Gate and Edge Detection

3.2. Adaptive Spatial Threshold

3.3. Algorithm Summary

4. Experimental Results

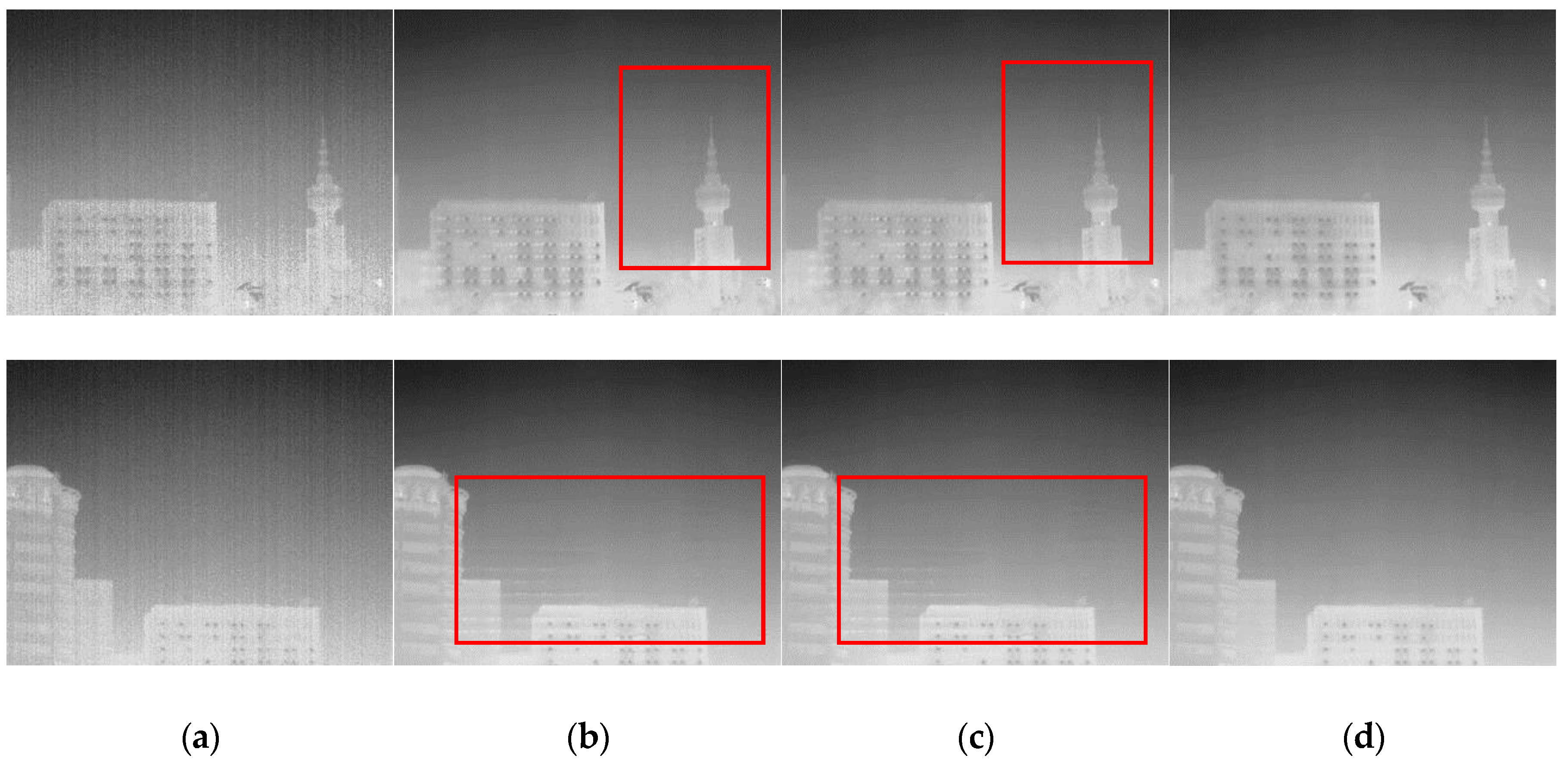

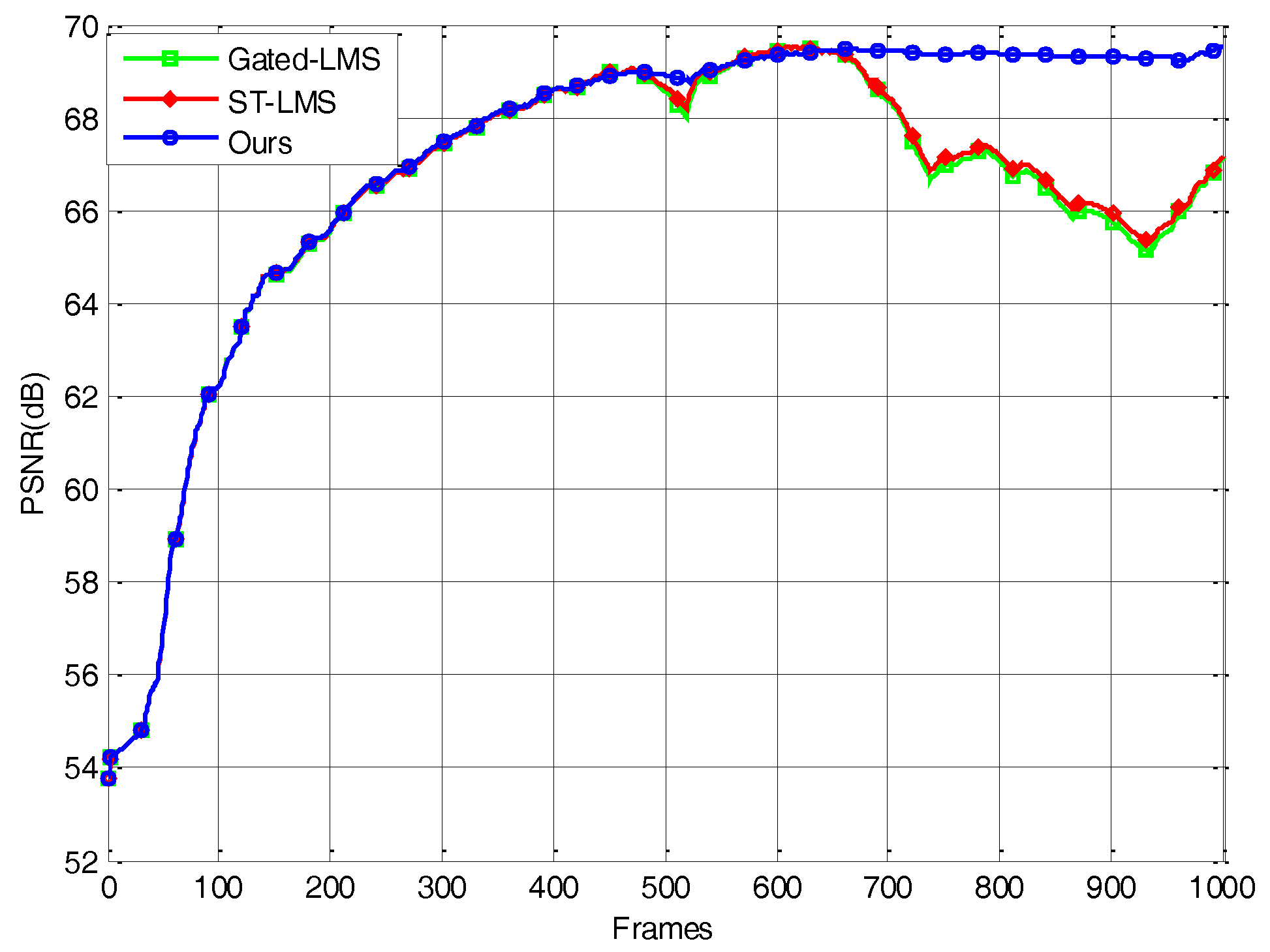

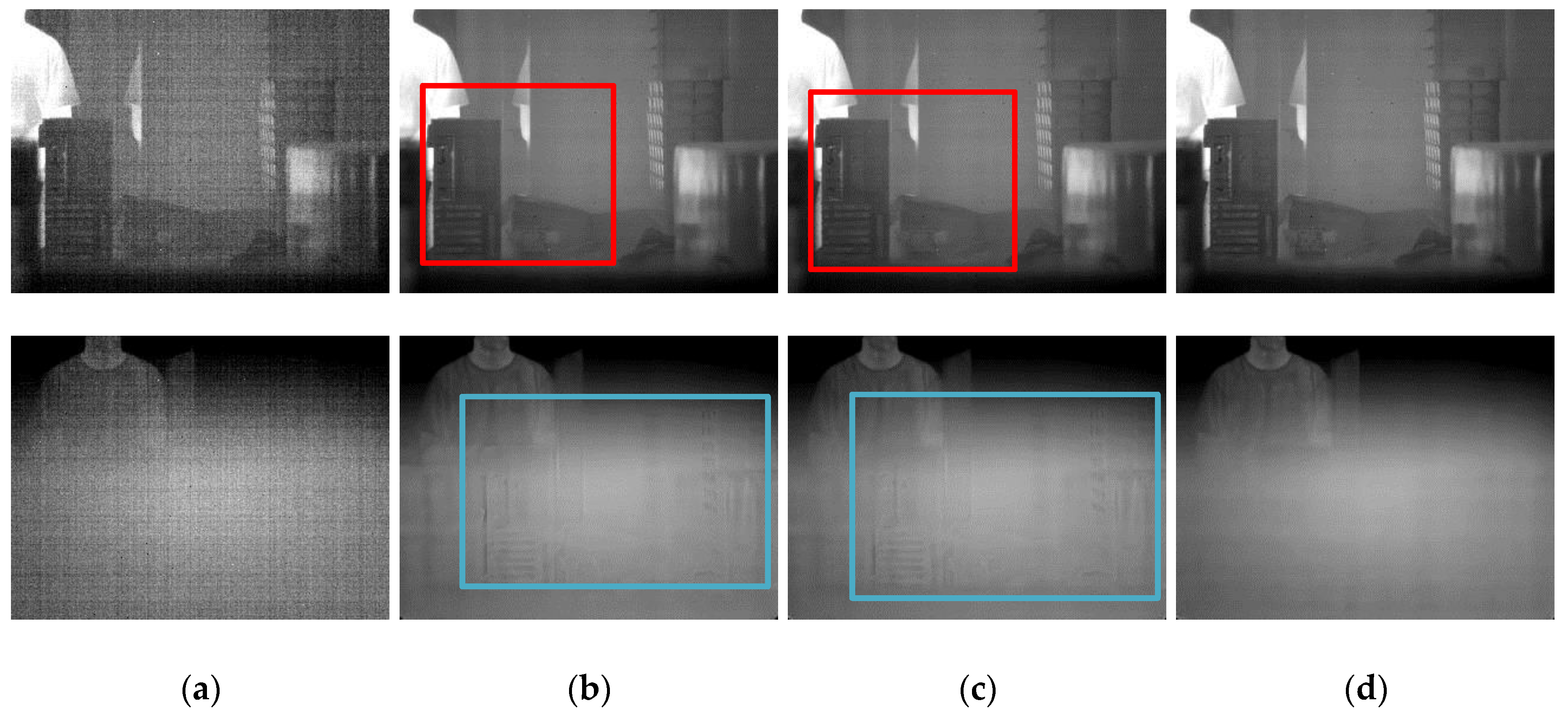

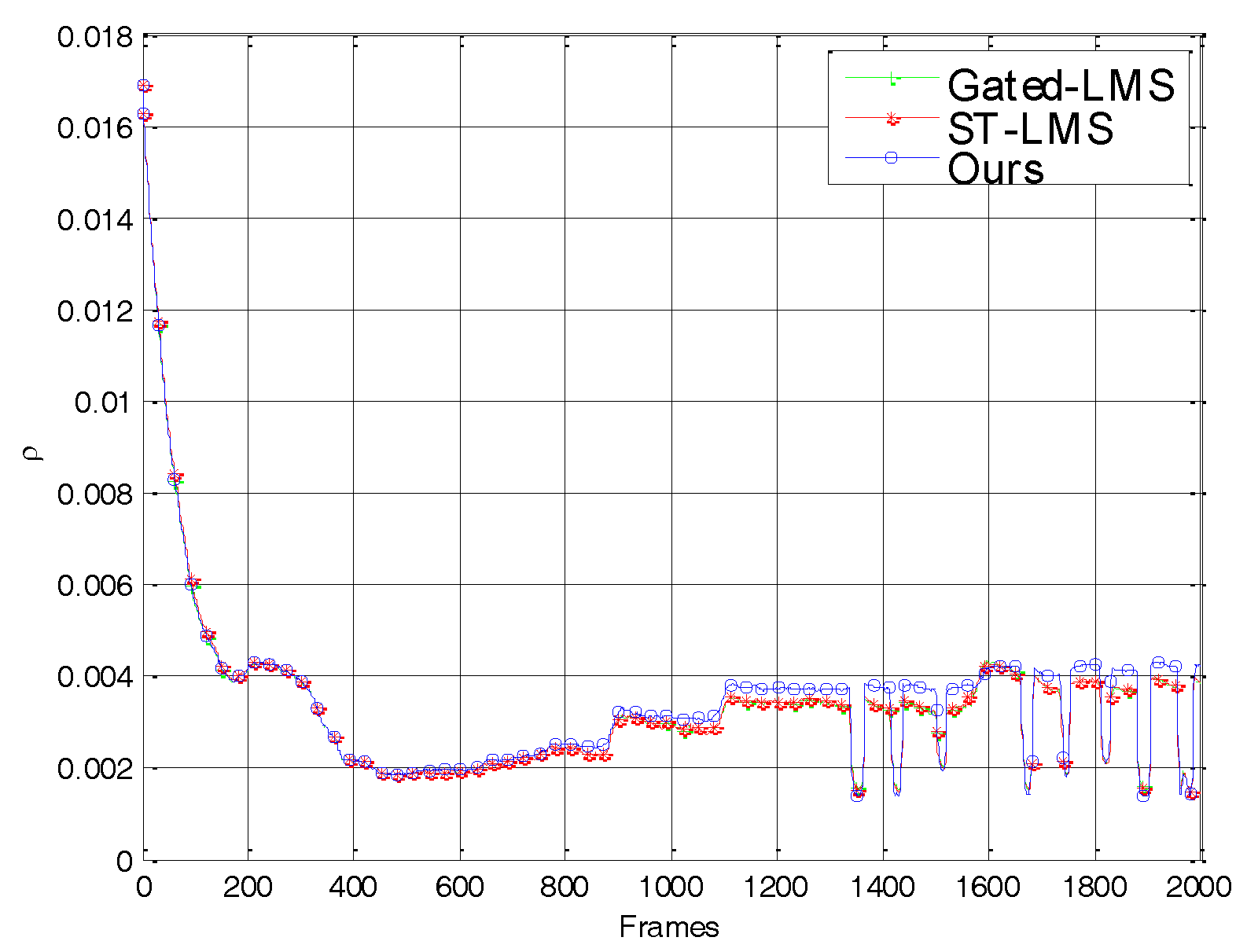

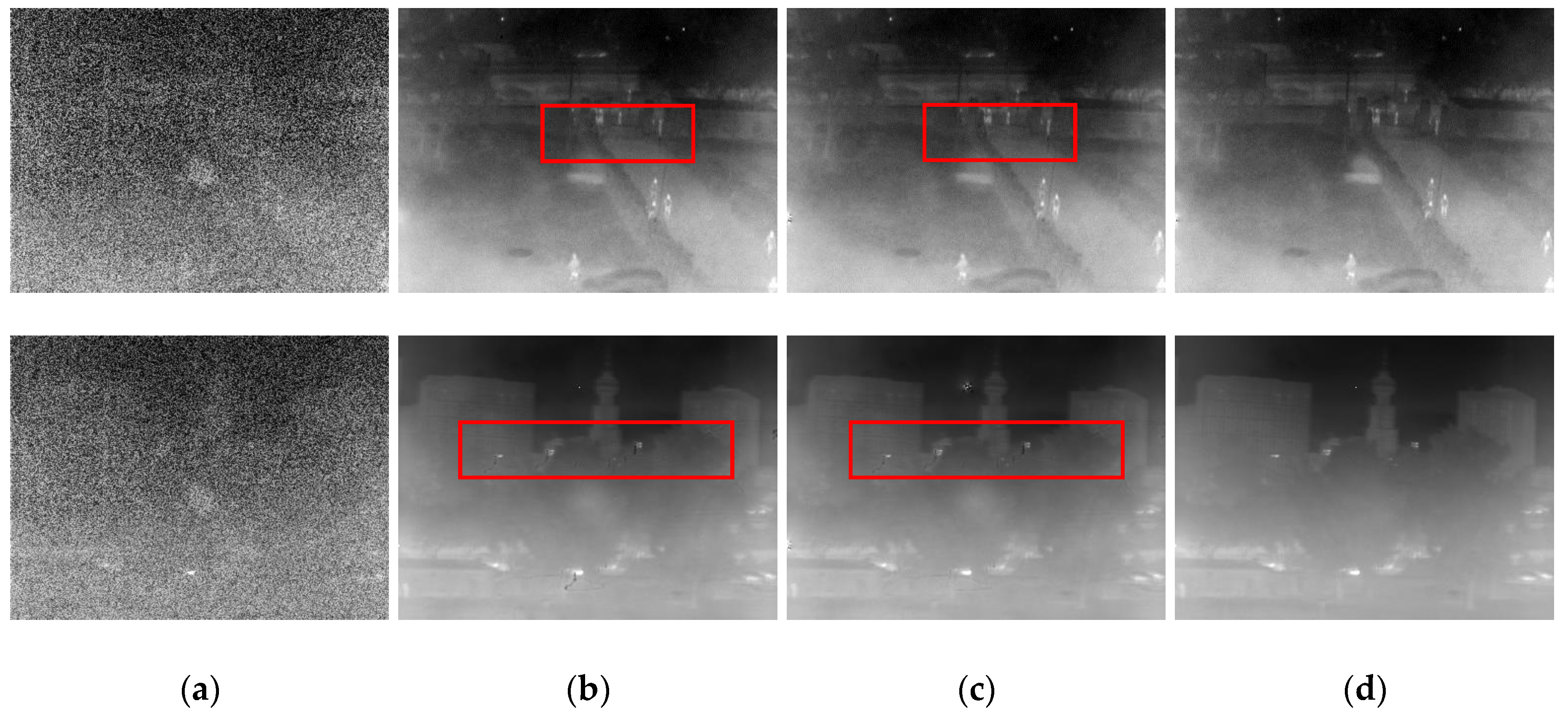

4.1. Nonuniformity Correction with Simulated Data

4.2. Nonuniformity Correction with Real Data

5. Efficiency Analysis

6. Conclusions

Supplementary Materials

Acknowledgments

Author Contributions

Conflicts of Interest

| Method | Gated–LMS | ST–LMS | Ours |
|---|---|---|---|
| Time (s) | 0.0512 | 0.4627 | 0.7472 |

| Method | Logical Operation | Adding | Multiplication | Division |
|---|---|---|---|---|
| Gated–LMS | 2 | 19 | 8 | 4 |
| ST–LMS | 77 | 58 | 8 | 4 |
| Ours | 161 | 131 | 19 | 5 |

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Li, Y.; Jin, W.; Zhu, J.; Zhang, X.; Li, S. An Adaptive Deghosting Method in Neural Network-Based Infrared Detectors Nonuniformity Correction. Sensors 2018, 18, 211. https://doi.org/10.3390/s18010211