1. Introduction

A typical galvanometric laser scanner consists of two rotatable mirrors, each driven by a limited-rotation motor. The incoming laser beam is deflected by the mirrors, whose orientations are uniquely determined by the control voltages applied to the two motors; hence there is a one-to-one mapping between the two input voltage signals and the outgoing laser beam.

Owing to their high deflection speed, high positioning repeatability, low cost, and simple structure, galvanometric laser scanning (GLS) systems are widely used as the key component of devices for diverse applications, such as laser marking [1,2], laser projection [3,4], optical metrology [5,6], material processing [7,8,9,10], and medical imaging [11,12].

These GLS-based applications can be classified into two categories: forward applications and backward applications. In forward applications, e.g., laser triangulation scanning, pre-defined control voltages are input and the 3D coordinates of the laser spot where the outgoing beam hits the object surface must be solved. In backward applications, e.g., laser material processing and laser marking, the position of the laser spot on the object surface is pre-defined and the control voltages must be solved accordingly. An essential problem in both kinds of application is how to establish a system model that accurately describes the relationship between input and output; establishing this model is known as system calibration.

Most existing GLS calibration methods construct a model with a set of real structural parameters that reflect the working mechanism of the device. Methods of this kind are called physical-model-based calibration. Since a GLS system does not have a single center of projection, its physical model is relatively complex and suffers from distortion. As a representative physical-model-based method, Manakov et al. [13] presented a complicated model containing up to 26 physical parameters to predict the distortions caused by installation errors. Even so, not all influencing factors are included. In fact, for such a complex device, system errors are difficult to eliminate by adding parameters, since too many parameters lead to hard, non-convex optimization problems with a growing risk of local minima. To compensate for the system error of physical models, various distortion correction mechanisms have been proposed [14,15,16]. However, these distortion correction methods are applicable only if the object hit by the laser beam is planar [17], or they work only for a limited set of outgoing rays [18]. Alternatively, Cui et al. [19] adapted the distortion model of a pinhole camera to represent the GLS system. This method depends heavily on the similarity between a GLS system and a camera. Since a real GLS system does not have an optical center as a camera does, the method still needs many optimization parameters to ensure calibration accuracy; in other words, it suffers from the same optimization problems as the physical-model-based methods just mentioned.

A more reliable and flexible approach is to use statistical learning methods (e.g., artificial neural networks, support vector machines) to approximate a model function irrespective of the specific system construction. Such methods establish universal regression models to describe the complex relations to be calibrated and are therefore called universal-model-based methods. The many variables in a universal model have no specific physical meaning and are usually determined by supervised learning from a training data set, so these methods are also called data-driven methods. Along this line, Wissel et al. [20] calibrated a galvanometric triangulation device in which a GLS system and a camera form a fixed triangulation structure. However, their calibration result applies only to that specific triangulation setup for measuring the shape of 3D objects and cannot be used for the backward applications mentioned above. In general, universal-model-based methods adapt better to different hardware structures and can achieve higher calibration accuracy. However, they are often criticized for low learning efficiency, because they usually require a large amount of training data and a time-consuming iterative training procedure.

Based on the fact that each pair of voltages corresponds to exactly one outgoing ray, we establish a Single Hidden Layer Feedforward Neural Network (SLFN) [21] to model the system. The SLFN takes the pair of voltage signals directly as input and the parameters of the outgoing laser beam as output. To facilitate training data collection, a linear moving mechanism is employed. To train the established model efficiently, we introduce the Extreme Learning Machine (ELM) [22,23,24], a learning method designed specifically for SLFNs. ELM does not adjust the connection weights between the input layer and the hidden layer during training, and once the number of hidden neurons is chosen it yields a unique optimal solution. Within the ELM framework, calibrating the SLFN model only requires solving a linear system in closed form. The calibration results can be conveniently used for both forward and backward applications.

2. Materials and Methods

2.1. System Calibration Configuration

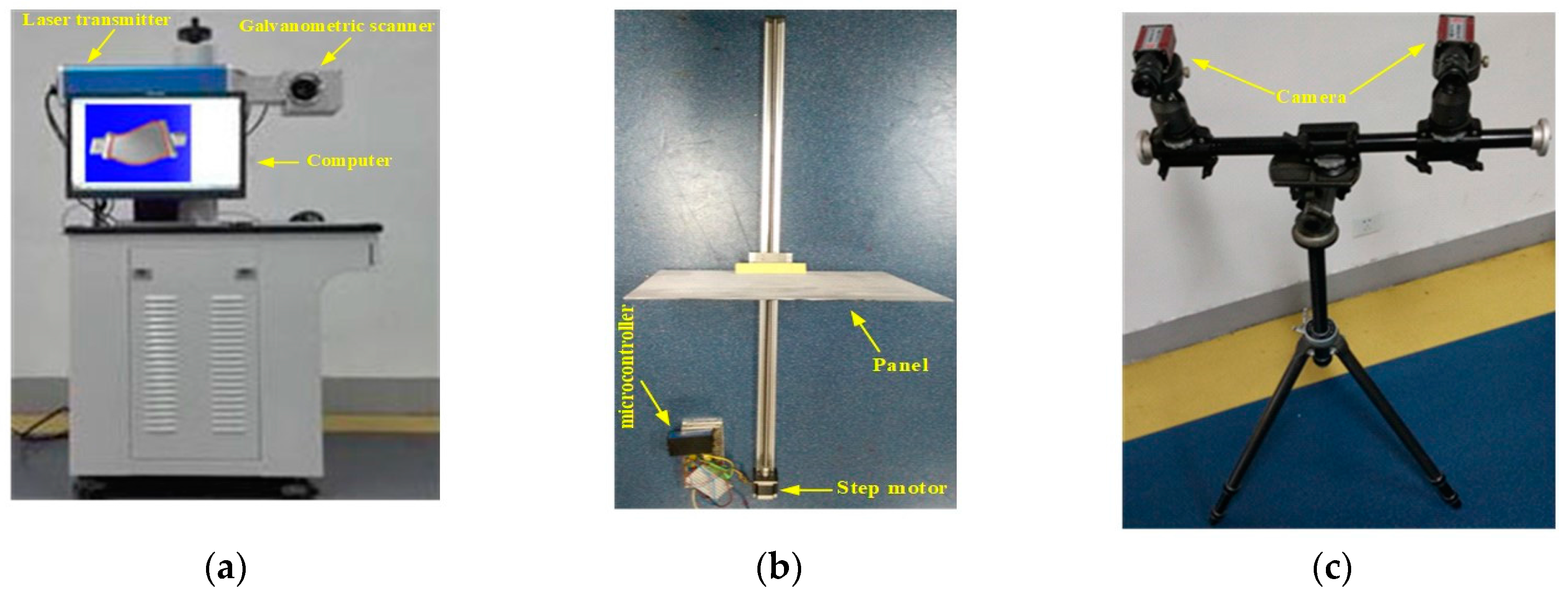

As shown in Figure 1, the setup used in the calibration procedure is composed of three parts: the GLS system, the binocular system, and the moving mechanism. The GLS system consists of a laser transmitter, a double-mirror galvanometric scanner, and a control board. The transmitter emits a laser beam, which is deflected by the galvanometric scanner, consisting of two perpendicular mirrors each mounted on its own galvanometer motor. The control board switches the laser transmitter on and off and sets the rotation angles of the two mirrors. A smooth panel, a stepping motor, a ball screw, and a microcontroller constitute the moving mechanism. The panel, coated with flat black lacquer, is fixed on the ball screw by a special clamp. The microcontroller controls the stepping motor, which rotates the ball screw so that the panel translates within the field of view of the binocular system. With rapid deflection by the galvanometer, the laser beam forms a grid of laser spots on the panel, and the 3D coordinates of these spots are obtained by the binocular system for calibration. All the signals controlling the three parts are sent by the same computer.

Figure 2 illustrates the specific hardware used in the calibration experiment. The GLS system uses a low-cost 520 nm semiconductor laser and a TSH8050A/D galvanometer (Century Sunny, Beijing, China). Both the laser transmitter and the galvanometric scanning head are controlled by a GT-400-Scan control board (GuGao, Shenzhen, China).

The binocular system consists of two MG 419B CMOS cameras (Schneider-Kreuznach, Bad Kreuznach, Germany), two 35 mm lenses, and a tripod. The software for the whole calibration process runs on a personal computer with a 3.1 GHz processor and 8 GB of RAM.

2.2. Calibration of GLS System

For convenience, we first introduce the notation used in this paper. We use $O_c$-$X_cY_cZ_c$ to denote the camera coordinate system. Denote the digital voltage value applied to the motor of the first mirror as $u_x$ and that of the second mirror as $u_y$; the symbol $\mathbf{u} = (u_x, u_y)^T$ represents the two-dimensional (2D) digital control voltages. The outgoing laser beam corresponding to a specific $\mathbf{u}$ is denoted $L$, and $\mathbf{l} = (\mathbf{n}^T, \mathbf{p}^T)^T$ represents the six-dimensional (6D) vector of $L$ in $O_c$-$X_cY_cZ_c$, where $\mathbf{n} = (n_x, n_y, n_z)^T$ is the direction of $L$ and $\mathbf{p} = (p_x, p_y, p_z)^T$ is a point on $L$. The mapping between the 2D digital control voltages and the 6D vector is denoted $\mathbf{l} = f(\mathbf{u})$.

2.2.1. The SLFN Model

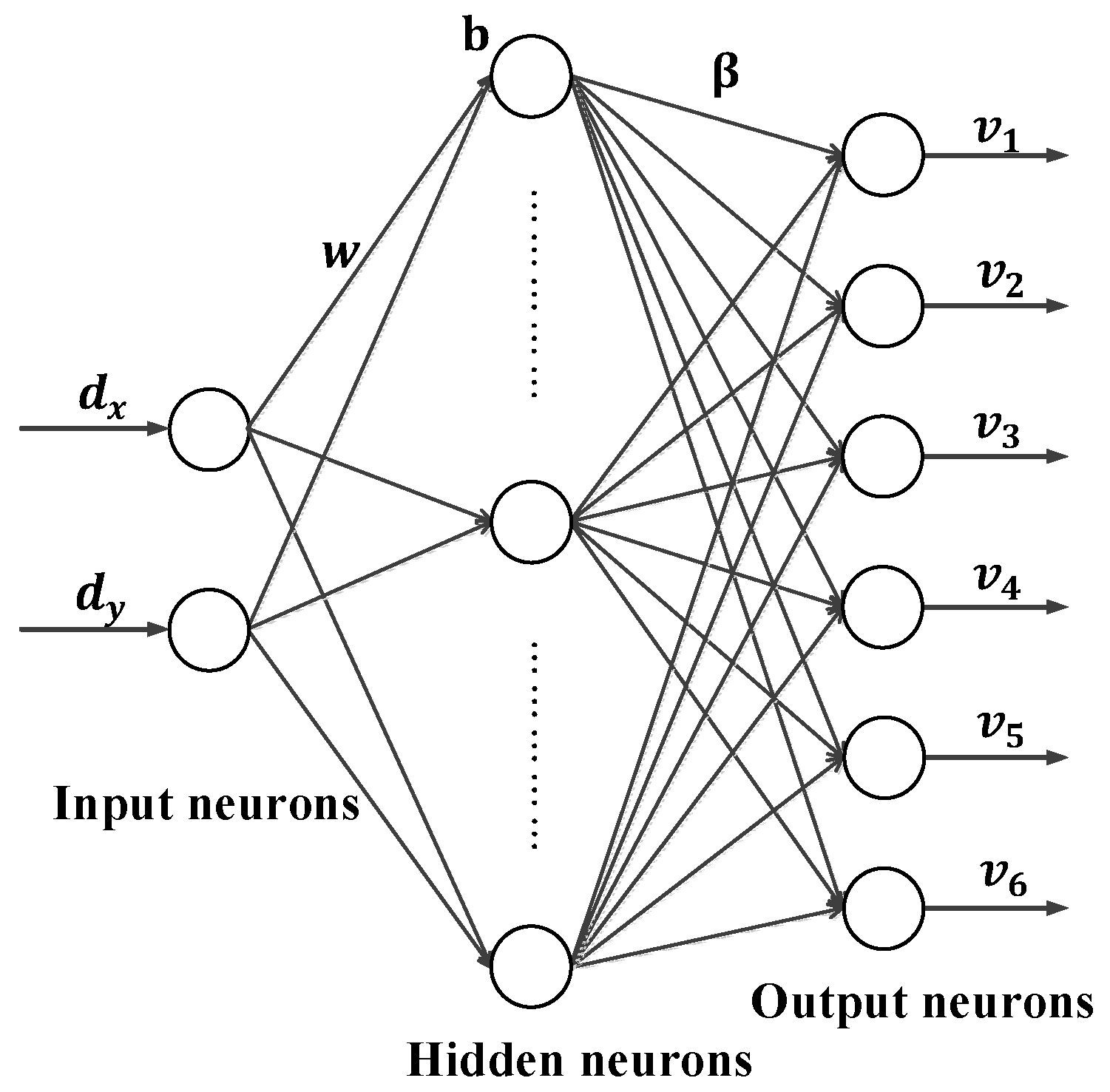

Considering the complexity of the GLS system, we treat system modelling as a machine learning problem. More specifically, an SLFN, as shown in Figure 3, is used to model the 2D-to-6D mapping $f$. The input layer has two neurons, which take the voltage signals $u_x$ and $u_y$, respectively. The output layer contains six neurons, which output the components $n_x$, $n_y$, $n_z$, $p_x$, $p_y$ and $p_z$ of $\mathbf{l}$, respectively. Denote the number of neurons in the hidden layer as $\tilde{N}$.

The model of $f$ is formulated as:

$$f(\mathbf{u}) = \sum_{k=1}^{\tilde{N}} \boldsymbol{\beta}_k \, g(\mathbf{w}_k \cdot \mathbf{u} + b_k) \tag{1}$$

where $\mathbf{w}_k = (w_{k1}, w_{k2})^T$ is the input weight vector connecting the $k$-th hidden neuron and the input neurons, $\boldsymbol{\beta}_k = (\beta_{k1}, \ldots, \beta_{k6})^T$ is the output weight vector connecting the $k$-th hidden neuron and the output neurons, and $b_k$ is the bias of the $k$-th hidden neuron. The activation function $g(\cdot)$ is taken as the sigmoid function:

$$g(x) = \frac{1}{1 + e^{-x}} \tag{2}$$

After the SLFN model has been trained, the 6D vector $\mathbf{l}$ corresponding to an arbitrary $\mathbf{u}$ can easily be obtained by Equation (1). The following two subsections describe the training method in detail.
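To make Equations (1) and (2) concrete, the following is a minimal NumPy sketch of the SLFN forward pass. It assumes the digital voltages have already been normalized to a small range such as [-1, 1]; the function names, the hidden-layer size, and the random parameters are hypothetical placeholders, not the trained values from this work.

```python
import numpy as np

def sigmoid(x):
    """Sigmoid activation g(x) = 1 / (1 + exp(-x)), Equation (2)."""
    return 1.0 / (1.0 + np.exp(-x))

def slfn_forward(U, W, b, beta):
    """Evaluate Equation (1) for a batch of voltage pairs.

    U    : (n, 2) normalized digital voltages (u_x, u_y)
    W    : (N_hidden, 2) input weight vectors w_k
    b    : (N_hidden,) hidden biases b_k
    beta : (N_hidden, 6) output weight vectors beta_k
    returns (n, 6) beam vectors ordered as (n_x, n_y, n_z, p_x, p_y, p_z)
    """
    H = sigmoid(U @ W.T + b)  # (n, N_hidden): output of every hidden neuron
    return H @ beta           # weighted sum of hidden outputs, one row per input

# Hypothetical, untrained parameters used only to show the shapes involved.
rng = np.random.default_rng(0)
n_hidden = 50
W = rng.uniform(-1.0, 1.0, (n_hidden, 2))
b = rng.uniform(-1.0, 1.0, n_hidden)
beta = rng.normal(size=(n_hidden, 6))
U = np.array([[0.1, -0.2], [0.3, 0.4]])
L = slfn_forward(U, W, b, beta)
print(L.shape)  # (2, 6)
```

Computing all hidden-neuron outputs as one matrix product is the same structure that later turns training into the linear system of Equation (6).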

2.2.2. Generating Training Data

As shown in Figure 4, a set of control voltages $\{\mathbf{u}_i\}$ is sent to the GLS system to project a grid of laser spots onto the panel. Meanwhile, the panel translates through the field of view of the binocular system and stops at each position $P_j$ ($j = 1, 2, \ldots, M$). The left camera records the image $I^l_j$ of the laser spot grid at position $P_j$, and the right camera records the image $I^r_j$. From the extracted image coordinates $\mathbf{m}^l_{ij}$ of the laser spot $S_{ij}$ in $I^l_j$ and its coordinates $\mathbf{m}^r_{ij}$ in $I^r_j$, the 3D coordinates $\mathbf{X}_{ij}$ of $S_{ij}$ are computed with a binocular stereo vision algorithm. The 6D vector $\mathbf{l}_i$ of the laser beam $L_i$ in $O_c$-$X_cY_cZ_c$ can then be estimated by fitting a line to the 3D points $\mathbf{X}_{ij}$ that share the index $i$, i.e., that lie on the same laser beam $L_i$. The fitting is performed by minimizing the error measure in Equation (3):

$$\min_{\mathbf{l}_i} \; E_i = \sum_{j=1}^{M} d^2(\mathbf{X}_{ij}, L_i) \tag{3}$$

where $d(\mathbf{X}_{ij}, L_i)$ is the distance from the point $\mathbf{X}_{ij}$ to the laser beam $L_i$. Because the points $\mathbf{X}_{ij}$ may contain outliers, the RANSAC method [25] is used to make the line fit robust. In this way, we obtain the 6D vectors $\mathbf{l}_i$ of all the outgoing laser beams. Associating each $\mathbf{l}_i$ with its input control voltages $\mathbf{u}_i$ yields the complete training data set $(\mathbf{u}_i, \mathbf{l}_i)$, $i = 1, 2, \ldots, N$.
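The line-fitting step can be sketched as follows. This is an illustrative NumPy implementation, not the authors' code: a basic RANSAC loop selects inliers using a hypothetical distance threshold and iteration count, and an SVD-based total-least-squares refit on the inliers then minimizes the sum of squared point-to-line distances, in the spirit of Equation (3). The returned vector follows the $(\mathbf{n}, \mathbf{p})$ ordering of the 6D beam vector; the synthetic beam and outliers are made up for the demonstration.

```python
import numpy as np

def point_line_distance(X, p, n):
    """Distances from points X (m, 3) to the line through p with unit direction n."""
    D = X - p
    return np.linalg.norm(D - np.outer(D @ n, n), axis=1)

def fit_line_lsq(X):
    """Total least squares: minimizes the sum of squared point-to-line distances."""
    p = X.mean(axis=0)                # the centroid lies on the optimal line
    _, _, Vt = np.linalg.svd(X - p)
    return p, Vt[0]                   # principal direction, unit length

def fit_beam_ransac(X, threshold=0.5, iters=200, seed=0):
    """RANSAC line fit, then least-squares refit on the largest inlier set.

    threshold is in the units of X (e.g. mm) and is a hypothetical choice.
    Returns the 6D vector (n_x, n_y, n_z, p_x, p_y, p_z) and the inlier mask.
    """
    rng = np.random.default_rng(seed)
    best_mask = None
    for _ in range(iters):
        i, j = rng.choice(len(X), size=2, replace=False)  # minimal sample: 2 points
        d = X[j] - X[i]
        norm = np.linalg.norm(d)
        if norm == 0:
            continue
        mask = point_line_distance(X, X[i], d / norm) < threshold
        if best_mask is None or mask.sum() > best_mask.sum():
            best_mask = mask
    p, n = fit_line_lsq(X[best_mask])
    return np.concatenate([n, p]), best_mask

# Synthetic beam sampled at M = 100 panel positions over about 1 m (mm units).
rng = np.random.default_rng(1)
t = np.linspace(2000.0, 3000.0, 100)[:, None]
true_p, true_n = np.array([10.0, -5.0, 0.0]), np.array([0.1, 0.05, 1.0])
true_n /= np.linalg.norm(true_n)
X = true_p + t * true_n + rng.normal(scale=0.05, size=(100, 3))
X[::20] += 30.0                       # inject 5 gross outliers
l, inliers = fit_beam_ransac(X)
```

The refit matters: the two-point RANSAC hypothesis only selects inliers, while the SVD step uses all of them to estimate the final line.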

2.2.3. Solving the SLFN Model

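Gradient-descent-based methods are commonly used to train neural networks. These methods tune all the parameters $\mathbf{w}_k$, $b_k$ and $\boldsymbol{\beta}_k$ of the network iteratively over many steps. Because the number of parameters is usually large, the gradient computation is costly, so training is time-consuming and may even converge to a local minimum. To establish the 2D-to-6D mapping $f$ efficiently, we adopt the extreme learning machine (ELM) [22,23] to solve the model in Equation (1).

Given $Q$ arbitrary samples $(\mathbf{u}_i, \mathbf{l}_i)$, $i = 1, 2, \ldots, Q$, the SLFN with $\tilde{N}$ hidden neurons in Equation (1) should satisfy:

$$\sum_{k=1}^{\tilde{N}} \boldsymbol{\beta}_k \, g(\mathbf{w}_k \cdot \mathbf{u}_i + b_k) = \mathbf{l}_i, \quad i = 1, 2, \ldots, Q \tag{5}$$

Equation (5) can be written compactly as:

$$\mathbf{H}\boldsymbol{\beta} = \mathbf{T} \tag{6}$$

where:

$$\mathbf{H} = \begin{bmatrix} g(\mathbf{w}_1 \cdot \mathbf{u}_1 + b_1) & \cdots & g(\mathbf{w}_{\tilde{N}} \cdot \mathbf{u}_1 + b_{\tilde{N}}) \\ \vdots & \ddots & \vdots \\ g(\mathbf{w}_1 \cdot \mathbf{u}_Q + b_1) & \cdots & g(\mathbf{w}_{\tilde{N}} \cdot \mathbf{u}_Q + b_{\tilde{N}}) \end{bmatrix}_{Q \times \tilde{N}}, \quad \boldsymbol{\beta} = \begin{bmatrix} \boldsymbol{\beta}_1^T \\ \vdots \\ \boldsymbol{\beta}_{\tilde{N}}^T \end{bmatrix}_{\tilde{N} \times 6}, \quad \mathbf{T} = \begin{bmatrix} \mathbf{l}_1^T \\ \vdots \\ \mathbf{l}_Q^T \end{bmatrix}_{Q \times 6}$$

Given any small positive value $\varepsilon$ and randomly chosen $\mathbf{w}_k$ and $b_k$, if the activation function $g(\cdot)$ is infinitely differentiable, there exist $\tilde{N} \le Q$ hidden nodes such that, for $Q$ arbitrary distinct samples, $\|\mathbf{H}\boldsymbol{\beta} - \mathbf{T}\| < \varepsilon$ holds with probability one [24]. Based on this result, we randomly assign the input weights $\mathbf{w}_k$ and the hidden-layer biases $b_k$. The hidden-layer output matrix $\mathbf{H}$ in Equation (6) is then fully determined, and training the model in Equation (1) reduces to solving a linear system. The output weights $\boldsymbol{\beta}$ are determined by:

$$\hat{\boldsymbol{\beta}} = \mathbf{H}^{\dagger}\mathbf{T}$$

where $\mathbf{H}^{\dagger}$ is the Moore–Penrose generalized inverse of $\mathbf{H}$. This is the minimum-norm least-squares solution of the linear system. The solved $\hat{\boldsymbol{\beta}}$, together with the randomly chosen $\mathbf{w}_k$ and $b_k$, completely determines the model in Equation (1).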
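The closed-form ELM training described above can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions: the inputs are normalized voltages, $\mathbf{w}_k$ and $b_k$ are drawn uniformly from [-1, 1] (one common choice; the distribution used in this work is not stated), `np.linalg.pinv` supplies the Moore–Penrose inverse, and `toy_beam` is a hypothetical smooth map used only to exercise the code, not a model of a real GLS system.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def elm_train(U, T, n_hidden, seed=0):
    """ELM: random hidden layer, closed-form output weights beta = pinv(H) @ T.

    U: (Q, 2) training voltages; T: (Q, 6) fitted beam vectors l_i.
    """
    rng = np.random.default_rng(seed)
    W = rng.uniform(-1.0, 1.0, size=(n_hidden, U.shape[1]))  # random input weights w_k
    b = rng.uniform(-1.0, 1.0, size=n_hidden)                 # random hidden biases b_k
    H = sigmoid(U @ W.T + b)                                  # (Q, n_hidden), as in Eq. (6)
    beta = np.linalg.pinv(H) @ T                              # minimum-norm least squares
    return W, b, beta

def elm_predict(U, W, b, beta):
    return sigmoid(U @ W.T + b) @ beta

def toy_beam(U):
    """Hypothetical smooth voltage-to-6D map, for demonstration only."""
    x, y = U[:, 0], U[:, 1]
    return np.column_stack([np.sin(x), np.sin(y), np.cos(x) * np.cos(y),
                            x ** 2, y, x * y])

rng = np.random.default_rng(42)
U_train = rng.uniform(-1.0, 1.0, size=(810, 2))   # Q = 810 training samples
U_test = rng.uniform(-1.0, 1.0, size=(90, 2))
W, b, beta = elm_train(U_train, toy_beam(U_train), n_hidden=40)
test_rmse = np.sqrt(np.mean((elm_predict(U_test, W, b, beta) - toy_beam(U_test)) ** 2))
print(f"test RMSE on toy map: {test_rmse:.2e}")
```

Because only $\boldsymbol{\beta}$ is solved for, the training cost is dominated by one pseudo-inverse of a $Q \times \tilde{N}$ matrix, with no iterative weight tuning.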

2.3. Validations

To verify the accuracy and efficiency of the proposed calibration method, we perform a cross-validation experiment and a target-shooting experiment. Moreover, we demonstrate both a forward and a backward application based on the calibrated mapping $f$. The success of these applications demonstrates the broad applicability of the proposed method.

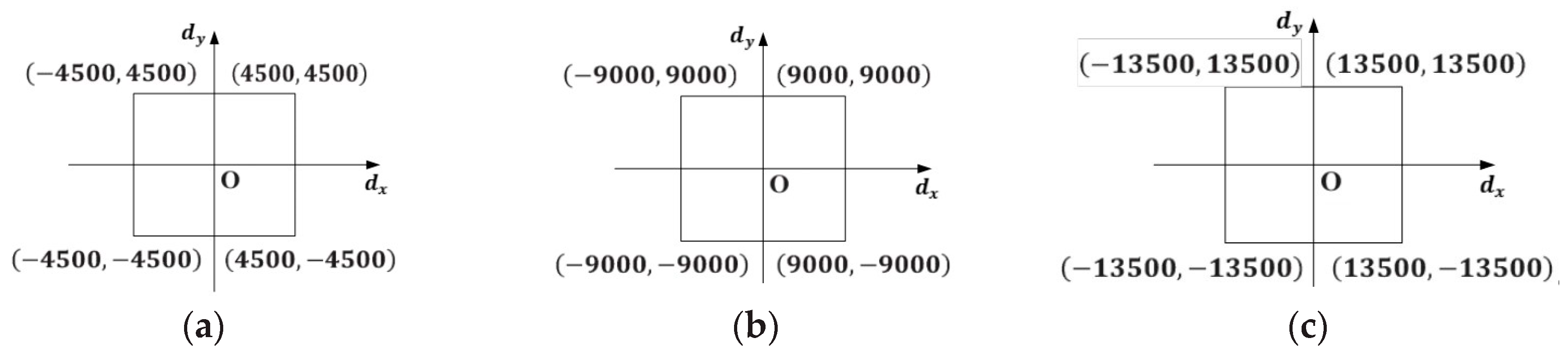

2.3.1. Cross Validation

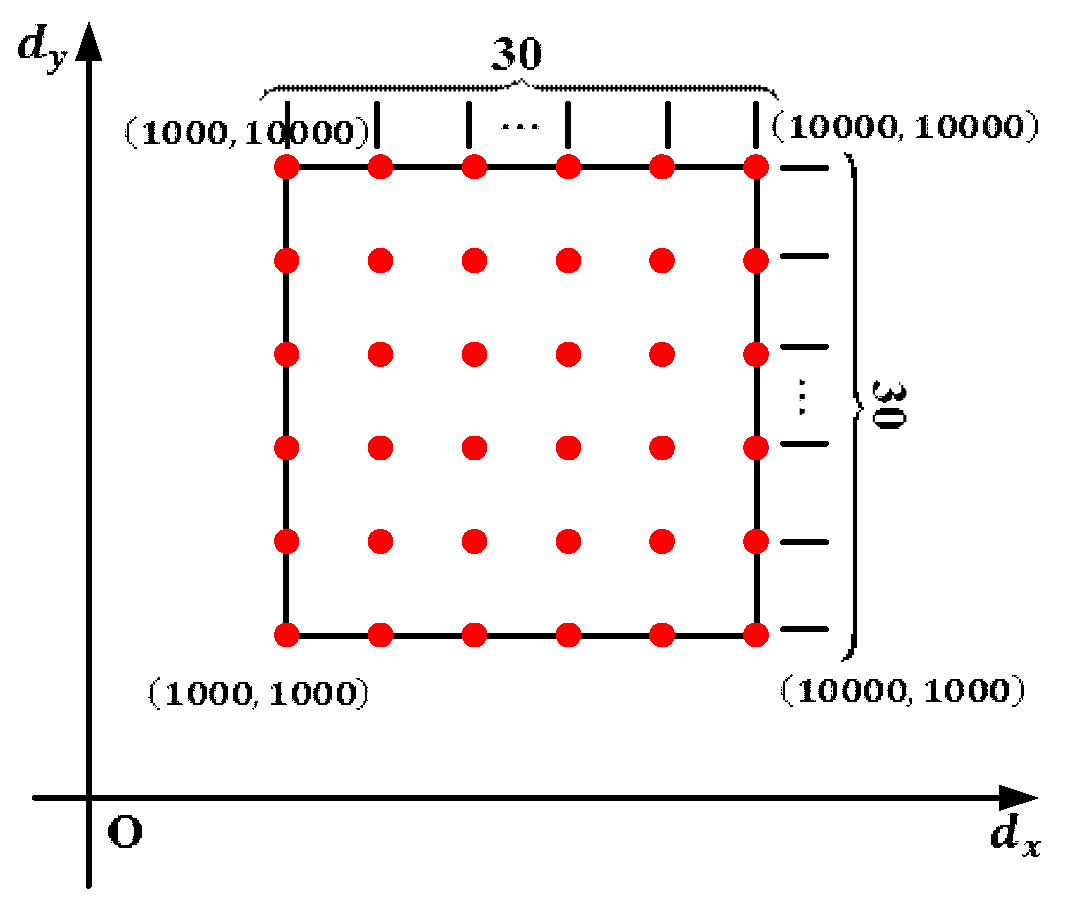

Nine hundred (900) pairs of digital voltages $\mathbf{u}_i$ are input to generate 900 outgoing laser beams in the field of view of the binocular system. The 900 pairs of digital voltages are uniformly spaced on the virtual digital plane, as shown in Figure 5. Following the method in Section 2.2.2, the sample data set $(\mathbf{u}_i, \mathbf{l}_i)$, $i = 1, 2, \ldots, 900$, is obtained. Here the number of positions $M$ is set to 100. The distance between the first position $P_1$ and the last position $P_M$ is about 1 m, and the first position $P_1$ is approximately 2 m from the GLS system. With the moving mechanism, the whole sampling procedure takes less than 10 min.

To evaluate the performance of the new calibration method, 10-fold cross-validation is adopted. We randomly draw one-tenth of the sample data as test data and use the rest to train the SLFN model by the method in Section 2.2.3. For any input voltages $\mathbf{u}_i$ in the test data set, the corresponding outgoing beam $L_i$ is represented by the 6D vector $\mathbf{l}_i$, which is fitted by the process in Section 2.2.2.
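The 10-fold split can be sketched as below, assuming NumPy and a fixed random seed for reproducibility (the seed and function name are hypothetical). With the 900 samples of this experiment, each fold holds 90 test samples and 810 training samples, which matches the largest training-set size $Q = 810$ used in this section.

```python
import numpy as np

def ten_fold_splits(n_samples, n_folds=10, seed=0):
    """Yield (train_idx, test_idx) pairs; every sample is tested exactly once."""
    rng = np.random.default_rng(seed)
    folds = np.array_split(rng.permutation(n_samples), n_folds)
    for k in range(n_folds):
        test_idx = folds[k]
        train_idx = np.concatenate([folds[m] for m in range(n_folds) if m != k])
        yield train_idx, test_idx

splits = list(ten_fold_splits(900))
print(len(splits), len(splits[0][0]), len(splits[0][1]))  # 10 810 90
```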

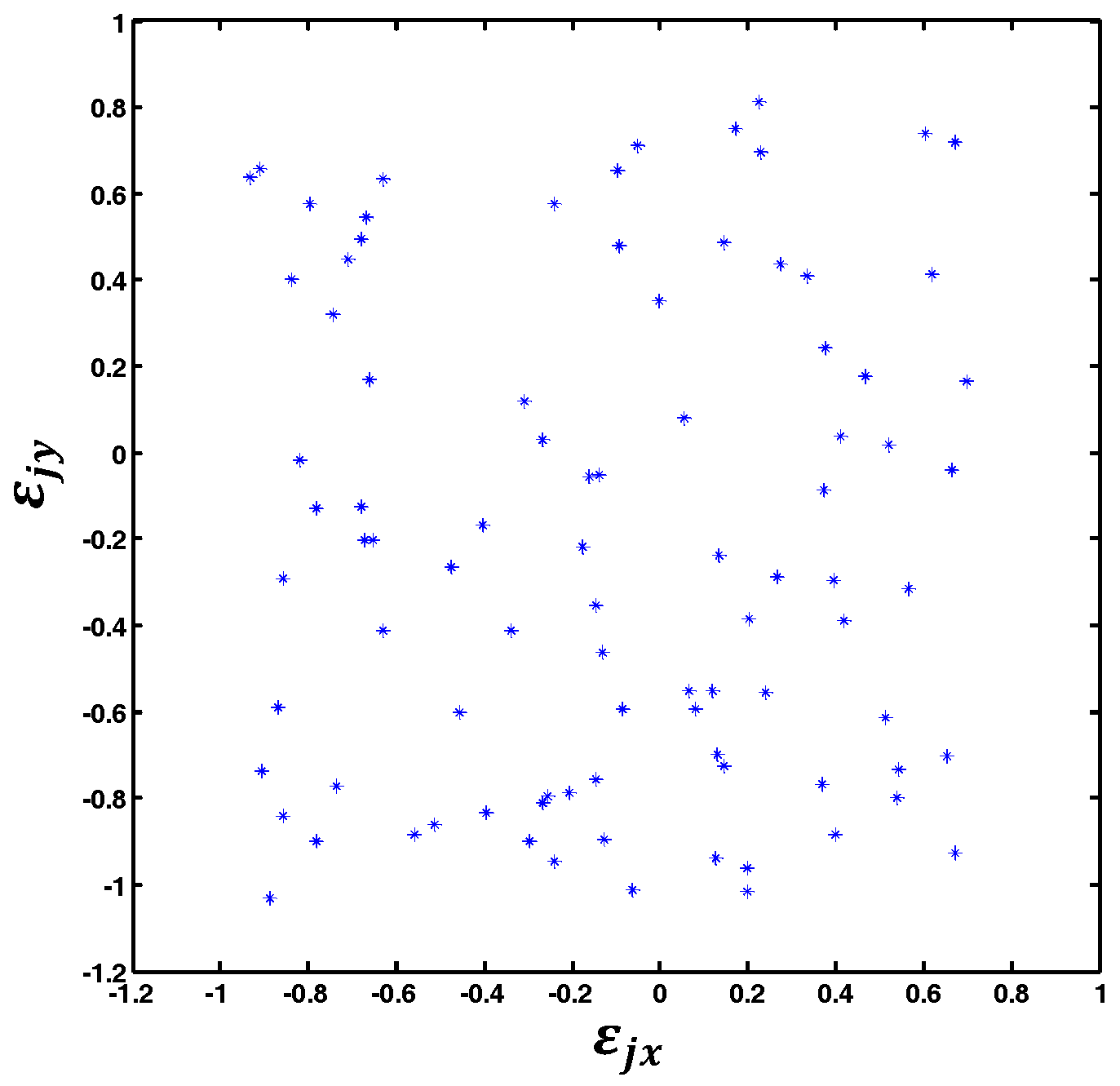

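Meanwhile, we calculate a 6D vector $\hat{\mathbf{l}}_i$ for every $\mathbf{u}_i$ through the established mapping $f$. If the error of the established mapping tends to zero, the beam $L_i$ and the beam $\hat{L}_i$ determined by $\hat{\mathbf{l}}_i$ should be the same line. However, the difference between $\mathbf{l}_i$ and $\hat{\mathbf{l}}_i$ is difficult to evaluate directly, so an equivalent evaluation in voltage space is introduced here.

The input digital voltages $\hat{\mathbf{u}}_i$ corresponding to the spatial beam $L_i$ are given by:

$$\hat{\mathbf{u}}_i = \arg\min_{\mathbf{u}} \sum_{j=1}^{M} d^2\big(\mathbf{X}_{ij}, f(\mathbf{u})\big)$$

where $d(\mathbf{X}_{ij}, f(\mathbf{u}))$ is the distance from the point $\mathbf{X}_{ij}$ to the laser beam corresponding to the digital voltages $\mathbf{u}$, and $\mathbf{X}_{ij}$ is a point on the beam $L_i$. The difference between $\hat{\mathbf{u}}_i$ and $\mathbf{u}_i$ is denoted $\Delta\mathbf{u}_i$ and defined by Equation (8). In the ideal situation where $\hat{L}_i$ and $L_i$ coincide exactly, $\Delta\mathbf{u}_i$ is a zero vector:

$$\Delta\mathbf{u}_i = \hat{\mathbf{u}}_i - \mathbf{u}_i \tag{8}$$

We then use the root mean squared error (RMSE) in Equation (9) to estimate the error of the calibrated mapping $f$. The smaller the RMSE, the higher the calibration accuracy:

$$\mathrm{RMSE} = \sqrt{\frac{1}{N_t}\sum_{i=1}^{N_t} \left\|\Delta\mathbf{u}_i\right\|^2} \tag{9}$$

where $N_t$ is the number of test samples.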
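The voltage-space evaluation in Equations (8) and (9) requires inverting the calibrated mapping for each test beam. One possible sketch, assuming NumPy and a normalized voltage range of [-1, 1], uses a coarse grid search followed by a compass (pattern) search. The optimizer is not specified in this work, so this solver, the hypothetical stand-in mapping `toy_f`, and the synthetic beam points are illustrations only.

```python
import numpy as np

def dist_to_beam(X, l):
    """Distances from points X (m, 3) to the beam with 6D vector l = (n, p)."""
    n = l[:3] / np.linalg.norm(l[:3])
    D = X - l[3:]
    return np.linalg.norm(D - np.outer(D @ n, n), axis=1)

def invert_mapping(f, X, lo=-1.0, hi=1.0, grid=41, iters=60):
    """Approximate argmin_u sum_j d^2(X_j, f(u)): grid search, then compass search."""
    def cost(u):
        return np.sum(dist_to_beam(X, f(u)) ** 2)
    axis = np.linspace(lo, hi, grid)
    u = min((np.array([a, c]) for a in axis for c in axis), key=cost)
    best, step = cost(u), (hi - lo) / (grid - 1)
    for _ in range(iters):
        improved = False
        for du in ((step, 0.0), (-step, 0.0), (0.0, step), (0.0, -step)):
            cand = u + np.array(du)
            c = cost(cand)
            if c < best:
                u, best, improved = cand, c, True
        if not improved:
            step /= 2.0  # no neighbour improves the cost: refine the step size
    return u

def voltage_rmse(U_true, U_hat):
    """Equations (8)-(9): RMSE of delta u_i = u_hat_i - u_i over the test samples."""
    delta = U_hat - U_true
    return np.sqrt(np.mean(np.sum(delta ** 2, axis=1)))

def toy_f(u):
    """Hypothetical stand-in for the calibrated mapping f (not a real GLS model)."""
    return np.array([0.5 * u[0], 0.5 * u[1], 1.0, 10.0 * u[0], 0.0, 0.0])

u_true = np.array([0.31, -0.27])
l_true = toy_f(u_true)
n_true = l_true[:3] / np.linalg.norm(l_true[:3])
X = l_true[3:] + np.linspace(2000.0, 3000.0, 5)[:, None] * n_true  # points on L_i
u_hat = invert_mapping(toy_f, X)
print(voltage_rmse(u_true[None, :], u_hat[None, :]))
```

The coarse grid guards against starting in a poor basin; the compass search then refines without needing gradients of $f$.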

When solving the SLFN model by the method in Section 2.2.3, one unavoidable problem is determining the number of hidden neurons $\tilde{N}$ in the SLFN. To investigate the influence of $\tilde{N}$ on the calibration accuracy, we also examined how the regression error (RMSE) varies as $\tilde{N}$ increases in equal steps. Considering the possible influence of the number of training samples $Q$ on this trend, three groups of tests with different numbers of training samples ($Q = 810, 405, 270$) were conducted.

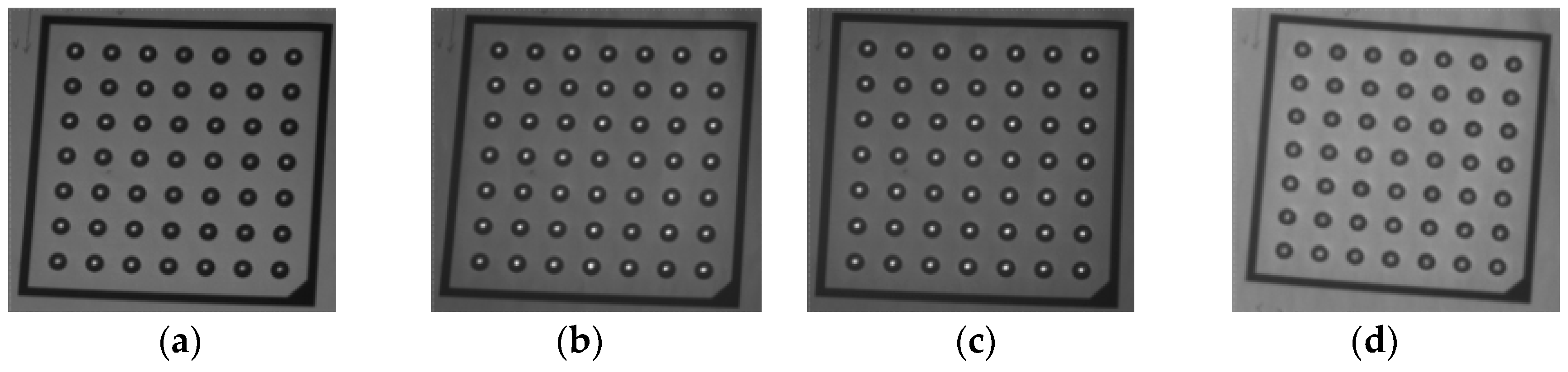

2.3.2. Target Shooting

To further verify the proposed calibration method, we design a pattern of 49 target circles (shown in Figure 6a) to be shot by the laser beam of the calibrated GLS system. The pattern is placed in the field of view of the binocular system, and the 3D coordinates $\mathbf{C}_i$ ($i = 1, 2, \ldots, 49$) of the circle centers are obtained by the binocular system. Using the calibrated mapping $f$ and the coordinates $\mathbf{C}_i$, the digital voltages $\mathbf{u}_i$ are obtained by:

$$\mathbf{u}_i = \arg\min_{\mathbf{u}} d\big(\mathbf{C}_i, f(\mathbf{u})\big)$$

where $d(\mathbf{C}_i, f(\mathbf{u}))$ is the distance from the 3D point $\mathbf{C}_i$ to the spatial beam $f(\mathbf{u})$, i.e., the laser beam corresponding to the digital voltages $\mathbf{u}$. Using these digital voltages, we control the GLS system to shoot the target circles, as shown in Figure 6b. The coordinates $\mathbf{S}_i$ of the laser spot centers on the pattern are also obtained by the binocular system. The standard deviation of the distances $\delta_i = \|\mathbf{S}_i - \mathbf{C}_i\|$ is then used to measure the shooting precision, as shown in Figure 6c.
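The shooting-precision measure reduces to a few lines of NumPy. In this sketch, whether the standard deviation is the sample (`ddof=1`) or population version is an assumption, since the text does not say; the four points are toy data rather than measurements.

```python
import numpy as np

def shooting_precision(C, S):
    """Distances delta_i = ||S_i - C_i|| and their standard deviation.

    C: (n, 3) target-circle centers; S: (n, 3) laser-spot centers, same frame.
    ddof=1 (sample standard deviation) is an assumed convention.
    """
    dist = np.linalg.norm(np.asarray(S) - np.asarray(C), axis=1)
    return dist, dist.std(ddof=1)

C = np.zeros((4, 3))
S = np.array([[1.0, 0.0, 0.0], [0.0, 2.0, 0.0], [0.0, 0.0, 3.0], [0.0, 0.0, 0.0]])
dist, sigma = shooting_precision(C, S)
```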

2.3.3. 3D Reconstruction

3D reconstruction is a representative forward application of the GLS system. In this application, the input digital voltages are pre-defined and the 3D coordinates of the laser spots on the object must be solved. Specifically, digital voltages $\mathbf{u}_i$, $i = 1, 2, \ldots, N_r$ ($N_r$ is the number of digital signals), are input to control the GLS system to project $N_r$ laser spots onto the surface of the object to be reconstructed, and an image of the laser spots is then taken by the left camera. Using the extracted pixel coordinates $(x_i, y_i)$ of the laser spots and the intrinsic parameters of the left camera, we obtain a set of straight lines $R_i$ through the origin of the camera coordinate system by Equation (11):

$$\mathbf{r}_i = \mathbf{K}^{-1}\begin{bmatrix} x_i \\ y_i \\ 1 \end{bmatrix} \tag{11}$$

where $\mathbf{K}$ is the intrinsic parameter matrix of the left camera and $\mathbf{r}_i$ is the direction vector of $R_i$. The vector $\mathbf{l}_i$ of the laser beam $L_i$ passing through the spot is easily obtained by substituting the input digital voltages $\mathbf{u}_i$ into the calibrated mapping $f$. We then use the coordinates $\mathbf{X}_i$ of the midpoint of the common perpendicular between $R_i$ and $L_i$ to represent the intersection of the two lines, i.e., the 3D coordinates of the laser spot. In this way, we obtain the coordinates $\mathbf{X}_i$ of all the laser spots on the surface.
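The two geometric steps above, back-projecting a pixel with Equation (11) and taking the midpoint of the common perpendicular between the camera ray and the laser beam, can be sketched as follows. The intrinsic matrix, the laser-beam point, and the spot coordinates are hypothetical values chosen so that the two lines intersect exactly, and lens distortion is ignored.

```python
import numpy as np

def pixel_ray(K, pixel):
    """Equation (11): direction of the camera ray through pixel (x, y)."""
    x, y = pixel
    return np.linalg.solve(K, np.array([x, y, 1.0]))

def common_perpendicular_midpoint(p1, d1, p2, d2):
    """Midpoint of the shortest segment between lines p1 + s*d1 and p2 + t*d2."""
    w0 = p1 - p2
    a, b, c = d1 @ d1, d1 @ d2, d2 @ d2
    d, e = d1 @ w0, d2 @ w0
    denom = a * c - b * b             # zero only when the lines are parallel
    s = (b * e - c * d) / denom       # parameter of the closest point on line 1
    t = (a * e - b * d) / denom       # parameter of the closest point on line 2
    return 0.5 * ((p1 + s * d1) + (p2 + t * d2))

K = np.array([[2000.0, 0.0, 640.0],
              [0.0, 2000.0, 512.0],
              [0.0, 0.0, 1.0]])             # hypothetical intrinsics
X_true = np.array([100.0, -50.0, 2500.0])   # hypothetical laser spot (mm)
r = pixel_ray(K, (720.0, 472.0))            # pixel onto which X_true projects
p_laser = np.array([300.0, 0.0, 0.0])       # hypothetical point on beam L_i
X_rec = common_perpendicular_midpoint(np.zeros(3), r, p_laser, X_true - p_laser)
```

With real data the ray and the beam are skew because of noise, and the midpoint splits the residual gap evenly between them.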

In the real 3D reconstruction experiment, we choose the surface of an engine blade as the object to reconstruct, since it is a free-form surface. For comparison, the engine blade is also reconstructed by the data-driven triangulation method [20]. To evaluate the reconstruction accuracy, the surface is measured in advance with the commercial ATOS system (GOM, Brunswick, Germany). Because the ATOS system has a high measurement accuracy (0.03 mm), we take its measurement result as the ground truth. Using the iterative closest point (ICP) method [26], we register the reconstructed 3D points to the ATOS measurement. We then calculate the RMSE by Equation (12) to quantify the reconstruction error:

$$\mathrm{RMSE} = \sqrt{\frac{1}{N_r}\sum_{i=1}^{N_r} e_i^2} \tag{12}$$

where $e_i$ represents the distance between the 3D point $\mathbf{X}_i$ and the closest point in the point cloud measured by the ATOS system after ICP registration.
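Equation (12) can be sketched with a brute-force nearest-neighbour search over the reference cloud, assuming ICP registration has already been applied. The arrays are toy data; for clouds of realistic size, a spatial index such as `scipy.spatial.cKDTree` would replace the dense distance matrix.

```python
import numpy as np

def nearest_point_rmse(P, Q_ref):
    """Equation (12): RMSE of distances from each reconstructed point in P (n, 3)
    to its closest point in the registered reference cloud Q_ref (m, 3)."""
    d2 = ((P[:, None, :] - Q_ref[None, :, :]) ** 2).sum(axis=2)  # (n, m) squared distances
    e = np.sqrt(d2.min(axis=1))                                     # closest-point distances e_i
    return np.sqrt(np.mean(e ** 2)), e

P = np.array([[0.0, 0.0, 0.0], [1.0, 1.0, 0.0]])
Q_ref = np.array([[0.0, 0.0, 0.3], [1.0, 1.0, -0.4], [5.0, 5.0, 5.0]])
rmse, e = nearest_point_rmse(P, Q_ref)
```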

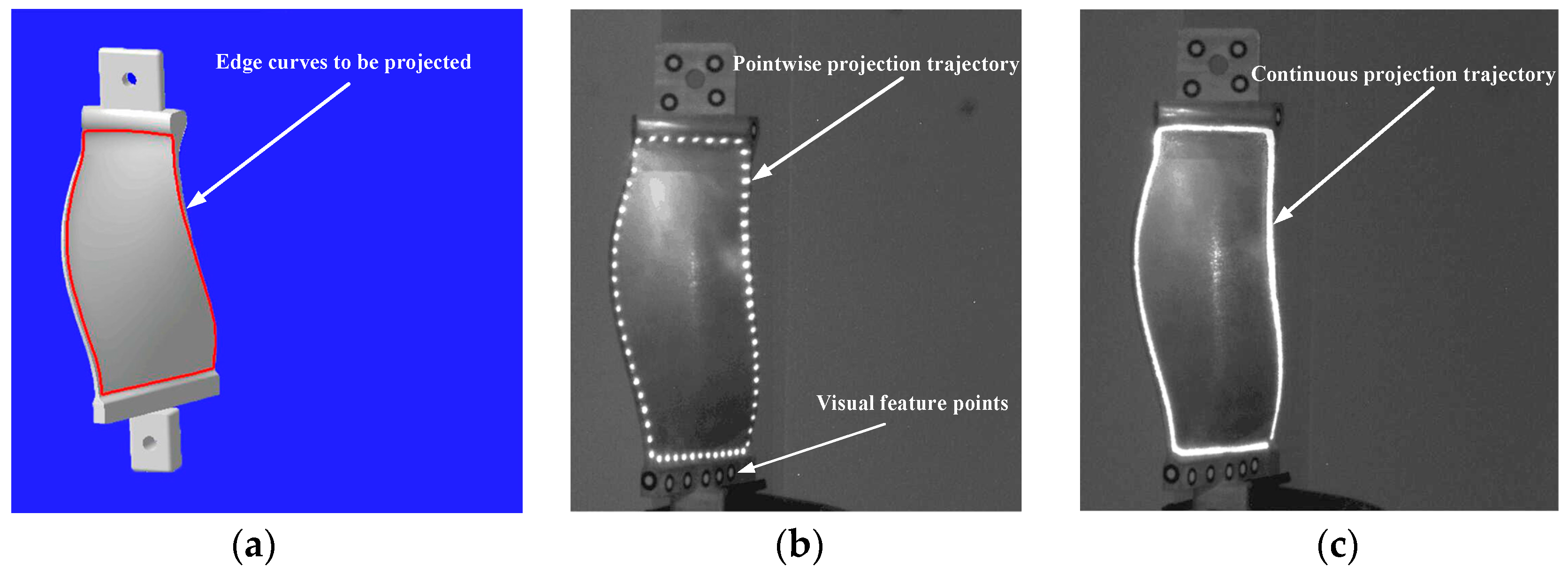

2.3.4. Projection Positioning

Laser projection positioning is a representative backward application of the GLS system. In this application, the position of the laser spot on the 3D object is pre-defined and the digital control voltages must be solved accordingly. In this validation, a series of laser spots is projected onto the edge contour of an object by the calibrated GLS system. The 3D coordinates of the visual feature points placed on the object and the CAD model of the object are measured in advance in the object coordinate system. Using the visual feature points, the coordinates $\mathbf{Y}_i$ ($i = 1, 2, \ldots, N_p$, where $N_p$ is the number of discrete points) of the points on the edge contour in the camera coordinate system are obtained. Using $\mathbf{Y}_i$ and the calibrated mapping $f$, the digital voltages $\mathbf{u}_i$ are obtained by:

$$\mathbf{u}_i = \arg\min_{\mathbf{u}} d\big(\mathbf{Y}_i, f(\mathbf{u})\big)$$

where $d(\mathbf{Y}_i, f(\mathbf{u}))$ is the distance from the 3D point $\mathbf{Y}_i$ to the laser beam corresponding to the digital voltages $\mathbf{u}$. We then send the digital voltages $\mathbf{u}_i$ to the GLS system to project the laser spots along the edge contour of the object.
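A minimal sketch of this inverse step for a sequence of contour points, assuming NumPy and normalized voltages: it solves the minimization with Gauss–Newton on the perpendicular offset vector, using a forward-difference Jacobian, and warm-starts each point from the previous solution along the contour. The solver choice, the hypothetical mapping `toy_f`, and the synthetic contour are illustrations; the method used in this work to solve the minimization is not stated.

```python
import numpy as np

def perp_offset(f, u, Y):
    """Perpendicular offset vector from point Y to the beam f(u) = (n, p)."""
    l = f(u)
    n = l[:3] / np.linalg.norm(l[:3])
    D = Y - l[3:]
    return D - (D @ n) * n

def aim_at_point(f, Y, u0, iters=20, h=1e-6):
    """Gauss-Newton for argmin_u d(Y, f(u)) with a forward-difference Jacobian."""
    u = np.asarray(u0, dtype=float)
    for _ in range(iters):
        r = perp_offset(f, u, Y)
        J = np.column_stack([(perp_offset(f, u + h * e, Y) - r) / h for e in np.eye(2)])
        step, *_ = np.linalg.lstsq(J, -r, rcond=None)  # least-squares Gauss-Newton step
        u = u + step
        if np.linalg.norm(step) < 1e-12:
            break
    return u

def toy_f(u):
    """Hypothetical stand-in for the calibrated mapping f."""
    return np.array([0.5 * u[0], 0.5 * u[1], 1.0, 10.0 * u[0], 0.0, 0.0])

# Hypothetical contour points Y_i, built to lie on known beams about 2.5 m away.
U_true = np.array([[0.20, -0.10], [0.25, -0.05], [0.30, 0.00]])
contour = []
for u in U_true:
    l = toy_f(u)
    contour.append(l[3:] + 2500.0 * l[:3] / np.linalg.norm(l[:3]))

U_hat, u_prev = [], np.zeros(2)
for Y in contour:                    # warm-start from the neighbouring contour point
    u_prev = aim_at_point(toy_f, Y, u_prev)
    U_hat.append(u_prev)
U_hat = np.array(U_hat)
```

Warm-starting exploits the fact that neighbouring contour points usually need similar voltages, which keeps Gauss–Newton in its region of fast convergence.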

In the real projection positioning experiment, we choose the edge of an engine blade as the target to be projected, since the edge is a 3D free-form curve. The CAD contour of the edge is discretized into 131 points, and the 3D coordinates of these points in the binocular coordinate system are obtained from the feature points placed on the surface of the engine blade. The engine blade is about 2.5 m from the GLS system.

4. Discussion

In solving the SLFN model of the GLS system, we determine the number of hidden neurons $\tilde{N}$ based on the investigation in Section 3.1. This value of $\tilde{N}$ is also suitable for other GLS systems or different sampling data sets, since $\tilde{N}$ represents the system complexity, which is constant. The SLFN determined by $\tilde{N}$ is equivalent to the physical model of the GLS system in physical-model-based calibration, whose parameter set does not change for a GLS system with a fixed internal structure. According to the actual error distribution in Section 3.1, we consider that the established SLFN model imitates the real GLS system well. In addition, the target shooting experiment indirectly tests the calibration accuracy at different positions within the sampling region. The good shooting accuracy indicates that the calibration accuracy is consistent over the whole sampling range (1 m). This consistency makes subsequent GLS-based applications less sensitive to the size and position of the experimental object.

A comparison with two other GLS calibration methods was also conducted, and the results are shown in Table 1. The main reason for the poor performance of the physical-model-based method is that the physical model of the GLS system cannot include some influencing factors, such as the nonlinear relation between the mirror rotation angles and the applied digital voltages. Furthermore, its calibration result is sometimes wrong, because it optimizes many parameters and depends strongly on their initial values. Both the LUT method and the proposed method perform better when sampling in the virtual digital plane shown in Figure 9a, but the accuracy of the LUT method declines faster than that of the proposed method as the sampling density decreases. This decline reflects the fact that LUT-based calibration is more sensitive to sampling density than model-based calibration. Essentially, the accuracy at a location in the constructed LUT depends mainly on the neighboring sample points, since the calibration result at that location is obtained by interpolating between them. In contrast, the accuracy of a model-based calibration draws on all the sample data. In addition, constructing a high-resolution lookup table has a higher computational cost (Table 1), because the space vectors corresponding to the non-sampled digital voltages in a given digital area must be calculated by interpolation. As the virtual digital plane (Figure 9) grows in size, the interpolation burden increases significantly, which results in ever-increasing time consumption (Table 1). As described in Section 2.2.3, once the input weights and biases are fixed randomly, solving the model in Equation (1) reduces to a linear system, so the proposed method is much more efficient (only 0.872 s). A shorter training time shortens the whole calibration process, which is a considerable convenience in GLS-based field applications.

In the 3D reconstruction experiment, although the reconstruction accuracy of the proposed method is close to that of the data-driven triangulation method, the proposed method has two clear advantages. First, the training time of our method (0.872 s) is much shorter than that of the data-driven triangulation method (8.16 s). With the same sampling density and sampling region for both methods, fitting the laser spots that belong to the same laser beam to a straight line (the 6D vector) greatly reduces the amount of training data in our method. Fewer training samples mean fewer equations in Equation (6), which markedly reduces the time needed to solve them. The line fitting itself takes 0.801 s in this experiment and is included in the total training time (0.872 s). Second, the calibration result $f$ of our method is the relation between the input digital voltages and the corresponding outgoing laser beam, which is independent of the binocular system, so it applies to both forward and backward applications. In contrast, the calibrated mapping of the data-driven triangulation method [20] includes the 2D image coordinates of the laser spot in its 4D input, which makes it inapplicable to backward applications.

The proposed calibration also applies to the projection positioning of 3D contours, which is widely used in the layup of composite prepreg. Compared with the accuracy requirement for aeronautical composite layup [28], the projection errors (Figure 14b) indicate that the projection positioning experiment satisfies the requirement. As in target shooting, this application simply replaces the target points with the discrete points of the 3D contour to be projected. Therefore, setting aside the machining precision of the impeller to be projected, the target shooting accuracy determines the projection positioning accuracy. The standard deviation calculated in Section 3.4, which represents the positioning precision, is within the expected accuracy range.

Besides the calibration method, the sampling data used to train the system model is another factor that determines calibration precision; in other words, an accurate space vector for each digital voltage pair is important for a good calibration result. To achieve good space-line fitting, the 3D sample points usually need to be collected over a relatively long distance (1 m in this paper). However, the depth of field of the binocular vision system is limited, so the accuracy of 3D sample points outside the depth of field inevitably degrades along with the image quality. In future work, methods such as image deblurring could be used to improve the accuracy of the sampling data.