A Survey on Data Quality for Dependable Monitoring in Wireless Sensor Networks

Abstract

1. Introduction

2. Overview of Key Issues

2.1. Motivation

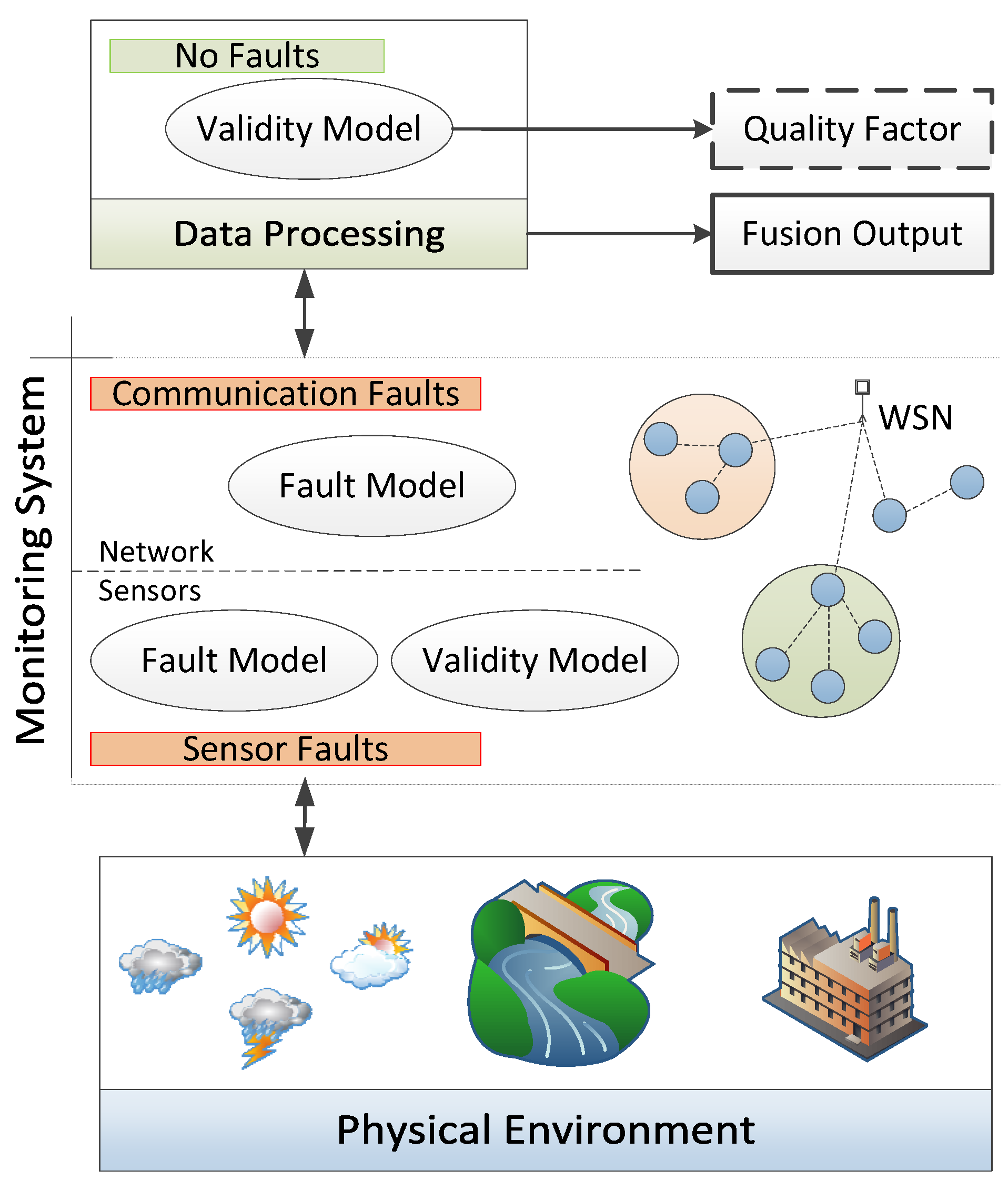

2.2. Monitoring and Data Processing

2.3. Dependability Strategies

3. Sensor Data Quality

3.1. Expressing Data Quality

- Confidence is an attribute that can be derived from the continuous observation of sensor data, without the need for a quality requirement to be available. It is generally used when datasets are available and can be characterized in a probabilistic way, along with model fitting or threshold definition techniques, to yield continuous or multi-level confidence measures [11].

- Reliability is a typical dependability attribute [12], expressing the ability of a system to provide the correct service (or the correct data, for that matter) over a period of time. The term data reliability in sensor networks is often considered when transmissions and/or communications may be subject to faults like omissions or a total crash [13,14].

- Trustworthiness is mostly employed in connection with security concerns, namely when it is assumed that data can be altered in a malicious way. In the context of sensor networks, it characterizes the degree to which it is possible to trust that sensor data have not been tampered with and thus have the required quality [15].

- Authenticity is also used, in particular in a security context, but to express the degree to which it is possible to trust the claimed data origin [16]. This is particularly important when the overall quality of the system or application depends on the correct association of some data to their producer.

- Rule-based methods that use expert knowledge about the variables that sensors are measuring to determine thresholds or heuristics with which the sensors must comply.

- Estimation methods that define a “normal” behavior by considering spatial and temporal correlations from sensor data. A sensor reading is compared against its forecasted value to assess its validity.

- Learning-based methods that define models for correct and faulty sensor measurements, using collected data for building the models.
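As a minimal sketch of the first two categories above, the following Python fragment contrasts a rule-based check with an estimation-based check. The function names, the bounds, the tolerance and the sample readings are illustrative assumptions, not values taken from the surveyed works; the forecast is reduced to the mean of recent readings, standing in for whatever spatial or temporal model an actual method would use. Learning-based methods are not shown.

```python
from statistics import mean


def rule_based_check(value, low, high):
    """Rule-based: the reading must fall inside expert-defined bounds."""
    return low <= value <= high


def estimation_check(history, value, tolerance):
    """Estimation-based: compare the reading against a forecasted value.

    The forecast here is simply the mean of the recent history (an
    assumption made for brevity, not a method from the survey).
    """
    forecast = mean(history)
    return abs(value - forecast) <= tolerance


# Hypothetical water-temperature readings in degrees Celsius.
recent = [14.8, 15.0, 15.1, 14.9]
for reading in (15.2, 22.0, -60.0):
    print(
        reading,
        rule_based_check(reading, low=-5.0, high=40.0),
        estimation_check(recent, reading, tolerance=1.0),
    )
```

Note that the reading of 22.0 passes the rule-based check but fails the estimation-based one, which illustrates why the two kinds of methods are complementary.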

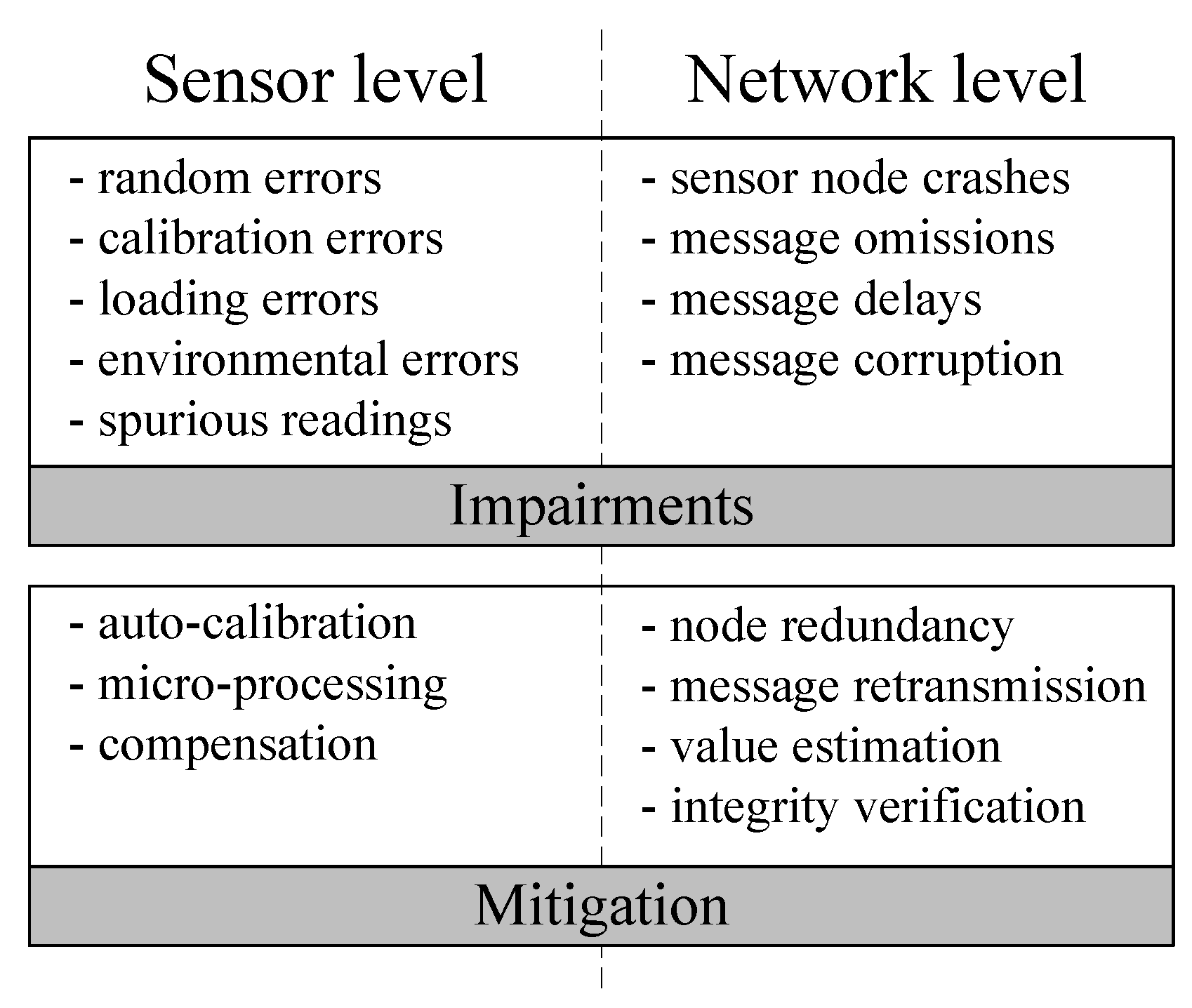

3.2. Sensor Level Faults

3.2.1. Sensor Characteristics

- Resistance: Resistive sensors, also termed potentiometers, are based on an electromechanical device that converts a mechanical variation, such as a displacement, into an electrical signal that can be monitored after conditioning;

- Induction: Inductive sensors are primarily based on the principles of magnetic circuits and may be categorized as self-generating or passive;

- Capacitance: Capacitive sensors depend on variations in capacitance in response to physical changes. A capacitive level indicator, for example, uses the changes in the relative permittivity between the plates;

- Piezoelectricity: Piezoelectricity is the term used to describe the ability of certain materials to generate an electric charge that is proportional to a directly applied mechanical stress;

- Laser: Laser sensors compare changes in optical path length and in the wavelength of light, which can be determined with very little uncertainty. Laser sensors achieve a high precision in the length and displacement measurements, where the precision achieved by mechanical means is not enough;

- Ultrasonic: Ultrasonic sensors typically rely on the time-of-flight method. A pulse of ultrasound is transmitted through a medium and is reflected when it reaches another medium; the time from emission to detection of the reflected pulse is then measured;

- Optical: Optical sensors encompass a variety of devices that use light as the means to convert motion into electrical signals. They consist mostly of two components: a main diffraction grating, representing the measurement standard (scale), and a detection system. What is detected is the position of one relative to the other;

- Magnetic: A magnetic sensor is either activated by a magnetic field or relies on a field that determines the properties of the sensor;

- Random errors are described by an absence of repeatability in the readings of the sensor, for instance due to measurement noise. These errors tend to happen on a permanent basis, but have a stochastic nature;

- Systematic errors are described through consistency and repeatability in the temporal domain. There are three types of systematic errors at the sensor level:

  - Calibration errors result from errors in the calibration procedure, often in relation to linearization procedures;

  - Loading errors emerge when the intrusive nature of the sensor modifies the measurand. Along with calibration errors, loading errors are caused by internal processes;

  - Environmental errors emerge when the sensor is affected by the surrounding environment and these influences are not accounted for. In contrast with the previous two types of errors, environmental errors are due to external factors;

- Spurious readings are non-systematic reading errors. They occur when some spurious physical occurrence leads to a measurement value that does not reflect the intended reality. For instance, a light intensity measurement in a room can provide the wrong value if obtained precisely when a picture of the room is taken and the camera flash is triggered.
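The three kinds of errors above can be illustrated with the ultrasonic time-of-flight principle described earlier in this list. The sketch below is only an illustration under stated assumptions: the linear approximation for the speed of sound in dry air, the distances, the temperatures and the noise level are all chosen for the example and are not taken from the surveyed works.

```python
import random


def speed_of_sound(temp_c):
    """Approximate speed of sound in dry air (m/s) at temp_c degrees Celsius."""
    return 331.3 + 0.606 * temp_c


def tof_distance(round_trip_s, temp_c=20.0):
    """Distance to the reflecting surface from the round-trip time of flight."""
    return speed_of_sound(temp_c) * round_trip_s / 2.0


true_distance = 2.0  # metres (hypothetical target)
actual_temp = 35.0   # real air temperature in degrees Celsius
round_trip = 2.0 * true_distance / speed_of_sound(actual_temp)

# Environmental (systematic) error: the sensor assumes 20 degrees Celsius
# while the air is at 35, so every reading is biased in the same direction.
biased = tof_distance(round_trip, temp_c=20.0)

# Random error: zero-mean noise affects every reading.
rng = random.Random(0)
noisy = [biased + rng.gauss(0.0, 0.005) for _ in range(5)]

# Spurious reading: one isolated value unrelated to the measurand.
noisy[2] = 0.3

print(round(biased, 3), [round(x, 3) for x in noisy])
```

The systematic component could be removed by compensating for temperature, whereas the random component can only be reduced, for instance by averaging, and the spurious value has to be detected and discarded.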

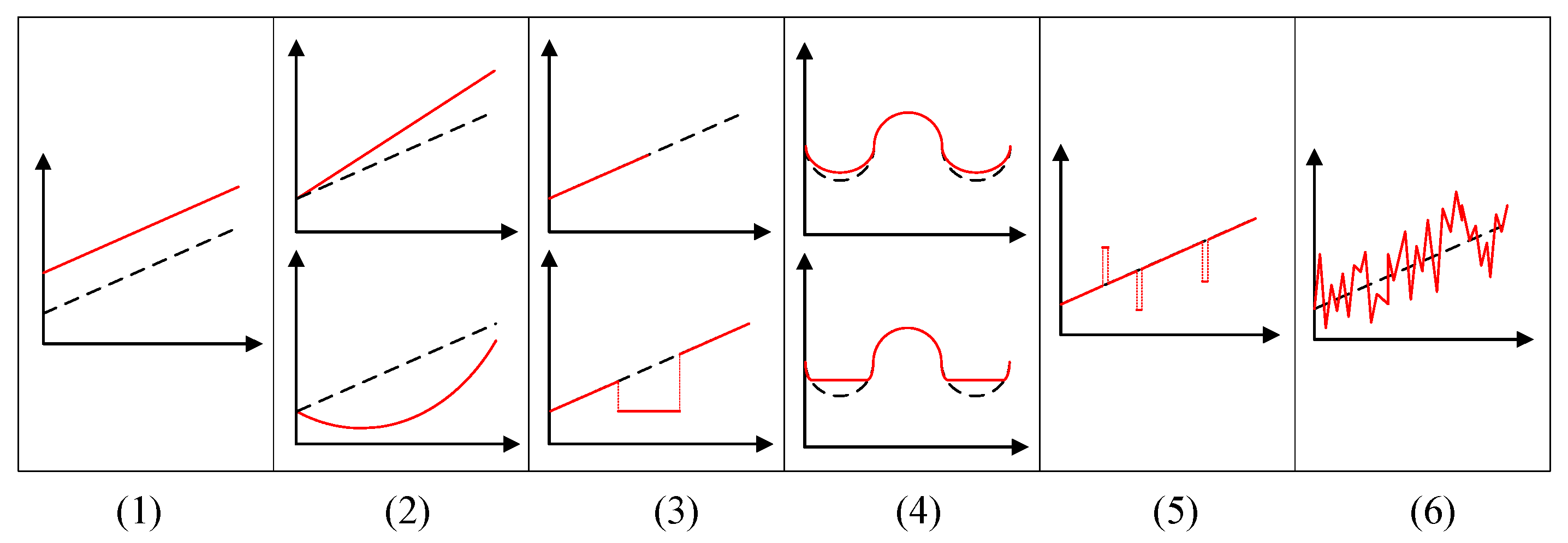

3.2.2. Sensor Failure Modes

- Constant or offset failure mode: The observations continuously deviate from the expected value by a constant offset.

- Continuous varying or drifting failure mode: The deviation between the observations and the expected value is continuously changing according to some continuous time-dependent function (linear or non-linear).

- Crash or jammed failure mode: The sensor stops providing any readings on its interface or gets jammed and stuck in some incorrect value.

- Trimming failure mode: The observations are correct for values within some interval, but are modified for values outside that interval. Beyond the interval, the observation can be trimmed at the interval boundary or may vary proportionally with the expected value.

- Outliers failure mode: The observations occasionally deviate from the expected value, at random points in the time domain.

- Noise failure mode: The observations deviate from the expected value stochastically in the value domain and permanently in the temporal domain.
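To make the failure modes above concrete, the following sketch injects each of them into a clean signal, which is one possible way of producing test data for detection methods. The function `inject`, its mode names and its parameters are hypothetical and introduced only for this illustration; trimming is shown only in its "clipped at the boundary" variant, and a crash is represented by missing values (`None`).

```python
import random


def inject(signal, mode, rng=None, **p):
    """Return a copy of `signal` distorted according to one failure mode."""
    rng = rng or random.Random(0)
    out = []
    for t, x in enumerate(signal):
        if mode == "offset":
            y = x + p["offset"]                    # constant deviation
        elif mode == "drift":
            y = x + p["rate"] * t                  # linear drift over time
        elif mode == "crash":
            y = None                               # no reading is provided
        elif mode == "stuck":
            y = p["value"]                         # jammed at a fixed value
        elif mode == "trimming":
            y = min(max(x, p["low"]), p["high"])   # clipped at the boundaries
        elif mode == "outliers":
            y = x + p["spike"] if rng.random() < p["prob"] else x
        elif mode == "noise":
            y = x + rng.gauss(0.0, p["sigma"])     # permanent stochastic error
        else:
            raise ValueError(f"unknown failure mode: {mode}")
        out.append(y)
    return out


clean = [10.0, 12.0, 14.0, 16.0, 18.0]
print(inject(clean, "offset", offset=1.5))
print(inject(clean, "drift", rate=0.5))
print(inject(clean, "trimming", low=11.0, high=15.0))
print(inject(clean, "outliers", spike=25.0, prob=0.2))
```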

3.2.3. Mitigation Techniques

3.3. Communication Faults in WSNs

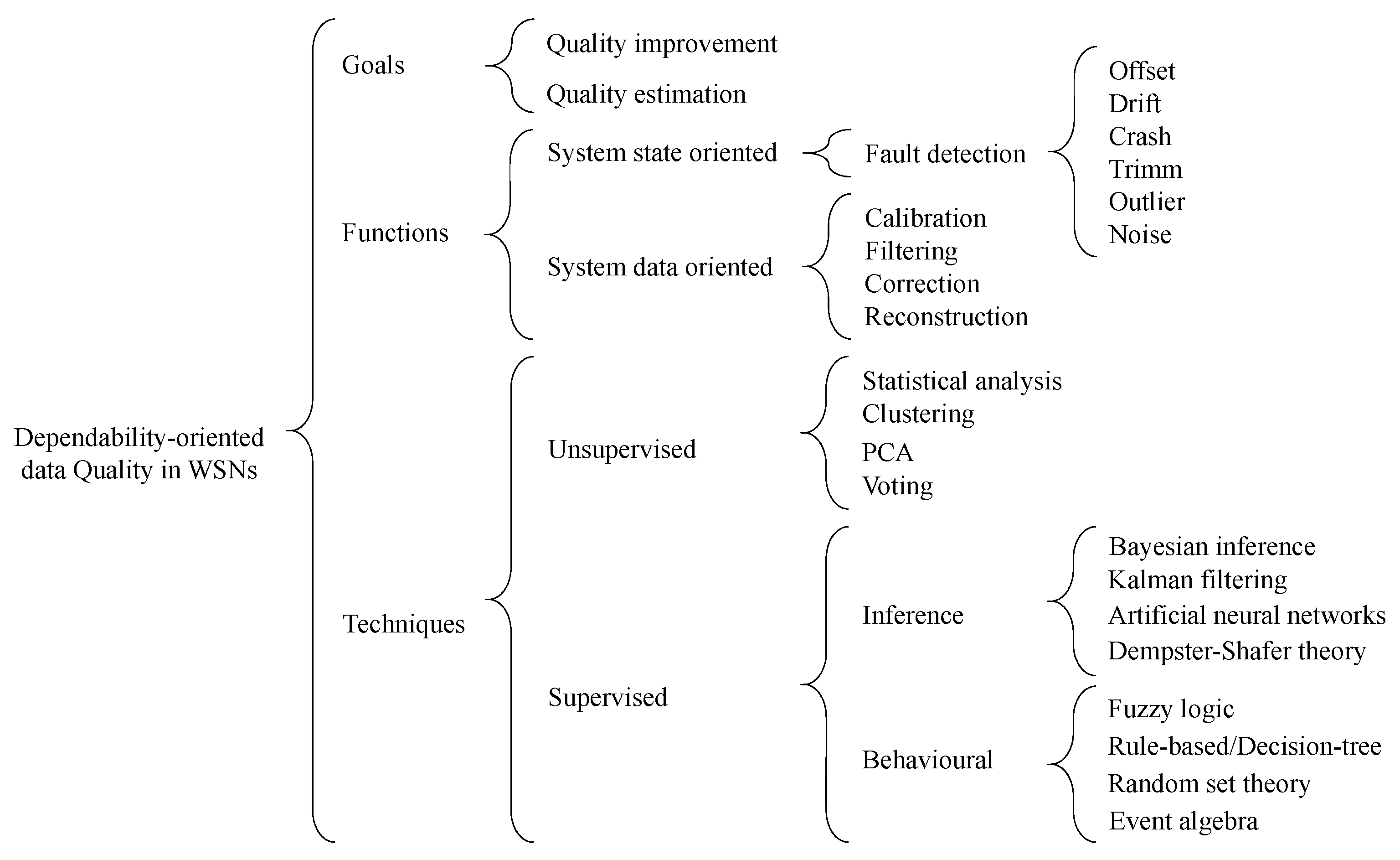

4. Solutions for Dependable Data Quality

4.1. Exploiting Redundancy

4.1.1. Spatial Redundancy

- across sensors when several sensors observe the same variable; for instance, when the temperature of a particular object is monitored by a set of temperature sensors;

- across attributes when sensors observe several quantities related to one event; for instance, when measurements of water temperature and water conductivity are combined to define the water salinity;

- across domains when sensors observe one specific attribute in several places. An example is when sensors in different places measure the temperature and the measured values are somehow correlated.

- across time when new readings are fused with past data. For example, historical information from a former calibration can be incorporated to make adjustments on current measurements. Note that this is a particular case that applies to systems with single sensors, which we specifically discuss later as a form of temporal redundancy.

- competitive when every sensor delivers an independent reading of the same variable. The purpose of this type of fusion is to diminish the effects of uncertain and incorrect monitoring. Competitive fusion corresponds to sensor fusion across sensors, in the terminology of [40];

- cooperative when the data measured by several independent sensors are used to infer information that would not be available from any single sensor. This corresponds to sensor fusion across attributes;

- complementary when sensors are not directly dependent, but might be merged with the specific goal of providing a more comprehensive view of what the network is trying to observe. Thus, complementary fusion can assist in solving the incompleteness problem. This category does not entirely match the categories by [40]; it is closer to sensor fusion across attributes, but the idea is not to extract information, but to complement it.
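Competitive fusion, the first category in the list above, can be sketched with two simple estimators. The median and the inverse-variance weighted mean used here are common choices assumed for illustration; they are not presented as the methods of the cited references, and the readings and variances are made up.

```python
from statistics import median


def fuse_median(readings):
    """Competitive fusion that tolerates a minority of faulty sensors."""
    return median(readings)


def fuse_weighted(readings, variances):
    """Inverse-variance weighted mean: noisier sensors get less weight."""
    weights = [1.0 / v for v in variances]
    return sum(w * r for w, r in zip(weights, readings)) / sum(weights)


# Four hypothetical temperature sensors observing the same object;
# the last one is faulty.
temps = [20.1, 19.9, 20.0, 35.0]
print(fuse_median(temps))

# Two sensors with different (assumed known) noise variances.
print(fuse_weighted([20.0, 22.0], [1.0, 4.0]))
```

The median ignores the faulty reading of 35.0 without identifying which sensor produced it, while the weighted mean presumes that all sensors are correct and differ only in precision.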

4.1.2. Value Redundancy

- Signal analysis or analytical redundancy: This is used to monitor parameters such as frequency response, signal noise and amplitude change velocity, among others [46]. It is a robust approach for detecting anomalous behavior in a controlled system. If a variable shows strong variability, the sensor is classified as faulty (or the system under monitoring has changed). This necessarily requires bounds to be established a priori, against which the parameters can be compared to perform the intended classification.

- Model-based redundancy: With the help of simulation/mathematical models of the monitored system, it is possible to obtain values to validate the measurements. The author of Reference [47] was a strong proponent of this type of redundancy, in which the system model computes the expected value of the measured variable, which is then compared with the sensor measurement.
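Both forms of value redundancy can be sketched in a few lines. In the fragment below, the rate-of-change bound stands in for the a priori bounds of signal analysis, and a constant-inflow tank model plays the role of the system model; the model, the function names and all numeric values are hypothetical and chosen only for illustration.

```python
def rate_of_change_ok(samples, dt, max_rate):
    """Signal analysis: every change between samples must respect a preset bound."""
    return all(abs(b - a) / dt <= max_rate for a, b in zip(samples, samples[1:]))


def tank_level_model(level0, inflow, area, t):
    """Hypothetical system model: level of a tank filled at a constant inflow."""
    return level0 + inflow * t / area


def residual_ok(measured, predicted, max_residual):
    """Model-based check: compare the measurement with the model output."""
    return abs(measured - predicted) <= max_residual


levels = [1.00, 1.02, 1.04, 1.50]  # metres, sampled once per second
print(rate_of_change_ok(levels[:3], dt=1.0, max_rate=0.1))
print(rate_of_change_ok(levels, dt=1.0, max_rate=0.1))

predicted = tank_level_model(level0=1.0, inflow=0.02, area=1.0, t=3.0)
print(residual_ok(levels[-1], predicted, max_residual=0.05))
```

As the text notes, a failed check does not by itself distinguish a faulty sensor from a genuine change in the monitored system; that decision needs additional information.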

4.1.3. Temporal Redundancy

4.2. A Taxonomy for Dependability-Oriented Data Quality in WSNs

4.2.1. Supervised Techniques

4.2.2. Unsupervised Techniques

5. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

References

- Brade, T.; Kaiser, J.; Zug, S. Expressing validity estimates in smart sensor applications. In Proceedings of the 2013 26th International Conference on Architecture of Computing Systems (ARCS), Prague, Czech Republic, 19–22 February 2013; pp. 1–8.

- Dietrich, A.; Zug, S.; Kaiser, J. Detecting external measurement disturbances based on statistical analysis for smart sensors. In Proceedings of the 2010 IEEE International Symposium on Industrial Electronics (ISIE), Bari, Italy, 4–7 July 2010; pp. 2067–2072.

- Rodriguez, M.; Ortiz Uriarte, L.; Jia, Y.; Yoshii, K.; Ross, R.; Beckman, P. Wireless sensor network for data-center environmental monitoring. In Proceedings of the 2011 Fifth International Conference on Sensing Technology (ICST), Palmerston North, New Zealand, 28 November–1 December 2011; pp. 533–537.

- Scherer, T.; Lombriser, C.; Schott, W.; Truong, H.; Weiss, B. Wireless Sensor Network for Continuous Temperature Monitoring in Air-Cooled Data Centers: Applications and Measurement Results. In Ad-hoc, Mobile, and Wireless Networks; Li, X.Y., Papavassiliou, S., Ruehrup, S., Eds.; Lecture Notes in Computer Science; Springer: Berlin/Heidelberg, Germany, 2012; Volume 7363, pp. 235–248.

- Cristian, F. Understanding Fault-Tolerant Distributed Systems. Commun. ACM 1991, 34, 56–78.

- Yick, J.; Mukherjee, B.; Ghosal, D. Wireless sensor network survey. Comput. Netw. 2008, 52, 2292–2330.

- Arampatzis, T.; Lygeros, J.; Manesis, S. A Survey of Applications of Wireless Sensors and Wireless Sensor Networks. In Proceedings of the 2005 IEEE International Symposium on Intelligent Control 13th Mediterrean Conference on Control and Automation, Limassol, Cyprus, 27–29 June 2005; pp. 719–724.

- Veríssimo, P.; Rodrigues, L. Distributed Systems for System Architects; Springer: New York, NY, USA, 2001; p. 623.

- Ibargiengoytia, P.; Sucar, L.; Vadera, S. Real time intelligent sensor validation. IEEE Trans. Power Syst. 2001, 16, 770–775.

- Rodger, J. Toward reducing failure risk in an integrated vehicle health maintenance system: A fuzzy multi-sensor data fusion Kalman filter approach for IVHMS. Expert Syst. Appl. 2012, 39, 9821–9836.

- Frolik, J.; Abdelrahman, M.; Kandasamy, P. A confidence-based approach to the self-validation, fusion and reconstruction of quasi-redundant sensor data. IEEE Trans. Instrum. Meas. 2001, 50, 1761–1769.

- Avizienis, A.; Laprie, J.C.; Randell, B.; Landwehr, C. Basic Concepts and Taxonomy of Dependable and Secure Computing. IEEE Trans. Dependable Secur. Comput. 2004, 1, 11–33.

- Zhang, D.; Zhao, C.; Liang, Y.; Liu, Z. A new medium access control protocol based on perceived data reliability and spatial correlation in wireless sensor network. Comput. Electr. Eng. 2012, 38, 694–702.

- Luo, H.; Tao, H.; Ma, H.; Das, S. Data Fusion with Desired Reliability in Wireless Sensor Networks. IEEE Trans. Parallel Distrib. Syst. 2011, 22, 501–513.

- Tang, L.; Yu, X.; Kim, S.; Gu, Q.; Han, J.; Leung, A.; La Porta, T. Trustworthiness analysis of sensor data in cyber-physical systems. J. Comput. Syst. Sci. 2013, 79, 383–401.

- Ayday, E.; Delgosha, F.; Fekri, F. Data Authenticity and Availability in Multihop Wireless Sensor Networks. ACM Trans. Sens. Netw. 2012, 8.

- Prathiba, B.; Sankar, K.J.; Sumalatha, V. Enhancing the data quality in wireless sensor networks—A review. In Proceedings of the IEEE International Conference on Automatic Control and Dynamic Optimization Techniques (ICACDOT), Pune, India, 9–10 September 2016; pp. 448–454.

- Sharma, A.; Golubchik, L.; Govindan, R. Sensor Faults: Detection Methods and Prevalence in Real-world Datasets. ACM Trans. Sens. Netw. 2010, 6.

- Nguyen, T.T.; Spehr, J.; Uhlemann, M.; Zug, S.; Kruse, R. Learning of lane information reliability for intelligent vehicles. In Proceedings of the 2016 IEEE International Conference on Multisensor Fusion and Integration for Intelligent Systems (MFI), Baden-Baden, Germany, 19–21 September 2016; pp. 142–147.

- Golle, P.; Greene, D.; Staddon, J. Detecting and Correcting Malicious Data in VANETs. In Proceedings of the 1st ACM International Workshop on Vehicular Ad Hoc Networks, Philadelphia, PA, USA, 1 October 2004; ACM: New York, NY, USA, 2004; pp. 29–37.

- Nimier, V. Supervised multisensor tracking algorithm. In Proceedings of the 9th European Signal Processing Conference, Island of Rhodes, Greece, 8–11 September 1998; pp. 1–4.

- Patra, J.; Chakraborty, G.; Meher, P. Neural-network-based robust linearization and compensation technique for sensors under nonlinear environmental influences. IEEE Trans. Circuits Syst. I Regul. Pap. 2008, 55, 1316–1327.

- Webster, J.; Eren, H. Measurement, Instrumentation, and Sensors Handbook, 2nd ed.; Spatial, Mechanical, Thermal, and Radiation Measurement; CRC Press: Boca Raton, FL, USA, 2014; p. 1640.

- De Silva, C. Control Sensors and Actuators; Prentice Hall: Upper Saddle River, NJ, USA, 1989.

- Tumanski, S. Sensors and Actuators—Control System Instrumentation; de Silva, C.W., Ed.; CRC Press: Boca Raton, FL, USA, 2007; Volume 10.

- Mitchell, H. Multi-Sensor Data Fusion: An Introduction; Springer: Berlin/Heidelberg, Germany, 2007.

- Whitehouse, K.; Culler, D. Calibration As Parameter Estimation in Sensor Networks. In Proceedings of the 1st ACM International Workshop on Wireless Sensor Networks and Applications, Atlanta, GA, USA, 28 September 2002; ACM: New York, NY, USA, 2002; pp. 59–67.

- Patra, J.; Meher, P.; Chakraborty, G. Development of Laguerre Neural-Network-Based Intelligent Sensors for Wireless Sensor Networks. IEEE Trans. Instrum. Meas. 2011, 60, 725–734.

- Rivera, J.; Carrillo, M.; Chacón, M.; Herrera, G.; Bojorquez, G. Self-Calibration and Optimal Response in Intelligent Sensors Design Based on Artificial Neural Networks. Sensors 2007, 7, 1509.

- Barwicz, A.; Massicotte, D.; Savaria, Y.; Santerre, M.A.; Morawski, R. An integrated structure for Kalman-filter-based measurand reconstruction. IEEE Trans. Instrum. Meas. 1994, 43, 403–410.

- Gubian, M.; Marconato, A.; Boni, A.; Petri, D. A Study on Uncertainty-Complexity Tradeoffs for Dynamic Nonlinear Sensor Compensation. IEEE Trans. Instrum. Meas. 2009, 58, 26–32.

- Ganesan, D.; Estrin, D.; Heidemann, J. Dimensions: Why do we need a new data handling architecture for sensor networks. ACM SIGCOMM Comput. Commun. Rev. 2003, 33, 143–148.

- Zhao, J.; Govindan, R.; Estrin, D. Computing aggregates for monitoring wireless sensor networks. In Proceedings of the First IEEE International Workshop on Sensor Network Protocols and Applications, Anchorage, AK, USA, 11 May 2003; pp. 139–148.

- Madden, S.; Franklin, M.; Hellerstein, J.; Hong, W. TAG: A Tiny Aggregation Service for Ad-hoc Sensor Networks. SIGOPS Oper. Syst. Rev. 2002, 36, 131–146.

- Krishnamachari, L.; Estrin, D.; Wicker, S. The impact of data aggregation in wireless sensor networks. In Proceedings of the 22nd International Conference on Distributed Computing Systems Workshops, Vienna, Austria, 2–5 July 2002.

- Kopetz, H. Real-Time Systems: Design Principles for Distributed Embedded Applications; Real-Time Systems Series; Springer: New York, NY, USA, 2011.

- Marzullo, K. Tolerating Failures of Continuous-valued Sensors. ACM Trans. Comput. Syst. 1990, 8, 284–304.

- Koushanfar, F.; Potkonjak, M.; Sangiovanni-Vincentelli, A. On-Line Fault Detection of Sensor Measurements. In Proceedings of the 2003 IEEE Sensors, Toronto, ON, Canada, 22–24 October 2003; Volume 2, pp. 974–979.

- Zhuang, P.; Wang, D.; Shang, Y. Distributed Faulty Sensor Detection. In Proceedings of the 2009 IEEE Global Telecommunications Conference, Honolulu, HI, USA, 30 November–4 December 2009; pp. 1–6.

- Boudjemaa, R.; Forbes, A. Parameter Estimation Methods for Data Fusion; NPL Report CMSC; National Physical Laboratory, Great Britain, Centre for Mathematics and Scientific Computing: Teddington, UK, 2004.

- Grime, S.; Durrant-Whyte, H. Data fusion in decentralized sensor networks. Control Eng. Pract. 1994, 2, 849–863.

- Baptista, A. Environmental Observation and Forecasting Systems. In Encyclopedia of Physical Science and Technology (Third Edition); Meyers, R.A., Ed.; Academic Press: New York, NY, USA, 2003; pp. 565–581.

- Gomes, J.; Jesus, G.; Rodrigues, M.; Rogeiro, J.; Azevedo, A.; Oliveira, A. Managing a Coastal Sensors Network in a Nowcast-Forecast Information System. In Proceedings of the 2013 Eighth International Conference on Broadband and Wireless Computing, Communication and Applications (BWCCA), Compiegne, France, 28–30 October 2013; pp. 518–523.

- Brooks, R.; Iyengar, S. Multi-Sensor Fusion: Fundamentals and Applications with Software; Prentice-Hall, Inc.: Upper Saddle River, NJ, USA, 1998.

- Nakamura, E.; Loureiro, A.; Frery, A. Information Fusion for Wireless Sensor Networks: Methods, Models, and Classifications. ACM Comput. Surv. 2007, 39.

- Worden, K.; Manson, G.; Fieller, N. Damage Detection using Outlier Analysis. J. Sound Vib. 2000, 229, 647–667.

- Isermann, R. Model-based fault-detection and diagnosis—Status and applications. Ann. Rev. Control 2005, 29, 71–85.

- Klein, L. Sensor and Data Fusion: A Tool for Information Assessment and Decision Making; Press Monographs, Society of Photo Optical: Bellingham, WA, USA, 2004.

- Mendonca, R.; Santana, P.; Marques, F.; Lourenco, A.; Silva, J.; Barata, J. Kelpie: A ROS-Based Multi-Robot Simulator for Water Surface and Aerial Vehicles. In Proceedings of the 2013 IEEE International Conference on Systems, Man, and Cybernetics (SMC), Manchester, UK, 13–16 October 2013; pp. 3645–3650.

- Choi, S.; Yuh, J.; Takashige, G. Development of the Omni Directional Intelligent Navigator. IEEE Rob. Autom. Mag. 1995, 2, 44–53.

- Crespi, A.; Ijspeert, A. Online optimization of swimming and crawling in an amphibious snake robot. IEEE Trans. Rob. 2008, 24, 75–87.

- Zhang, Y.; Meratnia, N.; Havinga, P. Outlier Detection Techniques for Wireless Sensor Networks: A Survey. IEEE Commun. Surv. Tutor. 2010, 12, 159–170.

- Durrant-Whyte, H. Sensor Models and Multisensor Integration. Int. J. Rob. Res. 1988, 7, 97–113.

- Zoumboulakis, M.; Roussos, G. Escalation: Complex Event Detection in Wireless Sensor Networks. In Proceedings of the 2nd European Conference on Smart Sensing and Context (EuroSSC’07), Kendal, UK, 23–25 October 2007; Springer: Berlin/Heidelberg, Germany, 2007; pp. 270–285.

- Chandola, V.; Banerjee, A.; Kumar, V. Anomaly Detection: A Survey. ACM Comput. Surv. 2009, 41.

- Kreibich, O.; Neuzil, J.; Smid, R. Quality-based multiple-sensor fusion in an industrial wireless sensor network for MCM. IEEE Trans. Ind. Electron. 2014, 61, 4903–4911.

- Klein, L. Sensor and Data Fusion Concepts and Applications, 2nd ed.; Society of Photo-Optical Instrumentation Engineers (SPIE): Bellingham, WA, USA, 1999.

- Khaleghi, B.; Khamis, A.; Karray, F.; Razavi, S. Multisensor data fusion: A review of the state-of-the-art. Inf. Fusion 2013, 14, 28–44.

- Hall, D.; McMullen, S. Mathematical Techniques in Multisensor Data Fusion (Artech House Information Warfare Library); Artech House, Inc.: Norwood, MA, USA, 2004.

- Hartl, G.; Li, B. infer: A Bayesian Inference Approach towards Energy Efficient Data Collection in Dense Sensor Networks. In Proceedings of the 25th IEEE International Conference on Distributed Computing Systems (ICDCS 2005), Columbus, OH, USA, 6–10 June 2005; pp. 371–380.

- Janakiram, D.; Reddy, V.; Kumar, A. Outlier Detection in Wireless Sensor Networks using Bayesian Belief Networks. In Proceedings of the First International Conference on Communication System Software and Middleware (Comsware 2006), New Delhi, India, 8–12 January 2006; pp. 1–6.

- Zhao, W.; Fang, T.; Jiang, Y. Data Fusion Using Improved Dempster-Shafer Evidence Theory for Vehicle Detection. In Proceedings of the Fourth International Conference on Fuzzy Systems and Knowledge Discovery (FSKD 2007), Haikou, China, 24–27 August 2007; Volume 1, pp. 487–491.

- Konorski, J.; Orlikowski, R. Data-Centric Dempster-Shafer Theory-Based Selfishness Thwarting via Trust Evaluation in MANETs and WSNs. In Proceedings of the 2009 3rd International Conference on New Technologies, Mobility and Security (NTMS), Cairo, Egypt, 20–23 December 2009; pp. 1–5.

- Sentz, K.; Ferson, S. Combination of Evidence in Dempster-Shafer Theory; Sandia National Laboratories: Albuquerque, NM, USA, 2002.

- Ahmed, M.; Huang, X.; Sharma, D. A Novel Misbehavior Evaluation with Dempster-shafer Theory in Wireless Sensor Networks. In Proceedings of the Thirteenth ACM International Symposium on Mobile Ad Hoc Networking and Computing (MobiHoc ’12), Hilton Head, SC, USA, 11–14 June 2012; ACM: New York, NY, USA, 2012; pp. 259–260.

- Zhu, R. Efficient Fault-Tolerant Event Query Algorithm in Distributed Wireless Sensor Networks. IJDSN 2010.

- Alferes, J.; Lynggaard-Jensen, A.; Munk-Nielsen, T.; Tik, S.; Vezzaro, L.; Sharma, A.; Mikkelsen, P.; Vanrolleghem, P. Validating data quality during wet weather monitoring of wastewater treatment plant influents. Proc. Water Environ. Fed. 2013, 2013, 4507–4520.

- Moustapha, A.; Selmic, R. Wireless Sensor Network Modeling Using Modified Recurrent Neural Networks: Application to Fault Detection. IEEE Trans. Instrum. Meas. 2008, 57, 981–988.

- Barron, J.; Moustapha, A.; Selmic, R. Real-Time Implementation of Fault Detection in Wireless Sensor Networks Using Neural Networks. In Proceedings of the Fifth International Conference on Information Technology: New Generations (ITNG 2008), Las Vegas, NV, USA, 7–9 April 2008; pp. 378–383.

- Obst, O. Poster Abstract: Distributed Fault Detection Using a Recurrent Neural Network. In Proceedings of the 2009 International Conference on Information Processing in Sensor Networks (IPSN ’09), San Francisco, CA, USA, 13–16 April 2009; IEEE Computer Society: Washington, DC, USA, 2009; pp. 373–374.

- Bahrepour, M.; Meratnia, N.; Poel, M.; Taghikhaki, Z.; Havinga, P. Distributed Event Detection in Wireless Sensor Networks for Disaster Management. In Proceedings of the 2010 2nd International Conference on Intelligent Networking and Collaborative Systems (INCOS), Thessaloniki, Greece, 24–26 November 2010; pp. 507–512.

- Archer, C.; Baptista, A.; Leen, T. Fault Detection for Salinity Sensors in the Columbia Estuary; Technical Report; Oregon Graduate Institute School of Science & Engineering: Hillsboro, OR, USA, 2002.

- Klein, L.; Mihaylova, L.; El Faouzi, N.E. Sensor and Data Fusion: Taxonomy, Challenges and Applications. In Handbook on Soft Computing for Video Surveillance, 1st ed.; Pal, S.K., Petrosino, A., Maddalena, L., Eds.; Chapman & Hall/CRC: Boca Raton, FL, USA, 2012; Chapter 6.

- Shell, J.; Coupland, S.; Goodyer, E. Fuzzy data fusion for fault detection in Wireless Sensor Networks. In Proceedings of the 2010 UK Workshop on Computational Intelligence (UKCI), Colchester, UK, 8–10 September 2010; pp. 1–6.

- Khan, S.; Daachi, B.; Djouani, K. Application of Fuzzy Inference Systems to Detection of Faults in Wireless Sensor Networks. Neurocomputing 2012, 94, 111–120.

- Manjunatha, P.; Verma, A.; Srividya, A. Multi-Sensor Data Fusion in Cluster based Wireless Sensor Networks Using Fuzzy Logic Method. In Proceedings of the IEEE Region 10 and the Third international Conference on Industrial and Information Systems (ICIIS 2008), Kharagpur, India, 8–10 December 2008; pp. 1–6.

- Collotta, M.; Pau, G.; Salerno, V.; Scata, G. A fuzzy based algorithm to manage power consumption in industrial Wireless Sensor Networks. In Proceedings of the 2011 9th IEEE International Conference on Industrial Informatics (INDIN), Lisbon, Portugal, 26–29 July 2011; pp. 151–156.

- Su, I.J.; Tsai, C.C.; Sung, W.T. Area Temperature System Monitoring and Computing Based on Adaptive Fuzzy Logic in Wireless Sensor Networks. Appl. Soft Comput. 2012, 12, 1532–1541.

- Castillo-Effer, M.; Quintela, D.; Moreno, W.; Jordan, R.; Westhoff, W. Wireless sensor networks for flash-flood alerting. In Proceedings of the Fifth IEEE International Caracas Conference on Devices, Circuits and Systems, Punta Cana, Dominican Republic, 3–5 November 2004; Volume 1, pp. 142–146.

- Bettencourt, L.; Hagberg, A.; Larkey, L. Separating the Wheat from the Chaff: Practical Anomaly Detection Schemes in Ecological Applications of Distributed Sensor Networks. In Distributed Computing in Sensor Systems; Aspnes, J., Scheideler, C., Arora, A., Madden, S., Eds.; Lecture Notes in Computer Science; Springer: Berlin/Heidelberg, Germany, 2007; Volume 4549, pp. 223–239.

- Branch, J.; Giannella, C.; Szymanski, B.; Wolff, R.; Kargupta, H. In-network outlier detection in wireless sensor networks. Knowl. Inf. Syst. 2013, 34, 23–54.

- Zubair, M.; Hartmann, K. Target classification based on sensor fusion in multi-channel seismic network. In Proceedings of the 2011 IEEE International Symposium on Signal Processing and Information Technology (ISSPIT), Bilbao, Spain, 14–17 December 2011; pp. 438–443.

- Chatzigiannakis, V.; Papavassiliou, S.; Grammatikou, M.; Maglaris, B. Hierarchical Anomaly Detection in Distributed Large-Scale Sensor Networks. In Proceedings of the 11th IEEE Symposium on Computers and Communications (ISCC ’06), Sardinia, Italy, 26–29 June 2006; pp. 761–767.

- Gao, J.; Xu, Y.; Li, X. Online distributed fault detection of sensor measurements. Tsinghua Sci. Technol. 2007, 12, 192–196.

- Abid, A.; Kachouri, A.; Kaaniche, H.; Abid, M. Quality of service in wireless sensor networks through a failure-detector with voting mechanism. In Proceedings of the 2013 International Conference on Computer Applications Technology (ICCAT), Sousse, Tunisia, 20–22 January 2013; pp. 1–5.

- Li, F.; Wu, J. A Probabilistic Voting-based Filtering Scheme in Wireless Sensor Networks. In Proceedings of the 2006 International Conference on Wireless Communications and Mobile Computing (IWCMC’06), Vancouver, BC, Canada, 3–6 July 2006; ACM: New York, NY, USA, 2006; pp. 27–32.

- Zappi, P.; Stiefmeier, T.; Farella, E.; Roggen, D.; Benini, L.; Troster, G. Activity recognition from on-body sensors by classifier fusion: Sensor scalability and robustness. In Proceedings of the 3rd International Conference on Intelligent Sensors, Sensor Networks and Information (ISSNIP 2007), Melbourne, Australia, 3–6 December 2007; pp. 281–286.

| Displacement Effects | Advantages | Disadvantages |
|---|---|---|
| Resistance | Versatile; inexpensive; easy-to-use; precise. | Limited bandwidth; limited durability. |
| Induction | Robust; compact; not easily affected by external factors. | A significant part of the measurement is external, which must be well cleaned and calibrated. |
| Capacitance | Low-power consumption; non-contacting; resists shocks and intense vibrations; tolerant to high temperatures; high sensitivity over a wide temperature range. | Short sensing distance; humidity in coastal/water climates can affect sensing output; not at all selective for its target; non-linearity problems. |
| Piezoelectricity | Ideal for use in low-noise measurement systems; high sensitivity; low cost; broad frequency range; exceptional linearity; excellent repeatability; small size. | Cannot be used for static measurements; high temperatures cause a drop in internal resistance and sensitivity (characteristics vary with temperature). |
| Laser | Ideal for near real-time applications; low uncertainty and high precision in the measurements. | Weather and visual paths affect the sensor when measuring distance or related variables. |
| Ultrasonic | Independent of the surface color or optical reflectivity of the sensing object; excellent repeatability and sensing accuracy; response is linear with distance. | Requires a hard flat surface; not immune to loud noise; slow measurements in proximity sensors; changes in the environment affect the response; targets with low density may absorb sound energy; minimum sensing distance required. |
| Optical encoding | Inherently digital (which makes the interface easy for control systems); fast measurements; long durability. | Fairly complex; delicate parts; low tolerance to mechanical abuse; low tolerance to high temperatures. |
| Magnetic | Non-contacting; high durability; high sensitivity; small size; output is highly linear. | Very sensitive to fabrication tolerances; calibration needed after installation. |

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Jesus, G.; Casimiro, A.; Oliveira, A. A Survey on Data Quality for Dependable Monitoring in Wireless Sensor Networks. Sensors 2017, 17, 2010. https://doi.org/10.3390/s17092010