1. Introduction

A GPS-based positioning system is a nearly ideal approach to UAV positioning in outdoor environments, and it is widely used in many kinds of devices, including smartphones and self-driving cars. Indoors, however, GPS is of limited use, and it is hard to find a satisfactory alternative sensor that provides accurate positioning information. Because of this, research into indoor localization systems is becoming increasingly popular and plays an important role in many complex applications of unmanned ground and aerial vehicles [1]. An indoor localization system can be applied to find the location of specified products in warehouses [2] or of firemen in a building, to assist the automatic driving system of an unmanned vehicle, and to help blind people walk inside a building [3,4].

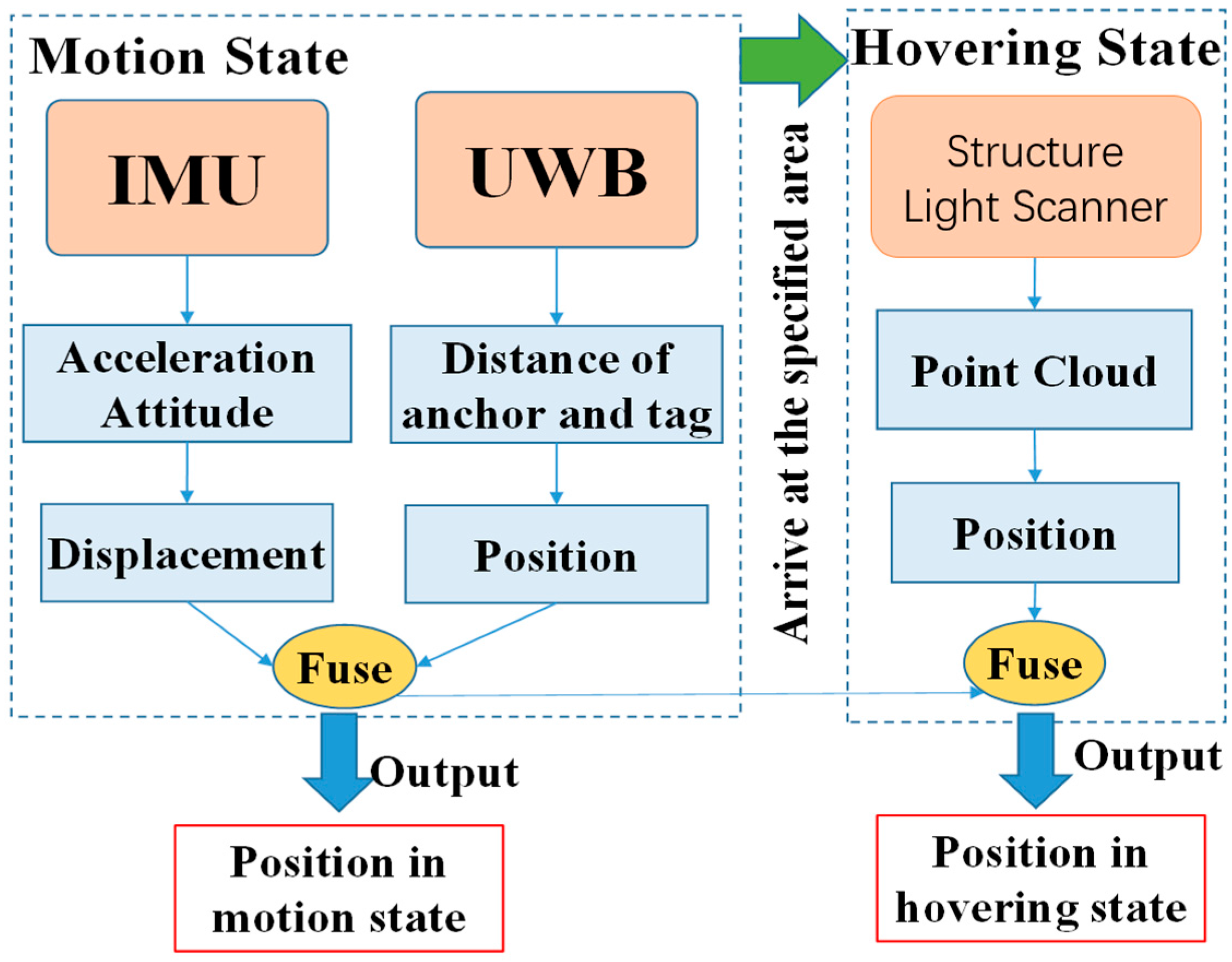

In some realistic applications, the required accuracy is demanding: carrying a box with a UAV, for example, requires positioning accuracy on the order of centimeters. However, designing and implementing an indoor positioning system for a UAV with that level of accuracy would be difficult and expensive. On the other hand, decimeter-level positioning accuracy is sufficient for vehicles in motion. In this article, we propose a low-cost heterogeneous sensing system based on an inertial navigation system (INS), ultra-wideband (UWB) ranging, and a structured light scanner to solve the above problem. While the UAV is in motion, positioning accuracy at the level of decimeters is achieved through the integrated navigation of the INS and UWB. When the UAV is executing tasks, a structured light scanner is introduced to provide more accurate positioning. The structured light scanner works only when the vehicle is in a hovering state.

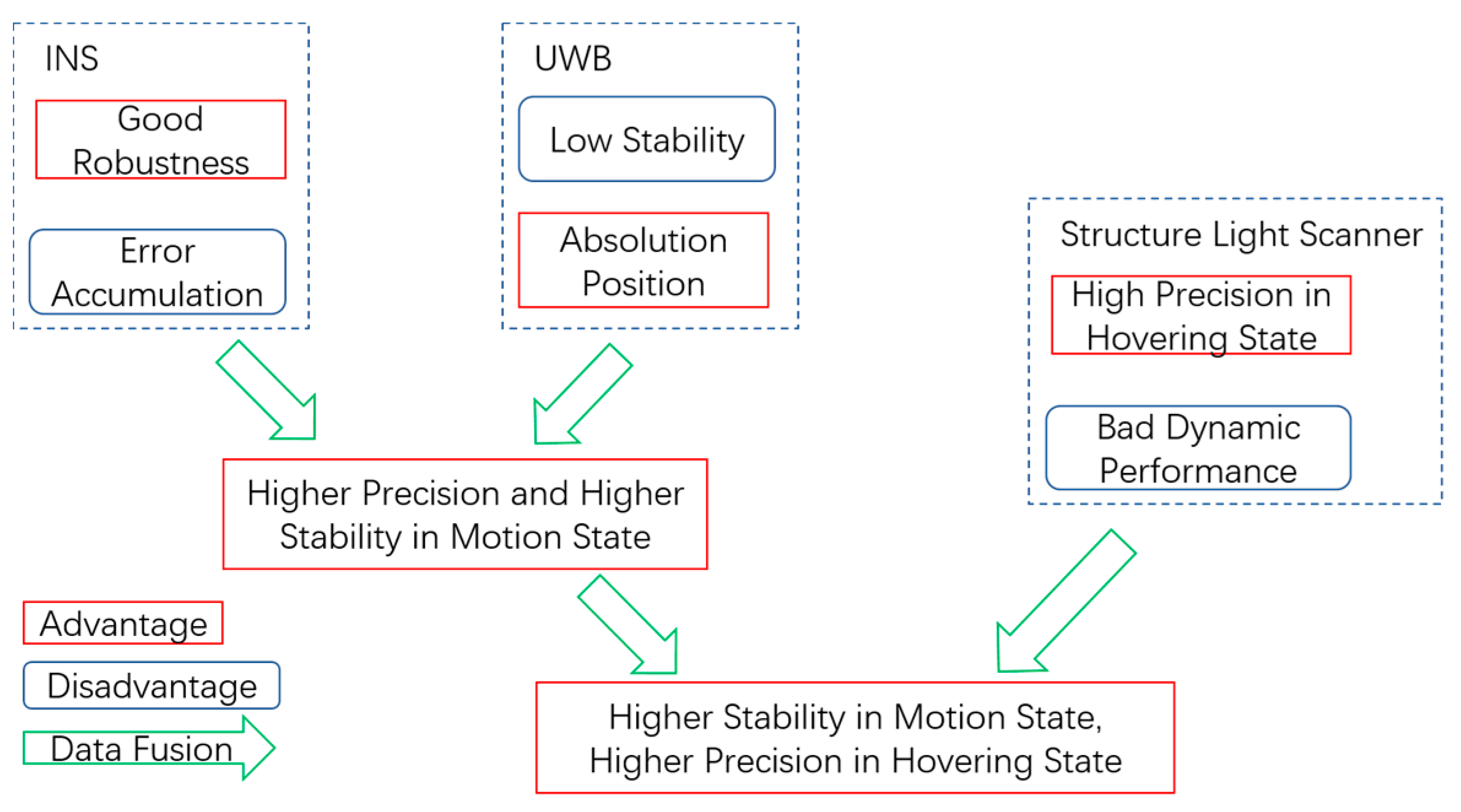

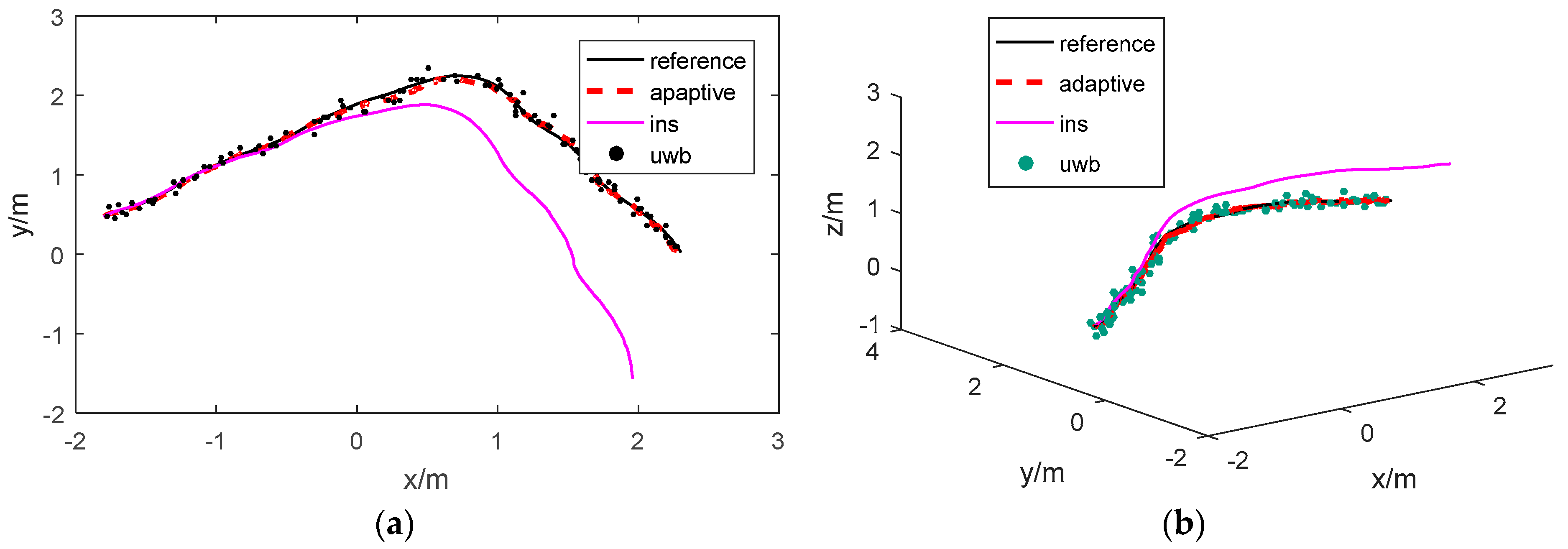

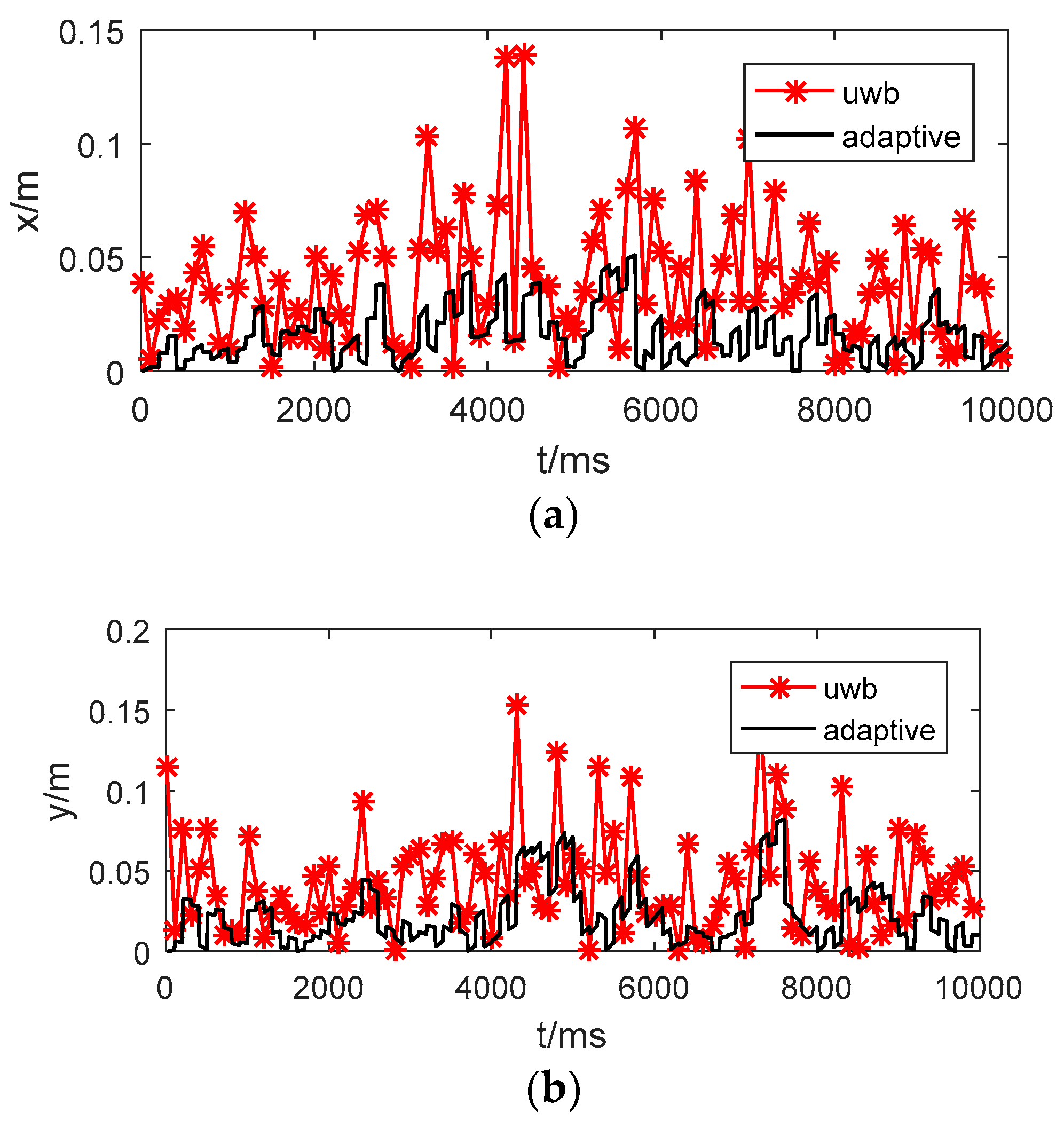

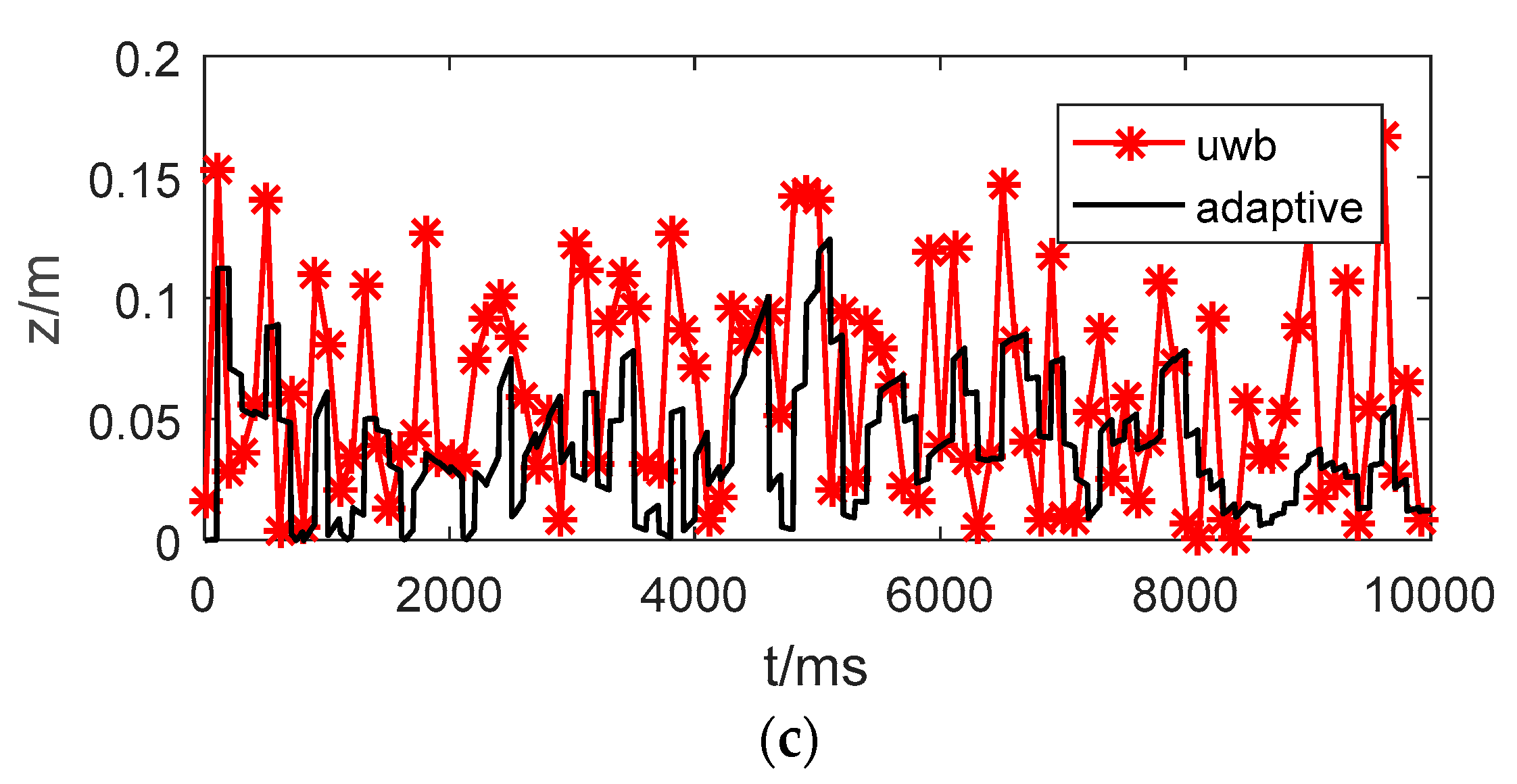

Figure 1 summarizes the advantages and disadvantages of the heterogeneous sensing system. With the fusion of the INS, UWB, and structured light scanner, it is possible to realize higher stability as well as precision for an apparatus in both the moving and hovering states. Although other technologies can provide higher accuracy, the proposed method stands out for its all-round performance in both the moving and hovering states and is a low-cost solution for UAV applications.

We applied adaptive Kalman filtering to fuse the data from the INS and UWB while the UAV is in motion. The accumulated errors of the INS will be corrected by the absolute positioning data from the UWB. While the UAV is hovering in the specified area, the structured light scanner will start to work as an auxiliary device to retrieve more accurate positioning information. The data fusion strategy integrates the positioning data measured by three different sensors into a consistent, accurate, and useful representation in this paper.

The main contributions of this paper include the following: (1) a self-made structured light scanner, which is used to improve the positioning accuracy of an apparatus in a hovering state; (2) adaptive Kalman filtering to fuse the data of the INS and UWB while in motion; (3) Gauss filtering to fuse the data of the UWB and the structured light scanner in a hovering state. Moreover, we conducted experiments and simulations to verify the effectiveness of the proposed strategy.

In the following section, we introduce the setup of the hardware platform and analyze the mathematical model of different components in detail. Then, the optimized data fusion strategy is described, including the fusion of the INS and UWB in motion and the fusion of the UWB and structured light scanner in a hovering state. Finally, the performance of the proposed strategy is verified by simulations and experiments, and the results show that with the complement of the structured light scanner, the UWB and the INS, a micro UAV is able to achieve indoor positioning with high precision over a long distance.

2. Related Work on Heterogeneous Sensing Systems

Indoor localization technology provides robots with the ability to finish tasks automatically by detecting the location of an object. High-precision and robust indoor navigation remains a difficult task, although new technologies have developed rapidly [5,6] in recent decades. The development of indoor localization systems benefits from the rapid growth of related sensors. Various sensors are utilized for indoor positioning tasks, such as cameras, Lidar, wireless sensor networks, IMUs (Inertial Measurement Units), and optical flow sensors [7,8,9]. Girish et al. integrated the data from an IMU and a monocular camera to accomplish indoor and outdoor navigation and control of unmanned aircraft [10]. Wang et al. designed a comprehensive UAV indoor navigation system based on INS, optical flow, and scanning Lidar, and the UAV, thus enhanced, was able to estimate its position accurately [11]. Huh et al. described an integrated navigation sensor module including a camera, a laser scanner, and an inertial sensor [12]. Tiemann et al. built a UWB indoor positioning system, which enabled the autonomous flight of a UAV [13]. Generally speaking, camera-based methods are sensitive to changes in lighting conditions and environment and are rarely robust. Methods based on wireless positioning technology are unstable in complex environments. Therefore, positioning by means of a single sensor rarely yields a satisfying result. In this paper, we focus on a setup based on a combination of INS, UWB, and a structured light scanner that is expected to provide improved positioning capability.

INS is a navigation system that uses the IMU to track the velocity, position, and orientation of a device. The development of Micro-Electro-Mechanical System (MEMS) technology has made it possible to easily place a small and low-cost IMU on the UAV platform. INS can provide high-precision navigation for a short period of time. As time passes, the accuracy declines rapidly because of the accumulated drift error caused by inherent defects [14].

A UWB wireless sensor network is a promising technology for indoor real-time localization. An indoor wireless positioning system consists of at least two separate hardware components: a signal transmitter and a measuring unit. The latter usually carries out the major “intelligence” work [15]. The measuring unit of the UWB makes use of Time of Flight (TOF), and its large bandwidth enables high-precision measurement [16]. An obvious difference between a conventional wireless network and a UWB network is that the former transmits information by varying the power level, frequency, or phase, whereas the latter transmits information by generating radio energy at specific time intervals and occupying a large bandwidth, thus enabling the positioning service. A UWB technique can provide a localization service with high precision and low power consumption. Note, however, that in some complex indoor environments, a UWB system will be influenced by various multipath phenomena, especially in non-line-of-sight conditions.

Many indoor navigation robots use line structured light vision measurement systems for mapping, localization, and obstacle avoidance [17,18]. We have designed a structured light scanner based on triangulation, built from a camera, a low-cost line laser, and some electronic devices. An important step in achieving this was to extract the medial axis (also called the skeleton) of the light stripe with subpixel accuracy. Skeletonization provides an effective and compact representation of an object by reducing its dimensionality to a “medial axis” or “skeleton,” while preserving the topology and geometric properties of the object [19]. An object in two dimensions is transformed into a curved skeleton containing one-dimensional structures.

A low-cost light stripe laser cannot be expected to match the performance of a high-quality industrial laser (e.g., narrow beam width, small divergence angle, and low beam propagation ratio), especially when the laser projects a light stripe onto a rough surface or a surface with high reflectance. The camera will then capture a fuzzy light stripe with strong diffuse reflection, or specular reflection will inevitably occur. Thus, the fuzzy distance transform (FDT), which is designed to deal with fuzzy objects, is adopted here to solve the problems caused by fuzzy light stripes.

Distance Transform (DT) is a process that iteratively assigns a value at each location within an object that is the shortest distance between that location and the complement of the object. However, the notion of a hard DT cannot be applied to fuzzy objects in a meaningful way. The notion of DT for fuzzy objects, called fuzzy distance transform (FDT), becomes more important in many imaging applications, because we often deal with situations that involve data inaccuracies, graded object compositions, or limited image resolution [20]. In general, FDT is useful, among other things, in feature extraction, determination of local thickness or scale computation, skeletonization, and the analysis of morphological and shape-based objects. Over the past few decades, DT has been popularly applied to hard objects only. Most DT methods approximate global Euclidean distance by propagating local distances between adjacent pixels.

INS, UWB, and a structured light scanner provide position information independently, but each has advantages and disadvantages. INS is used to quickly track the position of an object relative to a known starting point with high precision, but it suffers from accumulated error. UWB can provide absolute positioning information, but its results are unstable due to the complexities of the indoor environment. A structured light scanner is capable of achieving centimeter-level accuracy, but it only works while the vehicle is hovering in a specified area. In order to utilize the above information effectively, a heterogeneous sensing system is constructed, and an optimized data fusion strategy is designed to fuse information gleaned from different sensors. Data fusion is a technology that combines information from several sources in order to form a unified result, which has several advantages, including enhanced data authenticity and availability. Data fusion technology is now widely deployed in many fields such as sensor networks, unmanned ground and aerial vehicles, robotics and image processing, and intelligent systems [21,22,23]. Generally, the data fusion of multi-sensor devices provides significant advantages over single-source data, and the combination of multiple types of sensors may increase the accuracy of the result.

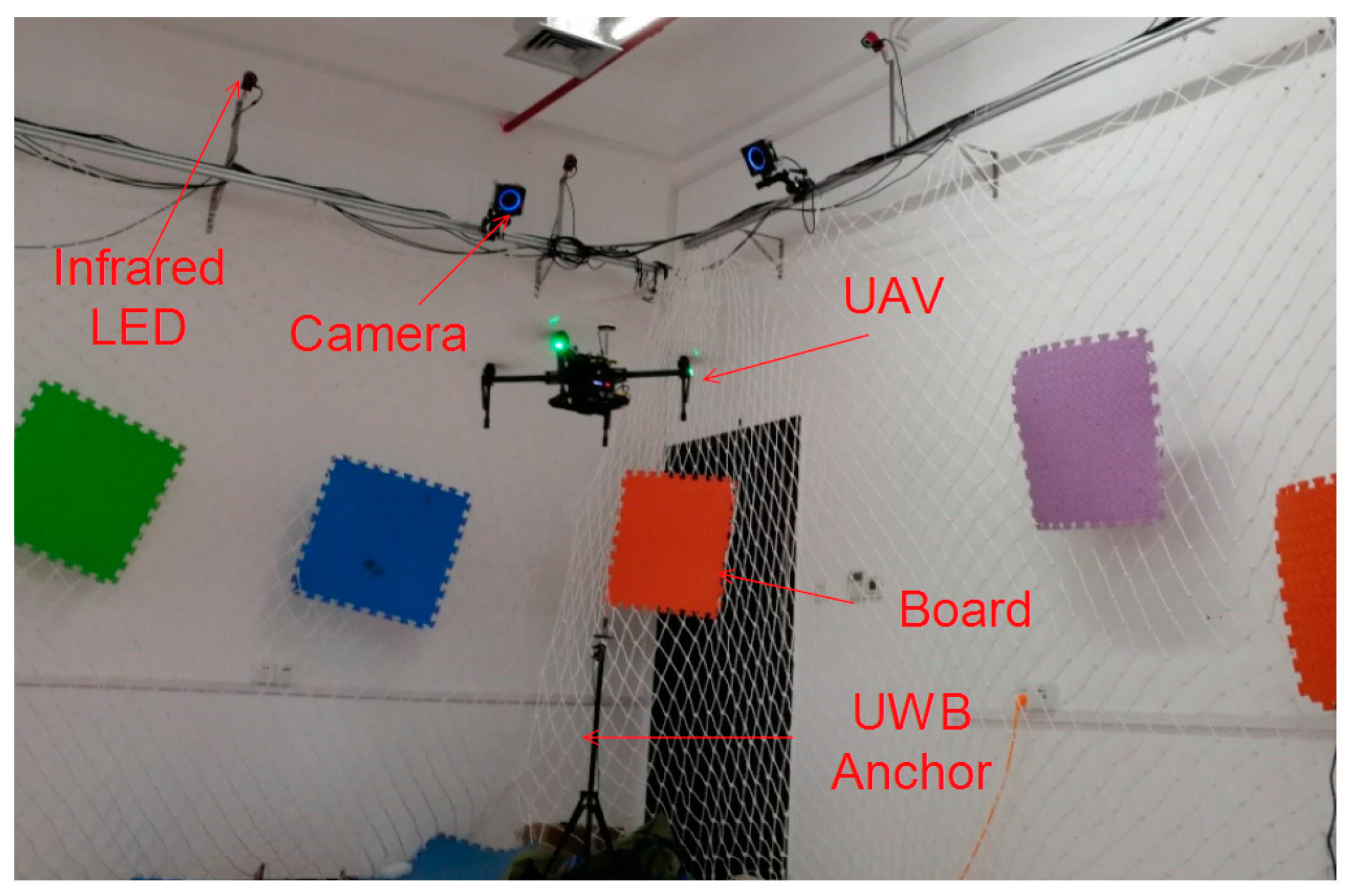

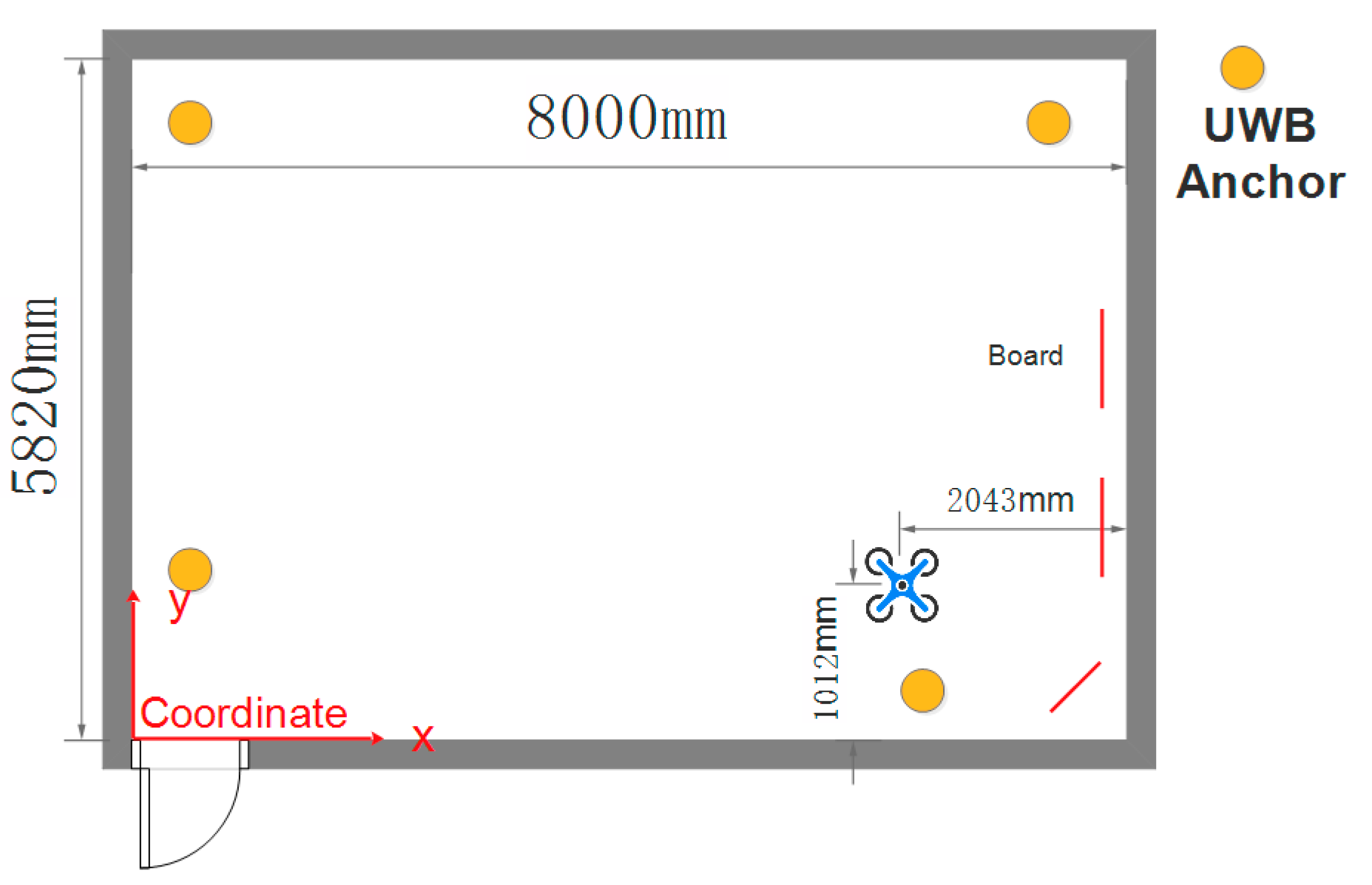

3. Hardware Setup

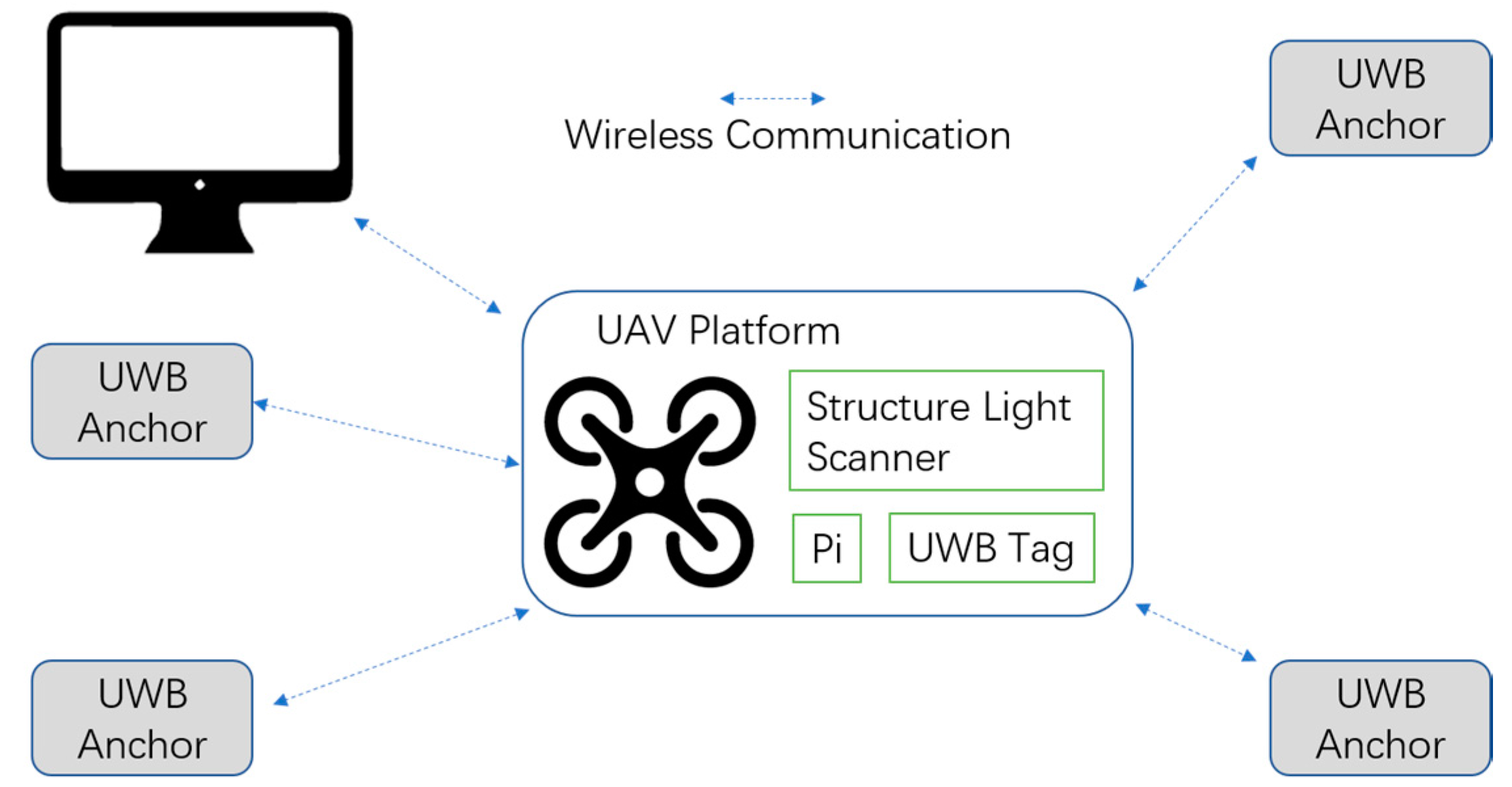

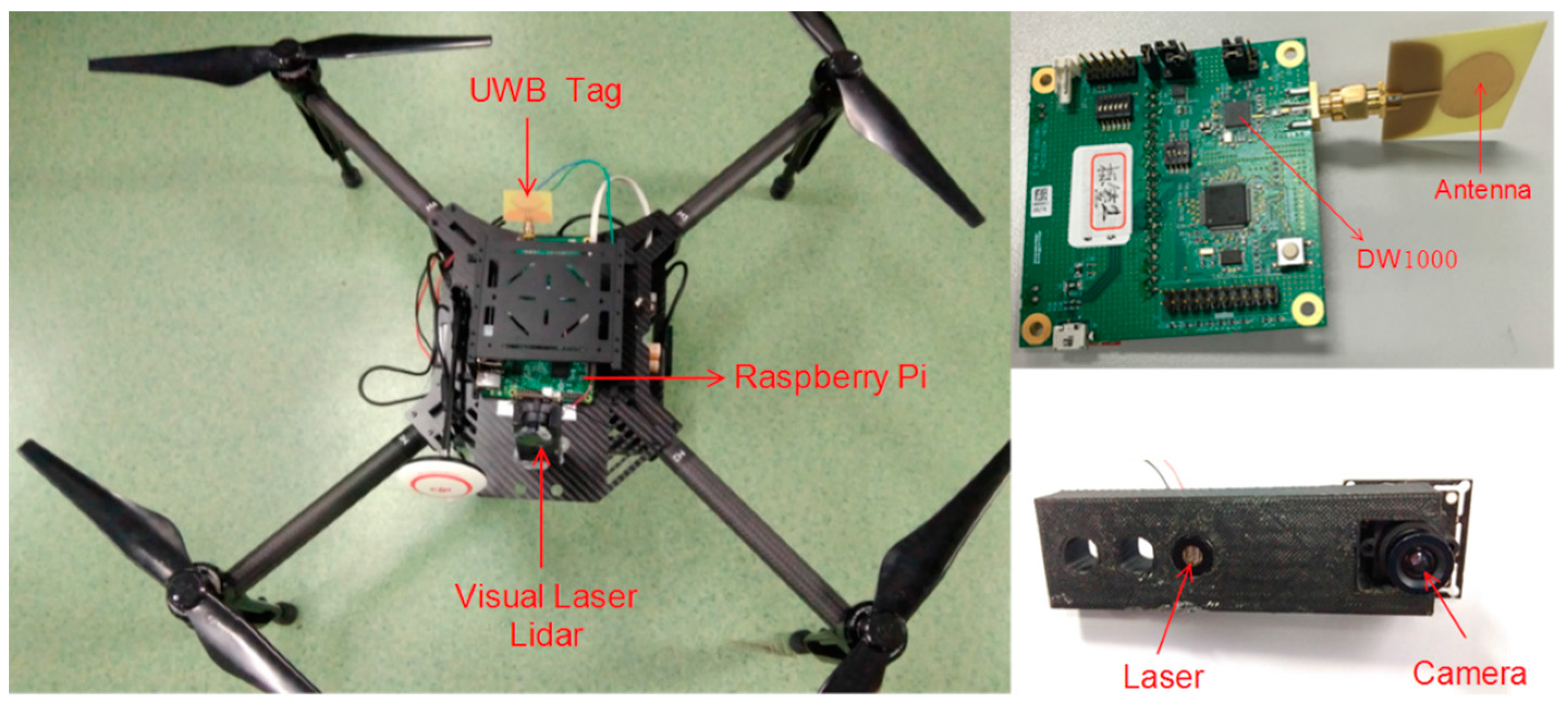

The hardware platform that we used is composed of a PC (Windows 10, i5-7300HQ CPU, 8 GB DDR3 memory), a micro UAV (DJI M100, DJI-Innovations, Shenzhen, China), a Raspberry Pi 3B, an IMU (integrated in the UAV), a UWB module (DW1000), and a self-made structured light scanner that can obtain position information along the x and y axes. The system architecture is illustrated in Figure 2. The main components are presented in Figure 3. The computation was carried out on the Raspberry Pi, except for the structured light scanner algorithm, which ran on the PC; its results were transmitted to the Raspberry Pi through a Wi-Fi connection.

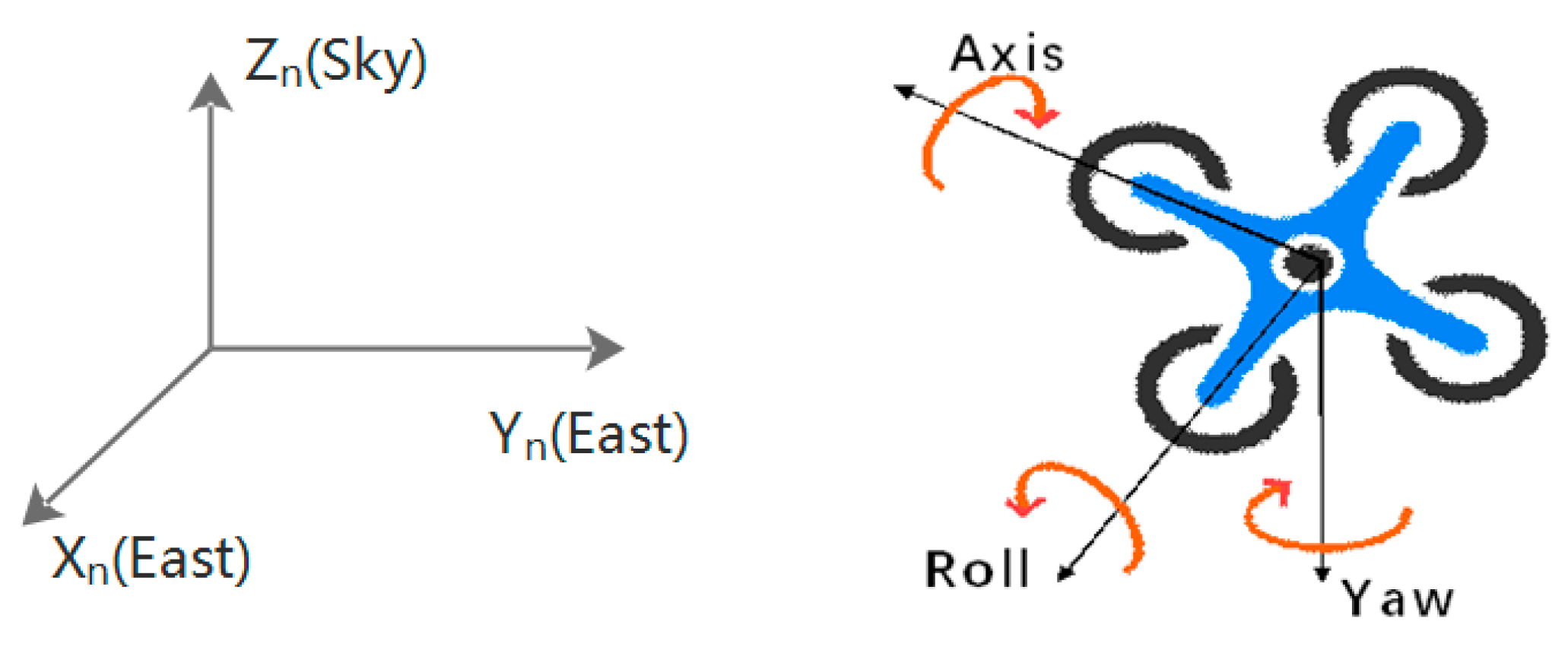

A nine-axis IMU is integrated in the micro UAV, including three mono-axial accelerometers for the detection of the current rate of acceleration; three mono-axial gyroscopes for the detection of changes in rotational attributes like pitch, roll, and yaw; and three magnetometers for the detection of the orientation of the body.

The UWB module is built around the DW1000, a fully integrated, low-power, single-chip CMOS radio transceiver IC compliant with the IEEE 802.15.4-2011 ultra-wideband (UWB) standard. The module supports two-way ranging exchanges. Four anchor sensors with known positions serve as base stations, and tag sensors placed on the UAV are used for positioning. The system allows objects to be located in real-time location systems (RTLS) to a precision of 15 cm indoors and achieves a communication range of up to 150 m, thanks to coherent receiver techniques. The application of UWB in complex environments is still a challenge: due to NLOS (non-line-of-sight) and multipath effects, the positioning accuracy and robustness of the UWB are hard to guarantee. In this article, only line-of-sight propagation is taken into account.
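To illustrate how ranges to anchors with known positions can yield a tag position, the following is a minimal sketch, not the implementation used in this work. It assumes single-sided two-way ranging with a known reply delay and a simplified 2D layout; the function names `twr_distance` and `locate_2d` and the anchor coordinates are hypothetical, and no DW1000 driver call is used.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # m/s


def twr_distance(t_round, t_reply):
    """Range from single-sided two-way ranging (assumed scheme).

    t_round: time between sending the poll and receiving the response (s).
    t_reply: processing delay of the responding node (s).
    """
    return SPEED_OF_LIGHT * (t_round - t_reply) / 2.0


def locate_2d(anchors, ranges):
    """Least-squares 2D position from ranges to at least three anchors.

    Subtracting the first range equation from the others removes the
    quadratic terms and leaves linear rows a*x + b*y = c.
    """
    (x0, y0), r0 = anchors[0], ranges[0]
    saa = sab = sbb = sac = sbc = 0.0
    for (xi, yi), ri in zip(anchors[1:], ranges[1:]):
        a = 2.0 * (xi - x0)
        b = 2.0 * (yi - y0)
        c = r0**2 - ri**2 + xi**2 - x0**2 + yi**2 - y0**2
        saa += a * a
        sab += a * b
        sbb += b * b
        sac += a * c
        sbc += b * c
    det = saa * sbb - sab * sab  # determinant of the 2x2 normal equations
    if abs(det) < 1e-12:
        raise ValueError("anchors are collinear; position is not observable")
    return ((sac * sbb - sab * sbc) / det, (saa * sbc - sab * sac) / det)


# Hypothetical layout: four anchors at the corners of a 10 m x 10 m room.
anchors = [(0.0, 0.0), (10.0, 0.0), (10.0, 10.0), (0.0, 10.0)]
true_position = (3.0, 4.0)
ranges = [math.hypot(true_position[0] - ax, true_position[1] - ay)
          for ax, ay in anchors]
estimate = locate_2d(anchors, ranges)
```

With noise-free ranges the estimate coincides with the true position; with noisy ranges the normal equations give the least-squares compromise between the anchors.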

The low-cost structured light scanner made for this project is composed of a structured light device emitting a line laser (908 nm) and an infrared camera, both fixed on a platform. The infrared camera is covered with an optical filter, which absorbs 99.99% of the visible light and transmits 80% of the infrared light at 908 nm, thus ensuring that the light of the laser is clearly displayed in the image. This design improves the quality of the image significantly and reduces the difficulty of image processing. More details about the structured light scanner are given in Section 4.

Ultrasound sensors are usually used for altitude control, but the errors of ultrasound sensors are hard to evaluate. Any obstacles on the floor, for example, would affect the accuracy of the sensors. In order to accurately evaluate the effectiveness of the proposed method, we did not employ ultrasound sensors.

5. Principle of Structured Light Scanner

5.1. Overview of the Structured Light Scanner

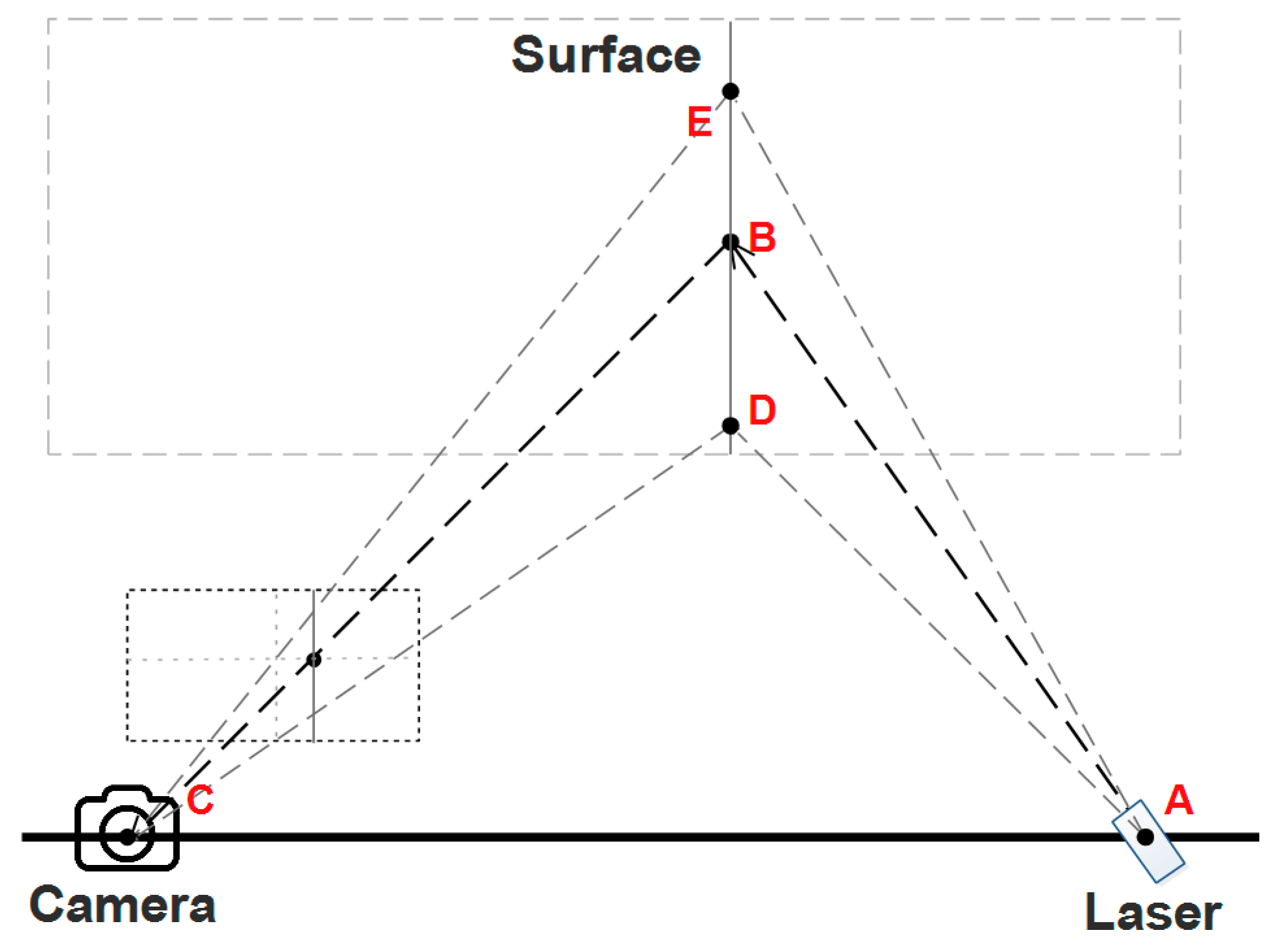

The principle of a structured light scanner based on triangulation is explained in Figure 5. The scanner projects a laser line onto the surface of the object, and a photo of the line is captured by a CMOS camera. As the laser is fixed onto the platform, the equation of the plane AED can be calculated through a calibration process. The equation of the line CB can be calculated from a perspective projection model. The position of point B can then be determined as the intersection of line CB with plane AED, and likewise for the other points on the projected curve ED.
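The triangulation step can be sketched as a ray-plane intersection. The snippet below is only an illustration under stated assumptions: a pinhole camera at the origin looking along +z, and a laser plane written as n · x + d = 0. The intrinsics (`fx`, `fy`, `cx`, `cy`) and the plane coefficients are hypothetical values, not the calibration of the scanner described here.

```python
def pixel_to_ray(u, v, fx, fy, cx, cy):
    """Back-project pixel (u, v) to a viewing direction (pinhole model)."""
    return ((u - cx) / fx, (v - cy) / fy, 1.0)


def intersect_ray_plane(origin, direction, normal, d):
    """Intersect the ray origin + t * direction with the plane normal . x + d = 0."""
    denom = sum(n * v for n, v in zip(normal, direction))
    if abs(denom) < 1e-12:
        raise ValueError("viewing ray is parallel to the laser plane")
    t = -(sum(n * o for n, o in zip(normal, origin)) + d) / denom
    return tuple(o + t * v for o, v in zip(origin, direction))


# Hypothetical calibration: camera centre C at the origin,
# laser plane x + z - 1 = 0 (normal (1, 0, 1), d = -1).
camera_centre = (0.0, 0.0, 0.0)
ray = pixel_to_ray(920.0, 240.0, fx=600.0, fy=600.0, cx=320.0, cy=240.0)
point_b = intersect_ray_plane(camera_centre, ray, normal=(1.0, 0.0, 1.0), d=-1.0)
```

Repeating this for every skeleton pixel of the stripe gives one 3D point per pixel, which is why the subpixel quality of the extracted skeleton directly bounds the depth accuracy.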

In order to retrieve the depth values for each point on the curve ED, which is a projection of the laser stripe on the object surface, we need to extract the projected position for each point on the curve ED in the image plane of the camera. Instead of an ideal curve, ED is actually a stripe with a certain width. Therefore, a skeletonization algorithm may be applied to extract the skeleton of the projected curve in the image plane. In this paper, we utilized a skeletonization algorithm based on FDT theory, and the details are described in the following section.

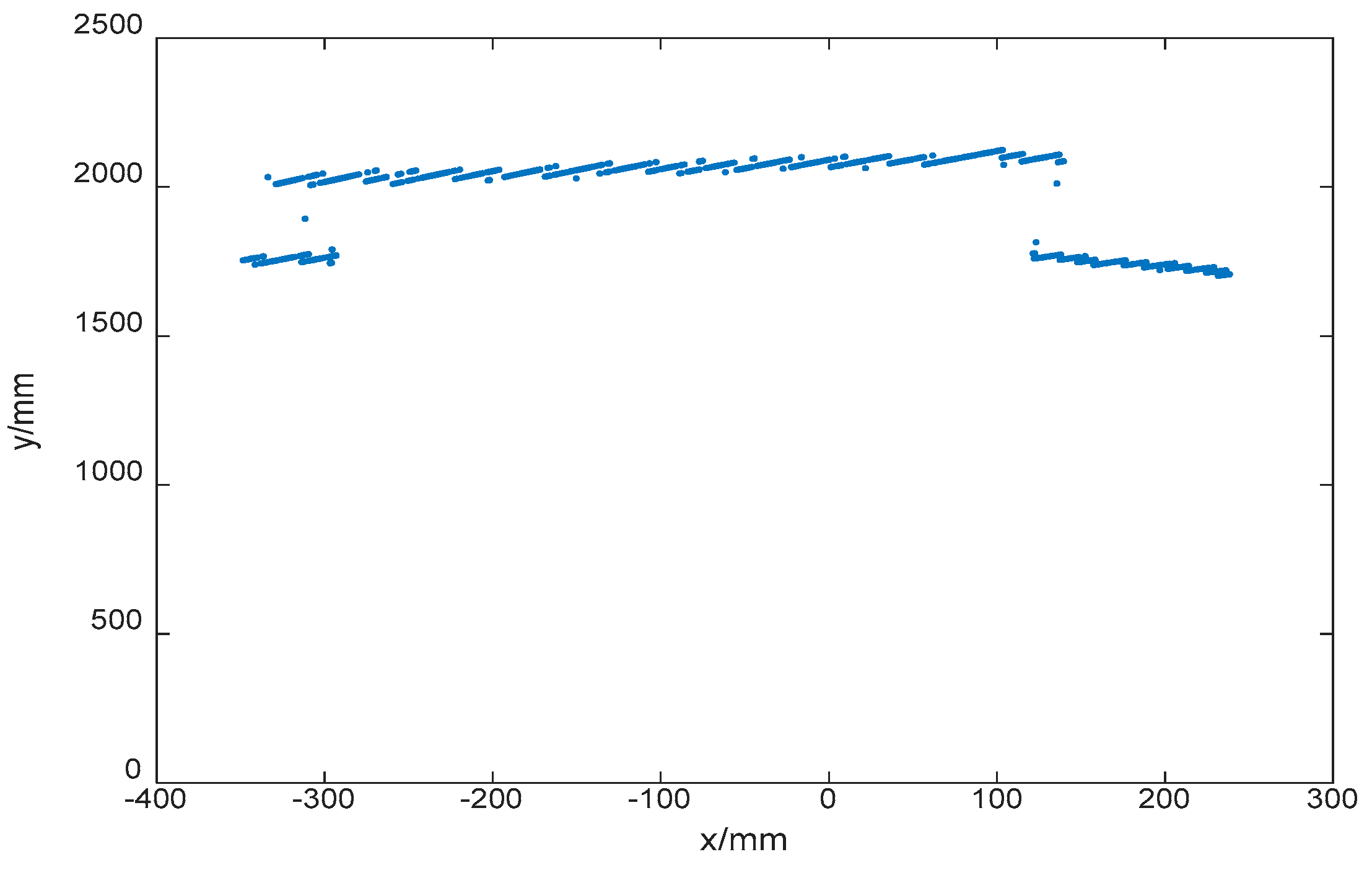

Once the depth value of the object is retrieved, we can utilize SLAM (simultaneous localization and mapping) technology to localize the device. The ICP (Iterative Closest Point) algorithm is adopted here to accomplish the localization, and it is assumed that the map of the environment is already known.

In order to get the positioning information, the rotation matrix $R$ and the translation vector $T$ are calculated by matching the point clouds with the ICP algorithm, which minimizes the sum of the squared errors. The squared error is defined as:

$$E(R, T) = \frac{1}{N} \sum_{i=1}^{N} \left\| q_i - (R\,p_i + T) \right\|^2$$

where $p_i$ is a point of the source point cloud, $q_i$ is the corresponding reference point, and $N$ is the number of points.
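As an illustration of how such a squared-error objective can be minimized, the following sketch runs a basic point-to-point ICP in 2D, matching the two horizontal axes measured by the scanner. It is an assumption-laden example rather than the implementation used here: correspondences are found by brute-force nearest neighbour, and the function names are hypothetical.

```python
import math


def best_rigid_transform_2d(source, reference):
    """Closed-form least-squares rotation angle and translation for paired 2D points."""
    n = len(source)
    sx = sum(p[0] for p in source) / n
    sy = sum(p[1] for p in source) / n
    rx = sum(q[0] for q in reference) / n
    ry = sum(q[1] for q in reference) / n
    s_dot = s_cross = 0.0
    for (px, py), (qx, qy) in zip(source, reference):
        ax, ay = px - sx, py - sy  # centred source point
        bx, by = qx - rx, qy - ry  # centred reference point
        s_dot += ax * bx + ay * by
        s_cross += ax * by - ay * bx
    theta = math.atan2(s_cross, s_dot)
    c, s = math.cos(theta), math.sin(theta)
    return theta, (rx - (c * sx - s * sy), ry - (s * sx + c * sy))


def apply_transform(points, theta, t):
    """Rotate points by theta and translate them by t."""
    c, s = math.cos(theta), math.sin(theta)
    return [(c * x - s * y + t[0], s * x + c * y + t[1]) for x, y in points]


def icp_2d(source, reference, iterations=20):
    """Alternate nearest-neighbour matching and closed-form alignment."""
    theta, t = 0.0, (0.0, 0.0)
    for _ in range(iterations):
        moved = apply_transform(source, theta, t)
        matched = [min(reference,
                       key=lambda q: (q[0] - p[0]) ** 2 + (q[1] - p[1]) ** 2)
                   for p in moved]
        theta, t = best_rigid_transform_2d(source, matched)
    return theta, t


# Hypothetical map points and a scan taken from a slightly displaced pose.
reference = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0), (2.0, 1.0), (2.0, 2.0)]
scan = apply_transform(reference, -0.05, (0.1, -0.05))
theta, t = icp_2d(scan, reference)
aligned = apply_transform(scan, theta, t)
```

Like any ICP variant, this only converges to the right pose when the initial displacement is small relative to the spacing of the map points, which is one reason a coarse prior position from another sensor is useful.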

5.2. FDT Theory in Digital Cubic Space

The accuracy of the depth estimation depends mainly on the skeletonization algorithm. FDT theory is adopted here for the extraction of the skeleton. We used a dynamic programming–based algorithm to calculate the FDT values.

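Let $X$ be the reference set; then a fuzzy subset $A$ of $X$ is defined as a set of ordered pairs $A = \{(x, \mu_A(x)) \mid x \in X\}$, where $\mu_A : X \to [0, 1]$ is the membership function of $A$ in $X$. A 2D fuzzy digital object $O$ is a fuzzy subset defined on $Z^2$, i.e., $O = \{(p, \mu_O(p)) \mid p \in Z^2\}$, where $\mu_O : Z^2 \to [0, 1]$. A pixel $p$ belongs to the support $\Theta(O)$ of $O$ if $\mu_O(p) > 0$.

The fuzzy distance between two points $p$ and $q$ in a fuzzy object $O$ is defined as being the length of the shortest path between $p$ and $q$. The fuzzy distance $d_O(p, q)$ between adjacent points $p$ and $q$ in $O$ is set to

$$d_O(p, q) = \frac{1}{2}\left(\mu_O(p) + \mu_O(q)\right) \cdot \|p - q\|$$

where $\|p - q\|$ is the spatial Euclidean distance between $p$ and $q$, i.e., 1 if $p$ and $q$ are edge neighbors and $\sqrt{2}$ if they are vertex neighbors. We use $\Omega$ to denote the value of a point in an FDT and $d_O$ for the distance function used to calculate an FDT. Algorithm 1 is presented as follows.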

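Algorithm 1: Compute FDT

Input: a fuzzy object $O = (Z^2, \mu_O)$ with support $\Theta(O)$; $N(p)$ denotes the set of neighbors of a point $p$, and $Q$ is a queue of points.

Output: an image $(Z^2, \Omega)$ representing the FDT of $O$.

1. For all $p \notin \Theta(O)$, set $\Omega(p) = 0$;

2. For all $p \in \Theta(O)$, set $\Omega(p) = \mathrm{MAX}$;

3. For all $p \in \Theta(O)$ such that $N(p) \cap \overline{\Theta(O)}$ is not empty, push $p$ into $Q$;

4. While $Q$ is not empty do

5. Remove a point $p$ from $Q$;

6. Find $dist_{min} = \min_{q \in N(p)} \left[\Omega(q) + \frac{1}{2}\left(\mu_O(p) + \mu_O(q)\right) \cdot \|p - q\|\right]$;

7. If $dist_{min} < \Omega(p)$ then

8. Set $\Omega(p) = dist_{min}$;

9. Push all points $q \in N(p) \cap \Theta(O)$ into $Q$;

10. Output the FDT image $(Z^2, \Omega)$.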
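The queue-based propagation in Algorithm 1 can be sketched in a few lines. This is a minimal illustration, not the implementation used in the experiments. It assumes an 8-neighbourhood, a link length of half the sum of the two memberships times the Euclidean step, and that pixels outside the image are simply ignored rather than treated as background.

```python
import math
from collections import deque

NEIGHBOURS = [(-1, -1), (-1, 0), (-1, 1), (0, -1), (0, 1), (1, -1), (1, 0), (1, 1)]


def fuzzy_distance_transform(mu):
    """Compute the FDT of a 2D membership image given as a list of rows in [0, 1]."""
    rows, cols = len(mu), len(mu[0])
    inf = float("inf")

    def inside(r, c):
        return 0 <= r < rows and 0 <= c < cols

    # Steps 1-2: background gets 0, support gets a "MAX" placeholder.
    fdt = [[inf if mu[r][c] > 0 else 0.0 for c in range(cols)] for r in range(rows)]

    # Step 3: seed the queue with support pixels that touch the background.
    queue = deque()
    for r in range(rows):
        for c in range(cols):
            if mu[r][c] > 0 and any(
                inside(r + dr, c + dc) and mu[r + dr][c + dc] == 0
                for dr, dc in NEIGHBOURS
            ):
                queue.append((r, c))

    # Steps 4-9: relax distances until no value can be lowered further.
    while queue:
        r, c = queue.popleft()
        best = inf
        for dr, dc in NEIGHBOURS:
            nr, nc = r + dr, c + dc
            if inside(nr, nc):
                link = 0.5 * (mu[r][c] + mu[nr][nc]) * math.hypot(dr, dc)
                best = min(best, fdt[nr][nc] + link)
        if best < fdt[r][c]:
            fdt[r][c] = best
            for dr, dc in NEIGHBOURS:
                nr, nc = r + dr, c + dc
                if inside(nr, nc) and mu[nr][nc] > 0:
                    queue.append((nr, nc))
    return fdt


# A one-pixel-high stripe profile: bright core, dimmer fuzzy edges.
profile = fuzzy_distance_transform([[0.0, 0.5, 1.0, 0.5, 0.0]])
```

In this toy profile the FDT peaks at the bright centre pixel, which is the property a skeletonization step exploits: ridge points of the FDT are candidates for the medial axis of the stripe.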

5.3. Experiments for FDT Theory

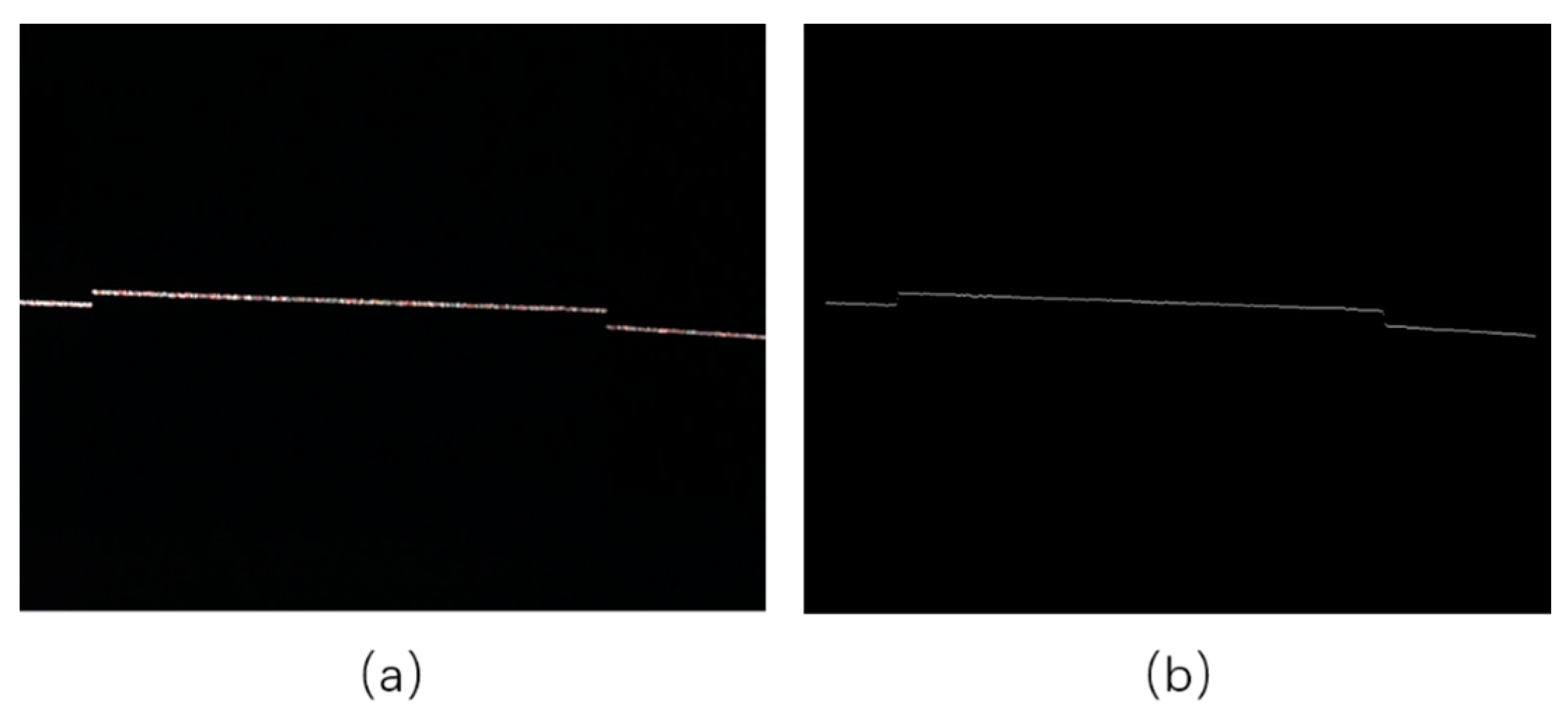

Since an image contains only one light stripe, we first extract and segment the stripe from the image to reduce the computational complexity. Then, we enhance the contrast and convert the color image to grayscale. Finally, we distinguish between the target part and the background part with thresholds set by the mean intensity values of the image. The process is illustrated in Figure 6.

In this experiment, we verify the effectiveness of the proposed method based on FDT when extracting the skeleton of the light stripe. Compared with existing methods, the proposed algorithm can effectively deal with the highlighted part of the stripe due to the strong diffuse reflection.

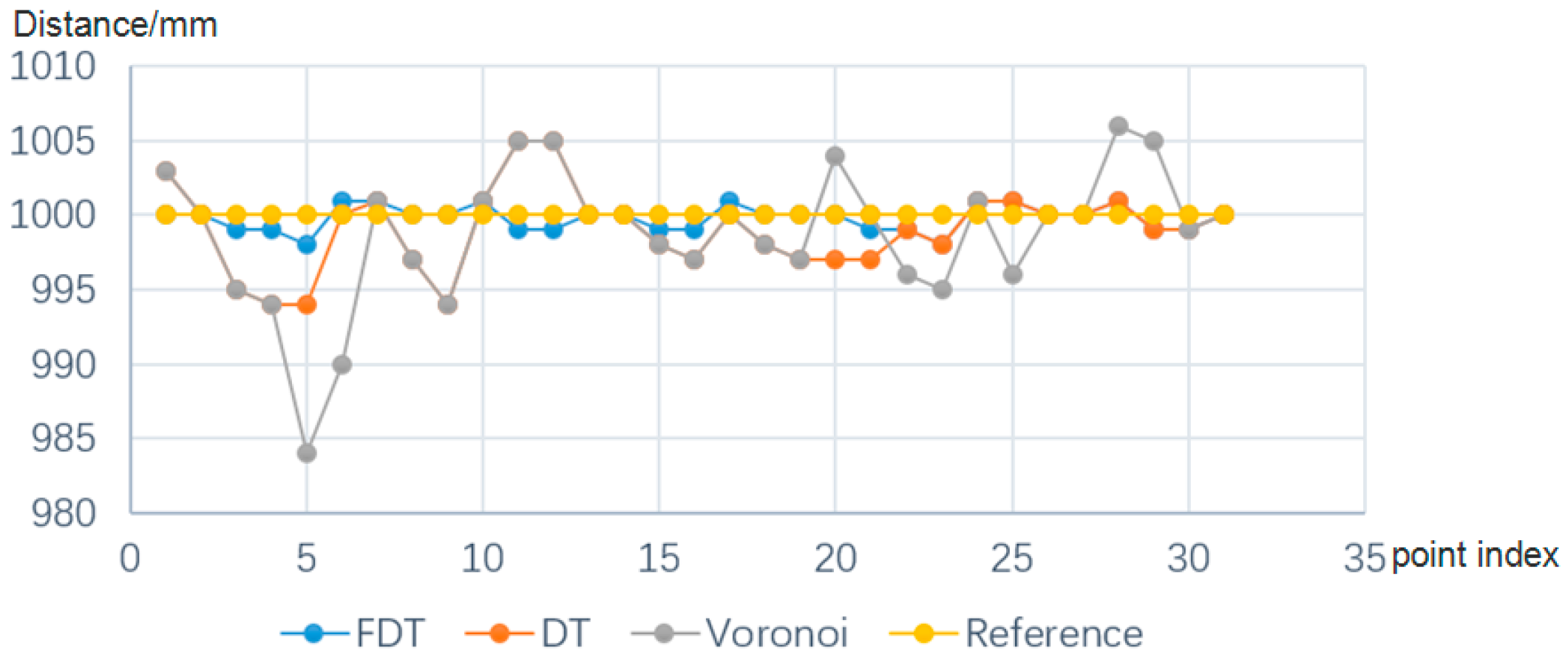

Figure 7 shows the skeletonization process, including the original image, the image after FDT, and the extracted skeleton of the light stripe. In order to evaluate the performance of different methods, we implemented three algorithms (FDT, DT, and Voronoi), calculated the corresponding distance every five pixels along the skeleton, and compared these distances with a reference. Figure 8 illustrates the comparison result; the FDT curve is the most similar to the reference curve, which indicates that the proposed FDT-based method performs best. The mean distance errors between corresponding pixels are shown in Table 1.

Furthermore, a structured light scanner will work only when the UAV hovers, as the tilt or motion of the UAV body would cause poor performance. Also, it only provides positioning information for two dimensions of the horizontal plane. Thus, it works as an auxiliary device to improve the positioning accuracy when the vehicle is in a hovering state.

6. Data Fusion Strategy

A data fusion algorithm, which fuses the data from the INS, UWB and structured light scanner, is proposed in this section to achieve better positioning accuracy.

The overall information processing flow and data fusion strategy are illustrated in Figure 9. While the UAV is in motion, the system fuses the information of the IMU and UWB with an adaptive Kalman filter [25]. When the UAV arrives at the specified area, it enters the hovering state to execute tasks, and the structured light scanner is activated to obtain positioning information. The system then fuses the data of the UWB and the structured light scanner with Gauss filtering in the hovering state.

6.1. Adaptive Kalman Filtering to Fuse the Data of INS and UWB in Motion State

A Kalman filter is a recursive estimator working in the time domain. It is assumed that the state model is linear and that the noise follows a Gaussian distribution. Only the estimate from the previous time step and the measurement at the current time step are required to produce the estimate of the current state. The algorithm alternates between a prediction phase and an update phase. The prediction phase predicts the state at the current time, and the update phase estimates the optimal state by incorporating the observation.

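In a standard Kalman filter, the state equation is:

$$x_k = A x_{k-1} + B u_k + w_k$$

where $x_k$ denotes the system's state at time step $k$, and $A$ is the state transition model. $B$ is the control-input model, which is applied to the control vector $u_k$. $w_k$ is the process noise, which is assumed to fit $w_k \sim N(0, Q)$. The specific definitions are:

$$x_k = \begin{bmatrix} p_k \\ v_k \end{bmatrix}$$

where $p_k$ is the position, and $v_k$ is the velocity;

$$A = \begin{bmatrix} 1 & \Delta t \\ 0 & 1 \end{bmatrix}$$

where $\Delta t$ is the time interval;

$$B = \begin{bmatrix} \Delta t^2 / 2 \\ \Delta t \end{bmatrix}, \quad u_k = a_k$$

where $a_k$ is the acceleration.

The measurement equation is:

$$z_k = H x_k + n_k$$

where $z_k$ is the observation. $H$ is the observation model, which maps the true state space into the observed space. $n_k$ is the observation noise, which is assumed to be zero-mean Gaussian white noise with covariance $R$. With the UWB supplying the position measurement, the specific definitions are:

$$z_k = p_k^{UWB}, \quad H = \begin{bmatrix} 1 & 0 \end{bmatrix}$$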
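The prediction and update phases can be sketched for a single axis as follows. This is an illustrative example under assumptions, not the filter used in the experiments: the state is (position, velocity), the INS acceleration is the control input, the UWB measures position only, and the numeric values are hypothetical.

```python
def kf_predict(x, P, a, dt, Q):
    """Prediction: propagate state and covariance with a constant-acceleration model."""
    p, v = x
    x_pred = (p + v * dt + 0.5 * a * dt * dt, v + a * dt)
    # P_pred = A P A^T + Q with A = [[1, dt], [0, 1]], written out element-wise.
    p00 = P[0][0] + dt * (P[1][0] + P[0][1]) + dt * dt * P[1][1]
    p01 = P[0][1] + dt * P[1][1]
    p10 = P[1][0] + dt * P[1][1]
    p11 = P[1][1]
    P_pred = [[p00 + Q[0][0], p01 + Q[0][1]],
              [p10 + Q[1][0], p11 + Q[1][1]]]
    return x_pred, P_pred


def kf_update(x, P, z, r):
    """Update: correct the prediction with a position measurement z of variance r."""
    residual = z - x[0]              # measurement minus predicted measurement
    s = P[0][0] + r                  # residual variance H P H^T + R with H = [1, 0]
    k0, k1 = P[0][0] / s, P[1][0] / s  # Kalman gain
    x_new = (x[0] + k0 * residual, x[1] + k1 * residual)
    # P_new = (I - K H) P
    P_new = [[(1.0 - k0) * P[0][0], (1.0 - k0) * P[0][1]],
             [P[1][0] - k1 * P[0][0], P[1][1] - k1 * P[0][1]]]
    return x_new, P_new


# One cycle with hypothetical numbers: INS acceleration, then a UWB position fix.
x, P = (0.0, 1.0), [[1.0, 0.0], [0.0, 1.0]]
x, P = kf_predict(x, P, a=2.0, dt=1.0, Q=[[0.0, 0.0], [0.0, 0.0]])
x, P = kf_update(x, P, z=2.5, r=1.0)
```

Between UWB fixes only `kf_predict` runs, so the position variance grows with every INS step; each UWB fix then pulls it back down, which is how the absolute measurement bounds the inertial drift.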

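Adaptive Kalman filtering is developed based on conventional Kalman filtering to estimate the system noise variance-covariance matrix $Q$ and the measurement noise variance-covariance matrix $R$ dynamically. It estimates the system and measurement noise from residual sequences. The residual sequence is defined as:

$$r_k = z_k - \hat{z}_k$$

where $z_k$ is the output of measurement, and $\hat{z}_k$ is the prediction of measurement. $\hat{z}_k$ is defined as:

$$\hat{z}_k = H \hat{x}_k^-$$

where $H$ is the measurement matrix, and $\hat{x}_k^-$ is the prediction of the state.

We assume that the measurement noise fits a Gaussian distribution, and then the probability density function of the measurement is:

$$P(z \mid \alpha)_k = \frac{1}{\sqrt{(2\pi)^m \left|C_{r_k}\right|}} \exp\left(-\frac{1}{2} r_k^T C_{r_k}^{-1} r_k\right)$$

where $\alpha$ is the adaptive parameter, $m$ is the number of measurements, and $C_{r_k} = H P_k^- H^T + R_k$ is the covariance of the residual, with $P_k^-$ the covariance of the predicted state. When the length of the window is $N$, with $j_0 = k - N + 1$, the estimation based on the maximum likelihood is:

$$\sum_{j=j_0}^{k} \ln\left|C_{r_j}\right| + \sum_{j=j_0}^{k} r_j^T C_{r_j}^{-1} r_j = \min$$

After the differential operation, the expression is:

$$\sum_{j=j_0}^{k} \left[ \mathrm{tr}\left( C_{r_j}^{-1} \frac{\partial C_{r_j}}{\partial \alpha_k} \right) - r_j^T C_{r_j}^{-1} \frac{\partial C_{r_j}}{\partial \alpha_k} C_{r_j}^{-1} r_j \right] = 0$$

Then, we can get the base function of the adaptive Kalman filtering based on the maximum likelihood:

$$\sum_{j=j_0}^{k} \mathrm{tr}\left\{ \left[ C_{r_j}^{-1} - C_{r_j}^{-1} r_j r_j^T C_{r_j}^{-1} \right] \left[ \frac{\partial R_j}{\partial \alpha_k} + H \frac{\partial P_j^-}{\partial \alpha_k} H^T \right] \right\} = 0$$

In order to get the estimation value of $R$, we assume that the system noise $Q$ is independent of the adaptive parameter $\alpha$ and that the value of $Q$ is known. Let $\alpha_i = R_{ii}$, and then we can get:

$$\sum_{j=j_0}^{k} \mathrm{tr}\left\{ C_{r_j}^{-1} \left[ C_{r_j} - r_j r_j^T \right] C_{r_j}^{-1} \right\} = 0$$

Then, the estimation value of $R$ is:

$$\hat{R}_k = \hat{C}_{r_k} - H P_k^- H^T, \quad \hat{C}_{r_k} = \frac{1}{N} \sum_{j=j_0}^{k} r_j r_j^T$$

Similarly, to estimate $Q$, we assume that the measurement noise $R$ is independent of the adaptive parameter $\alpha$ and that its value is known. Let $\alpha_i = Q_{ii}$, and then we can get:

$$\sum_{j=j_0}^{k} \mathrm{tr}\left\{ H^T \left[ C_{r_j}^{-1} - C_{r_j}^{-1} r_j r_j^T C_{r_j}^{-1} \right] H \right\} = 0$$

The estimation value of $Q$ is:

$$\hat{Q}_k = K_k \hat{C}_{r_k} K_k^T$$

where $K_k$ is the Kalman gain.

After getting the estimation values $\hat{R}_k$ and $\hat{Q}_k$, we can apply these values to the recursive process of the Kalman filtering.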
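A windowed noise estimate of this kind can be sketched for a scalar position measurement as follows. The sketch assumes the common innovation-based forms (residual variance averaged over the window, measurement noise as that average minus the predicted measurement variance, and process noise as gain times residual variance times gain transposed). The class name, the window length, and the small positive floor are illustrative assumptions.

```python
from collections import deque


class ResidualNoiseEstimator:
    """Sliding-window noise estimation from Kalman filter residuals (scalar measurement)."""

    def __init__(self, window, floor=1e-9):
        self.residuals = deque(maxlen=window)  # keeps only the last `window` residuals
        self.floor = floor                     # assumed guard that keeps R positive

    def residual_variance(self, residual):
        """Append a residual and return the windowed mean of squared residuals."""
        self.residuals.append(residual)
        return sum(r * r for r in self.residuals) / len(self.residuals)

    def estimate_r(self, residual, predicted_variance):
        """R estimate: windowed residual variance minus H P^- H^T."""
        c_hat = self.residual_variance(residual)
        return max(c_hat - predicted_variance, self.floor)


def estimate_q(c_hat, gain):
    """Q estimate K * C * K^T for a scalar residual variance and a gain vector."""
    return [[k_i * c_hat * k_j for k_j in gain] for k_i in gain]


# Hypothetical residuals from four consecutive UWB updates.
estimator = ResidualNoiseEstimator(window=3)
r_estimates = [estimator.estimate_r(res, predicted_variance=0.5)
               for res in (1.0, -1.0, 2.0, 0.0)]
q_estimate = estimate_q(2.0, (0.5, 0.25))
```

A short window reacts quickly to changes in UWB quality but gives a noisy estimate, while a long window is smoother but slower, so the window length is a tuning choice.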

6.2. Gauss Filtering to Fuse the Data of the UWB and the Structured Light Scanner in the Hovering State

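According to the property that the product of two Gaussians is proportional to another Gaussian [26], the new Gaussian that integrates the UWB and the structured light scanner can be written as:

$$N(\mu, \sigma^2) \propto N(\mu_1, \sigma_1^2) \cdot N(\mu_2, \sigma_2^2)$$

where

$$\mu = \frac{\mu_1 \sigma_2^2 + \mu_2 \sigma_1^2}{\sigma_1^2 + \sigma_2^2}$$

and

$$\sigma^2 = \frac{\sigma_1^2 \sigma_2^2}{\sigma_1^2 + \sigma_2^2}$$

Here $(\mu_1, \sigma_1^2)$ and $(\mu_2, \sigma_2^2)$ are the mean and variance of the UWB and structured light scanner measurements, respectively. We can utilize the above equation to fuse the data of the UWB and the structured light scanner. Compared to Kalman filtering, this is easier to understand and can easily fuse multiple sources of data with a simple mathematical model.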
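A minimal sketch of fusing two Gaussian estimates of the same coordinate is given below. It assumes the standard inverse-variance weighting that follows from multiplying two Gaussians; the function name and the example numbers (a 15 cm standard deviation for the UWB and 5 cm for the scanner) are illustrative.

```python
def fuse_gaussian(mu_a, var_a, mu_b, var_b):
    """Fuse two independent Gaussian estimates of one quantity.

    Each mean is weighted by the other estimate's variance, so the more
    precise sensor dominates; the fused variance is smaller than both inputs.
    """
    total = var_a + var_b
    mu = (mu_a * var_b + mu_b * var_a) / total
    var = var_a * var_b / total
    return mu, var


# Hypothetical hovering fix along one axis, in metres.
uwb_mean, uwb_var = 1.20, 0.15 ** 2
scanner_mean, scanner_var = 1.00, 0.05 ** 2
fused_mean, fused_var = fuse_gaussian(uwb_mean, uwb_var, scanner_mean, scanner_var)
```

Because the rule is symmetric and associative, a third source can be added by fusing it with the result of the first two, which is what makes this approach convenient for multiple sensors.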

8. Conclusions

Choosing sensors for the localization of UAVs for indoor navigation can be a difficult task. In this paper, a setup including an INS, UWB, and structured light scanner is proposed. An optimized data fusion strategy is designed to improve the positioning accuracy in the moving and hovering states. The simulation results show that the INS cannot work for a long period of time without cooperation with the UWB. The integration of the INS and UWB can improve the positioning accuracy effectively. Moreover, we made a structured light scanner to retrieve a depth map and utilized an FDT algorithm to improve its performance. The structured light scanner can retrieve high-precision data about the position of the UAV around the target point and help to accomplish specified tasks effectively. Therefore, it can be used as an auxiliary device for high-precision positioning when the UAV is in a hovering state. With the proposed method, we achieved an accuracy of 15 cm for a device in motion and 5 cm while it is in a hovering state.

Currently, we have only tested our method in a simple environment. A complex environment remains a challenge for accurate positioning. The influence of the space configuration could be important in more complex cases, and the positioning accuracy of the UWB would be unstable. In future work, we will conduct more research on the UWB and try to upgrade our setup and methods to make them adaptable to complex environments. The structured light scanner is also expected to work for devices in motion, while the data fusion strategy will be further optimized for real-world applications. Also, the orientation is important in many applications. In this paper, we focus mainly on the positions of the UAV; therefore, evaluating the errors of orientation will be carried out in future work.