Monocular Vision-Based Underwater Object Detection

Abstract

1. Introduction

2. Related Works

2.1. Underwater Object Detection

2.2. Comparison to Previous Work

2.3. Proposed Method

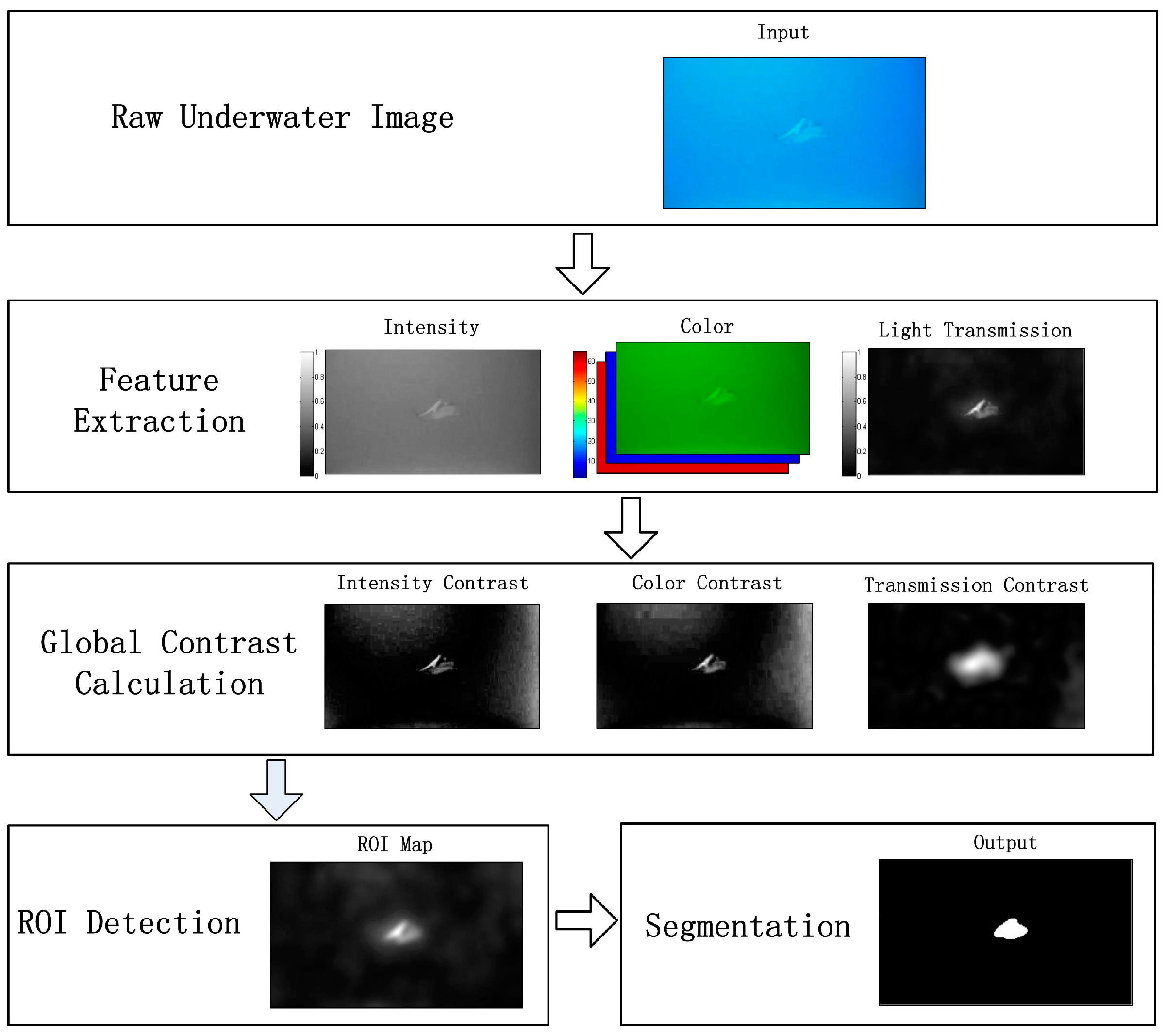

3. ROI Detection

- Global contrast considerations: separating natural aquatic objects from the background and highlighting the entire body of the objects.

- Consideration of various features: detecting a reliable ROI using multiple cues including the color, intensity, and transmission features extracted from the underwater images.

- Efficiency considerations: ROI detection should be fast, have a low memory footprint, and be easy to apply in underwater scenes.
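The three considerations above can be illustrated with a minimal sketch. It is not the authors' algorithm: the function names `global_contrast` and `detect_roi`, the equal weighting of the three cues, and the mean-value threshold are all assumptions made for illustration, and the transmission map is assumed to be supplied by a separate estimation step. The sketch scores each pixel by how far its color, intensity, and transmission deviate from the image-wide average (a simple form of global contrast) and keeps the pixels that score above the mean.

```python
import numpy as np


def global_contrast(feature):
    """Absolute deviation of each pixel from the image-wide mean, scaled to [0, 1]."""
    contrast = np.abs(feature - feature.mean())
    peak = contrast.max()
    return contrast / peak if peak > 0 else contrast


def detect_roi(image, transmission):
    """Hypothetical ROI detector combining color, intensity, and transmission cues.

    image:        H x W x 3 float RGB array with values in [0, 1]
    transmission: H x W float array (assumed to be estimated elsewhere)
    Returns an H x W boolean mask marking the candidate region of interest.
    """
    image = np.asarray(image, dtype=np.float64)
    transmission = np.asarray(transmission, dtype=np.float64)

    # Intensity cue: per-pixel mean of the three color channels.
    intensity = image.mean(axis=2)

    # Color cue: Euclidean distance of each pixel's RGB value from the mean RGB value.
    mean_color = image.reshape(-1, 3).mean(axis=0)
    color_contrast = np.linalg.norm(image - mean_color, axis=2)
    peak = color_contrast.max()
    if peak > 0:
        color_contrast = color_contrast / peak

    # Equal-weight fusion of the three cues (an assumption, not a tuned choice).
    saliency = (
        color_contrast
        + global_contrast(intensity)
        + global_contrast(transmission)
    ) / 3.0

    # Assumed threshold: keep pixels whose fused score exceeds the image mean.
    return saliency > saliency.mean()
```

Every step is a single pass over the image with a handful of same-sized arrays, which is in the spirit of the efficiency consideration; a practical detector would likely need a more careful fusion rule and threshold.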

4. Light Transmission Estimation

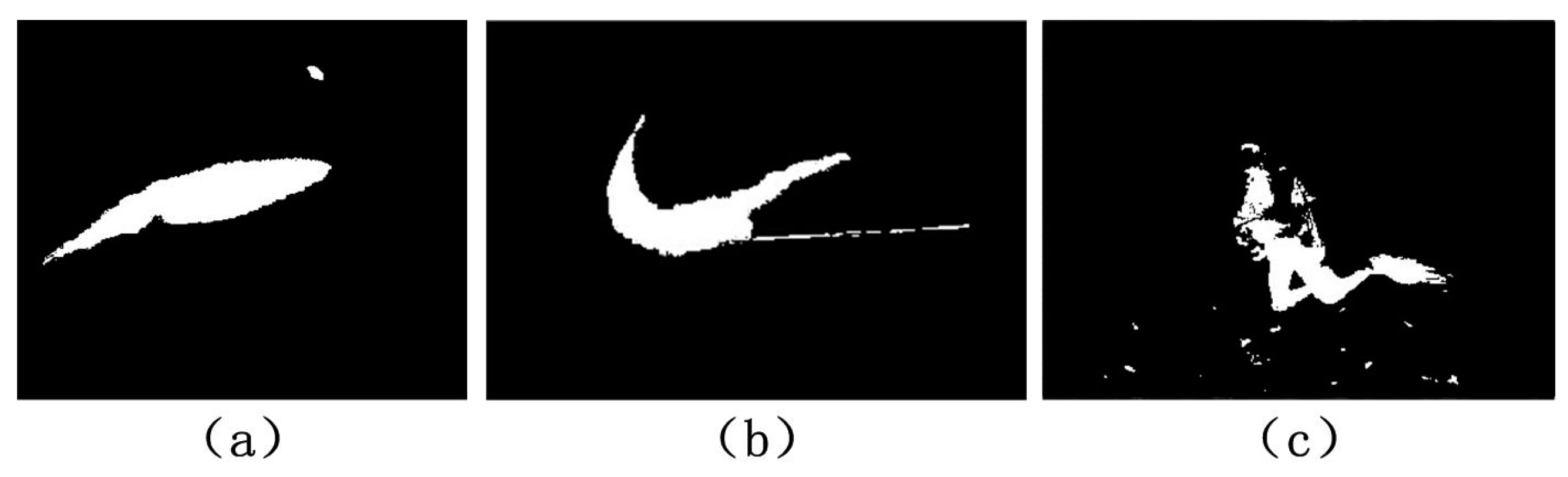

5. Image Segmentation

6. Experimental Evaluation and Analysis

6.1. Dataset and Experimental Setup

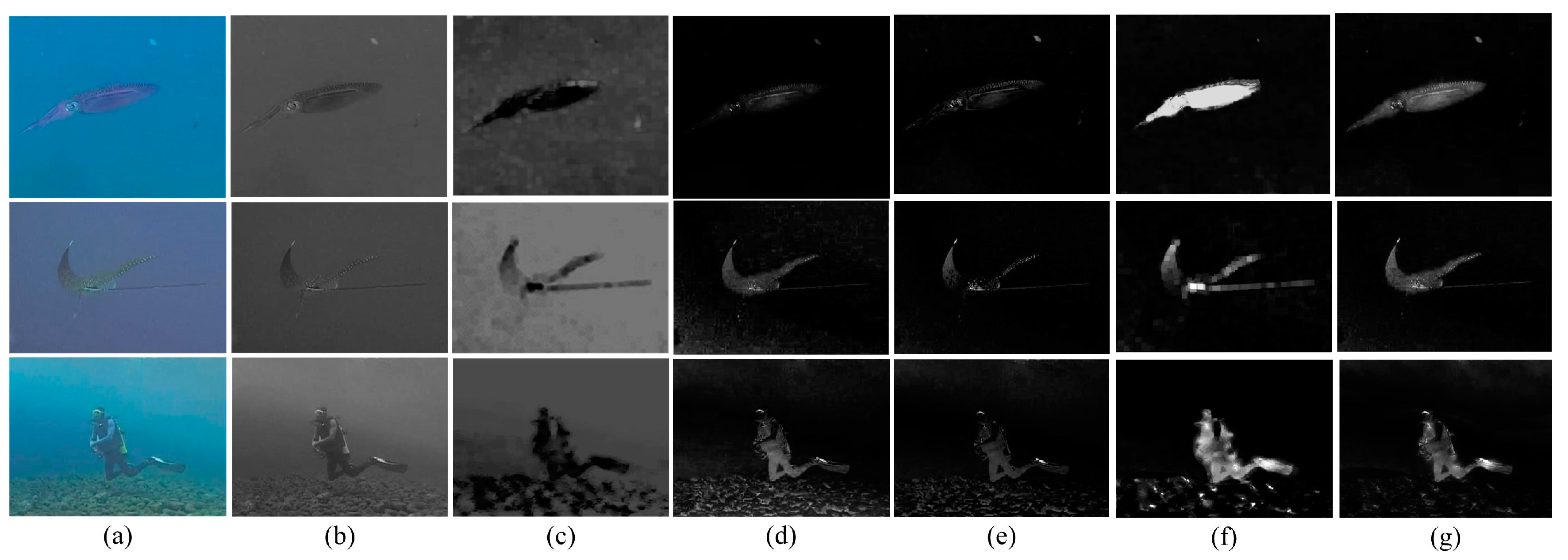

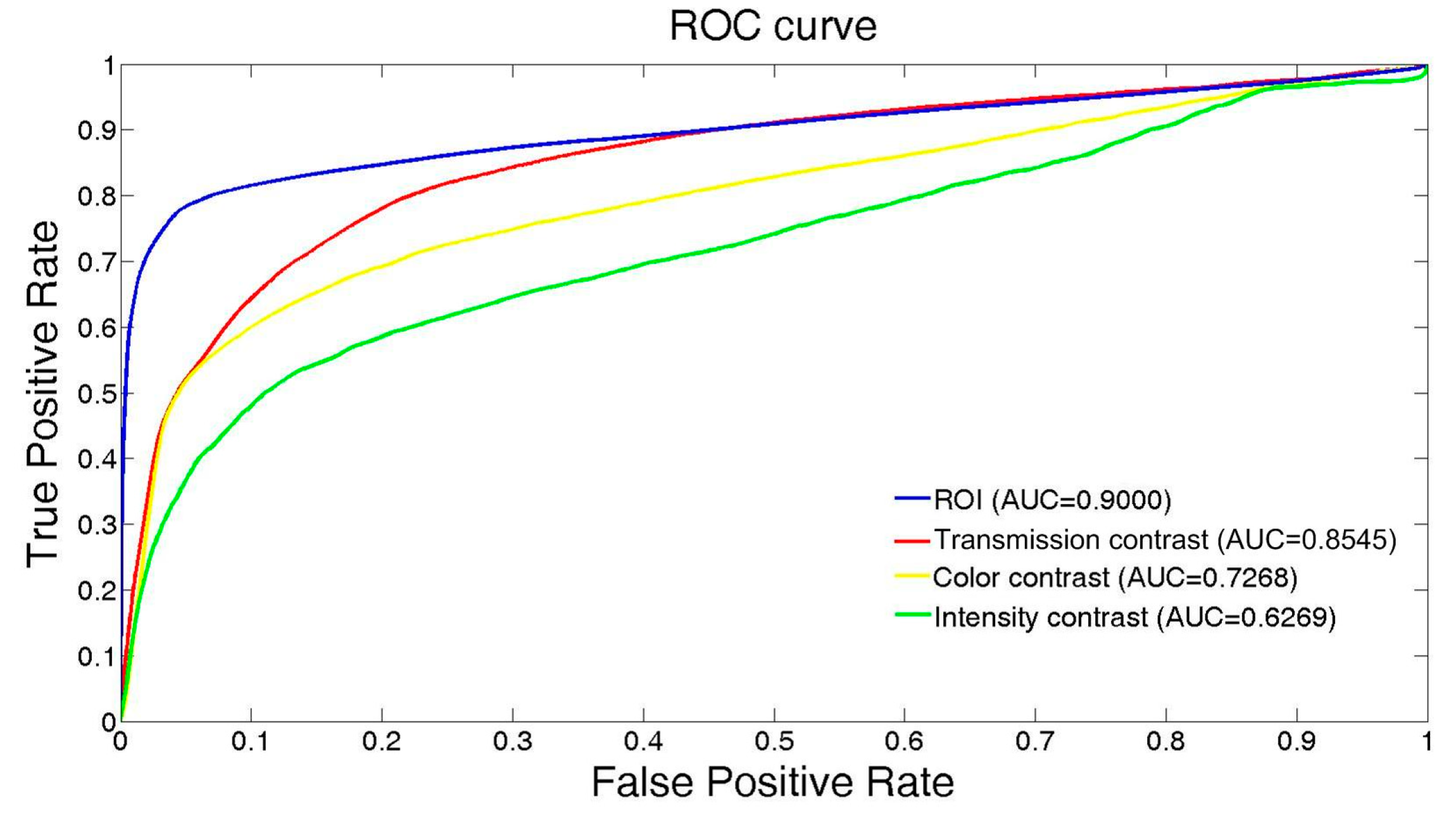

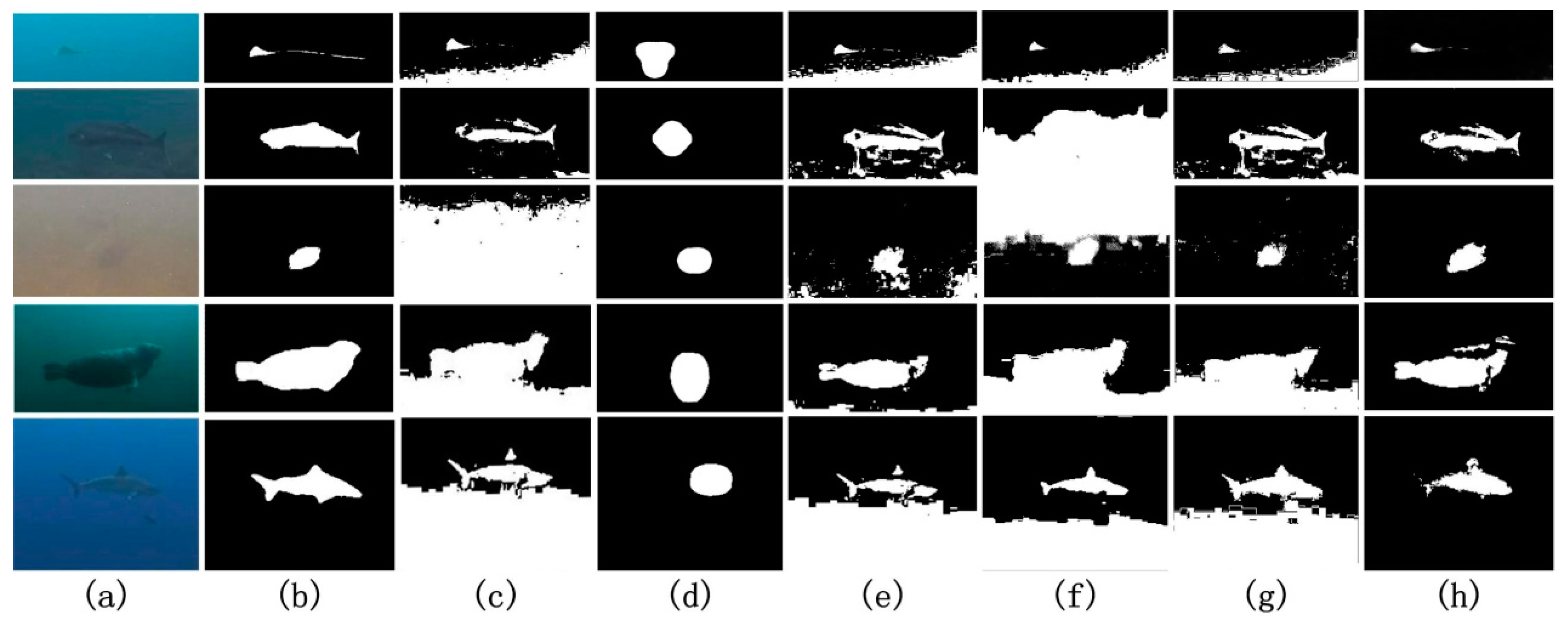

6.2. ROI Detection

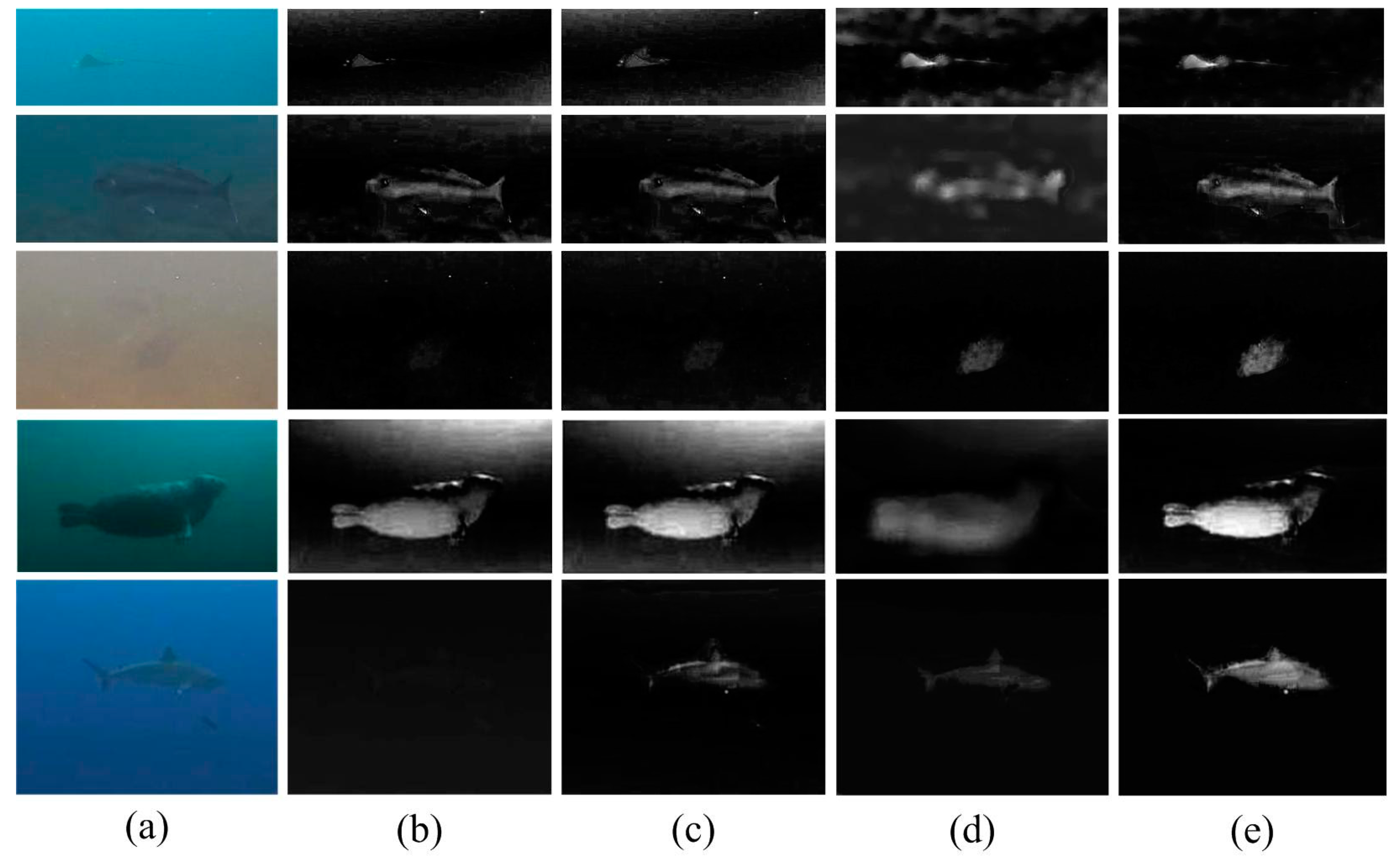

6.3. Underwater Object Detection

7. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest


| Method | | | | | | |
|---|---|---|---|---|---|---|
| Otsu | 0.3969 | 0.8808 | 0.5473 | 0.3767 | 0.2716 | 24.5898 |
| Saliency | 0.7847 | 0.3674 | 0.5005 | 0.3337 | 0.0201 | 12.2007 |
| Compatible color | 0.8068 | 0.6151 | 0.6980 | 0.5361 | 0.0318 | 9.4436 |
| Contour | 0.4090 | 0.9026 | 0.5629 | 0.3917 | 0.2495 | 22.5067 |
| PCNN | 0.3210 | 0.6733 | 0.4347 | 0.2777 | 0.2795 | 28.7212 |
| Our method | 0.9654 | 0.7260 | 0.8288 | 0.7076 | 0.0066 | 6.0863 |
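
The metric names for the numeric columns are not given in the table, but the rows show an arithmetic pattern that can help in reading them. In every row, the third value matches the harmonic mean of the first two, as an F-measure of precision and recall would, and the fourth matches f / (2 − f) of the third, as a Jaccard index derived from that F-measure would. The sketch below checks this against the first four columns; interpreting the columns this way is an assumption, not the authors' labeling, and it does not identify which of the first two columns is precision and which is recall. The names `harmonic_mean`, `jaccard_from_f`, and `max_deviation` are illustrative.

```python
# First four numeric columns of the table, copied row by row.
ROWS = {
    "Otsu": (0.3969, 0.8808, 0.5473, 0.3767),
    "Saliency": (0.7847, 0.3674, 0.5005, 0.3337),
    "Compatible color": (0.8068, 0.6151, 0.6980, 0.5361),
    "Contour": (0.4090, 0.9026, 0.5629, 0.3917),
    "PCNN": (0.3210, 0.6733, 0.4347, 0.2777),
    "Our method": (0.9654, 0.7260, 0.8288, 0.7076),
}


def harmonic_mean(a, b):
    """Harmonic mean of two rates; this is the F-measure if a and b are precision and recall."""
    return 2 * a * b / (a + b)


def jaccard_from_f(f):
    """Jaccard index implied by an F-measure (Dice coefficient) f."""
    return f / (2 - f)


def max_deviation(rows):
    """Largest absolute gap between the tabulated and the recomputed columns 3 and 4."""
    worst = 0.0
    for c1, c2, c3, c4 in rows.values():
        worst = max(worst, abs(harmonic_mean(c1, c2) - c3))
        worst = max(worst, abs(jaccard_from_f(c3) - c4))
    return worst


if __name__ == "__main__":
    # A gap within rounding error of four-decimal values supports the reading above.
    print(f"largest deviation: {max_deviation(ROWS):.5f}")
```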

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Chen, Z.; Zhang, Z.; Dai, F.; Bu, Y.; Wang, H. Monocular Vision-Based Underwater Object Detection. Sensors 2017, 17, 1784. https://doi.org/10.3390/s17081784
