1. Introduction

As the world population grows, the number of older people inevitably increases. For personal comfort and because medical resources are limited, most of them live alone in their own homes rather than in nursing homes [1]. However, in a private space, emergencies may not be noticed by others in time. For example, lying on the floor for a long time after a fall is one of the most dangerous situations; it can cause complications and even death for the elderly [2]. Hence, how to help them live conveniently and safely has become an important social issue.

To achieve automatic recognition of daily human activities for healthy aging, the methods proposed so far can be roughly divided into three categories [3]. The first category is based on vision sensors. Vision-based systems can monitor the entire scene and capture the detailed movement of the human target [4,5]. However, because of the data association problem, it is challenging to handle the huge volume of vision data effectively [6]. Environmental factors such as occlusion and poor illumination aggravate this problem. Besides, many people are uncomfortable living with cameras, which they feel infringe on their privacy [7,8]. The second category is based on wearable sensors. Compared to vision sensors, wearable sensors are more readily accepted, the volume of data to process is much smaller, and there is no data association problem [9,10]. However, attaching wearable sensors to the human body, even a single sensor, feels obtrusive and uncomfortable to the resident [11]. What is more, people usually change their clothes daily and forget to attach the sensors again, or are sometimes too lightly clothed to wear sensors when the indoor temperature is high [12]. Even with a carefully designed power management unit, the batteries inside wearable sensors need to be recharged or replaced regularly, which is inconvenient for users [13]. The third category is based on dense sensing [3]. Dense sensing refers to the deployment of numerous low-cost, low-power sensors in an ambient intelligent environment. These sensors include microphones, vibration sensors, switch sensors, pressure mat sensors, etc. The interaction between a person and an object with sensors attached often provides powerful clues about the activity being undertaken. However, compared with wearable sensors, each of these dense sensors needs “fine tuning” after deployment, which means that they can hardly be used ubiquitously [2]. To sum up, there is a great demand to “fill in the blanks” where these three categories of sensors are unsuitable for use in daily life.

Pyroelectric infrared (PIR) sensors are an excellent candidate for pervasive sensing. They are well accepted, because they already appear in numerous places as part of security systems, including homes, banks, libraries, etc. [14]. They are inexpensive and can be installed in any indoor environment, which makes them “invisible” to the occupants. They do not need to be worn or carried, which avoids the problems of forgetting to carry sensors and to recharge batteries. Because they are passive infrared sensors, their performance is not affected by changes in illumination [15]. However, the output of a single PIR sensor is a raw sine-like signal that can only be used to detect whether or not human motion occurs. The sensing paradigm and the classification algorithm therefore have to be designed carefully to exploit the full potential of these sensors.

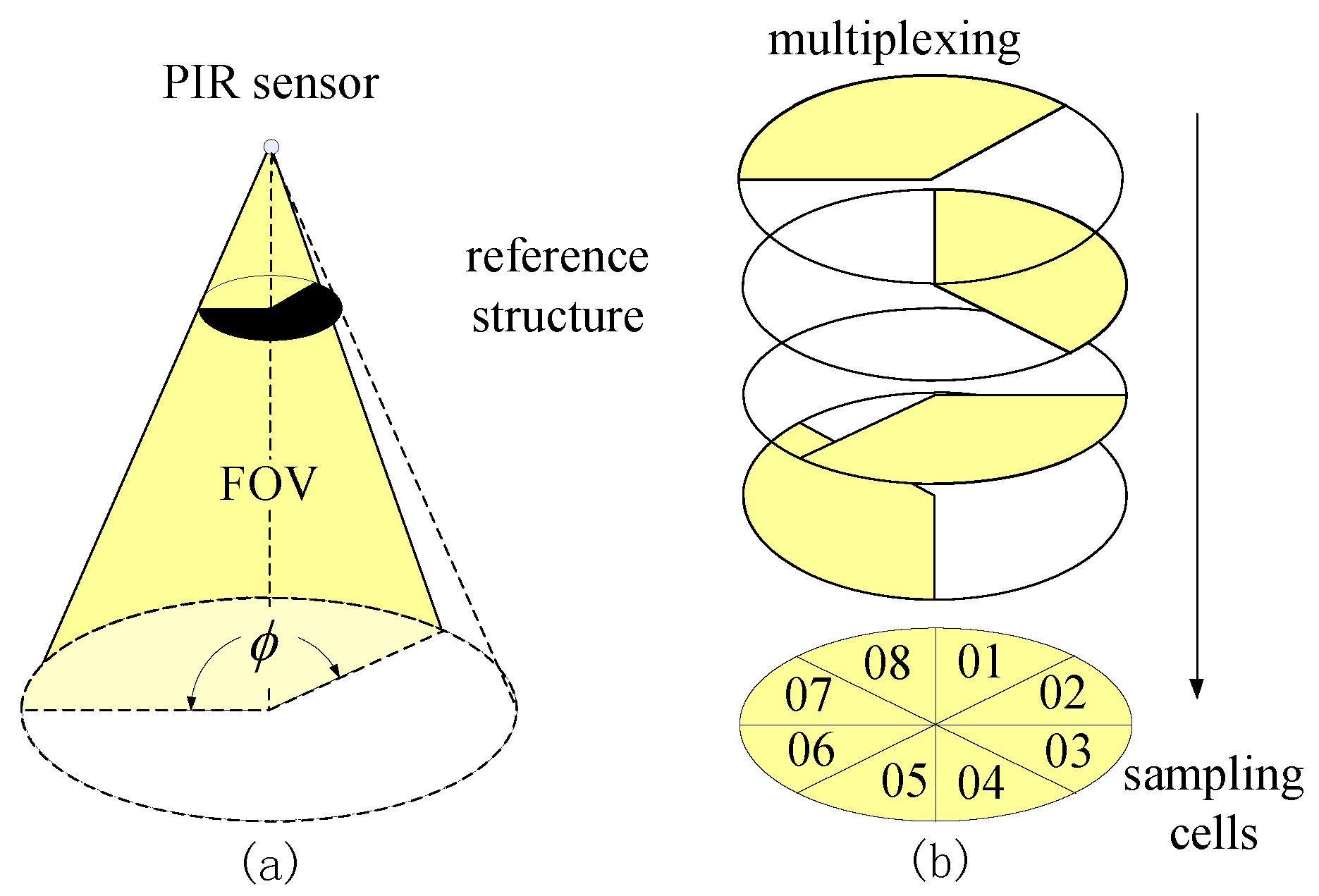

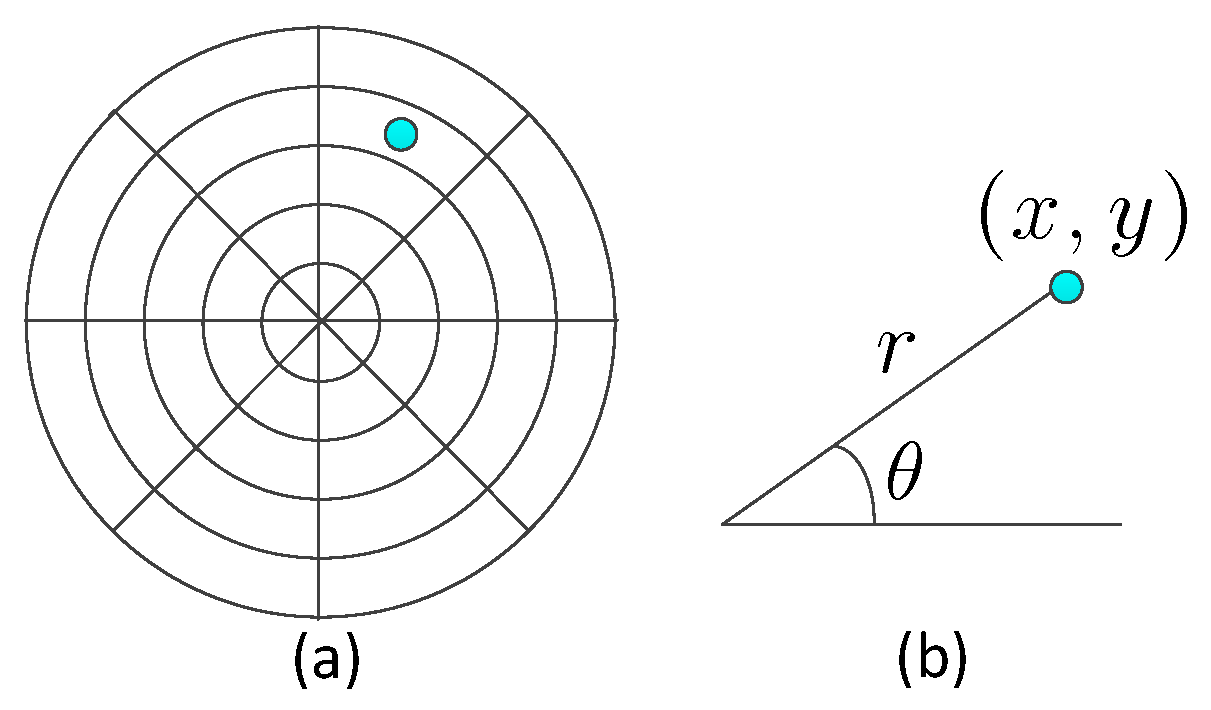

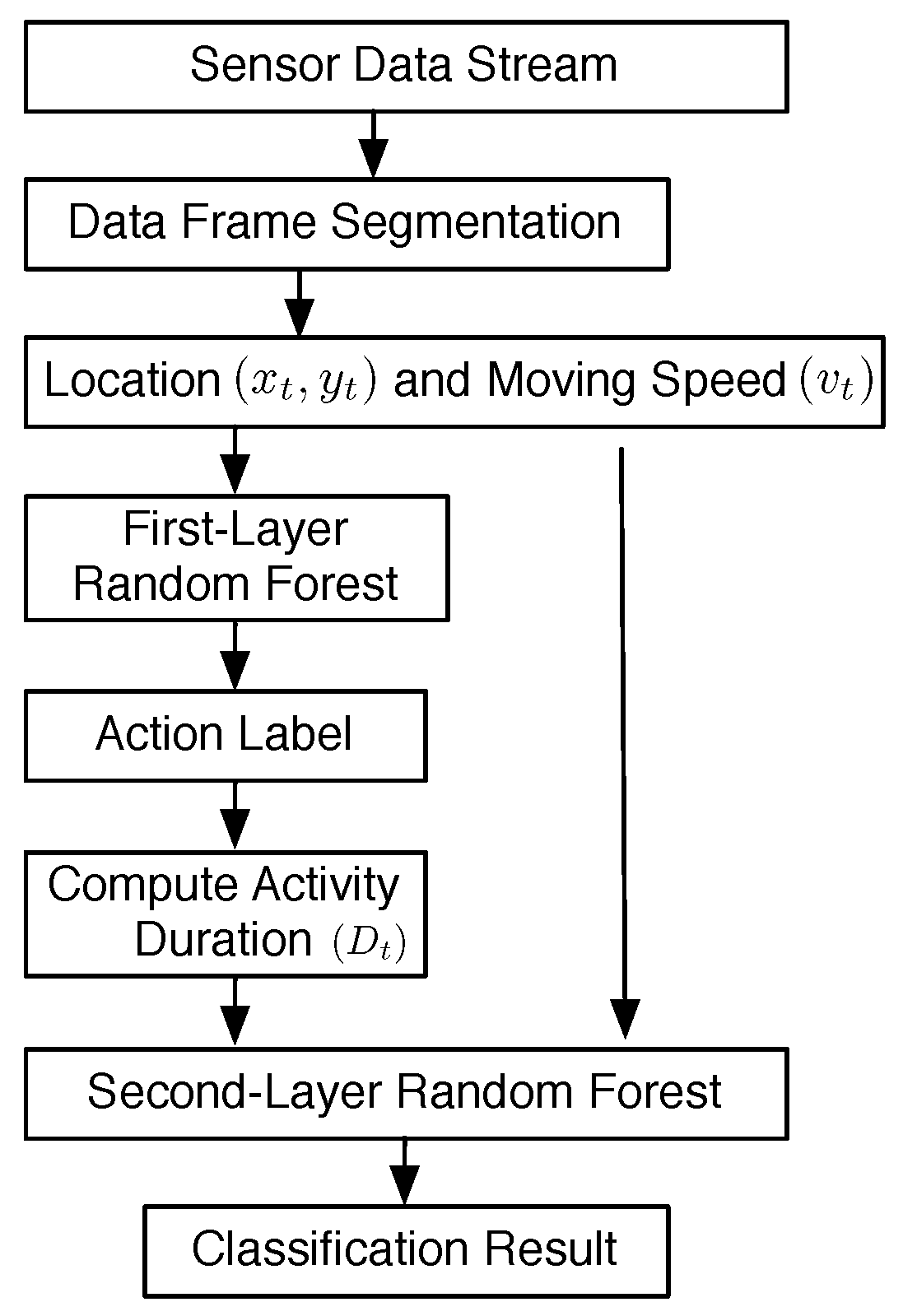

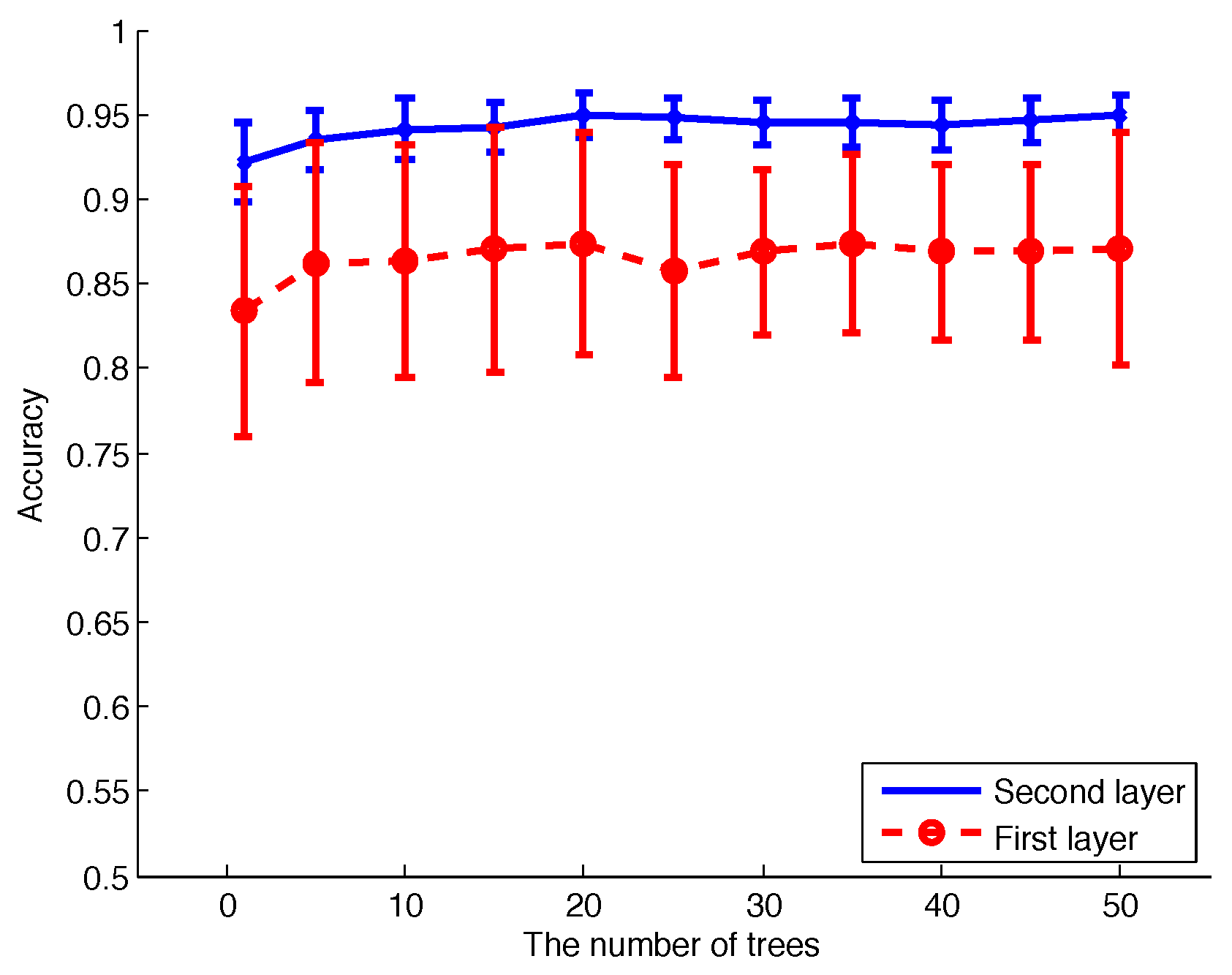

In this paper, we propose an approach to extract and fuse location information and motion information from the PIR sensor data stream simultaneously. To monitor indoor environments, we built a wireless sensor network (WSN) whose sensor nodes consist of PIR sensors. The field of view (FOV) of each PIR sensor is modulated by a two-degrees-of-freedom (DOF) segmentation, comprising bearing segmentation and circle segmentation, which provides the spatio-temporal information of the human target. The sensor nodes are attached to the ceiling, and data fusion across adjacent sensor nodes improves the localization accuracy. The speed of human locomotion can also be obtained. To achieve human activity classification, we propose a two-layer random forest (RF) classifier. Based on the location and moving speed of the human, the first RF layer labels the activity type for each data frame. To boost the performance of our system, we incorporate prior knowledge of human activities. Because the duration of each kind of activity is a useful feature for activity classification, we employ a finite-state machine (FSM) to record how long the same activity persists over successive data frames. All of the features (location, speed, and duration) are then input to the second-layer RF for final activity classification.
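The duration feature described above can be illustrated with a minimal sketch. It assumes that each data frame carries a location, a speed, and a first-layer label, and that a frame lasts two seconds (the frame length mentioned in the Discussion). The names `DurationFSM`, `add_duration`, and the tuple layout are hypothetical and only serve the illustration; this is not the implementation used in the paper.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

# Frame length in seconds; the Discussion mentions two-second data frames.
FRAME_SECONDS = 2.0

# (x, y, speed, first_layer_label): a hypothetical per-frame record.
Frame = Tuple[float, float, float, str]


@dataclass
class DurationFSM:
    """Tracks how long the same first-layer label has persisted."""
    current: Optional[str] = None
    run_length: int = 0

    def step(self, label: str) -> float:
        # Stay in the same state and extend the run, or switch state and restart.
        if label == self.current:
            self.run_length += 1
        else:
            self.current = label
            self.run_length = 1
        return self.run_length * FRAME_SECONDS


def add_duration(frames: List[Frame]) -> List[Tuple[float, float, float, float]]:
    """Build (x, y, speed, duration) feature vectors for a second-layer classifier."""
    fsm = DurationFSM()
    return [(x, y, speed, fsm.step(label)) for x, y, speed, label in frames]
```

In this sketch the duration resets whenever the first-layer label changes, so a short run of "sitting" frames between two "walking" runs yields small duration values that a second-layer classifier could use to distinguish transitional activities from sustained ones.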

The main contributions of this paper are two-fold:

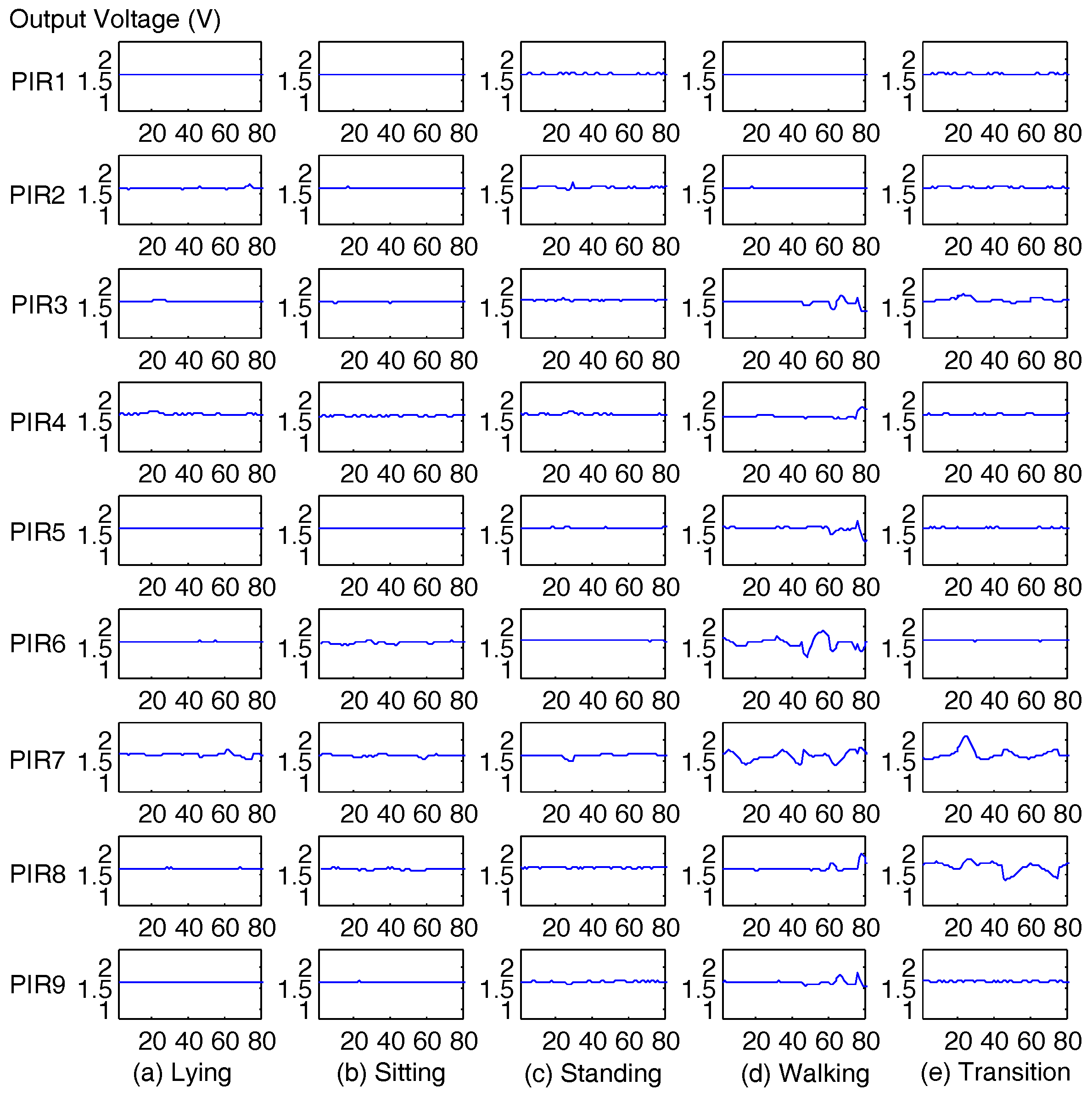

1. We propose a scalable framework that can decompose basic individual activities (“walking”, “lying”, “sitting”, “standing”, and “transitional activities”) into simple PIR data streams. Because the communication burden is relatively low, our system can be expanded to cover an indoor environment of any size while still processing data in real time. Non-intrusive PIR sensors are embedded in the indoor environment to achieve ambient intelligence, which minimizes the feeling of obtrusiveness.

2. We propose a two-layer RF algorithm that leverages three simple yet powerful features (“location”, “speed”, and “duration of successive activity”) to recognize human activities. Our approach is validated using data gathered from a mock apartment, which lends confidence to our results. No human effort is needed to segment the monitored region for different activities.

2. Related Work

To avoid complicated data processing, especially feature extraction from the continuous vision sensor data stream [6], some researchers use wearable or binary sensors instead for daily human activity recognition. Wilson et al. [16] proposed simultaneous tracking and activity recognition of the occupants. Four kinds of binary sensors were employed to capture human motion within each room. A dynamic Bayes net was used to infer the human location and achieve activity recognition by fusing heterogeneous sensor data. The Rao–Blackwellised particle filter (RBPF) was employed to solve the data association problem. Zhu et al. [11] integrated location information and motion information to infer daily human activity. An optical motion capture system was installed at the corners of the ceiling to provide the human location information, and an inertial sensor was attached to the target's body to capture the human motion. Neural networks were used to achieve coarse-granularity activity classification, and hidden Markov networks were utilized to refine the fine-granularity activity classification result. Finally, the location and motion information was fused based on Bayes’ rule.

Due to their simplicity and robustness to illumination variance, PIR sensors have recently been gaining increasing attention. In [17], Hao et al. proposed the use of side-view PIR sensor nodes to locate human targets. Within its FOV, each sensor node can detect the angular displacement of a moving human target; using multiple sensor nodes enhanced the localization accuracy. They applied the same hardware setting to multiple human tracking [18]. Their sensor nodes were deployed in a way that eases the data association problem. An expectation-maximization-Bayesian tracking scheme was proposed to enhance the system performance.

To avoid region partitioning and a region classifier, Yang et al. [19] proposed a special optical cone to shape the FOVs of the PIR sensors into petals. Intersections of the detection lines formed by these petal shapes defined the measurement points, which were assigned credits to represent the probability of the human target falling within the FOV. The data association problem of multiple human targets can also be addressed by this credit-based method after cluster analysis.

However, in the research mentioned above, the PIR sensors were oriented in side-view or placed on the ground, which means that they were easily occluded by furniture or other obstacles in the real deployment. To overcome this drawback, Tao et al. [

20] attached binary infrared sensors to the ceiling of an office. Weak evidence such as people location, moving direction, and personal desks was synthesized to achieve soft tracking. They declared that their system can track up to eight persons with high accuracy. To increase the space resolution and improve the deployment efficiency of sensor nodes, Luo et al. [

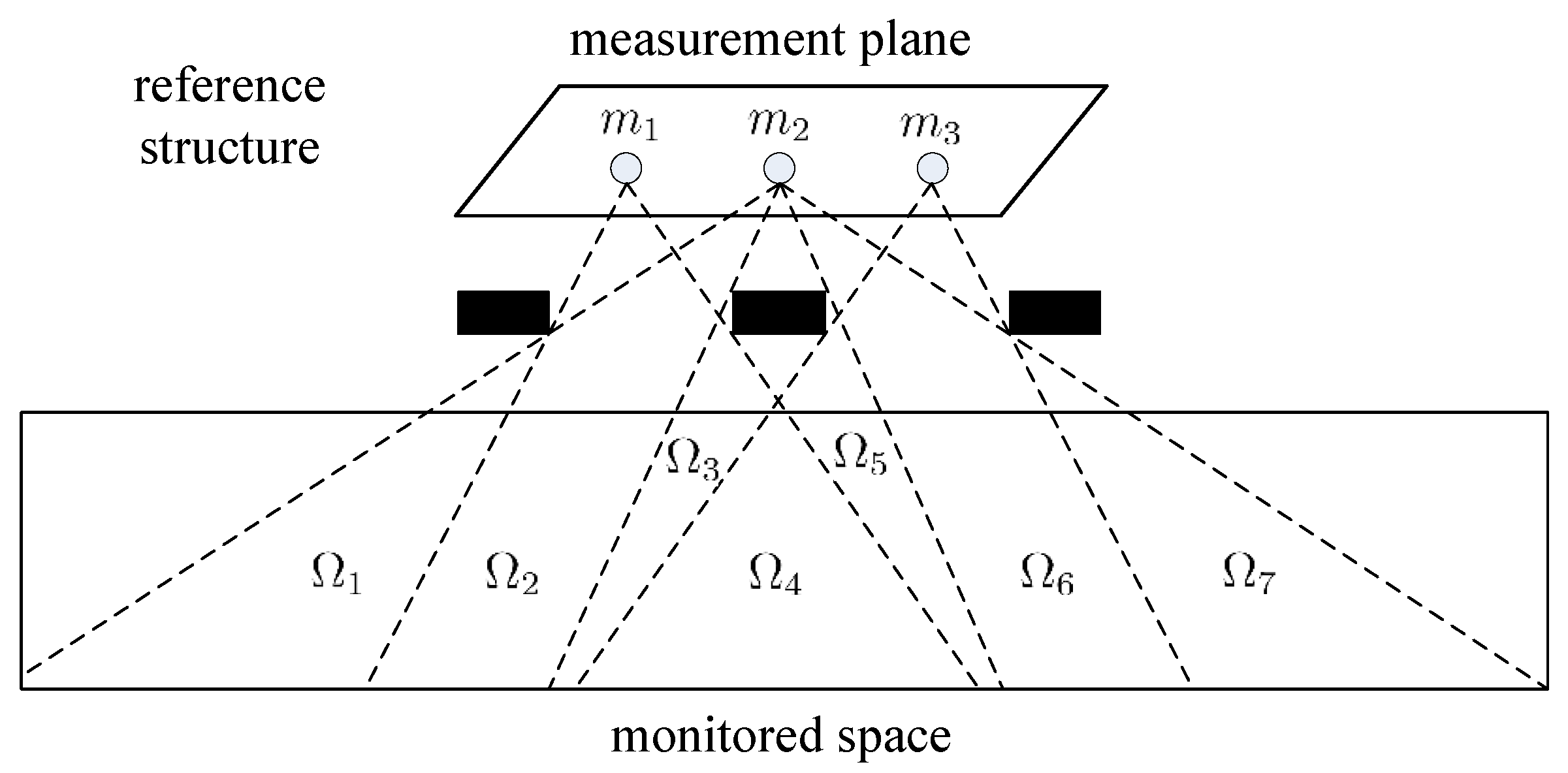

21] applied another scheme of FOV modulation to realize human indoor localization. In their system, the FOVs of PIR sensors were modulated by two degrees of freedom (DOF) of spatial segmentation, which provided the flexible localization schema for information fusion. The Kalman filter and smoother were utilized to refine the human motion trajectory.

Some researchers have been devoted to exploiting the potential of PIR sensors for activity recognition. In [22], Liu et al. proposed the use of pseudo-random codes, based on compressive sensing, to modulate the FOVs of the PIR sensors. The human activity within a confined region was cast into low-dimensional data streams and could be classified directly by the Hausdorff distance. Luo et al. [23] proposed a method for abnormal behavior detection by investigating the temporal features of the sensor data stream. Modified Kullback–Leibler (KL) divergence together with self-tuning spectral clustering was leveraged to profile and cluster similar normal activities. Feature vectors were formed by hidden Markov models (HMMs). Finally, one-class support vector machines (SVMs) were employed to detect abnormal activities.

Guan et al. [24] employed PIR sensors to capture the thermal radiation changes induced by human motion. Three sensing nodes were utilized to construct a multi-view motion sensing system, including one ceiling-mounted node and two nodes on tripods facing each other. HMMs and SVMs were employed to classify six types of activities.

In summary, the above-mentioned research on PIR sensors addressed either human localization or human activity recognition, but not both together. This paper endeavors to provide a framework that addresses these two synergistic problems simultaneously.

7. Discussion

To compare the effectiveness of RF with other classifiers, we used SVM and naive Bayes to replace RF in both layers of our classifier framework and ran the experiments again [

29,

30]. The mean accuracy and standard deviation of 10-fold CV are listed in

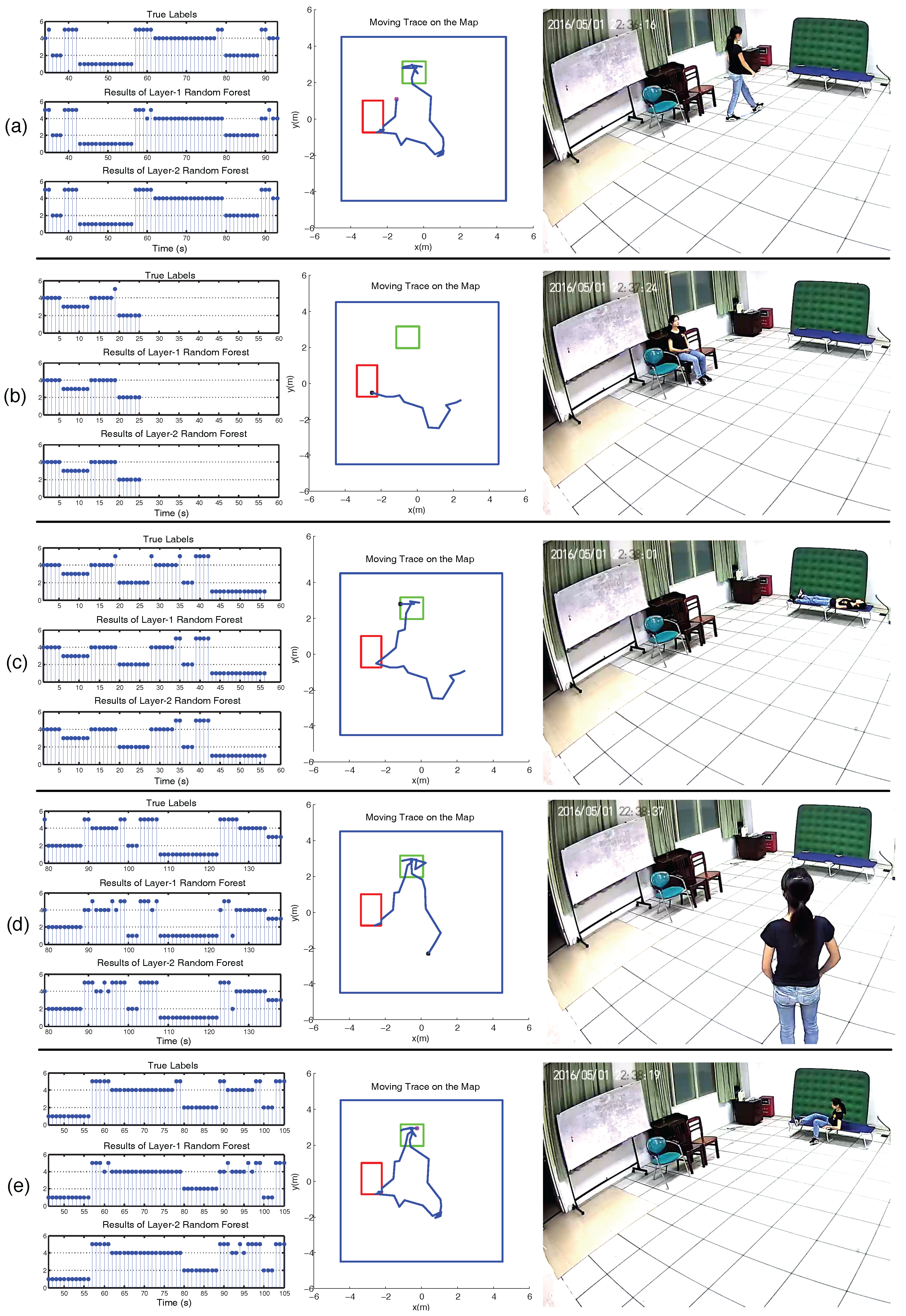

Table 5. It shows that the accuracy of SVM and naive Bayes is lower than RF. The reason lies in that the decision boundary of naive Bayes is linear, which is not consistent with the fact that the locations of the activities that occurred inside the mock apartment were regional, as shown in the middle column of

Figure 12. Within the regions of the chairs and the bed, sitting and lying will occur with high probabilities; outside these regions, walking and standing will occur with high probabilities. Thus, the location of the human target

is a good indicator. However, using naive Bayes, after assigning the fixed weights to

x-axis and

y-axis separately, the input feature is not linearly separable. The performance of SVM is better than naive Bayes, because the hyperspace produced by the inner product of the input feature can model the non-linearly separable feature. To enhance the performance of naive Bayes and SVM, much effort is needed to segment the activity region manually and to calculate the probability of each activity occurring at each location.

The RF can model the activity regions inside the mock apartment better than SVM and naive Bayes. An RF is composed of many decision trees, which are well suited to modeling the square regions where different activities may happen; the decision thresholds applied to the x-axis and y-axis can differ from one decision tree to another. The majority vote scheme boosts the decision accuracy. Thus, the decision boundary of RF can be square, which is consistent with the layout of the mock apartment. Furthermore, the RF is a useful framework for incorporating heterogeneous features such as location, speed, and the duration of successive activities.
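The argument about per-tree thresholds and majority voting can be made concrete with a toy sketch. It hand-builds three axis-aligned "trees", each testing a slightly different box around a hypothetical bed region, and combines them by majority vote. The coordinates, labels, and the helper names `make_box_tree` and `forest_predict` are illustrative assumptions; a trained RF would learn its thresholds from data rather than have them written by hand.

```python
from collections import Counter
from typing import Callable, List, Tuple

Point = Tuple[float, float]
Tree = Callable[[Point], str]


def make_box_tree(x_min: float, x_max: float, y_min: float, y_max: float,
                  inside: str, outside: str) -> Tree:
    """A tiny decision tree: four axis-aligned threshold tests in sequence."""
    def tree(p: Point) -> str:
        x, y = p
        if x < x_min or x > x_max:
            return outside
        if y < y_min or y > y_max:
            return outside
        return inside
    return tree


def forest_predict(trees: List[Tree], p: Point) -> str:
    """Majority vote over the labels returned by the individual trees."""
    votes = Counter(tree(p) for tree in trees)
    return votes.most_common(1)[0][0]


# Hypothetical bed region; each tree uses slightly different thresholds.
EXAMPLE_TREES = [
    make_box_tree(1.0, 3.0, 0.0, 2.0, "lying", "walking"),
    make_box_tree(0.8, 3.2, 0.0, 1.8, "lying", "walking"),
    make_box_tree(1.2, 2.8, 0.2, 2.2, "lying", "walking"),
]
```

With these thresholds, a point well inside the box, such as `(2.0, 1.0)`, is labeled "lying" by all three trees, while a point near the edge, such as `(0.9, 1.0)`, is inside only one box and is therefore voted "walking". The vote smooths out the disagreement between individual trees while the overall decision region stays box-shaped.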

We recorded the training time and testing time of the different algorithms, as listed in Table 5. On the host PC, we used MATLAB 2013b for data processing. The host PC had an Intel(R) Core(TM) i5-6400 CPU at 2.70 GHz and 8.00 GB of RAM. For each CV run, naive Bayes had the shortest total training time and RF the longest. In the testing phase, SVM had the shortest testing time and RF the longest. However, the testing time of RF for each data frame (two seconds long) was 0.12 s, which fulfills the requirement of real-time processing.

Because our PIR sensors are ceiling mounted, they are not easily affected by obstacles such as the furniture in the mock apartment. However, when a piece of furniture is moved, the locations of the human activities change as well. The RF must then be retrained, because the statistical distributions of the features have shifted. In such a situation, one advantage of our system is that we only need to label the type of activity being performed; there is no need to segment the activity regions manually or to assign a different probability to each region.

The performance of our system was compared with that of some recent systems based on wearable sensors or video sensors, as listed in Table 6. For some activity types, the recognition accuracy of our system is comparable to or even higher than that of the other systems. However, because the experimental configurations and the daily activities to be classified are not identical, a comparison of accuracy alone is not comprehensive. The method proposed in this paper focuses mainly on basic daily activities, which are the building blocks for recognizing more complicated activities. Our system can work independently, but it can also cooperate with existing systems; it can be regarded as complementary to wearable or vision-based sensors, and in that role it would increase the robustness of a smart home system. In future work, we will focus on how to recognize complex activities such as “house keeping” and “cooking” by leveraging more sophisticated algorithms to capture the spatio-temporal features of human activities. The quality of activities (e.g., the quality of walking after sitting) will also be investigated.