Performance Analysis of the Direct Position Determination Method in the Presence of Array Model Errors

Abstract

1. Introduction

2. Notation and Nomenclature

3. Signal Models for Direct Position Determination

3.1. Time-Domain Signal Model

- is the nth array response to the signal transmitted from position ,

- is the unknown signal waveform transmitted at unknown time ,

- is the signal propagation time from the emitter to the nth base station (i.e., distance divided by signal propagation speed),

- is an unknown complex scalar representing the channel attenuation between the transmitter and the nth base station,

- is temporally white, circularly symmetric complex Gaussian random noise with zero mean and covariance matrix .
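
Read together, the quantities listed above describe a received-signal model of the following general form. This is a sketch under assumed notation: the symbols $\mathbf{x}_n(t)$, $\mathbf{a}_n(\mathbf{p})$, $s(t)$, $t_0$, $\tau_n(\mathbf{p})$, $\beta_n$, and $\boldsymbol{\varepsilon}_n(t)$, as well as the station count $N$, are illustrative choices and may differ from the symbols of the original equation.

```latex
\mathbf{x}_n(t) = \beta_n \, \mathbf{a}_n(\mathbf{p}) \, s\bigl(t - \tau_n(\mathbf{p}) - t_0\bigr) + \boldsymbol{\varepsilon}_n(t),
\qquad n = 1, 2, \ldots, N
```

Under this reading, the emitter position enters each base station's output twice: through the array response and through the propagation delay.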

3.2. Frequency-Domain Signal Model

- is the kth known discrete frequency point,

- is the kth Fourier coefficient of the unknown signal corresponding to frequency ,

- is the kth Fourier coefficient of the random noise corresponding to frequency .
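
A delay in the time domain becomes a linear phase across frequency, so the Fourier coefficients of one base station's output can be simulated directly. The following is a minimal sketch, assuming the frequency-domain model is the attenuated array response multiplied by the signal coefficient and a delay phase term, plus circular complex Gaussian noise. The function name `frequency_domain_snapshots` and all parameter names are hypothetical and not taken from the paper.

```python
import numpy as np


def frequency_domain_snapshots(a_n, beta_n, s_bar, omega, tau_n, t0,
                               noise_std=0.0, rng=None):
    """Simulate the Fourier coefficients observed at one base station.

    Assumed model (illustrative notation):
        x_n(k) = beta_n * a_n * s_bar[k] * exp(-1j * omega[k] * (tau_n + t0)) + noise

    a_n    : (M,) array response for the emitter position
    beta_n : complex channel attenuation
    s_bar  : (K,) Fourier coefficients of the transmitted signal
    omega  : (K,) angular frequencies in rad/s
    tau_n  : propagation delay in seconds
    t0     : transmit time in seconds
    Returns an (M, K) complex array, one column per frequency point.
    """
    a_n = np.asarray(a_n, dtype=complex)
    s_bar = np.asarray(s_bar, dtype=complex)
    omega = np.asarray(omega, dtype=float)

    # The delay and the transmit time appear as a linear phase over frequency.
    phase = np.exp(-1j * omega * (tau_n + t0))
    clean = beta_n * np.outer(a_n, s_bar * phase)
    if noise_std == 0.0:
        return clean

    # Circularly symmetric complex Gaussian noise with variance noise_std**2 per entry.
    rng = np.random.default_rng() if rng is None else rng
    noise = rng.standard_normal(clean.shape) + 1j * rng.standard_normal(clean.shape)
    return clean + noise * (noise_std / np.sqrt(2))
```

Setting `noise_std=0.0` returns the noise-free model output, which is convenient for checking the phase term before adding noise.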

4. Direct Position Determination Method

5. Statistical Assumption and Effects of Array Model Errors

6. MSE of Direct Position Determination Method in Presence of Array Model Errors

6.1. Perturbation Analysis on the Eigenvalues of Positive Semidefinite Matrix

6.2. Second-Order Perturbation Analysis on the Cost Function

6.3. MSE of Direct Position Determination Method

7. Success Probability of Direct Position Determination Method in Presence of Array Model Errors

7.1. The First Success Probability of Direct Position Determination

7.2. The Second Success Probability of Direct Position Determination

8. Cramér-Rao Bound on Covariance Matrix of Localization Errors

8.1. Cramér-Rao Bound on Position Estimate in Absence of Array Model Errors

8.2. Cramér-Rao Bound on Position Estimate in Presence of Array Model Errors

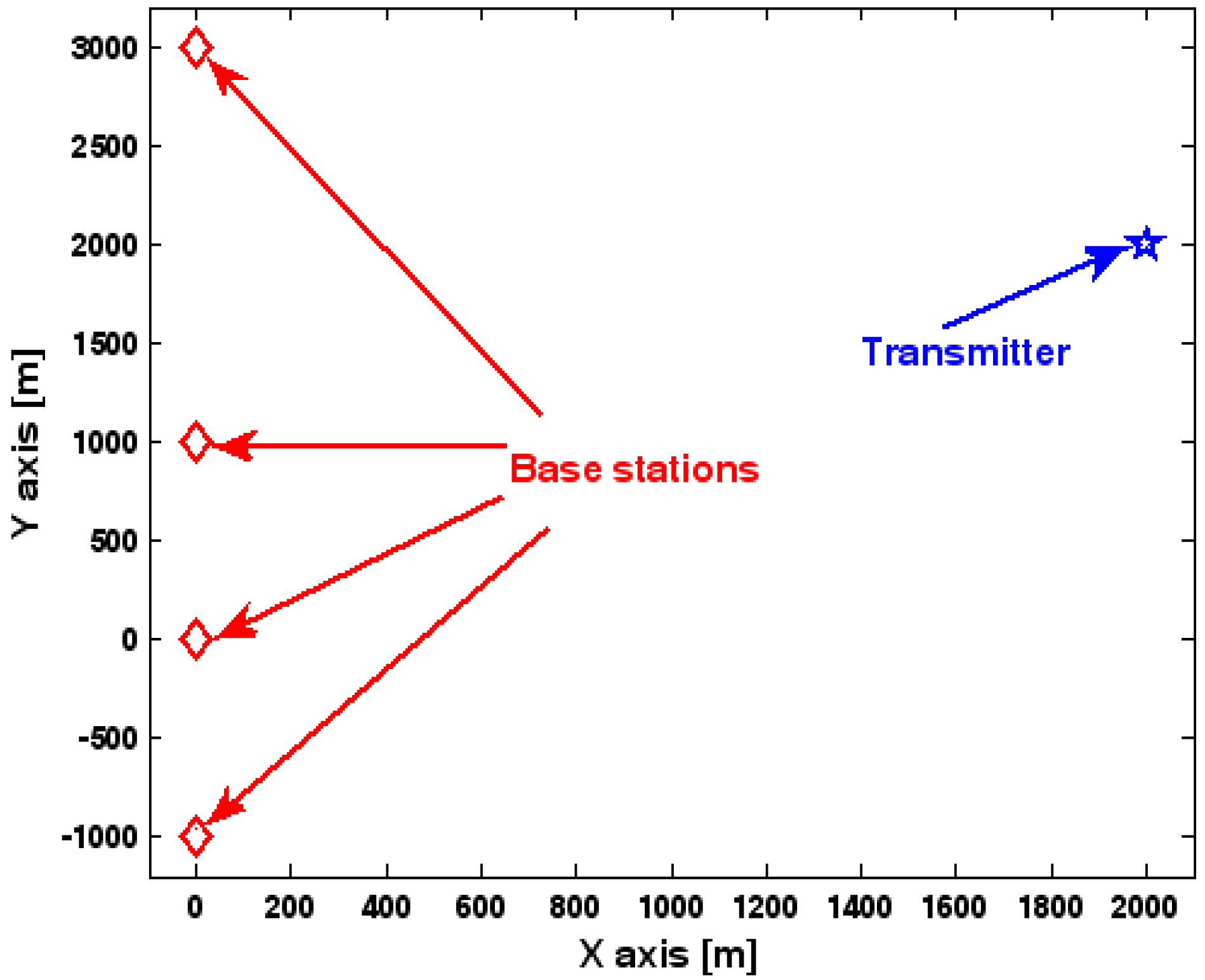

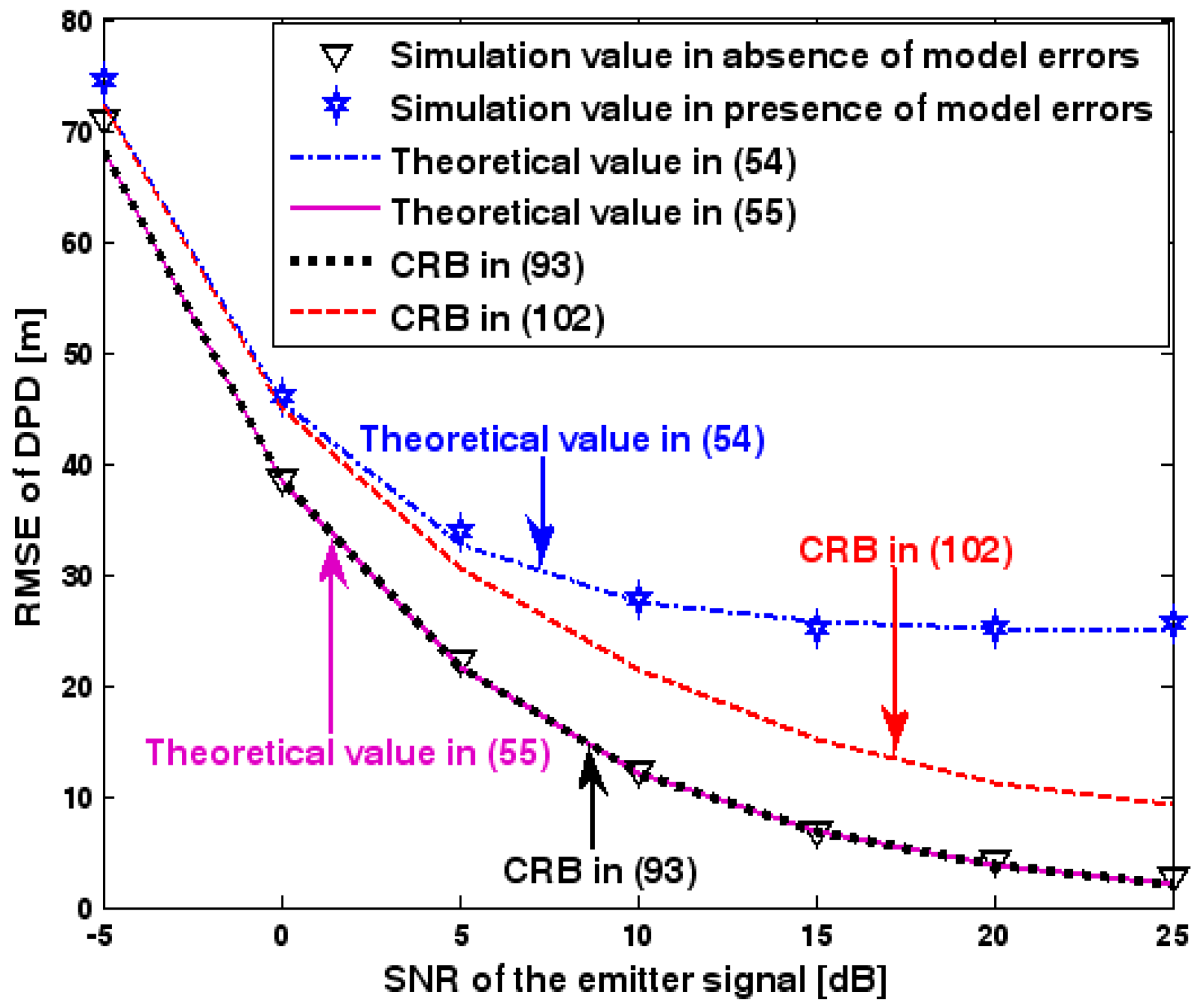

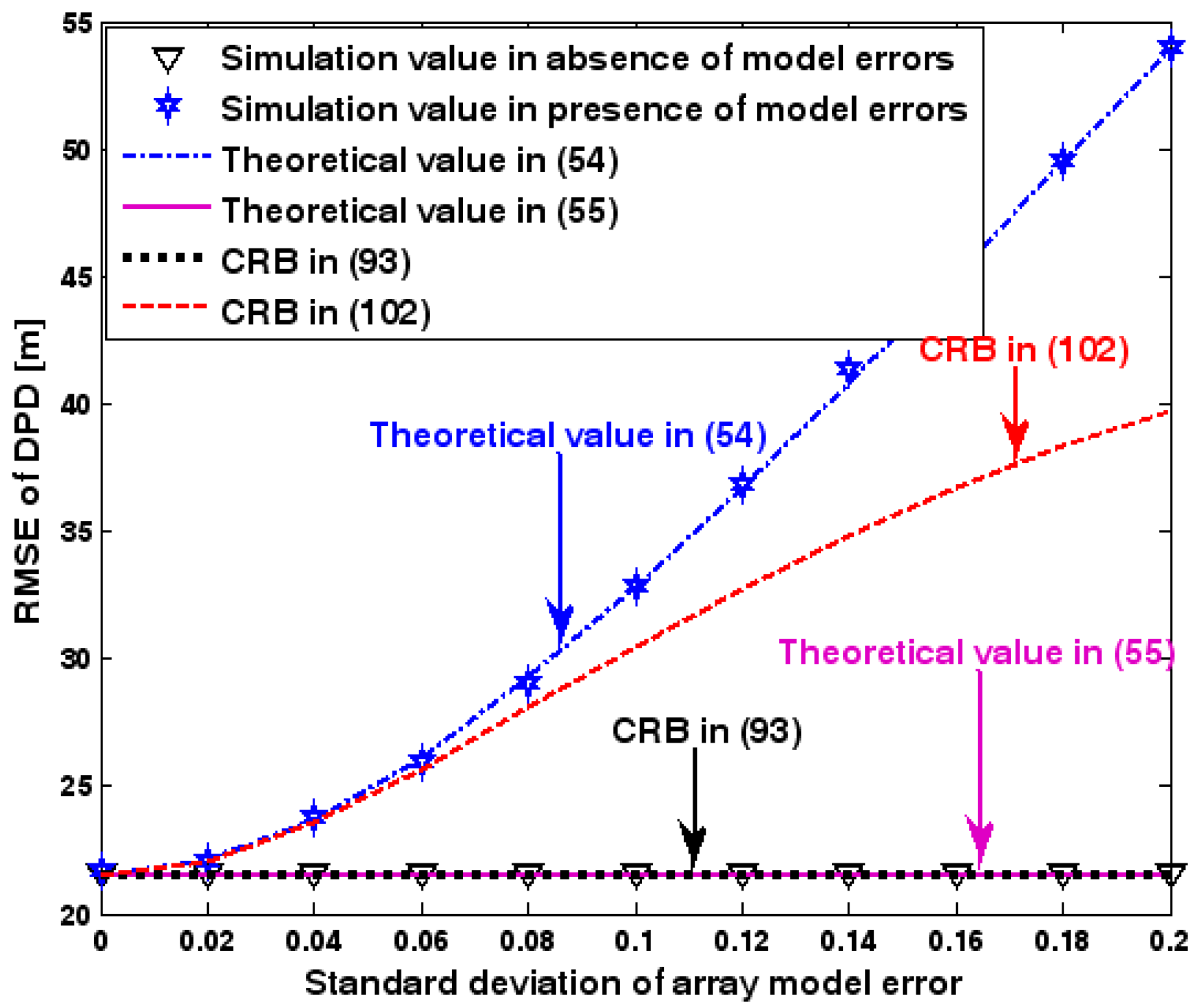

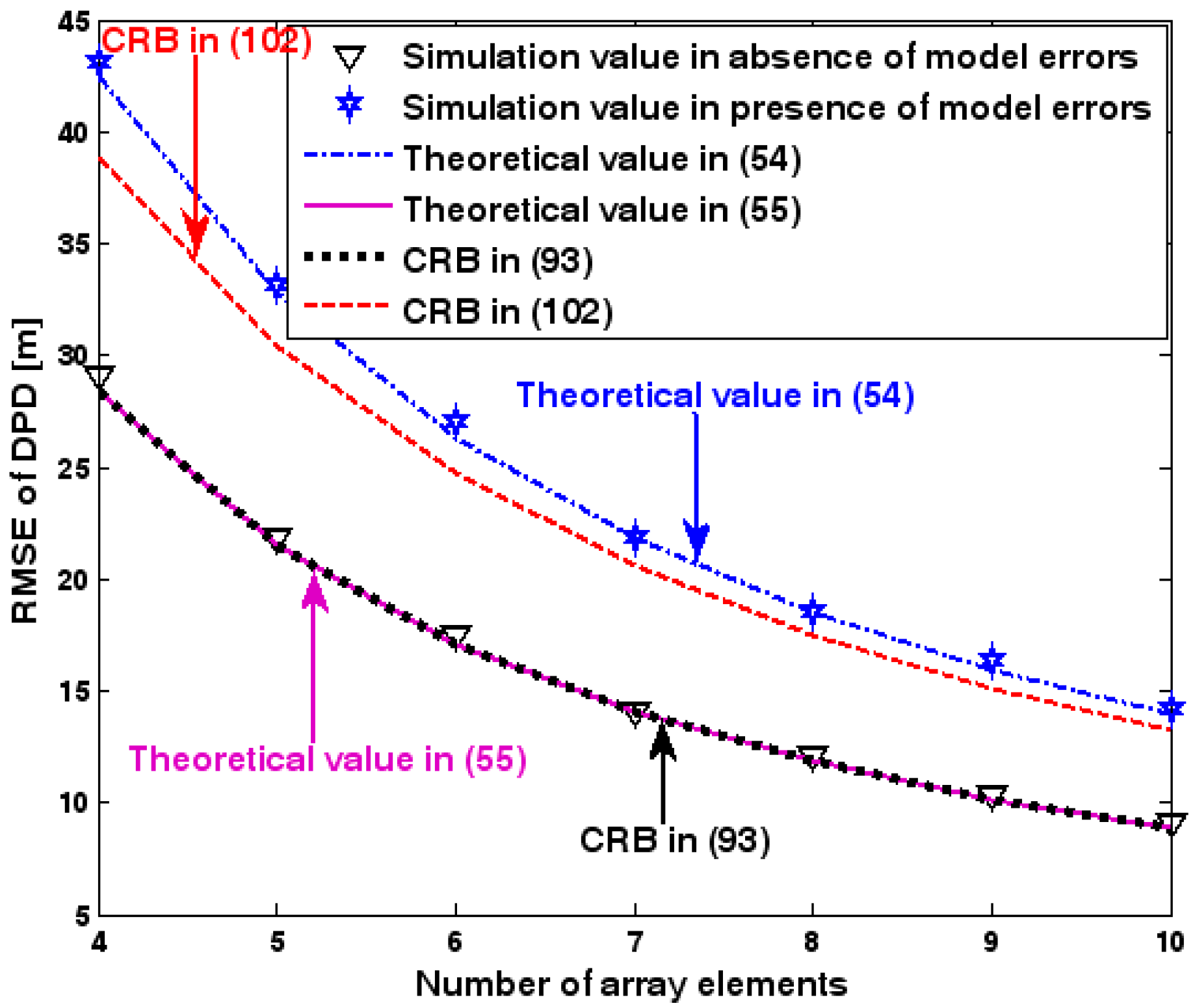

9. Simulation Results

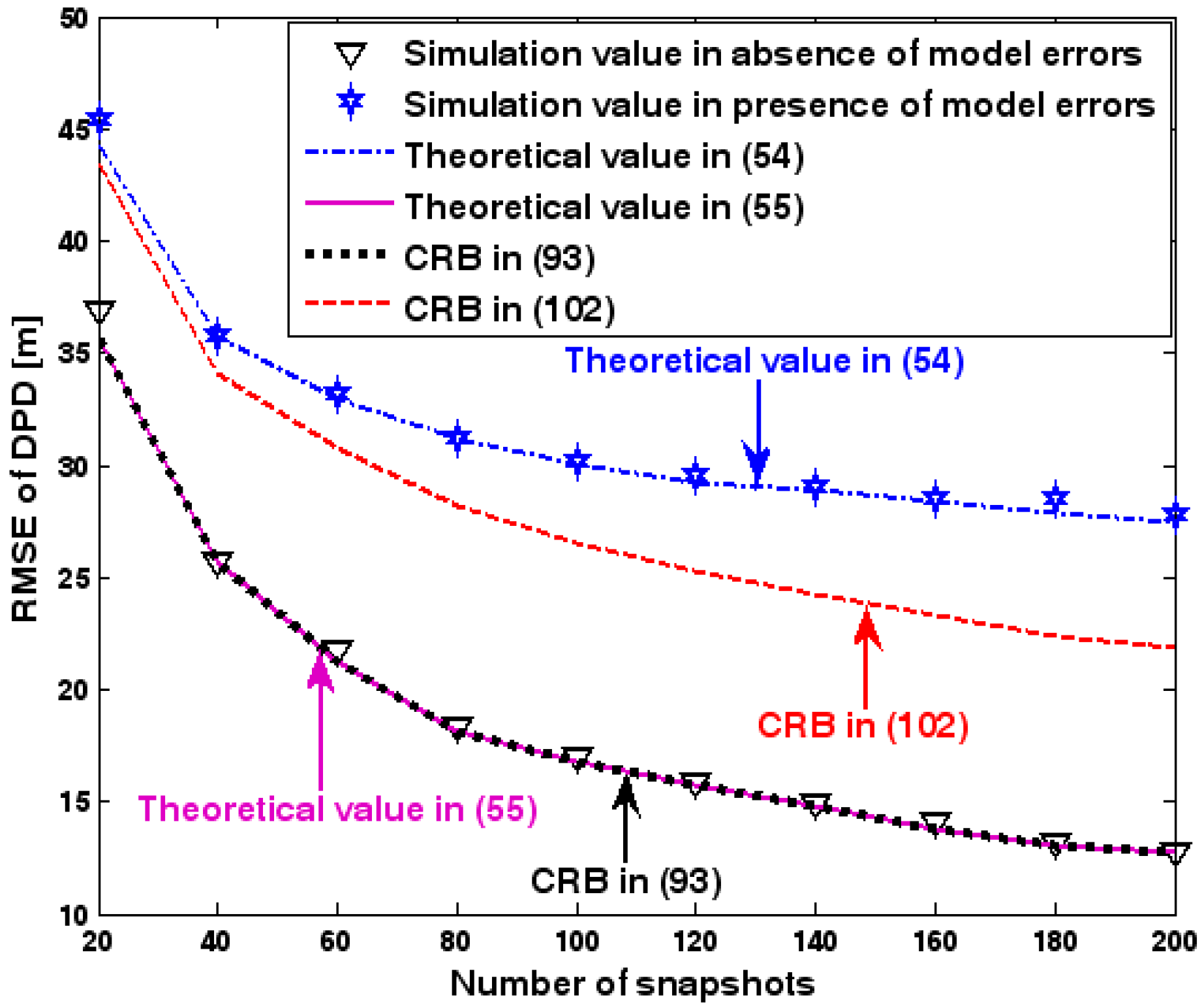

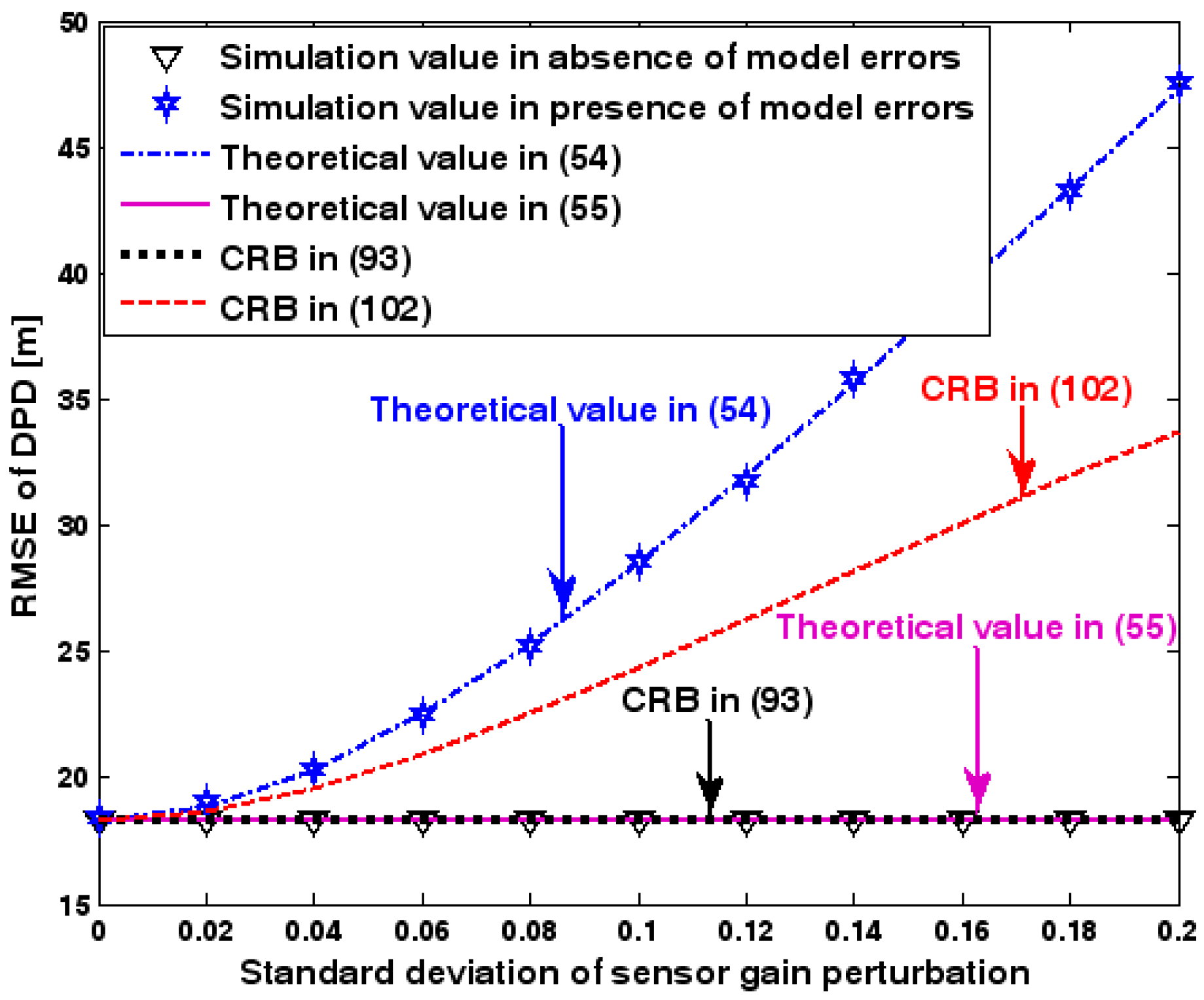

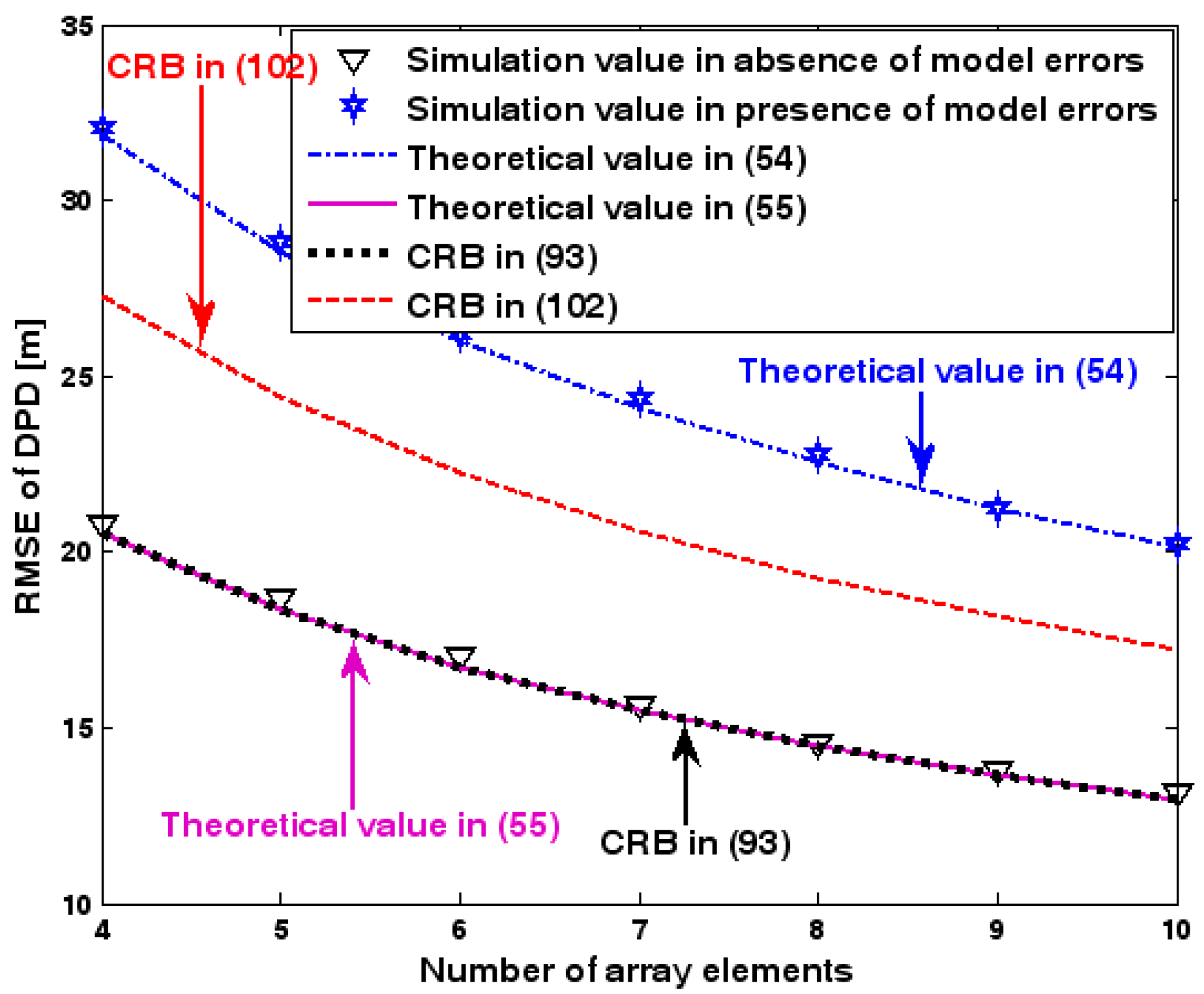

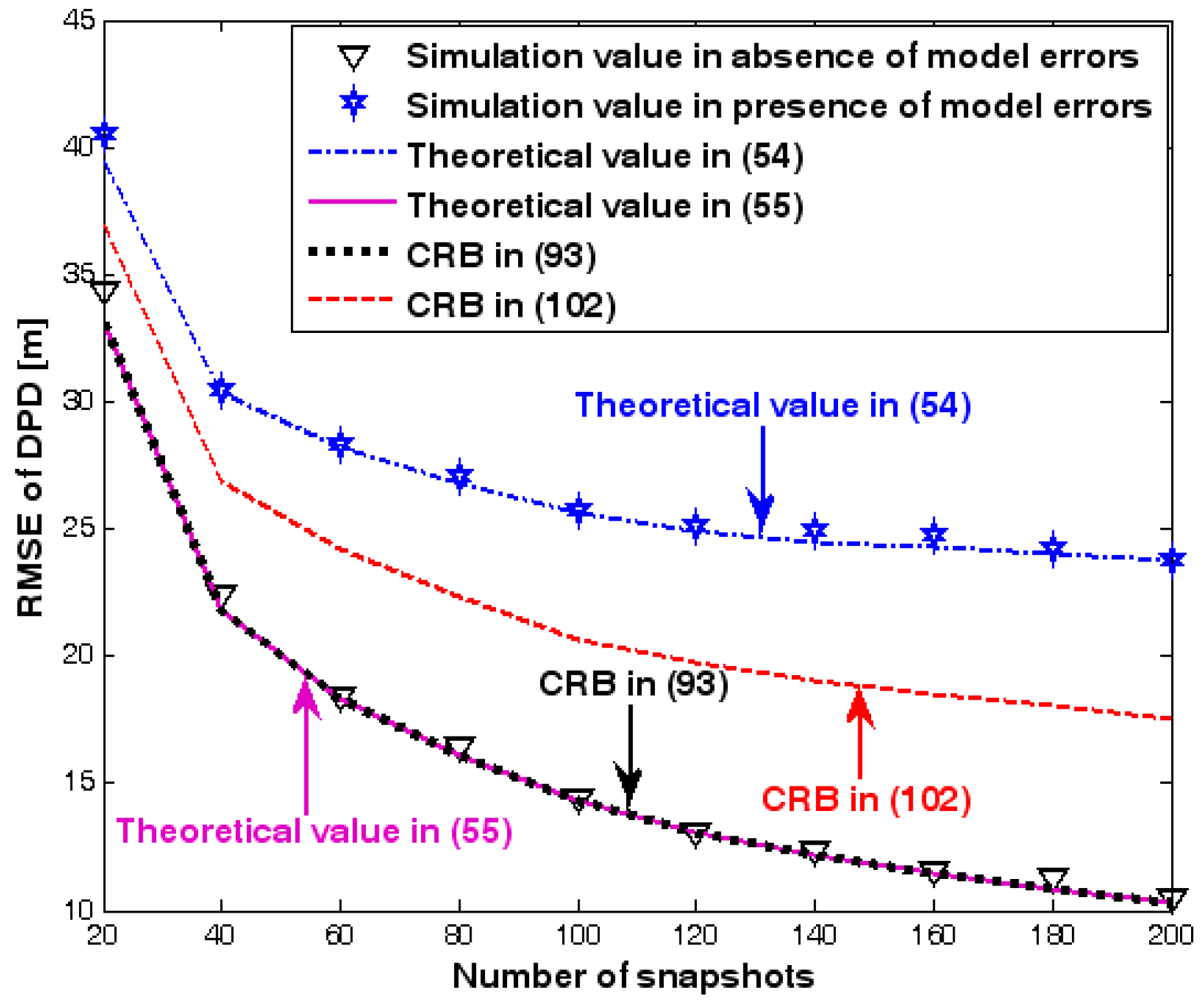

9.1. Discussion on RMSE of Direct Localization

9.1.1. The First Set of Experiments

9.1.2. The Second Set of Experiments

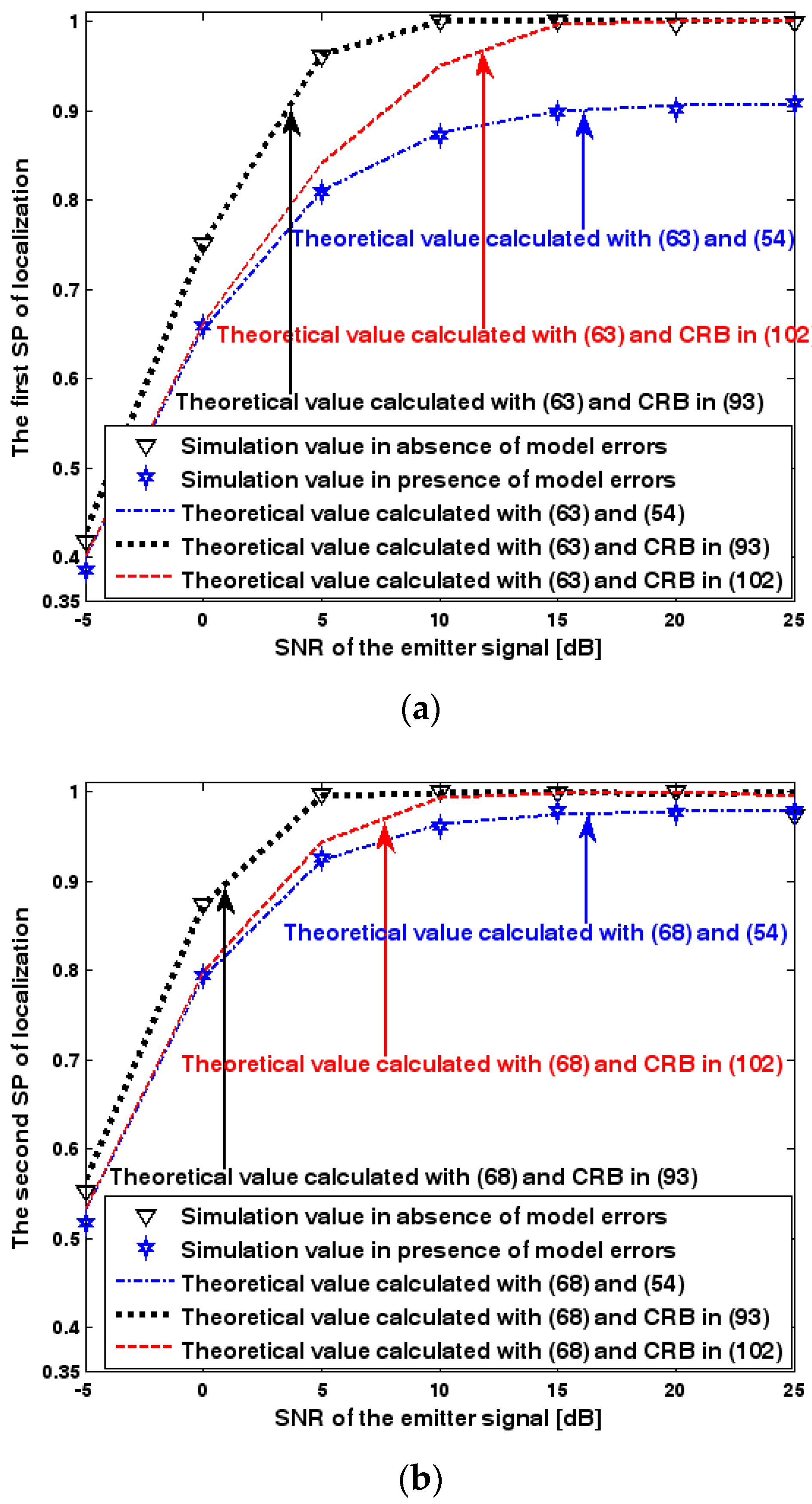

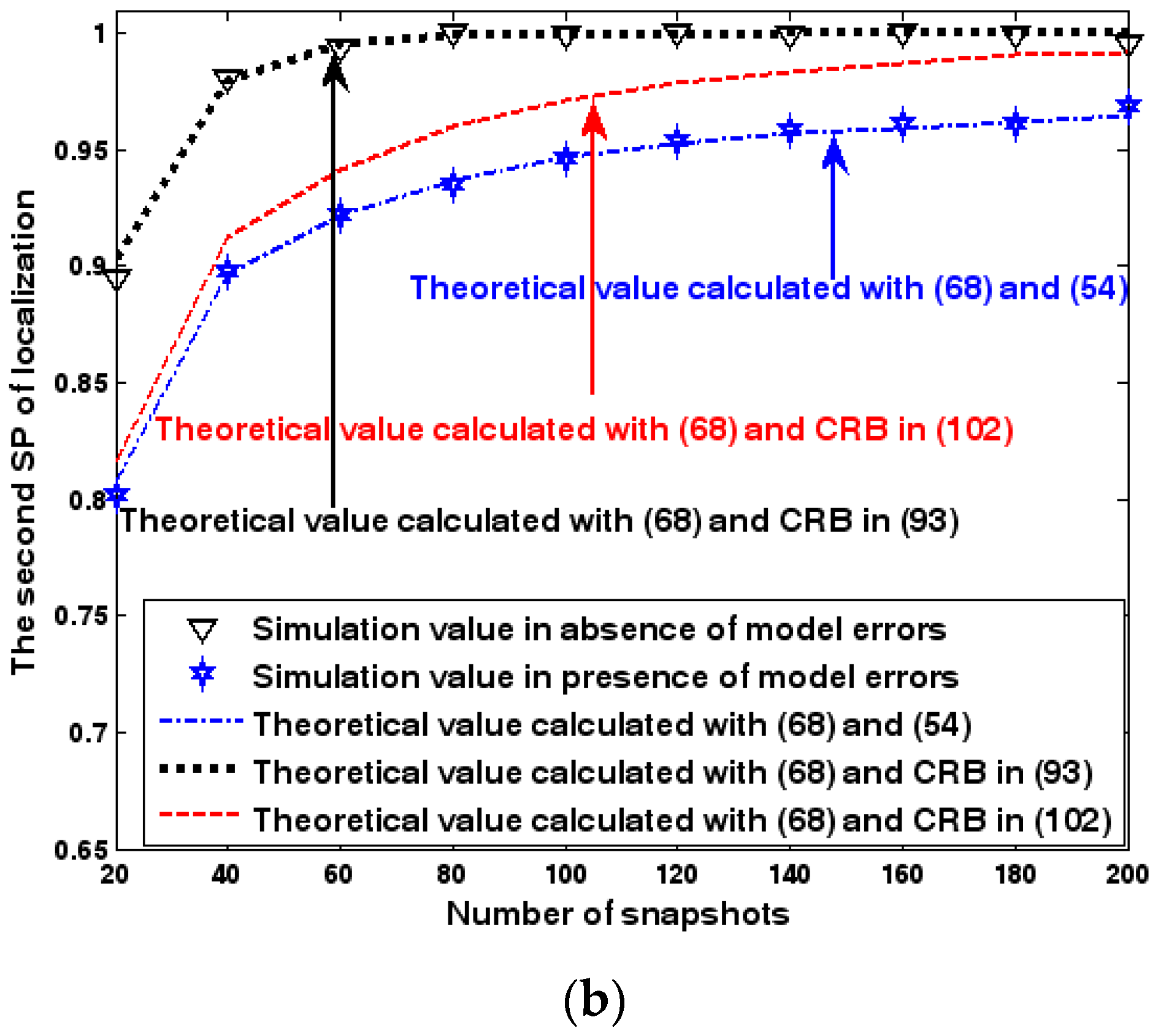

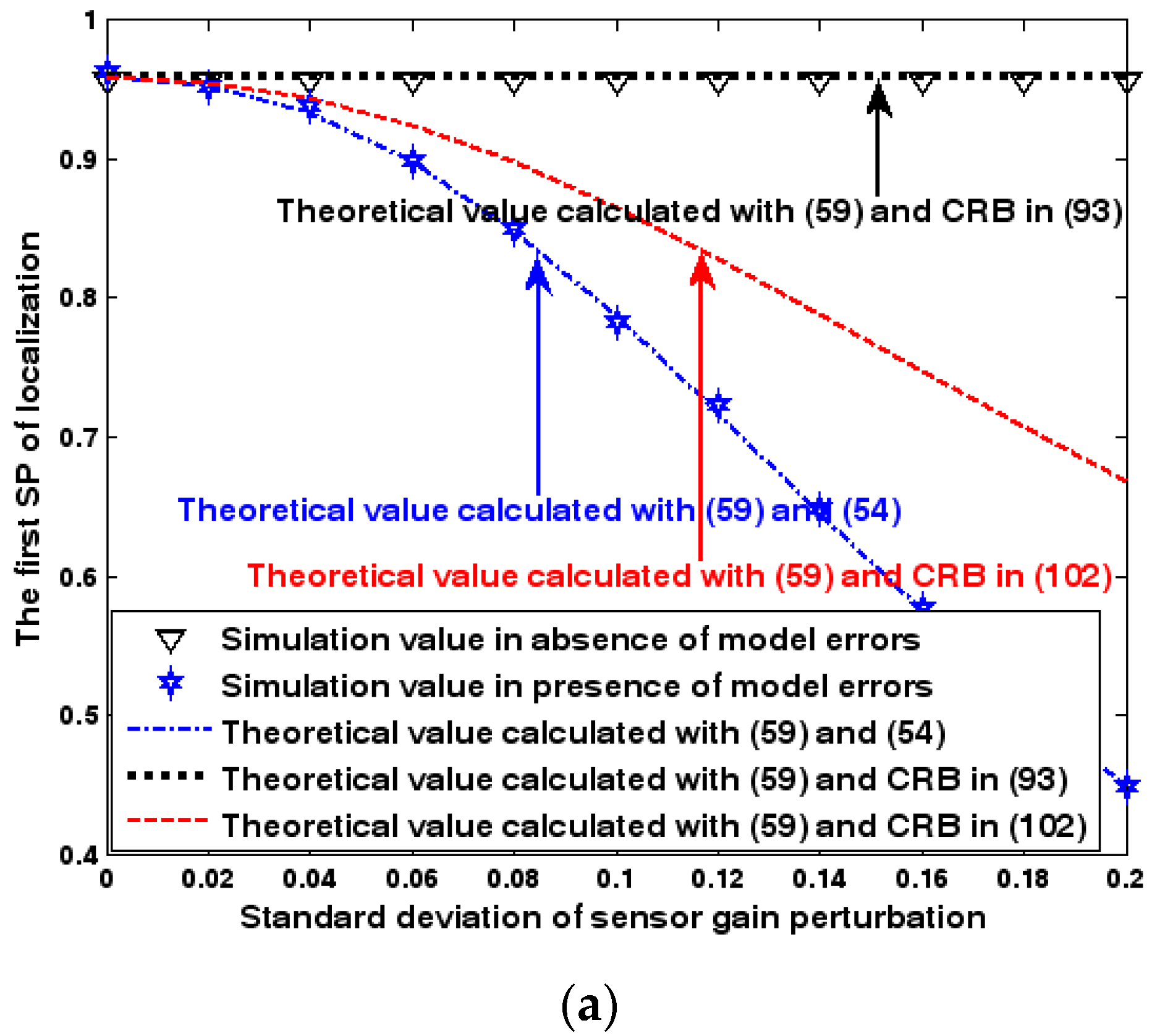

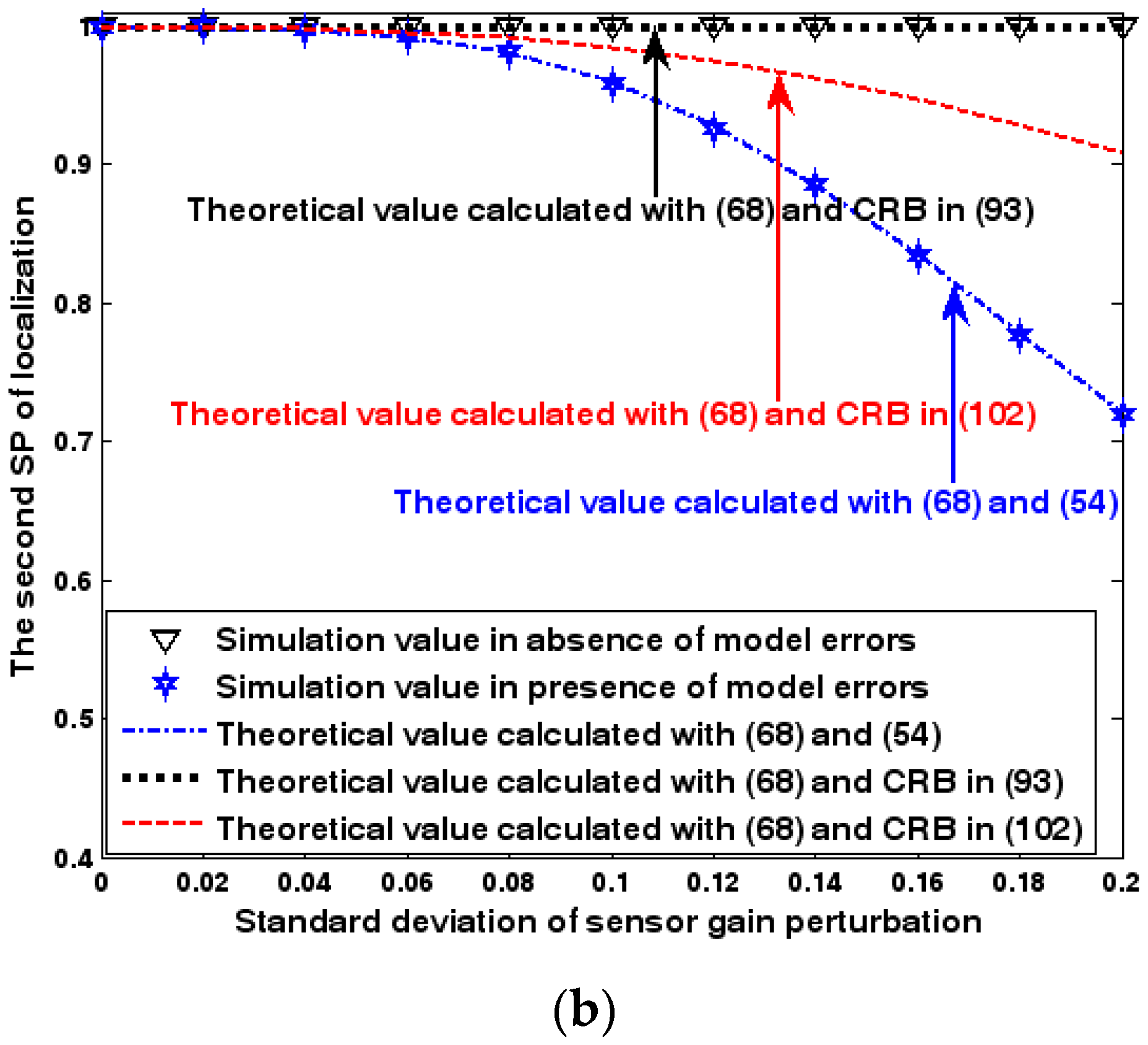

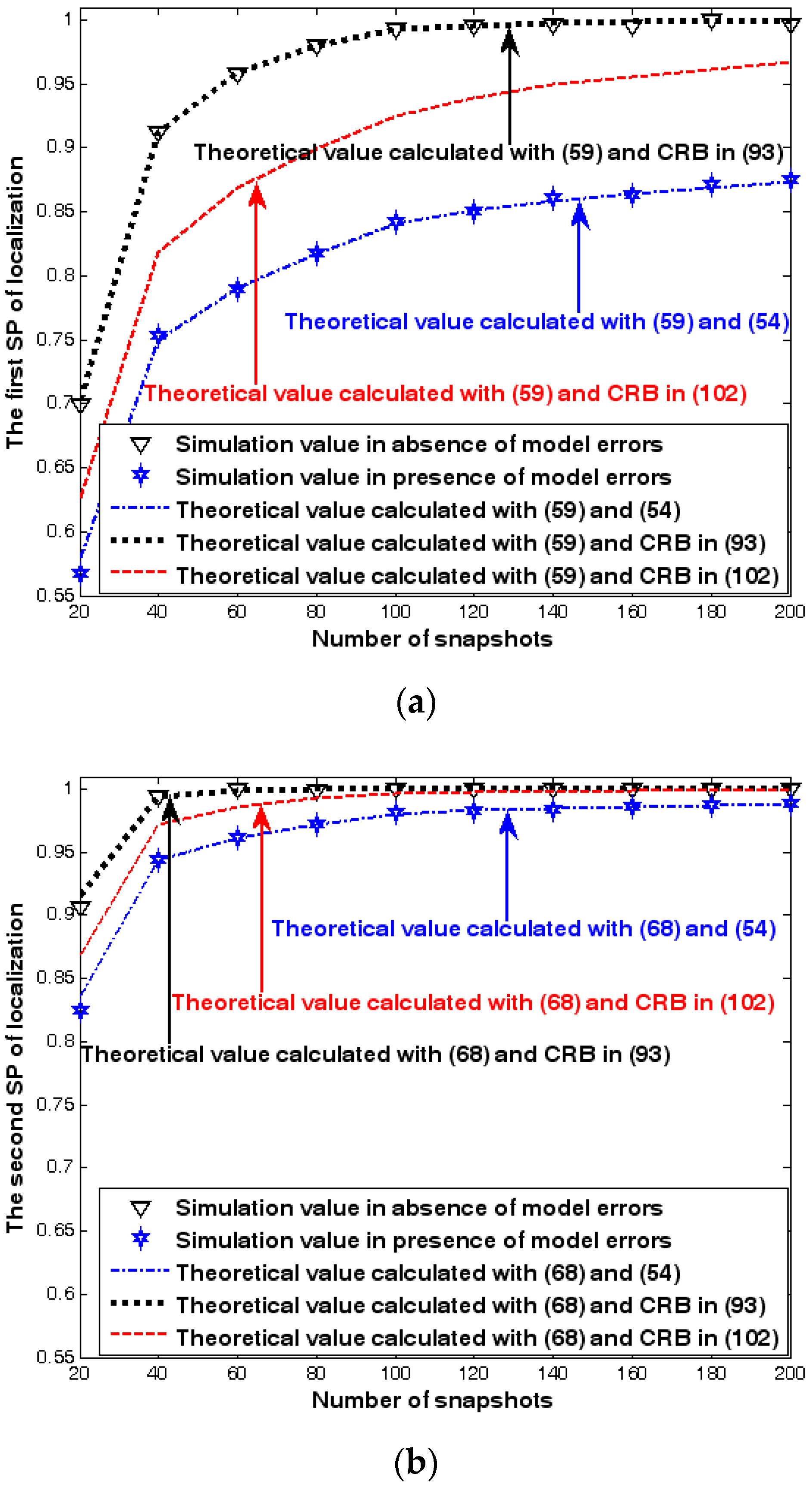

9.2. Discussion on Success Probability of Direct Localization

9.2.1. The First Set of Experiments

9.2.2. The Second Set of Experiments

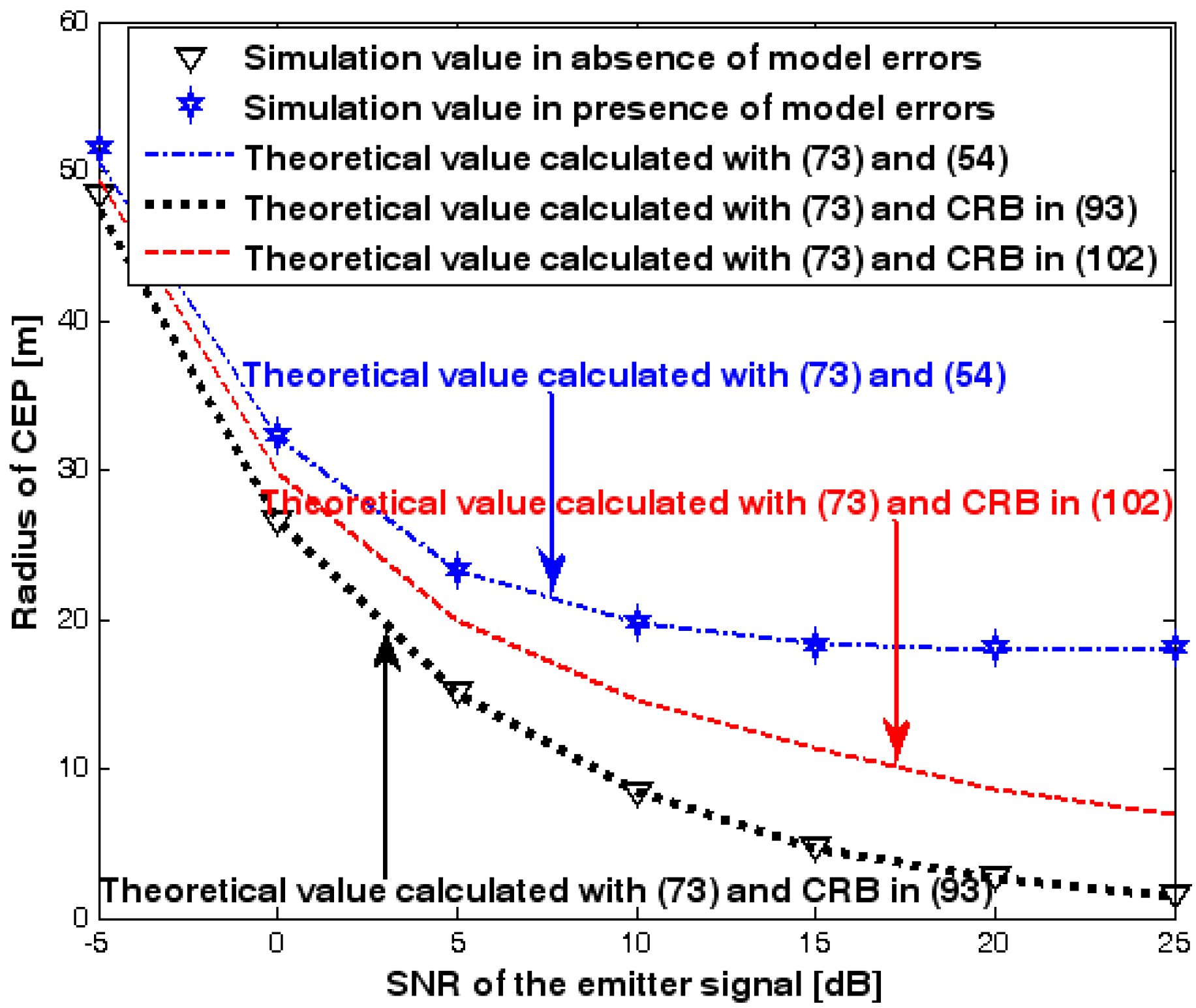

9.3. Discussion on Radius of CEP

10. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

Appendix A—Detailed Derivation of Matrices in (30)

Appendix B—Proof of (34) and (35)

Appendix C—Proof of (36) to (38)

Appendix D—Proof of (39) to (44)

Appendix E—Proof of Proposition 2

Appendix F—Proof of (62)

Appendix G—Detailed Derivation of Matrices in (92)

Appendix H—Proof of (96)

Appendix I—Detailed Derivation of Matrices in (101)


| Notation | Explanation |
|---|---|
| | Kronecker product |
| | Schur product |
| | a diagonal matrix with diagonal entries formed from the vector |
| | a block-diagonal matrix formed from the matrices or vectors |
| | Moore-Penrose inverse of the matrix |
| | identity matrix |
| | the kth column vector of |
| | matrix of zeros |
| | vector of ones |
| | the largest eigenvalue of the matrix |
| | Euclidean norm |
| | the nth entry of the vector |
| | the nmth entry of the matrix |
| | real part of the argument |
| | imaginary part of the argument |
| | probability of the given event |
| | mathematical expectation of the random variable |
| | variance of the random variable |
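
Several of the operations named in the table map directly onto NumPy calls. The snippet below is a small sketch for orientation only; the example matrices `A`, `B`, `v` and the helper `blkdiag` are hypothetical and do not come from the paper.

```python
import numpy as np

A = np.array([[1.0, 2.0], [3.0, 4.0]])
B = np.array([[0.0, 1.0], [1.0, 0.0]])
v = np.array([3.0, 4.0])


def blkdiag(*blocks):
    """Block-diagonal matrix from matrices or vectors (1-D inputs become columns)."""
    mats = []
    for b in blocks:
        b = np.asarray(b)
        mats.append(b.reshape(-1, 1) if b.ndim == 1 else b)
    rows = sum(m.shape[0] for m in mats)
    cols = sum(m.shape[1] for m in mats)
    out = np.zeros((rows, cols), dtype=np.result_type(*mats))
    r = c = 0
    for m in mats:
        out[r:r + m.shape[0], c:c + m.shape[1]] = m
        r += m.shape[0]
        c += m.shape[1]
    return out


kron_AB = np.kron(A, B)                   # Kronecker product, shape (4, 4)
schur_AB = A * B                          # Schur (elementwise) product
diag_v = np.diag(v)                       # diagonal matrix built from a vector
block = blkdiag(A, v)                     # block-diagonal matrix, shape (4, 3)
pinv_A = np.linalg.pinv(A)                # Moore-Penrose inverse
S = A.T @ A                               # symmetric positive semidefinite matrix
lam_max = np.linalg.eigvalsh(S)[-1]       # largest eigenvalue (eigvalsh sorts ascending)
norm_v = np.linalg.norm(v)                # Euclidean norm
```

`np.linalg.eigvalsh` is used because it assumes a symmetric or Hermitian input and returns real eigenvalues in ascending order, so the last entry is the largest one.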

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Wang, D.; Yu, H.; Wu, Z.; Wang, C. Performance Analysis of the Direct Position Determination Method in the Presence of Array Model Errors. Sensors 2017, 17, 1550. https://doi.org/10.3390/s17071550
