1. Introduction

Over the past few decades, the use of unmanned aerial vehicles (UAVs) has increased enormously in both civil and military scenarios. Although aerial robots have been deployed successfully in several applications, new research directions continue to emerge. Floreano [1] and Kumar et al. [2] outlined the opportunities and challenges of this developing field, from model design to high-level perception capability. All of these issues center on improving the degree of autonomy, which allows UAVs to continue to be used in novel and surprising ways. Whether on a fixed-wing or a rotary-wing platform, a standard fully unmanned autonomous system (UAS) performs takeoffs, waypoint flight and landings. Among these, the landing maneuver is the most delicate and critical phase of UAV flight. Two technical reports [3] argued that nearly 70% of the mishaps of Pioneer UAVs occurred during the landing process and were caused by human factors. Therefore, a proper assistance system is needed to enhance the reliability of the landing task. Generally, such a system requires two main capabilities. The first is the localization and navigation of the UAV, and the second is the generation of appropriate guidance commands that steer the UAV to a safe landing.

For manned aircraft, the traditional landing system uses a radio beam directed upward from the ground [4,5]. By measuring the angular deviation from the beam with onboard equipment, the pilot knows the perpendicular displacement of the aircraft in the vertical channel. Additional equipment is required for the azimuth information. However, due to size, weight and power (SWaP) constraints, it is impractical to fit these instruments on a UAV. Thanks to global navigation satellite system (GNSS) technology, we have seen many successful practical applications of autonomous UAVs in outdoor environments, such as transportation, aerial photography and intelligent farming. Unfortunately, in some circumstances, such as urban or low-altitude operations, the GNSS receiver antenna is prone to losing line-of-sight with the satellites, making GNSS unable to deliver high-quality position information [6]. Therefore, autonomous landing in an unknown or GNSS-denied environment is still an open problem.

A vision-based approach is an obvious way to achieve autonomous landing by estimating the flight speed and the distance to the landing area in a moment-to-moment fashion. Generally, two types of visual methods can be considered. The first is the vision-based onboard system, which has been widely studied. The second is to guide the aircraft using a ground-based camera system. Once the aircraft is detected by the camera during the landing process, its characteristics, such as type, location, heading and velocity, can be derived by the guidance system. Based on this information, the UAV can align itself carefully with the landing area and adapt its velocity and acceleration to achieve a safe landing. In summary, the two key elements of the landing problem are detecting the UAV and its motion and calculating the location of the UAV relative to the landing field.

To achieve better performance in GNSS-denied environments, other types of sensors, such as laser range finders and millimeter-wavelength radar, have been explored for UAV autonomous landing. The Swiss company RUAG (Bern, Switzerland) solved the landing task with the OPATS (object position and tracking sensor) [7].

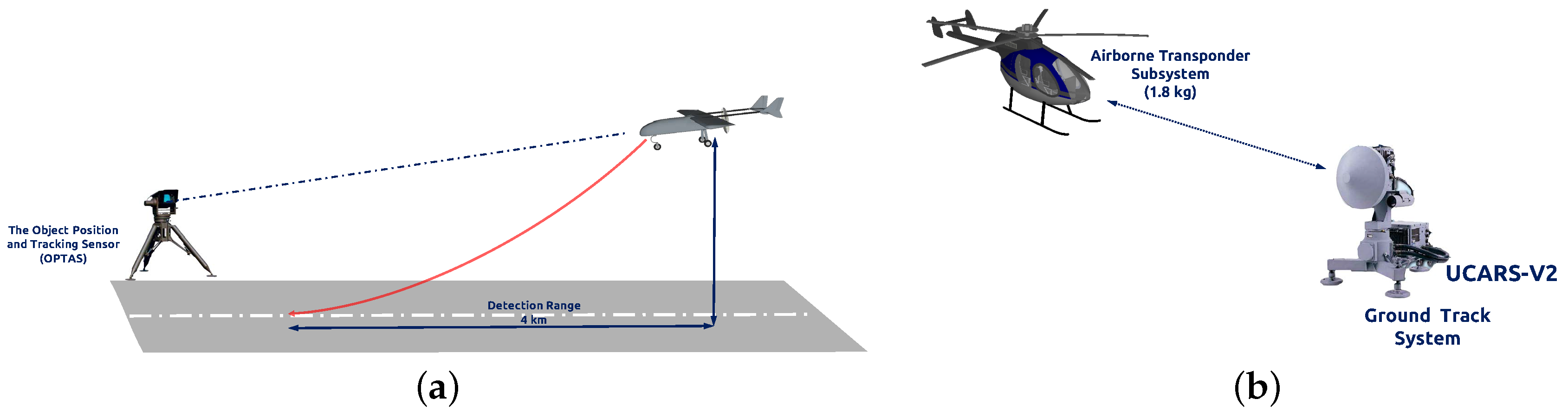

Figure 1a presents this laser-based automatic landing system, whose infrared laser beam is echoed back from a passive and optionally heated retroreflector on the aircraft. This system can measure the position of an approaching aircraft at ranges of around 4000 m. The Sierra Nevada Corporation provides an alternative to the laser-based method. They developed the UAS common automatic recovery system (UCARS) [8], based on millimeter-wavelength ground radar, for the autonomous landing of the MQ-8B Fire Scout, as shown in Figure 1b. Benefiting from the short wavelength, UCARS provides a precision approach (within 2.5 cm) in adverse weather conditions. While these solutions are effective, they require the use of radar or laser emissions, which can be undesirable in a tactical situation. Furthermore, the limited payload of a small UAV constrains the onboard modules.

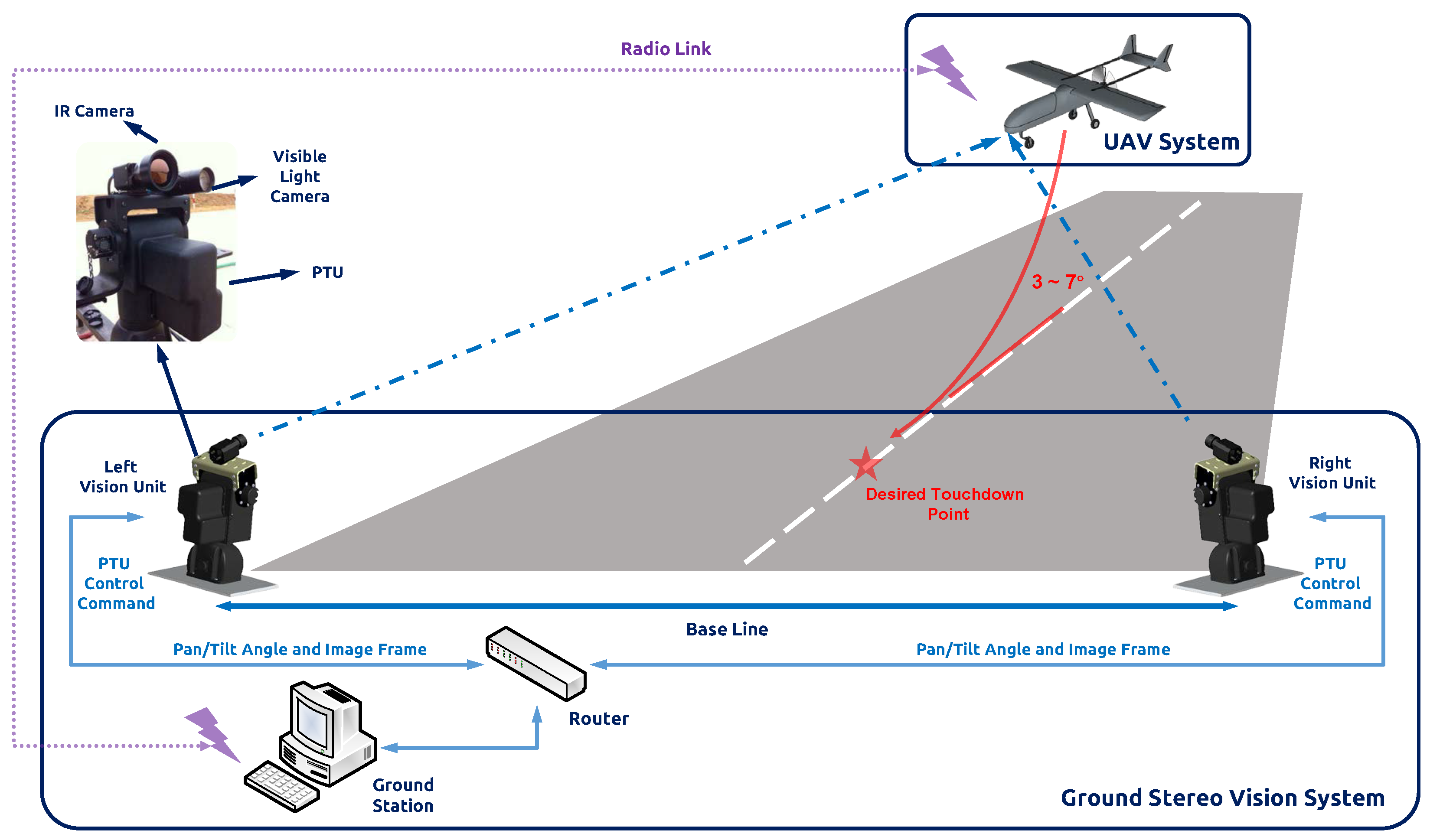

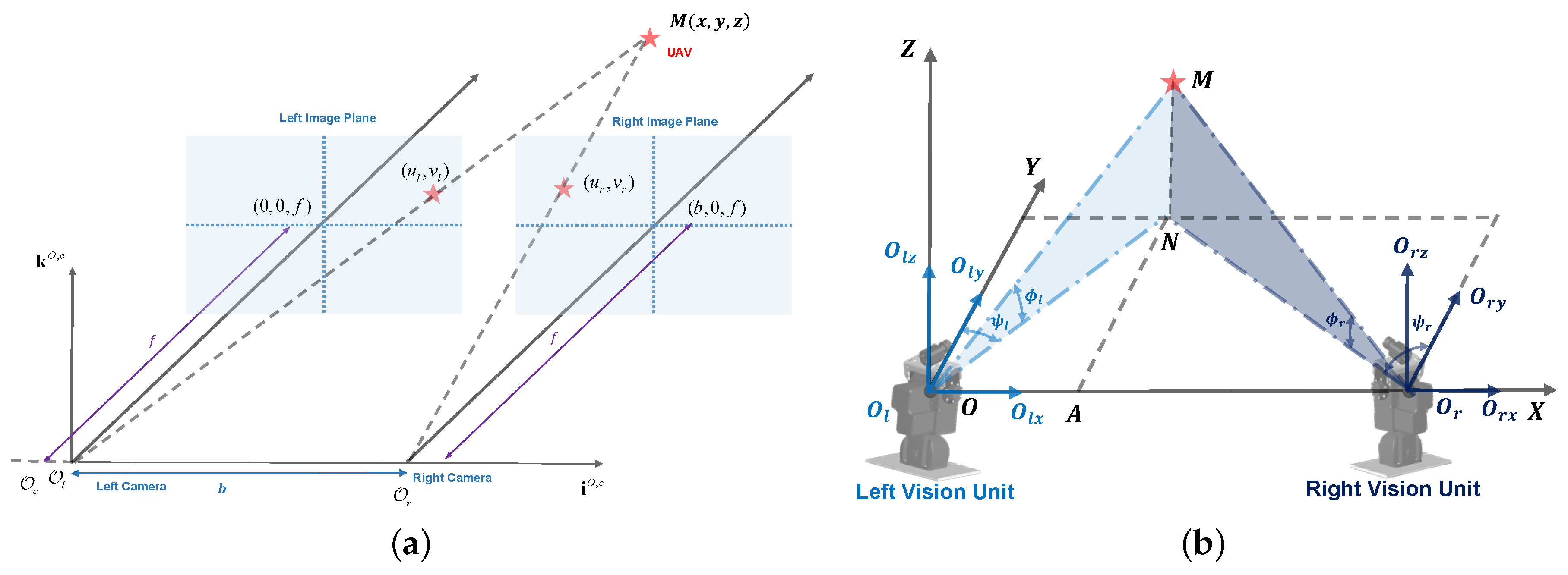

Motivated by the challenges mentioned above, we propose and develop a novel on-ground deployment of the visual landing system. In this paper, we mainly focus on the localization and navigation issue and try to improve the navigation accuracy and robustness. The essential contributions of this work are as follows: (1) a vision guidance system with an extendable baseline and a wide-angle field of view (FOV) is developed by using a physically separated and informationally connected deployment of two pan-tilt units (PTUs) on both sides of the runway; (2) the localization error and its transferring mechanism in practical situations are unveiled with both theoretical and computational analyses. In particular, the developed approach is validated experimentally, showing fair accuracy and better timeliness and practicality than previous works.

The remainder of this paper is organized as follows. Section 2 briefly reviews the related works. In Section 3, the architecture of the on-ground deployed stereo system is proposed and designed. Section 4 evaluates the accuracy and analyzes its transferring mechanism through theoretical and computational analysis. Dataset-driven validation follows in Section 5. Finally, concluding remarks are presented in Section 6.

2. Related Works

While several techniques have been applied to the onboard vision-based control of UAVs, few have demonstrated the landing of a fixed-wing aircraft guided by a ground-based system. In 2006, Wang [9] proposed a system in which a step motor steers a web camera to track and guide a micro-aircraft. This rotating camera platform expands the recognition area from 60 cm × 60 cm to 140 cm × 140 cm, but the recognition range is only 1 m. This configuration cannot be used to determine the position of a fixed-wing aircraft in the field.

At Chiba University [10], a ground-based Bumblebee stereo vision system was used to calculate the 3D position of a quadrotor at an altitude of 6 m. The Bumblebee has a 15.7-cm baseline and a 66° horizontal field of view. The sensor was mounted on a tripod at a height of 45 cm. The drawback of this system is its limited baseline, which leads to a narrow field of view (FOV).
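To give a feel for why a short baseline is limiting, the sketch below evaluates the standard first-order depth-error relation for a rectified stereo pair, ΔZ ≈ Z² · Δd / (f · B), where Z is the depth, B the baseline, f the focal length in pixels and Δd the disparity error. The 15.7-cm baseline and 66° horizontal FOV come from the text; the 640-pixel image width, the 0.5-pixel disparity error and the 10-m comparison baseline are assumptions chosen only for illustration, and the function names are hypothetical.

```python
import math


def focal_length_px(image_width_px, hfov_deg):
    """Pinhole focal length in pixels from the image width and horizontal FOV."""
    return (image_width_px / 2.0) / math.tan(math.radians(hfov_deg) / 2.0)


def depth_error_m(depth_m, baseline_m, focal_px, disparity_error_px=0.5):
    """First-order stereo depth error: dZ ~= Z^2 * dd / (f * B)."""
    return depth_m ** 2 * disparity_error_px / (focal_px * baseline_m)


if __name__ == "__main__":
    # Assumed image width of 640 px; the 66 degree FOV is taken from the text.
    f_px = focal_length_px(640, 66.0)
    # 0.157 m is the Bumblebee baseline; 10 m is an arbitrary wide baseline.
    for baseline in (0.157, 10.0):
        for depth in (6.0, 100.0, 1000.0):
            err = depth_error_m(depth, baseline, f_px)
            print(f"B = {baseline:6.3f} m, Z = {depth:7.1f} m, dZ ~ {err:10.3f} m")
```

Under these assumptions, the error grows with the square of the range and shrinks in proportion to the baseline, which is why a fixed short-baseline rig is hard to use at the distances relevant to fixed-wing landing.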

To increase the camera FOV, multi-camera systems are considered attractive. This kind of system can solve the common vision problems and track objects to compute their 3D locations. For example, Martinez [11] introduced a trinocular on-ground system, composed of three or more cameras, that extracts key features of the UAV to obtain a robust 3D position estimate. The FireWire cameras use 3.4-mm lenses and capture images at 30 fps. The authors employed the continuously-adaptive mean shift (CamShift) algorithm to track four cooperative markers, each of a distinct color, which were distributed on the bottom of the helicopter. The precision of this system is around 5 cm in the vertical and horizontal directions and 10 cm in depth estimation, with a 3-m recognition range. The maximum range for depth estimation is still not sufficient for a fixed-wing UAV. Another drawback of the multi-camera system is the calibration process, whose parameters are nontrivial to obtain.

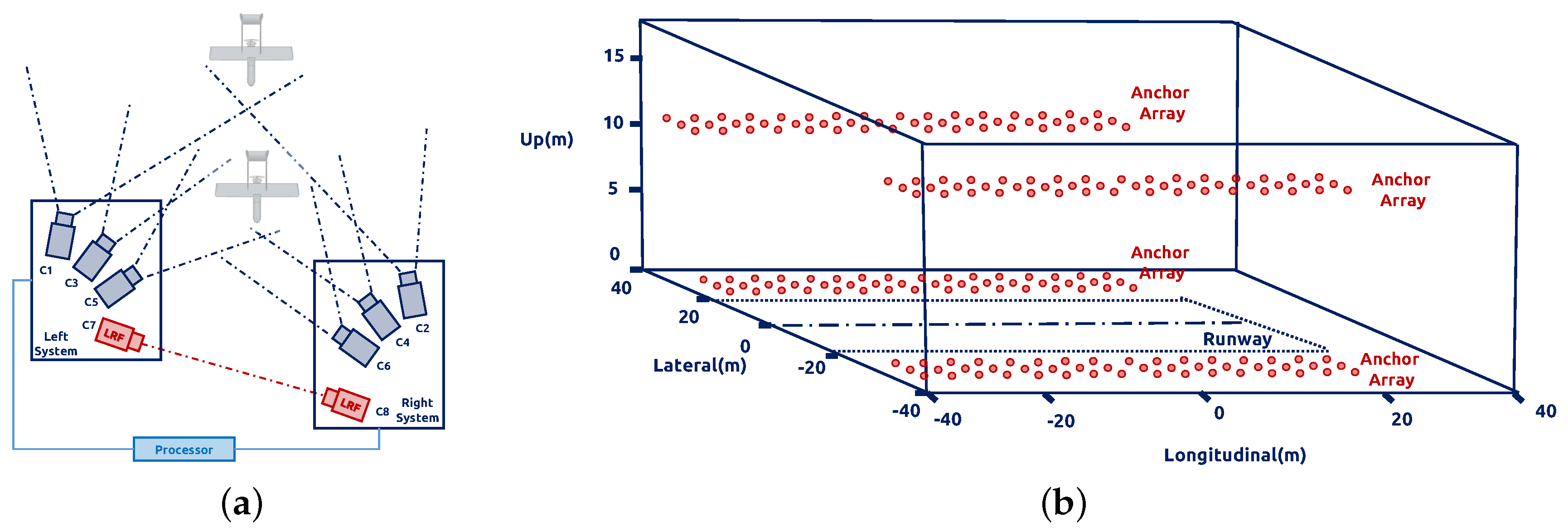

A state-of-the-art study by Guan et al. [12] proposed a multi-camera network with laser rangefinders to estimate an aircraft's motion. This system is composed of two sets of measurement units installed on both sides of the runway. Each unit has three high-speed cameras with different focal lengths and FOVs to capture the target in the near field (20 m–100 m), middle field (100 m–500 m) and far field (500 m–1000 m), respectively. A series of field experiments shows that the RMS error of the distance is 1.32 m. Due to the configuration of the system, an octocopter UAV equipped with a prism has to be used to calibrate the whole measurement system.

Besides camera-based ground navigation systems, the ultra-wide band (UWB) positioning network has also been discussed in the community. Kim and Choi [13] deployed passive UWB anchors along the runway, which listen for the UWB signals emitted from the UAV. The ground system computes the position of the target based on the geometry of the UWB anchors and sends it back to the UAV through the aviation communication channel. There are a total of 240 possible anchor locations, as shown in Figure 2b, distributed on each side of the runway, and the longitudinal range is up to 300 m with a positioning accuracy of 40 cm.

Our group first developed a traditional stereo ground-based system with infrared cameras [14], but this system has a limited detection distance. In the short-baseline configuration, the cameras were set up on one PTU, and the system had to be mounted on the center line of the runway. However, the short baseline limits the maximum range for UAV depth estimation. To enhance the operating capability, we developed a triangular geometry localization method for the PTU-based system [15]. As shown in Figure 3, we fixed the cameras on separate PTUs on both sides of the runway. As a result, the landing aircraft can be locked onto by our system at a distance of around 1 km. According to this previous work, the localization accuracy largely depends on the aircraft detection precision in the camera image plane. We therefore implemented the Chan–Vese method [16] and a saliency-inspired method [17] to detect and track the vehicle more accurately; however, these approaches do not meet real-time requirements.
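The geometric idea behind localizing a target from two separated PTUs can be illustrated with a minimal sketch. It assumes that each station reports a pan angle (measured from the runway axis +y toward +x) and a tilt angle (elevation above the horizontal), that both station positions are known in a common runway frame, and that the target is taken as the midpoint of the shortest segment between the two lines of sight. The angle convention, the function names and the numbers are illustrative assumptions and are not taken from [15].

```python
import math


def line_of_sight(pan_rad, tilt_rad):
    """Unit direction for a pan/tilt pair (assumed convention, see lead-in)."""
    return (math.cos(tilt_rad) * math.sin(pan_rad),
            math.cos(tilt_rad) * math.cos(pan_rad),
            math.sin(tilt_rad))


def _dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def triangulate(p1, angles1, p2, angles2):
    """Midpoint of the shortest segment between two lines of sight."""
    d1 = line_of_sight(*angles1)
    d2 = line_of_sight(*angles2)
    w0 = tuple(a - b for a, b in zip(p1, p2))
    a, b, c = _dot(d1, d1), _dot(d1, d2), _dot(d2, d2)
    d, e = _dot(d1, w0), _dot(d2, w0)
    denom = a * c - b * b
    if abs(denom) < 1e-12:
        raise ValueError("lines of sight are (nearly) parallel")
    s = (b * e - c * d) / denom  # parameter along the first ray
    t = (a * e - b * d) / denom  # parameter along the second ray
    q1 = tuple(p + s * k for p, k in zip(p1, d1))
    q2 = tuple(p + t * k for p, k in zip(p2, d2))
    return tuple((u + v) / 2.0 for u, v in zip(q1, q2))


def angles_to(station, target):
    """Ideal (noise-free) pan/tilt angles from a station to a target."""
    dx, dy, dz = (t - s for t, s in zip(target, station))
    return math.atan2(dx, dy), math.atan2(dz, math.hypot(dx, dy))


if __name__ == "__main__":
    # Hypothetical layout: stations 20 m apart, target about 200 m up-range.
    left, right = (-10.0, 0.0, 0.0), (10.0, 0.0, 0.0)
    target = (3.0, 200.0, 30.0)
    est = triangulate(left, angles_to(left, target),
                      right, angles_to(right, target))
    print("estimated position:", est)
```

With noise-free angles the two rays intersect and the midpoint coincides with the target; with real measurements the rays are skew, and the sensitivity of the estimate to angular and detection errors is what makes image-plane detection precision so important.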

For more information, we have also reviewed various vision-based landing approaches performed on different platforms [18], and Gautam provides another general review of autonomous landing techniques for UAVs [19].